Abstract

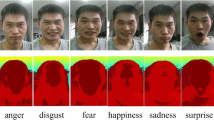

Automatic recognition of facial expressions is a necessary step toward the design of more natural human-computer interaction systems. This work presents a user-independent approach for the recognition of facial expressions from image sequences. Faces are normalized in scale and rotation using the locations of the eye centers and assembled into tracks, from which we extract features representing shape and motion. Classification and localization of the center of the expression in the video sequences are performed using a Hough transform voting method based on randomized forests. We tested our approach on two publicly available databases and achieved encouraging results comparable to the state of the art.
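
As an illustration of the normalization step mentioned in the abstract, the following is a minimal sketch of how a face could be aligned in scale and rotation from two eye-center locations. It is not the authors' implementation: the function names, the canonical eye positions (`target_left`, `target_right`), and the choice of a 2x3 similarity matrix are assumptions made here for illustration only.

```python
import numpy as np

def eye_alignment_transform(left_eye, right_eye,
                            target_left=(30.0, 40.0),
                            target_right=(70.0, 40.0)):
    """Return a 2x3 similarity matrix (rotation, uniform scale, translation)
    that maps the detected eye centers onto canonical positions.

    The canonical positions are hypothetical defaults, not values from the paper.
    """
    left_eye = np.asarray(left_eye, dtype=float)
    right_eye = np.asarray(right_eye, dtype=float)
    target_left = np.asarray(target_left, dtype=float)
    target_right = np.asarray(target_right, dtype=float)

    src = right_eye - left_eye          # inter-ocular vector in the input frame
    dst = target_right - target_left    # inter-ocular vector in the canonical frame

    # Uniform scale and rotation angle that carry src onto dst.
    scale = np.hypot(dst[0], dst[1]) / np.hypot(src[0], src[1])
    angle = np.arctan2(dst[1], dst[0]) - np.arctan2(src[1], src[0])

    c, s = scale * np.cos(angle), scale * np.sin(angle)
    rot = np.array([[c, -s],
                    [s,  c]])

    # Translation chosen so that the left eye lands exactly on its target.
    trans = target_left - rot @ left_eye
    return np.hstack([rot, trans[:, None]])

def apply_transform(matrix, point):
    """Apply a 2x3 similarity matrix to a single (x, y) point."""
    return matrix[:, :2] @ np.asarray(point, dtype=float) + matrix[:, 2]

# Example: a tilted face whose eyes were detected at these pixel locations.
M = eye_alignment_transform((100.0, 120.0), (160.0, 150.0))
aligned_left = apply_transform(M, (100.0, 120.0))
aligned_right = apply_transform(M, (160.0, 150.0))
```

Applying the same matrix to every frame of a sequence (for instance with an image-warping routine) would yield a track of faces with consistent eye positions, which is the kind of input that shape and motion features are typically computed from.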




Copyright information

© 2012 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Fanelli, G., Yao, A., Noel, PL., Gall, J., Van Gool, L. (2012). Hough Forest-Based Facial Expression Recognition from Video Sequences. In: Kutulakos, K.N. (eds) Trends and Topics in Computer Vision. ECCV 2010. Lecture Notes in Computer Science, vol 6553. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-35749-7_15


DOI: https://doi.org/10.1007/978-3-642-35749-7_15

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-35748-0

Online ISBN: 978-3-642-35749-7

eBook Packages: Computer Science, Computer Science (R0)