Abstract

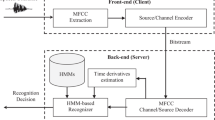

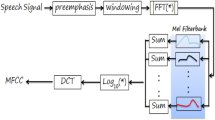

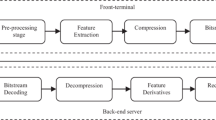

In this paper, the multi-stream combination framework is explored to improve the noise robustness of automatic speech recognition (ASR) systems. The main issues in multi-stream systems are which feature representations to combine and what importance (weight) to give to each one. Two feature streams are investigated: the MFCC features and a set of complementary features consisting of pitch frequency, energy, and the first three formants. Empirically optimal weights are fixed for each stream. The multi-stream vectors are modeled by Hidden Markov Models (HMMs) with Gaussian Mixture Model (GMM) state distributions. Our ASR system is implemented using the HTK toolkit and the ARADIGIT corpus, a database of spoken Arabic words. The results show that, for highly noisy speech, the proposed multi-stream vectors lead to a significant improvement in recognition accuracy.
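As an illustration of how fixed stream weights typically enter an HMM/GMM state score, the sketch below uses the form common to HTK-style multi-stream systems, where the state log-likelihood is the weighted sum of per-stream GMM log-likelihoods. The abstract does not give this formula, so treat it as an assumption; the function names, the toy dimensions, and the weight values (0.7 and 0.3) are hypothetical and are not the empirical optimum reported in the paper.

```python
import math

def gmm_loglik(x, mix_weights, means, variances):
    """Log-likelihood of vector x under a diagonal-covariance GMM."""
    log_comps = []
    for c, mu, var in zip(mix_weights, means, variances):
        ll = math.log(c)  # log mixture weight
        for xi, mi, vi in zip(x, mu, var):
            # log of a 1-D Gaussian density, summed over dimensions
            ll += -0.5 * (math.log(2.0 * math.pi * vi) + (xi - mi) ** 2 / vi)
        log_comps.append(ll)
    # log-sum-exp over mixture components for numerical stability
    m = max(log_comps)
    return m + math.log(sum(math.exp(l - m) for l in log_comps))

def multistream_loglik(streams, gmms, stream_weights):
    """Weighted sum of per-stream log-likelihoods for one HMM state.

    streams        : list of observation vectors, one per stream
    gmms           : list of (mix_weights, means, variances), one per stream
    stream_weights : list of stream exponents (assumed values, not from the paper)
    """
    total = 0.0
    for x, (c, mu, var), gamma in zip(streams, gmms, stream_weights):
        total += gamma * gmm_loglik(x, c, mu, var)
    return total

# Toy usage: a 2-dimensional stand-in for the MFCC stream and a
# 5-dimensional complementary stream (pitch, energy, three formants).
mfcc_gmm = ([0.5, 0.5], [[0.0, 0.0], [1.0, 1.0]], [[1.0, 1.0], [1.0, 1.0]])
comp_gmm = ([1.0], [[0.0] * 5], [[1.0] * 5])
score = multistream_loglik(
    [[0.2, -0.1], [0.1, 0.0, -0.3, 0.2, 0.1]],
    [mfcc_gmm, comp_gmm],
    [0.7, 0.3],  # hypothetical stream weights
)
print(score)
```

Setting a stream's weight to zero removes that stream from the score, which makes the role of the weights easy to check when tuning them empirically.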

Copyright information

© 2012 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Amrous, A.I., Debyeche, M. (2012). Robust Arabic Multi-stream Speech Recognition System in Noisy Environment. In: Elmoataz, A., Mammass, D., Lezoray, O., Nouboud, F., Aboutajdine, D. (eds) Image and Signal Processing. ICISP 2012. Lecture Notes in Computer Science, vol 7340. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-31254-0_65

DOI: https://doi.org/10.1007/978-3-642-31254-0_65

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-31253-3

Online ISBN: 978-3-642-31254-0

eBook Packages: Computer Science, Computer Science (R0)