Abstract

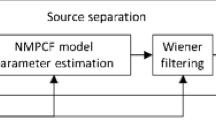

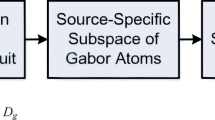

This article presents a system for user-guided audio source separation. Building on previous work on time-frequency music representations, the proposed user interface lets the user select the desired audio source by marking the assumed fundamental frequency (F0) track of that source. The system then automatically refines the selected F0 tracks, estimates the corresponding source, and separates it from the mixture. In tests of the interface, the separation results compared favorably with those of a fully automatic system, showing that user selection of the F0 track improves separation performance.
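To make the idea of selecting a source through its F0 track more concrete, the following is a minimal sketch of a binary harmonic mask applied to a magnitude spectrogram. It is not the method of the paper, which refines the F0 tracks and estimates the source with its own model; the function names, the parameters (`n_harmonics`, `tolerance_hz`) and the masking strategy itself are assumptions chosen only for illustration.

```python
import math


def harmonic_mask(f0_track, n_bins, sample_rate, n_fft,
                  n_harmonics=10, tolerance_hz=30.0):
    """Build a binary time-frequency mask around the harmonics of an F0 track.

    f0_track holds one F0 value in Hz per frame; 0 (or None) marks a frame
    where the selected source is assumed silent. Hypothetical helper, not
    taken from the paper.
    """
    bin_hz = sample_rate / n_fft  # frequency spacing between STFT bins
    mask = []
    for f0 in f0_track:
        frame = [0.0] * n_bins
        if f0:
            for h in range(1, n_harmonics + 1):
                center = h * f0
                # Keep every bin whose center lies within the tolerance.
                lo = max(0, math.ceil((center - tolerance_hz) / bin_hz))
                hi = min(n_bins - 1,
                         math.floor((center + tolerance_hz) / bin_hz))
                for k in range(lo, hi + 1):
                    frame[k] = 1.0
        mask.append(frame)
    return mask


def apply_mask(spectrogram, mask):
    """Split a spectrogram (list of frames) into selected source and residual."""
    selected = [[s * m for s, m in zip(sf, mf)]
                for sf, mf in zip(spectrogram, mask)]
    residual = [[s * (1.0 - m) for s, m in zip(sf, mf)]
                for sf, mf in zip(spectrogram, mask)]
    return selected, residual


# Toy example: two frames, the source is active only in the first one.
mask = harmonic_mask([200.0, 0.0], n_bins=41, sample_rate=8000, n_fft=80,
                     n_harmonics=2, tolerance_hz=50.0)
flat = [[1.0] * 41, [1.0] * 41]
selected, residual = apply_mask(flat, mask)
```

In this toy setting the bins are 100 Hz apart, so only the bins at 200 Hz and 400 Hz are kept in the first frame and nothing is kept in the second. A hard mask like this is crude compared with a model-based estimate, but it shows why a correct F0 track is such a strong cue for the separation step.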

This work was funded by the Swiss CTI agency, project n. 11359.1 PFES-ES, in collaboration with SpeedLingua SA, Geneva, Switzerland.

Copyright information

© 2012 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Durrieu, JL., Thiran, JP. (2012). Musical Audio Source Separation Based on User-Selected F0 Track. In: Theis, F., Cichocki, A., Yeredor, A., Zibulevsky, M. (eds) Latent Variable Analysis and Signal Separation. LVA/ICA 2012. Lecture Notes in Computer Science, vol 7191. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-28551-6_54

DOI: https://doi.org/10.1007/978-3-642-28551-6_54

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-28550-9

Online ISBN: 978-3-642-28551-6

eBook Packages: Computer Science (R0)