Abstract

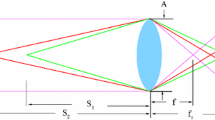

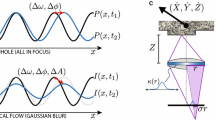

Depth from defocus (DFD) is a 3D recovery method based on estimating the amount of defocus induced by finite lens apertures. Given two images with different camera settings, the problem is to measure the resulting differences in defocus across the image, and to estimate a depth based on these blur differences. Most methods assume that the scene depth map is locally smooth, and this leads to inaccurate depth estimates near discontinuities. In this paper, we propose a novel DFD method that avoids smoothing over discontinuities by iteratively modifying an elliptical image region over which defocus is estimated. Our method can be used to complement any depth from defocus method based on spatial domain measurements. In particular, this method improves the DFD accuracy near discontinuities in depth or surface orientation.
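To make the idea of a spatial-domain blur measurement over an elliptical region concrete, the following is a minimal sketch and not the method proposed in the paper. It assumes a Gaussian blur model and a brute-force search over candidate blur widths, and it does not implement the iterative evolution of the region. All function names (`gaussian_kernel`, `blur`, `elliptical_mask`, `relative_blur`) and the synthetic test data are hypothetical and introduced here only for illustration.

```python
import numpy as np

def gaussian_kernel(sigma):
    """1D normalized Gaussian kernel; sigma <= 0 yields an identity kernel."""
    if sigma <= 0:
        return np.array([1.0])
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def blur(img, sigma):
    """Separable Gaussian blur with edge-replicated padding (assumed blur model)."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    conv = lambda v: np.convolve(v, k, mode="valid")
    rows = np.apply_along_axis(conv, 1, padded)   # filter along x
    return np.apply_along_axis(conv, 0, rows)     # filter along y

def elliptical_mask(shape, center, axes, angle=0.0):
    """Boolean mask of an ellipse; center is (row, col), axes are the
    semi-axis lengths along the rotated x and y directions, angle in radians."""
    yy, xx = np.mgrid[:shape[0], :shape[1]]
    dy, dx = yy - center[0], xx - center[1]
    c, s = np.cos(angle), np.sin(angle)
    u = c * dx + s * dy
    v = -s * dx + c * dy
    return (u / axes[0]) ** 2 + (v / axes[1]) ** 2 <= 1.0

def relative_blur(sharper, blurrier, mask, candidates):
    """Return the candidate sigma that, applied to the sharper image, best
    matches the blurrier image inside the mask (mean squared error)."""
    errors = [np.mean((blur(sharper, s)[mask] - blurrier[mask]) ** 2)
              for s in candidates]
    return candidates[int(np.argmin(errors))]

# Synthetic example: a random texture and a more defocused copy of it.
rng = np.random.default_rng(0)
texture = rng.random((64, 64))
defocused = blur(texture, 2.0)
region = elliptical_mask(texture.shape, center=(32, 32), axes=(12, 6))
estimate = relative_blur(texture, defocused, region,
                         [0.5, 1.0, 1.5, 2.0, 2.5, 3.0])
print(estimate)
```

Restricting the error to an elliptical mask is what lets the measurement region be reshaped or reoriented so that it does not straddle a depth discontinuity. How the region should be updated from one iteration to the next is the subject of the paper and is not attempted here.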

Copyright information

© 2007 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

McCloskey, S., Langer, M., Siddiqi, K. (2007). Evolving Measurement Regions for Depth from Defocus. In: Yagi, Y., Kang, S.B., Kweon, I.S., Zha, H. (eds) Computer Vision – ACCV 2007. ACCV 2007. Lecture Notes in Computer Science, vol 4844. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-540-76390-1_84

DOI: https://doi.org/10.1007/978-3-540-76390-1_84

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-76389-5

Online ISBN: 978-3-540-76390-1

eBook Packages: Computer Science, Computer Science (R0)