Abstract

In this paper, local and non-local denoising methods are jointly employed to improve the visual quality of the final denoised image. Based on the evidence that the output images of non-local denoising methods are not better at every pixel than the output images of local methods or than the noisy image itself, a cascade of two improvement steps is applied to the output image of a non-local denoising method. The first step corrects the output image by recovering lost information directly from the noisy image. The second step recovers the good estimates provided by a local regularization method. A pointwise weighted average of the image pair involved is used at each step. The weights are estimated from the noisy data using adaptive and automatic procedures. Experimental results show that the proposed approach greatly improves the results of patch-based non-local denoising in terms of both peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM).

1 Introduction

Denoising is a long-standing and widely investigated topic. Despite the huge number of approaches proposed for its solution, it remains a challenging and open research problem. Linear and non-linear filtering, Bayesian methods, shrinkage methods, variational models, and local and non-local filtering are only a few examples of the approaches and ideas underlying the existing solutions; see [10] for a complete and recent review. The best-performing recent denoisers are based on non-local patch regularization. This kind of regularization yields high-quality results, which are considerably better than those achieved by traditional local denoisers. Noteworthy examples are Non Local Means (NLM) [3] and Block Matching 3D (BM3D) [5], especially for the removal of additive white Gaussian noise. Both group similar patches in the image and then remove noise by exploiting replicates of the same patch. NLM is based on a weighted mean of similar patches in the whole image, whereas BM3D is based on the concept of collaborative filtering: it combines a non-local similarity search (through the block matching algorithm) with standard transform-based denoising applied to 3D data, i.e. the stack of similar blocks.

Despite the very high performance of such approaches, patch-based non-local denoising is not perfect. In fact, averaging non-local but similar patches unavoidably smooths different parts of the noisy image, especially when patches are misaligned because of noise or because of the lack of pointwise correspondence between patches. In addition, to reduce the prohibitive computational effort required to search for similarities in the whole image, the search is limited to a neighborhood of each pixel; as a result, not all image similarities contribute to the denoising process. A lot of research effort has been devoted to making non-local methods fast and usable in real applications; in particular, methods have been proposed both for speeding up the similarity search and for improving the visual quality of denoised images, see for example [2, 4, 6, 7, 9, 11, 12]. With regard to the latter point, a classical way of improving the denoising result is the residual method [1]. It mainly consists of applying an edge-preserving denoising filter to the residual image, i.e. the image obtained by subtracting the denoised image from the noisy one.

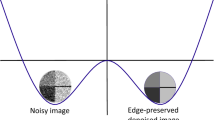

The aim of this paper is to show that there are several image pixels where local, even traditional, denoising performs better than non-local denoising. In particular, it is possible to show that there is a class of pixels where the classical Wiener filter performs better than the best-performing non-local denoiser, i.e. BM3D. Interestingly, pixels belonging to this class are not necessarily located on image edges. In fact, the performance of non-local denoising depends ‘locally’ on how many similar patches exist within the image and on how similar they are. For a similar, though somewhat more counterintuitive, reason, it is possible to show that there is also another class of pixels for which it is better to leave the noisy image unchanged than to perform patch-based denoising. Since it is very hard to predict which pixels belong to the two aforementioned classes, this paper proposes a strategy based on two different convex combinations of local and non-local information. The main aim is to embed local information into a non-local denoising result. The first convex combination involves the output image of BM3D and the noisy image itself. In cascade, the second convex combination involves the output of the first step and the output image of a simple Wiener filter applied to the noisy image. The parameters employed in the convex combinations are estimated from the data using two different approaches, and they involve all image pixels, not only the ones belonging to each specific class.
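As an illustration of the two pixel classes described above, the following sketch marks, for a known clean reference, the pixels where a local estimate or the noisy image itself is closer to the truth than a non-local estimate. It assumes NumPy; the function name `pixel_classes`, the use of a strict inequality on absolute errors, and the tiny hand-made arrays are hypothetical choices made for illustration, not BM3D or Wiener outputs.

```python
import numpy as np

def pixel_classes(clean, noisy, nonlocal_est, local_est):
    """Return boolean masks of pixels where the local estimate, or the noisy
    image itself, has a smaller absolute error than the non-local estimate.
    The strict inequality is an assumption of this sketch."""
    err_nonlocal = np.abs(nonlocal_est - clean)
    local_better = np.abs(local_est - clean) < err_nonlocal
    noisy_better = np.abs(noisy - clean) < err_nonlocal
    return local_better, noisy_better

# Hand-made 2x2 example (values are illustrative only).
clean = np.array([[10.0, 20.0], [30.0, 40.0]])
noisy = np.array([[12.0, 20.5], [27.0, 44.0]])
nonlocal_est = np.array([[10.5, 22.0], [30.0, 41.0]])
local_est = np.array([[11.0, 21.0], [31.0, 40.5]])

local_better, noisy_better = pixel_classes(clean, noisy, nonlocal_est, local_est)
# Fraction of pixels falling in each class.
print(local_better.mean(), noisy_better.mean())
```

Such masks require the clean image, which is why they can only be inspected in simulation and cannot be used directly at denoising time.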

Experimental results on various test images show that the proposed approach improves the quality of the BM3D output, in terms of PSNR, by 1 dB on average at low noise levels and by about 0.5 dB at higher noise levels.

2 The Proposed Model

In the denoising problem, the noisy image Y is modelled as follows:

\[ Y(\mathbf{i}) = X(\mathbf{i}) + N(\mathbf{i}), \qquad \mathbf{i} \in \varOmega, \tag{1} \]

where X is the original (clean) image, N is i.i.d. white Gaussian noise with zero mean and variance \(\sigma ^2\), \(\varOmega \) is the image domain and \(\mathbf {i}\) denotes the pixel location. The goal of denoising is to obtain an estimate \(\hat{X}\) of X from Y. The recent literature has shown that non-local patch-based denoisers, like BM3D (whose output image will be denoted by \(\hat{X}\)), are more effective in terms of SNR than traditional local denoisers, like Wiener filtering (whose output will be denoted by \(\bar{X}\)). However, this global result does not hold pointwise: there are some pixels for which \(\bar{X}\) is better than \(\hat{X}\) and others where Y is a better candidate than \(\hat{X}\). As a result, we can define two distinct classes of pixels as follows

An example of \(\varOmega _1\) and \(\varOmega _2\) is shown in Fig. 1. More generally, this means that some high image frequencies have been lost in the patch-based non-local method because of the regularization process; on the other hand, some low frequencies have been lost because artifacts are introduced by misalignments or poor similarity matching. That is why it is necessary to reintroduce both some high and some low frequency content into the image. To this aim, we construct the following sequence of images:

\[ X_n = X_{n-1} + \alpha _n (Y_n - X_{n-1}), \qquad n \ge 1, \tag{4} \]

with \(X_0 = \hat{X}\). \(\alpha _n\) is a parameter to be estimated; \(Y_n\) is a sequence of images with decreasing high frequency content, from which the information lost in \(X_0\) is recovered. \(Y_1 = Y\), since it contains all image high frequencies, while \(Y_n\), \(n>1\), is an increasingly regularized version of the noisy image as n increases. \(\alpha _n\) quantifies the amount of local frequency content that has to be recovered at each step. Eq. (4) can be rewritten as follows:

\[ X_n = (1 - \alpha _n) X_{n-1} + \alpha _n Y_n. \tag{5} \]

As a result, for \(\alpha _n \in [0,1]\), \(X_n\) is a convex combination of two regularized versions of the noisy image, i.e. two distinct estimates of the original image X. Based on these considerations, since it is not trivial to identify \(\varOmega _1\) and \(\varOmega _2\) in Eqs. (2) and (3), in this paper we propose to use just two iterations of the sequence in Eq. (5). The first one involves the noisy image Y and the output image \(\hat{X}\) of a patch-based non-local denoiser (BM3D), i.e.

\[ X_1 = (1 - \alpha _1) \hat{X} + \alpha _1 Y. \tag{6} \]

The second one involves \(X_1\) and the output image \(\bar{X}\) of a local denoiser (Wiener filter), i.e.

\[ X_2 = (1 - \alpha _2) X_1 + \alpha _2 \bar{X}. \tag{7} \]

\(\alpha _1\) and \(\alpha _2\) can be defined as the solution of a joint least squares minimization problem, i.e. \(\; (\alpha _1,\alpha _2) = \arg \min _{\alpha _1,\alpha _2 \in [0,1]} \Vert X-X_2\Vert _2^2,\;\) or as the solutions of two separate minimization problems solved independently at each step of the proposed procedure, i.e. \(\; \alpha _1 = \arg \min _{\alpha _1 \in [0,1]} \Vert X - X_1\Vert _2^2\;\) and \(\;\alpha _2 = \arg \min _{\alpha _2 \in [0,1]} \Vert X - X_2\Vert _2^2\).
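The two-step cascade with separately minimized weights can be sketched as follows. This is a minimal illustration assuming NumPy and a known clean image (an oracle setting); the helper names `convex_step`, `oracle_alpha` and `mse` are hypothetical, and the arrays `x_hat` and `x_bar` are synthetic stand-ins with independent errors, not actual BM3D or Wiener outputs.

```python
import numpy as np

def convex_step(prev, other, alpha):
    """One cascade step: (1 - alpha) * prev + alpha * other."""
    return (1.0 - alpha) * prev + alpha * other

def oracle_alpha(clean, prev, other):
    """Least-squares weight in [0, 1] for one step, given the clean image."""
    diff = other - prev
    energy = float(np.sum(diff * diff))
    if energy == 0.0:          # both images coincide: any weight gives the same result
        return 0.0
    alpha = float(np.sum((clean - prev) * diff)) / energy
    return min(1.0, max(0.0, alpha))   # clip the unconstrained minimiser to [0, 1]

def mse(a, b):
    """Mean squared error between two images."""
    return float(np.mean((a - b) ** 2))

rng = np.random.default_rng(0)
clean = np.tile(np.linspace(0.0, 255.0, 64), (64, 1))   # synthetic ramp image
noisy = clean + rng.normal(0.0, 20.0, clean.shape)      # Y = X + N
x_hat = clean + rng.normal(0.0, 6.0, clean.shape)       # stand-in for a non-local output
x_bar = clean + rng.normal(0.0, 9.0, clean.shape)       # stand-in for a local output

alpha1 = oracle_alpha(clean, x_hat, noisy)
x1 = convex_step(x_hat, noisy, alpha1)                  # first step: X_hat with Y
alpha2 = oracle_alpha(clean, x1, x_bar)
x2 = convex_step(x1, x_bar, alpha2)                     # second step: X_1 with X_bar

print(f"alpha1={alpha1:.3f} alpha2={alpha2:.3f}")
print(f"MSE: x_hat={mse(x_hat, clean):.2f} x1={mse(x1, clean):.2f} x2={mse(x2, clean):.2f}")
```

Because a weight of zero is always admissible, each oracle step can only keep or reduce the squared error of the previous image; how much is gained on real denoiser outputs depends on how their errors are correlated, which this synthetic example does not model.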

Unfortunately, as shown in the Appendix, these estimates depend on the original image through terms that cannot, in general, be considered negligible. For \(\alpha _1\), however, the independence of the noise from the original image allows us to obtain the following reliable estimate:

\[ \alpha _1 = 1 - \frac{\sigma ^2 |\varOmega |}{\Vert Y - \hat{X}\Vert _2^2}. \tag{8} \]

This is not the case for \(\alpha _2\), where the term \(\frac{\langle N, \bar{X} - X_1\rangle }{\Vert \bar{X} - X_1\Vert _2^2}\) derived in the Appendix is not negligible and its robust estimation is not trivial. In the following, an estimation method based on the Minimum Description Length (MDL) principle is proposed for \(\alpha _2\).

2.1 MDL for \(\alpha _2\) Estimation

MDL is based on the rationale that, during a compression phase, the distortion between the compressed signal and the original one should be kept low; at the same time, the description of the compressed signal should use as few bits as possible. Since these two objectives are conflicting, a suitable criterion for reaching a compromise is needed, such as Rissanen’s MDL principle [8]. Let us fix a class of models from which to select the one that best represents the data. According to the MDL principle, given a sequence of observations, the best model is the one that yields the shortest description length for describing the data using the model; the description length can be interpreted as the number of bits needed to encode it. This description can be given through a two-part code: the first part describes the model, the second describes the data using the model. In our case, \(X_1\) represents the data (observations) and we wish to find a model \(M(\alpha _2)\) that describes it according to Eq. (7). \(M(\alpha _2)\) minimizes the following two-part code-length:

\[ \alpha _2 = \arg \min _{\alpha _2 \in [0,1]} L(X_1, M(\alpha _2)), \tag{9} \]

where \(L(X_1,M(\alpha _2)) = L(X_1|M(\alpha _2)) + \lambda L(M(\alpha _2))\) and \(\lambda \) is a parameter that balances the two terms. Using PSNR as error measure, \(\; L(X_1|M(\alpha _2)) = 10 \log _{10}\frac{255^2}{\sum \sum (X_1 - X_{2,\alpha _2})^2 }, \) where \(X_{2,\alpha _2}\) is given by Eq. (7) for a given \(\alpha _2\). \(L(M(\alpha _2))\) gives the bits necessary to encode \(X_2\), i.e. the bits necessary to code \(X_1\), \(\alpha _2 \bar{X}\) and the parameter \(\alpha _2\). Note that the bit budget required by \(\alpha _2\) is constant and can be neglected in the minimization. To make the minimization process automatic (choice of \(\lambda \)), PSNR is given with a precision of two decimal digits; B is the bit budget for \(X_1\), while just \(\alpha _2 B\) bits are required for \(\alpha _2 \bar{X}\). Hence, two decimal digits have been considered for \(L(M(\alpha _2))\) as well. The parameter \(\lambda \) is then set as the ratio between the maximum of \(L(X_1|M(\alpha _2))\) and the maximum of \(L(M(\alpha _2))\) with respect to \(\alpha _2 \in [0,1]\).

3 Experimental Results and Concluding Remarks

The proposed model, namely WN-BM3D, has been tested on several images corrupted by zero-mean Gaussian noise with standard deviation ranging from 5 to 100. In this paper we show the results on two \(512 \times 512\) test images with 8 bits per pixel: Lena and Fingerprint. Results have been evaluated in terms of Peak Signal to Noise Ratio (PSNR) and Structural SIMilarity index (SSIM). Table 1 refers to the Lena image and shows the values of the parameters \(\alpha _1\) and \(\alpha _2\) when two distinct oracle minimization procedures are performed. Oracle means that the original image is known and each minimization reaches the minimum MSE. The PSNR after the first step (Eq. (6)) and the second one (Eq. (7)), as well as the PSNR increase with respect to BM3D, are shown. As can be observed, the PSNR increase is up to 1.38 dB and it grows as the noise standard deviation decreases. Table 1 also gives the values of \(\alpha _1\) and \(\alpha _2\) estimated using Eqs. (8) and (9), respectively. As can be observed, the proposed method reaches PSNR values that are very close to the oracle ones, confirming the robustness of the proposed minimization procedures. It is worth observing that, as the noise standard deviation increases, the second step is the one that contributes more to the refinement of the BM3D image.

Table 2 compares WN-BM3D and BM3D in terms of PSNR and SSIM for both the Lena and Fingerprint images. WN-BM3D outperforms BM3D for both images and for all values of \(\sigma \). In particular, on more difficult images (where the edge density is higher) like Fingerprint, the PSNR increase provided by WN-BM3D is even higher. This may be explained by considering that, on such images, a patch-based non-local denoiser is not able to find ‘faithful’ patches for a given point, as the image is very irregular and sharp. In this case, an injection of local regularization can help to improve the final result. Figure 2 allows us to evaluate the visual quality of the final images. As can be observed, the proposed method provides images that show reduced over-smoothing in flat regions. Finally, it is worth pointing out that each step of WN-BM3D has been optimized with respect to PSNR. That is why SSIM may be worse than that of BM3D for higher noise levels. However, as Table 2 shows, by selecting the Wiener filter iterated twice as the local denoiser, the proposed method, namely 2WN-BM3D, is able to provide better results even with respect to SSIM for higher noise levels.

The aim of future research will be twofold. On the one hand, the properties of the local methods to be used in the proposed iterative residual procedure will be studied; on the other hand, starting from the empirical observation that the pointwise solution of a non-local method can be successfully replaced by the one provided by a traditional local method or by the noisy datum itself, methods for the selection of the best pointwise solution will be investigated. The latter goal will enable the proposed method not only to improve denoising results but also to provide considerable computational savings with respect to non-local methods.
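For reference, the PSNR figures and PSNR increases discussed in this section can be computed as in the following sketch. It assumes NumPy and 8-bit images (peak value 255); the function names `psnr` and `psnr_gain` and the constant-error test images are hypothetical and serve only to show the arithmetic.

```python
import math
import numpy as np

def psnr(reference, estimate, peak=255.0):
    """PSNR in dB for images with the given peak value (255 for 8-bit data)."""
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    mse = float(np.mean((ref - est) ** 2))
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse)

def psnr_gain(reference, baseline, refined):
    """PSNR increase (dB) of `refined` over `baseline`."""
    return psnr(reference, refined) - psnr(reference, baseline)

reference = np.zeros((4, 4))
baseline = np.full((4, 4), 2.0)   # constant error of 2 grey levels
refined = np.full((4, 4), 1.0)    # constant error of 1 grey level
gain = psnr_gain(reference, baseline, refined)
print(round(gain, 2))  # 6.02
```

Halving the error amplitude divides the MSE by four, which corresponds to a gain of \(20 \log _{10} 2 \approx 6.02\) dB; a gain of about 1 dB corresponds to an MSE reduction of roughly 20%.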

4 Appendix

Joint Minimization. By putting Eq. (6) into Eq. (7) we get \(\Vert X_2-X\Vert _2^2 = \Vert \hat{X} + \alpha _1 (1 - \alpha _2) (Y-\hat{X}) + \alpha _2 (\bar{X} - \hat{X}) - X\Vert _2^2. \) By imposing \(\frac{\partial \Vert X_2-X\Vert _2^2}{\partial \alpha _1} = 0\) and \(\frac{\partial \Vert X_2-X\Vert _2^2}{\partial \alpha _2} = 0 \), and denoting by \(\langle \cdot ,\cdot \rangle \) the scalar product, some algebra gives

\(\alpha _1 = - \frac{\langle \hat{X} - X + \alpha _2 (\bar{X}-\hat{X}), \bar{X}-\hat{X}\rangle }{(1-\alpha _2) \langle Y-\hat{X}, \bar{X}-\hat{X}\rangle }\) and \(\alpha _2 = \frac{\frac{\langle \hat{X} - X,Y-\hat{X}\rangle }{\Vert Y-\hat{X}\Vert _2^2} - \frac{\langle \hat{X} - X,\bar{X}-\hat{X}\rangle }{\langle Y-\hat{X},\bar{X}-\hat{X}\rangle } }{\frac{\Vert \bar{X}-\hat{X}\Vert _2^2}{\langle Y-\hat{X},\bar{X}-\hat{X}\rangle } - \frac{\langle \bar{X}-\hat{X},Y-\hat{X}\rangle }{\Vert Y-\hat{X}\Vert _2^2}}\)

\(\alpha _1\) estimation. By using Eq. (1) and setting \(\hat{X} = X + N_0\), where \(N_0 = \hat{X}-X \) is the BM3D approximation error, we have \(\frac{d \Vert X-X_1\Vert _2^2}{d\alpha _1} = 0 \Leftrightarrow \) \(\alpha _1 = \frac{\langle X - \hat{X},Y- \hat{X}\rangle }{\Vert Y- \hat{X}\Vert _2^2}= \frac{\langle Y - \hat{X}- N,Y- \hat{X}\rangle }{\Vert Y- \hat{X}\Vert _2^2} = 1 - \frac{\Vert N\Vert _2^2}{\Vert Y- \hat{X}\Vert _2^2} + \frac{\langle N, N_0\rangle }{\Vert Y- \hat{X}\Vert _2^2 } \simeq 1 - \frac{\sigma ^2 |\varOmega |}{\Vert Y- \hat{X}\Vert _2^2}, \; \) where \(\Vert N\Vert _2^2\) has been approximated by \(\sigma ^2 |\varOmega |\) and the term \(\frac{\langle N, N_0\rangle }{\Vert Y- \hat{X}\Vert _2^2} \) has been neglected since it is close to zero, as N is independent of X and nearly globally independent of \(\hat{X}\).
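The resulting estimate of \(\alpha _1\) needs only the noisy image, the non-local output and the noise standard deviation. A minimal sketch, assuming NumPy and a known \(\sigma \); the function name `estimate_alpha1` is hypothetical, and clipping the value to \([0,1]\) is an assumption added here to keep the combination convex.

```python
import numpy as np

def estimate_alpha1(noisy, nonlocal_est, sigma):
    """Estimate alpha_1 as 1 - sigma^2 * |Omega| / ||Y - X_hat||^2, clipped to [0, 1]."""
    residual_energy = float(np.sum((noisy - nonlocal_est) ** 2))
    if residual_energy == 0.0:     # Y and X_hat coincide: the weight has no effect
        return 0.0
    alpha1 = 1.0 - (sigma ** 2) * noisy.size / residual_energy
    return min(1.0, max(0.0, alpha1))   # clipping is an assumption of this sketch

# Deterministic check: |Omega| = 4, sigma = 1, ||Y - X_hat||^2 = 16 -> 1 - 4/16
noisy = np.full((2, 2), 2.0)
nonlocal_est = np.zeros((2, 2))
alpha1 = estimate_alpha1(noisy, nonlocal_est, sigma=1.0)
print(alpha1)  # 0.75
```

As a sanity check on the formula, if the non-local output were exactly the clean image, the residual \(Y - \hat{X}\) would be the noise itself, its energy would be close to \(\sigma ^2 |\varOmega |\), and the estimate would be close to zero, i.e. nothing would be taken back from the noisy image.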

\(\alpha _2\) estimation. \(\frac{d \Vert X-X_2\Vert _2^2}{d\alpha _2} = 0 \Leftrightarrow \alpha _2 = \frac{\langle X - X_1,\bar{X} - X_1\rangle }{\Vert \bar{X} - X_1\Vert _2^2} =\) \(\frac{\langle Y - X_1,\bar{X} - X_1\rangle }{\Vert \bar{X} - X_1\Vert _2^2} - \frac{\langle N,\bar{X} - X_1\rangle }{\Vert \bar{X} - X_1\Vert _2^2}.\) Unfortunately, the last term is neither known nor negligible.
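Both single-step expressions above come from the same short computation. As a sketch, write a generic step as \(X_n = X_{n-1} + \alpha (Z - X_{n-1})\), where \(Z\) is a symbol introduced here only for illustration and stands for the second image of the pair; the squared error is then a quadratic function of \(\alpha \):

```latex
\begin{aligned}
J(\alpha) &= \Vert X - X_{n-1} - \alpha (Z - X_{n-1}) \Vert_2^2 \\
          &= \Vert X - X_{n-1} \Vert_2^2
             - 2\alpha \langle X - X_{n-1}, Z - X_{n-1} \rangle
             + \alpha^2 \Vert Z - X_{n-1} \Vert_2^2, \\
J'(\alpha) = 0 \;&\Leftrightarrow\;
  \alpha = \frac{\langle X - X_{n-1}, Z - X_{n-1} \rangle}{\Vert Z - X_{n-1} \Vert_2^2}.
\end{aligned}
```

Taking \((X_{n-1}, Z) = (\hat{X}, Y)\) gives the expression for \(\alpha _1\), and \((X_1, \bar{X})\) gives the one for \(\alpha _2\). Since \(J\) is convex in \(\alpha \), restricting \(\alpha \) to \([0,1]\) amounts to clipping this value to the interval.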

References

1. Brunet, D., Vrscay, E.R., Wang, Z.: The use of residuals in image denoising. In: Kamel, M., Campilho, A. (eds.) ICIAR 2009. LNCS, vol. 5627, pp. 1–12. Springer, Heidelberg (2009). https://doi.org/10.1007/978-3-642-02611-9_1

2. Bruni, V., Panella, D., Vitulano, D.: Non local means image denoising using noise-adaptive SSIM. In: Proceedings of IEEE EUSIPCO (2015)

3. Buades, A., Coll, B., Morel, J.: A review of image denoising algorithms, with a new one. Multiscale Model. Simul. 4(2), 490–530 (2005)

4. Chaudhury, K.: Acceleration of the shiftable O(1) algorithm for bilateral filtering and non-local means. IEEE Trans. Image Process. 22, 1291–1300 (2012)

5. Dabov, K., Foi, A., Katkovnik, V., Egiazarian, K.: Image denoising by sparse 3D transform-domain collaborative filtering. IEEE Trans. Image Process. 16(8), 2080–2095 (2007)

6. Deledalle, C., Duval, V., Salmon, J.: Non-local methods with shape-adaptive patches (NLM-SAP). J. Math. Imaging Vis. 43(2), 103–120 (2012)

7. Feng, X., Li, X., Wang, W., Jia, X.: Improvement of BM3D algorithm based on wavelet and directed diffusion. In: Proceedings of International Conference on Machine Vision and Information Technology (CMVIT) (2017)

8. Grunwald, P.D.: A tutorial introduction to the minimum description length principle. In: Grunwald, P.D., Myung, J., Pitt, M.A. (eds.) Advances in Minimum Description Length: Theory and Applications. MIT Press, Cambridge (2004)

9. Hou, Y.K., Zhao, C.X., Yang, D.Y., Cheng, Y.: Comments on image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 20(1), 268–270 (2011)

10. Pizurica, A.: Image denoising algorithms: from wavelet shrinkage to nonlocal collaborative filtering. In: Wiley Encyclopedia of Electrical and Electronics Engineering (2017)

11. Vignesh, R., Oh, B.T., Kuo, C.C.: Fast non-local means (NLM) computation with probabilistic early termination. IEEE Signal Process. Lett. 17(3), 277–280 (2010)

12. Zhong, H., Ma, K., Zhou, Y.: Modified BM3D algorithm for image denoising using nonlocal centralization prior. Signal Process. 106, 342–347 (2015)

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Bruni, V., Vitulano, D. (2018). Image Denoising Using Collaborative Patch-Based and Local Methods. In: Mansouri, A., El Moataz, A., Nouboud, F., Mammass, D. (eds) Image and Signal Processing. ICISP 2018. Lecture Notes in Computer Science(), vol 10884. Springer, Cham. https://doi.org/10.1007/978-3-319-94211-7_4

DOI: https://doi.org/10.1007/978-3-319-94211-7_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-94210-0

Online ISBN: 978-3-319-94211-7

eBook Packages: Computer Science (R0)