Abstract

Logistic regression as implemented in PLINK is a powerful and commonly used framework for assessing gene-gene (GxG) interactions. However, fitting regression models for each pair of markers in a genome-wide dataset is a computationally intensive task. Performing billions of tests with PLINK takes days if not weeks, for which reason pre-filtering techniques and fast epistasis screenings are applied to reduce the computational burden.

Here, we demonstrate that employing a combination of a Xilinx UltraScale KU115 FPGA with an Nvidia Tesla P100 GPU leads to runtimes of only minutes for logistic regression GxG tests on a genome-wide scale. In particular, a dataset of 53,000 samples genotyped at 130,000 SNPs was analyzed in 8 min, resulting in a speedup of more than 1,000 when compared to PLINK v1.9 using 32 threads on a server-grade computing platform. Furthermore, on-the-fly calculation of test statistics, p-values and LD-scores in double-precision make commonly used pre-filtering strategies obsolete.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

- Genome-wide association study (GWAS)

- Genome-wide interaction study (GWIS)

- Gene-gene (GxG) interaction

- Linkage disequilibrium (LD)

- BOOST

- Hardware accelerator

- Hybrid computing

- Heterogeneous architecture

1 Introduction

Gene-gene (GxG) interactions (epistasis) are believed to be a significant source of unexplained genetic variation causing complex chronic diseases. Several studies provided evidence for statistical GxG interaction between the top disease-associated single nucleotide polymorphisms (SNPs) of complex chronic diseases, including ankylosing spondylitis [21], Behçet’s disease [15], type 2 diabetes [14], and psoriasis [6]. Particularly, in psoriasis a significant interaction (\(p=6.95\times 10^{-6}\)) as measured by logistic regression has been detected between the genes ERAP1 (rs27524) and HLA-C (rs10484554). The biological consequence of this interaction is that the ERAP1 SNP only has an effect in individuals carrying at least one copy of the risk allele at the HLA-C SNP.

In general, detection of GxG interactions poses a great challenge for genome-wide association studies (GWAS) due to the computational burden of testing billions of pairs of SNPs (as a result of the number of tests being quadratic in the number of SNPs). Traditional logistic regression analysis is still the gold-standard to detect statistical GxG interactions in case/control studies, but too slow in practice to screen for GxG interactions on a genome-wide scale. Thus, many approximate methods for epistasis screening have been proposed applying a variety of heuristic and filtering techniques to conduct genome-wide interaction studies (GWIS) in a reasonable amount of time. Well-established methods include the Kirkwood Superposition Approximation (KSA) of the Kullback-Leibler divergence implemented in BOOST [23] as well as the joint effects test introduced by Ueki et al. [22]. Another exhaustive interaction method, called GWIS [7], employs a permutation-based approach to calibrate test statistics. Similarly, MBMDR [3] uses permutations to adjust the p-value of the significance test. However, it is able to reduce the dimensionality of any problem into one dimension categorizing into high-risk, low-risk and no evidence groups before calculating a chi-squared test statistic. Other tools defining different test statistics include BiForce [8], iLOCi [18] and EDCF [27]. The latter uses a clustering approach in order to reduce the computational burden. Recently, entropy-based measures for GxG interaction detection gained increasing attention. A well-written overview can be found in [5].

However, no convincing GxG loci have been identified exclusively from GWIS using these approaches. Many of the methods derive an upper bound on the test statistic in order to prune the search space and conduct follow-up model-fitting analysis using logistic regression on a pre-filtered subset of pairs [24]. Furthermore, the computational load for preliminary epistasis screenings is not negligible. Accordingly, several tools emerged to speedup this process employing hardware accelerators, such as GPUs in GBOOST [28] or SHEsisEpi [9]. Another way to reduce the computational burden is to reduce the number of SNPs in advance by pre-filtering for linkage disequilibrium (LD), although it can be shown that SNPs supposed to be in LD may also reveal an interaction effect [2, 10].

An attempt to reduce the computational load for logistic regression tests is made in [17] by using GLIDE [11]. To our knowledge, GLIDE is the fastest currently available GPU implementation of the logistic regression GxG interaction test. More recently, CARAT-GxG [16] emerged. It also offers linear regression including covariate analysis on GPUs, but provides a poor performance when compared to GLIDE (12 days for a dataset containing 500,000 SNPs and not more than 1,000 samples using 32 Nvidia Tesla M2070 GPUs).

In this paper, we show that we are able to perform an exhaustive genome-wide logistic regression analysis for SNP-SNP interactions on datasets consisting of hundreds of thousands of SNPs and tens of thousands of samples in minutes, thus eliminating the needs for epistasis screening or LD-filtering as a preprocessing step. If required, LD-filtering can directly be applied as a postprocessing step, thanks to on-the-fly calculation of \(r^2\). Furthermore, we perform our calculations in double-precision floating point format in order to overcome precision problems that may occur during floating point accumulations.

We run our benchmark against PLINK v1.9 using 32 threads on a computing system with two Intel Xeon E5-2667v4 eight-core CPUs. We already gain a 10--11 times speedup by sacrificing the support for sample covariates (re-enabling it in our method is still under development) and adapting the logistic regression test to be used with contingency tables. This reduces the computational complexity from \(\mathcal {O}(NT)\) to \(\mathcal {O}(N+T)\) (with N indicating the number of samples and T the number of iterations required for a single test). By harnessing a combination of only two hardware accelerators, namely a Xilinx Kintex UltraScale KU115 FPGA and an Nvidia Tesla P100 GPU, we gain another 100 times speedup resulting in a total of >1,000 times speedup compared to multi-threaded PLINK on a server-grade platform. Exemplary, for analyzing a dataset consisting of 130k SNPs and 53k samples our method requires only 8 min while PLINK running with 32 threads almost requires 6 days. Finally, it turns out that our method is even more than 300 times faster than GLIDE, which harnesses 12 Nvidia GTX 580 GPUs.

2 Pairwise Epistasis Testing

2.1 Logistic Regression Test

In this article we address the efficient implementation of a genotype-based statistical test for binary traits. Let Y be a random variable correlated with the trait. Correspondingly, for the trait being a disease, we define the two possible outcomes of Y as \(Y=1\) if the sample is a case affected by the disease, and \(Y=0\) if the samples is a control unaffected by the disease. Furthermore, for a pairwise test, we define \(X_A\) and \(X_B\) as random variables correlated with the observation of genotypes at SNPs A and B, respectively. The possible outcomes of \(X_{A/B}\) are \(g_{A/B} \in \{0,1,2\}\) representing the observed genotype (0 = homozygous reference, 1 = heterozygous, 2 = homozygous variant). PLINK [4, 20] uses the following multiplicative logistic regression affection model with \(\beta _3\) indicating the interaction effect of SNPs A and B.

PLINK employs Newton’s method to iteratively obtain ML estimates of the model parameters. It firstly generates a covariate matrix \(\mathbf C\) with entries \(C_{ij}\) whereby i indicates a sample of the input dataset and \(j\in {0,1,2,3}\) indicates a column for each \(\beta _j\). The matrix is defined as follows:

In detail, for a variable number of iterations \(t = 0,\ldots ,T-1\), fitting the vector \(\varvec{\beta }\) is performed in a stepwise manner. \(\varvec{\beta }^{(0)}\) is initialized with \(\beta _{j}^{(0)}=0\ \forall j\) for the first iteration \(t=0\).

-

1.

For each sample i, compute intermediate variables

$$\begin{aligned} p_i^{(t)} = \bar{p}_i^{(t)} - y_i \quad \text {and} \quad v_i^{(t)} = \bar{p}_i^{(t)} \left( 1 - \bar{p}_i^{(t)}\right) \end{aligned}$$(3)where

$$\begin{aligned} \bar{p}_i^{(t)} = \left( 1+\exp \left( -\sum \limits _j\beta _j^{(t)}C_{ij}\right) \right) ^{-1}. \end{aligned}$$(4) -

2.

Compute gradient

$$\begin{aligned} \varvec{\nabla }^{(t)}=\left\{ \nabla _j^{(t)}\right\} _{j=0}^3 = \sum _i C_{ij}p_i^{(t)}. \end{aligned}$$(5) -

3.

Compute Hessian matrix

$$\begin{aligned} \varvec{H}^{(t)} = \left\{ h_{jk}^{(t)}\right\} _{j,k=0}^3 = \left\{ \begin{array}{ll} 0 &{} \text {if } k>j\\ \sum \limits _iC_{ij}C_{ik}v_i^{(t)} &{} \text {if } k \le j \end{array}\right. . \end{aligned}$$(6) -

4.

Compute \({\varvec{\varDelta }\varvec{\beta }}^{(t)}=\left\{ \varDelta \beta _j^{(t)}\right\} _{j=0}^3\) by efficiently solving the linear system

$$\begin{aligned} {\varvec{H}^ {(t)}}{\varvec{\varDelta }\varvec{\beta }}^{(t)} = {\varvec{\nabla }}^{(t)} \end{aligned}$$(7)using the Cholesky decomposition of \({\varvec{H}}^{(t)}\).

-

5.

Update model parameters

$$\begin{aligned} {\varvec{\beta }}^{(t+1)} \leftarrow {\varvec{\beta }}^{(t)} - {\varvec{\varDelta }\beta }^{(t)}. \end{aligned}$$(8)

If \(\sum _j\varDelta \beta _j^{(t)}\) approaches zero, i.e. there is no more significant change, the process stops with \(\varvec{\beta }^{(t+1)}\) as the current result. Otherwise, the next iteration is started with step 1. However, if the change does not converge to zero, the process stops after a fixed number of iterations. PLINK uses at maximum 16 iterations and a close-to-zero threshold of 0.0001. Additional tests for convergence failure are implemented but omitted here for the sake of brevity.

The result of the logistic regression test in PLINK is composed of three components, namely the test statistic, its approximate p-value and the odds-ratio. The test statistic \(\chi ^2\) is calculated as

\(\varepsilon \) is the standard error for the \(g_Ag_B\)-term in (1). It can directly be determined by solving the linear system \(\varvec{H}^{(t)} \varvec{e} = (0,0,0,1)\) and defining \(\varepsilon ^2 = e_3\).

According to PLINK, the test statistic is assumed to follow a chi-squared distribution \(\chi ^2_1\) with one degree of freedom, which implies that the approximate p-value can directly be determined from its cumulative distribution function. Finally, the odds-ratio is defined as \(e^{\beta _3}\).

Obviously, steps 1 to 3 in each iteration have linear complexity in N, i.e. \(\mathcal {O}(N)\) whereby N is the number of samples. Let T be the number of iterations, then \(\mathcal {O}(NT)\) is the total complexity for a single test. In the next Sects. 2.2 and 2.3, we show how to do a linear precomputing step to generate a contingency table and how to apply the contingency table in the logistic regression test, which results in a constant computation complexity for each iteration.

2.2 Contingency Tables

For any SNP pair (A, B) a contingency table represents the number of samples in a dataset that carry a specific genotype information. In particular, an entry \(n_{ij}\) represents the number of samples that carry the information \(g_A = i\) at SNP A and \(g_B = j\) at SNP B. Thus, a contingency table for pairwise genotypic tests contains \(3\times 3\) entries. Since we are focusing on binary traits, we require a contingency table for each state, w.l.o.g. one for the case and control group, respectively, and denote their entries by \(n^{\text {case}}_{ij}\) and \(n^{\text {ctrl}}_{ij}\) (see Fig. 1).

For a given SNP pair generating the contingency tables is clearly linear in the number of samples. In the next section (Sect. 2.3) we show how to incorporate contingency tables into logistic regression.

2.3 Logistic Regression with Contingency Tables

The information in the contingency tables for case and control group can be used to simplify steps 1 to 3 in Sect. 2.1. Steps 4 and 5 as well as the calculation of the test statistic, the odds-ratio and the p-value remain the same.

-

1.

From a given contingency table we compute the following intermediate variables.

$$\begin{aligned} p_{ij}^{(t)} = \left( 1+\exp \left( -\left( \beta _0^{(t)} + i\beta _1^{(t)} + j\beta _2^{(t)} + ij\beta _3^{(t)}\right) \right) \right) ^{-1} \end{aligned}$$(10)$$\begin{aligned} p^{(t),\text {ctrl}}_{ij} = p_{ij}^{(t)},\quad p^{(t),\text {case}}_{ij} = p_{ij}^{(t)} - 1,\quad v_{ij}^{(t)} = p_{ij}^{(t)}\left( 1-p_{ij}^{(t)}\right) \left( n^{\text {case}}_{ij} + n^{\text {ctrl}}_{ij}\right) \end{aligned}$$(11) -

2.

The gradient \(\varvec{\nabla }^{(t)}\) from (5) can now be computed as

$$\begin{aligned} \varvec{\nabla }^{(t)}=\left( \sum \limits _{ij}N_{ij}^{(t)}, \sum \limits _{ij}iN_{ij}^{(t)}, \sum \limits _{ij}jN_{ij}^{(t)}, \sum \limits _{ij}ijN_{ij}^{(t)} \right) \end{aligned}$$(12)where

$$\begin{aligned} N_{ij}^{(t)}=\left( n^{\text {case}}_{ij}p^{(t),\text {case}}_{ij} + n^{\text {ctrl}}_{ij}p^{(t),\text {ctrl}}_{ij}\right) \end{aligned}$$(13) -

3.

The Hessian matrix \(\varvec{H}^{(t)}\) from (6) evaluates to

$$\begin{aligned} \varvec{H}^{(t)} = \left\{ h_{pq}^{(t)}\right\} _{p,q=0}^3 = \left( \begin{array}{llll} \sum v_{ij}^{(t)} &{} 0 &{} 0 &{} 0 \\ \sum iv_{ij}^{(t)} &{} \sum i^2v_{ij}^{(t)} &{} 0 &{} 0\\ \sum jv_{ij}^{(t)} &{} \sum ijv_{ij}^{(t)} &{} \sum j^2v_{ij}^{(t)} &{} 0\\ \sum ijv_{ij}^{(t)} &{} \sum i^2jv_{ij}^{(t)} &{} \sum ij^2v_{ij}^{(t)} &{} \sum i^2j^2v_{ij}^{(t)} \end{array}\right) \end{aligned}$$(14)where each sum is evaluated over all indexes i and j.

Obviously, the complexity of each iteration step is now constant, i.e. \(\mathcal {O}(1)\). As in Sect. 2.1, let N be the total number of samples and T the number of iterations. We recall the complexity of the method used by PLINK with \(\mathcal {O}(NT)\). Our proposed method improves this complexity to \(\mathcal {O}(N+T)\) which can directly be observed in a significant increase in computation speed (see Sect. 4).

2.4 Linkage Disequilibrium

Our ultimate aim is the exhaustive testing of all SNP pairs on a genome-wide scale without pre-filtering with regard to linkage disequilibrium (LD). However, to be able to apply posthoc LD filtering we compute the \(r^2\)-score on-the-fly. \(r^2\) is a measure of similarity between two SNPs. It is defined as

D is the distance between the observed allele frequency \(p_{AB}\) at loci A and B and the expected allele frequency \(p_A p_B\) assuming statistical independence. Thus, \(r^2\) is a normalized measure for D which can be used for comparison of different SNP pairs. The allele frequencies \(p_A\) and \(p_B\) can directly be determined as

whereby \(n_{ij} = n^{\text {case}}_{ij} + n^{\text {ctrl}}_{ij}\) for all i, j. Unfortunately, the determination of the allele frequency \(p_{AB}\) from genotypic data is not straightforward. This is due to the unknown phase when two heterozygous genotypes face each other in a SNP pair. Basically, it can be defined as

with x meeting \(x\le n_{11}\). x has to satisfy the following equation whose solution is omitted here for simplicity:

where \(f_{ij}\) is the number of allele combinations ij we know for sure, e.g. \(f_{00} = 2n_{00} + n_{01} + n_{10}\) and \(f_{11} = 2n_{22} + n_{21} + n_{12}\).

PLINK does not compute the \(r^2\)-score jointly with the logistic regression test. However, one can create a table of \(r^2\) scores explicitly for all pairs of a given range (--r2 switch), or compute the \(r^2\)-score for a single pair (--ld switch). This process is in linear complexity for each pair of SNPs to determine the respective allele frequencies. In comparison, we are using the information of the precomputed contingency table which allows us to calculate the \(r^2\)-score in constant time.

3 Implementation

3.1 Heterogeneous FPGA-GPU Computing Architecture

Our implementation targets a heterogeneous FPGA-GPU computing architecture. We improved our architecture proposed in [26] by adding high-end off-the-shelf components, namely a server-grade mainboard hosting two Intel Xeon E5-2667v4 8-core CPUs @ 3.2 GHz and 256 GB of RAM, an NVIDIA Tesla P100 GPU, and an Alpha Data ADM-PCIE-8K5 FPGA accelerator card.

The GPU accelerator is equipped with 16 GB of graphics memory and is connected via PCI Express Gen3 x16. The FPGA accelerator hosts a recent Xilinx Kintex UltraScale KU115 FPGA with two attached 8 GB SODIMM memory modules. It is connected via PCI Express Gen3 x8 allowing high-speed communication with the host and the GPU. The system runs a Ubuntu 17.10 Linux OS (Kernel version 4.13).

Due to driver restrictions, it is currently not possible to perform direct peer transfers, i.e. moving data from an FPGA accelerator to a GPU or vice-versa. Therefore, both devices are placed in slots that are served by the same CPU to reduce transmission overhead as described in [13].

According to the PCIe specifications, the net transmission rate between FPGA and GPU is about 7.3 GB/s. This absolutely fits our application demands, such that the transmission interface does not become a bottleneck.

3.2 Task Distribution

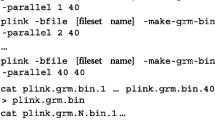

Similar to our method for testing third-order SNP interactions based on information gain [26], we split the application into three subtasks. Firstly, the creation of pairwise contingency tables (see Sect. 2.2) is done by the FPGA module. Secondly, all computations required for the logistic regression test based on the contingency tables are performed by the GPU. And thirdly, the host collects and filters the results created by the GPU.

As before, data transmission between the modules is performed by DMA transfers via PCI Express, and since there is no direct connection between the FPGA and the GPU module, the transmission of the contingency tables is redirected via the host memory.

The input dataset is assumed to be in binary PLINK format, i.e. three files in .bed, .bim, .fam format. The output is in plain text format containing for each result the information on the respective SNP pair (name and ID), \(\chi ^2\) test statistic, odds-ratio, approximate p-value and \(r^2\)-score.

Contingency Table Creation on the FPGA. The FPGA pipeline for contingency table generation is based on our previous work for pairwise [12, 25] and third-order interactions [26]. Thus, we omit details here and only remark the differences.

Shortly summarized, the pipeline consists of a chain of 480 process elements (PEs) divided into two subchains of 240 PEs each. After a short initialization phase, the chain produces 480 contingency tables in parallel while the genotype data of one SNP is streamed through the pipeline at a speed of 266 MHz and 8 genotypes/cycle. This sums up to a peak performance of about 40.8 million contingency tables per second for a dataset containing about 50,000 samples, as used in our performance evaluation in Sect. 4.

In previous publications, we used a sparse contingency table representation lacking support for unknown genotypes. The disadvantage of such a design is, that datasets containing unknown genotypes could not be supported because the assumption that the sum of all entries stays the same over all tables is disproved in the presence of unknowns. In order to remove this limitation, we now transfer complete tables from the FPGA to the GPU. Unfortunately, this increases the transmission rate significantly. Therefore, we encode each table entry into two bytes, i.e. 18 B per table. For each pair of corresponding case and control tables 4 B for the pair ID is added, which accumulates to 40 B per table pair. Hence, the peak transmission rate for the example above is about 816 MB/s, compared to 245 MB/s required for the sparse representation. However, the peak transmission rate of the architecture is 7.3 GB/s according to PCIe Gen3 specifications which theoretically allows us to process datasets down to 5,200 samples without the transmission link becoming the bottleneck.

Processing Contingency Tables on the GPU. The GPU stores the buffers from the FPGA containing contingency tables in graphics memory. We used a transmission buffer size of 256 MB which may hold up to 6.7 million table pairs. The computation process follows a simple parallelization scheme over GPU threads. By setting the block size to the maximum supported block size and the grid size to evenly distribute the contingency tables over the blocks, each thread processes exactly one contingency table pair, and only one kernel call per buffer is required.

Logistic regression and LD computation have been implemented as described in Sects. 2.3 and 2.4. However, in contrast to PLINK, we use the double precision floating point format in all our computations. The output is written into a result buffer. We provide one result buffer for each table transmission buffer, which is transferred to the host as soon as processing a table buffer has finished.

By evenly distributing the contingency tables over the blocks, we most likely introduce an unequal load resulting from a varying number of Newton iterations per thread. However, the average number of iterations per block remains virtually constant.

Transmission Buffer Management and Result Collection on the Host. We used a similar transmission buffer management as presented in [26], but introduced some improvements. In order to reduce transmission overhead, we used different adapted buffer sizes for contingency table transmission between FPGA and GPU, and result transmission from GPU to host. We used a transmission buffer size of 256 MB for contingency tables leading to 230.4 MB for results (reserving space for one result per contingency table pair). As before, the buffers are page-locked to ensure a fast transmission without delay, and the number of buffers allocated for each connection is equal (eight per default).

Multiple threads on the host system perform the collection of results by filtering by a given significance threshold and finally providing them sorted with regard to the test statistic. For this purpose, the min-max fine heap data structure [1, 13] is employed. Each thread keeps its own instance of a min-max heap to avoid lock conditions and inserts a result only if the test statistic exceeds the threshold. Then, the output file is composed by iteratively extracting the single best result over all heaps until the heaps are drained or the number of requested results is reached, whichever occurs first.

The complete workflow on our heterogeneous FPGA-GPU-based architecture is illustrated in Fig. 2.

Workflow on our heterogeneous system. 1. Genotypic data is sent to the FPGA. 2. For each pair of SNPs the FPGA creates contingency tables. 3. The contingency tables are sent to the GPU employing a memory buffer on the host. 4. The GPU calculates the logistic regression and LD. 5. Results (p-value, odds-ratio and LD-score) are transferred back to host. 6. Result are filtered using a min-max heap on host.

4 Performance Evaluation

For performance evaluation we prepared six datasets based on in-house cohorts. Dataset “A” and “B” contain 14,513 and 19,085 cases of autoimmune diseases, respectively, and share a common collection of 34,213 healthy controls. Modified instances of sets “A” and “B” were generated by applying an LD filter with \(r^2\)-threshold of 0.2, resulting in sets “A LD” and “B LD”, respectively. Furthermore, we reduced the latter two datasets to only comprise SNPs located on chromosomes 5 and 6. The resulting sets have been denoted by “A LD chr5,6” and “B LD chr5,6”. An overview of these datasets can be found in Table 1.

Our target system was the architecture described in Sect. 3.1. We compiled our implementation with GCC 5.4.1 and CUDA 9.0. The FPGA code was written in VHDL and compiled with Xilinx Vivado 2017.3. For comparison, we used the to date most recent 64-bit PLINK v1.9 built published on Jan 9th, 2018. We ran PLINK on all six datasets in two ways. Firstly, we computed the standard logistic regression tests with flags --epistasis --epi1 5e-8 which filters the results by a genome-wide significance threshold of \(5\cdot 10^{-8}\) according to the approximate p-value. Secondly, we applied a faster epistasis screening test with the BOOST [23] method (included in PLINK) with the same threshold flag, but replacing --epistasis with --fast-epistasis boost. Both runs used all available 32 threads (--threads) on our 2x Intel Xeon E5-2667v4 system.

We ran our implementation from a hybrid built, i.e. using both accelerators (Xilinx Kintex UltraScale KU115 FPGA and Nvidia Tesla P100 GPU) and a CPU-only built using all 32 threads. In contrast to PLINK, our implementations perform all calculations in double-precision floating point format, while PLINK only uses single-precision. Furthermore, we calculated the \(r^2\)-score in order to test for linkage disequilibrium on all SNP pairs, which PLINK does not.

We verified the correctness of our implementation by comparing our results with the PLINK results. At first, we encountered a lot of differences in the score and also in the order. Thus, we modified the source code of PLINK to let it do the calculations in double-precision as well. This modification increased the runtime of PLINK by a factor of about 5.7, but the results were almost exactly equal now, showing that the inconsistencies were caused by the different precisions. We believe the remaining small inconsistencies were due to numerical problems in PLINK when accumulating small floating-point values over all samples in steps 2 and 3 of computing the logistic regression test (see (5) and (6) in Sect. 2.1).

The wall-clock runtimes were measured with the GNU time command and without additional system load. The results are listed in Table 2. The measures demonstrate that by applying our method that reduces runtime complexity by using contingency tables, we can gain a 10--11 times speedup. With an additional application of the combination of two hardware accelerators, namely FPGA and GPU, we gain an additional speedup of about 100, resulting in a total computation speed that it is more than 1,000 times faster than that of PLINK on a server-grade high-end platform. The performance is underlined by the additional burden on our implementation, which is a higher calculation precision and the additional on-the-fly \(r^2\)-score computation, which is not performed by the PLINK software. Furthermore, our full logistic regression test is still almost 7 times faster than the quick but imprecise pre-scanning method BOOST [23].

However, Table 2 also shows, that small datasets, that have a very short runtime on our hybrid system, do not gain a high speedup. The reason is a large overhead for file reading, buffer preparation, device initialization and file output. In particular, these processes take about 10 seconds for the two smallest datasets in our ensemble, which implies a total pipeline run of less than two seconds for the real task. Since this processes cannot be simplified for a single task, we implemented a scheduling system that allows exclusive access to the accelerator pipeline for parallel tasks, but pre- and post-processing can be run concurrently.

We exemplary compared our computational speed to GLIDE [11]. For this purpose, we extrapolated GLIDE’s presented interaction speed to the number of samples used in our evaluation datasets. Combined with the number of interaction tests required for our data, we calculated the runtime of GLIDE for dataset “A” as 44.7 h and for dataset “B” as 48.9 h. This leads to a speedup of 361 and 364 respectively.

5 Conclusions and Future Work

In this paper, we presented two ways of improving performance of PLINK’s logistic regression epistasis test [4, 20]. Firstly, we reduced the computational complexity from \(\mathcal {O}(NT)\) to \(\mathcal {O}(N+T)\) for a single test by introducing contingency tables (see Sect. 2). This already led to a speedup of a factor of more than 10 for all our example datasets, although we were even calculating in double-precision format.

The second improvement was made by applying a two-step hardware acceleration pipeline (see Sect. 3). By generating contingency tables on a Kintex UltraScale KU115 FPGA and computing the logistic regression based on the tables on an Nvidia Tesla P100 GPU, we gained a total speedup of more than 1,000 when compared to the original PLINK v1.9 software run with 32 threads on a server-grade two processor (Intel Xeon E5-2667v4) system.

Furthermore, we demonstrated that by employing contingency tables, the LD-score \(r^2\) can be computed on-the-fly. In combination, this provides a powerful tool for epistasis analysis on large datasets, making LD-filtering deprecated as a pre-processing step.

Consequently, we are able to calculate a full logistic regression test in double-precision format on all pairs of hundreds of thousands of SNPs with tens of thousands of samples in a few minutes and allow to filter the results by score and/or by LD in the post-processing stage.

Currently, our method does not support the use of a covariate matrix as additional user input. However, we are currently working on a solution based on weighted contingency tables in order to be able to incorporate covariate information.

In order to make the system available for the scientific community, we are currently working on a much more powerful successor by enhancing it with three additional Xilinx UltraScale FPGAs and Nvidia Tesla P100 GPUs. Furthermore, we aim to develop a web interface to allow scientists to perform genome-wide epistasis tests on our system.

References

Atkinson, M.D., Sack, J.R., Santori, N., et al.: Min-max heaps and generalized priority queues. Commun. ACM 29(10), 996–1000 (1986)

Bulik-Sullivan, B.K., Loh, P.R., Finucane, H.K., et al.: LD score regression distinguishes confounding from polygenicity in genome-wide association studies. Nat. Genet. 47, 291–295 (2015). https://doi.org/10.1038/ng.3211

Cattaert, T., Calle, M.L., Dudek, S.M., et al.: Model-based multifactor dimensionality reduction for detecting epistasis in case-control data in the presence of noise. Ann. Hum. Genet. 75(1), 78–89 (2011)

Chang, C.C., Chow, C.C., Tellier, L.C., Vattikuti, S., Purcell, S.M., Lee, J.J.: Second-generation PLINK: rising to the challenge of larger and richer datasets. Gigascience 4, 1–16 (2015). https://doi.org/10.1186/s13742-015-0047-8

Ferrario, P.G., König, I.R.: Transferring entropy to the realm of GxG interactions. Briefings in Bioinform., 1–12 (2016). https://doi.org/10.1093/bib/bbw086

Genetic Analysis of Psoriasis Consortium, et al.: A genome-wide association study identifies new psoriasis susceptibility loci and an interaction between HLA-C and ERAP1. Nat. Genet. 42, 985–990 (2010). https://doi.org/10.1038/ng.694

Goudey, B., Rawlinson, D., Wang, Q., et al.: GWIS: model-free, fast and exhaustive search for epistatic interactions in case-control GWAS. Lorne Genome 2013 (2013)

Gyenesei, A., Moody, J., Semple, C.A., et al.: High-throughput analysis of epistasis in genome-wide association studies with BiForce. Bioinformatics 28(15), 1957–1964 (2012). https://doi.org/10.1093/bioinformatics/bts304

Hu, X., Liu, Q., Zhang, Z., et al.: SHEsisEpi, a GPU-enhanced genome-wide SNP-SNP interaction scanning algorithm, efficiently reveals the risk genetic epistasis in bipolar disorder. Cell Res. 20, 854–857 (2010)

Ibrahim, Z.M., Newhouse, S., Dobson, R.: Detecting epistasis in the presence of linkage disequilibrium: a focused comparison. In: 2013 IEEE Symposium on CIBCB, pp. 96–103 (2013). https://doi.org/10.1109/CIBCB.2013.6595394

Kam-Thong, T., Azencott, C.A., Cayton, L., et al.: GLIDE: GPU-based linear regression for detection of epistasis. Hum. Hered. 73, 220–236 (2012). https://doi.org/10.1159/000341885

Kässens, J.C., Wienbrandt, L., et al.: Combining GPU and FPGA technology for efficient exhaustive interaction analysis in GWAS. In: 2016 IEEE 27th International Conference on ASAP, pp. 170–175 (2016). https://doi.org/10.1109/ASAP.2016.7760788

Kässens, J.C.: A hybrid-parallel architecture for applications in bioinformatics. No. 2017/4 in Kiel Computer Science Series, Department of Computer Science, CAU Kiel (2017). Dissertation, Faculty of Engineering, Kiel University. https://doi.org/10.21941/kcss/2017/4

Keaton, J.M., Hellwege, J.N., Ng, M.C.Y., et al.: Genome-wide interaction with selected type 2 diabetes loci reveals novel loci for type 2 diabetes in African Americans. Pac. Symp. Biocomput. 22, 242–253 (2016). https://doi.org/10.1142/9789813207813_0024

Kirino, Y., Bertsias, G., Ishigatsubo, Y., et al.: Genome-wide association analysis identifies new susceptibility loci for Behçet’s disease and epistasis between HLA-B*51 and ERAP1. Nat. Genet. 45, 202–207 (2013). https://doi.org/10.1038/ng.2520

Lee, S., Kwon, M.S., Park, T.: CARAT-GxG: CUDA-accelerated regression analysis toolkit for large-scale gene-gene interaction with GPU computing system. Cancer Inform. 13s7, CIN.S16349 (2014). https://doi.org/10.4137/CIN.S16349

van Leeuwen, E.M., Smouter, F.A.S., Kam-Thong, T., et al.: The challenges of genome-wide interaction studies: lessons to learn from the analysis of HDL blood levels. PLoS ONE 9, e109290 (2014). https://doi.org/10.1371/journal.pone.0109290

Piriyapongsa, J., Ngamphiw, C., Intarapanich, A., et al.: iLOCi: a SNP interaction prioritization technique for detecting epistasis in genome-wide association studies. BMC Genom. 13(Suppl 7), S2 (2012). https://doi.org/10.1186/1471-2164-13-s7-s2

Purcell, S., Chang, C.: PLINK v1.90p 64-bit, 9 January 2018. www.cog-genomics.org/plink/1.9/

Purcell, S., Neale, B., Todd-Brown, K., et al.: PLINK: a tool set for whole-genome association and population-based linkage analyses. Am. J. Hum. Genet. 81, 559–575 (2007). https://doi.org/10.1086/519795

The Australo-Anglo-American Spondyloarthritis Consortium (TASC), et al.: Interaction between ERAP1 and HLA-B27 in ankylosing spondylitis implicates peptide handling in the mechanism for HLA-B27 in disease susceptibility. Nat. Genet. 43, 761–767 (2011). https://doi.org/10.1038/ng.873

Ueki, M., Cordell, H.J.: Improved statistics for genome-wide interaction analysis. PLoS Genet. 8(4), e1002625 (2012). https://doi.org/10.1371/journal.pgen.1002625

Wan, X., Yang, C., Yang, Q., et al.: BOOST: a fast approach to detecting gene-gene interactions in genome-wide case-control studies. Am. J. Hum. Genet. 87(3), 325–340 (2010)

Wang, Y., Liu, G., Feng, M., Wong, L.: An empirical comparison of several recent epistatic interaction detection methods. Bioinformatics 27(21), 2936–2943 (2011)

Wienbrandt, L., Kässens, J.C., González-Domínguez, J., et al.: FPGA-based acceleration of detecting statistical epistasis in GWAS. Proc. Comput. Sci. 29, 220–230 (2014). https://doi.org/10.1016/j.procs.2014.05.020

Wienbrandt, L., Kässens, J.C., et al.: Fast genome-wide third-order SNP interaction tests with information gain on a low-cost heterogeneous parallel FPGA-GPU computing architecture. Proc. Comput. Sci. 108, 596–605 (2017). https://doi.org/10.1016/j.procs.2017.05.210

Xie, M., Li, J., Jiang, T.: Detecting genome-wide epistases based on the clustering of relatively frequent items. Bioinformatics 28(1), 5–12 (2012)

Yung, L.S., Yang, C., Wan, X., et al.: GBOOST: a GPU-based tool for detecting gene-gene interactions in genome-wide case control studies. Bioinformatics 27(9), 1309–1310 (2011)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Wienbrandt, L., Kässens, J.C., Hübenthal, M., Ellinghaus, D. (2018). 1,000x Faster Than PLINK: Genome-Wide Epistasis Detection with Logistic Regression Using Combined FPGA and GPU Accelerators. In: Shi, Y., et al. Computational Science – ICCS 2018. ICCS 2018. Lecture Notes in Computer Science(), vol 10861. Springer, Cham. https://doi.org/10.1007/978-3-319-93701-4_28

Download citation

DOI: https://doi.org/10.1007/978-3-319-93701-4_28

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-93700-7

Online ISBN: 978-3-319-93701-4

eBook Packages: Computer ScienceComputer Science (R0)