Abstract

In the most recent international comparisons, Switzerland ranks as one of the top three countries with the most institutionalized evaluation systems. This development is recent and has been especially rapid over the past few decades. This chapter retraces this evolution and the factors contributing to it. They include, in particular, many actors at the interface between different universes, the incorporation of an obligation to evaluate not only in the Swiss Constitution but also in specific laws, and the use of evaluation results both in political contexts and in public administration. To sustain this momentum, Switzerland’s challenge today is to keep disseminating a culture of evaluation, which will make it possible to promote enlightened democratic debate.

1 Introduction

At the end of the 1980s, the evaluation of public policy in Switzerland was largely conducted by academics (Horber-Papazian and Thévoz 1990); nowadays, it has gained international recognition. The most recent comparison (Jacob et al. 2015) places Switzerland among the top three countries, along with Canada and Finland, on the basis of the following criteria: the existence of evaluations in different policy domains, professional competency in evaluation, the presence of a national discourse about evaluation, the existence of a national evaluation society, the institutionalization of evaluation in government and parliament, the existence of evaluation in the highest auditing institution, a plurality of institutions or evaluators conducting evaluations in different areas, and finally a certain proportion of evaluations focusing on impact and outcome rather than output and process.

A finding of this kind makes it worthwhile to examine the factors which have favored this institutionalization. Our thesis is that the strong institutionalization of public policy evaluation since the 1990s can be explained by a convergence of conducive factors. They include a context favorable to conducting evaluations, a variety of actors at the interface between differing universes, the incorporation of an obligation to evaluate into the national Constitution, the emergence of political strategies which use evaluation to achieve political goals (including using clauses in laws that call for evaluation), and the use of the results of evaluations both in politics and in public administration. As data on cantonal and communal evaluation practices are both poor and quite disparate, the following discussion focuses on the federal level.

2 A Context Favorable to Evaluation

Many factors were responsible for putting questions about the effectiveness of state action, the implementation of governmental decisions, and the auditing methods being used onto the political agenda in the late 1980s and early 1990s. One of these was a crisis in public finance. Another was a larger debate about the role, or place, of the state in the context of the rise in ‘neoliberal’ ideology. Many public projects, particularly in the area of infrastructure, were called into question at the time, and there was also a loss of confidence in relations between the federal parliament and the executive. Deficits in public policy implementation had been identified by various national research programs. Finally, voters were calling for more efficiency and more transparency in actions undertaken by the state.

The combination of these factors led numerous actors, especially those at the interface between social universes and in contact with one another, to engage in reflections which began to converge. Their discourse first sensitized the communities to which these actors belonged about the important space which evaluation could occupy in the public policy cycle and then led to them becoming active in the process of institutionalizing such evaluations.

3 The Actors at the Interface Between Academic, Administrative, and Political Worlds

3.1 The Federal Department of Justice and Police

At the instigation of the author of the first thesis about legislative evaluation in Switzerland (Mader 1985), the Federal Department of Justice and Police created a working group in 1987 (the Arbeitsgruppe Gesetzesevaluation—AGEVAL) whose goal was to analyze the utility and value of policy evaluation in the Swiss federal government. The group brought federal and cantonal officials together with academic researchers. Following a proposal contained in the final report of this working group (Arbeitsgruppe Gesetzesevaluation 1991), an ‘evaluation network in the federal administration’ was brought to life in 1995. This group is still active and allows participants, who may be officials, clients, or users, to share their knowledge about, and experiences with, the results of evaluations.

In 1987, the Federal Department of Justice and Police also suggested that a national research program be launched to study the ‘Efficiency of Governmental Actions’ (NRP 27). Its principal goal was to identify assessment tools or methods most suited to the Swiss context. It was also an effort to increase knowledge about the effects different types of evaluations had on state activity, one coordinated by an administrator in the Federal Office of Justice who would later become the first president of the Swiss Evaluation Society (SEVAL). Thus, this Federal Department played a central role in promoting the use of evaluation within the federal administration and gave impetus to conducting further research on evaluation.

3.2 The Federal Parliament and Parliamentary Control of the Administration

Since the 1980s, the Control Committees in both houses of parliament, oversight committees which review the work conducted by the Federal Council and the federal administration, have ‘worked to reinforce this supervision’ and have ‘demanded that, in addition to the criteria of legality, regularity and appropriateness in taking action on the part of government and administration, that the effectiveness of the measures taken by the state be controlled in a more systematic manner’ (Bättig and Schwab 2015, 2).

In 1990, the parliament decided to set up a specialized evaluation body, the Parliamentary Control of the Administration (PCA). This body is responsible, in particular, for carrying out analyses of the effectiveness of public policies and public services on behalf of the Control Committees of the two houses. Today it has eight employees (six FTEs) specialized in evaluation, and it works completely independently; it can also call on external experts.

The work of the PCA serves as the basis for reports prepared by the Control Committees and recommendations they make to the executive, which is required to answer. If they are not satisfied with the response, the Committees can demand fuller answers. At the same time, if they believe that the results of the evaluation show important steps that need to be taken, the Committees can require the government to submit propositions for legislative revisions through a motion or propose those revisions themselves through a parliamentary initiative.

It is also the parliament, and more particularly the Committees, which requested the inclusion of Article 170 in the new 1999 Constitution. It stipulates that ‘the Federal Assembly shall ensure that federal measures are evaluated with regard to their effectiveness’. By including such a broad clause in the Constitution, the parliament was a pioneer (Bussmann 2007). One result is that evaluation has shifted from being a tool for parliamentary oversight to being an instrument which covers all parliamentary activities, including legislative. The relevant literature thus widely accepts that Art. 170 is a strong signal from parliament about the importance of the term ‘evaluation’ and about the importance of evaluation in legislative activity (Bussmann 2008; Horber-Papazian 2006, 135). Of all the parliaments considered in the aforementioned international comparison, the Swiss parliament has most institutionalized evaluation.

3.3 The Swiss Federal Audit Office

The revision of the Financial Control Act in 1994 extended the powers of the Swiss Federal Audit Office (SFAO) in line with the guidelines of the International Organization of Supreme Audit Institutions; it also encouraged the use of evaluations. This means the SFAO needs to examine whether resources are being used in an economical fashion and if the cost–benefit relationship is advantageous; it must also ensure that the budgeted expenditures are having the desired effect. In 2002, a new competency center focusing on performance audit and evaluation was created within the SFAO. It has the power to examine the effects and implementation of high-expenditure federal policies, and it can propose ways action taken by the Confederation can be made more efficient. In exercising this oversight, it assists the joint finance delegation of both parliamentary chambers, a body which, like the Federal Council, can entrust it with mandates to conduct summative and retrospective evaluations.

Like the PCA, the SFAO can approach topics in an intersectoral perspective. In contrast to the PCA, whose work feeds the recommendations made by the Control Committees, the SFAO itself formulates the recommendations which are given to the evaluated bodies or, more rarely, to political bodies like the Federal Council or parliaments (Crémieux and Sangra 2015). To avoid duplication, the SFAO and the PCA coordinate their activities in making their annual plans for conducting evaluations. Drafting such plans is required by the Financial Control Act. Helpfully, the evaluation criteria these actors focus on are not the same. The PCA focuses on the implementation of federal policies from a legal point of view, as well as with respect to appropriateness and effectiveness (see Note 1), while the SFAO concentrates on fiscal oversight with respect to (financial) regularity, legality, and profitability (see Note 2).

Finally, it is worth noting that, following the federal model, the cantons of Geneva and Vaud have each introduced their own Court of Auditors to serve their cantonal parliaments and cantonal executives.

3.4 The Administrative Units

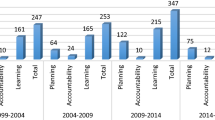

Evaluation activity in various administrative units has also helped institutionalize evaluation. However, there are notable differences between these units, the most active of which have been the State Secretariat for Economic Affairs, the Federal Office of Public Health, the Federal Office for Professional Education and Technology, the Federal Office for Education and Science, the Federal Social Insurance Office, and the Swiss Agency for Development and Cooperation (Balthasar 2015). Balthasar and Strotz (2017) have noted an increase in the institutionalization of evaluation functions within the federal administration between 1999 and 2015, particularly through the creation of positions specifically devoted to evaluation. However, the total number of evaluations carried out within, or on behalf of, the federal administration has remained stable during this period, at about 80 per year (Balthasar 2015).

Data on evaluation practices at the cantonal level are too sparse to allow a similar inventory. However, as at the federal level, there appears to be a culture of evaluation in certain domains (e.g., training and health), where evaluations are more common than in others (Wirths and Horber-Papazian 2016). The phenomenon of ‘implementing federalism’, where the Confederation calls on the cantons to carry out directives on fighting unemployment or controlling migration but then also requires them to report on their actions, appears to have an influence on cantonal evaluation practices (Horber-Papazian and Rosser, forthcoming). At the municipal level, one can observe more and more towns evaluating measures taken in social policy, the planning of public space, policy toward youths, or sustainable development, all of which are in their sphere of competence. At both cantonal and municipal levels, evaluations are usually outsourced.

3.5 The Swiss Evaluation Society

SEVAL was created in 1996 with the aim of encouraging dialogue, exchanging information, and sharing evaluation experiences among politicians, administrators, university researchers, and consulting firms. It also intended to promote the dissemination of evaluation practices and to encourage quality. It currently has more than 500 members and helps create a favorable climate for evaluation in the country. SEVAL organizes an annual congress, holds brief courses, and supports the creation of working groups which focus on specific issues. It has also supported the development of quality standards for Switzerland and contributed to disseminating them; these standards were most recently revised in 2016 by a SEVAL working group. The Swiss standards were based on the Program Evaluation Standards originally drafted in the USA by the Joint Committee on Standards for Educational Evaluation (Widmer and Neuenschwander 2004). A debate has been underway in SEVAL for some time now about the professionalization of evaluators (Horber-Papazian 2015).

3.6 Universities and Higher Education Institutions

The earliest developments in the academic study of evaluation were made in French-speaking Switzerland. Centre d’étude, de technique et d’évaluation législatives (CETEL), the Center for Legislative Study, Technique, and Evaluation at the University of Geneva, was a forerunner in research on the effect of laws (Delley et al. 1982). The first seminar which brought together political leaders, administrative heads, and key researchers, however, was organized by the Urban and Regional Planning Community at the Ecole polytechnique fédérale de Lausanne (EPFL) in 1988; it gave rise to the first volume devoted to the evaluation of public policies in Switzerland (Horber-Papazian 1990).

In 1992, Institut de hautes études en administration publique (IDHEAP), the Swiss Graduate School of Public Administration, was the first to offer a 15-day postgraduate course devoted to public policy evaluation (Bergman et al. 1998). Since then, evaluation courses have been offered in various social science degree programs at Swiss universities and universities of applied sciences. A postgraduate degree and a master’s degree in evaluation are offered by the University of Bern, for example, and various other shorter courses are available, largely meant for political and administrative actors.

As far as research is concerned, it is regrettable that once the national research program ‘Efficiency of Governmental Actions’ (NRP 27) ended in 1997, after ten years’ work, it took another 16 years for a successor project to emerge. In 2013, researchers from the Universities of Bern, Geneva, Lausanne (IDHEAP), Lucerne, and Zurich launched a new national project entitled ‘Policy Evaluation in the Swiss Political System—Roots and Fruits’. The conclusions from this research, some of which are included here, were published in a collective work edited by Fritz Sager, Thomas Widmer, and Andreas Balthasar (2017). Apart from their involvement in teaching and research, academics employed at Swiss universities are quite active in publishing in the major international evaluation journals (Jacob 2015).

3.7 Evaluators

It is the evaluators themselves who are among the most important players in the development of evaluation in the country; the SEVAL website at present lists 202 evaluators and 28 institutions which give out mandates to conduct evaluations (see Note 3). The vast majority of these are in German-speaking Switzerland (only 33 are active in the French-speaking part of the country), and so it is also in the German-speaking area that one finds the most important evaluation offices. This can certainly be explained by their proximity to federal institutions, the largest providers of evaluation mandates. The SEVAL list indicates that the largest numbers of active evaluators in French-speaking Switzerland are associated with university institutes. Evaluators in Switzerland are generally very well trained and have extensive professional experience, both in terms of how many evaluations they have completed and how many years’ practice they have (Pleger et al. 2017).

4 The Available Resources for Constitutionally Mandated Evaluations

The resources available for evaluation in Switzerland are another aspect which helps explain its institutionalization. If one refers to resources, one means not just the demonstrated competence shown by evaluators and the structures and organizations, but also the consensus that there is a need for evaluation. As many of these were addressed already, the rest of this section looks at available financial and legal resources.

4.1 Financial Resources

No official data exist on the financial resources allocated to evaluations. However, all evaluations conducted at the federal level are included in a national database (ARAMIS), and Balthasar (2015) has used this database to calculate that 31.6 million Swiss francs was spent at the federal level on evaluations in the 2009–2012 period, or around 8 million Swiss francs per year. This is equivalent to the annual amount spent between 1999 and 2002, he notes, and hence the absolute amount spent in the federal administration for evaluations has remained essentially unchanged from 1999 to 2012.

4.2 Legal Resources

4.2.1 Article 170 of the Federal Constitution

The incorporation of an obligation to evaluate the impact of measures taken by the state is one of the criteria for determining the degree to which evaluation is institutionalized (Jacob et al. 2015). As noted above, Art. 170 of the Federal Constitution reflects the importance accorded to evaluation in Switzerland. The report of the interdepartmental working group in the Swiss federal administration on the ‘evaluation of effectiveness’ (IDEKOWI 2004) underscores that this article refers to all forms of state action, regardless of juridical basis (e.g., whether in the constitution, or a law, federal decree, ordinance, or directive). It also applies to all organs—parliament, executive, administration, courts, external bodies, and cantons inasmuch as they implement measures of the Confederation—which originate such state action. The phrase dass die Massnahmen des Bundes auf ihre Wirksamkeit überprüft werden (‘that federal measures are evaluated with regard to their effectiveness’) meant, in the eyes of this working group, that the most open approach possible has been chosen, one that includes both prospective and retrospective evaluation, monitoring, controlling, and quality control (IDEKOWI 2004, 11).

4.2.2 Evaluation Clauses Incorporated into Legislation

Article 170 of the Federal Constitution played a catalyzing role, particularly in defining ‘sectoral’ evaluation clauses (meaning in laws related to specific public policies) at the federal level, and in both general and sectoral clauses at the cantonal level. Among 262 evaluation clauses found at federal and cantonal levels in 2015 (Wirths and Horber-Papazian 2016), 80% came into force after Art. 170 became part of the Federal Constitution. Today, 14 cantons have followed the Confederation and introduced general evaluation clauses into their own constitutions. With the exception of Nidwalden, all other cantons have integrated a general clause into their political practice; it is applicable to the entirety of their legislation. For both cantons and federal government, evaluation clauses appear in nine out of ten cases already at the point when laws are being drafted, and this is even more so at the federal than at the cantonal level (Wirths and Horber-Papazian 2016, 496). A comparison between the cantons and the Confederation also reveals an important detail: it is at the cantonal level that political actors introduce the most clauses, mainly in the committees.

This is particularly true in Geneva. On the one hand, this can be explained by the strong links between academic actors, active in working to increase awareness of the importance of legislative evaluation, and the political world. On the other hand, it is also the result of the creation, in Geneva, of an ‘External Commission for Public Policy Evaluation’ in 1995. This permanent body, external to the administration and composed of actors from civil society, can be called upon by the government and parliament, and has the power to launch its own inquiries. Its activities were transferred to the Court of Auditors (Cour des Comptes) in 2013. The strong presence of a culture of evaluation in Geneva is also reflected in the results of a recent survey of Swiss parliaments (Eberli et al. 2014). Elsewhere, 20% of the parliamentarians surveyed said that they had proposed including an evaluation clause—but in Geneva it was 57%.

4.2.3 Evaluation Clauses as a Strategic Resource in the Political Decision-Making Process

The possibility of using a tool such as evaluation clauses, or calling for an evaluation, evidently has an influence on parliamentary debates. An analysis conducted in the Geneva parliament showed that, aside from reducing uncertainty in politically new domains or creating the possibility of controlling the actions of the executive, parliamentarians who proposed adding evaluation clauses could obtain a consensus. The prospect of a (subsequent) evaluation gave opponents a means to have their objections taken into account and could lead, when necessary, to modifications, or even the cancelation of the legislation under consideration (Horber-Papazian and Rosser, forthcoming).

The survey conducted by Eberli et al. (2014) also reveals that parliamentarians call for evaluations more often than they use their results. This can certainly be explained by the fact that, in the great majority of cases, it is up to the administration to launch the evaluations called for in evaluation clauses. When the evaluation clauses have a ‘low normative density’, that is, when they define only a few elements like the object of or the criteria for evaluation (Wirths 2016), the administration’s resulting room for maneuver means it can define the evaluation questions of interest to it. The focus is therefore on managerial issues, and the results of such evaluations are generally not discussed among parliamentarians, who tend to be more interested in questions of relevance and impact.

4.2.4 The Freedom of Information Act

Since their introduction, the SEVAL standards have encouraged transparency in evaluations. The 2004 introduction of the Freedom of Information Act in the Administration (FoIA) at the national level (implemented at the cantonal level) has made it possible to significantly strengthen such transparency, particularly by making access to the results of public policy evaluations general. This act ‘seeks to promote transparency with regard to the mandate, organization and activities of the administration’ and contains an article specifically about evaluation: ‘Access to reports on the evaluation of the performance of the Federal Administration and the effectiveness of its measures is guaranteed’ (Art. 8(5), FoIA). This transparency can be active, in which case those who mandate an evaluation publicize it through various channels, including their websites, publications, or even press conferences. Yet it can also be passive, in which case the report must be communicated to the person (or agency) which requested it.

5 Utilization of Evaluation Results

Among the factors contributing to strong institutionalization is that the results of evaluations are actually used. An analysis conducted by the SFAO focused on the specific effects of 115 evaluation clauses introduced from 2006 to 2009 (Swiss Federal Audit Office 2011); it showed that these resulted in 62 actual evaluations. In 45% of the cases, their purpose was to improve the implementation of the measures. In 35% of the cases, their main purpose was to provide information, in the accountability reports, on their implementation status, while 9% of the evaluations led to a modification of the law. The remaining cases served to justify the continuation and financing of programs and measures taken by the Confederation.

Other analyses examining the utilization of administration-initiated evaluations have also found that they have had effects. At the federal level, Balthasar (2015) notes that more than 65% of those responsible feel that among the evaluations that they have seen to completion, the use of the results has been high. This accords with the analysis of Bättig and Schwab (2015, 10), who find that ‘following nearly all PCA evaluations, corrective measures related to the recommendations of the Control Committees can be identified. In any case, the scope of these measures may differ’. The same is true for evaluations conducted within the audit and evaluation unit of the SFAO. One analysis of implementation found that among 15 evaluations containing 100 recommendations, 55% were applied, 24% were partly implemented, and 21% were not implemented at all (Crémieux and Sangra 2015).

If it is important that an evaluation allows for adjustments in implementing a public policy, then it is essential that the results can also be used. Policymakers should be able to move from ‘single-loop’ learning, which involves the administration, to ‘double-loop’ learning, integrating political decision-makers and giving them an opportunity to reconsider the objectives and formulation of a public policy (Leeuw et al. 1994). An extract from a PCA annual report provides reassurance on this point:

The results of the PCA’s work are taken into consideration in diverse ways in the parliamentary and executive decision-making process. The Control Committees made numerous recommendations to the Federal Council or initiated legislative revisions based on PCA evaluations. (…) Moreover, evaluation results are regularly mentioned in parliamentary interventions and debates, or mentioned by the Federal Council in its messages calling for revisions of the law (2014, 5).

A similar analysis was conducted at the cantonal level by Geneva’s Court of Auditors. Its annual report indicated that of its seven published evaluations from 2014 to 2017, three led to modifications of the law that have either entered into force or are still under review by the cantonal legislature (Court of Auditors 2017).

These various analyses indicate the importance of institutional legitimacy if evaluation results are to be taken into account. To reinforce the use of evaluation results, the external bodies which mandate evaluations increasingly turn to advisory groups. These are often composed of those who are in some sense the ‘constituents’ of an evaluation, such as communal or cantonal politicians, or the representatives of groups the evaluations concern. Such advisory groups are kept informed about the different stages of the evaluation and the results of the analyses, and are consulted about the clarity, feasibility, and acceptability of the recommendations. This facilitates the process of learning about the content of the evaluation, reduces apprehensions it may engender, and offers an opportunity to explain a good share of the recommendations which are to be implemented at both the political and administrative levels.

6 What Can One Learn from the Swiss Example with Respect to the Institutionalization of Public Policy Evaluation?

Diverse factors have contributed to the institutionalization of public policy evaluation in Switzerland, as this chapter has highlighted. What stands out most, in international comparison, is the place evaluation occupies in the functioning of the federal parliament. The Swiss parliament is composed of militia politicians concerned about their prerogatives in the area of executive oversight. They saw evaluation as an additional tool that could strengthen the means at their disposal to exert control, and thereby their power to intervene. By creating a structure, the PCA, composed of professionals who carry out evaluations on topics chosen by its Control Committees, parliament has a powerful tool at its disposal which allows it to respond to questions of interest, and these are related to the appropriateness, legality, and effectiveness of the measures taken and implemented. By reserving the right to make recommendations to the government, which must take a position on the subject, the parliament gives evaluations a strong political dimension. This is reinforced by the competence it has to control the implementation of its recommendations. Indeed, it even has the power, on the basis of parliamentary initiatives, to replace executive decisions by its own proposals, which can include repealing or enacting laws should the government not propose amendments. This is certainly one explanation for why, at the federal level, evaluation has effects not only in terms of implementing public policies but also in terms of political decisions.

The (at times) close relations between the different actors involved in an evaluation, and especially between academics and administrative officials, which result from mutual learning and the links between theory and practice it permits, are another strong explanatory factor for the institutionalization of evaluation. It is, in fact, common in Switzerland that senior officials teach classes on evaluation practice at institutions of higher education. Academics whose research focuses on evaluation may well also be contracted to conduct evaluations, enriching their teaching and strengthening their ties to other actors in this world. For their part, politicians increasingly have training in evaluation and are prepared to discuss the effects of their decisions not just in terms of political values or sensibilities, but relative to data collected and analyzed in a rigorous and independent manner.

The procedural institutionalization of evaluation brought about by introducing a general evaluation clause in the Federal Constitution, from which specific clauses have been derived and are now found in most legislation, has had a significant effect on the dissemination of evaluation through various policy domains. Anchoring evaluation in the juridical texts has also meant that the influence of evaluation has been extended to the cantonal level. Fourteen cantons have followed Art. 170 in the Federal Constitution and introduced a general evaluation clause into their own constitutions, and with one exception, all the cantons have integrated a general clause into their political practice.

Moreover, the strategic utilization of evaluation clauses in political debates is an element which has strengthened parliamentarians’ control over actions taken by the state, particularly in terms of the relevance and effectiveness of the measures taken. This aspect might be even further increased should they understand the full interest they (potentially) have in proposing clauses with ‘high normative density’ of a kind which maintains control over the evaluation criteria.

The guarantee of access to evaluation reports which is given by the Freedom of Information Act is an important step, as it allows citizens to inform themselves about the effectiveness of actions taken by public administrations, bodies whose tasks those citizens finance. A public administration thus moves from seeming to be a mysterious, all-powerful ‘black box’ to being an institution that is accountable to the citizens.

Finally, the issue SEVAL recently raised about the accreditation of evaluators makes it possible to question the training which is currently available. It certainly reinforces the idea of providing short-term training to enable evaluators to keep abreast of developments, especially from the point of view of new evaluation methods.

These generally positive points should not obscure the fact that financial resources allocated for evaluation at the federal level have stagnated for many years. It is also true that while one can say that evaluation is strongly institutionalized at the federal level, data on evaluation practices at levels below it are lacking; one thus cannot make a reliable diagnosis of where evaluation stands, say, at the cantonal level. Finally, even if the Freedom of Information Act requires that evaluations be made accessible, this does not mean they are known beyond the immediate circle of those they concern. At present, the results of evaluations are not sufficiently disseminated to the general public; it is also rare to find them mentioned in the press (Stucki and Schlaufer 2017). If the press does report on them, it often does so in truncated or even biased ways (Horber-Papazian and Bützer 2008).

So in addition to maintaining its rank among the countries in which evaluation is most highly institutionalized, Switzerland faces another challenge: finding a way to enable evaluation to promote an enlightened democratic debate.

Notes

1. Art. 10 Order on the Parliament’s administration, in combination with Art. 52 Parliamentary Act.

2. Art. 5 Financial Control Act.

3. As of the beginning of February, 2018.

References

Arbeitsgruppe Gesetzesevaluation. (1991). Die Wirkungen staatlichen Handelns besser ermitteln: Probleme, Möglichkeiten, Vorschläge. Schlussbericht an das Eidgenössische Justiz- und Polizeidepartement. Bern: EDMZ.

Balthasar, A. (2015). L’utilisation de l’évaluation par l’administration fédérale. In K. Horber-Papazian (Ed.), Regards croisés sur l’évaluation en Suisse (pp. 115–132). Lausanne: PPUR.

Balthasar, A., & Strotz, C. (2017). Verbreitung und Verankerung von Evaluation in Bundesverwaltung. In F. Sager, T. Widmer, & A. Balthasar (Eds.), Evaluation im politischen System der Schweiz (pp. 89–117). Zurich: Neue Zürcher Zeitung libro.

Bättig, C., & Schwab, P. (2015). La place de l’évaluation dans le cadre du contrôle parlementaire. In K. Horber-Papazian (Ed.), Regards croisés sur l’évaluation en Suisse (pp. 1–23). Lausanne: PPUR.

Bergman, M. M., Cattacin, S., & Läubli-Loud, M. (1998). Evaluators evaluating evaluators: Peer-assessment and training opportunities in Switzerland. Geneva: RESOP-Université de Genève.

Bussmann, W. (2007). Institutionalisierung der Evaluation in der Schweiz. Verfassungsauftrag, Konkretisierungsspielräume und Umsetzungsstrategie. In H. Schäffer (Ed.), Evaluierung der Gesetze / Gesetzesfolgenabschätzung (II) (pp. 1–20). Vienna: Manzsche Verlags- und Universitätsbuchhandlung.

Bussmann, W. (2008). The emergence of evaluation in Switzerland. Evaluation, 14(4), 499–506.

Court of Auditors. (2017). Rapport annuel d’activité 2017. Tome 2: Annexes. Geneva: République et Canton de Genève.

Crémieux, L., & Sangra, E. (2015). La place de l’évaluation dans le cadre du Contrôle fédéral des finances. In K. Horber-Papazian (Ed.), Regards croisés sur l’évaluation en Suisse (pp. 37–57). Lausanne: PPUR.

Delley, J.-D., Morand, C.-A., Derivaz, R., & Mader, L. (1982). Le droit en action – Etude de mise en œuvre de la loi Furgler. St-Saphorin: Georgi.

Eberli, D., Bundi, P., Frey, K., & Widmer, T. (2014). Befragung: Parlamente und Evaluationen. Ergebnisbericht. Zurich: Universität Zürich.

Horber-Papazian, K. (Ed.). (1990). Evaluation des politiques publiques en Suisse. Pourquoi? Pour qui? Comment? Lausanne: PPUR.

Horber-Papazian, K. (2006). La place de l’évaluation des politiques publiques en Suisse. In J.-L. Chappelet (Ed.), Contributions à l’action publique/Beiträge zum öffentlichen Handeln (pp. 131–144). Lausanne: PPUR.

Horber-Papazian, K. (2015). Regards croisés sur l’évaluation en Suisse. Lausanne: PPUR.

Horber-Papazian, K., & Bützer, M. (2008). Dissemination of evaluation reports in newspapers: The case of CEPP evaluations in Geneva, Switzerland. In R. Boyle, J. D. Breul, & P. Dahler-Larsen (Eds.), Open to the public: Evaluation in the public (pp. 43–66). New Brunswick: Transaction Publishers.

Horber-Papazian, K., & Rosser, R. (forthcoming). From law to reality – A critical view on the institutionalization of evaluation in the Swiss Canton of Geneva’s parliament. In J.-E. Furubo & N. Stame (Eds.), The evaluation enterprise. London: Routledge.

Horber-Papazian, K., & Thévoz, L. (1990). Switzerland: Moving towards evaluation. In R. C. Rist (Ed.), Program evaluation and the management of government (pp. 133–143). New Brunswick: Transaction Publishers.

IDEKOWI. (2004). Efficacité des mesures prises par la Confédération. Propositions de mise en œuvre de l’art. 170 de la Constitution fédérale dans le contexte des activités du Conseil fédéral et de l’administration fédérale. Bern: Office fédéral de la justice.

Jacob, S. (2015). La recherche sur l’évaluation en Suisse. In K. Horber-Papazian (Ed.), Regards croisés sur l’évaluation en Suisse (pp. 267–284). Lausanne: PPUR.

Jacob, S., Speer, S., & Furubo, J.-E. (2015). The institutionalization of evaluation matters: Updating the international atlas of evaluation 10 years later. Evaluation, 21(1), 6–31.

Leeuw, F. L., Rist, R. C., & Sonnichsen, R. C. (Eds.). (1994). Can governments learn? Comparative perspectives on evaluation and organizational learning. New Brunswick: Transaction Publishers.

Mader, L. (1985). L’évaluation législative – Pour une analyse empirique des effets de la législation. Geneva: Université de Genève.

PCA. (2014). Rapport annuel 2013 du Contrôle parlementaire de l’administration. Berne: Services du Parlement.

Pleger, L., Wittwer, S., & Sager, F. (2017). Wer sind die Evaluierenden in der Schweiz? In F. Sager, T. Widmer, & A. Balthasar (Eds.), Evaluation im politischen System der Schweiz (pp. 189–208). Zurich: Neue Zürcher Zeitung libro.

Sager, F., Widmer, T., & Balthasar, A. (Eds.). (2017). Evaluation im politischen System der Schweiz. Zurich: Neue Zürcher Zeitung libro.

Stucki, I., & Schlaufer, C. (2017). Die Bedeutung von Evaluationen im direktdemokratischen Diskurs. In F. Sager, T. Widmer, & A. Balthasar (Eds.), Evaluation im politischen System der Schweiz (pp. 279–310). Zurich: Neue Zürcher Zeitung libro.

Swiss Federal Audit Office. (2011). Umsetzung der Evaluationsklauseln in der Bundesverwaltung. Bern: Contrôle fédéral des finances.

Widmer, T., & Neuenschwander, P. (2004). Embedding evaluation in the Swiss Federal Administration: Purpose, institutional design and utilization. Evaluation, 10(4), 388–409.

Wirths, D. (2016). Procedural institutionalization of the evaluation through legal basis: A new typology of evaluation clauses in Switzerland. Statute Law Review, 38(1), 23–39.

Wirths, D., & Horber-Papazian, K. (2016). Les clauses d’évaluation dans le droit des cantons suisses: leur diffusion, leur contenu et la justification à l’origine de leur adoption. LeGes, 2016(3), 485–502.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2019 The Author(s)

About this chapter

Cite this chapter

Horber-Papazian, K., Baud-Lavigne, M. (2019). Factors Contributing to the Strong Institutionalization of Policy Evaluation in Switzerland. In: Ladner, A., Soguel, N., Emery, Y., Weerts, S., Nahrath, S. (eds) Swiss Public Administration. Governance and Public Management. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-319-92381-9_21

DOI: https://doi.org/10.1007/978-3-319-92381-9_21

Publisher Name: Palgrave Macmillan, Cham

Print ISBN: 978-3-319-92380-2

Online ISBN: 978-3-319-92381-9

eBook Packages: Political Science and International Studies (R0)