Abstract

Word and Sign is a mobile AR application that was developed to support the literacy development of Arabic children who are deaf or hard of hearing. The utilization of AR is intended to support reading, particularly the process of mapping a printed word to its corresponding ArSL. In this paper, the performances of elementary grade children who are deaf or hard of hearing are assessed after they are taught thirteen new words using two methods: AR using Word and Sign and a traditional teaching approach using ArSL, fingerspelling and pictures. The assessment is conducted as a series of tasks in which participants are asked to associate a printed word with its corresponding picture and ArSL. The findings show that participants who were taught via the AR application completed significantly more tasks successfully and with significantly fewer errors compared to participants who were taught the new words via a traditional approach. These findings encourage the utilization of AR inside and outside the classroom to support the literacy development of children with hearing impairments.

1 Introduction

Recent statistics from the General Authority for Statistics in Saudi Arabia reported that approximately 3% of the population was registered as being hearing impaired in 2017 [17]. This segment of the population shares a common native language, i.e., Arabic sign language (ArSL), with Arabic (the official language in Saudi Arabia) being considered a second language. The prevalence of hearing disabilities varies geographically in Saudi Arabia, with almost two thirds of the people with hearing disabilities found residing in rural areas [4]. A study carried out in Saudi Arabia before 2010 showed that a majority of people with disabilities did not have access to psychological and educational services from an early age. This isolation resulted in below-average literacy skills when entering schools, hence negatively impacting academic progression and achievements [13].

Children are exposed to language early in their development, which leads to the acquisition of the literacy skills necessary for their growth [9]. As a child learns to read, language familiarity is one of the two factors that impact reading. The other factor is the process of cognitively mapping printed text into a familiar language, i.e., decoding, by recognizing the patterns that make letters and words [12, 27]. While children who can hear grow familiar with spoken language, children who are deaf are disadvantaged by a limited or non-existent exposure to language at a young age. This late acquisition of language delays the development of literacy skills, which greatly impacts educational, social, and vocational development [22].

Augmented reality (AR) is a user interface paradigm that involves the direct superimposition of virtual objects onto a real environment. This supports the primacy of the physical world while still supplementing the user experience and enhancing the interaction with virtual components. AR was principally introduced in 1993 [28] and has since rapidly expanded, considering the technological advances of the past few decades and the continuously growing amount of information exposed to the user. AR supports users’ actions within the current context as they navigate a new enhanced reality using reality sensors [18]. In recent years, mobile or phone-based AR systems have become more prevalent, as they layer information onto any environment without constraining users’ locations [24]. Mobile AR has the potential to support users’ interactions with a plethora of computer-supported information without distracting them from the real world [10].

The value of AR as an educational tool has increasingly been recognized by researchers, as the enhanced reality can allow for the visualization of abstract concepts, the experience of phenomena, and interaction with computer-supported synthetic objects [29]. The benefits of AR adoption for educational purposes, i.e., increased motivation, attention, concentration, and satisfaction, have regularly been documented [11]. AR has also been found to support active and collaborative learning, interactivity, and information accessibility, which encourage exploration. The value of AR as an educational tool has also been revealed in various studies considering children with varied disabilities, such as autism [8, 20, 25], intellectual deficiency [25], and hearing impairment [23].

Current teaching techniques in Saudi Arabian schools rely heavily on sound-based approaches that disadvantage the literacy development of students who are hearing impaired. To address this issue, Word and Sign, a mobile AR application, was developed to support the reading comprehension and literacy of Arabic children who are deaf. In this paper, this AR application is evaluated via a comparative user study. The purpose of the study is to determine the effectiveness of the mobile AR application at supporting the acquisition of new printed vocabulary and the decoding of that vocabulary into its visual representations. The results show that the use of AR has the potential to support the decoding process and thus improve the reading comprehension and literacy of Arabic children who are hearing impaired.

The remainder of this paper is organized as follows. Section 2 reviews existing works that adopted AR for the educational development of individuals who are hearing impaired. The section particularly considers children’s literacy development and reading comprehension. Next, in Sect. 3, a mobile AR application that supports the decoding of printed Arabic vocabulary from fingerspelling and ArSL, i.e., Word and Sign, is briefly described. The experimental setup and procedure for evaluating Word and Sign are presented in Sect. 4. This section also reports the results of the experiment. These results are then discussed in Sect. 5. Finally, Sect. 6 summarizes and concludes the paper. It also briefly discusses future work.

2 Related Work

Several research efforts have been devoted towards supporting the educational development of children who have hearing impairments. This section reviews a variety of AR applications (mobile and otherwise) that aim to advance the educational progress of hearing-impaired children in various subject areas, including science, religion, reading comprehension and literacy. The review also considers AR applications developed for various sign languages, such as Malaysian, Arabic, American, and Slovenian sign languages.

A sign language teaching model (SLTM) was proposed to support preschool and primary education for children who are deaf [5, 6]. The proposed model, i.e., multi-language cycle for sign language understanding (MuCy), was developed to improve the communication skills of children who are deaf by utilizing AR as a complementary tool for teaching sign language. The MuCy model is a continuous psycho-motor cycle with two levels of education. The first level addresses learning the correct use of signs in conjunction with their visual and written representations. The second level verbalizes these words via imitation of face, mouth, and tongue movements. The model utilizes a sign language book and an AR desktop application. Two pilot lessons involving four children were carried out using the MuCy model. Data were collected via observation of the children and interviews with parents and teachers. The findings showed that the model can easily be adapted by teachers and can improve sign language communication skills in children who are deaf.

A mobile AR application was developed to augment pictures that represent word signs performed by an interpreter with sign language interpretation videos that are played on a mobile phone [19]. The application is intended for signers who are deaf or hard of hearing and also those interested in learning Slovenian sign language. A comparative user study was conducted to evaluate the efficiency of using AR when learning sign language and users' perception of augmented content. The study compared the success rate of signing words when using (a) a picture, (b) the mobile AR application, and (c) a physically present sign language interpreter. The study recruited 25 participants with a mean age of approximately 30 years, 11 of whom were deaf or hard of hearing and 14 of whom were hearing non-signers. The results from a between-within subjects analysis showed a significantly higher success rate when utilizing the AR application or an interpreter compared to using a picture. Of these two, the interpreter yielded a success rate 9% higher than that of the AR application. The findings also showed that there was no significant difference between participants who are deaf or hard of hearing and hearing non-signers. These results suggest the potential of AR for learning sign language; nevertheless, the study did not objectively measure sign language skills prior to the study.

A communication board was developed to support the communication skills of children who are deaf as a means to supplement speech [7]. The communication board is based on the Fitzgerald Keys teaching method, which involves a linguistic code of visual representation that utilizes color codes in the form of questions in which a word sequence is put together. The proposed communication board was designed based on findings from an observational study involving children in secondary education who are deaf and do not have a grasp of American sign language. In the study, five mobile applications that each function as a communication board and a newly developed application called Literacy with Fitzgerald were comparatively observed. The results revealed that the digital application can potentially produce more favorable results when allowing for interaction with a real environment. This led to the development of a physical communication board that consists of physical cards that correspond to virtual content of visual information via an augmented 3D model.

The visual needs of children who are deaf were elicited from a preliminary study that was conducted on three groups of respondents: education officers, teachers, and learners who are deaf [21]. The purpose of the study was to identify the visual needs that can support the acquisition of scientific knowledge. The findings from the interview with the education officers highlight the lack of material that supports the science education of children who are deaf. Science teachers were similarly interviewed, and the results underline several difficulties faced by students who are deaf: difficulty understanding abstract concepts and a lack of visual materials. While observing three students who are deaf, several difficulties were identified as they attempted to recognize text compared to pictures, understand abstract concepts, and grasp complex words. These findings enabled the development of a Malaysian secondary (fifth year) science courseware, PekAR-Mikroorganisma, that utilizes AR in a web-based environment to augment a physical notebook with 3D objects [30]. The new system was heuristically evaluated by seven usability experts to identify design problems; the experts provided positive feedback highlighting the potential of AR in enhancing learning for science courseware.

Najeeb is a mobile AR application that was developed to educate children who are deaf or hard of hearing on religious traditions [3]. Prior to development, a review was conducted to explore current ArSL mobile AR applications and explore parameters that impact their effectiveness as educational tools. The review considered signing avatars, analyzing texts, and dictionaries. The findings signify the importance of using 3D imagery to allow for realistic and fluid movements between fingers and hands. Several of the reviewed applications support text analysis, which can provide assistance to individuals who either are deaf or can hear during the learning process. Due to their different functionalities, the applications maintained words that differ from one application to another. More importantly, the findings show an unavailability of educational resources for individuals who are deaf or hard of hearing. Najeeb is an educational Islamic tool that teaches basic tenets of Islam to children who are deaf or hard of hearing. The application uses images, video, and 3D animations to motivate and support the learning process. The design of Najeeb was grounded in a theoretical framework of the information processing theory that promotes engagement and learning in an e-learning environment. Tenets are presented in the application as multimode tutorials that take into account the presentation of information that can impact comprehension and memorization.

The use of Google Glass as a head-mounted display for an AR application was proposed to improve the reading process of children who are deaf or hard of hearing [16]. Using the prototype, a child would point to a word in an augmented book to request its representative American sign language. The user’s finger is detected by Google Glass, which determines the word location above the fingertip, after which a blob detection algorithm is used to detect the edge of the image. Once the word boundaries are recognized, the image is cropped and passed on to an optical character recognition algorithm, which converts the cropped image into text and finally to a corresponding sign video. Several limitations were identified when it comes to displaying the correct sign. The meaning of the word also varies based on its context; thus, the accuracy of the sign is affected when the word is extracted regardless of its contextual meaning.

The purpose of this review was to introduce several efforts made towards the adoption of AR with various sign languages to supplement and enhance communication and learning. The review focuses on AR applications that are intended for educating children or adults who are deaf or hard of hearing. The majority of the articles reviewed address the visual communication needs of individuals who are deaf or the development of supportive information. Nevertheless, only a few of these studies conducted user studies to assess their effectiveness as educational tools in real contextual settings. This underlines the value of this work’s contribution, as evaluations were conducted in a class against a control group to assess the effectiveness of a mobile AR application for the literacy development of children who are deaf or hard of hearing.

3 Word and Sign

A series of user studies was previously conducted to elicit requirements that highlight the visual needs of children who are deaf or hard of hearing as they learn new printed Arabic vocabulary [2]. These needs were determined from various perspectives, i.e., teachers and interpreters, parents, and children who are deaf or hard of hearing, via interviews, surveys, and observations, respectively. The visual preferences determined based on the user studies are summarized and presented in Table 1.

According to the interviews conducted, teachers of children who are deaf or hard of hearing advocated for the use of sign language to represent printed text. They also recommended the use of pictures and/or videos to further supplement the mapping of printed text with its sign. Survey results collected from parents of children who are deaf revealed higher precedence for pictures and videos than for sign language. Observations of first-grade elementary school children who are hard of hearing were made as they completed tasks that required linking printed text with pictures, videos, fingerspelling using signs of individual letters, and sign language. These findings showed that students performed the tasks best when mapping a printed text with fingerspelling. The observations showed that children’s performances were better when the signer’s face and body were shown, i.e., not limited to showing the hands. Intriguingly, children also performed better when the interpreter was an actual person and not an avatar. The children fared the worst with sign language, which clearly indicates a weakness when decoding signs into printed text.

Fingerspelling has been utilized for reading by educators of children who are deaf or hard of hearing in a systematic way by using sequences called “chaining” and “sandwiching”. Both techniques comprise a series of steps used to create links between signs, printed text, and fingerspelling. The chaining technique begins with the educator pointing to a printed word, followed by the fingerspelling of each of the letters in that word, and finally the signing of the word. In sandwiching, the educator essentially “sandwiches” the fingerspelling of a printed word with its corresponding sign, or vice versa. The utility of such techniques has previously been documented for American sign language [1, 14, 26]; however, this was not reported in the interviews, with no systematic technique reportedly being utilized when learning new vocabulary and reading [2].
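The two sequences can be made concrete with a minimal sketch. It only encodes the order of steps described above; the step labels, the function names, and the placeholder word are illustrative assumptions and are not taken from the Word and Sign implementation.

```python
def chaining(word):
    """Chaining: point to the printed word, fingerspell each letter, then sign it."""
    steps = [("point_to_print", word)]
    steps += [("fingerspell", letter) for letter in word]
    steps.append(("sign", word))
    return steps


def sandwiching(word, sign_outside=True):
    """Sandwiching: wrap the fingerspelling in the sign, or the sign in the fingerspelling."""
    fingerspelling = [("fingerspell", letter) for letter in word]
    sign = [("sign", word)]
    if sign_outside:
        return sign + fingerspelling + sign
    return fingerspelling + sign + fingerspelling


# "cat" is a hypothetical placeholder word, not one of the study's words.
for step in chaining("cat"):
    print(step)
```

An Arabic word without diacritics would be iterated letter by letter in the same way; handling combining marks is left out of this sketch.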

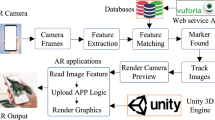

These findings led to the development of Word and Sign, a mobile AR application for the literacy development of Arabic children who are deaf or hard of hearing. Unity and Vuforia were used to develop the application for Android smartphones. The application is supported by an electronic book that shows a series of Arabic words along with picture representations. These words were collected from the original first-grade courseware to support educators in the classroom, acting as a supplement to existing teaching methods. When a phone’s camera detects a word, the picture is superimposed with a video depicting the word using either the chaining or sandwiching techniques. This is to guarantee that the text is always evident to encourage decoding. Due to the lack of video resources for ArSL, a female child interpreter aged 10 years old was video recorded. These videos are maintained in the video-based dictionary. A sound option is included to encourage the participation of hearing friends or family in learning ArSL. The user is also presented with a video option to display video representing the detected word. Figure 1 shows the Word and Sign application with the three main options: ArSL chaining or sandwiching video (middle), sound (right), and representative video (left). The figure also shows the settings menu, which provides access to the electronic book.

4 Assessing the Efficacy of Word and Sign

The efficacy of utilizing a mobile AR application for the literacy development of children who are deaf or hard of hearing was assessed by conducting a user experiment. The goal of the experiment was to comparatively assess the performance of children who are deaf when taught new vocabulary using the traditional method of teaching (control group) and with the aid of the mobile AR application. The experiment adopted a between-participants design. In the experiment, we studied the similarities and differences between these two groups as they completed a series of association tasks that link the new taught words with representative pictures and ArSL based on three measures: completion rate, number of errors, and time on task. Based on these measures, the following hypotheses are proposed:

- H1: Compared to the traditional method of learning new printed vocabulary, utilizing Word and Sign increases the completion rate and reduces the error rate in an association task between a new learned word and a representative picture or ArSL.
- H2: The time needed to complete the association tasks is reduced when the participants are taught the new word via Word and Sign compared to the traditional method.

4.1 Participants

Twenty participants who are deaf or hard of hearing were recruited from Al-Amal Institute (six students) and public elementary school 300 (fourteen students). Al-Amal Institute is an exclusive deaf institute, while public elementary school 300 is a public school with exclusive classes for students who are deaf or hard of hearing. Both schools utilize the elementary curriculum provided by the Ministry of Education in Saudi Arabia. All participants were female first-grade students with mild (4 participants), moderate (5 participants), or severe (11 participants) hearing loss. None of the students had any additional disabilities. Students’ ages ranged from 7 to 12 years old, with a mean of 8.5 years and a standard deviation (SD) of 1.28 years. All students were familiar with tablets and/or smartphones.

The reading levels of the students, prior to recruitment, were determined with the assistance of the educators using two scales: the student’s ability to understand a word and link it with its corresponding sign, and the student’s ability to fingerspell the word. The teachers were asked to assess the reading level of a student as either poor, good (i.e., average), or excellent based on these scales. The recruited participants were then divided equally into two experimental groups based on this assessment (10 students in each group). Each group consisted of two poor students, three average students, and five excellent students.
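A balanced split of this kind can be sketched as follows. The level counts (4 poor, 6 good, 10 excellent) follow from the group composition stated above; the participant identifiers, the function name, and the random allocation within each level are assumptions, since the paper does not state how students within a level were allocated.

```python
import random
from collections import defaultdict


def split_by_level(levels, seed=0):
    """Split participants into two groups with equal numbers from each reading level.

    `levels` maps a participant id to 'poor', 'good', or 'excellent'.
    Allocation within a level is random here, which is an assumption.
    """
    rng = random.Random(seed)
    by_level = defaultdict(list)
    for participant, level in levels.items():
        by_level[level].append(participant)

    group_a, group_b = [], []
    for level in sorted(by_level):
        members = by_level[level]
        rng.shuffle(members)
        half = len(members) // 2
        group_a += members[:half]
        group_b += members[half:]
    return group_a, group_b


# Hypothetical ids P01..P20 with the level counts implied by the text.
level_list = ["poor"] * 4 + ["good"] * 6 + ["excellent"] * 10
levels = {f"P{i:02d}": level for i, level in enumerate(level_list, start=1)}
ar_group, traditional_group = split_by_level(levels)
```

With an odd number of students at some level, the second group would receive the extra student; that case does not arise with the counts above.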

4.2 Materials

An Android smartphone with the Word and Sign application was utilized for the experiment. The electronic book provided with the application was printed to produce a physical book, as recommended by the educators, to support the learning process. Morae, a usability software tool, was used to record the users’ interaction with the application and log test results, which were then analyzed and visualized.

Experimental Word Set. Twenty-six words were originally selected from the current first-grade reading and comprehension curriculum. A knowledge test was performed a week prior to the experiment to exclude already known vocabulary. The knowledge test was carried out by asking the participant to associate a printed word with its corresponding pictures and ArSL. Of the twenty-six words, thirteen were excluded, leaving thirteen words that were taught to the students.

Experimental Task. To assess the effectiveness of utilizing Word and Sign when learning new printed vocabulary and thus its effectiveness in aiding reading, a series of association tasks were used to determine the acquisition of the new words by the participants. The tasks were divided into two groups to determine two levels of possible new vocabulary acquisition via pictures or decoding to ArSL. Children who are deaf or hard of hearing are arguably known as visual learners [15]. When associating printed text with its corresponding pictures, a child maintains the image as a memory and recalls it when presented with the text. In the case of decoding into ArSL, a child who is deaf maps the sign to the corresponding text, therefore bilingually linking the Arabic (second language) written word with its corresponding sign from their native language, i.e., ArSL. These two levels of acquisition were represented by two tasks:

- Associate printed word with corresponding picture: in this task, participants are presented with one of the taught words and four pictures, only one of which corresponds to the printed word.
- Associate printed word with corresponding ArSL: this task asks the participants to link the printed word with one of four videos of a human interpreter performing the word’s corresponding sign.

Each of the tasks consisted of ten subtasks, which were selected from thirteen words not known to the students. The main goal of the tasks was to determine if there are any learning differences when using the traditional method of vocabulary teaching and when using AR with Word and Sign.

4.3 Procedure

The twenty participants were divided into two equal groups. The first group was taught the thirteen new words using a traditional method of teaching. This involved the teacher presenting the printed word and then using pictures, fingerspelling, and ArSL to convey its meaning. The second group was taught the new words using Word and Sign. In this case, the teacher presented the written word and used the application in groups of students to convey the word’s meaning. Prior to evaluation, the AR group and the educator were introduced to the AR application using the words already known by the students. The size of the phone often required the educator to group a smaller number of students together to show them the application. To assure the attentiveness of the educator while teaching, the first group was similarly instructed in subgroups of students after an initial traditional approach was used. Each group was taught the thirteen words over three days. The same collection of words was taught in the same order over the three days for each group.

On the final day of the evaluation, the tasks were presented to the participants individually. This session was held in the classroom and lasted approximately five hours for each experimental group, i.e., half an hour for each participant. Teaching was also held in the classroom. For each task, the participant was asked to form the correct association between the printed word and picture or ArSL. The participants were allowed two trials to obtain the correct answer. If they did not give the right answer by the second trial, they were shown the correct association. The association tasks were counterbalanced across participants for pictures and ArSL. All sessions were video recorded, and the task answers were recorded via Morae.

4.4 Results

The results were analyzed using a mixed factorial analysis of variance (ANOVA) that treated the teaching methods, i.e., groups, as a between-participant factor (AR versus traditional) and the association tasks as a within-participant repeated measure (picture versus ArSL).
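As a reading aid, the degrees of freedom attached to every F statistic reported in this section follow directly from this design. The notation is ours rather than the paper's: \(N=20\) participants, \(g=2\) groups, and \(t=2\) association tasks.

\[
\begin{aligned}
df_{\text{group}} &= g-1 = 1, & df_{\text{error, between}} &= N-g = 18,\\
df_{\text{task}} &= t-1 = 1, & df_{\text{group}\times\text{task}} &= (g-1)(t-1) = 1,\\
 & & df_{\text{error, within}} &= (N-g)(t-1) = 18.
\end{aligned}
\]

Each effect therefore has 1 numerator and 18 denominator degrees of freedom, which is why all tests are reported as \(F_{1,18}\).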

The completion rate measures the ability of the participants to complete each of the association tasks successfully. Figure 2 shows the average successful completion rate for participants between groups and association tasks. An ANOVA showed that participants taught via AR using Word and Sign (\(mean=83.33\%, SD=12.09\%\)) completed significantly more tasks successfully (\(F_{1,18}=18.78, p<0.01\)) compared to the group in which the participants were taught the new words via the traditional teaching approach (\(mean=61.67\%, SD=19.19\%\)). The analysis showed that there was no significant difference (\(F_{1,18}=2.18, p=0.16\)) in completion rate for the same participants on the two association tasks: link printed word with picture and with ArSL. There was also no significant group \(\times \) task interaction (\(F_{1,18}=0.24, p=0.63\)).

The number of errors was also logged and analyzed. For each task, a participant could make zero, one, or two errors. Participants with zero or one error were considered to have completed the task successfully, whereas those with two errors were not. Figure 3 shows the average number of errors for participants between groups and association tasks. An ANOVA showed that the number of errors was significantly higher (\(F_{1,18}=11.27, p<0.01\)) for the traditional group (\(mean=0.95, SD=0.36\)) than for the AR group (\(mean=0.57, SD=0.32\)). No significant difference in number of errors (\(F_{1,18}=2.72, p=0.12\)) was found between the two association tasks. There was also no significant group \(\times \) task interaction (\(F_{1,18}=0.49, p=0.49\)).
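The scoring rule (two trials per task, success with zero or one error) can be written out as a short sketch. The function names and the example outcomes are hypothetical, and averaging errors per task is an assumption about how the reported means were computed.

```python
def score_subtask(trials):
    """Score one association task.

    `trials` holds one boolean per attempt actually made (True = correct),
    i.e. [True], [False, True], or [False, False].
    Returns (completed, errors).
    """
    if not 1 <= len(trials) <= 2:
        raise ValueError("each task allows one or two trials")
    errors = sum(1 for correct in trials if not correct)
    completed = errors < 2  # zero or one error counts as a success
    return completed, errors


def summarize(subtasks):
    """Return (completion rate in percent, mean errors per task) for one participant."""
    scored = [score_subtask(trials) for trials in subtasks]
    completion_rate = 100 * sum(done for done, _ in scored) / len(scored)
    mean_errors = sum(errors for _, errors in scored) / len(scored)
    return completion_rate, mean_errors


# Hypothetical outcomes for ten tasks; not data from the study.
example = [[True]] * 6 + [[False, True]] * 2 + [[False, False]] * 2
rate, mean_errors = summarize(example)
```

In this made-up example, eight of ten tasks count as completed, so the completion rate is 80% with a mean of 0.6 errors per task.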

Time on task refers to the time required by a participant to associate a printed word with its corresponding picture or ArSL. Figure 4 shows the average time on task for participants between groups and association tasks. An ANOVA showed that participants taught the new words via AR (\(mean=0.18\) min, \(SD=0.04\) min) took significantly less time to perform the tasks (\(F_{1,18}=9.12, p<0.01\)) than those that were taught via the traditional method (\(mean=0.23\) min, \(SD=0.06\) min). The analysis showed that there was no significant difference (\(F_{1,18}=2.79, p=0.11\)) in time on task between the two association tasks. However, there was a significant group \(\times \) task interaction (\(F_{1,18}=8.17, p=0.01\)), with the time difference between the two groups significantly widening when participants associated the printed text with ArSL (\(mean=0.22\) min, \(SD=0.02\) min) compared to associating the words with pictures (\(mean=0.19\) min, \(SD=0.07\) min).

5 Discussion

This section discusses the results in view of our hypotheses, which argue for better performances (completion rate, number of errors, and time on task) when completing the association task after being taught thirteen new vocabulary words.

The completion rates of participants on the association tasks were significantly higher after being taught the new printed vocabulary using Word and Sign, a mobile AR application. This significance was noted for the two evaluated tasks, i.e., associating printed text with either pictures or ArSL, with no observed interaction between the groups and tasks. This evidence partly supports our hypothesis (H1), which states that, compared to the traditional method of learning new printed vocabulary, utilizing Word and Sign will increase the completion rate and reduce the number of errors for participants as they complete the evaluation tasks. The second part of the hypothesis (H1) regarding the number of errors was similarly supported. The participants were given two chances to complete the tasks successfully, and errors were logged. The results showed that participants made fewer mistakes when having learned the words via AR compared to the traditional method.

The second hypothesis (H2) states that the time needed to complete the association tasks will be significantly reduced for participants who are taught the new words via the mobile AR application compared to those taught using the traditional approach. The results support this hypothesis, with the participants requiring significantly less time to associate printed words with a picture or ArSL when having learned the words via AR compared to the traditional approach. The analysis also showed that there was an interaction between the type of group and association task, with the time difference between the two groups widening when participants associated the printed words with ArSL. This was to be expected, as the struggle with ArSL was previously observed for the same participants [2].

Several observations were noted as the children interacted with the mobile AR application. The children took to the application easily after an initial introduction to its features. This is understandable since previous findings have shown that a majority of parents of deaf children encourage their children to use technology for educational and entertainment purposes [2]. While using Word and Sign, the majority of the children signed along with the child interpreter, as can be seen in Fig. 5. The use of a child interpreter for the ArSL videos was not previously considered in the literature, where the majority of studies either utilized avatars or videos of adult interpreters (see Sect. 2). The teacher suggested that this might have encouraged the children’s participation with the interpreter.

These findings highlight the value of incorporating visually enhancing technologies, such as AR, to aid literacy when teaching children who are deaf or hard of hearing. The reception of the technology by educators and the children was greatly encouraging, signifying the considerable need of this societal niche in Saudi Arabia. The mobility of the AR application can also support access outside the classroom, thus encouraging parental participation as well. The tool can also be used by hearing non-signers to learn ArSL.

6 Conclusions and Future Work

This paper evaluated the use of Word and Sign, an AR application created for the literacy development of children who are deaf or hard of hearing. The performance of the AR application was compared against that of traditional teaching methods that utilize pictures, fingerspelling, and ArSL. Twenty students who were deaf or hard of hearing were recruited for the evaluation and divided into two groups: one that was taught via AR and the other via a traditional approach. Through a series of association tasks (linking printed words with pictures and ArSL), the students’ performances were logged to determine the completion rate, number of errors, and time on task. The AR group was found to have performed significantly better, with a greater completion rate and fewer errors, compared to the traditional group. This group also took significantly less time to associate printed words with pictures and ArSL. These results highlight the effectiveness of AR for the literacy development of Arabic children who are hearing impaired.

In future work, we intend to improve and extend the AR application and examine various factors in the following ways:

- Expand the number of words to incorporate vocabulary from other elementary grades.
- Thematically divide the presentation of words to mimic current elementary curricula and thus better support learning.
- Provide support for Android-based tablets and consider further expansion to other mobile operating systems, e.g., iOS.
- Conduct evaluations to assess the performances of children who are deaf or hard of hearing over time.

References

1. Allen, T.E.: ASL skills, fingerspelling ability, home communication context and early alphabetic knowledge of preschool-aged deaf children. Sign Lang. Stud. 15(3), 233–265 (2015)

2. Almutairi, A., Al-Megren, S.: Preliminary investigations on augmented reality for the literacy development of deaf children. In: Badioze Zaman, H., et al. (eds.) IVIC 2017. LNCS, vol. 10645, pp. 412–422. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-70010-6_38

3. Alnafjan, A., Aljumaah, A., Alaskar, H., Alshraihi, R.: Designing ‘Najeeb’: technology-enhanced learning for children with impaired hearing using Arabic sign-language ArSL applications. In: 2017 International Conference on Computer and Applications, ICCA 2017, pp. 238–273. IEEE (2017)

4. Alyami, H., Soer, M., Swanepoel, A., Pottas, L.: Deaf or hard of hearing children in Saudi Arabia: status of early intervention services. Int. J. Pediatr. Otorhinolaryngol. 86, 142–149 (2016)

5. Cadeñanes, J., Arrieta, A.G.: Development of sign language communication skill on children through augmented reality and the MuCy model. In: Mascio, T.D., Gennari, R., Vitorini, P., Vicari, R., de la Prieta, F. (eds.) Methodologies and Intelligent Systems for Technology Enhanced Learning. AISC, vol. 292, pp. 45–52. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-07698-0_6

6. Cadeñanes, J., González Arrieta, M.A.: Augmented reality: an observational study considering the MuCy model to develop communication skills on deaf children. In: Polycarpou, M., de Carvalho, A.C.P.L.F., Pan, J.-S., Woźniak, M., Quintian, H., Corchado, E. (eds.) HAIS 2014. LNCS (LNAI), vol. 8480, pp. 233–240. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-07617-1_21

7. Cano, S., Collazos, C.A., Flórez Aristizábal, L., Moreira, F.: Augmentative and alternative communication in the literacy teaching for deaf children. In: Zaphiris, P., Ioannou, A. (eds.) LCT 2017. LNCS, vol. 10296, pp. 123–133. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-58515-4_10

8. Cihak, D.F., Moore, E.J., Wright, R.E., McMahon, D.D., Gibbons, M.M., Smith, C.: Evaluating augmented reality to complete a chain task for elementary students with autism. J. Spec. Educ. Technol. 31(2), 99–108 (2016)

9. Clark, M.D., Hauser, P.C., Miller, P., Kargin, T., Rathmann, C., Guldenoglu, B., Kubus, O., Spurgeon, E., Israel, E.: The importance of early sign language acquisition for deaf readers. Read. Writ. Q. 32(2), 127–151 (2016)

10. Craig, A.B.: Mobile augmented reality (Chap. 7). In: Understanding Augmented Reality (2013)

11. Diegmann, P., Schmidt-Kraepelin, M., Eynden, S.V.D., Basten, D.: Benefits of augmented reality in educational environments - a systematic literature review. Wirtschaftsinformatik 3(6), 1542–1556 (2015)

12. Goldin-Meadow, S., Mayberry, R.I., Read, T.O.: How do profoundly deaf children learn to read? Learn. Disabil. Res. Pract. 16(4), 222–229 (2001)

13. Hanafi, A.: The reality of support services for students with disabilities and their families audio and satisfaction in the light of some of the variables from the viewpoint of teachers and parents. In: Conference of Special Education, pp. 189–260 (2007)

14. Haptonstall-Nykaza, T.S., Schick, B.: The transition from fingerspelling to English print: facilitating English decoding. J. Deaf Stud. Deaf Educ. 12(2), 172–183 (2007)

15. Herrera-Fernández, V., Puente-Ferreras, A., Alvarado-Izquierdo, J.: Visual learning strategies to promote literacy skills in prelingually deaf readers. Revista Mexicana de Psicologia 31(1), 1–10 (2014)

16. Jones, M., Bench, N., Ferons, S.: Vocabulary acquisition for deaf readers using augmented technology. In: 2014 2nd Workshop on Virtual and Augmented Assistive Technology, VAAT 2014; Co-located with the 2014 Virtual Reality Conference - Proceedings, pp. 13–15. IEEE (2014)

17. Kingdom of Saudi Arabia General Authority of Statistic: Demographic Survey (2016). https://www.stats.gov.sa/en/852

18. Kipper, G., Rampolla, J.: Augmented Reality. Elsevier, New York (2013)

19. Kožuh, I., Hauptman, S., Kosec, P., Debevc, M.: Assessing the efficiency of using augmented reality for learning sign language. In: Antona, M., Stephanidis, C. (eds.) UAHCI 2015. LNCS, vol. 9176, pp. 404–415. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-20681-3_38

20. McMahon, D.D., Cihak, D.F., Wright, R.E., Bell, S.M.: Augmented reality for teaching science vocabulary to postsecondary education students with intellectual disabilities and autism. J. Res. Technol. Educ. 48(1), 38–56 (2016)

21. Zainuddin, N.M.M., Badioze Zaman, H., Ahmad, A.: Learning science using AR book: a preliminary study on visual needs of deaf learners. In: Badioze Zaman, H., Robinson, P., Petrou, M., Olivier, P., Schröder, H., Shih, T.K. (eds.) IVIC 2009. LNCS, vol. 5857, pp. 844–855. Springer, Heidelberg (2009). https://doi.org/10.1007/978-3-642-05036-7_80

22. Mellon, N.K., Niparko, J.K., Rathmann, C., Mathur, G., Humphries, T., Jo Napoli, D., Handley, T., Scambler, S., Lantos, J.D.: Should all deaf children learn sign language? Pediatrics 136(1), 170–176 (2015)

23. Oka Sudana, A.A.K., Aristamy, I.G.A.A.M., Wirdiani, N.K.A.: Augmented reality application of sign language for deaf people in Android based on smartphone. Int. J. Softw. Eng. Appl. 10(8), 139–150 (2016)

24. Singh, M., Singh, M.P.: Augmented reality interfaces. In: IEEE Internet Computing (2013)

25. Smith, C.C., Cihak, D.F., Kim, B., McMahon, D.D., Wright, R.: Examining augmented reality to improve navigation skills in postsecondary students with intellectual disability. J. Spec. Educ. Technol. 32(1), 3–11 (2017)

26. Stone, A., Kartheiser, G., Hauser, P.C., Petitto, L.A., Allen, T.E.: Fingerspelling as a novel gateway into reading fluency in deaf bilinguals. PLoS ONE 10(10), e0139610 (2015)

27. Torgesen, J.K., Hudson, R.F.: Reading fluency: critical issues for struggling readers. In: What Research Has to Say About Fluency Instruction, pp. 130–158 (2006)

28. Wellner, P., Mackay, W., Gold, R.: Computer-augmented environments: back to the real world. Commun. ACM 36(7), 24–27 (1993)

29. Wu, H.K., Lee, S.W.Y., Chang, H.Y., Liang, J.C.: Current status, opportunities and challenges of augmented reality in education. Comput. Educ. 62, 41–49 (2013)

30. Zainuddin, N.M.M., Zaman, H.B., Ahmad, A.: Heuristic evaluation on augmented reality courseware for the deaf. In: International Conference on User Science and Engineering, pp. 183–188 (2011)

Acknowledgments

This work was supported by the Deanship of Scientific Research at King Saud University. We thank the students, educators, and directors of Al-Amal Institute and public elementary school 300 for their encouragement and cooperation throughout the assessment.

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Al-Megren, S., Almutairi, A. (2018). Assessing the Effectiveness of an Augmented Reality Application for the Literacy Development of Arabic Children with Hearing Impairments. In: Rau, PL. (eds) Cross-Cultural Design. Applications in Cultural Heritage, Creativity and Social Development. CCD 2018. Lecture Notes in Computer Science, vol 10912. Springer, Cham. https://doi.org/10.1007/978-3-319-92252-2_1

DOI: https://doi.org/10.1007/978-3-319-92252-2_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-92251-5

Online ISBN: 978-3-319-92252-2

eBook Packages: Computer Science, Computer Science (R0)