Abstract

Pearson is committed to offering learning products that are designed with empirical learning science research in mind and that demonstrate efficacy in enhancing learning outcomes. In applying the learning sciences to our designs, however, we must be mindful of human factors, particularly in ensuring that our research-based products meet the needs of our students and instructors. Accordingly, we have implemented Design-Based Research (DBR) processes to investigate student needs, iteratively test product designs, and inform design decisions. Our continually refined, scalable process has yielded many successes in both current and forthcoming products. This paper first outlines the history and theoretical underpinnings of design-based research and then describes the need for it at Pearson. Next, we provide an overview of our design-based research approach and processes. Finally, we share successes informed by design-based research.

1 Evolving Design-Based Research to Support Human Factors

In designing learning experiences, it is imperative to look to learning sciences research for guidance on what will yield positive learning outcomes. However, much learning research is conducted in laboratories or other controlled environments that ignore the inseparable relationship between cognition and context. When applying learning principles to product design, there are important considerations regarding the product’s context in the learning sequence: student support needs, cognitive and noncognitive student attributes, course implementation, and numerous other human factors.

For this reason, Design-Based Research (DBR) at Pearson has been created and implemented as a set of evidence-based methodologies for integrating learning design principles into experience design through a student- and instructor-centered approach [1]. DBR is an effective way to operationalize evidence-based practice across our research and design teams. “Design-based research, which blends empirical educational research with the theory-driven design of learning environments, is an important methodology for understanding how, when, and why educational innovations work in practice” [2, p. 5]. DBR originated as a research method employed in classrooms to extend laboratory findings about learning by accounting for instructional and contextual needs when measuring the learning impact of innovative instructional practices [3]. Our approach to DBR addresses the same problem: bringing together evidence, context, and human needs to inform innovative design ideas and decisions. Since its infancy in the early 1990s, DBR has continued to evolve as an evidence-based design practice that researchers and designers now use to inform digital learning tools and hybrid learning experiences [4].

There are few defined processes for DBR in the academic literature, as most experts who leverage this method develop their own practices, processes, and tools. However, the emerging field of User Experience Design, pioneered by innovators at IDEO and Stanford’s d.school, provides starting-point processes and toolkits that can be leveraged to further operationalize DBR. Adapting and evolving these toolkits for specific contexts is nonetheless essential for any organization seeking to make DBR an integral part of an evidence-based experience design strategy [5].

It is also vital to draw on evidence from psychology, anthropology, business, and the learning sciences, much as game design currently takes a cross-disciplinary approach [6]. The main goal of creating and evolving DBR practices and tools is to develop learning-strategy-oriented explanations of outcomes across the learning ecology using specific models and tools [7]. A well-designed DBR process combines multidisciplinary evidence, student need themes, evidence-based design decisions, and context into one design and validation process.

2 Improving Learning Efficacy Using Design-Based Research

The need for an operationalized DBR process stems from Pearson’s stated goal of designing and developing efficacious learning experiences. In recent years, Pearson has become increasingly transparent about its processes and the impact of its products, as demonstrated by publishing its Learning Design Principles and by conducting public efficacy evaluations in cooperation with third-party evaluators. To lay a solid foundation for efficacy measurement, Pearson’s Learning Designers strive to design learning experiences that explicitly support and boost student outcomes (e.g., processes, strategies, motivation, engagement, relevance, self-monitoring, and grades), as demonstrated by achievement evidence and usage analytics. To achieve these design goals, we needed processes and testing efficiencies that would build a scalable capacity for rigorously and rapidly testing ideas within our design and product development process. Essentially, we wanted the ability to test, at multiple points in the design process, whether our application of learning-science-based principles in specific product designs was meeting student needs as intended, and to do so quickly and reliably.

Accordingly, the main purpose of the Pearson DBR function and its team of Learning Researchers is to support a wide range of product creators (e.g., Learning Designers, UX Designers, User Assistance) and product owners (Product Managers and Portfolio leaders) as they seek to understand the needs of their students. Although multiple established frameworks for design-based research exist [8,9,10,11], we used them to inform our own model so that it would better align with our product portfolio and its respective business requirements and workflows.

Our team aims to build a body of evidence that the design decisions we make will support learning. We do this throughout the design process, from discovery and exploratory phases through development, testing, and market release. By systematically testing designs with students and instructors throughout this process, we create a contextualized understanding of students, learning tools, and learning environments, from which we can progressively refine learning experiences in an iterative manner. With this in mind, Sect. 3 below outlines our DBR program in greater detail, followed by Sect. 4, which highlights various student-centered successes we have experienced as a result of our DBR efforts.

3 Pearson’s Design-Based Research Program

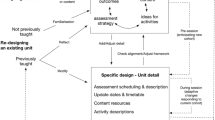

Incorporating Design-Based Research into our design process has greatly improved our capacity to apply learning science to design in ways that account for important human factors. Projects can enter the process at various points and move through it iteratively, depending on stakeholder and business requirements (see Fig. 1). We consistently seek to understand the current state of the market, specifically the key learning problems to solve, and then use a variety of methods to discover students’ pain points and needs in terms of context, attitudes, skills, and behaviors so that we can best shape a solution. Next, we consider evidence on efficacious practices from the learning sciences literature. Finally, we design and validate innovative learning experiences directly with students, using live workshops, surveys, and focus groups to understand how we can improve an experience and to identify where we need additional evidence to inform designs. This process ensures that our iterative design decisions rest not only on the learning sciences literature but also on real student and instructor needs, so that we provide impactful and efficacious learning solutions. Essentially, it is how we translate research into evidence-based practice and application.

3.1 Discovering Learning Needs and Validating Design Decisions with Students

Our Design-Based Research process uses an iterative, mixed-methods research approach to discover students’ ways of working and understand their learning needs across specific learning contexts. We then use these data, aligned with learning science evidence, to inform design ideas and decisions, which we iteratively validate with students.

Since instituting our Design-Based Research program in early 2016, we have scaled our capacity to the point where learning ideas and design decisions are tested every day with real students. A core component of operationalizing this scaled offering is conducting discovery and validation research with students and instructors to better understand their learning strategies and processes. Using this feedback, we conduct ongoing thematic analyses and apply other qualitative research methods to curate prioritized lists of core student needs that are supported by learning science evidence and business strategies. We continually evaluate our effectiveness with our internal stakeholders to determine how to inform design investments in the most useful ways. Understanding these external and internal stakeholder needs allows us to prioritize and inform business investments as well as learning experience design decisions, helping create digital learning tools that are efficacious and that students want to use.
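The prioritization step can be illustrated, in a deliberately simplified form, by ranking coded themes according to how many participants raised them. This is a hypothetical sketch, not the authors’ procedure: the data format (participant IDs mapped to researcher-assigned theme codes), the theme labels, and the function name `prioritize_themes` are assumptions. Real thematic analysis also weighs learning science evidence and business strategy, which a frequency count does not capture.

```python
from collections import Counter

def prioritize_themes(coded_responses):
    """Rank themes by the number of distinct participants who mention them.

    coded_responses: hypothetical mapping of participant ID -> list of theme
    codes assigned by researchers during thematic analysis.
    Returns (theme, participant_count) pairs, highest count first, ties by name.
    """
    counts = Counter()
    for codes in coded_responses.values():
        # Count each theme at most once per participant so that one verbose
        # participant cannot dominate the ranking.
        counts.update(set(codes))
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))

# Hypothetical coded feedback from four participants.
responses = {
    "p1": ["relevance", "self-management", "relevance"],
    "p2": ["relevance", "engagement"],
    "p3": ["self-management", "information-management"],
    "p4": ["relevance", "self-management"],
}
print(prioritize_themes(responses))
# [('relevance', 3), ('self-management', 3), ('engagement', 1), ('information-management', 1)]
```

Counting participants rather than raw mentions is a design choice made here for illustration; weighting by mention frequency would instead favor the most talkative students.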

Our latest prioritized needs include the following, which inform digital product design as part of a robust Higher Education DBR strategy:

- Real-world relevance, defined by students as overt connections provided in the learning experience between concepts, courses, skills, and outcomes that demonstrate how content and activities are designed to explicitly support real-world applications, including setting and achieving career goals.
- Engagement and motivation, defined by students as the will and skill to make prioritized decisions to get started and persist with studying, especially when other ways of spending their time are more appealing.
- Self-management, defined by students as strategies and tools for managing study time and completing assignments, including preparing for class and exams in efficient and effective ways.
- Information management, defined by students as strategies and tools for discovering, saving, referencing, citing, and combining content to generate ideas and produce quality deliverables, including writing assignments.
- 21st-century skills, defined by students as the career readiness and strategies needed to succeed in the modern workplace, especially collaboration.

Establishing these prioritized needs informs our overall design strategy, and in turn has directly impacted efficacy and product revenue. As we continually work to understand the most pressing student needs, we partner with our Product teams to implement next-generation, student-centered design ideas and decisions, based on a nimble and customized collection of research methods.

Generally, our DBR research teams gather student and instructor (i.e., user) feedback using surveys, focus groups, and interviews. Some protocols may also include co-design workshops and cutting-edge techniques such as biometric testing. However, the effectiveness of our DBR strategy comes not from which methods we choose but from how we use each of them in an interconnected research process within a larger product development cycle. Each method is planned and executed with scientific rigor while the resulting data are continually orchestrated throughout a design process involving multiple stakeholders. Our strength comes from continually viewing this stream of data through the lens of the learning sciences, the decades-long body of research on what has been demonstrated to reliably support learning. The following are more detailed descriptions of the research methods we use and customize to meet the needs of our varied stakeholders.

The first method is ethnography, a staple of any social or behavioral research toolkit. We only recently scaled our process to include this type of research, but it has already helped create a better understanding of our students, their needs, and how we can better support them when they interact with our products. Conducted in person or remotely, these semi-structured interviews allow research and design teams to take a closer look at a “week in the life of a student.” What is their course load? Are they already working in the field they are studying? When do they feel the most stressed? Do they work or study with classmates, in small groups, or alone? This research is the foundation upon which student profiles (or personas) can be created.

Survey research is used during our DBR process for early student validation testing, gathering data remotely and asynchronously from our database of students. We can begin the body-of-evidence story by better understanding the current state of students’ study and reading habits, their initial impressions of a proposed enhancement or learning tool, and other preferences, perceptions, values, processes, and strategies. We have created a scalable process in which a learning scientist and other stakeholders receive feedback from dozens of students within a matter of days. This efficiency empowers the design team to test new ideas in an informative, low-risk manner. After receiving survey feedback, adjusting the design accordingly, and testing again as needed, learning scientists and designers may wish to delve deeper with students face-to-face and for an extended period of time, such as in a focus group.

Building on multiple iterations of survey feedback, researchers conduct focus groups with small groups of students (in person or remotely via video chat) to continue soliciting feedback, now on the specific areas of interest identified in prior tests. Focus group participants might be asked to describe their ideal experience or to compare their processes and strategies with the mockups or wireframes of an enhancement that the moderator presents on behalf of the design team. Participants have multiple opportunities to compare their ideas with each other and to disagree with or challenge the ideas and suggestions being presented. Focus groups allow learning scientists and design teams to better understand specific aspects of the learning experience from the student perspective and to progressively narrow their research questions accordingly.

Cognitive task analysis, conducted both in person and remotely, provides opportunities to observe tasks in more authentic and contextualized ways. This affords researchers, learning scientists, and designers ways to better account for how the different elements of a learning environment are interrelated and interdependent. Additionally, this method brings student needs into context and is the beginning of more sophisticated, often longitudinal, testing of learning experiences.

Drawing on the field of design [12,13,14], we conduct co-design workshops with students, using a suite of real-world, collaborative feedback tools during two full-day workshops. The goal of these tools is to explore more fully how to support students’ need for real-world relevance in Higher Education and to inform our design efforts with key pieces of evidence from the learning science literature. Co-designing directly with students allows us to see how they define learning challenges and what types of solutions would best support their learning goals. Following the co-design workshops, we expand on a tool’s design internally and validate it with additional groups of Higher Education students. Moving beyond a focus on content and technology to synthesize human factors and fundamental learning needs allows us to innovate faster and generate solutions that students readily accept.

When we are interested in measuring users’ performance during a learning experience, we use biometric research methods to observe learning in action. This moves beyond self-report and leverages objective observational data [15]. Biometrics is the study of user biosignals (e.g., eye tracking, facial expressions, galvanic skin response (GSR), electrocardiogram (ECG), electromyogram (EMG), and electroencephalography (EEG)) during a user experience [16]. The benefit of this research approach is the ability to measure unconscious biosignals that provide insight into the user’s information processing, reducing the potential for research bias. Triangulating student performance and potential product improvements using biometrics, survey research, co-design, and other types of learning science research is a powerful way to determine the accuracy and completeness with which specific users can achieve specific instructional and learning goals in specific learning contexts.

4 User-Centered Design-Based Research Examples

Our DBR process, as described above, has informed the iterative, evidence-based enhancement of multiple market-leading learning experiences, a sampling of which are as follows.

4.1 Efficiently Integrating Writing Process Tools into Word Processing

Ongoing, iterative design-based research was instrumental in designing a writing experience in a current product that gives students a set of tools for applying the writing process within a word processor, enabling them to use research-based strategies without ever leaving their writing application. Through DBR surveys and focus groups, the design team determined which features would be most valuable to students while drafting their work and identified ways to surface them seamlessly in the context of a word processor (see Fig. 2). These features included citation and bibliography creation tools, outlining, and references on topics including grammar and usage, guidance for the steps in the writing process, and idea generation for various writing genres.

4.2 Digital Annotation to Scaffold Studying and Review

In exploring the needs of students while they read, we discovered that they do not feel an adequate note-taking solution exists despite the many tools available to them. Accordingly, we iteratively designed and validated a note-taking system that helps students identify and categorize important information in the moment as they read, flagging it for later review and study. The system is designed with empirical learning science findings in mind and embodies them in ways that reflect students’ expressed preferences and study habits. Although this effort may sound simple at face value, it garnered some of the most positive responses we have received from DBR participants because it was explicitly tailored to meet their needs and assist them in a task that is important to their academic success.

4.3 Algorithm-Driven Memorization Support

After hearing from students that studying for multiple exams can be both intimidating and difficult to manage, we iteratively developed an adaptive studying tool that helps them prioritize what (and when) to study. The tool generates algorithm-driven, optimized study plans based on student confidence and memory progress during practice sessions, and it also leverages the benefits of spaced repetition [17].
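The paper does not specify the tool’s algorithm. As one illustration of confidence-aware spaced repetition, the hypothetical sketch below uses a Leitner-style box scheme: a confident, correct answer promotes an item to a longer review interval, a correct but unsure answer keeps its interval, and a miss resets it to the shortest one. The interval values, the `Card` structure, and the `review` and `study_plan` functions are assumptions for illustration. Expanding intervals are one common way to distribute practice, consistent with the spacing literature [17], but not necessarily what the product uses.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumed review gaps (days) for each Leitner box; box 0 is the weakest.
INTERVALS_DAYS = [1, 2, 4, 8, 16]

@dataclass
class Card:
    prompt: str
    due: date
    box: int = 0

def review(card: Card, correct: bool, confident: bool, today: date) -> Card:
    """Update a card after one practice attempt (hypothetical Leitner-style rule)."""
    if correct and confident:
        # Confident recall earns a longer gap before the next review.
        card.box = min(card.box + 1, len(INTERVALS_DAYS) - 1)
    elif not correct:
        # A miss restarts the item at the shortest interval.
        card.box = 0
    # Correct but unsure: the box is unchanged, so the gap does not grow yet.
    card.due = today + timedelta(days=INTERVALS_DAYS[card.box])
    return card

def study_plan(cards, today: date):
    """Return cards due today or earlier, weakest (lowest box) first."""
    due = [c for c in cards if c.due <= today]
    return sorted(due, key=lambda c: (c.box, c.due))

today = date(2018, 1, 1)
deck = [Card("Define encoding", due=today), Card("Define retrieval", due=today)]
review(deck[0], correct=True, confident=True, today=today)
print([c.prompt for c in study_plan(deck, today)])  # ['Define retrieval']
```

Ordering the plan by box puts the least-secure items first, which is one simple way to let memory progress drive what to study next.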

4.4 Designing an Emoji-Inspired Reading Discussion Environment

In response to low student motivation to participate in required writing prompts, we designed a reading discussion environment intended to engage students in a way similar to social media experiences. Rather than having students write with no perceived audience, the experience requires them to read and respond to each other’s work, and it scaffolds high-quality responses by providing guidance inspired in part by emoji-driven, threaded commenting. This helps students learn, in the moment, how to give each other specific, high-quality feedback and drive productive discussion.

4.5 Triangulating Student Needs Using Biometrics

The use of biometrics to understand user behavior isn’t new, but most research using these devices has focused on websites or shopping experiences. As mentioned, the work described in this paper not only examines products designed to help users learn but also looks for patterns that are specific to learning, that is, patterns that emerge while students are acquiring new knowledge or skills using digital products.

For instance, to test a new bite-size reading experience, we studied a human communication course using biometric research methods. In addition to improving accessibility, the course presented concepts, theories, and trends designed to be fun and relatable. Students found that the unique features helped them identify and understand their own communication behaviors as well as the communication behaviors of others. Specifically, taking a DBR approach, we sought to understand where students focus their attention and what types of student journeys occur when they use a sample chapter to prepare for class versus to prepare for an exam. Figures 3 and 4 illustrate where students focused their attention and their observed learning paths.
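Attention and path analyses of this kind typically start from eye-tracking fixations that have already been mapped to areas of interest (AOIs). The sketch below is an assumption about the data rather than a description of the study’s pipeline: it totals dwell time per AOI and collapses the fixation sequence into a scanpath whose transitions approximate a learning path. The AOI labels and the `(aoi, duration_ms)` tuple format are hypothetical. Running the same functions on class-preparation and exam-preparation sessions would mirror the contrast described above.

```python
from collections import Counter, defaultdict
from itertools import groupby

def dwell_time_by_aoi(fixations):
    """Sum fixation durations (ms) for each area of interest."""
    totals = defaultdict(int)
    for aoi, duration_ms in fixations:
        totals[aoi] += duration_ms
    return dict(totals)

def scanpath(fixations):
    """Collapse consecutive fixations on the same AOI into a single visit."""
    return [aoi for aoi, _ in groupby(aoi for aoi, _ in fixations)]

def transition_counts(path):
    """Count how often attention moves from one AOI to the next."""
    return Counter(zip(path, path[1:]))

# Hypothetical fixations from one student reading a sample chapter.
fixations = [
    ("heading", 200), ("body", 350), ("body", 400),
    ("figure", 300), ("body", 250), ("summary", 500),
]
print(dwell_time_by_aoi(fixations))
# {'heading': 200, 'body': 1000, 'figure': 300, 'summary': 500}
path = scanpath(fixations)
print(path)  # ['heading', 'body', 'figure', 'body', 'summary']
print(transition_counts(path)[("body", "figure")])  # 1
```

Dwell time answers “where did attention go,” while the collapsed scanpath and its transition counts answer “in what order,” which corresponds to the two views the figures describe.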

5 What’s Next for the Pearson DBR Program?

As our learning designers and researchers continue to refine our processes, protocols, and methodologies, we pursue opportunities to leverage our operational strengths and our design and research acumen. This includes exploring the potential learning benefits of future-facing technologies such as mobile augmented and virtual reality, authentic immersive simulations, biometrics-driven learning experiences, and various integrations with natural language processing and other artificial intelligence implementations.

Strengthening our alignment to product development while continuing to advocate for the student and their needs remain our priorities even as we scale and diversify our research and design capabilities. We welcome the challenges not only of the new technologies and broader impact of our work but also of improving the collective knowledge and skills of our many stakeholder partners, including the students we interact with on a daily basis.

We encourage others to explore creating or evolving a DBR program in their organizations, including implementing design-based research thinking processes to support a mindset and operational shift toward more student- (user-) and experience-centered design, implementation, and evaluation. Our organization continues to scale this service, but currently our nimble team of 10–12 learning researchers supports the majority of teams across Pearson responsible for product design and development. Starting small, scaling up, and collecting small wins along the way has proven to be an effective and impactful strategy. Our goal remains to shift mindsets and establish ways of working that continually improve the support of human factors in the design and validation of digital learning experiences.

References

1. Learning Design Principles. Pearson (2016)

2. Kelly, A.E.: Theme issue: the role of design in educational research. Educ. Res. 32(1), 3–4 (2003)

3. Brown, A.L.: Design experiments: theoretical and methodological challenges in creating complex interventions in classroom settings. J. Learn. Sci. 2(2), 141–178 (1992)

4. Payne, A.: Learning experience design thinking findings story. Pearson (2017)

5. Lanoue, S.: IDEO’s 6 Step Human-Centered Design Process: How to Make Things People Want. UserTesting Blog, vol. 9 (2015)

6. Schell, J.: The Art of Game Design: A Deck of Lenses. Schell Games (2008)

7. Reimann, P.: Design-based research: designing as research. In: Luckin, R., Puntambekar, S., Goodyear, P., Grabowski, B., Underwood, J., Winters, N. (eds.) Handbook of Design in Educational Technology, pp. 44–52 (2013)

8. Fishman, B.J., Penuel, W.R., Allen, A.-R., Cheng, B.H., Sabelli, N.: Design-based implementation research: an emerging model for transforming the relationship of research and practice. In: Yearbook of the National Society for the Study of Education, vol. 112(2), pp. 136–156 (2013)

9. Penuel, W.R., Fishman, B.J., Cheng, B.H., Sabelli, N.: Organizing research and development at the intersection of learning, implementation, and design. Educ. Res. 40(7), 331–337 (2011)

10. Barab, S.: Design-based research: a methodological toolkit for engineering change. In: The Cambridge Handbook of the Learning Sciences, 2nd edn. Cambridge University Press (2014)

11. Barab, S., Squire, K. (eds.): Design-Based Research: Clarifying the Terms. A Special Issue of the Journal of the Learning Sciences. Psychology Press (2016)

12. Sanders, E.B.-N., Stappers, P.J.: Co-creation and the new landscapes of design. CoDesign 4(1), 5–18 (2008)

13. Brown, T.: Change by Design. HarperCollins, New York (2009)

14. Sanders, E.B.-N., Stappers, P.J.: Convivial Toolbox: Generative Research for the Front End of Design. BIS Publishers, Amsterdam (2012)

15. Farnsworth, B.: What is Biometric Research? iMotions. https://imotions.com/blog/what-is-biometric-research/. Accessed 19 Apr 2017

16. Davis, S., Cheng, E., Burnett, I., Ritz, C.: Multimedia user feedback based on augmenting user tags with EEG emotional states. In: 2011 Third International Workshop on Quality of Multimedia Experience, pp. 143–148 (2011)

17. Cepeda, N.J., Pashler, H., Vul, E., Wixted, J.T., Rohrer, D.: Distributed practice in verbal recall tasks: a review and quantitative synthesis. Psychol. Bull. 132(3), 354–380 (2006)

© 2018 Springer International Publishing AG, part of Springer Nature

Payne, A., Sadauskas, J., Conley, Q., Shapera, D. (2018). Designing and Validating Learner-Centered Experiences. In: Zaphiris, P., Ioannou, A. (eds) Learning and Collaboration Technologies. Design, Development and Technological Innovation. LCT 2018. Lecture Notes in Computer Science(), vol 10924. Springer, Cham. https://doi.org/10.1007/978-3-319-91743-6_13

DOI: https://doi.org/10.1007/978-3-319-91743-6_13

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-91742-9

Online ISBN: 978-3-319-91743-6

eBook Packages: Computer Science, Computer Science (R0)