Abstract

Hand gesture recognition using electromyography (EMG) signals has attracted increased attention due to the rise of cheaper wearable devices that can record accurate EMG data. One of the outstanding devices in this area is the Myo armband, equipped with eight EMG sensors and a nine-axis inertial measurement unit. The use of the Myo armband in virtual reality, however, is very limited, because it can only recognize five pre-set gestures. In this work, we do not use these gestures, but the raw data provided by the device, in order to measure the force applied to a gesture and to use the Myo’s vibrations as a feedback system, aiming to improve the user experience. We propose two techniques designed to explore the capabilities of the Myo armband as an interaction tool for input and feedback in a virtual reality environment (VRE). The objective is to evaluate the usability of the Myo as an input and output device for selection and manipulation of 3D objects in VREs. The proposed techniques were evaluated by conducting user tests with ten users. We analyzed the usefulness, efficiency, effectiveness, learnability and satisfaction of each technique. We conclude that both techniques had high usability grades, demonstrating that the Myo armband can be used to perform selection and manipulation tasks, and that it can enrich the experience and make it more realistic by measuring the force applied to the gesture and by using the vibration feedback system.

1 Introduction

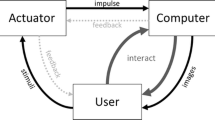

Flexibility and freedom are always desired in virtual reality environments (VREs). Traditional inputs, like mouse or keyboard, hamper the interactions between the user and the virtual environment. To improve the quality of interaction in a virtual environment, the interaction must be as natural as possible; for that reason, hand gestures have become a popular means of human-computer interaction.

Human gestures can be defined as any meaningful body movement that involves physical movements of different body parts, like fingers and hands, with the aim of expressing purposeful information or communicating with the environment [1]. Interaction through hand gesture recognition is called gesture control. It is not only used in virtual reality environments; it is also found in applications such as the control of robots [2], drones [3], and electronics [4], and in simple applications like games, slide presentations, music, video, or cameras. Sign language recognition and gesture control are two major applications for hand gesture recognition technologies [5]. Particularly in virtual reality environments, gesture recognition and gesture control may resolve some problems, such as losing the reference of the input controls in the real world.

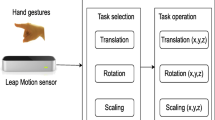

So far, hand gesture recognition has followed two approaches [6]. The first uses cameras and image processing and is called visual gesture recognition. The other uses devices that record the electromyography (EMG) signals of the arm and, with the additional information of an accelerometer and a gyroscope, translate them into gestures [5].

Hand gesture recognition using EMG has attracted increased attention due to the rise of cheaper wearable devices that can record accurate EMG data. One of the outstanding devices in this area is the Myo armband (https://www.myo.com), equipped with eight EMG sensors and a nine-axis inertial measurement unit (IMU).

Hand gesture control empowers developers with tools to offer the user a better experience when it comes to selection and manipulation of objects in virtual environments. Additionally, the new wearable devices based on EMG recognition and translation into gestures provide a new variable: the intensity of the electrical activity produced by the muscles involved in a gesture. From now on, in this work, we will refer to it as the “force” applied to the gesture.

The objective of this work is to evaluate the usability of the Myo armband as a device for selection and manipulation of 3D objects in virtual reality environments, aiming to improve the user experience by taking advantage of the ability to calculate the force applied to a gesture and leveraging the Myo’s vibrations as a feedback system. For that purpose, we propose two techniques (called Soft-Grab and Hard-Grab techniques) designed to explore the capabilities of the Myo armband as an interaction tool for input and feedback in a VRE.

This paper is structured as follows. The next section presents the basic concepts and summarizes related works. Then, in Sect. 3, we present the methodology of this work, including the proposed tasks and techniques, the definition of the test environment, and the execution of the user evaluations. Section 4 shows the results of the tests and presents the comparison between the techniques. The last section presents the conclusions of this work.

2 Background

2.1 Electromyography Signal Recognition

Electromyography signal recognition plays an important role in Natural User Interfaces. EMG is a technique for monitoring the electrical activity produced by muscles [7]. There is a variety of wearable EMG devices such as Myo armband, Jawbone, and some types of smartwatches. When muscle cells are electrically or neurologically activated, these devices monitor the electric potential generated by muscle cells in order to analyze the biomechanics of human movement.

Recognition of the EMG activity patterns specific to each hand movement allows us to increase the amount of information input into the simulation and to achieve a more natural, and hence more satisfactory, reproduction of the user’s gestures. Fundamentally, a pattern recognition-based system consists of three main steps [8]: (i) signal acquisition, in this case EMG activity acquired by an array of sensors; (ii) feature extraction, consisting of the calculation of relevant characteristics from the signals, e.g. mean, energy, waveform length, etc.; and (iii) feature translation, or classification, which assigns the extracted features to the class (gesture) they most probably belong to. Once the gesture attempted by the user of the system is recognized, it can be mapped to a command for the controlled device.
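As an illustration of step (ii), the following minimal Python sketch computes the three example features named above for a single channel window. The function name `extract_features` and the exact feature definitions (energy as a sum of squares, waveform length as the sum of absolute differences between consecutive samples) are assumptions made for illustration; they are common choices, not necessarily those used in [8].

```python
from typing import Dict, Sequence


def extract_features(window: Sequence[float]) -> Dict[str, float]:
    """Compute three simple features for one window of a single EMG channel.

    The feature definitions are illustrative assumptions, not taken from the paper.
    """
    if not window:
        raise ValueError("window must contain at least one sample")
    samples = list(window)
    mean = sum(samples) / len(samples)
    # Energy: sum of squared sample values.
    energy = sum(x * x for x in samples)
    # Waveform length: cumulative absolute change between consecutive samples.
    waveform_length = sum(abs(b - a) for a, b in zip(samples, samples[1:]))
    return {"mean": mean, "energy": energy, "waveform_length": waveform_length}
```

A classifier for step (iii) would then take one such feature dictionary per channel as its input.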

Working directly with the EMG raw signals has two main difficulties:

- Battery life: Processing the raw data onboard using classifiers that are optimized for power efficiency results in significantly better battery life than streaming that amount of data through Bluetooth.

- User Experience: Working with EMG signals is hard. Building an application that works for a few people is straightforward, but building something that will work for everyone is another question entirely. The signals produced when different people make similar gestures can be wildly different. Different amounts of hair, fatty tissue and sweat can affect the signals, and this is compounded by the fact that the Myo armband can be worn on either arm and in any orientation.

On the other hand, accessing the raw EMG data allows new uses for the Myo armband like prosthetics control, muscle fatigue and hydration monitoring, sleep state monitoring, and identity authentication based on unique muscle signals.

As we will see, most of the works related to EMG-based gesture recognition do not take advantage of the intensity of the electrical activity as a variable to measure the force applied to the gesture.

2.2 Myo Armband

The Myo armband is a wearable device, worn just below the elbow, equipped with several sensors that can recognize hand gestures and the movement of the arm. Its platform provides functionality for electromyographic signal processing, gesture recognition, and vibration feedback.

The Myo has eight EMG sensors to capture the electrical impulses generated by the arm’s muscles. Due to differences in skin tissue and muscle size, each user has to perform a calibration step before using the device. In addition to the EMG sensors, the Myo also has an IMU, which enables the detection of arm movement. The IMU contains a three-axis gyroscope, a three-axis accelerometer, and a three-axis magnetometer.

From this data, and based on machine learning processes, the Myo can recognize the gestures performed by the user.

Besides the EMG signals, Myo armband provides two kinds of data to an application, spatial and gestural data. Spatial data informs the application about the orientation and movement of the user’s arm. Gestural data tells the application what the user is doing with their hands. The Myo SDK provides gestural data in the form of some preset poses, which represent configurations of the user’s hand. Out of the box, the SDK can recognize five gestures: closed fist, double tap, finger spread, wave left, and wave right.

There are a few drawbacks in the current generation of the Myo armband. First, the set of poses recognized out of the box is small. Using only five gestures to interact with the environment may be considered a user-friendly design that greatly reduces operating complexity, but it also restricts application development. Second, the accuracy of gesture recognition is not completely satisfactory, especially in complex interactions: when a user performs complicated tasks that combine several gestures, the armband is not sensitive enough to detect quick changes between gestures.

2.3 Literature Review

Although most of the works about Myo are not related to virtual reality, since its very beginning the creators thought it would be a valuable tool in that field. Some works using Myo in VREs are described below.

Some authors [9] proposed a navigation technique to explore virtual environments that detects the swing of the arms using the Myo and translates it into walking in the virtual environment. Other authors [10] describe a mid-air pointing and clicking interaction technique using the Myo; the technique uses enhanced pointer feedback to convey state, a custom pointer acceleration function, and correction and filtering techniques to minimize side effects when combining EMG and IMU input. In addition, [11] created a virtual prototype for amputee patients to train in the use of a myoelectric prosthetic arm, using the Oculus Rift, Microsoft’s Kinect, and the Myo. A couple of immersive games were also developed and are available at the Myo Market (https://market.myo.com/category/games).

All reviewed works used the Myo’s predefined gesture set, and none of them used the Myo’s vibration system to improve the user experience. It is important to note that all of them focus their efforts on the recognition of the gesture and do not take advantage of the intensity of the electrical activity as a way to measure the force applied to the gesture. Additionally, we did not find any study on the usability of the Myo for selection and manipulation of 3D objects in VREs.

3 Methodology

In order to evaluate the use of the Myo armband as a device for selection and manipulation of 3D objects in VREs, this work proposes two techniques called Soft-Grab and Hard-Grab. The latter leverages a technique developed to assess the force applied by the user during the closed fist gesture. We describe these techniques below.

3.1 Assessing Gesture Force

Based on the observation that the intensity of the EMG signals detected while users made the closed fist gesture was proportional to the force they were exerting, and based on previous works on gesture recognition and EMG data [7, 12], we decided to use that intensity as a quantitative measure of the applied force.

To calculate this measure of force applied by the user during the closed fist gesture, we used the mean of the eight EMG raw channels of the Myo (Fig. 1) and then the mean of those values in a window of ten samples, starting right after the detection of the gesture.
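The calculation described above can be sketched in Python as follows. This is a minimal sketch, not the authors' implementation: the function names are hypothetical, and the optional `rectify` flag (taking absolute values before averaging) is an assumption that the text does not state.

```python
from typing import Sequence


def channel_mean(sample: Sequence[float], rectify: bool = False) -> float:
    """Mean across the channels of one EMG sample (eight channels on the Myo)."""
    if not sample:
        raise ValueError("sample must contain at least one channel")
    # Rectification is an assumption of this sketch, disabled by default.
    values = [abs(v) for v in sample] if rectify else list(sample)
    return sum(values) / len(values)


def gesture_force(
    samples: Sequence[Sequence[float]],
    window_size: int = 10,
    rectify: bool = False,
) -> float:
    """Mean of the per-sample channel means over the first `window_size` samples.

    `samples` is expected to start right after the gesture is detected.
    """
    window = list(samples)[:window_size]
    if len(window) < window_size:
        raise ValueError("not enough samples to fill the window")
    return sum(channel_mean(s, rectify) for s in window) / window_size
```

Samples beyond the first ten are ignored, so the function can be called on a longer buffer that begins at the detection of the gesture.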

Before the user interaction tests, we ran a pilot experiment to determine a range of force values that we could realistically expect from users during the closed fist gesture. Users’ EMG signals were measured while they closed their hands using as much force as possible (Fig. 2) and then relaxed the hand again. This experiment was repeated two more times, but instead of closing the hand with force, the users had to squeeze a rubber ball or a hand grip.

From the obtained data, we took the maximum of all the users’ minimum values and the minimum of all their maximum values to determine a range of force valid for all users. The obtained ranges and the user comfort in the three experiments were compared, and the results were used to set up the tests.
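The range extraction described above amounts to intersecting the per-user ranges. A minimal Python sketch, with a hypothetical function name and made-up example values:

```python
from typing import Sequence, Tuple


def common_force_range(
    user_ranges: Sequence[Tuple[float, float]],
) -> Tuple[float, float]:
    """Intersect per-user (min, max) force ranges.

    The lower bound is the largest of the users' minimums and the upper bound
    is the smallest of their maximums, so every user can reach any value in it.
    """
    if not user_ranges:
        raise ValueError("at least one user range is required")
    low = max(user_min for user_min, _ in user_ranges)
    high = min(user_max for _, user_max in user_ranges)
    if low >= high:
        raise ValueError("the users' ranges do not overlap")
    return low, high


# Illustrative values only, not data from the pilot experiment.
print(common_force_range([(5.0, 60.0), (10.0, 80.0), (8.0, 70.0)]))
```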

This range of force values was then mapped to a scale of virtual weights, which we used to determine whether the user was applying enough force during the closed fist gesture to lift a virtual object.
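The text does not specify how the force range is mapped to virtual weights. The sketch below assumes a simple linear mapping with clamping; the function names, the `max_weight` scale, and the lifting rule are all hypothetical choices made for illustration.

```python
from typing import Tuple


def force_to_weight(
    force: float,
    force_range: Tuple[float, float],
    max_weight: float = 10.0,
) -> float:
    """Linearly map a force value to the interval [0, max_weight].

    Forces outside `force_range` are clamped to its bounds. The linear
    mapping is an assumption of this sketch.
    """
    low, high = force_range
    if high <= low:
        raise ValueError("force_range must satisfy low < high")
    clamped = min(max(force, low), high)
    return (clamped - low) / (high - low) * max_weight


def can_lift(
    force: float,
    box_weight: float,
    force_range: Tuple[float, float],
    max_weight: float = 10.0,
) -> bool:
    """True when the mapped force is at least the box's virtual weight."""
    return force_to_weight(force, force_range, max_weight) >= box_weight
```

With a range of (10, 60) and the default scale, a force of 35 maps to a virtual weight of 5, which is enough to lift a box of weight 4 but not one of weight 6.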

3.2 Proposed Interaction Techniques

Manipulation in virtual environments is frequently complicated and inexact because users have difficulty keeping the hand motionless in a position without the help of external devices or haptic feedback. Manipulation of object position and orientation is among the most fundamental and important interactions between humans and environments in virtual reality applications [13].

Many approaches have been developed to maximize the performance and the usability of 3D manipulation. However, each manipulation metaphor has its limitations. Most of the existing procedures that attempt to solve the problem of selecting and manipulating remote objects fit into two categories, called arm-extension techniques and ray-casting techniques [14]. In an arm-extension technique, the user’s virtual arm is made to grow to the desired length so the hand can manipulate the object. Ray-casting techniques make use of a virtual light ray to grab an object, with the ray’s direction specified by the user’s hand.

The techniques in the present work are based on the ray-casting model. For the scope of these tests, the objects can be moved only in the plane perpendicular to the user’s point of view, like in a three-shell game. The virtual environment used in the tests was written in C# using Unity3D 5.6, Microsoft Visual Studio 2015 and the Myo SDK 0.9.0 to connect the Myo.

Usage Scenario.

The scenario used to test both manipulation techniques is a large surface with three boxes of different colors (blue, red, and green) and a pointer (Fig. 3). Pointing was implemented using the Myo IMU and the Raycast method of Unity’s Physics class. At the beginning of each test, the user must calibrate the arm’s orientation by stretching the arm to the front and making the fingers spread gesture. This calibration procedure can be repeated every time that the user wants to reset the pointer’s initial position.

When the ray collides with a box, visual feedback is given by highlighting the box in yellow, and the Myo’s short vibration is activated.

Soft-Grab Technique.

While the object is being pointed to, the user can select it by making the closed fist gesture. The Myo’s medium vibration is activated when the closed fist gesture is recognized to let the user know that the box is selected and it can be moved.

While selected, the box follows the hand’s movements in the (x, y) plane, so the user can position it by pointing to the new place for the box. To release it, the user needs to relax the hand, ending the closed fist gesture. When the box is released, it returns to its original color and the Myo’s long vibration is activated.
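The select, hold, and release behaviour of Soft-Grab can be summarized as a small state machine. The Python sketch below is illustrative only and is not the Unity/C# implementation used in the tests: the class, its method, and the string labels for the vibrations are hypothetical, and the release vibration is assumed to be the Myo's long vibration.

```python
from typing import Optional


class SoftGrab:
    """Tracks which box is selected and reports the vibration to trigger."""

    def __init__(self) -> None:
        self.selected_box: Optional[str] = None

    def update(self, pointed_box: Optional[str], fist_closed: bool) -> Optional[str]:
        """Process one input frame and return a vibration label, or None.

        pointed_box: name of the box hit by the ray, or None if no box is hit.
        fist_closed: whether the closed fist gesture is currently recognized.
        """
        if self.selected_box is None:
            # Selection: a box is being pointed at and the fist closes.
            if pointed_box is not None and fist_closed:
                self.selected_box = pointed_box
                return "medium"
            return None
        # A box is held: relaxing the hand releases it.
        if not fist_closed:
            self.selected_box = None
            return "long"
        # Still held; the caller moves the box to follow the pointer.
        return None
```

A caller would invoke `update` once per frame and, while `selected_box` is set, move that box to the pointer position in the (x, y) plane.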

Hard-Grab Technique.

In this technique, each box has an associated virtual weight and, as extra feedback, a bar shows the intensity of the gesture made by the user.

Pointing works the same way, but to select a box, the user must make the closed fist gesture with enough force to offset the virtual weight of the box (as described in Sect. 3.1). When the user reaches the force necessary to lift the box, the Myo’s short vibration is activated. Manipulation then works the same as in the first technique. To keep the object selected, the user must keep the force of the closed fist gesture within a small range around the activation point. When the applied force falls below this range, the Myo’s long vibration is activated and the object is released, returning to its original color.
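The force-dependent selection can be sketched in the same style. This is a minimal Python illustration under stated assumptions: the applied force is taken to be already mapped to the virtual weight scale, the width of the holding range (`tolerance`) is a made-up parameter, and a force above the range does not release the box, since the text only describes release when the force falls below it.

```python
from typing import Dict, Optional


class HardGrab:
    """Selects a box only when the applied force offsets its virtual weight."""

    def __init__(self, weights: Dict[str, float], tolerance: float = 0.5) -> None:
        self.weights = weights        # virtual weight of each box
        self.tolerance = tolerance    # hypothetical half-width of the hold range
        self.selected_box: Optional[str] = None

    def update(self, pointed_box: Optional[str], applied: float) -> Optional[str]:
        """Process one frame; `applied` is the force on the virtual weight scale."""
        if self.selected_box is None:
            # Selection requires pointing at a box with enough force to lift it.
            if pointed_box in self.weights and applied >= self.weights[pointed_box]:
                self.selected_box = pointed_box
                return "short"
            return None
        # Release when the force drops below the holding range.
        if applied < self.weights[self.selected_box] - self.tolerance:
            self.selected_box = None
            return "long"
        return None
```

For example, with a red box of weight 5 and the default tolerance, a force of 5.2 selects the box, 4.7 keeps it held, and 4.0 releases it.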

3.3 User Tests

The user tests conducted in this work followed the guidelines presented by [15] including how user studies should be prepared, executed and analyzed.

Ten participants aged 25 to 35 were recruited. Each one voluntarily participated in one test session. Half of them had experience with 3D interactions and video games, while the other half had little or no experience. None of them had previous experience with the Myo armband. Two of them were left-handed and the other eight right-handed; the Myo was worn on the user’s preferred arm, and that hand drove the interaction technique throughout the test.

The following task list was used throughout the user tests. Before each task, the boxes were restored to their original positions, blue, red, green, from left to right.

1. Explorative (think-aloud) Tasks

   a. Select the left-most box (the blue box), lift it and put it down in the same place.

   b. Select the middle box (the red box), lift it and put it down to the right-hand side of the others.

In the following tasks the user was instructed not to think aloud, so that the time to complete the tasks could be estimated without interference.

2. Soft-Grab Test Tasks:

   a. Select the right-most box (the green box) and put it beside the blue box in the left extremity.

   b. Sort the boxes by colors, from left to right, red, blue, green.

   c. Sort the boxes by colors, from left to right, red, green, blue.

   d. Sort the boxes by colors, from left to right, green, red, blue.

3. Hard-Grab Test Tasks:

   a. Select the left-most box (the blue box) and put it beside the green box in the right extremity.

   b. Sort the boxes by colors, from left to right, red, blue, green.

   c. Sort the boxes by weight from left to right, from lightest to heaviest.

The user test sessions were performed in a private room at our university. A laptop was used as the test platform: 8 GB RAM, 1 TB hard drive, 2 GB graphics card, with the Windows 10 operating system. The Myo model MYO-00002-001 was connected to the laptop via Bluetooth. A moderator observed the user’s interaction at all times.

The data collected was used to complete two evaluations: first the usability of each individual interaction technique, and secondly the general preference (comparison) of the two interaction techniques.

4 Results

This section describes the results of the user tests, starting with the achieved usefulness and the measured efficiency for each task included in the test. This is followed by the qualitative feedback on the perceived effectiveness of each interaction technique. Then, the observed learnability of each technique is detailed, along with comments from the users. Lastly, the users’ stated satisfaction is shown: which technique they preferred overall and for each interaction task.

The tasks were divided into three groups: Explorative, Soft-Grab Test Tasks, and Hard-Grab Test Tasks. The Explorative tasks were not measured; they were the tasks that the users always did first. The objective of these tasks was to let the user understand how to use the Myo armband. The order of the Soft-Grab and Hard-Grab Test Tasks was varied between users to prevent learning effects from influencing the overall result of the test.

4.1 Task Completion: Usefulness

The Soft-Grab task group consists, in general, of selecting a box and positioning it in another place using the Myo’s gesture recognition system. The group has four tasks. The first was to take a box from the right end and put it at the opposite end, and the other three were to sort the three boxes by color, each time in a different arrangement. Table 1 shows the number of users that completed each task.

The failure in the second task occurred because the user misunderstood the task instructions; this user had very little experience with 3D interactions. The four occasions on which a user needed help to finish a task involved only two users and occurred in the same two tasks. Both were confused about how to close the hand to select the boxes and keep it closed to keep the box selected. In both cases, they expected that once they had selected the box, it would stay selected even if they opened their hands. The moderator had to read again the part of the script that explained how to perform the task. They said that they had forgotten that part. Of the ten users, just three could not complete the whole group of tasks without any help.

The tasks in the Hard-Grab group, in general, involve selecting a box and positioning it in another place using the Myo’s gesture recognition system and the proposed method to assess the force applied to the gesture. The group is composed of three tasks: the first was to take the box from the left side and put it on the right side, the second was to sort the boxes by color, and the last was to sort the boxes by weight. It is important to note that in this scenario each box has an associated virtual weight.

In this group of tasks, a single user failed both sorting tasks due to difficulties selecting the boxes (Table 2). The user clearly struggled to reach the minimum force and maintain it to hold the box. The same user completed the first task, moving one box from one place to another, with help. In that task, he/she selected the box but released it before reaching the indicated place, so the box came down between the other two boxes. The user then asked if he/she could select it again and put it in the indicated place. The answer was positive and the user completed the task.

Two other users had trouble with the sort-by-weight task. They initially sorted the boxes wrongly. Then they selected each box again to assess its weight and finally corrected the arrangement. They asked if they could correct their arrangements and, since they had not explicitly announced that they had finished the task, the moderator allowed them to do so. Of the three users that either needed help or did not complete the task, two were right-handed and one was left-handed.

In terms of completion, we did not see any important difference between the two techniques, and both had good rates of achievement. Also, it was clear that the users who did not complete the tasks were those with less experience in virtual reality environments. Handedness did not influence the performance or the completion rate of the tasks.

4.2 Task Duration: Efficiency of Use

Efficiency was measured by tracking the completion times of each group of tasks. Failed tasks were not counted, nor was the time during which the user stopped to ask for help or to ask a question. The tasks were grouped by the technique used and the skill required of the user. Table 3 shows the results. We calculated the average and the standard deviation for each group.
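The per-group statistics can be computed as in the following Python sketch. It is an illustration under assumptions: failed tasks are represented as `None`, the times are assumed to already exclude pauses for help or questions, and the sample standard deviation is used because the text does not say whether the sample or population form was applied. The example values are made up.

```python
import statistics
from typing import Optional, Sequence, Tuple


def group_efficiency(times: Sequence[Optional[float]]) -> Tuple[float, float]:
    """Return (average, standard deviation) of completion times in seconds.

    Entries equal to None mark failed tasks and are left out.
    """
    completed = [t for t in times if t is not None]
    if len(completed) < 2:
        raise ValueError("at least two completed tasks are needed")
    # statistics.stdev computes the sample standard deviation (n - 1 divisor).
    return statistics.mean(completed), statistics.stdev(completed)


# Illustrative values only, not the measurements reported in Table 3.
average, deviation = group_efficiency([10.0, None, 14.0, 12.0])
print(average, deviation)
```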

The first two groups of tasks were completed faster with the Soft-Grab technique, although some difference in execution time was expected due to the nature of the techniques. The difference was also small: some of the Hard-Grab averages fall within one standard deviation of the corresponding Soft-Grab averages.

Another interesting point is the task “Sort the three boxes by weight”. Although it cannot be compared with the Soft-Grab technique due to the nature of the techniques, its average time and standard deviation show that its execution time does not differ much from that of the task “Sort the three boxes by color”. The additional time was expected, because the users had to select all the boxes to find out which ones were heavier, and sometimes they needed to do that more than once.

4.3 Effectiveness

The effectiveness of the interaction techniques was measured qualitatively by how well the user could use the techniques to select and manipulate virtual objects, based on the think-aloud process, the observed behavior, and the post-test interview results.

Selection and positioning were very similar in both techniques. The difference lies in the force that the user must apply to the gesture to select the object. Apart from that, the key to selecting an object was pointing at it and making a fist gesture, and for positioning the user must point to the new place while the object is selected.

With Soft-Grab technique, the major trend was that the grabbing motion felt natural or comfortable, imitating the way a user would grab an item in real life. Also, they liked that pointing to the box was easy and precise. Two users said that the pointer would be even better if it was shown in the scenario all the time; as implemented, the only visual feedback available is the box highlight when it is hit by the ray cast.

Two users said that, at first, they expected that once they had selected the box, it would remain selected until they performed the fingers spread gesture. However, both techniques require that they maintain the closed fist gesture to keep the box selected. Relaxing the hand is enough to deselect the box; the fingers spread gesture is not needed for deselection.

Another point that emerged in the interview was the vibration feedback. The users mostly agreed and gave almost the same explanation for it. They said that at first they could not distinguish between the three types of vibration or tell what they meant, but after two or three times the vibrations were very helpful for knowing what was going on.

With the Hard-Grab technique that includes the measure of the force applied to the gesture, the major trend was that it felt very realistic, but it was also more difficult to achieve the selection.

4.4 Learnability and Satisfaction

The major trend for the learnability of both interaction techniques was that the basics of each technique were easy to understand. The major problem was with the Hard-Grab technique: learning the force needed to select a given box and how to keep the force inside the range required to keep it selected. In the task of sorting by weight, some users were unsure because they needed to lift and put down all the boxes to learn their weights, but in general it took them just a few seconds to discover them.

In the post-test questionnaire, we asked the users to rate the effort they made to learn how to perform each type of interaction (selecting and positioning) with each technique. Soft-Grab required a little less effort for selection than Hard-Grab, but in positioning the difference was much larger, confirming what the users said in the interview.

In general, a fast learning process was observed; the users became more comfortable with each task, independently of the order in which the tests were applied. The first tasks were always more difficult than the rest. However, from the users’ point of view, Soft-Grab requires less cognitive effort than Hard-Grab.

With the Hard-Grab technique, the need to apply more or less force to select a box was in general very well received. The common opinions were that it was more difficult, but that they could do it and that it felt realistic. The users were quite excited about it.

When the moderator asked them which technique they preferred, the majority answered the Hard-Grab Technique. Table 4 shows how many users preferred which technique for each interaction type and overall. The positioning interaction was controversial because some users that preferred the Hard-Grab technique for selection affirmed that they would prefer to simply maintain the closed fist gesture during positioning instead of having to maintain the same force applied for selection. They argued that it is tiresome, and therefore, they actually would prefer a combination of both techniques.

5 Conclusions

This work proposed two selection/manipulation techniques using the Myo’s SDK to capture and analyze the spatial and gestural data of the users. To take advantage of the new resources that the Myo offers, we used the intensity of the electrical activity obtained from the raw EMG data to simulate the force that the user was applying to the virtual object. We also created a feedback system that includes visual and haptic feedback, using the Myo’s vibration system.

We evaluated the proposed techniques by conducting user tests with ten users. We analyzed the usefulness, efficiency, effectiveness, learnability and satisfaction of each technique and we conclude that both techniques had high usability grades, demonstrating that the Myo armband can be used to perform selection and manipulation tasks, and can enrich the experience, making it more realistic, by using a measure of the force applied to the gesture and its vibration feedback system. However, from the interviews, we note that the user’s muscle fatigue is an important factor that should be analyzed in depth in future studies.

We conclude that the Myo armband has a high grade of usability for selection/manipulation of 3D objects in Virtual Reality Environments. The Myo seems to have a promising future as a device for interaction in VREs. Beyond navigation, selection and manipulation, it can also be used as a device to input force, offering new ways of interaction in VREs and in many possible applications, such as immersive training apps, video games, and motor rehabilitation systems, where the possibility of measuring the force applied to the gesture may be significant. More extensive studies are needed to determine all the advantages and possible uses of the Myo as an interaction device in VREs.

References

1. Rautaray, S.S., Kumar, A., Agrawal, A.: Human computer interaction with hand gestures in virtual environment. In: Kundu, M.K., Mitra, S., Mazumdar, D., Pal, S.K. (eds.) PerMIn 2012. LNCS, vol. 7143, pp. 106–113. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-27387-2_14

2. Sathiyanarayanan, M., Mulling, T., Nazir, B.: Controlling a robot using a wearable device (MYO). Int. J. Eng. Dev. Res. 3(3), 1–6 (2015)

3. Nuwer, R.: Armband adds a twitch to gesture control (2013). https://www.newscientist.com/article/dn23210-armband-adds-a-twitch-to-gesture-control/. Accessed 7 Feb 2018

4. Premaratne, P., Nguyen, Q.: Consumer electronics control system based on hand gesture moment invariants. IET Comput. Vis. 1(1), 35–41 (2007)

5. Zhang, X., Chen, X., Li, Y., Lantz, V., Wang, K., Yang, J.: A framework for hand gesture recognition based on accelerometer and EMG sensors. IEEE Trans. Syst. Man Cybern.-Part A: Syst. Hum. 41(6), 1064–1076 (2011)

6. Mann, S.: Intelligent Image Processing. IEEE (2002)

7. Polsley, S.: An analysis of electromyography as an input method for resilient and affordable systems: human-computer interfacing using the body’s electrical activity. J. Undergrad. Res. (Summer 2013-Spring 2014), 30–36 (2014)

8. Riillo, F., Quitadamo, L.R., Cavrini, F., Saggio, G., Pinto, C.A., Pastò, N.C., Sbernini, L., Gruppioni, E.: Evaluating the influence of subject-related variables on EMG-based hand gesture classification. In: IEEE International Symposium on Medical Measurements and Applications (MeMeA), pp. 1–5. IEEE (2014)

9. McCullough, M., Xu, H., Michelson, J., Jackoski, M., Pease, W., Cobb, W., Kalescky, W., Ladd, J., Williams, B.: Myo arm: swinging to explore a VE. In: Proceedings of the ACM SIGGRAPH Symposium on Applied Perception, pp. 107–113. ACM (2015)

10. Haque, F., Nancel, M., Vogel, D.: Myopoint: pointing and clicking using forearm mounted electromyography and inertial motion sensors. In: Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, pp. 3653–3656. ACM (2015)

11. Phelan, I., Arden, M., Garcia, C., Roast, C.: Exploring virtual reality and prosthetic training. In: IEEE Virtual Reality (VR), pp. 353–354. IEEE (2015)

12. Chen, X., Zhang, X., Zhao, Z.Y., Yang, J.H., Lantz, V., Wang, K.Q.: Multiple hand gesture recognition based on surface EMG signal. In: The 1st International Conference on Bioinformatics and Biomedical Engineering, ICBBE, pp. 506–509. IEEE (2007)

13. Nguyen, T.T.H., Duval, T.: Poster: 3-point++: a new technique for 3D manipulation of virtual objects. In: IEEE Symposium on 3D User Interfaces (3DUI), pp. 165–166. IEEE (2013)

14. Bowman, D.A., Hodges, L.F.: An evaluation of techniques for grabbing and manipulating remote objects in immersive virtual environments. In: Proceedings of the Symposium on Interactive 3D Graphics, pp. 35–ff. ACM (1997)

15. Rubin, J., Chisnell, D.: Handbook of Usability Testing, Second Edition: How to Plan, Design, and Conduct Effective Tests. Wiley Publishing, Indianapolis (2008)

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Garnica Bonome, Y., González Mondéjar, A., Cherullo de Oliveira, R., de Albuquerque, E., Raposo, A. (2018). Design and Assessment of Two Handling Interaction Techniques for 3D Virtual Objects Using the Myo Armband. In: Chen, J., Fragomeni, G. (eds) Virtual, Augmented and Mixed Reality: Interaction, Navigation, Visualization, Embodiment, and Simulation. VAMR 2018. Lecture Notes in Computer Science(), vol 10909. Springer, Cham. https://doi.org/10.1007/978-3-319-91581-4_3

DOI: https://doi.org/10.1007/978-3-319-91581-4_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-91580-7

Online ISBN: 978-3-319-91581-4

eBook Packages: Computer Science, Computer Science (R0)