Abstract

The relationship between poor air quality and ill health concerns citizens and health practitioners the world over. An increasing number of Air Quality Data (AQD) apps quantify air quality numerically, yet many of us remain confused about the ‘real’ extent of air pollution, in part because we tend to ignore imperceptible pollution despite data alerting us to its danger. Further, even if we understand it, data about air quality alone does not sustain citizens’ interest in environmental issues. We report on our use of Augmented Reality (AR) to create an app that visualizes AQD as an affective miniature tree whose health corresponds to live AQD. We argue that AR is polyaesthetic, demanding a more embodied engagement from its users which deepens their understanding of AQD. Our participatory design study with 60 users shows the salience of using locally relevant imagery and working with users as co-designers to add features that support social interaction to design an app that gamifies citizens’ interactions with AQD.

Keywords

1 Introduction

Views from Hong Kong’s gleaming skyscrapers across the harbor can be breathtaking, but in January the wind direction changes, bringing the cool northeast monsoon which has become notable as much for the smog associated with it as for its increased rainfall. Pollutants from mainland China travel south blown in on these northeastern winds and our breath is taken away for all the wrong reasons as shown in Fig. 1. While January brings even more government warnings for children to play inside to avoid the worsening air, Hong Kong, like most cities around the world, has a year-long problem with pollution that it creates itself, predominantly as a result of emissions from diesel vehicles on its roads and waterways. The World Health Organization (WHO) found that “more than 80% of people living in urban areas that monitor air pollution are exposed to air quality levels that exceed WHO limits.” This is not only in low-income cities. More than half (56%) of cities with 100,000+ citizens in high-income countries are experiencing pollution levels above WHO guidelines [1]. Despite the WHO data, many people are relatively unaware of the levels of pollution that they are living in as we tend not to consider poor air quality or check pollution levels until pollution becomes tangible in our daily lives. So how might a deeper awareness and an engagement with the science of air quality be achieved?

While sustainable HCI research has tended to focus on ‘improving’ public understanding of data (arguably an educational approach) and changing public behavior [2], a more dialogic process is gaining favor that situates science in what has been described as “circuits of culture” [3]. Instead of communicating a “correct” view of the science in question, the message is neutral. Proponents of the circuit model assert that meaning-making activities are dynamic, context-specific and change over time [3]. Sustainable HCI studies have used the term “discursive practice” [4] to describe a similar, situated, approach to design.

1.1 Awareness of Air Pollution is a Matter of Perception

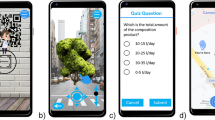

To make healthier choices when planning our commutes and outdoor leisure activities in Hong Kong, we turned to computation, using air quality apps to get real-time AQD in numerical form. These apps (see Fig. 1) break AQD down into a number of categories that, together, present a complex picture. Even simplified green, amber and red ‘traffic light’ categorizations are accompanied by numeric readings, as seen in the Clean Air Network’s (CAN) app [5]. The HK Air Pollution app [6] displays overall air cleanliness levels according to different international guidelines. Often these app readouts did not correlate with what we saw or smelled. The air might look clear while the data showed that pollution levels were high; conversely, the air sometimes smelled bad when, according to the apps, pollution was moderate. It turns out that such mismatches between our subjective sense of pollution levels and measured data about pollution are typical.

Research shows [7] that our awareness of air pollution is a matter of perception, strongly influenced by what is observable and tangible, which does not always correlate with empirical measurements [8]. In addition, AQD is often broken down into the five key pollutants regulated under the Clean Air Act: ground-level ozone, particulate matter, carbon monoxide, sulfur dioxide, and nitrogen dioxide. Typically, AQD apps display information about each of these pollutants, creating a complex picture. Studies of public understanding of science show that the abstract yet complex nature of so-called ‘wicked problems’, like pollution and climate change, is notoriously difficult to understand [9], and that our lack of understanding is distancing [10]. Sustainable HCI has explored novel uses of personal informatics to gather data on how we behave in relation to these difficult-to-comprehend challenges [11, 12], to close this ‘distance’ [11, 13,14,15] and to prompt ‘transformative reflection’ [16].

Numerical data alone does not fully close the distance, so many projects visualize data to make it perceptible. Sustainable HCI research into invisible indoor air pollution found that visualization increased awareness and subsequent positive behavioral change [17]. But how such data is visualized matters. There is somewhat surprising evidence about the use of supposedly affective icons, such as polar bears used to visualize climate change. Studies show that we are uninterested in images of places and animals [17] that are not part of our everyday lives [18], which applies to polar bears for the majority of us who live outside polar bear habitat. The converse is also true: locally relevant images and cultural artefacts are more likely to carry scientific knowledge in ways we find engaging [19]. HCI studies have determined that an emotional engagement with data can be achieved by going beyond visualization, for example, by translating live data into multisensory experiences [13]. Therefore, we asked the question: How can we create multisensory experiences to emotionally engage particular local users with scientific data about air quality?

2 Designing to Improve Understanding of Air Quality Data

2.1 Dynamic Meaning Making by Citizens Through Networked Practices

In their study of risk analysis and mass media reporting of climate change, sociologists and media theorists propose a revised, more reflexive version of the older “circuits of culture model” which is relevant to our project. In this revised circuits of culture model “[m]eanings are remade in the contexts of social interaction at the local level as media texts are re-embedded in daily life” [3]. This helps to account for users making meanings about AQD and pollution from using our app and it places users at the center of meaning-making, recognizing their role in adapting and expanding the app and its features through dissemination and interaction with designers and researchers. We therefore took the circuit of culture model further, extending and formalizing the role of the user in meaning-making via our participatory design workshop which we discuss later.

Sociologists have argued that rather than being apolitical, young citizens’ “participation in social movements, rallies, protests, consumer boycotts all point to the possible displacement of traditional models of representative democracy as the dominant cultural form of engagement by alternative approaches increasingly characterized through networking practices” [20]. They go on to argue that young citizens’ attitudes are shaped more by their participation and interaction through social networks “which they themselves have had a significant part in constructing” [20]. Our previous research proposed that “mobile device practices in Hong Kong enable the significant extension of mobile leisure and gaming experiences into open and public spaces” [21]. Additionally, networking practices were central to the civic engagement and protests of Hong Kong’s Occupy Central movement [22, 23]. Pocket Penjing builds on these findings to create a mobile leisure experience that encourages civic engagement.

2.2 Local and Social Salience

Our decision to develop an AR app that only runs on smartphones was informed by the local relevance, or ubiquity, of mobile technologies. Statistics for smartphone use in Hong Kong [24] show that 85% of all Hong Kong residents over the age of 10 years have a smartphone. Of 15–49 year olds more than 97% have a smartphone and this drops by only 5% to 92% for 50–59 year olds.

In addition to choosing a locally-relevant platform, we drew on regionally-relevant imagery to represent AQD in our app, Pocket Penjing. The app takes its name from the Chinese “penjing”, tray plantings of miniature trees which pre-date bonsai. In contrast to Japanese bonsai, penjing place greater emphasis on landscape, environment and people’s relationship to the tree [25], reinforcing the idea that our virtual miniature trees are connected to the environment and to the human users that interact with them. We model a cherry blossom tree because of its ancient and ongoing iconic status in Chinese culture. Before we conducted our participatory design study, we produced a functioning prototype comprising a simplified computational simulation of a cherry tree that shows the impact of AQD on tree growth and displays the tree in AR.

2.3 Description of the Prototype App

The simulation described here is a prototype developed by our team of six, using sketches and group discussion to develop low fidelity prototypes, which we then iteratively adapted to make the stable prototype Android app which we describe here.

Scrape Live AQD, Create a Tree and Save It

The Android app is built using Unity3D. The introductory animation ends with an image of the canopy of a stylized cherry tree. By tapping on an empty area, users name their new tree. Up to three trees can be stored. Next, the user spins a 3D globe with a swipe motion to choose one of the flagged locations that represent live online air quality monitoring stations. The prototype uses data sources from live online air quality monitoring stations in Hong Kong [26] and Wuhan [27, 28]. The tree’s initial simplified environment is created from the selected location, using data recorded from the previous day in order to include actual pollution, rainfall and sunshine totals over a 24-h period. Changes are input to the model via data from the selected station each time a saved tree is loaded. Additional random inputs come from the users themselves as they seek to mitigate extreme weather or pollution by, for example, tapping relevant icons to add virtual shade to their tree to protect it from sun or wind, or to virtually water it (see Fig. 2).
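As a rough illustration of this data flow, the following Python sketch models one day's readings for a selected station and a user's mitigating tap. It is not the app's Unity3D code: the names `DailyReading` and `apply_mitigation`, the fields and their units, and the mitigation amounts are all assumptions made for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class DailyReading:
    """One station's totals for the previous 24 hours (hypothetical fields)."""
    station: str
    pollution_index: float  # higher means dirtier air (assumed scale)
    rainfall_mm: float
    sunshine_hours: float


def apply_mitigation(reading: DailyReading, action: str) -> DailyReading:
    """Return a copy of the reading adjusted by a user's tap.

    The actions and amounts are illustrative assumptions, not the app's values.
    """
    if action == "shade":
        # Virtual shade halves the sunshine the tree receives (assumed).
        return replace(reading, sunshine_hours=reading.sunshine_hours * 0.5)
    if action == "water":
        # Virtual watering adds a fixed amount of water (assumed).
        return replace(reading, rainfall_mm=reading.rainfall_mm + 5.0)
    raise ValueError(f"unknown action: {action}")


today = DailyReading("example station", pollution_index=7.0,
                     rainfall_mm=0.0, sunshine_hours=10.0)
print(apply_mitigation(today, "shade"))
print(apply_mitigation(today, "water"))
```

Keeping the scraped reading immutable and returning an adjusted copy is one way to keep the station's data separate from what the user has changed.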

Display Lindenmayer Tree in AR, Speed Up Growth and Prune the Tree

We use a basic deterministic 3D L-System [29, 30] to structure cherry blossom trees using turtle interpretation methods to calculate branching. The tree is defined at initialization, its final shape affected by three randomized branching angles (yaw, pitch, roll) that produce similar, but slightly differing, tree structures based on the same rules (see Fig. 2). The rules are informed by research into the growth and development of cherry trees [31, 32]. Pre-built sectional model components are assembled to form a three-dimensional tree. The app calculates the tree’s daily growth amount from the selected live weather and AQD, incrementally scaling the tree’s 3D components accordingly. The cumulative effects of applying each day’s growth factor cause the tree to grow. We have not found any previous research that renders 3D forms, such as an L-System tree, in real-time from live data in AR.
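To make the L-System idea concrete, here is a minimal Python sketch of deterministic string rewriting followed by a 3D turtle interpretation. It is an illustration under stated assumptions, not the app's implementation: the production rule, the symbol set (`F`, `[`, `]`, `+`, `&`, `/`), the angle ranges and the function names are hypothetical, and the rotation matrices follow the common textbook convention for a turtle frame of heading, left and up vectors.

```python
import math
import random


def rewrite(axiom, rules, iterations):
    """Apply deterministic L-system production rules a fixed number of times."""
    s = axiom
    for _ in range(iterations):
        s = "".join(rules.get(ch, ch) for ch in s)
    return s


def matmul(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation(axis, degrees):
    """Rotation about the turtle's up (yaw), left (pitch) or heading (roll) axis."""
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    if axis == "yaw":
        return [[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]]
    if axis == "pitch":
        return [[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]]
    if axis == "roll":
        return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]
    raise ValueError(axis)


def interpret(commands, yaw, pitch, roll, step=1.0):
    """Turtle interpretation: return branch segments as (start, end) points."""
    # Columns hold the heading, left and up vectors; heading starts along +z.
    frame = [[0.0, 0.0, -1.0], [0.0, 1.0, 0.0], [1.0, 0.0, 0.0]]
    pos = (0.0, 0.0, 0.0)
    stack, segments = [], []
    turns = {"+": ("yaw", yaw), "&": ("pitch", pitch), "/": ("roll", roll)}
    for ch in commands:
        if ch == "F":  # grow one segment along the current heading
            heading = (frame[0][0], frame[1][0], frame[2][0])
            end = tuple(p + step * h for p, h in zip(pos, heading))
            segments.append((pos, end))
            pos = end
        elif ch == "[":  # start a branch: remember where we are
            stack.append((pos, frame))
        elif ch == "]":  # end a branch: return to the branching point
            pos, frame = stack.pop()
        elif ch in turns:
            axis, angle = turns[ch]
            frame = matmul(frame, rotation(axis, angle))
    return segments


rng = random.Random(42)  # fixed seed so this example is repeatable
yaw, pitch, roll = (rng.uniform(20.0, 35.0) for _ in range(3))
rules = {"F": "F[+F][&F]/"}  # hypothetical rule, not the paper's
segments = interpret(rewrite("F", rules, 3), yaw, pitch, roll)
print(len(segments), "branch segments")
```

Drawing the three angles once, before rewriting, mirrors the description above: the rules stay deterministic, while each tree's shape differs slightly with its angles.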

A printed beer-mat sized reference image (see Fig. 2) registers the base of the tree’s shoot/trunk, and as soon as the tree has been made the AR window loads. When users first plant their tree only one set of historical data is available: the AQD that has just been scraped from the selected online air monitoring station. As this is the first data point, when users tap to plant their tree little or no growth will be visible. They have, in effect, planted a seed. On successive days, as users reload saved trees, they will see the tree growing, its rate and form based on the cumulative effect of the stored data. From the moment they first plant a seed, users also have the option to fast forward tree growth by tapping the ‘speed up growth’ icon to see the shoot become a fully-grown tree in a couple of minutes. Figure 2 shows weather and air quality data displayed via small icons with numeric AQD readings underneath; icons turn red to alert users to data changes that will inhibit tree growth. As with real-life gardening, intervention is required to mitigate the weather data to enable the tree to grow despite, for example, overly polluted conditions. Users tap to alter temperature, pollution or rainfall. They can also prune and shape the tree by removing branches (but not the main trunk). There is no undo, just as when we cut a branch from a real penjing tree. After making the Android app prototype we conducted a participatory design workshop with 63 undergraduates to improve the prototype.
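The cumulative growth and the red alert icons can be sketched in a few lines of Python. This is only an illustration: the growth formula, the 2% daily ceiling, the thresholds and the function names are assumptions, since the paper does not give the app's actual values.

```python
def daily_growth_factor(pollution_index, rainfall_mm, sunshine_hours):
    """Return a multiplier >= 1.0 for one day's growth (hypothetical formula)."""
    # Clamp each input to a 0..1 range; the divisors are assumed scales.
    pollution_penalty = min(max(pollution_index / 10.0, 0.0), 1.0)
    water = min(max(rainfall_mm / 10.0, 0.0), 1.0)
    sun = min(max(sunshine_hours / 8.0, 0.0), 1.0)
    best_case = 0.02 * (water + sun) / 2.0  # at most 2% per day (assumed)
    return 1.0 + best_case * (1.0 - pollution_penalty)


def cumulative_scale(history):
    """Multiply the stored daily factors to get the tree's current scale."""
    scale = 1.0
    for pollution_index, rainfall_mm, sunshine_hours in history:
        scale *= daily_growth_factor(pollution_index, rainfall_mm, sunshine_hours)
    return scale


def red_icons(pollution_index, rainfall_mm, pollution_limit=7.0, min_rain_mm=1.0):
    """Names of icons that would turn red under the assumed thresholds."""
    flagged = []
    if pollution_index >= pollution_limit:
        flagged.append("pollution")
    if rainfall_mm < min_rain_mm:
        flagged.append("rainfall")
    return flagged


# Each tuple is one stored day: (pollution index, rainfall in mm, sunshine hours).
history = [(3.0, 4.0, 6.0), (8.0, 0.0, 9.0), (5.0, 12.0, 2.0)]
for day in range(1, len(history) + 1):
    # Replaying the stored days in order is one way to "speed up growth".
    print(day, round(cumulative_scale(history[:day]), 4))
print(red_icons(8.0, 0.0))
```

Because the scale is a product of daily factors, a single stored data point yields almost no visible growth, which matches the "planted a seed" behavior described above.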

3 Using a Participatory Design Method to Develop the AR App

We conducted two, two-part formative participatory design workshops, which gave users one week to experience the app, reflect on its design, consider new design ideas, and share their ideas with the other participants and researchers. The format of workshops followed the method for conducting participatory design workshop studies, inspired by Gaver, et al.’s [33] notion of open design approaches, which are typically divided into three stages: (1) users are given an opportunity to get acquainted with the prototype through free-play or guided interactions; (2) users are asked to develop, categorize, and organize their own ideas into design concepts; and (3) the researchers will discuss these concepts with the users [33,34,35]. In the second part of the workshop, two researchers undertook a collaborative mind mapping exercise to capture emerging categories of ideas as they were described by users. We also audio and video recorded the participants’ sharing and discussion of their design concepts for further analysis. Following the workshops, we further discussed the ideas and implemented the most popular in the next version of the app.

3.1 Recruitment of Participants

We recruited 63 participants from an introductory course on computer games at our university in Hong Kong. The researchers obtained permission to conduct these workshops from the course instructor, who found value in using this activity as part of the students’ design assignments. The students of this course were divided into two classes, one running on Tuesday and the other on Wednesday. In the Tuesday class, we recruited 33 participants: 23 male (69.7%) and 10 female (30.3%). In the Wednesday class, we recruited 30 participants: 17 male (56.7%) and 13 female (43.3%). Of all 63 participants, 63.5% were male and 36.5% were female. This gender breakdown is typical of students who have historically enrolled in this course. Participants were compensated with 5% towards their course grade. The course was conducted in English, the standard teaching language across all courses of the university.

3.2 Conducting the Two-Part Workshops

To accommodate the schedules of the two classes, we conducted two sets of the two-part workshop across two consecutive weeks - one for each class. In the first part, the researchers gave participating students of each class a one-hour briefing, including a live demonstration of the app. During the demonstration, two researchers helped students to download the app onto their Android phones and test it out immediately. The researchers also assisted any students who wanted extra information about, for example, the user interface. Students without an Android phone either shared use of the app with members of their group who had an Android device or used one of the five Android phones we provided for loan for the entire week. We only had two loan requests from students across the two classes. After the demonstration, students were given a standard ‘prompt’: “How do we redesign Pocket Penjing so as to most effectively persuade its players to care about environments in Hong Kong and Wuhan? Provide drawings, sketches, and other diagrams to illustrate your ideas.” Students continued working in pre-existing groups of four or five from their computer game classes. There were seven groups for Tuesday’s workshops and eight groups for Wednesday’s workshops. Each group was tasked with spending one week using the app, considering the prompt, and presenting their ideas for improving the app. They were asked to work together but to take the following approach: “To consider that every idea is a good idea, and not to remove any idea that they or their team-mates came up with.”

In the second part of the workshops, a week later, each group presented their ideas in a 15-minute slot, to a total of three hours, with the researchers acting as moderators. There had been no guidance or restrictions on how students might present their ideas. Every group made a group presentation using one PowerPoint, during which they all chose to give each member a chance to speak, each individual presenting their own ideas in turn. This was perhaps due to our instruction “not to remove any idea,” but this turn-taking approach was also typical of the way students in that course had made previous presentations. Presentations were predominantly verbal with text-based slides, but most added illustrations or their own sketches. After each presentation, there was time for one or two questions, usually to elaborate on points covered in the presentation. In keeping with most other student presentations in this Hong Kong university, students were allowed to ask their peers questions but did not, while the researchers did. Of the 15 groups, 13 gave us informed consent to audio and video record their presentations. We did not record the 2 groups that did not give consent. All students were assured that withholding consent would not be penalized in any way, such as by a reduced grade.

3.3 Data Analysis

To analyze any emerging categories of design concepts, and to map how such categories related to each other, we performed iterative coding during and after the participants’ presentations to capture [60] knowledge extracted during participatory design sessions. We used a collaborative mind mapping web tool, Coogle (coogle.it), shown in Fig. 3, that enables multiple users to construct a mind map together. Using Coogle, two researchers made a mind map to document ideas, features, and designs that the students shared in each of the two workshop sessions. The two mind maps captured key points from all the presentations, including those made from groups that did not give consent to audio and video documentation. Both researchers accessed the same mind map document, each from their own laptops, and simultaneously added categories as the presentations took place. They could modify each other’s categories and alter the mind maps live. No categories were discussed or developed by the researchers beforehand - all were determined, openly and inductively, in response to the presentations.

After the workshops, we transcribed the audio recordings and performed more coding of the transcriptions modifying our initial codes. For example, during the mind mapping exercise, the two researchers had coded part of the social interaction features, such as flowers and seeds, into the category “virtual items.” Research team members’ iterative coding and analysis made it clear that certain virtual items are mediating social interactions - a salient theme found in many of the presentations. Thus, we conducted additional iterations of inductive and axial coding until themes presented within the findings section had emerged [36].

4 Findings

4.1 Preference for Locally Recognizable Features

Both the mind maps and transcripts of the videoed presentations show numerous references to the desire for additional app features that relate strongly to the local environment. Half the groups (6 out of 12 groups, 28 out of 63 participants) suggested the addition of regionally specific weather events and environmental conditions such as smog, typhoons, and heavy rain. Locally important socio-cultural features were also suggested, such as this idea (see Fig. 4): “To encourage the players to interact with each other, we allow the players to plant conjoined trees. In Chinese culture it is similar to getting marr[ied to] the other players.” The participant referred to a particular phrase in a Tang Dynasty poem written by Bai Juyi, translated as “In heaven, let us be birds with shared wings and body, and on earth, let us be trees conjoined.” The participant combines two Chinese cultural notions, penjing and a Chinese idiom about intimate social relationships. Another idea was to send trees to other players. Both ideas have social interaction at their core, conforming to suggestions made in early studies of social interaction in multiplayer games, to “reward the players who make these locations truly social environments” [18]. In the case of Pocket Penjing, the participants’ designs pointed to the salience of social interaction for understanding science.

4.2 Salience of Social Interaction for Understanding Science

Our participant designers suggested ways to integrate Pocket Penjing with social practices typical of the local Hong Kong student population. More than two thirds (49 out of 63) of participants designed features to enhance social interaction, which supports the theory that “[s]cience is a social process, yet scientists often pretend that it is not.” [10]. The most popular suggested new features were sharing images of the trees on Facebook, exchanging tips about how to cultivate a healthy tree, and giving tree-related gifts, such as seeds produced by the tree or an entire carefully cultivated and pruned tree. Making the tree part of established social festivals was also suggested: “[on] Valentine’s Day the players can give their flowers to their girlfriends or boyfriends and on […] Mother’s Day they can […] give the flower to their mothers.” (see Fig. 5). New features suggested by participants were sometimes coded as both game play and social interaction. For example, many participants suggested adding seeds and flowers that could be saved when users grew a healthy tree. These items were described both as rewards for players and as gifts that could be exchanged. There was a social dimension to the new difficulties, or levels, that participants designed for the app. For example, the success associated with growing a tree in difficult situations was not only personally motivating but also connected to a desire to show friends the results of overcoming that difficulty. This was as much about sharing tips for how to cultivate a tree as it was about bragging. One participant saw the potential of the app to connect with users from beyond the local region and to understand other climate and pollution issues. They suggested more options for scraping AQD from a wider range of “places around the world in regard to different climate differences from different places, besides just Hong Kong and Wuhan. […] Perhaps different penjing got different climate conditions in favour in their growing. So that it will actually increase the interaction for the players and also make it more and more fun.”

Socializing and Learning Enhanced by More Difficult Individual Play Elements

Participants came up with ideas to increase the number of simulations of local real-world phenomena, such as typhoons and smog, and often reasoned that this would result in better understanding of related pollution and weather. For example, this participant links exposing their tree to risk with increasing awareness of local environmental issues: “in response to the large amount of rain during summer in Hong Kong, landslides may occur which may immensely affect the growth of the players’ penjing. And then the players may be more careful in protecting the growth of the plant and may be informed by the environmental issues surrounding them.” One unexpected outcome was participants’ desire to work harder to grow their tree: “introduce some sudden damages such as typhoon, bugs attacks or other disasters. So, when there is typhoon they have to use a barrier to protect the plant in order to stay away from damage. […] players […] pay more attention on the condition of the plant and then change their strategies”. This also introduces the idea of strategy, a game-like element that our prototype simulation did not support.

Most participants expected the app to be more game-like, with tokens and levels, and less a simple simulation. Although we had not described it as a game, we had described ‘playing’ with it in our prompt during the first workshop and our participant designers were from a games course, leading to a potential bias towards seeing the app as a game. However, sustainable HCI researchers investigating green transportation behaviors [15] using the UbiGreen Transportation Display mobile tool also found that, while they did not describe their product as a game, many users interpreted it as one and expected more game-like features such as points and levels.

4.3 The Importance of Real-World Interaction in AR Experiences

More than half (7 out of 12) of the groups of participants suggested additional real-world interaction to integrate the tree more fully into their local environment in ways that would involve users more bodily. 36 out of 63 presenters suggested more advanced AR and real-world interactive functions, including support of head-mounted displays, hand gesture recognition, audio and sunlight detection. Research has shown that AR prompts a deeper haptic or bodily engagement [37]. Participant designers echoed this with ideas for gesture recognition such as snipping their fingers (see Fig. 6) to prune the tree and modeling the impact of real-world physics on plant growth, typified by a sketch showing, “when we turn over the phone, the tree will grow upside down, and so a penjing may grow like this” (see Fig. 6).

During the week between the two workshops, when they were exploring the app and coming up with ideas for the next version, some participants discovered our website [38] and were excited by screengrabs there that placed the AR tree in a variety of settings. They expanded on this idea, again making it more social, with the idea of placing a number of graphical markers together in parks so that multiple players could meet up to make a garden.

5 Discussion

5.1 Local (Poly)Aesthetics and the Salience of Social Networks for Learning

The aesthetic experience of Pocket Penjing reflects regional visual aesthetics of Chinese paintings of cherry blossom and Chinese paper cuts (see Fig. 7). However, as soon as the AR window loads it brings with it the aesthetics of the user’s offline world (see Fig. 7). While VR replaces visual cues from the local environment with an immersive artificial space, AR shows virtual objects within live camera images of our immediate surroundings, thereby going further than VR to “encourage us to occupy two locations at once – to think of ourselves as both ‘here and there’ – situated in our immediate physical and cultural location as well as in the virtual world of the Internet” [39]. Our decision to use AR locates the virtual tree firmly in the local situation, emphasizing the importance of local context, “[o]ur augmented reality mobile apps can be seen as experiments through which we test hypotheses that mixed reality opens up the possibilities for more affective experiences” [39].

The way that AR blends the online and offline [40] means that Pocket Penjing’s visual aesthetics are not fixed, but rather they constantly emerge as different people use the app and take pictures of the trees in different places. Our use of participatory design to co-develop features also multiplies the implications of the aesthetic. We therefore suggest that participatory design itself is polyaesthetic, bringing together multiple designers’ ideas, including features that take account of proprioception and embodiment. This brings us to a third aspect of polyaesthetics in Pocket Penjing, that of multisensory involvement in AR [5]. Examples include the finger snipping action mentioned earlier, or another participant’s idea to shade the tree by cupping their hand over the phone screen to literally cast a shadow onto the screen. These gestures are typical of the way that our experience of using AR reminds us of our embodiment as we twist and turn our devices, and ourselves, to see virtual and real worlds combined on the screens and as we physically move in order to experience AR. AR affords a sense of spatial and emotional immersion that has been described as “polyaesthetic” [39] as it engages “multiple senses, and not only the senses of sight, hearing, and touch but proprioception as well” [37]. These multisensory and polyaesthetic affordances enhance learning [37], and support social interaction [41] and informal learning [42]. But what is the advantage of these complex and multilayered aesthetics? Do they motivate us to engage with AQD? Help us to learn? Or do they confuse and distract?

Constructivist learning theory, developed from psychology, extends the circuits of knowledge model [3], suggesting that we learn by constructing knowledge for ourselves, making meaning of the world both individually and socially [43, 44] and both these models are relevant when core users of the app are young citizens whose attitudes are shaped by networked social interaction [20]. Experts in learning technologies see synergy between AR and the power of situated and constructivist learning, arguing that “mobile devices equipped with AR experiences can enhance learning by situating data collection activities in a larger, meaningful context that connects to students’ activities at the real-world setting” [45]. Humans are social animals and these real-world settings are populated with other people. Playful engagement lends itself to social, even collaborative interaction with others. Therefore, we argue that a collaborative learning approach to science is likely to lead to higher achievement outcomes, and students will be more motivated and develop better social skills than learning science via more didactic approaches [37].

5.2 Persuasive Games, Learning and Sharing

The playful, rather than didactic, structure of our app has much in keeping with persuasive games [46] or ‘gamification’ [47]. It simulates the experience of having a small tree and learning what is necessary to nurture it as it responds, positively or negatively, to changing weather and air pollution. The values of our participant designers exist outside the game, and through their work with us they “focused on the social practices of playing the game, rather than the social practices represented in the game. […] [games] are also media where cultural values themselves can be represented—for critique, satire, education, or commentary” [46]. This is part of what has been termed the ‘civic turn’ in HCI, the use of technology in civic action, engagement and participation in civic life [48]. The procedural rhetorical devices of league tables and scores, suggested as essential new features by our participants, are typical of many games and have been shown by sustainability HCI studies to enable comparisons between players and datasets [11] and incentivize engagement [49]. Kjledskov et al.’s HCI study of electricity consumption displayed on mobile devices showed comparative visualization to be beneficial to the increase of awareness necessary for the support of more sustainable behaviors [11]. While that study visualized different household’s energy usage, our mobile app supports multiple trees that can each represent AQD from a different monitoring station, hence enabling comparisons of the effect of air quality on trees in different geographic regions. Another game, Echo Chamber [50], uses procedural rhetoric, the practice of using computational processes persuasively, to make a game about the rhetoric and language of the debate the ‘wickedly complex’ topic of climate science. The designers found that the game was incidentally a learning tool for climate change, though its main focus was on utilising effective communication techniques. 
Like Echo Chamber, users of Pocket Penjing will learn about air pollution incidentally, while their main focus is on nurturing their tree and sharing that experience with others.
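The league tables suggested by our participants could be sketched along the following lines. This is a minimal illustration rather than the app's implementation: the `Tree` record, the 0.0 to 1.0 `health` scale, the player names and the health values are all hypothetical placeholders, not data from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tree:
    owner: str      # hypothetical player name
    station: str    # monitoring station the tree is linked to
    health: float   # assumed scale: 0.0 (dying) to 1.0 (thriving)


def league_table(trees):
    """Rank trees from healthiest to least healthy.

    Python's sort is stable, so trees with equal health keep their input order.
    """
    ranked = sorted(trees, key=lambda t: t.health, reverse=True)
    return [(rank, t.owner, t.station, t.health)
            for rank, t in enumerate(ranked, start=1)]


# Placeholder values for illustration only.
trees = [
    Tree("player_a", "Hong Kong", 0.62),
    Tree("player_b", "Wuhan", 0.41),
    Tree("player_c", "Hong Kong", 0.88),
]

for rank, owner, station, health in league_table(trees):
    print(f"{rank}. {owner} ({station}): health {health:.2f}")
```

Because each tree carries its monitoring station, the same table allows comparison both between players and between geographic regions.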

5.3 Scientific Data and Local Connections

By situating our participatory design workshops in Hong Kong, we have gone some way towards accounting for, and reflecting, those participants’ shared cultural connections. Using techniques from game design, persuasive games “support existing social and cultural positions, but they can also disrupt and change those positions, leading to potentially significant long-term social change” [46].

Users’ interactions with data-driven rendered 3D cherry trees in Pocket Penjing create visual narratives “capable of providing clarity to the complicated and contested nature of toxic issues that would be considered controversial if stated in words” [52]. The narratives are not only visual though, as our sense of AQD is made tangible by being rendered as an AR experience that requires us to relate bodily to the data using multiple senses. AR experiences are not only multisensory, they are social, cultural and collaborative. The CAN app mentioned at the beginning of this paper (see Fig. 1) tip-toes towards more social engagement as it enables users to add comments which are then marked on a map with a footprint icon. However, there is no support for social interaction between commenters. Our prototype app provides numeric data about air quality but this is secondary to the representation of that data in a visual form. Like UbiGreen, our prototype was “not inherently social” [15] but, nevertheless, our participatory design study found that its graphics were conversation-starters: people wanted to know how the trees were progressing and to be able to share trees. Similar findings led the UbiGreen team to plan future work to “explore the value of sharing application data among social groups” and we have now also begun to do that, as described below [63].

In summary, we have found it useful to converge changing trends that seem to run in parallel: the didactic communication of science of twenty years ago that has become the more circuit-based, or dialogic, engagement with science of more recent years; the move from didactic learning to constructivist and collaborative learning; the move from the “Modernist design in the 20th century [that] emphasized perfect integration of elements into a single unified form” [5] to the polyaesthetics of AR that exist in the aesthetic era of glitch and socially engaged art practice which emphasize the importance of locality and localness.

5.4 Implementing Ideas from the Participatory Design Workshops

Since completing the participatory design workshops we have implemented several of the ideas that emerged, beginning with those that increase gamification and provide more visual feedback when users take actions. Participant designers wanted the addition of more international air quality index stations and we have added five, with more to follow (see Fig. 9). Our co-designers in the workshops suggested that tapping icons was boring and that more dynamic graphic representations were needed to show the tree’s changes in health instead of it always looking perky. We have therefore developed a wider range of leaf and blossom textures to express health, for example the brown dry leaves in Fig. 8. Now, when the icons show a red alert, users access a toolbox. Our first implementation of a visual tool to replace tapping on an icon, in this case the low-water icon, is a watering can animation that users activate (see Fig. 8). A number of different social sharing functions are in development but we have completed and implemented a gallery area within the app (see Fig. 9) and a related Facebook sharing feature (see Fig. 9).

Fig. 8. Left: idea presented during participatory design workshop to replace tapping on icon to water tree, with image of a watering can. Middle: our implementation of the idea, plus implementation of idea to render leaves brown to show dryness. Right: our addition of animated tipping of can and particle systems water flow.
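The link between the tree's condition, its leaf texture and the watering can tool could be sketched as follows. This is an illustrative assumption, not the app's code: the 0.0 to 1.0 water level, both thresholds, the intermediate `YELLOWING` texture and all function names are hypothetical.

```python
from enum import Enum


class LeafTexture(Enum):
    GREEN = "green"
    YELLOWING = "yellowing"   # hypothetical intermediate state
    BROWN_DRY = "brown_dry"   # the dry brown leaves described in the text


# Hypothetical thresholds on an assumed 0.0-1.0 water level.
LOW_WATER_ALERT = 0.25
MILD_STRESS = 0.5


def leaf_texture(water_level: float) -> LeafTexture:
    """Choose the leaf texture that expresses the tree's current dryness."""
    if water_level < LOW_WATER_ALERT:
        return LeafTexture.BROWN_DRY
    if water_level < MILD_STRESS:
        return LeafTexture.YELLOWING
    return LeafTexture.GREEN


def low_water_alert(water_level: float) -> bool:
    """True when the low-water icon would show a red alert."""
    return water_level < LOW_WATER_ALERT


def use_watering_can(water_level: float, amount: float = 0.5) -> float:
    """Return the new water level after the user activates the watering can."""
    return min(1.0, water_level + amount)


level = 0.1
if low_water_alert(level):
    level = use_watering_can(level)
print(leaf_texture(level).value)
```

Keeping the texture choice as a pure function of the tree's state means the visual feedback changes as soon as the user acts, rather than the tree always looking the same.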

5.5 Future Work

Designing with participants from Hong Kong in an English-speaking university setting presented few problems, but we plan to conduct further studies in other localities and languages in order to explore the impact of language on the study and within the app itself. As described above, we have begun to implement some of the features suggested in the participatory design workshops. We have more work to do in this regard, especially in relation to developing tokens and levels as part of a more gamified user experience. After the new features have been implemented we will conduct more naturalistic user studies that focus on how much more people understand about AQD after using the app in their daily life.

6 Conclusion

Pocket Penjing uses AR to enhance the sense of local context. The app supports informal, unstructured learning by making the focus of its persuasive simulation the tree and the users’ relationship to it, while the scientific data that determines the tree’s default condition is robust but downplayed. Instead attention is on our application of engaging data visualisation mechanics using real-time rendering of 3D trees. Learning how to engage with and interpret AQD is situated in a physical and cultural context in keeping with ideas that “knowledge is situated” [53]. We designed the app on the premise that knowledge cannot be separated from the context in which it is learned [53] or, going further, that knowledge emerges through intra-actions between humans (scientists who develop metrics for measuring AQD, designers of the app, users) and non-humans (ubiquitous computing networks, various mobile devices, air monitoring stations). Our use of participatory design studies shows that a more holistic approach to understanding AQD, through embodied experiences situated in users’ local real-world contexts and playable media that have a number of features to support social interaction, is more powerful and engaging than abstract reasoning or relying on interpreting numeric AQD. Based on these findings, and on research studies showing that we engage and commit more if activities further social relationships, we argue that a deeper understanding of AQD may be fostered from embodied and socially-mediated interaction with data in local, blended offline/online spaces of AR.

References

Chriscaden, K., Osseiran, N.: Air pollution levels rising in many of the world’s poorest cities (2016). http://www.who.int/mediacentre/news/releases/2016/air-pollution-rising/en/. Accessed 29 Jan 2018

Zhang, Z., Zhang, J.: A survey of public scientific literacy in China. Public Underst. Sci. 2(1), 21–38 (1993)

Carvalho, A., Burgess, J.: Cultural circuits of climate change in U.K. broadsheet newspapers, 1985–2003. Risk Anal. 25(6), 1457–1469 (2005)

DiSalvo, C., Sengers, P., Brynjarsdóttir, H.: Mapping the landscape of sustainable HCI. In: CHI 2010 Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 1975–1984 (2010)

Clean Air Network NGO. Clean Air Network (2009). http://www.hongkongcan.org/. Accessed 31 Jan 2018

The Air You Breathe. Hong Kong Air Pollution app [Internet] (2012)

Wakefield, E.L., Elliott, C.D., Eyles, J.D.: Environmental risk and (re) action: air quality, health, and civic involvement in an urban industrial neighbourhood. Health Place 7(3), 163–177 (2001)

Barker, L.: Planning for environmental indices. In: Craik, K.H., Zube, E. (eds.) Perceiving Environmental Quality. ESRH, vol. 9, pp. 175–203. Springer, Boston (1976). https://doi.org/10.1007/978-1-4684-2865-0_10

Cohen, S., Demeritt, D., Robinson, J., Rothman, D.: Climate change and sustainable development: towards dialogue. Glob. Environ. Change 8(4), 341–371 (1998)

Lorenzoni, I., Jones, M., Turnpenny, J.R.: Climate change, human genetics, and post-normality in the UK. Futures 39(1), 65–82 (2007)

Kjeldskov, J., Skov, M., Paay, J., Pathmanathan, R.: Using mobile phones to support sustainability: a field study of residential electricity consumption. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 2347–2356 (2012)

Pierce, J., Paulos, E.: A phenomenology of human-electricity relations. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 2405–2408 (2011)

Jacobs, R., Benford, S., Selby, M., Golembewski, M., Price, G.G.: A conversation between trees: what data feels like in the forest. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 129–138 (2013)

Friedberg, E., Lank, E.: Learning from green designers: green design as discursive practice. In: Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, pp. 1312–1323 (2016)

Froehlich, J., Dillahunt, T., Klasnja, P., Mankoff, J., Consolvo, S., Harrison, B.: UbiGreen: investigating a mobile tool for tracking and supporting green transportation habits. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 1043–1052 (2009)

Ganglbauer, E., Fitzpatrick, G., Güldenpfennig, F.: Why and what did we throw out?: probing on reflection through the food waste diary. In: Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, pp. 1105–1114 (2015)

Kim, S., Paulos, E.: In air: sharing indoor air quality measurements and visualizations. In: Proceedings of the Conference on Human Factors in Computing (CHI), pp. 1861–1870 (2010)

Webster, T., Dyball, M.: Independent Review of Beacons for Public Engagement Evaluation Findings. Final Report. RCUK, HEFCE and the Wellcome Trust (2010)

Csikszentmihalyi, M., Hermanson: What makes visitors want to learn? Intrinsic motivation in museums. Museum News 74(3), 34–37 (1995)

Loader, B.D., Vromen, A., Xeno, A.: The networked young citizen: social media, political participation and civic engagement. Inf. Commun. Soc. 17(2), 143–150 (2014)

Prophet, J.P.H.: Ubiquitous Alife in TechnoSphere 2.0. In: Ekman, B.J.D., Díaz, L., Sondergaard, M., Engberg, M. (eds.) Ubiquitous Computing, Complexity and Culture, pp. 254–266. Routledge (2015)

Mutsvairo, B., Harris, S.T.G.: Rethinking mobile media tactics in protests: a comparative case study of Hong Kong and Malawi. In: Wei, R. (ed.) Mobile Media, Political Participation, and Civic Activism in Asia. MCALIGI, pp. 215–231. Springer, Dordrecht (2016). https://doi.org/10.1007/978-94-024-0917-8_12

Kow, Y.M., Kou, Y., Semaan, B., Cheng, W.: Mediating the undercurrents: using social media to sustain a social movement. In: CHI Conference on Human Factors in Computing Systems, pp. 3883–3894 (2016)

Census and Statistics Department. Information Technology usage: Women and Men in Hong Kong. Key Statistics. Hong Kong: The Government of the Hong Kong Special Administrative Region, 27 July 2017

Ming, J., Liao, H., Chen, H., Wang, M.: Bonsai (penjing) systematic classification. J. Nanjing Forestry Univ. 25(6), 59–63 (2001)

World Air Quality Index. Hong Kong Air Pollution: Real-time Air Quality Index (AQI) (2018). http://www.aqicn.org/city/hongkong. Accessed 16 Jan 2018

World Weather Online. Wuhan air quality monitoring station. http://www.aqicn.org/city/wuhan. Accessed 07 Aug 2017

World Air Quality Index. Wuhan Air Pollution: Real-time Air Quality Index (AQI). http://www.aqicn.org/city/wuhan. Accessed 07 Aug 2017

Lindenmayer, A.: Mathematical models for cellular interactions in development 1. Filaments with one-sided inputs. J. Theoret. Biol. 18(3), 280–299 (1968)

Rodkaew, Y., Chuai-Aree, S., Suchada, S., Chidchanok, L., Chongstitvatana, P.: Animating plant growth in L-system by parametric functional symbols. Int. J. Intell. Syst. 19(1–2), 9–23 (2004)

Prusinkiewicz, P., Hammel, M., Hanan, J., Mech, R.: L-Systems: from the theory to visual models of plants. In: Proceedings of the 2nd CSIRO Symposium on Computational Challenges in Life Sciences, pp. 1–32 (1996)

James, P., Measham, P.F.: Australian Cherry Production Guide. Cherry Growers Australia Inc. (2011)

Gaver, B., Pacenti, P.E.: Design: cultural probes. Interactions 6(1), 21–29 (1999)

Denef, S., Ramirez, L., Dyrks, T., Schwartz, T., Al-Akkad, A.A.: Participatory design workshops to evaluate multimodal applications. In: Proceedings of the 5th Nordic Conference on Human-Computer Interaction: Building Bridges, pp. 459–462 (2008)

Tohidi, M., Buxton, W., Baecker, R., Sellen, A.: User sketches: a quick, inexpensive, and effective way to elicit more reflective user feedback. In: Proceedings of the 4th Nordic conference on Human-Computer Interaction: Changing Roles, pp. 105–114

Corbin, J., Strauss, A.: Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. Sage, Thousand Oaks (2007)

Bolter, J.D., Engberg, M., MacIntyre, B.: Media studies, mobile augmented reality, and interaction design. Interactions 20(1), 36–45 (2013)

Prophet, J., Hurry, M., Kow, Y.M.: Pocket Penjing Homepage (2016). http://www.pocketpenjing.com/. Accessed 01 Feb 2018

Engberg, M., Bolter, J.D.: Cultural expression in augmented and mixed reality. Convergence: Int. J. Res. New Media Technol. 20(1), 3–9 (2014)

Prophet, J., Pritchard, H., E Asian Ubicomp and ALife: Roaming and homing with technosphere 2.0 computational companions. In: CHI 2015 Extended Abstracts: Between the Lines: Reevaluating the Online/Offline Binary (2015)

Asai, K., Sugimoto, Y., Billinghurst, M.: Exhibition of lunar surface navigation system facilitating collaboration between children and parents in science museum. In: ACM, pp. 119–124 (2010)

Falk, J.H.: Free-choice environmental learning: framing the discussion. Environ. Educ. Res. 11(3), 265–280 (2005)

Wadsworth, J.: Piaget’s Theory of Cognitive and Affective Development: Foundations of Constructivism. Longman Publishing, White Plains (1996)

Hein, G.: Constructivist learning theory (1991). https://www.exploratorium.edu/education/ifi/constructivist-learning. Accessed 24 April 2017

Dede, C.: Interweaving Assessments into Immersive Authentic Simulations: Design Strategies for Diagnostic and Instructional Insights. Educational Testing Service, Princeton (2012)

Bogost, I.: Persuasive Games: The Expressive Power of Videogames. MIT Press, Cambridge (2007)

Deterding, S., Dixon, D., Khaled, R., Nacke, L.: From game design elements to gamefulness: defining gamification. In: Proceedings of the 15th International Academic MindTrek Conference: Envisioning Future Media Environments, pp. 9–15 (2011)

Johnson, I.G., Vines, J., Taylor, N., Jenkins, E., Marshall, J.: Reflections on deploying distributed consultation technologies with community organisations. In: Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, pp. 2945–2957 (2016)

O’Neill, S., Nicholson-Cole, S.: Fear won’t do it: promoting positive engagement with climate change through visual and iconic representations. Sci. Commun. 30(3), 355–379 (2009)

Heath, C., Vom Lehn, D., Osborne, J.: Interaction and interactives: collaboration and participation with computer-based exhibits. Public Underst. Sci. 14(1), 91–101 (2005)

Knowles, B., Blair, L., Coulton, P., Lochrie, M.: Rethinking plan A for sustainable HCI. In: Proceedings of the 32nd Annual ACM Conference on Human Factors in Computing Systems, pp. 3593–3596 (2014)

Peeples, J.: Toxic sublime: imaging contaminated landscapes. Environ. Commun. J. Nat. Cult. 5(4), 373–392 (2011)

Geertz, C.: Local Knowledge: Further Essays in Interpretative Anthropology. Basic Books, New York (1983, 2000)

Aristodemou, E., Boganegra, L.M., Mottet, L., Pavlidis, D., Constantinou, A., Pain, C., Robins, A., ApSimon, H.: How tall buildings affect turbulent air flows and dispersion of pollution within a neighbourhood. Environ. Pollut. 233, 782–796 (2018)

DiSalvo, T.L., Jenkins, T., Lukens, J., Kim, T.: Making public things: how HCI design can express matters of concern. In: Proceedings of the 32nd Annual ACM Conference on Human Factors in Computing Systems, pp. 2397–2406 (2014)

The European Travel Commission (ETC). ETC Country Reports: China (2014). http://www.tourism-generis.com/_res/file/3721/49/0/ETC_Country_Reports_China.pdf. Accessed 31 Jan 2018

Openweather. Weather forecast in Wuhan, China. http://www.weatherforecastmap.com/china/wuhan/. Accessed 16 Jan 2018

Acknowledgement

The work described in this paper was partially supported by a grant from the Research Grants Council of the Hong Kong Special Administrative Region, China (Project No. CityU 11608515). We also thank Pocket Penjing team members: Xinyi Chen, Graphic artist; Meichen Zhou, Research Associate; and Tsz Yan Andy Cheung, Research Associate.

© 2018 Springer International Publishing AG, part of Springer Nature

Prophet, J., Kow, Y.M., Hurry, M. (2018). Cultivating Environmental Awareness: Modeling Air Quality Data via Augmented Reality Miniature Trees. In: Schmorrow, D., Fidopiastis, C. (eds) Augmented Cognition: Intelligent Technologies. AC 2018. Lecture Notes in Computer Science(), vol 10915. Springer, Cham. https://doi.org/10.1007/978-3-319-91470-1_33

DOI: https://doi.org/10.1007/978-3-319-91470-1_33

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-91469-5

Online ISBN: 978-3-319-91470-1

eBook Packages: Computer Science, Computer Science (R0)