Abstract

Higher performance is the eternal purpose for super-resolution (SR) methods to pursue. Since the deep convolution neural network is introduced into this issue successfully, many SR methods have achieved impressive results. To further improve the accuracy that current SR methods have achieved, we propose a high-accuracy deep convolution network (HDCN). In this article, deeper network structure is deployed for reconstructing images with a fixed upscaling factor and the magnification becomes alternative by cascading HDCN. \(L_2\) loss function is substituted by a more robust one for reducing the blurry prediction. In addition, gradual learning is adopted for accelerating the rate of convergence and compacting the training process. Extensive experiment results prove the effectiveness of these ingenious strategies and demonstrate the higher-accuracy of proposed model among state-of-the-art SR methods.

Similar content being viewed by others

Keywords

1 Introduction

Limited by imaging equipment, digital images tend to be low-resolution (LR) for missing high-frequency constituent. Single image super-resolution (SISR) is introduced to recover the high-resolution (HR) from its LR input. This technique has been widely adopted in security monitoring and medical imaging where requires extra image details.

In recent years, Convolutional neural network (CNN) provides impetus for SISR methods. Dong et al. [2] certify the validity of learning a nonlinear LR-to-HR mapping in an end-to-end manner. They further deploy a shrink layer and a expand layer [3] in their model, termed FSRCNN, to downsize the scale of parameters and maintain the accuracy. Kim et al. [6] substitute a plain structure composed of 20 convolutional layers for anterior shallow network. This very deep CNN (VDSR) achieves impressive results for its substantial increase in accuracy. Subsequent articles demonstrate various ingenious methods, only a few of them, however, could surpass VDSR in accuracy.

In order to further improve the accuracy of current SR methods, we propose a high-accuracy deep convolution neural network to reconstruct image in a fix upscaling factor. By cascading HDCN, we can achieve scalable super-resolution and larger magnification. The merits of proposed HDCN are stated as follows:

-

(1)

For the purpose of reducing the blurry or over-smooth prediction, a robust penalty function is adopted to substitute for \(L_2\) loss function.

-

(2)

During training process, gradual training method is introduced to accelerate the rate of convergence. Training samples are divided into individual parts via calculating the average local gray value difference (ALGD) of their origin and residual patches.

-

(3)

Experimental results on benchmark datasets demonstrate the superior accuracy of proposed HDCN to some state-of-art SR methods.

2 Related Work

Cascade network for SISR: Original neural network based SISR methods can only reconstruct HR images in a fixed upscaling factor [2, 3]. Altering the scale factor would be accompanied by fine-tuning the whole model. It’s quite an ordeal for those non-professionals, their computers would cost extensive time. In order to augment practicability, researchers dedicate to implement models with feasible upscaling factors. Some scalable SISR methods, such as VDSR, utilize the similarity that images across different scales share some common structures and textures. Thus, images with different scales are combined into a large dataset to train a multi-scale model. Other methods cascade a fixed scale network until the desired size is reached. Wang et al. observe that a cascade of sparse coding based network (CSCN) trained for small scaling factors performs better than the single sparse coding based network trained for a large scaling factor. This strategy is simple but suffers the risk of error accumulation during repetitive upscaling. Fortunately, this risk could be reduced by enhancing the performance of each fixed-scale network. For instance, Zhen et al. employ non-local self-similarity (NLSS) search and shrink the upscaling factor to a small degree in their experiment. In contrast to the multi-scale model trained directly, cascade network could simplify training period and achieve better accuracy. In addition, larger upscaling factor can be achieved by cascading more sub-models.

Residual-like learning: Before introducing residual-like learning, NN based SISR methods tend to implement narrow network and prudent step during iteration. Gradient exploding/vanishing problem hampers deeper network structure and rate of convergence. Since He et al. accomplish a very deep residual network for image recognition and achieve impressive results, subsequent SISR methods tend to adopt residual-like structure to improve the performance of their models. On behalf of them, VDSR attempts to learn the residual image, defined as the difference between input and output image, rather than origin ground-truth image and substantial increases the accurate of reproduce results. From the perspective of network structure, VDSR, inspired by the merits of VGG net, deploys a deep and plain network. In order to approximate the initial structure of residual network, Yang et al. carry out identity mapping shortcuts as a projection to change feature dimensions. Considering the deep structure deployed in proposed model, residual learning is also utilized to improve the efficiency of training process.

3 Experiment

3.1 Model Structure

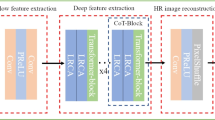

Inspired by ‘the deeper, the better’ [6], proposed network, as shown in Fig. 1, deploys a plain structure with d layers (\(d=25\)). The first layer casts as the feature extraction and representation operating on the input patches. 64 filters with size of \(3\times 3\) are utilized in this layer. Subsequent layers, except the last, dedicate to learn the end-to-end mapping from LR patch to its relative HR patch. 64 filters with size \(3\times 3\times 64\) are deployed in these layers. The last layer is aimed to reconstruct the HR image. One \(3\times 3\times 64\) filter is implemented to reproduce the image.

A single HDCN can only upscale the image with a fix factor s. To achieve other upscaling factors, HDCN can be cascaded as shown in Fig. 1. Considering the practicability and flexibility, s is set to 2. Thus, \(4\times \)magnification can be implemented by cascading two HDCN models and images with upscaling factor 3 can be reconstructed from \(4\times \)magnification via using bicubic interpolation. In addition, in Fig. 1, I represents the input LR image and x is the bicubic result of I. We denote the residual image as \(r=y-x\) where y is the output of single HDCN. Obviously, \(x_1\) and \(y_1\) are the input and output image of the next HDCN.

3.2 Enhancement Strategies

Loss function: When an original NN based SR model is utilized to reconstruct images, it can be observed that the reproduce results tend to contain blurry prediction or over-smooth edge. The primary cause is one LR image patch may correspond different similar HR patches while \(L_2\) loss function fails to recognize the complex potential relation [8]. Hence, a robust penalty function, proposed by Charbonnier et al., is introduced in proposed model to substitute for \(L_2\) loss function.

The loss function is defined as (1):

In (1), we denote R as the residual image, computed as the difference between the x and ground truth image. In addition, N is the amount of patches in each mini-batch and \(\xi \) is set to \(1e-3\) empirically.

Gradual training: The chosen of training images is a crucial factor of model’s performance. With the structure of network going deeper, more training images are required to suffice the training process, specifically, improve the model performance and overcome overfitting. However, extensive training images arise attendant problem, retarding the rate of convergence. Meanwhile, convergence curve of training process tends to be wavery. Those small undulations indicate instability of the model in some degree. The primary cause is the inhomogeneous distribution of edge-like patterns. Patches with sharp edges are easier to be learned than other kinds of patches. Considering the extensive patches (over 700000) prepared for the experiment, gradual learning is implemented to improve the efficiency of training process. In contrast to the gradual upsampling network (GUN) proposed by Zhao et al. [14], residual learning and gradient clipping strategies are retained to expedite rate of convergence. In addition, edge-like patches are classified by means of the ALGD, computed as (2):

where \(N_p\) denotes the amount of pixels in an image patch and \(G_p\) (\(p=1,2,\cdots , N_p\)) is the gray value of according pixel while \(\bar{G}\) represents the average gray value of that patch. The ALGD of the whole training samples is also calculated and denoted as \(\overline{V_{ALGD}}\). Hence, the evaluation parameter \(\delta \) of each patch can be calculated as (3):

The essential of the gradual learning is the learning process from easy to difficult. In other words, the model would achieve better performance gradually. The rate of convergence will be accelerated due to the reduction of instable undulations. While selecting edge-like patches, the ALGD of residual images is also taken into consideration to ensure the simpleness. We denote \(\delta _r\) as the ALGD of residual patches and \(\delta _o\) presents the ALGD of original patches. The training set is divided into three parts in accordance with the discriminant condition as shown in Table 1. These parameters are set by referring to [14].

In Fig. 2, we demonstrate several image samples. Patches in blue border are easier to be learned by model than patches in green one, whereas their \(\delta _o\) are both greater than 1.2. It indicates the significance of deploying the ALGD of residual patches.

Those images with small parameters are combined in one subset for their tiny contribution on improving performance. During gradual learning, sharp-edge patches of first part are prior utilized to form the initial training set. After temporary convergence, we then feed second subset to the network and it can be observed in Fig. 3 that the reproduce image becomes brilliant. Ultimately, those remainder with small parameters are added to fine-tune the model. However, the improvement is not perceptible for human visual. Details are demonstrated in the next chapter.

4 Implement Details

Training dataset: The origin training set is comprised of two datasets, 91 images from Yang et al. [12] and 200 images from Berkeley Segmentation Dataset [9]. VDSR and RFL [10] employ the identical dataset. Data augmentation is a crucial measure of overcoming the overfitting problem. The training data is augmented through three approaches. (1) Rotation: images can be rotated by 90, 180 or 270. Other degrees are not adopted for unnecessary imresize process. (2) Flipping: flip images horizontally and vertically. (3) Scaling: randomly downscale between [0.5, 1.0]. Limited by hardware, we operate the augmentation on Yang’s dataset and part of Berkeley’s. Ultimately, training set consists of approximately 2000 images.

Test dataset: SISR experiments are carried on four datasets: Set5 [1], Set14 [13], urban100 [4], B100. Set5 and Set14 are two benchmarks used for years. Urban100 and B100 are adopted extensively among recent state-of-the-art papers.

Parameters settings: As other mature models, momentum parameter is set to 0.9 and the weight decay is \(1e-4\). The initial learning rate is set to 0.1 and then decreases by a factor of 10 every 10 epochs (not less than 0.001).

The model converges very quickly with the first part of training set, approximately 3–5 epochs. We then feed the second part, consists of over 200000 image patches, to the network and decrease the learning rate to 0.01 during the training process and it costs 12–15 epochs to converge. Ultimately the remainder is utilized to fine-tune the model and the learning rate drops to 0.001 after 20 epochs. The total training process requires 30–35 epochs and roughly costs 30 h on a personal computer using a GTX 970. In addition, image patches with \(\delta _r<0.5\) are deserted due to their residual images are almost dark completely. It can be observed in Fig. 4 that the model with gradual learning converges faster and performs better than origin model.

Proposed model is implemented underwith Caffe [5]. Each mini-batch consists of 64 sub-images with size of 51*51. An epoch has 7812 iterations.

indicates the best and

indicates the best and  indicates the second best performance.

indicates the second best performance.Experiment results: Proposed model is compared to 6 state-of-the-art SR methods: A+[11], RFL, SRCNN, FSRCNN, DRCN [7], VDSR.

In Figs. 5 and 6, it can be observed that proposed model reduces blurry prediction, which proves the effectiveness of deploying robust loss function. In addition, Table 2 shows that proposed model can achieve higher accuracy in most of test set. Meanwhile, experiment results in B100 also indicate the existence of error accumulation during repetitive upscaling. However, cascaded model is more flexible to reconstruct images with larger magnification. Considering the Laplacian Pyramid Networks model [8], termed LapSRN, can also reproduce image with larger scale, proposed model is compared to LapSRN and VDSR. In Fig. 7, proposed model and LapSRN can both reconstruct image with less blurry prediction in virtue of the robust loss function, while proposed model performs better due to the model structure and gradual learning strategy.

5 Conclusions and Future Perspectives

In this article, we present a high accuracy SR method using deep convolution neural network. Proposed model can reduce the blurry prediction and reconstruct images with larger scale. After a more stable training process, proposed model achieves higher accuracy than some state-of-the-art works. Our further work is to combine other image process techniques, such as object recognition and optical character recognition (OCR), into SISR model. We believe that the more useful information is involved, the more brilliant reconstruct images we can obtain.

References

Bevilacqua, M., Roumy, A., Guillemot, C., Alberi Morel, M.L.: Low-complexity single-image super-resolution based on nonnegative neighbor embedding. In: British Machine Vision Conference (BMVC), pp. 1–12 (2012)

Dong, C., Loy, C.C., He, K., Tang, X.: Learning a deep convolutional network for image super-resolution. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8692, pp. 184–199. Springer, Cham (2014). doi:10.1007/978-3-319-10593-2_13

Dong, C., Loy, C.C., Tang, X.: Accelerating the super-resolution convolutional neural network. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9906, pp. 391–407. Springer, Cham (2016). doi:10.1007/978-3-319-46475-6_25

Huang, J.B., Singh, A., Ahuja, N.: Single image super-resolution from transformed self-exemplars. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 5197–5206 (2015)

Jia, Y., Shelhamer, E., Donahue, J., Karayev, S., Long, J., Girshick, R.B., Guadarrama, S., Darrell, T.: Caffe: convolutional architecture for fast feature embedding. CoRR abs/1408.5093 (2014)

Kim, J., Lee, J.K., Lee, K.M.: Accurate image super-resolution using very deep convolutional networks. CoRR abs/1511.04587 (2015)

Kim, J., Lee, J.K., Lee, K.M., undefined, undefined, undefined, undefined: deeply-recursive convolutional network for image super-resolution. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1637–1645 (2016)

Lai, W., Huang, J., Ahuja, N., Yang, M.: Deep laplacian pyramid networks for fast and accurate super-resolution. CoRR abs/1704.03915 (2017)

Martin, D., Fowlkes, C., Malik, J., Tal, D.: A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In: IEEE International Conference on Computer Vision, vol. 02, p. 416 (2001)

Schulter, S., Leistner, C., Bischof, H.: Fast and accurate image upscaling with super-resolution forests. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3791–3799 (2015)

Timofte, R., De Smet, V., Van Gool, L.: A+: adjusted anchored neighborhood regression for fast super-resolution. In: Cremers, D., Reid, I., Saito, H., Yang, M.-H. (eds.) ACCV 2014. LNCS, vol. 9006, pp. 111–126. Springer, Cham (2015). doi:10.1007/978-3-319-16817-3_8

Yang, J., Wright, J., Huang, T.S., Ma, Y.: Image super-resolution via sparse representation. Trans. Img. Proc. 19(11), 2861–2873 (2010)

Zeyde, R., Elad, M., Protter, M.: On single image scale-up using sparse-representations. In: Boissonnat, J.-D., Chenin, P., Cohen, A., Gout, C., Lyche, T., Mazure, M.-L., Schumaker, L. (eds.) Curves and Surfaces 2010. LNCS, vol. 6920, pp. 711–730. Springer, Heidelberg (2012). doi:10.1007/978-3-642-27413-8_47

Zhao, Y., Wang, R., Dong, W., Jia, W., Yang, J., Liu, X., Gao, W.: GUN: gradual upsampling network for single image super-resolution. CoRR abs/1703.04244 (2017)

Acknowledgment

The paper is supported in part by the Natural Science Foundation of China (No. 61672022 and No. 61272036), the Key Discipline Foundation of Shanghai Second Polytechnic University (No. XXKZD1604), and the Graduate Innovation Program (No. A01GY17F022).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Tan, W., Guo, X. (2017). High-Accuracy Deep Convolution Neural Network for Image Super-Resolution. In: Yin, H., et al. Intelligent Data Engineering and Automated Learning – IDEAL 2017. IDEAL 2017. Lecture Notes in Computer Science(), vol 10585. Springer, Cham. https://doi.org/10.1007/978-3-319-68935-7_23

Download citation

DOI: https://doi.org/10.1007/978-3-319-68935-7_23

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-68934-0

Online ISBN: 978-3-319-68935-7

eBook Packages: Computer ScienceComputer Science (R0)