Abstract

Academic reading plays an important role in researchers’ daily lives. To alleviate the burden of seeking relevant literature in rapidly growing academic repositories, different kinds of recommender systems have been introduced in recent years. However, most existing work has focused on adopting traditional recommendation techniques, such as content-based filtering or collaborative filtering, in the literature recommendation scenario. Little work has yet been done on analyzing academic reading behaviors to understand the reading patterns and information needs of real-world academic users, which would be a foundation for improving existing recommender systems or designing new ones. In this paper, we tackle this problem by carrying out an empirical analysis over large-scale academic access data, which can be viewed as a proxy for academic reading behaviors. We conduct global, group-based and sequence-based analyses to address the following questions: (1) Are there any regularities in users’ academic reading behaviors? (2) Do users with different levels of activeness exhibit different information needs? (3) How can one’s future demands be correlated with his/her historical behaviors? By answering these questions, we not only unveil useful patterns and strategies for literature recommendation, but also identify some challenging problems for future development.

1 Introduction

A major part of researchers’ daily life is academic reading, which helps them acquire new knowledge, find related work in their domains and keep up to date with the research frontier [17]. Traditionally, academic users rely on keyword-based search or on browsing the proceedings of conferences and journals to find literature of interest. However, this information-seeking process becomes more and more difficult as a huge number of academic papers are published by a large number of conferences and journals [14].

To ease this difficulty, various literature recommender systems have been introduced for academic users, such as TechLens [18], CiteULike [3], SciRecSys [1], Refseer [11] and so on. Although different types of recommendation techniques have been adopted in literature recommender systems, little work has yet been done on analyzing academic reading behaviors to understand the reading patterns and information needs of real-world academic users. There is some early work on the analysis of information seeking behaviors of academic users [2, 7, 10, 14, 16, 20]. For example, Hemminger et al. [10] conducted a census survey to quantify the transition to electronic communications and how this affects different aspects of information seeking. Villén-Rueda et al. [20] tried to understand the most frequent type of academic search conducted by different users through transaction log analysis. However, these studies either relied on questionnaires to survey a very limited number of faculty members [10, 14, 16], or only focused on analyzing users’ query patterns based on some search logs [2, 7, 9, 20].

In this work, we propose to conduct an in-depth analysis of academic reading behaviors using real-world scholarly usage data, to unveil the underlying reading patterns and information needs of academic users. We argue that this type of research would be useful for improving existing literature recommender systems or designing new ones. Specifically, we take the large-scale academic access data from OpenURL (Note 1) as a good proxy for users’ academic reading behaviors, with the assumption that papers accessed by a user are those he/she read or would like to read (Note 2). We then match all the access records to a large academic repository to identify the corresponding papers, and extract different types of meta-data (e.g., author, venue, and publication time) which would be useful in detailed analysis.

We then conduct different types of analysis over this user behavioral dataset, including global analysis, group-based analysis and sequence-based analysis. Through these analyses, we aim to address the following research questions:

- Q1: Are there any regularities in users’ academic reading behaviors? (global analysis)

- Q2: Will users with different levels of activeness exhibit different reading patterns? (group-based analysis)

- Q3: How to correlate one’s future reading with his/her reading history? (sequence-based analysis)

In brief, here are some take-away conclusions based on our analysis:

-

1.

The regularities in both frequency and time show that literature recommendation is in general a difficult problem due to the long-tail phenomena and diverse reading patterns in user behaviors. A good point is that systems can obtain sufficient recommendation resources by only focusing on a small set of important authors and venues.

-

2.

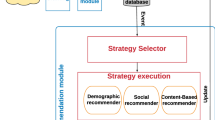

Users with different levels of activeness exhibit different reading patterns in terms of recency and popularity of the papers. Therefore, a smart recommender system needs to emphasize different factors for different groups of users rather than to employ a unified model.

-

3.

Both content-based filtering and collaborative filtering can cover partial future readings based on historical behaviors with different efficiencies. However, there are a good number of future readings difficult in finding by these means, which will be a critical challenge for designing a better literature recommender system.

The rest of the paper is organized as follows: In Sect. 2, we describe the dataset and some pre-processing steps. We then present the global analysis, group-based analysis, and sequence-based analysis in Sects. 3, 4 and 5, respectively. Section 6 discusses the related work while conclusions are made in Sect. 7.

2 Dataset Description

Although user behavior analysis should be the foundation of designing a good recommender system, it is often difficult to conduct due to the lack of user data or to privacy issues. In this work, we make use of publicly available paper access logs as well as a large-scale academic repository to conduct the analysis of academic reading behaviors.

The public paper access logs are from OpenURL router data. Typically, each entry in the log records who (encryptedUserIP) accessed which paper (a title) at what time (logData, logTime). Note that the encryptedUserIP (anonymised IP address or session identifier) can be taken as the unique ID to identify a user. We collected 17,040,154 records between April 1, 2011 and December 31, 2014 from OpenURL. After removing duplicate records generated by the same user within a short time interval, as well as some portal IPs (Note 3), we obtained 8,675,280 distinct records from 1,089,916 users covering 6,667,942 papers.

We then map these papers to a large-scale academic repository which contains over 10 million papers. In this way, 285,541 distinct papers were successfully matched, covering 441,840 records and 118,436 users in total. We extract all the meta-data of these papers, including author, venue, publication time, keywords, domain and citations, for later analysis. This matched dataset is then taken as a proxy for academic reading behaviors in the following analysis.
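The cleaning step described above can be sketched roughly as follows. This is only an illustration, not the authors' pipeline: the record layout `(user_id, title, timestamp)` and the 30-minute `DEDUP_WINDOW` are assumptions (the text only says "a short time interval"), while the portal threshold of more than 3000 accesses a year is taken from Note 3.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Assumption: the source does not give the length of the "short time interval".
DEDUP_WINDOW = timedelta(minutes=30)
# From Note 3: IPs with more than 3000 accessed papers a year are treated as portals.
PORTAL_LIMIT_PER_YEAR = 3000


def clean_records(records):
    """records: iterable of (user_id, title, timestamp) tuples (hypothetical layout)."""
    # 1) Drop repeated accesses of the same paper by the same user that fall
    #    within DEDUP_WINDOW of the previously kept access.
    last_kept = {}
    deduped = []
    for user, title, ts in sorted(records, key=lambda r: r[2]):
        prev = last_kept.get((user, title))
        if prev is not None and ts - prev <= DEDUP_WINDOW:
            continue
        last_kept[(user, title)] = ts
        deduped.append((user, title, ts))

    # 2) Drop every user whose access count in any calendar year exceeds the limit.
    per_user_year = defaultdict(int)
    for user, _, ts in deduped:
        per_user_year[(user, ts.year)] += 1
    portals = {u for (u, _), n in per_user_year.items() if n > PORTAL_LIMIT_PER_YEAR}
    return [r for r in deduped if r[0] not in portals]
```

Counting per calendar year is one possible reading of "a year"; a sliding 365-day window would be an equally plausible choice.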

3 Global Analysis

In this section, we conduct a global analysis over this dataset to identify the regularities in users’ academic reading behaviors that can guide system design. Here we call the whole sequence of reading records of a distinct user the user’s reading profile. Through this analysis, we try to obtain an overall picture of users’ interests and behaviors in reading papers.

3.1 Regularities in Frequency

As might be expected, users vary greatly in the frequency of their academic reading. As shown in Fig. 1(a), users’ reading counts follow a power-law distribution, indicating that most users read very few papers while a few users read very frequently. Specifically, about 92.14% of users read fewer than 4 papers, and the average reading count is about 3.93. This shows that the reading behavioral data is extremely sparse due to the inactivity of a large proportion of users, which is a typical phenomenon in recommender systems. This makes the literature recommendation problem very challenging, since it is usually difficult to achieve good recommendation performance on long-tail users. We further analyze the reading frequencies with respect to venue. As shown in Fig. 1(b), they also follow Zipf’s law. The results show that most readings focus on the publications of a small set of venues, e.g., \(84.39\%\) of readings fall within 20% of venues. This Zipf distribution is, by contrast, good news for recommender systems: it indicates that a recommender system can obtain sufficient paper resources for recommendation by collecting publications from only a small set of important venues.
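A concentration figure such as "the share of readings that fall within the top 20% of venues" can be computed as in the following minimal sketch. The function name `top_share` and the flat list-of-venues input are illustrative assumptions, not part of the original analysis.

```python
import math
from collections import Counter


def top_share(items, top_fraction=0.2):
    """Share of all events that fall on the most frequent `top_fraction` of items."""
    counts = sorted(Counter(items).values(), reverse=True)
    # Keep at least one item so very small inputs still give a defined answer.
    k = max(1, math.ceil(top_fraction * len(counts)))
    return sum(counts[:k]) / sum(counts)
```

For example, with five venues where one venue accounts for 8 of 12 readings, `top_share` returns 8/12 for the default `top_fraction` of 0.2.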

As users read more and more papers, the venue and keyword lists corresponding to the papers grow over time. These lists may exhibit very different growth rates, however, reflecting how users’ interests develop and change over time. As shown in Fig. 2, some users’ (#675, #577) venue lists grow steadily, reflecting concentrated reading behaviors, while other users’ (#475, #373) venue lists grow rapidly, reflecting very broad reading behaviors. The different growth rates can also be viewed with respect to keywords, reflecting very focused interests (#675) and diverse interests (#373) in reading, respectively. More interestingly, users who read broadly may have very focused interests (e.g., user #475 has a large venue list but a relatively small keyword list), while users who read in a narrow range may exhibit diverse interests (e.g., user #577 has a relatively small venue list but a large keyword list). All of the above cases reflect the different reading patterns among users, calling for corresponding recommendation strategies (e.g., diverse or focused recommendations) when designing literature recommender systems.
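The growth of a user's venue or keyword list can be traced with a running set, as in this small sketch. It assumes each reading is represented by the list of venues (or keywords) attached to the paper, in reading order; that representation is an assumption made for illustration.

```python
def growth_curve(values_per_reading):
    """Size of the cumulative set of distinct values after each reading."""
    seen = set()
    curve = []
    for values in values_per_reading:
        seen.update(values)
        curve.append(len(seen))
    return curve
```

A slowly rising curve corresponds to the concentrated behavior described above, and a steep one to broad reading.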

3.2 Regularities in Time

Although different users may read at different times, there are general reading patterns with respect to time. As shown in Fig. 3, most users access papers during the afternoon, and there are clear rise-and-fall patterns with respect to months, with peaks usually reached in March and November. This phenomenon might be related to users’ work time. Since most academic users are faculty members and students, it is not surprising to see low reading intensity between June and September, when summer vacation takes place.

Fig. 4. Different reading patterns from two specific users (#2583, #7116). (A, D) Time stamps of reading events. Each vertical line represents a reading event occurring at the time stamp. (B, E) The time intervals between two adjacent events, where the height corresponds to the interval. (C) Log-log plot of the distribution of time intervals, which follows a power law distribution \(P(\tau )\simeq \tau ^{-1.39}\). (F) Log-linear plot of the distribution of time intervals, which follows an exponential distribution \(P(\tau )\simeq 10^{-0.45\tau }\).

We further examine the time intervals of academic readings of different users. We find that there are two interesting temporal patterns, as shown in Fig. 4. User #2583 (top) read 302 papers from 2012/05/16 to 2014/12/04 (932 days) and shows a bursty temporal pattern, i.e., short time frames of intense reading are separated by long idle periods. The existence of bursty reading behaviors is also supported by the fact that the reading time interval follows a power-law distribution, as depicted in Fig. 4(C). In contrast, user #7116 (bottom), who read 509 papers from 2011/04/01 to 2012/02/02 (306 days), exhibits a quite different reading pattern: readings happen regularly, as in a Poisson process, resulting in an exponential distribution of time intervals, as shown in Fig. 4(F).

To further check the proportions of users that exhibit power-law and exponential temporal distributions, we randomly sampled 1,000 users who have read more than 100 papers. We then apply the goodness-of-fit test based on bootstrapping and the Kolmogorov-Smirnov (K-S) statistic to check which hypothesis is plausible [6].

As a result, we find that 51.21% of users follow a power-law temporal distribution, while 25.65% of users follow an exponential temporal distribution. In addition, 23.14% of users cannot be modeled confidently by either distribution; these might exhibit mixed behaviors and require further investigation in the future. From the results we can see that there are quite different temporal patterns in academic reading, and literature recommender systems should take these patterns into account to design better recommendation strategies. For example, most recommender systems push recommendations regularly to users via email or notification. This might be acceptable for users with regular reading behaviors (i.e., exponential temporal distribution), but annoying for users with bursty reading behaviors (i.e., power-law distribution).
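To make the model comparison concrete, the following is a heavily reduced sketch: it fits both distributions by maximum likelihood and compares their K-S distances. It is not the bootstrapped goodness-of-fit procedure of [6] used in the text; it skips the selection of the lower cutoff (here simply assumed to be the smallest interval) and produces no p-values. All function names are illustrative, and the intervals are assumed to be positive and not all equal.

```python
import math


def fit_power_law(xs, xmin):
    """MLE for a continuous power law P(x) ~ x^(-alpha) on x >= xmin."""
    alpha = 1.0 + len(xs) / sum(math.log(x / xmin) for x in xs)
    return alpha, lambda x: 1.0 - (x / xmin) ** (1.0 - alpha)


def fit_exponential(xs, xmin):
    """MLE for a shifted exponential P(x) ~ exp(-lam * x) on x >= xmin."""
    lam = 1.0 / (sum(xs) / len(xs) - xmin)
    return lam, lambda x: 1.0 - math.exp(-lam * (x - xmin))


def ks_distance(xs, cdf):
    """Largest gap between the empirical CDF of xs and a model CDF."""
    ordered = sorted(xs)
    n = len(ordered)
    return max(
        max(abs(cdf(x) - i / n), abs(cdf(x) - (i + 1) / n))
        for i, x in enumerate(ordered)
    )


def closer_model(intervals):
    """Name of the fitted model with the smaller K-S distance (no significance test)."""
    xmin = min(intervals)  # assumption: cutoff fixed at the smallest interval
    _, pl_cdf = fit_power_law(intervals, xmin)
    _, ex_cdf = fit_exponential(intervals, xmin)
    d_pl = ks_distance(intervals, pl_cdf)
    d_ex = ks_distance(intervals, ex_cdf)
    return "power_law" if d_pl < d_ex else "exponential"
```

A full analysis would additionally need the bootstrap step to decide whether either model is plausible at all, which is how a user can end up in neither category.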

4 Group-Based Analysis

In this section, we conduct a finer, group-based analysis to examine whether users with different levels of activeness exhibit different reading patterns (Q2). Specifically, we divide users into three groups. Users who have read very few papers (i.e., fewer than 4) are categorized as inactive users. Users who have read a lot (i.e., more than 100 papers) are categorized as active users. The rest are categorized as normal users. We then analyze what kind of papers these three groups of users prefer to read in terms of recency and popularity.

4.1 Recency

We first investigate whether users prefer newly published papers or older ones. To answer this question, we define the recency of a reading as the time interval between the user’s reading time and the paper’s publication time (i.e., the year the user read the paper minus the year it was published), and plot the average distribution of recency over different groups of users in Fig. 5.

As we can see, users in general prefer reading newly published papers. On average, more than \(53\%\) of readings over all users are within five years of the paper’s publication. Therefore, a good literature recommender system should assign priority to recent publications. However, when we compare different groups, we can see that inactive users read fewer newly published papers and more older papers than normal and active users. For example, the percentage of readings within five years is about \(50.13\%\) for inactive users, but \(56.5\%\) for active users. We conduct the K-S test among the distributions of the three groups and find that the differences are significant (\(p < 0.05\)).

A possible reason for the above observation is that many inactive users might be newcomers to research or occasional users, who would be more likely to survey or read existing older work. Active users, in contrast, are more likely to be serious academic users (e.g., Ph.D. students, researchers or faculty members), who read frequently and pay more attention to up-to-date work since they are already familiar with their domains.
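The grouping rule and the recency statistic can be sketched as follows. The thresholds (fewer than 4, more than 100) and the year-difference definition come from the text; pooling all readings of a group before taking the share, the inclusive `<= max_gap` boundary, and the `(user, read_year, pub_year)` layout are assumptions, since the text speaks of an "average distribution" without giving the exact aggregation.

```python
from collections import defaultdict


def activity_group(n_read):
    """Group label from the number of papers a user has read."""
    if n_read < 4:
        return "inactive"
    if n_read > 100:
        return "active"
    return "normal"


def recent_share_by_group(readings, max_gap=5):
    """readings: iterable of (user, read_year, pub_year) tuples (hypothetical layout).

    Returns, per group, the fraction of readings whose recency
    (read_year - pub_year) is at most max_gap years.
    """
    gaps_per_user = defaultdict(list)
    for user, read_year, pub_year in readings:
        gaps_per_user[user].append(read_year - pub_year)

    hits = defaultdict(int)
    totals = defaultdict(int)
    for gaps in gaps_per_user.values():
        group = activity_group(len(gaps))
        totals[group] += len(gaps)
        hits[group] += sum(1 for gap in gaps if gap <= max_gap)
    return {group: hits[group] / totals[group] for group in totals}
```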

4.2 Popularity

Finally, we check the popularity of the papers read by different groups of users. Here we define the popularity of a paper as the number of distinct users who have read it. We then depict the average distributions of the popularity of papers read by users in these three groups in Fig. 6. As we can see, most of users’ readings are non-popular papers (i.e., papers with popularity less than 5). However, inactive users seem more likely to read popular papers than normal and active users. Specifically, on average more than \(23.58\%\) of readings are papers with popularity larger than 5 for inactive users, while the corresponding percentage is \(15.19\%\) for normal users and \(11.25\%\) for active users. The results indicate that recommending popular papers would be more effective for inactive users than for normal and active users.
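The popularity definition above (number of distinct readers per paper) translates directly into code. The sketch below assumes readings are given as `(user, paper)` pairs; the helper names are illustrative, and the threshold of 5 follows the text.

```python
from collections import defaultdict


def paper_popularity(readings):
    """readings: iterable of (user, paper); popularity = number of distinct readers."""
    readers = defaultdict(set)
    for user, paper in readings:
        readers[paper].add(user)
    return {paper: len(users) for paper, users in readers.items()}


def popular_share(group_readings, popularity, threshold=5):
    """Fraction of the given readings whose paper has popularity above threshold."""
    papers = [paper for _, paper in group_readings]
    return sum(1 for p in papers if popularity[p] > threshold) / len(papers)
```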

5 Sequence-Based Analysis

In literature recommendation, one aims to recommend a set of papers to each user based on his/her historical behaviors. In real recommender systems, a common practice is to score a proper candidate set rather than the entire academic repository. Therefore, it is of great importance to generate the candidate set effectively. In this section, we conduct a sequence-based analysis over users’ reading behaviors to investigate how a user’s future reading correlates with his/her reading history.

Specifically, we partition each user’s readings into two parts according to the time stamp, and take the latest paper read by the user as the future reading and the rest as the historical readings. We then examine the connections between these two parts from both the content-based filtering and collaborative filtering views. We aim to investigate whether these filtering methods can generate proper candidate sets covering the future readings.

5.1 Coverage of Content-Based Filtering

Firstly, we investigate whether the content-based filtering can generate the candidate set with good coverage over users’ future readings. Given users’ reading history, we analyze the following five ways for candidate generation based on the meta-data of the historical readings.

- Co-venue: users would like to follow the same venues of the historical readings to find the next reading.

- Co-author: users would like to follow the same authors in the historical readings to find the next reading.

- Co-keyword: users would like to use the same keywords in the historical readings to find the next reading.

- Ref & Co-ref: users would like to follow the references of the historical readings to find the next reading. Note here we take the direct references of the historical readings, as well as papers that share at least one same reference of the historical readings (co-reference) as the candidates.

- Cite & Co-cite: users would like to follow the citations of the historical readings to find the next reading. Note here we take the direct citations of the historical readings, as well as papers that share at least one same citation of the historical readings (co-citation) as the candidates.

For each way of candidate generation, we calculate the coverage ratio as follows

\[ R_{cover} = \frac{\#(Hit)}{\#(User)} \qquad (1) \]

where \(\#(Hit)\) denotes the number of users whose future reading is within the generated candidate set, and \(\#(User)\) denotes the total number of users in the calculation. The analysis is conducted over the different groups of users as well as over all users, and the results are depicted in Fig. 7. From the results we observe that: (1) The coverage ratio increases with the richness of users’ historical readings. For example, the coverage ratio with all five ways on inactive users is only \(43.7\%\), while that on active users is about \(96.1\%\). (2) Among the five ways, Co-venue and Co-keyword cover more users’ future readings than the others. This is not surprising since the candidate sets generated in these two ways are much larger than the others; we discuss this further in Sect. 5.3. (3) With all five candidate generation methods combined, the coverage ratio over all users reaches 52.5%, which represents the upper bound of the user coverage of the content-based filtering methods. In other words, for about 47.5% of users the future reading cannot even be included in the candidate sets from content-based filtering methods.
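The split into history and future reading and the coverage ratio, i.e. the number of hit users divided by the number of users, can be sketched as follows for the metadata-sharing strategies (Co-venue, Co-author, Co-keyword). The `metadata` dictionary layout, the exclusion of already-read papers from the candidates, and the skipping of users with fewer than two readings are assumptions made for this illustration.

```python
def split_history_future(readings):
    """readings: list of (timestamp, paper_id) for one user, with at least two entries."""
    ordered = sorted(readings)
    history = [paper for _, paper in ordered[:-1]]
    future = ordered[-1][1]  # the latest reading is treated as the future reading
    return history, future


def candidates_by_shared_field(history, metadata, field):
    """Papers sharing at least one value of `field` with any historical reading."""
    seen = set()
    for paper in history:
        seen |= set(metadata[paper][field])
    shared = {p for p, meta in metadata.items() if seen & set(meta[field])}
    return shared - set(history)  # assumption: already-read papers are not candidates


def coverage_ratio(user_readings, metadata, field):
    """#(Hit) / #(User) over users with at least one historical reading."""
    hits = users = 0
    for readings in user_readings.values():
        if len(readings) < 2:
            continue  # assumption: no history means the user is left out
        history, future = split_history_future(readings)
        users += 1
        if future in candidates_by_shared_field(history, metadata, field):
            hits += 1
    return hits / users
```

The reference-based and citation-based strategies would follow the same pattern, with the candidate set built from a citation graph instead of a shared metadata field.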

5.2 Coverage of Collaborative Filtering

Another widely used recommendation method is collaborative filtering (CF), which can be further categorized as user-based CF and item-based CF. Here we analyze the coverage ratio of users over these two types of CF methods.

Specifically, for each CF method, we vary the number of nearest neighbors to see whether a user’s future reading is within the corresponding candidate set generated by the CF method. Note that Jaccard similarity is used to compute the similarity between users/items, since the user-item relation matrix is typically binary. We depict the coverage ratio over different groups of users and over all users in Fig. 8.

We make the following observations from the results: (1) When the neighbor size is small, the coverage ratio of item-based CF is much better than that of user-based CF. However, when taking all the neighbors into account, user-based CF gets better coverage since its neighbor size is much larger than that of item-based CF. (2) The coverage ratio of user-based CF is higher than that of item-based CF for inactive users but lower for normal and active users. Overall, user-based CF wins by a small margin since there are more inactive users. (3) The overall coverage ratio with both types of CF methods reaches \(32.25\%\), leaving a large proportion of users entirely uncovered.
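Candidate generation with user-based CF under Jaccard similarity can be sketched as below. The input layout (a dictionary from user to the set of papers in that user's history) and the choice to ignore neighbors with zero similarity are assumptions; item-based CF would be analogous, with papers compared by their reader sets.

```python
def jaccard(a, b):
    """Jaccard similarity of two sets; defined as 0.0 when both are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def user_cf_candidates(target, histories, k):
    """Papers read by the k users most similar to `target`, minus target's own history.

    histories: dict mapping user -> set of papers read (history only).
    """
    mine = histories[target]
    scored = [
        (jaccard(mine, papers), user)
        for user, papers in histories.items()
        if user != target
    ]
    # Keep the k most similar users; users with no overlap are not neighbors.
    neighbours = [user for score, user in sorted(scored, reverse=True)[:k] if score > 0]
    candidates = set()
    for user in neighbours:
        candidates |= histories[user]
    return candidates - mine
```

Increasing `k` grows the candidate set, which mirrors the effect of the neighbor size on coverage discussed above.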

5.3 Integrated Analysis and Discussion

Based on the above analysis, we can see that both content-based filtering and collaborative filtering can cover some proportions of users. We further compared the set of users covered by these two types of methods by computing the Jaccard similarity between them. We find that the Jaccard similarity is \(35.45\%\), indicating that there is some overlap between the user set covered by content-based filtering and collaborative filtering. If we integrate both filtering methods, the overall coverage ratio can reach \(64.68\%\).
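The overlap and the combined coverage reported above are both simple set computations over the users covered by each family of methods. A minimal sketch, assuming the covered users are available as two sets and `n_users` is the total number of users considered:

```python
def overlap_and_union_coverage(covered_a, covered_b, n_users):
    """Jaccard overlap of two covered-user sets and the coverage ratio of their union."""
    union = covered_a | covered_b
    overlap = len(covered_a & covered_b) / len(union) if union else 0.0
    return overlap, len(union) / n_users
```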

As mentioned previously, it is somewhat unfair to compare only the coverage of different methods, since the sizes of the candidate sets generated by different methods vary widely. A method that generates a large candidate set, like Co-venue in content-based filtering, would naturally be able to cover more users. However, a larger and inevitably noisier candidate set increases both the computational complexity and the prediction difficulty. To take these factors into account, here we define the efficiency of a method as follows

where \(R_{cover}\) is the coverage ratio defined in Eq. 1, and \(\bar{R}_{Shrink}\) denotes the average shrinkage ratio of the method with respect to the whole academic repository

where \(\#(Candidate_{i})\) denotes the candidate size for the i-th user generated by the method, \(\#(C)\) denotes the repository size, and the summation is taken over all the users. From the above definition we can see that a method is efficient if it generates a small candidate set but covers a large proportion of users’ future readings.

We compare the efficiency of different methods, as shown in Table 1. We can see that although Co-venue and Co-keyword achieve better coverage ratios, as shown in Fig. 7, they are not the most efficient ways since they generate very large candidate sets. Meanwhile, the efficiencies of the two CF methods are not very high since their coverage ratios are low. The most efficient ways for candidate generation are Cite & Co-cite and Ref & Co-ref, followed by Co-author. For literature recommender systems, efficiency would then be a good reference metric when choosing methods for candidate generation under limited computational resources.

6 Related Work

In this section, we briefly review the related work of academic information seeking behavior analysis and usage of academic access data.

6.1 Academic Information Seeking Behavior Analysis

There have been many previous studies [2, 7, 10, 14, 16, 17, 20] on analyzing academic information seeking behaviors to help design library systems or academic search engines. For example, Niu et al. [14] examined the relationships between scientists’ information-seeking behaviors and their personal and environmental factors. Tenopir et al. [16, 17] analyzed article seeking and reading patterns of academic faculty readers through surveys. They found that the subject discipline of the reader influences many patterns, including the amount of reading, the format of reading, and the average time spent per reading. However, these studies either relied on questionnaires to survey a very limited number of faculty members [10, 14, 16, 17], or only focused on analyzing users’ query patterns based on some search logs [2, 7, 20].

6.2 Usage of Academic Access Data

The public paper access logs from OpenURL have been utilized in different ways for literature recommendation [4, 13, 15, 19]. For example, Pohl et al. [15] used the access data to identify related papers for literature recommendation. BibTip [13] recommended related literature based on the observation of user patterns and the statistical evaluation of the usage data. Although academic access data have been leveraged for literature recommendation, there is little work on analyzing the data to better understand the reading patterns and information needs of academic users.

Usage log data, defined as a collection of individual usage events recorded for a given period of time [12], has drawn a lot of attention in recent years [4, 5, 8, 12, 19]. Bollen et al. [4] presented a technical, standards-based architecture for sharing usage information. They found that the generated relationship networks encode which journals are related in their usage and can be used to recommend documents. Gorraiz et al. [8] focused on the disciplinary differences observed in the behavior of citations and downloads. They pointed out that citations can only measure the impact in the ‘publish or perish’ community.

7 Conclusion

In this paper, we present an in-depth analysis of academic reading behaviors based on large-scale scholarly usage data. We perform global, group-based and sequence-based analyses to unveil the underlying reading patterns and information needs of real-world academic users.

The analysis of user behaviors is a foundation for designing any interactive system. The findings of our work may provide some guidelines for improving existing literature recommender systems as well as designing new ones. As future work, we plan to build a literature recommender system that integrates the factors revealed in this paper, and to verify these conclusions with users’ feedback.

Notes

- 1.

- 2.

- 3. Note here we treat those IPs with extremely high volume of accessed papers (i.e., more than 3000 a year) as portals rather than real users.

References

1. Le Anh, V., Hoang Hai, V., Tran, H.N., Jung, J.J.: SciRecSys: a recommendation system for scientific publication by discovering keyword relationships. In: Hwang, D., Jung, J.J., Nguyen, N.-T. (eds.) ICCCI 2014. LNCS (LNAI), vol. 8733, pp. 72–82. Springer, Cham (2014). doi:10.1007/978-3-319-11289-3_8

2. Asunka, S., Chae, H.S., Hughes, B., Natriello, G.: Understanding academic information seeking habits through analysis of web server log files. J. Acad. Librarianship 35(1), 33–45 (2009)

3. Bogers, T., van den Bosch, A.: Recommending scientific articles using citeulike

4. Bollen, J., de Sompel, H.V.: An architecture for the aggregation and analysis of scholarly usage data. In: Proceedings of ACM/IEEE Joint Conference on Digital Libraries. Chapel Hill, pp. 298–307, 11–15 June 2006

5. Bollen, J., Van de Sompel, H., Rodriguez, M.A.: Towards usage-based impact metrics: first results from the mesur project. JCDL 2008, pp. 231–240. ACM, New York (2008)

6. Clauset, A., Shalizi, C.R., Newman, M.E.J.: Power-law distributions in empirical data. SIAM Review 51(4), 661–703 (2009)

7. Dogan, R.I., Murray, G.C., Névéol, A., Lu, Z.: Understanding PubMed® user search behavior through log analysis. Database, 2009 (2009)

8. Gorraiz, J., Gumpenberger, C., Schlögl, C.: Usage versus citation behaviours in four subject areas. Scientometrics 101(2), 1077–1095 (2014)

9. Han, H., Jeong, W., Wolfram, D.: Log analysis of academic digital library: user query patterns. iConference 2014 Proceedings (2014)

10. Hemminger, B.M., Lu, D., Vaughan, K.T.L., Adams, S.J.: Information seeking behavior of academic scientists. JASIST 58(14), 2205–2225 (2007)

11. Huang, W., Wu, Z., Mitra, P., Giles, C.L.: Refseer: a citation recommendation system. In: JCDL. U.K, pp. 371–374, 8–12 Sep 2014

12. Kurtz, M.J., Bollen, J.: Usage bibliometrics. CoRR, abs/1102.2891 (2011)

13. Mönnich, M., Spiering, M.: Adding value to the library catalog by implementing a recommendation system. D-Lib Magazine 14(5), 4 (2008)

14. Niu, X., Hemminger, B.M.: A study of factors that affect the information-seeking behavior of academic scientists. JASIST 63(2), 336–353 (2012)

15. Pohl, S., Radlinski, F., Joachims, T.: Recommending related papers based on digital library access records. In: JCDL 2007, Proceedings. Vancouver, Canada, pp. 417–418, 18–23 June 2007

16. Tenopir, C., King, D.W., Bush, A.: Medical faculty’s use of print and electronic journals: changes over time and in comparison with scientists. J. Med. Libr. Assoc. 92(2), 233 (2004)

17. Tenopir, C., Volentine, R., King, D.W.: Article and book reading patterns of scholars: findings for publishers. Learn. Publish. 25(4), 279–291 (2012)

18. Torres, R., McNee, S.M., Abel, M., Konstan, J.A., Riedl, J.: Enhancing digital libraries with techlens+. In: ACM/IEEE Joint Conference on Digital Libraries, JCDL 2004, Proceedings. Tucson, pp. 228–236, 7–11 June 2004

19. Vellino, A.: A comparison between usage-based and citation-based methods for recommending scholarly research articles. ASIST 47(1), 1–2 (2010)

20. Villén-Rueda, L., Senso, J.A., de Moya-Anegón, F.: The use of OPAC in a large academic library: a transactional log analysis study of subject searching. J. Acad. Librarianship 33(3), 327–337 (2007)

Acknowledgements

The work was funded by 973 Program of China under Grant No. 2014CB340401, the National Key R&D Program of China under Grant No. 2016QY02D0405, the National Natural Science Foundation of China (NSFC) under Grants No. 61232010, 61472401, 61433014, 61425016, and 61203298, the Key Research Program of the CAS under Grant No. KGZD-EW-T03-2, and the Youth Innovation Promotion Association CAS under Grants No. 20144310 and 2016102.

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Fan, Y., Guo, J., Lan, Y., Xu, J., Cheng, X. (2017). Academic Access Data Analysis for Literature Recommendation. In: Wen, J., Nie, J., Ruan, T., Liu, Y., Qian, T. (eds) Information Retrieval. CCIR 2017. Lecture Notes in Computer Science(), vol 10390. Springer, Cham. https://doi.org/10.1007/978-3-319-68699-8_4

DOI: https://doi.org/10.1007/978-3-319-68699-8_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-68698-1

Online ISBN: 978-3-319-68699-8

eBook Packages: Computer Science, Computer Science (R0)