Abstract

Head-mounted displays (HMDs), while providing unprecedented immersiveness and engagement in interaction, can substantially add to users' mental workload and visual strain. Because the technology is new, users often do not know what to expect and therefore accept visual stress as the state of the art. Assessing visual discomfort is currently possible through questionnaires and interviews, which interrupt the interaction and provide only subjective feedback. Electroencephalography (EEG) can provide insights into the visual discomfort and workload caused by HMDs. In a study with 24 participants, we evaluate the use of a consumer-grade brain-computer interface (BCI) for estimating visual discomfort during HMD use. Our results show that a BCI can detect uncomfortable viewing conditions with a certainty of 83% in our study. Furthermore, the results give insights into how BCIs can be used to increase detection certainty while reducing hardware costs. This can pave the way for designing adaptive virtual reality experiences that consider users' visual fatigue without disrupting immersiveness.


1 Introduction

Visual stress, eye strain, and other symptoms caused by the visual load in a head-mounted display (HMD) have been under research for decades (e.g. [1, 15]). The discomfort stems from the physical and optical properties of the HMD and its eyepieces, or from the mismatch between the computer-rendered picture and natural vision [1, 12, 15]. Symptoms of asthenopia (eye strain) include double vision, prismatic effects, and blurry vision [1, 15]. With the introduction of fully immersive HMDs such as the Oculus Rift to the consumer market, new challenges arise for HMD usability [11]. Without professional guidance during private use, these symptoms can lead to a bad experience or even cause health risks [1].

During HMD use, stress caused by the visual channel is even worse than in traditional screen-based applications. HMD users cannot simply look away from the screen to relax their eyes, and they should not take off the HMD, so as to preserve their sense of presence in the virtual environment (VE). One possible cause of uncomfortable vision is virtual objects appearing very close to the user's eyes. In this case, the large disparity between the left and right eye images and a very strong vergence-accommodation conflict cause the discomfort [10]. The most common method of assessing whether a user experiences visual discomfort is a qualitative questionnaire, as used by Shibata et al. [19], which has the drawbacks of possible misinterpretation and no real-time capability [3]. Frey et al. [3] presented an objective method to assess visual discomfort with a medical-grade electroencephalography (EEG) device in a screen-based setup. Their results show that the brain's reaction to visual discomfort can be detected. In our work, we focus on detecting visual discomfort in a VE using a consumer EEG device with a low number of electrodes. Building on Frey et al.'s earlier work [4], we test the feasibility of automatically detecting visual discomfort with a setup that is wearable and low-cost compared to medical-grade EEG. In a study with 24 participants, we test the impact of near and far object locations in a VE on EEG data and show that visual discomfort can be detected with a certainty of up to 83% using two electrodes.

2 Background and Related Work

Research on the effects of viewing stereoscopic pictures has been ongoing for decades (e.g. [9, 10, 12, 17]), in particular because visual discomfort is a central health issue when using an HMD [1]. The key outcome of this research for our study is that errors in rendering the left and right pictures of the stereo image pair can trigger visual discomfort or even pain. For binocular pictures, the zone of comfortable viewing [3] can be violated, for example when looking at an object that is very close to the user's eyes. This happens because the computer-generated images for the left and right eyes must be disparate to create the binocular perception of depth [10]. Beyond a certain point, the disparity becomes too large and the user's brain can no longer fuse the two images into one.
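To make the growth of disparity concrete, the following sketch computes the vergence angle for a point straight ahead and its difference from the vergence angle at the Rift's focal distance of about 1.3 m (Sect. 3.1). The 63 mm interpupillary distance is an assumed typical adult value, not a measurement from this study, and the geometry ignores the headset's lenses.

```python
import math

IPD_M = 0.063   # assumed interpupillary distance (typical adult value, not from the study)
FOCAL_M = 1.3   # approximate focal distance of the Rift optics (Sect. 3.1)


def vergence_angle_deg(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle between the two lines of sight when both eyes fixate a point straight ahead."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def relative_disparity_deg(distance_m: float, reference_m: float = FOCAL_M) -> float:
    """Disparity of a point relative to a point at the reference (focal) distance.

    Positive values mean the point is nearer than the reference (crossed disparity).
    """
    return vergence_angle_deg(distance_m) - vergence_angle_deg(reference_m)


if __name__ == "__main__":
    for d in (0.1, 0.2, 0.3, 0.4, 1.1, 1.2, 1.3, 1.4):
        print(f"{d:.1f} m: vergence {vergence_angle_deg(d):5.2f} deg, "
              f"relative disparity {relative_disparity_deg(d):+6.2f} deg")
```

Under these assumptions the relative disparity stays below one degree for the far positions but grows much faster than linearly as an object approaches the eyes.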

Several models describe the emergence of this effect, but none describes it to its full extent or accounts for individual differences [10]. Visual discomfort describes the individual feeling of a user under certain visual conditions. Visual fatigue is its counterpart, which can be measured objectively, for example through accommodation power or visual acuity [10]. These measurements require optometric instruments, which cannot be used while wearing an HMD or for detecting visual stress in real time. Furthermore, they do not reflect the individual properties of the user; therefore, questionnaires are used [10]. Questionnaires capture the user's experience and expectations of the technology, but they carry the risk of misinterpretation by the user. Lambooij et al. [10] summarize several different questionnaires, which rate uncomfortable vision and burning or irritation of the eyes, to name but a few. We build upon a questionnaire suggested by Sheedy et al. [18].

With consumer EEG devices entering the market, their use in interface and system evaluation has become more feasible. Monitoring brain activity with consumer EEG is a promising way of detecting visual stress, as it enables near-real-time reaction to the user's visual perception and does not interfere with the user's visual experience in the HMD [4]. Frey et al. [4] showed that a medical-grade EEG device can detect the brain signals evoked by a stereoscopic image on a screen and classify them within a time window of one second. Our focus is on the objective detection of visual discomfort by measuring brain activity in VR, so that individual factors such as predisposition or training are taken into account.

3 Study

Our study is designed to evaluate the use of consumer EEG for detecting visual discomfort in VR. Similar to Frey et al. [3], we use Shibata et al.'s [19] estimation of comfortable (C) and non-comfortable (NC) depths to position objects in the VE.

3.1 Apparatus

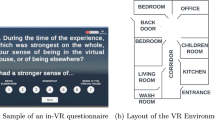

We used an Oculus Rift CV1, with content rendered in Unity 5.4.1f1 at a minimum of 90 frames per second. The focal point of the Rift's optics is calculated to be about 1.3 m away from the user's eyes [16]. EEG signals were acquired with the Emotiv EPOC at a sampling rate of 128 Hz (Fig. 1, left). The EPOC has 14 felt electrodes positioned according to the 10–20 system (e.g. [7]).
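Since the later analysis singles out the parietal pair P7/P8 and the occipital pair O1/O2, it can help to fix the EPOC's channel layout in code. The sketch below assumes the channel order commonly found in Emotiv EPOC exports and a simplified prefix-to-region mapping; both are assumptions to check against the header of an actual recording.

```python
# Assumed channel order of common Emotiv EPOC exports (verify against your own file).
EPOC_CHANNELS = ("AF3", "F7", "F3", "FC5", "T7", "P7", "O1",
                 "O2", "P8", "T8", "FC6", "F4", "F8", "AF4")
FS_HZ = 128  # EPOC sampling rate given in the text

# Coarse, simplified mapping from 10-20 label prefix to brain region.
REGION_BY_PREFIX = {
    "AF": "frontal", "F": "frontal", "FC": "fronto-central",
    "T": "temporal", "P": "parietal", "O": "occipital",
}


def region(label: str) -> str:
    """Return the coarse region of a 10-20 label such as 'P7' or 'O2'."""
    return REGION_BY_PREFIX[label.rstrip("0123456789")]


def channel_indices(wanted, channels=EPOC_CHANNELS):
    """Row indices of the wanted electrodes in a (n_channels, n_samples) array."""
    return [channels.index(name) for name in wanted]


def seconds_to_samples(seconds: float, fs: int = FS_HZ) -> int:
    """Convert a duration in seconds to the nearest whole number of samples."""
    return int(round(seconds * fs))


PARIETAL_PAIR = channel_indices(("P7", "P8"))   # [5, 8]
OCCIPITAL_PAIR = channel_indices(("O1", "O2"))  # [6, 7]
```

At 128 Hz, the 0.7 s pre-stimulus part of an epoch corresponds to 90 samples and the 2.7 s post-stimulus part to 346 samples.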

3.2 Stimuli

To generate visual stress, we built upon the method suggested by Frey et al. [3], which uses the vergence-accommodation conflict (VAC) to stimulate the visual system. Our independent variable is therefore the spatial position of objects in front of the user's eyes, which determines the visual disparity that leads to visual discomfort. Objects are presented to the participant at six different depth levels (Fig. 1, right) and are scaled so that they are perceived as constant in size at all depth levels. Three of these levels lie in the zone of visual comfort (C) and three in the zone of visual discomfort (NC). According to this calculation, only objects presented in the discomfort zone are expected to create visual stress [19], and the calculated boundary matches the general advice of 0.75 m in the Oculus Rift developer guide [16]. As shown in Fig. 1, object positions change in increments of 0.1 m, with NC conditions between 0.1 m and 0.4 m and C conditions between 1.1 m and 1.4 m in front of the user's eyes. The VAC-neutral position at 1.3 m defines the depth at which vergence and accommodation do not differ. The presented objects (ball, cylinder, and cube) appear at random depth levels, at random X and Y positions in front of the user, and with random rotations, each for a random duration between 2.7 s and 3.2 s.
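The depth manipulation can be expressed in diopters. The sketch below computes the VAC as the difference between vergence demand (1 / object distance) and accommodation demand (1 / focal distance), and derives a zone of comfort from linear boundaries of the form D_v = (D_f - T) / m. The constants M_NEAR, T_NEAR, M_FAR and T_FAR are assumed values as we recall them from Shibata et al.'s zone-of-comfort fit; they are not stated in this paper and should be checked against Shibata et al.'s publications before use. The last helper keeps an object's visual angle constant by scaling it in proportion to its distance, which is one straightforward way to obtain the constant perceived size described above.

```python
import math

FOCAL_M = 1.3  # VAC-neutral distance of the Rift optics (from the text)

# ASSUMED zone-of-comfort constants (slope m, intercept T in diopters), recalled from
# Shibata et al.'s fit; not given in this paper, verify before relying on them.
M_NEAR, T_NEAR = 1.035, -0.626
M_FAR, T_FAR = 1.129, 0.442


def vac_diopters(object_m: float, focal_m: float = FOCAL_M) -> float:
    """Vergence demand minus accommodation demand, in diopters (1/m)."""
    return 1.0 / object_m - 1.0 / focal_m


def comfort_zone_m(focal_m: float = FOCAL_M):
    """Nearest and farthest comfortable vergence distances (m) for a focal distance."""
    d_f = 1.0 / focal_m
    near_d = (d_f - T_NEAR) / M_NEAR   # vergence distance in diopters at the near border
    far_d = (d_f - T_FAR) / M_FAR      # vergence distance in diopters at the far border
    far_m = math.inf if far_d <= 0 else 1.0 / far_d
    return 1.0 / near_d, far_m


def is_comfortable(object_m: float, focal_m: float = FOCAL_M) -> bool:
    """True if the object distance lies inside the (assumed) zone of comfort."""
    near_m, far_m = comfort_zone_m(focal_m)
    return near_m <= object_m <= far_m


def constant_angle_scale(object_m: float, base_scale: float = 1.0,
                         reference_m: float = FOCAL_M) -> float:
    """Scale factor that keeps an object's visual angle constant across depths."""
    return base_scale * object_m / reference_m
```

With these assumed constants, comfort_zone_m() places the near border at roughly 0.74 m for a 1.3 m focal distance, close to the 0.75 m advice cited above.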

3.3 Measures

We collected subjective measures during a questionnaire phase to confirm existing findings, and recorded EEG data with the EPOC during a measurement phase. The two phases alternated, each being conducted three times. Both phases are described in detail below.

Questionnaire phase - subjective rating of stimulus position: In this phase, participants rated each stimulus on a 7-point Likert scale with the anchors none, slight, medium, and severe; intermediate stages such as none to slight were also allowed. For easier handling during the study, the items suggested by Sheedy et al. [18] were clustered into three questions: "Do your eyes feel impaired? For example: burning, aching, irritation, watery or dry?", "Is your vision impaired? For example: blurred or double?" and "How much headache do you feel?". The individual items were rated whenever the answer to a clustered question deviated from none. While the questions were asked, a cube of a contrasting color was shown at the VAC-neutral distance (Fig. 1). Each participant rated all six comfort levels three times.

Measurement phase - EEG recording with accompanying attention task: EEG was recorded during the measurement phase while the random object was presented. Participants then performed an attention task designed to keep them from looking away from the object. The procedure was the same as in the questionnaire phase, but instead of being asked for a subjective rating after the object presentation, participants saw a small green ball appear in the center of view at the VAC-neutral position for 0.7 to 1 s after the stimulus object disappeared. The user's task was to move this ball with a gamepad to the X and Y position of the presented stimulus. Then the next randomized object appeared, until all six comfort levels had been presented. In total, each level was presented 60 times.
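The trial structure of the measurement phase can be sketched as a randomized schedule in which every comfort level appears once per block, with shape, position, rotation and timing drawn at random. The exact six depth values are not fully specified above, so the levels in the example are placeholders; the normalized X/Y range and the rotation range are likewise hypothetical.

```python
import random
from dataclasses import dataclass

SHAPES = ("ball", "cylinder", "cube")


@dataclass
class Trial:
    depth_m: float       # distance of the stimulus from the eyes
    shape: str
    x: float             # horizontal offset, hypothetical normalized units
    y: float             # vertical offset, hypothetical normalized units
    rotation_deg: float
    stimulus_s: float    # how long the object stays visible (2.7-3.2 s)
    cue_s: float         # how long the green ball is shown afterwards (0.7-1.0 s)


def make_block(depth_levels, rng):
    """One block: every depth level exactly once, in random order."""
    order = list(depth_levels)
    rng.shuffle(order)
    return [
        Trial(
            depth_m=d,
            shape=rng.choice(SHAPES),
            x=rng.uniform(-1.0, 1.0),
            y=rng.uniform(-1.0, 1.0),
            rotation_deg=rng.uniform(0.0, 360.0),
            stimulus_s=rng.uniform(2.7, 3.2),
            cue_s=rng.uniform(0.7, 1.0),
        )
        for d in order
    ]


def make_schedule(depth_levels, repetitions, seed=None):
    """Concatenate `repetitions` shuffled blocks so each level appears `repetitions` times."""
    rng = random.Random(seed)
    trials = []
    for _ in range(repetitions):
        trials.extend(make_block(depth_levels, rng))
    return trials


# Placeholder depth levels (hypothetical: three NC and three C values).
EXAMPLE_LEVELS_M = (0.2, 0.3, 0.4, 1.1, 1.2, 1.4)
schedule = make_schedule(EXAMPLE_LEVELS_M, repetitions=60, seed=1)
```

Shuffling whole blocks, rather than drawing depths independently, guarantees the balanced count of 60 presentations per level while keeping the order unpredictable.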

3.4 Participants

We advertised the study through university mailing lists and social media. Twenty-four participants took part in our study (8 female, mean age = 25 years, SD = 5). All participants had normal or corrected-to-normal vision, and none suffered from neurological disorders. 59% of participants had prior experience with HMDs. Participants received 10 Euro for participation. To rule out deficient binocular vision, all participants passed a Titmus ring stereotest with a minimum detectable disparity of 100 arcseconds [20].

3.5 Procedure

Participants were first greeted, and the study procedure and purpose were explained. They then signed informed consent forms and answered demographic questions, and the Titmus stereotest was conducted. We then fitted participants with the Emotiv EPOC and the Oculus Rift. The EPOC was adjusted and its electrodes were moistened with saline solution. The EPOC control panel software was used to ensure that all electrodes achieved excellent connectivity. The interpupillary distance of the HMD was set to the value measured with a pupillometer [2]. Participants practiced the measurement phase until they felt comfortable with the gamepad. Participants completed the simulator sickness questionnaire (SSQ) [8] before and after the study. The study then started with a questionnaire phase followed by a measurement phase; the two phases alternated, each occurring three times. Between phases, participants were allowed to rest and move their heads. The study took approximately 1 h, of which about 30 min were spent wearing the HMD. It took place in a quiet room with dimmed light.

4 Results

4.1 Subjective Rating of the Comfort Zones

All ratings in the questionnaire phase (none, slight, medium, and severe) were converted into points from 0 to 4. A Wilcoxon signed-rank test showed significantly higher scores for the NC conditions than for the C conditions on the following items: eye discomfort (\(\mathit{Mdn}_{NC} = 10.2\), \(\mathit{Mdn}_{C} = 3.6\), T = 102.00, p < .02) and vision (\(\mathit{Mdn}_{NC} = 12.5\), \(\mathit{Mdn}_{C} = .01\), T = 300.00, p < .01). The difference for the headache item was not significant (p > .125). Overall, the short questionnaire scores were significantly higher in the NC condition (Mdn = 12.5) than in the C condition (Mdn = .01, T = 300.00, p < .01). The total SSQ score was significantly higher after the experiment (Mdn = 13.64) than before it (Mdn = 6.1, T = 245.5, p < .01).
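The paired comparison above can be sketched in a few lines. The function below computes the Wilcoxon signed-rank sums W+ and W- with average ranks for ties and discarded zero differences, plus a two-sided normal-approximation p-value without tie or continuity correction; a full implementation with exact p-values is available as `scipy.stats.wilcoxon`. One consequence worth noting: with n = 24 participants, the largest possible rank sum is 24 · 25 / 2 = 300, so if the reported T is the positive rank sum, T = 300.00 would mean that every participant scored the NC condition higher than the C condition.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Signed-rank sums for paired samples x and y.

    Zero differences are discarded and tied absolute differences get average ranks.
    Returns (w_plus, w_minus, n) where n is the number of non-zero differences.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next absolute difference ties with the current one.
        while end + 1 < len(order) and abs(diffs[order[end + 1]]) == abs(diffs[order[start]]):
            end += 1
        avg_rank = (start + end) / 2.0 + 1.0  # positions are 0-based, ranks 1-based
        for pos in range(start, end + 1):
            ranks[order[pos]] = avg_rank
        start = end + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus, len(diffs)


def normal_approx_p(w_plus, n):
    """Two-sided p-value from the normal approximation (no tie or continuity correction)."""
    if n == 0:
        return 1.0
    mean = n * (n + 1) / 4.0
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (w_plus - mean) / sd
    return math.erfc(abs(z) / math.sqrt(2.0))
```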

4.2 EEG Analysis

EEG analysis was done with the EEGLAB v14 toolbox and MATLAB R2017a. We first applied a band-pass filter between 0.5 Hz and 25 Hz to remove DC drift and high-frequency artifacts from muscle movements. Independent component analysis (ICA) was then applied to identify components containing eye movements; the identified muscle and eye-movement components were rejected, and the rest of the analysis used the remaining components. The data were then divided into epochs starting 0.7 s before and ending 2.7 s after each stimulus onset. This resulted in 198 epochs per participant and 4752 epochs overall. As suggested by Ghaderi et al. [5], electrooculographic activity was identified with the ADJUST toolbox and removed. Epochs in which any electrode showed a peak-to-peak amplitude of more than 150\(\upmu \)V were regarded as noisy and rejected [13].

Figure 2 shows the average EEG activity in microvolts for all participants in the NC and C conditions across all 14 electrodes just before, during, and after stimulus onset (at 0 ms). The red graph represents the event-related potentials (ERPs) of the NC conditions and the green graph the ERPs of the C conditions. Both graphs develop similarly but diverge between 500 ms and 1400 ms. Both show a similar peak at 300 ms, representing the P300 component, and both start rising to this peak at the same time; however, the NC graph drops later. Our analysis shows that electrodes P7, P8, O1, and O2 exhibit the strongest responses.
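The epoching and rejection steps can be sketched with NumPy. This is not the EEGLAB pipeline used in the study: the band-pass is a simple FFT bin-zeroing filter swapped in for illustration, and the ICA and ADJUST artifact removal is omitted. The epoch window (0.7 s before to 2.7 s after onset), the 128 Hz sampling rate, and the 150 microvolt peak-to-peak threshold follow the text.

```python
import numpy as np

FS = 128                   # EPOC sampling rate (Hz)
PRE_S, POST_S = 0.7, 2.7   # epoch window around stimulus onset, as in the text
REJECT_UV = 150.0          # peak-to-peak rejection threshold (microvolts)


def fft_bandpass(data, fs=FS, low=0.5, high=25.0):
    """Zero-phase band-pass by zeroing FFT bins outside [low, high] Hz.

    A crude stand-in for EEGLAB's FIR filtering; data has shape (n_channels, n_samples).
    """
    n = data.shape[-1]
    spectrum = np.fft.rfft(data, axis=-1)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spectrum[..., (freqs < low) | (freqs > high)] = 0.0
    return np.fft.irfft(spectrum, n=n, axis=-1)


def make_epochs(data, onsets, fs=FS, pre=PRE_S, post=POST_S):
    """Cut windows around onset sample indices -> (n_epochs, n_channels, n_times).

    Onsets too close to the edges of the recording are skipped.
    """
    n_pre, n_post = int(round(pre * fs)), int(round(post * fs))
    windows = [data[:, s - n_pre:s + n_post] for s in onsets
               if s - n_pre >= 0 and s + n_post <= data.shape[-1]]
    return np.stack(windows)


def reject_peak_to_peak(epochs, threshold=REJECT_UV):
    """Drop every epoch in which any channel's max-min amplitude exceeds the threshold."""
    ptp = epochs.max(axis=-1) - epochs.min(axis=-1)  # shape (n_epochs, n_channels)
    return epochs[(ptp <= threshold).all(axis=1)]


def average_erp(epochs):
    """Event-related potential: mean over epochs -> (n_channels, n_times)."""
    return epochs.mean(axis=0)
```

Each epoch then spans 90 + 346 = 436 samples; averaging the surviving epochs per condition yields the ERPs compared in the figures.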

Fig. 3. ERPs of perception-related brain areas (electrodes P7 and P8 [6]) in NC and C conditions; stimulus onset at 0 ms.

Figure 3 shows the ERPs for C and NC conditions at electrodes P7 and P8 over the parietal lobe, which is associated with perception [6]. A steep peak at 300 ms is followed by a more gradual rise with a maximum at about 550 ms and a slow descent that returns to baseline at 1000 ms. The NC graph shows minor peaks in the first 300 ms, whereas the C graph drops below baseline. Overall, the NC graph lies at a higher level than the C graph between 0 ms and 1000 ms.

Figure 4 shows the ERPs for C and NC conditions at the two electrodes O1 and O2 over the occipital lobe, which is related to vision [6]. Both graphs show peaks at 300 ms and around 500 ms. The first peak, at 300 ms, reaches a higher level in the C condition than in the NC condition. The second peak, around 500 ms, is reached more steeply in the C condition than in the NC condition, and the C graph returns to baseline faster than the NC graph. Based on this delay of the peak arising around 500 ms, we built a binary classifier. The classifier compares the values in the interval from 547 ms to 570 ms with those in the interval from 586 ms to 609 ms after stimulus onset. Based on the average values in these intervals, it decides whether a participant's ERP stems from NC or C conditions. Using data from electrodes O1 and O2, the classifier correctly classified 71% of C conditions and 83% of NC conditions.
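The window comparison can be sketched as follows. The text does not state the exact decision rule, so the rule here is a hypothetical reading of the delayed NC peak: an ERP is labeled NC if its mean amplitude in the later window (586–609 ms) is at least as high as in the earlier window (547–570 ms), i.e. the peak is still rising, and C otherwise. The epoch start of -0.7 s and the 128 Hz rate follow the preprocessing description, and the input is assumed to contain only the O1/O2 rows of an averaged ERP.

```python
import numpy as np

FS = 128                  # sampling rate (Hz)
EPOCH_START_S = -0.7      # epochs start 0.7 s before stimulus onset
EARLY_WIN_S = (0.547, 0.570)
LATE_WIN_S = (0.586, 0.609)


def window_mean(erp, window_s, fs=FS, t0=EPOCH_START_S):
    """Mean amplitude of a (n_channels, n_times) ERP inside a time window, pooled over channels."""
    times = t0 + np.arange(erp.shape[-1]) / fs
    mask = (times >= window_s[0]) & (times <= window_s[1])
    return float(erp[..., mask].mean())


def classify_erp(erp):
    """Hypothetical rule: 'NC' if the later window is at least as high as the earlier one."""
    return "NC" if window_mean(erp, LATE_WIN_S) >= window_mean(erp, EARLY_WIN_S) else "C"


def per_class_accuracy(y_true, y_pred):
    """Fraction of correctly labeled cases for each true class."""
    result = {}
    for label in sorted(set(y_true)):
        hits = [p == label for t, p in zip(y_true, y_pred) if t == label]
        result[label] = sum(hits) / len(hits)
    return result
```

At 128 Hz each window covers only about three samples, so in practice the rule is sensitive to noise and benefits from averaging over many epochs before classification.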

Fig. 4. ERPs of vision-related brain areas (electrodes O1 and O2 [6]) in NC and C conditions; stimulus onset at 0 ms. The graphs diverge between 300 ms and 900 ms.

5 Limitations

The SSQ showed that user discomfort increased from before to after the experiment. This might have negatively affected the ratings in the later questionnaire phases, especially the ratings of the C zone. However, as reported above, we were still able to detect a difference with high certainty, which is important when using a BCI during the development or use of an HMD experience. Whether our results hold in a field setting, where movement and the content of the experience come into play, needs to be examined in future work.

6 Discussion

Our study indicates that consumer BCIs can be used to objectively detect visual stress during HMD use and to distinguish comfortable from uncomfortable viewing conditions. The questionnaire results confirmed the existing literature on calculating zones of comfort and discomfort for stereoscopic images [19]: participants reported more visual discomfort in the NC condition than in the C condition. In the NC condition, symptoms worsened the closer the object appeared, from eye strain to double vision in which participants could no longer fuse the stereoscopic picture. This suggests that the EEG differences we measured between conditions reflect the users' actual experience. The EEG analysis gives promising insights into detecting visual stress by monitoring either the parietal or the occipital lobe with two electrodes. The classification rates of 71% for the C and 83% for the NC condition when using electrodes O1 and O2 [6] support the applicability of a BCI for detecting visual stress within an HMD. Furthermore, needing only two electrodes makes the setup easy to wear, as the electrodes might be integrated into the head straps of an HMD. It also makes it a relatively low-cost tool that does not interfere with the user's experience. The signals monitored in the two brain regions react differently to the presented stimuli. The perception-related areas react later in the NC than in the C condition, at about 500 to 700 ms, which might be explained by the higher cognitive workload required in the NC condition [14]. The vision-related areas react to the stimuli between 300 and 500 ms with values approximately 1\(\upmu \)V higher in the C than in the NC condition. Taken together, this suggests monitoring the visual areas of the brain for faster detection, while monitoring the perception-related areas may increase detection certainty. The approach of cleaning the data and classifying the detected signal is simple and fast enough to be used in real time during HMD use. Therefore, scientists and practitioners could extend our system into a tool that detects visual discomfort and adapts virtual environments during the runtime of an HMD experience.
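As an illustration of such runtime adaptation, the hypothetical hook below smooths a stream of C/NC labels over a sliding window and, when discomfort is flagged, pushes the nearest allowed object distance out to the 0.75 m border cited in Sect. 3.2. Applying the classifier to single trials or short running averages rather than to a participant's full ERP is an assumption this study does not test, and the window size and threshold are arbitrary placeholders.

```python
from collections import deque


class DiscomfortMonitor:
    """Hypothetical runtime hook: smooths a stream of 'C'/'NC' labels over a sliding window."""

    def __init__(self, window: int = 10, threshold: float = 0.5):
        self.labels = deque(maxlen=window)  # only the most recent `window` labels are kept
        self.threshold = threshold

    def nc_share(self) -> float:
        """Fraction of recent labels that are 'NC' (0.0 before any label arrives)."""
        if not self.labels:
            return 0.0
        return sum(label == "NC" for label in self.labels) / len(self.labels)

    def update(self, label: str) -> bool:
        """Add a new label and report whether discomfort is currently flagged."""
        self.labels.append(label)
        return self.nc_share() >= self.threshold


def adapt_min_distance(current_min_m: float, flagged: bool, comfort_near_m: float = 0.75) -> float:
    """Push the nearest allowed object distance out to the comfort border when flagged."""
    return max(current_min_m, comfort_near_m) if flagged else current_min_m
```

Smoothing over several labels trades reaction time for robustness: a single misclassified trial does not immediately change the scene.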

7 Conclusion and Future Work

We showed that consumer BCIs can detect visual stress in HMD users with two electrodes and up to 83% accuracy. In future work, we will test the system in more natural virtual reality experiences and examine other factors causing visual stress, such as blurred images. In addition, we will investigate increasing the classification accuracy by combining occipital and parietal lobe electrodes and by using more sophisticated machine learning classifiers.


References

[1] Costello, P.J.: Health and safety issues associated with virtual reality - a review of current literature. Advisory Group Comput. Graph. 37, 371–375 (1997)

[2] Essilor Instruments USA: The corneal reflection pupillometer for precise PD measurements (2017). http://www.essilorinstrumentsusa.com/DispensingArea/MeasurementDispensingTools/Pages/Pupillometer.aspx

[3] Frey, J., Appriou, A., Lotte, F., Hachet, M.: Estimating visual comfort in stereoscopic displays using electroencephalography: a proof-of-concept (2015). arXiv:1505.07783

[4] Frey, J., Appriou, A., Lotte, F., Hachet, M.: Classifying EEG signals during stereoscopic visualization to estimate visual comfort. Comput. Intell. Neurosci. 2016, 7:1–7:7 (2016)

[5] Ghaderi, F., Kim, S.K., Kirchner, E.A.: Effects of eye artifact removal methods on single trial P300 detection, a comparative study. J. Neurosci. Methods 221, 41–47 (2014)

[6] Jasper, H.H.: The ten twenty electrode system of the international federation. Electroencephalogr. Clin. Neurophysiol. 10, 371–375 (1958)

[7] Jurcak, V., Tsuzuki, D., Dan, I.: 10/20, 10/10, and 10/5 systems revisited: their validity as relative head-surface-based positioning systems. NeuroImage 34(4), 1600–1611 (2007)

[8] Kennedy, R.S., Lane, N.E., Berbaum, K.S., Lilienthal, M.G.: Simulator sickness questionnaire: an enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 3(3), 203–220 (1993)

[9] Kooi, F.L., Toet, A.: Visual comfort of binocular and 3D displays. Displays 25(2), 99–108 (2004)

[10] Lambooij, M., Fortuin, M., Heynderickx, I.: Visual discomfort and visual fatigue of stereoscopic displays: a review. J. Imaging Sci. Technol. 53(3), 030201–030214 (2009)

[11] Lamkin, P.: The best VR headsets: the virtual reality race is on (2017). https://www.wareable.com/vr/best-vr-headsets-2017

[12] Menozzi, M.: Visual ergonomics of head-mounted displays. Jpn. Psychol. Res. 42(4), 213–221 (2000)

[13] MNE Developers: Rejecting bad data (channels and segments) (2017). http://martinos.org/mne/dev/auto_tutorials/plot_artifacts_correction_rejection.html

[14] Mun, S., Park, M.C., Yano, S.: Evaluation of viewing experiences induced by curved 3D display. In: Proceedings of SPIE, vol. 9495 (2015)

[15] Nichols, S., Patel, H.: Health and safety implications of virtual reality: a review of empirical evidence. Appl. Ergon. 33, 251–271 (2002)

[16] Oculus VR Inc.: Binocular vision, stereoscopic imaging and depth cues (2017). https://developer3.oculus.com/documentation/intro-vr/latest/concepts/bp_app_imaging/

[17] Patterson, R., Winterbottom, M.D., Pierce, B.J.: Perceptual issues in the use of head-mounted visual displays. Hum. Factors 48(3), 555–573 (2006)

[18] Sheedy, J.E., Hayes, J.N., Engle, J.: Is all asthenopia the same? Optom. Vis. Sci. 80(11), 732–739 (2003)

[19] Shibata, T., Kim, J., Hoffman, D.M., Banks, M.S.: Visual discomfort with stereo displays: effects of viewing distance and direction of vergence-accommodation conflict. In: Proceedings of SPIE, vol. 7863, pp. 78630P–78630P-9 (2011)

[20] Vision Assessment Corporation: Random Dot Stereopsis Test with LEA Symbols® (2016). http://www.visionassessment.com/1005.shtml


Copyright information

© 2017 IFIP International Federation for Information Processing

About this paper

Cite this paper

Mai, C., Hassib, M., Königbauer, R. (2017). Estimating Visual Discomfort in Head-Mounted Displays Using Electroencephalography. In: Bernhaupt, R., Dalvi, G., Joshi, A., K. Balkrishan, D., O'Neill, J., Winckler, M. (eds) Human-Computer Interaction – INTERACT 2017. INTERACT 2017. Lecture Notes in Computer Science, vol. 10516. Springer, Cham. https://doi.org/10.1007/978-3-319-68059-0_15


DOI: https://doi.org/10.1007/978-3-319-68059-0_15


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-68058-3

Online ISBN: 978-3-319-68059-0

eBook Packages: Computer Science, Computer Science (R0)