Abstract

Various sources have revealed that cryptographic standards and components have been subverted to undermine the security of users, reigniting research on means to achieve security in presence of such subverted components. In this paper we consider direct anonymous attestation (DAA) in this respect. This standardized protocol allows a computer with the help of an embedded TPM chip to remotely attest that it is in a healthy state. Guaranteeing that different attestations by the same computer cannot be linked was an explicit and important design goal of the standard in order to protect the privacy of the user of the computer. Surprisingly, none of the standardized or otherwise proposed DAA protocols achieves privacy when the TPM is subverted, but they all rely on the honesty of the TPM. As the TPM is a piece of hardware, it is hardly possible to tell whether or not a given TPM follows the specified protocol. In this paper we study this setting and provide a new protocol that achieves privacy also in presence of subverted TPMs.

This work has been supported by the ERC under Grant PERCY #321310.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

1 Introduction

Direct anonymous attestation (DAA) is a cryptographic protocol for a platform consisting of a host and a TPM chip (Trusted Platform Module). The TPM serves as a trust anchor of the platform and anonymously attests either to the host’s current state or some other message chosen by the host. Thus, DAA can be used to convince a communication partner that the platform has not been compromised, i.e., modified by malware. The main design goal of DAA is that such attestations are anonymous, i.e., while a verifier can check that the signature stems from a legitimate platform, it does not learn the identity of the platform, or even recognize that multiple attestations stem from the same platform.

DAA was introduced by Brickell, Camenisch, and Chen [15] for the Trusted Computing Group and was standardized in the TPM 1.2 specification in 2004 [59]. Their paper inspired a large body of work on DAA schemes [9, 16,17,18, 23, 25, 36,37,38, 40], including more efficient schemes using bilinear pairings as well as different security definitions and proofs. One result of these works is the recent TPM 2.0 specification [50, 60] that includes support for multiple pairing-based DAA schemes, two of which are standardized by ISO [49]. Over 500 million TPMs have been sold, making DAA probably the most complex cryptographic scheme that is widely implemented. Recently, the protocol has gotten renewed attention for authentication: An extension of DAA called EPID is used in Intel SGX [41], the most recent development in the area of trusted computing. Further, the FIDO alliance, an industry consortium designing standards for strong user authentication, is in the process of standardizing a specification using DAA to attest that authentication keys are securely stored [21].

The first version of the TPM specification and attestation protocol had received strong criticism from privacy groups and data protection authorities as it imposed linkability and full identification of all attestations. As a consequence, guaranteeing the privacy of the platform, i.e., ensuring that an attestation does not carry any identifier, became an important design criteria for such hardware-based attestation. Indeed, various privacy groups and data protection authorities had been consulted in the design process of DAA.

Trusting Hardware for Privacy? Surprisingly, despite the strong concerns of having to trust a piece of hardware when TPMs and hardware-based attestation were introduced, the problem of privacy-preserving attestation in the presence of fraudulent hardware has not been fully solved yet. The issue is that the original DAA protocol as well as all other DAA protocols crucially rely on the honesty of the entire platform, i.e., host and TPM, for guaranteeing privacy. Clearly, assuming that the host is honest is unavoidable for privacy, as it communicates directly with the outside world and can output any identifying information it wants. However, further requiring that the TPM behaves fully honest and aims to preserve the host’s privacy is an unnecessarily strong assumption and contradicts the initial design goal of not having to trust the TPM.

Even worse, it is impossible to verify this strong assumption as the TPM is a chip that comes with pre-installed software, to which the user only has black-box access. While black-box access might allow one to partly verify the TPM’s functional correctness, it is impossible to validate its privacy guarantees. A compromised TPM manufacturer can ship TPMs that provide seemingly correct outputs, but that are formed in a way that allows dedicated entities (knowing some trapdoor) to trace the user, for instance by encoding an identifier in a nonce that is hashed as part of the attestation signature. It could further encode its secret key in attestations, allowing a fraudulent manufacturer to frame an honest host by signing a statement on behalf of the platform. We stress that such attacks are possible on all current DAA schemes, meaning that, by compromising a TPM manufacturer, all TPMs it produces can be used as mass surveillance devices. The revelations of subverted cryptographic standards [5, 56] and tampered hardware [46] indicate that such attack scenarios are very realistic.

In contrast to the TPM, the host software can be verified by the user, e.g., being compiled from open source, and will likely run on hardware that is not under the control of the TPM manufacturer. Thus, while the honesty of the host is vital for the platform’s privacy and there are means to verify or enforce such honesty, requiring the TPM to be honest is neither necessary nor verifiable.

1.1 Our Contribution

In this paper we address this problem of anonymous attestation without having to trust a piece of hardware, a problem which has been open for more than a decade. We further exhibit a new DAA protocol that provides privacy even if the TPM is subverted. More precisely, our contributions are twofold: we first show how to model subverted parties within the Universal Composability (UC) model and then propose a protocol that is secure against subverted TPMs.

Modeling Subversion Attacks in UC. We modify the UC-functionality of DAA recently proposed by Camenisch, Drijvers, and Lehmann [25] to model the preserved privacy guarantees in the case where the TPM is corrupt and the host remains honest. Modeling corruption in the sense of subverted parties is not straightforward: if the TPM was simply controlled by the adversary, then, using the standard UC corruption model, only very limited privacy can be achieved. The TPM has to see and approve every message it signs but, when corrupted, all these messages are given to the adversary as well. In fact, the adversary will learn which particular TPM is asked to sign which message. That is, the adversary can later recognize a certain TPM attestation via its message, even if the signatures are anonymous.

Modeling corruption of TPMs like this gives the adversary much more power than in reality: even if a TPM is subverted and runs malicious algorithms, it is still embedded into a host who controls all communication with the outside world. Thus, the adversary cannot communicate directly with the TPM, but only via the (honest) host. To model such subversions more accurately, we introduce isolated corruptions in UC. When a TPM is corrupted like this, we allow the ideal-world adversary (simulator) to specify a piece of code that the isolated, yet subverted TPM will run. Other than that, the adversary has no control over the isolated corrupted party, i.e., it cannot directly interact with the isolated TPM and cannot see its state. Thus, the adversary will also not automatically learn anymore which TPM signed which message.

A New DAA Protocol with Optimal Privacy. We further discuss why the existing DAA protocols do not offer privacy when the TPM is corrupt and propose a new DAA protocol which we prove to achieve our strong security definition. In contrast to most existing schemes, we construct our protocol from generic building blocks which yields a more modular design. A core building block are split signatures which allow two entities – in our case the TPM and host – each holding a secret key share to jointly generate signatures. Using such split keys and signatures is a crucial difference compared with all existing schemes, where only the TPM contributed to the attestation key which inherently limits the possible privacy guarantees. We also redesign the overall protocol such that the main part of the attestation, namely proving knowledge of a membership credential on the attestation key, can be done by the host instead of the TPM.

By shifting more responsibility and computations to the host, we do not only increase privacy, but also achieve stronger notions of non-frameability and unforgeability than all previous DAA schemes. Interestingly, this design change also improves the efficiency of the TPM, which is usually the bottleneck in a DAA scheme. In fact, we propose a pairing-based instantiation of our generic protocol which, compared to prior DAA schemes, has the most efficient TPM signing operation. This comes for the price of higher computational costs for the host and verifier. However, we estimate signing and verification times of under 40 ms, which is sufficiently fast for most practical applications.

1.2 Related Work

The idea of combining a piece of tamper-resistant hardware with a user-controlled device was first suggested by Chaum [33] and applied to the context of e-cash by Chaum and Pedersen [34], which got later refined by Cramer and Pedersen [42] and Brands [14]. A user-controlled wallet is required to work with a piece of hardware, the observer, to be able to withdraw and spend e-cash. The wallet ensures the user’s privacy while the observer prevents a user from double-spending his e-cash. Later, Brands in 2000 [13] considered the more general case of user-bound credentials where the user’s secret key is protected by a smart card. Brands proposes to let the user’s host add randomness to the smart card contribution as a protection against subliminal channels. All these works use a blind signature scheme to issue credentials to the observers and hence such credentials can only be used a single time.

Young and Yung further study the protection against subverted cryptographic algorithms with their work on kleptography [62, 63] in the late 1990s. Recently, caused by the revelations of subverted cryptographic standards [5, 56] and tampered hardware [46] as a form of mass-surveillance, this problem has again gained substantial attention.

Subversion-Resilient Cryptography. Bellare et al. [7] provided a formalization of algorithm-substitution attacks and considered the challenge of securely encrypting a message with an encryption algorithm that might be compromised. Here, the corruption is limited to attacks where the subverted party’s behavior is indistinguishable from that of a correct implementation, which models the goal of the adversary to remain undetected. This notion of algorithm-substitution attacks was later applied to signature schemes, with the goal of preserving unforgeability in the presence of a subverted signing algorithm [4].

However, these works on subversion-resilient cryptography crucially rely on honestly generated keys and aim to prevent key or information leakage when the algorithms using these keys get compromised.

Recently, Russell et al. [57, 58] extended this line of work by studying how security can be preserved when all algorithms, including the key generation can be subverted. The authors also propose immunization strategies for a number of primitives such as one-way permutations and signature schemes. The approach of replacing a correct implementation with an indistinguishable yet corrupt one is similar to the approach in our work, and like Russell et al. we allow the subversion of all algorithms, and aim for security (or rather privacy) when the TPM behaves maliciously already when generating the keys.

The DAA protocol studied in this work is more challenging to protect against subversion attacks though, as the signatures produced by the TPM must not only be unforgeable and free of a subliminal channel which could leak the signing key, but also be anonymous and unlinkable, i.e., signatures must not leak any information about the signer even when the key is generated by the adversary. Clearly, allowing the TPM to run subverted keys requires another trusted entity on the user’s side in order to hope for any privacy-protecting operations. The DAA setting naturally satisfies this requirement as it considers a platform to consist of two individual entities: the TPM and the host, where all of TPM’s communication with the outside world is run via the host.

Reverse Firewalls. This two-party setting is similar to the concept of reverse firewalls recently introduced by Mironov and Stephens-Davidowitz [53]. A reverse firewall sits in between a user’s machine and the outside world and guarantees security of a joint cryptographic operation even if the user’s machine has been compromised. Moreover, the firewall-enhanced scheme should maintain the original functionality and security, meaning the part run on the user’s computer must be fully functional and secure on its own without the firewall. Thus, the presence of a reverse firewall can enhance security if the machine is corrupt but is not the source of security itself. This concept has been proven very powerful and manages to circumvent the negative results of resilience against subversion-attacks [39, 43].

The DAA setting we consider in this paper is not as symmetric as a reverse firewall though. While both parties contribute to the unforgeability of attestations, the privacy properties are only achievable if the host is honest. In fact, there is no privacy towards the host, as the host is fully aware of the identity of the embedded TPM. The requirement of privacy-protecting and unlinkable attestation only applies to the final output produced by the host.

Divertible Protocols and Local Adversaries. A long series of related work explores divertible and mediated protocols [3, 11, 20, 54], where a special party called the mediator controls the communication and removes hidden information in messages by rerandomizing them. The host in our protocol resembles the mediator, as it adds randomness to every contribution to the signature from the TPM. However, in our case the host is a normal protocol participant, whereas the mediator’s sole purpose is to control the communication.

Alwen et al. [2] and Canetti and Vald [32] consider local adversaries to model isolated corruptions in the context of multi-party protocols. These works thoroughly formalize the setting of multi-party computations where several parties can be corrupted, but are controlled by different and non-colluding adversaries. In contrast, the focus of this work is to limit the communication channel that the adversary has to the corrupted party itself. We leverage the flexibility of the UC model to define such isolated corruptions.

Generic MPC. Multi-party computation (MPC) was introduced by Yao [61] and allows a set of parties to securely compute any function on private inputs. Although MPC between the host and TPM could solve our problem, a negative result by Katz and Ostrovsky [52] shows that this would require at least five rounds of communication, whereas our tailored solution is much more efficient. Further, none of the existing MPC models considers the type of subverted corruptions that is crucial to our work, i.e., one first would have to extend the existing models and schemes to capture such isolated TPM corruption. This holds in particular for the works that model tamper-proof hardware [48, 51], as therein the hardware is assumed to be “perfect” and unsubvertable.

TPM2.0 Interfaces and Subliminal Channels. Camenisch et al. [22] recently studied the DAA-related interfaces that are provided by hardware modules following the current TPM2.0 specification, and propose a revision to obtain better security and privacy guarantees from such hardware. The current APIs do not allow to prove the unforgeability of the TPM’s parts in the DAA protocols, and provide a static Diffie-Hellman oracle. Fixes to these problems have been proposed, but they create new issues: they enable a fraudulent TPM to encode information into an attestation signature, which could be used to break anonymity or to leak the secret key. This creates a subliminal channel already on the hardware level, which would annihilate any privacy guarantees against malicious TPMs that are achieved on the protocol level. Camenisch et al. address this problem and present a revised set of interfaces that allow for provable security and do not introduce a subliminal channel. Further, two new DAA protocols are presented that can be build from these revised APIs and guarantee privacy even when the hardware is subverted, which is termed strong privacy and builds upon our isolated corruption model. In contrast to our work, the protocols in [22] do not provide privacy against malicious TPMs in the standard corruption model, and the privacy guarantees in the isolated model are slightly weaker than in our optimal privacy definition. We give a brief comparison of strong and optimal privacy in Sect. 2.3 and refer to [22] for a detailed discussion. The protocols proposed in [22] are realizable with only minor modifications to the TPM specification, though, whereas our protocol with optimal privacy would require more significant changes.

2 A Security Model for DAA with Optimal Privacy

This section presents our security definition for anonymous attestation with optimal privacy. First, we informally describe how DAA works and what the desired security and (optimal) privacy properties are. Then we present our formal definition in Sect. 2.1, and describe how it improves upon existing work in Sect. 2.2. Finally, in Sect. 2.3, we elaborate on the inherent limitations the UC framework imposes on privacy in the presence of fully corrupted parties and introduce the concept of isolated corruptions, which allow one to overcome this limitations yet capture the power of subverted TPMs.

High-Level Functional and Security Properties. In a DAA scheme, we have four kinds of entities: a number of TPMs, a number of hosts, an issuer, and a number of verifiers. A TPM and a host together form a platform which performs the join protocol with the issuer who decides if the platform is allowed to become a member. Once being a member, the TPM and host together can sign messages with respect to basenames \( bsn \), where the basename steers the platform’s anonymity. If a platform signs with a fresh basename, the signature must be anonymous and unlinkable to any previous signatures. That is, any verifier can check that the signature stems from a legitimate platform via a deterministic verify algorithm, but the signature does not leak any information about the identity of the signer. However, signatures the platform makes with the same basename can be linked to each other via a (deterministic) link algorithm.

For security, one requires unforgeability: when the issuer is honest, the adversary can only sign in the name of corrupt platforms. More precisely, if n platforms are corrupt, the adversary can forge at most n unlinkable signatures for one basename. By corrupt platform we mean that both the host and TPM are corrupt, and thus a platform is called honest if at least one of the TPM or host is honest. This is in fact stronger than the unforgeability notion covered in all previous definitions which only rely on the honesty of the TPM.

Non-frameability captures the property that no adversary can create signatures on a message m w.r.t. basename \( bsn \) that links to a signature created by a platform with an honest host, when this platform never signed m w.r.t. \( bsn \).

Finally, we require anonymity for attestations. An adversary that is given two signatures, w.r.t. two different basenames cannot determine whether both signatures stem from the same platform. All previous works considered anonymity only for fully honest platforms, i.e., consisting of an honest TPM and honest host, whereas our goal is to guarantee anonymity even if the TPM is corrupt. Note that anonymity can only hold if the host is honest, though, as it has full control over its output and can, e.g., always choose to append its identity to a signature. Thus, the best one can hope for is preserved anonymity when the TPM is corrupt but the host is honest, which is the setting that this work addresses.

Universal Composability. Our security definition has the form of an ideal functionality \(\mathcal {F}_{\mathsf {pdaa}}\) in the Universal Composability (UC) framework [31]. Informally, a protocol \(\varPi \) securely realizes an ideal functionality \(\mathcal {F}\)if the real world is as secure as the ideal world. As \(\mathcal {F}\)performs the task at hand in an ideal fashion, i.e., \(\mathcal {F}\)is secure by construction, there are no meaningful attacks on the ideal world, so there are no meaningful attacks on the real world. More precisely, \(\varPi \) securely realizes \(\mathcal {F}\)if for every adversary \(\mathcal {A}\), there exists a simulator \(\mathcal {S}\) such that no environment \(\mathcal {E}\) can distinguish the real world (with \(\varPi \) and \(\mathcal {A}\)) from the ideal world (with \(\mathcal {F}\)and \(\mathcal {S}\)).

2.1 Ideal Functionality \(\mathcal {F}_{\mathsf {pdaa}}\)

We now formally define our ideal DAA-with-optimal-privacy functionality \(\mathcal {F}_{\mathsf {pdaa}}\), which is based on \(\mathcal {F}_\mathsf {daa}^{l}\) by Camenisch et al. [25]. The crucial difference between the two functionalities is the resilience against corrupt TPMs: \(\mathcal {F}_\mathsf {daa}^{l}\) guarantees anonymity, non-frameability and unforgeability only when both the TPM and the host are honest. Our modified version \(\mathcal {F}_{\mathsf {pdaa}}\) guarantees all properties as long as the host is honest, i.e., even when the TPM is corrupt. We explain these differences in detail in Sect. 2.2. We start by describing the interfaces and guaranteed security properties in an informal manner, and present the detailed definition of \(\mathcal {F}_{\mathsf {pdaa}}\) in Fig. 1.

Setup. The \(\mathsf {SETUP}\) interface on input \( sid = (\mathcal {I}, sid')\) initiates a new session for the issuer \(\mathcal {I} \) and expects the adversary to provide algorithms \((\mathsf {ukgen}, \mathsf {sig}, \mathsf {ver}, \mathsf {link}, \mathsf {identify})\) that will be used inside the functionality. \(\mathsf {ukgen}\) creates a new key \( gsk \) and a tracing trapdoor \(\tau \) that allows \(\mathcal {F}_{\mathsf {pdaa}} \) to trace signatures generated with \( gsk \). \(\mathsf {sig}\), \(\mathsf {ver}\), and \(\mathsf {link}\) are used by \(\mathcal {F}_{\mathsf {pdaa}} \) to create, verify, and link signatures, respectively. Finally, \(\mathsf {identify}\) allows to verify whether a signature belongs to a certain tracing trapdoor. This allows \(\mathcal {F}_{\mathsf {pdaa}}\) to perform multiple consistency checks and enforce the desired non-frameability and unforgeability properties.

Note that the \(\mathsf {ver}\) and \(\mathsf {link}\) algorithms assist the functionality only for signatures that are not generated by \(\mathcal {F}_{\mathsf {pdaa}} \) itself. For signatures generated by the functionality, \(\mathcal {F}_{\mathsf {pdaa}} \) will enforce correct verification and linkage using its internal records. While \(\mathsf {ukgen}\) and \(\mathsf {sig}\) are probabilistic algorithms, the other ones are required to be deterministic. The \(\mathsf {link}\) algorithm also has to be symmetric, i.e., for all inputs it must hold that \(\mathsf {link}(\sigma , m, \sigma ', m', bsn ) \leftrightarrow \mathsf {link}(\sigma ', m', \sigma , m, bsn )\).

Join. A host \(\mathcal {H} _j\) can request to join with a TPM \(\mathcal {M} _i\) using the \(\mathsf {JOIN}\) interface. If both the TPM and the issuer approve the join request, the functionality stores an internal membership record for \(\mathcal {M} _i, \mathcal {H} _j\) in \(\texttt {Members} \) indicating that from now on that platform is allowed to create attestations.

If the host is corrupt, the adversary must provide \(\mathcal {F}_{\mathsf {pdaa}}\) with a tracing trapdoor \(\tau \). This value is stored along in the membership record and allows the functionality to check via the \(\mathsf {identify}\) function whether signatures were created by this platform. \(\mathcal {F}_{\mathsf {pdaa}}\) uses these checks to ensure non-frameability and unforgeability whenever it creates or verifies signatures. To ensure that the adversary cannot provide bad trapdoors that would break the completeness or non-frameability properties, \(\mathcal {F}_{\mathsf {pdaa}}\) checks the legitimacy of \(\tau \) via the “macro” function \(\mathsf {CheckTtdCorrupt}\). This function checks that for all previously generated or verified signatures for which \(\mathcal {F}_{\mathsf {pdaa}}\) has already seen another matching tracing trapdoor \(\tau ' \ne \tau \), the new trapdoor \(\tau \) is not identified as a matching key as well. The detailed definition is given in the full version of this paper [24].

Sign. After joining, a host \(\mathcal {H} _j\) can request a signature on a message m with respect to basename \( bsn \) using the \(\mathsf {SIGN}\) interface. The signature will only be created when the TPM \(\mathcal {M} _i\) explicitly agrees to signing m w.r.t. \( bsn \) and a join record for \(\mathcal {M} _i, \mathcal {H} _j\) in \(\texttt {Members} \) exists (if the issuer is honest).

When a platform wants to sign message m w.r.t. a fresh basename \( bsn \), \(\mathcal {F}_{\mathsf {pdaa}}\) generates a new key \( gsk \) (and tracing trapdoor \(\tau \)) via \(\mathsf {ukgen}\) and then signs m with that key. The functionality also stores the fresh key \(( gsk , \tau )\) together with \( bsn \) in \(\texttt {DomainKeys} \), and reuses the same key when the platform wishes to sign repeatedly under the same basename. Using fresh keys for every signature naturally enforces the desired privacy guarantees: the signature algorithm does not receive any identifying information as input, and thus the created signatures are guaranteed to be anonymous (or pseudonymous in case \( bsn \) is reused).

Our functionality enforces this privacy property whenever the host is honest. Note, however, that \(\mathcal {F}_{\mathsf {pdaa}}\) does not behave differently when the host is corrupt, as in this case its output does not matter due to way corruptions are handled in UC. That is, \(\mathcal {F}_{\mathsf {pdaa}}\) always outputs anonymous signatures to the host, but if the host is corrupt, the signature is given to the adversary, who can choose to discard it and output anything else instead.

To guarantee non-frameability and completeness, our functionality further checks that every freshly generated key, tracing trapdoor and signature does not falsely match with any existing signature or key. More precisely, \(\mathcal {F}_{\mathsf {pdaa}}\) first uses the \(\mathsf {CheckTtdHonest}\) macro to verify whether the new key does not match to any existing signature. (The detailed definition of \(\mathsf {CheckTtdHonest}\) is given in the full version of this paper [24].) Likewise, before outputting \(\sigma \), the functionality checks that no one else already has a key which would match this newly generated signature.

Finally, for ensuring unforgeability, the signed message, basename, and platform are stored in \({\texttt {Signed}}\) which will be used when verifying signatures.

Verify. Signatures can be verified by any party using the \(\mathsf {VERIFY}\) interface. \(\mathcal {F}_{\mathsf {pdaa}}\) uses its internal \({\texttt {Signed}}\), \(\texttt {Members} \), and \(\texttt {DomainKeys} \) records to enforce unforgeability and non-frameability. It uses the tracing trapdoors \(\tau \) stored in \(\texttt {Members} \) and \(\texttt {DomainKeys} \) to find out which platform created this signature. If no match is found and the issuer is honest, the signature is a forgery and rejected by \(\mathcal {F}_{\mathsf {pdaa}}\). If the signature to be verified matches the tracing trapdoor of some platform with an honest TPM or host, but the signing records do not show that they signed this message w.r.t. the basename, \(\mathcal {F}_{\mathsf {pdaa}}\) again considers this to be a forgery and rejects. If the records do not reveal any issues with the signature, \(\mathcal {F}_{\mathsf {pdaa}}\) uses the \(\mathsf {ver}\) algorithm to obtain the final result.

The verify interface also supports verifier-local revocation. The verifier can input a revocation list \(\texttt {RL} \) containing tracing trapdoors, and signatures matching any of those trapdoors are no longer accepted.

Link. Using the \(\mathsf {LINK}\) interface, any party can check whether two signatures \((\sigma , \sigma ')\) on messages \((m, m')\) respectively, generated with the same basename \( bsn \) originate from the same platform or not. \(\mathcal {F}_{\mathsf {pdaa}}\) again uses the tracing trapdoors \(\tau \) stored in \(\texttt {Members} \) and \(\texttt {DomainKeys} \) to check which platforms created the two signatures. If they are the same, \(\mathcal {F}_{\mathsf {pdaa}}\) outputs that they are linked. If it finds a platform that signed one, but not the other, it outputs that they are unlinked, which prevents framing of platforms with an honest host.

The full definition of \(\mathcal {F}_{\mathsf {pdaa}} \) is given in Fig. 1. Note that when \(\mathcal {F}_{\mathsf {pdaa}}\) runs one of the algorithms \(\mathsf {sig}\), \(\mathsf {ver}\), \(\mathsf {identify}\), \(\mathsf {link}\), and \(\mathsf {ukgen}\), it does so without maintaining state. This means all user keys have the same distribution, signatures are equally distributed for the same input, and \(\mathsf {ver}\), \(\mathsf {identify}\), and \(\mathsf {link}\) invocations only depend on the current input, not on previous inputs.

2.2 Comparison with \(\mathcal {F}_\mathsf {daa}^{l}\)

Our functionality \(\mathcal {F}_{\mathsf {pdaa}}\) is a strengthened version of \(\mathcal {F}_\mathsf {daa}^{l}\) [25], as it requires fewer trust assumptions on the TPM for anonymity, non-frameability and unforgeability. It also includes a syntactical change which allows for more efficient constructions, as we discuss at the end of this section.

Optimal Privacy. The most important difference is that \(\mathcal {F}_\mathsf {daa}^{l}\) guarantees anonymity only when both the TPM and the host are honest, whereas our modified version \(\mathcal {F}_{\mathsf {pdaa}}\) guarantees anonymity as long as the host is honest, i.e., even when the TPM is corrupt. As discussed, the honesty of the host is strictly necessary, as privacy is impossible to guarantee otherwise.

In the ideal functionality \(\mathcal {F}_\mathsf {daa}^{l}\) proposed by Camenisch et al. [25] the signatures are created in the \(\mathsf {SIGNPROCEED}\) step in two different ways, depending on whether the TPM is honest or not. For the case of a corrupt TPM, the signature is provided by the adversary, which reflects that the adversary can recognize and link the signatures and \(\mathcal {F}_\mathsf {daa}^{l}\) does not guarantee any privacy. If the TPM (and the host) is honest, \(\mathcal {F}_\mathsf {daa}^{l}\) creates anonymous signatures inside the functionality using the signing algorithm \(\mathsf {sig}\) and \(\mathsf {ukgen}\). As signatures are generated with fresh keys for every new basename, the functionality enforces the desired unlinkability and anonymity.

In our functionality \(\mathcal {F}_{\mathsf {pdaa}}\), we also apply that approach of internally and anonymously creating signatures to the case where the TPM is corrupt, instead of relying on a signature input by the adversary. Thus, \(\mathcal {F}_{\mathsf {pdaa}}\) guarantees the same strong privacy for both settings of a corrupt and honest TPM. In fact, for the sake of simplicity we let \(\mathcal {F}_{\mathsf {pdaa}}\) even generate the signatures for corrupt hosts within the functionality now (whereas \(\mathcal {F}_\mathsf {daa}^{l}\) used adversarially provided ones). However, as \(\mathcal {F}_{\mathsf {pdaa}}\) outputs that signature to the host \(\mathcal {H} _i\), who will be the adversary if \(\mathcal {H} _i\) is corrupt, the behaviour of \(\mathcal {F}_{\mathsf {pdaa}}\) with respect to privacy does not matter in that case: the adversary can simply ignore the output. We present a summary of the privacy properties guaranteed by \(\mathcal {F}_\mathsf {daa}^{l}\) and \(\mathcal {F}_{\mathsf {pdaa}}\) in Table 1.

Another difference between both functionalities is that in \(\mathcal {F}_{\mathsf {pdaa}}\) we assume a direct communication channel between the host and TPM, which is necessary to achieve the desired privacy properties (see Sect. 2.3). Note that in the real-world, such a direct channel is naturally enforced by the physical proximity of the host and TPM forming the platform, i.e., if both are honest, an adversary can neither alter nor read their internal communication, or even notice that communication is happening. Consequently, our functionality gets a bit simpler compared to \(\mathcal {F}_\mathsf {daa}^{l}\) as we omit in \(\mathsf {JOIN}\) and \(\mathsf {SIGN}\) all dedicated interfaces and outputs that informed the simulator about communication between \(\mathcal {H} _j\) and \(\mathcal {M} _i\) and waited for a proceed input by the simulator to complete their communication.

Stronger Non-frameability and Unforgeability. While the focus of this work is strengthening the privacy properties in the presence of a subverted TPM, we also lift the trust assumption for non-frameability and unforgeability. Whereas \(\mathcal {F}_\mathsf {daa}^{l}\) and all other prior security models [15, 17] guarantee non-frameability only if the entire platform is honest, our modified definition \(\mathcal {F}_{\mathsf {pdaa}}\) enforces that property as long as the host is honest. Our stronger version of non-frameability is enforced by modifying the \(\mathsf {JOINPROCEED}\) interface such that it allows the adversary to provide a tracing trapdoor \(\tau \) (which steers the non-frameability checks by \(\mathcal {F}_{\mathsf {pdaa}}\)) only when the host is corrupt, as it set \(\tau \leftarrow \bot \) whenever the host is honest. This replaces the original condition of discarding the adversarial \(\tau \) when both, the host and TPM are honest. Note that similar to anonymity, requiring an honest host is strictly necessary for non-frameability too, as we can never control the signatures that a corrupt host outputs. In particular, a corrupt host with an honest TPM could additionally run a corrupt TPM and “frame itself” by outputting signatures from the corrupt TPM.

In terms of unforgeability, all previous definitions including \(\mathcal {F}_\mathsf {daa}^{l}\) solely rely on the honesty of the TPM (and issuer of course). In \(\mathcal {F}_{\mathsf {pdaa}}\) we provide a stronger version and guarantee that attestations cannot be forged unless the entire platform is corrupted, i.e., here we ensure unforgeability if at least one of two entities, TPM or host, is honest. This change is reflected in our functionality \(\mathcal {F}_{\mathsf {pdaa}}\) as follows: In the \(\mathsf {SIGNPROCEED}\) interface we store the host identity as part of the signature record \(\langle \sigma , m, bsn , \mathcal {M} _i, \mathcal {H} _j \rangle \in {\texttt {Signed}}\) when signatures are created. Further, the \(\mathsf {VERIFY}\) interface now requires the existence of such record whenever the signature to be verified belongs to an honest host or honest TPM. In \(\mathcal {F}_\mathsf {daa}^{l}\) only \(\langle \sigma , m, bsn , \mathcal {M} _i \rangle \) was stored and required when the TPM was honest. For unforgeability, relaxing the condition on the honesty of the TPM is not as crucial as for privacy and non-frameability. Thus, if only the standard unforgeability notion is sufficient, one can easily derive a functionality with optimal privacy but standard unforgeability by reverting the changes we just described.

Dedicated Tracing Key. Our functionality also includes some syntactical changes. \(\mathcal {F}_\mathsf {daa}^{l}\) uses keys \( gsk \) for two purposes: to create signatures for honest platforms (via \(\mathsf {sig}\)), and to trace signatures (via \(\mathsf {identify}\)) when enforcing non-frameability and unforgeability. A key \( gsk \) can be provided by the adversary when a \(\mathsf {JOIN}\) request is completed for a corrupt host, or is generated internally via \(\mathsf {ukgen}\) whenever an anonymous signature is created. In \(\mathcal {F}_{\mathsf {pdaa}}\) we split this into two dedicated values: \( gsk \) which is used to sign, and \(\tau \) to trace signatures. Consequently, the \(\mathsf {identify}\) algorithm now takes \(\tau \) instead of \( gsk \) as input. The adversary has to provide \(\tau \) in the \(\mathsf {JOIN}\) interface, as its input is only used to ensure that a corrupt host cannot impersonate or frame another honest platform. The internally created keys are used for both, signing and tracing, and hence we modify \(\mathsf {ukgen}\) to output a tuple \(( gsk , \tau )\) instead of \( gsk \) only.

The idea behind that change is to allow for more efficient schemes, as the tracing key \(\tau \) is usually a value that needs to be extracted by the simulator in the security proof. In the scheme we propose, it is sufficient that \(\tau \) is the public key of the platform whereas \( gsk \) is its secret key. Using only a single \( gsk \) would have required the join protocol to include an extractable encryption of the platform’s secret key, which would not only be less efficient but also a questionable protocol design. Clearly, our approach is more general than in \(\mathcal {F}_\mathsf {daa}^{l}\), one can simply set \(\tau = gsk \) to derive the same definition as \(\mathcal {F}_\mathsf {daa}^{l}\).

2.3 Modeling Subverted Parties in the UC Framework

As just discussed, our functionality \(\mathcal {F}_{\mathsf {pdaa}}\) guarantees that signatures created with an honest host are unlinkable and do not leak any information about the signing platform, even if the TPM is corrupt. However, the adversary still learns the message and basename when the TPM is corrupt, due to the way UC models corruptions. We discuss how this standard corruption model inherently limits the achievable privacy level, and then present our approach of isolated corruptions which allow one to overcome this limitation yet capture the power of subverted TPMs. While we discuss the modeling of isolated corruptions in the context of our DAA functionality, we consider the general concept to be of independent interest as it is applicable to any other scenario where such subversion attacks can occur.

Conditional Privacy Under Full TPM Corruption. According to the UC corruption model, the adversary gains full control over a corrupted party, i.e., it receives all inputs to that party and can choose its responses. For the case of a corrupt TPM this means that the adversary sees the message m and basename \( bsn \) whenever the honest host wants to create a signature. In fact, the adversary will learn which particular TPM \(\mathcal {M} _i\) is asked to sign m w.r.t. \( bsn \). Thus, even though the signature \(\sigma \) on m w.r.t. \( bsn \) is then created by \(\mathcal {F}_{\mathsf {pdaa}}\) and does not leak any information about the identity of the signing platform, the adversary might still be able to recognize the platform’s identity via the signed values. That is, if a message m or basename \( bsn \) is unique, i.e., only a single (and corrupt) TPM has ever signed m w.r.t. \( bsn \), then, when later seeing a signature on m w.r.t. \( bsn \), the adversary can derive which platform had created the signature.

A tempting idea for better privacy would be to change the functionality such that the TPM does not receive the message and basename when asked to approve an attestation via the \(\mathsf {SIGNPROCEED}\) message. As a result, this information will not be passed to the adversary if the TPM is corrupt. However, that would completely undermine the purpose of the TPM that is supposed to serve as a trust anchor: verifiers accept a DAA attestation because they know a trusted TPM has approved them. Therefore, it is essential that the TPM sees and acknowledges the messages it signs.

Thus, in the presence of a fully corrupt TPM, the amount of privacy that can be achieved depends which messages and basenames are being signed – the more unique they are, the less privacy \(\mathcal {F}_{\mathsf {pdaa}}\) guarantees.

Optimal Privacy Under Isolated TPM Corruption. The aforementioned leakage of all messages and basenames that are signed by a corrupt TPM is enforced by the UC corruption model. Modeling corruption of TPMs like this gives the adversary much more power than in reality: even if a TPM is subverted and runs malicious algorithms, it is still embedded into a host who controls all communication with the outside world. Thus, the adversary cannot communicate directly with the TPM, but only via the (honest) host.

To model such subversions more accurately and study the privacy achievable in the presence of subverted TPMs, we define a relaxed level of corruption that we call isolated corruption. When the adversary corrupts a TPM in this manner, it can specify code for the TPM but cannot directly communicate with the TPM.

We formally define such isolated corruptions via the body-shell paradigm used to model UC corruptions [31]. Recall that the body of a party defines its behavior, whereas the shell models the communication with that party. Thus, for our isolated corruptions, the adversary gets control over the body but not the shell. Interestingly, this is exactly the inverse of honest-but-curious corruptions in UC, where the adversary controls the shell and thus sees all inputs and outputs, but cannot change the body, i.e., the parties behavior remains honest.

In our case, an adversary performing an isolated corruption can provide a body, which models the tampered algorithms that an isolated corrupt TPM may use. The shell remains honest though and handles inputs, and subroutine outputs, and only forwards the ones that are allowed to the body. In the real world, the shell would only allow communication with the host in which the TPM is embedded. In the ideal world, the shell allows inputs to and outputs from the functionality, and blocks anything else.

Figures 2 and 3 depict the different levels of corruption in the real world and ideal world, respectively. In the ideal word, an isolated corruption of a TPM replaces the dummy TPM that forwards inputs and outputs between the environment and the ideal functionality with an isolated simulator comprising of the adversarial body and honest shell.

Modeling of corruption in the real world. Left: an honest TPM applies the protocol \(\varPi _{\mathcal {M}}\), and communicates with the host running \(\varPi _{\mathcal {H}}\). Middle: a corrupt TPM sends any input the adversary instructs it to, and forwards any messages received to the adversary. Right: an isolated corrupt TPM is controlled by an isolated adversary \(\mathcal {A} _\mathcal {M} \), who can communicate with the host, but not with any other entities.

Modeling of corruption in the ideal world. Left: an honest TPM is a dummy party \(d_{\mathcal {M}}\) that forwards inputs and outputs between the environment \(\mathcal {E}\) and the functionality \(\mathcal {F}_{\mathsf {pdaa}}\). Middle: a corrupt TPM sends any input the adversary instructs it to, and forwards any subroutine output to the adversary. Right: an isolated corrupt TPM is controlled by an isolated simulator \(\mathcal {S} _\mathcal {M} \), who may send inputs and receive outputs from \(\mathcal {F}_{\mathsf {pdaa}}\), but not communicate with any other entities.

When designing a UC functionality, then all communication between a host and the “embedded” party that can get corrupted in such isolated manner must be modeled as direct channel (see e.g., the \(\mathsf {SIGN}\) related interfaces in \(\mathcal {F}_{\mathsf {pdaa}}\)). Otherwise the simulator/adversary will be aware of the communication between both parties and can delay or block messages, which would contradict the concept of an isolated corruption where the adversary has no direct channel to the embedded party. Note that the perfect channel of course only holds if the host entity is honest, if it is corrupt (in the standard sense), the adversary can see and control all communication via the host anyway.

With such isolated adversaries we specify much stronger privacy. The adversary no longer automatically learns which isolated corrupt TPM signed which combination of messages and basenames, and the signatures created by \(\mathcal {F}_{\mathsf {pdaa}}\) are guaranteed to be unlinkable. Of course the message m and basename \( bsn \) must not leak information about the identity of the platform. In certain applications, the platform would sign data generated or partially controlled by other functions contained in a TPM. This is out of scope of the attestation scheme, but the higher level scheme using \(\mathcal {F}_{\mathsf {pdaa}}\) should ensure that this does not happen, by, e.g., letting the host randomize or sanitize the message.

Comparison with Strong Privacy \(\textit{(}{\mathcal {F}_\mathsf {pdaa+}}{} \textit{)}\). Recently, Camenisch et al. [22] proposed a variant \(\mathcal {F}_\mathsf {pdaa+} \) of our functionality that, when considering only isolated TPM corruptions, provides an intermediate level of anonymity, termed strong privacy (the \(+\) in \(\mathcal {F}_\mathsf {pdaa+} \) refers to the addition of attributes and signature-based revocation). In \(\mathcal {F}_\mathsf {pdaa+} \) all signatures are generated internally by the functionally, just as in optimal privacy. The difference is that in strong privacy these signatures are revealed to the TPM which can then base its behavior on the signature value. Thus, while the actual signature shown to the TPM is still guaranteed to be anonymous, the TPM can influence the final distribution of the signatures by blocking certain values. In the isolated corruption model, where the corrupt TPM cannot communicate the learned signatures to the adversary, \(\mathcal {F}_\mathsf {pdaa+} \) provides an interesting relaxation of optimal privacy which allows for significantly simpler constructions as shown in [22].

3 Insufficiency of Existing DAA Schemes

Our functionality \(\mathcal {F}_{\mathsf {pdaa}}\) requires all signatures on a message m with a fresh basename \( bsn \) to have the same distribution, even when the TPM is corrupt. None of the existing DAA schemes can be used to realize \(\mathcal {F}_{\mathsf {pdaa}}\) when the TPM is corrupted (either fully or isolated). The reason is inherent to the common protocol design that underlies all DAA schemes so far, i.e., there is no simple patch that would allow upgrading the existing solutions to achieve optimal privacy.

In a nutshell, in all existing DAA schemes, the TPM chooses a secret key \( gsk \) for which it blindly receives a membership credential of a trusted issuer. To create a signature on message m with basename \( bsn \), the platform creates a signature proof of knowledge signing message m and proving knowledge of \( gsk \) and the membership credential.

In the original RSA-based DAA scheme [15], and the more recent qSDH-based schemes [18, 19, 23, 40], the proof of knowledge of the membership credential is created jointly by the TPM and host. After jointly computing the commitment values of the proof, the host computes the hash over these values and sends the hash c to the TPM. To prevent leaking information about its key, the TPM must ensure that the challenge is a hash of fresh values. In all the aforementioned schemes this is done by letting the TPM choose a fresh nonce n and computing the final hash as \(c' \leftarrow \mathsf {H} (n, c)\). An adversarial TPM can embed information in n instead of taking it uniformly at random, clearly altering the distribution of the proof and thus violating the desired privacy guarantees.

At a first glance, deriving the hash for the proof in a more robust manner might seem a viable solution to prevent such leakage. For instance, setting the nonce as \(n \leftarrow n_t \oplus n_h\), with \(n_t\) being the TPM’s and \(n_h\) the host’s contribution, and letting the TPM commit to \(n_t\) before receiving \(n_h\). While this indeed removes the leakage via the nonce, it still reveals the hash value \(c' \leftarrow \mathsf {H} (n, c)\) to the TPM with the hash becoming part of the completed signature. Thus, the TPM can base its behavior on the hash value and, e.g., only sign messages for hashes that start with a 0-bit. When considering only isolated corruptions for the TPM, the impact of such leakage is limited though as argued by Camenisch et al. [22] and formalized in their notion of strong privacy. In fact, Camenisch et al. show that by using such jointly generated nonces, and also letting the host contribute to the platform’s secret key, the existing DAA schemes can be modified to achieve strong privacy in the isolated corruption model. However, it clearly does not result in signatures that are equally distributed as required by our functionality, and thus the approach is not sufficient to obtain optimal privacy.

The same argument applies to the LRSW-based DAA schemes [9, 25, 38], where the proof of a membership credential is done solely by the TPM, and thus can leak information via the Fiat-Shamir hash output again. The general problem is that the signature proofs of knowledge are not randomizable. If the TPM would create a randomizable proof of knowledge, e.g., a Groth-Sahai proof [47], the host could randomize the proof to remove any hidden information, but this would yield a highly inefficient signing protocol for the TPM.

4 Building Blocks

In this section we introduce the building blocks for our DAA scheme. In addition to standard components such as additively homomorphic encryption and zero-knowledge proofs, we introduce two non-standard types of signature schemes. One signature scheme we require is for the issuer to blindly sign the public key of the TPM and host. The second signature scheme is needed for the TPM and host to jointly create signed attestations, which we term split signatures.

The approach of constructing a DAA scheme from modular building blocks rather than basing it on a concrete instantiation was also used by Bernhard et al. [9, 10]. As they considered a simplified setting, called pre-DAA, where the host and platform have a joint corruption state, and we aim for much stronger privacy, their “linkable indistinguishable tag” is not sufficient for our construction. We replace this with our split signatures.

As our protocol requires “compatible” building blocks, i.e., the different schemes have to work in the same group, we assume the availability of public system parameters  generated for security parameter \(\tau \). We give \( spar \) as dedicated input to the individual key generation algorithms instead of the security parameter \(\tau \). For the sake of simplicity, we omit the system parameters as dedicated input to all other algorithms and assume that they are given as implicit input.

generated for security parameter \(\tau \). We give \( spar \) as dedicated input to the individual key generation algorithms instead of the security parameter \(\tau \). For the sake of simplicity, we omit the system parameters as dedicated input to all other algorithms and assume that they are given as implicit input.

4.1 Proof Protocols

Let \( NIZK \{(w): s (w) \}(ctxt)\) denote a generic non-interactive zero-knowledge proof that is bound to a certain context ctxt and proves knowledge of a witness w such that statement \( s (w)\) is true. Sometimes we need witnesses to be online-extractable, which we denote by underlining them: \(\textit{NIZK}\{(\underline{w_1},w_2): s (w_1,w_2) \}\) allows for online extraction of \(w_1\).

All the \( NIZK \) we give have efficient concrete instantiations for the instantiations we propose for our other building blocks. We will follow the notation introduced by Camenisch and Stadler [29] and formally defined by Camenisch, Kiayias, and Yung [26] for these protocols. For instance, \( PK \{(a): y=g^a \}\) denotes a “zero-knowledge Proof of Knowledge of integer a such that \(y=g^{a}\) holds.” \( SPK \{\ldots \}(m)\) denotes a signature proof of knowledge on m, that is a non-interactive transformation of a proof with the Fiat-Shamir heuristic [45].

4.2 Homomorphic Encryption Schemes

We require an encryption scheme \((\mathsf {EncKGen},\mathsf {Enc},\mathsf {Dec})\) that is semantically secure and that has a cyclic group \(\mathbb {G}= \langle g \rangle \) of order q as message space. It consists of a key generation algorithm  , where \( spar \) defines the group \(\mathbb {G}\), an encryption algorithm

, where \( spar \) defines the group \(\mathbb {G}\), an encryption algorithm  , with \(m \in \mathbb {G}\), and a decryption algorithm \(m \leftarrow \mathsf {Dec}( esk , C)\).

, with \(m \in \mathbb {G}\), and a decryption algorithm \(m \leftarrow \mathsf {Dec}( esk , C)\).

We further require that the encryption scheme has an appropriate homomorphic property, namely that there is an efficient operation \(\odot \) on ciphertexts such that, if \(C_1 \in \mathsf {Enc}( epk , m_1)\) and \(C_2 \in \mathsf {Enc}( epk , m_2)\), then \(C_1 \odot C_2 \in \mathsf {Enc}( epk ,m_1 \cdot m_2)\). We will also use exponents to denote the repeated application of \(\odot \), e.g., \(C^2\) to denote \(C \odot C\).

ElGamal Encryption. We use the ElGamal encryption scheme [44], which is homomorphic and chosen plaintext secure. The semantic security is sufficient for our construction, as the parties always prove to each other that they formed the ciphertexts correctly. Let \( spar \) define a group \(\mathbb {G}= \langle g \rangle \) of order q such that the DDH problem is hard w.r.t. \(\tau \), i.e., q is a \(\tau \)-bit prime.

- \(\mathsf {EncKGen}( spar )\mathrm{{:}}\) :

-

Pick

, compute \({y} \leftarrow g^{x}\), and output \( esk \leftarrow {x}, epk \leftarrow {y}\).

, compute \({y} \leftarrow g^{x}\), and output \( esk \leftarrow {x}, epk \leftarrow {y}\). - \(\mathsf {Enc}( epk , m)\mathrm{{:}}\) :

-

To encrypt a message \(m \in \mathbb {G}\) under \( epk = {y}\), pick

and output the ciphertext \((C_1,C_2) \leftarrow ({y}^r, g^r m)\).

and output the ciphertext \((C_1,C_2) \leftarrow ({y}^r, g^r m)\). - \(\mathsf {Dec}( esk , C)\mathrm{{:}}\) :

-

On input the secret key \( esk = {x}\) and a ciphertext \(C = (C_1,C_2) \in \mathbb {G}^2\), output \(m' \leftarrow C_2 \cdot C_1^{-1/{x}}\).

4.3 Signature Schemes for Encrypted Messages

We need a signature scheme that supports the signing of encrypted messages and must allow for (efficient) proofs proving that an encrypted value is correctly signed and proving knowledge of a signature that signs an encrypted value. Dual-mode signatures [27] satisfy these properties, as therein signatures on plaintext as well as on encrypted messages can be obtained. As we do not require signatures on plaintexts, though, we can use a simplified version.

A signature scheme for encrypted messages consists of the algorithms \((\mathsf {SigKGen}, \mathsf {EncSign}, \mathsf {DecSign}, \mathsf {Vf})\) and also uses an encryption scheme \((\mathsf {EncKGen}, \mathsf {Enc},\mathsf {Dec})\) that is compatible with the message space of the signature scheme. In particular, the algorithms working with encrypted messages or signatures also get the keys  of the encryption scheme as input.

of the encryption scheme as input.

- \(\mathsf {SigKGen}( spar )\mathrm{{:}}\) :

-

On input the system parameters, this algorithm outputs a public verification key \( spk \) and secret signing key \( ssk \).

- \(\mathsf {EncSign}( ssk , epk , C)\mathrm{{:}}\) :

-

On input signing key \( ssk \), a public encryption key \( epk \), and ciphertext \(C = \mathsf {Enc}( epk ,m)\), outputs an “encrypted” signature \(\overline{\sigma }\) of C.

- \(\mathsf {DecSign}( esk , spk , \overline{\sigma })\mathrm{{:}}\) :

-

On input an “encrypted” signature \(\overline{\sigma }\), secret decryption key \( esk \) and public verification key \( spk \), outputs a standard signature \(\sigma \).

- \(\mathsf {Vf}( spk ,\sigma ,m)\mathrm{{:}}\) :

-

On input a public verification key \( spk ,\) signature \(\sigma \) and message m, outputs 1 if the signature is valid and 0 otherwise.

In terms of security, we require completeness and unforgeability as defined in [27], but omit the oracle for signatures on plaintext messages in the unforgeability experiment. Clearly, any secure dual-mode signature is also unforgeable according to our notion. The simplified security model is given in the full version of this paper [24].

AGOT+ Signature Scheme. To instantiate the building block of signatures for encrypted messages we will use the AGOT+ scheme of [27], which was shown to be a secure instantiation of a dual-mode signature, hence is also secure in our simplified setting. Again, as we do not require signatures on plaintext messages we omit the standard signing algorithm. The AGOT+ scheme is based on the structure-preserving signature scheme by Abe et al. [1], which is proven to be unforgeable in the generic group model.

The AGOT+ scheme assumes the availability of system parameters \((q, \mathbb {G}_1, \mathbb {G}_2, \mathbb {G}_T, e, g_1, g_2, x)\), where \(\mathbb {G}_1, \mathbb {G}_2, \mathbb {G}_T\) are groups of prime order q generated by \(g_1\), \(g_2\), and \(e(g_1, g_2)\) respectively, e is a non-degenerate bilinear map \(e: \mathbb {G}_1 \times \mathbb {G}_2 \rightarrow \mathbb {G}_T\), and x is an additional random group element in \(\mathbb {G}_1\).

- \(\mathsf {SigKGen}( spar )\mathrm{{:}}\) :

-

Draw

, compute \(y \leftarrow g_2^v\), and return \( spk = y\), \( ssk = v\).

, compute \(y \leftarrow g_2^v\), and return \( spk = y\), \( ssk = v\). - \(\mathsf {EncSign}( ssk , epk , M)\mathrm{{:}}\) :

-

On input a proper encryption \(M = \mathsf {Enc}( epk , m)\) of a message \(m \in \mathbb {G}_1\) under \( epk \), and secret key \( ssk = v\), choose a random

, and output the (partially) encrypted signature \(\bar{\sigma } = (r,S,T,w)\): $$ r \leftarrow g_2^u, \,\,\,\, S \leftarrow (M^{v} \odot \mathsf {Enc}( epk , x))^{1/u}, \,\,\,\, T \leftarrow (S^{v} \odot \mathsf {Enc}( epk , g_1))^{1/u}, \,\,\,\, w \leftarrow g_1^{1/u}. $$

, and output the (partially) encrypted signature \(\bar{\sigma } = (r,S,T,w)\): $$ r \leftarrow g_2^u, \,\,\,\, S \leftarrow (M^{v} \odot \mathsf {Enc}( epk , x))^{1/u}, \,\,\,\, T \leftarrow (S^{v} \odot \mathsf {Enc}( epk , g_1))^{1/u}, \,\,\,\, w \leftarrow g_1^{1/u}. $$ - \(\mathsf {DecSign}( esk , spk , \overline{\sigma })\mathrm{{:}}\) :

-

Parse \(\overline{\sigma }= (r,S,T,w)\), compute \(s \leftarrow \mathsf {Dec}( esk ,S)\), \(t \leftarrow \mathsf {Dec}( esk ,T)\) and output \(\sigma = (r,s,t,w)\).

- \(\mathsf {Vf}( spk , \sigma , m)\mathrm{{:}}\) :

-

Parse \(\sigma = (r,s,t,w')\) and \( spk = y\) and output 1 iff \(m,s,t \in \mathbb {G}_1\), \(r \in \mathbb {G}_2\), \(e(s,r) = e(m,y )\cdot e(x,g_2) \), and \(e(t,r) = e(s,y) \cdot e(g_1,g_2). \)

Note that for notational simplicity, we consider w part of the signature, i.e., \(\sigma = (r,s,t,w)\), altough signature verification will ignore w. As pointed out by Abe et al., a signature \(\sigma = (r,s,t)\) can be randomized using the randomization token w to obtain a signature \(\sigma ' = (r',s',t')\) by picking a random  and computing \( r' \leftarrow r^{u'}, \quad s' \leftarrow s^{1/u'}, \quad t' \leftarrow (t w^{(u'-1)})^{1/u'^2}. \)

and computing \( r' \leftarrow r^{u'}, \quad s' \leftarrow s^{1/u'}, \quad t' \leftarrow (t w^{(u'-1)})^{1/u'^2}. \)

For our construction, we also require the host to prove that it knows an encrypted signature on an encrypted message. In Sect. 6 we describe how such a proof can be done.

4.4 Split Signatures

The second signature scheme we require must allow two different parties, each holding a share of the secret key, to jointly create signatures. Our DAA protocol performs the joined public key generation and the signing operation in a strict sequential order. That is, the first party creates his part of the key, and the second party receiving the ‘pre-public key’ generates a second key share and completes the joined public key. Similarly, to sign a message the first signer creates a ‘pre-signature’ and the second signer completes the signature. We model the new signature scheme for that particular sequential setting rather than aiming for a more generic building block in the spirit of threshold or multi-signatures, as the existence of a strict two-party order allows for substantially more efficient constructions.

We term this new building block split signatures partially following the notation by Bellare and Sandhu [8] who formalized different two-party settings for RSA-based signatures where the signing key is split between a client and server. Therein, the case “MSC” where the first signature contribution is produced by an external server and then completed by the client comes closest to out setting.

Formally, we define a split signature scheme as a tuple of the algorithms \(\mathsf {SSIG}=(\mathsf {PreKeyGen}, \mathsf {CompleteKeyGen}, \mathsf {VerKey}, \mathsf {PreSign}, \mathsf {CompleteSign}, \mathsf {Vf})\):

- \(\mathsf {PreKeyGen}( spar )\mathrm{{:}}\) :

-

On input the system parameters, this algorithm outputs the pre-public key \( ppk \) and the first share of the secret signing key \( ssk _1\).

- \(\mathsf {CompleteKeyGen}( ppk )\mathrm{{:}}\) :

-

On input the pre-public key, this algorithm outputs a public verification key \( spk \) and the second secret signing key \( ssk _2\).

- \(\mathsf {VerKey}( ppk , spk , ssk _2)\mathrm{{:}}\) :

-

On input the pre-public key \( ppk \), the full public key \( spk \), and a secret key share \( ssk _2\), this algorithm outputs 1 iff the pre-public key combined with secret key part \( ssk _2\) leads to full public key \( spk \).

- \(\mathsf {PreSign}( ssk _1, m)\mathrm{{:}}\) :

-

On input a secret signing key share \( ssk _1\), and message m, this algorithm outputs a pre-signature \(\sigma '\).

- \(\mathsf {CompleteSign}( ppk , ssk _2, m, \sigma ')\mathrm{{:}}\) :

-

On input the pre-public key \( ppk \), the second signing key share \( ssk _2\), message m, and pre-signature \(\sigma '\), this algorithm outputs the completed signature \(\sigma \).

- \(\mathsf {Vf}( spk , \sigma , m)\mathrm{{:}}\) :

-

On input the public key \( spk \), signature \(\sigma \), and message m, this algorithm outputs a bit b indicating whether the signature is valid or not.

We require a number of security properties from our split signatures. The first one is unforgeability which must hold if at least one of the two signers is honest. This is captured in two security experiments: type-1 unforgeability allows the first signer to be corrupt, and type-2 unforgeability considers a corrupt second signer. Our definitions are similar to the ones by Bellare and Sandhu, with the difference that we do not assume a trusted dealer creating both secret key shares. Instead, we let the adversary output the key share of the party he controls. For type-2 unforgeability we must ensure, though, that the adversary indeed integrates the honestly generated pre-key \( ppk \) when producing the completed public key \( spk \), which we verify via \(\mathsf {VerKey}\). Formally, unforgeability for split signatures is defined as follows.

Definition 1

(Type-1/2 Unforgeability of \(\mathsf {SSIG}\)). A split signature scheme is type-1/2 unforgeable if for any efficient algorithm \(\mathcal {A} \) the probability that the experiments given in Fig. 4 return 1 is negligible (as a function of \(\tau \)).

Further, we need a property that we call key-hiding, which ensures that signatures do not leak any information about the public key for which they are generated. This is needed in the DAA scheme to get unlinkability even in the presence of a corrupt TPM that contributes to the signatures and knows part of the secret key, yet should not be able to recognize “his” signatures afterwards. Our key-hiding notion is somewhat similar in spirit to key-privacy for encryption schemes as defined by Bellare et al. [6], which requires that a ciphertext should not leak anything about the public key under which it is encrypted.

Formally, this is captured by giving the adversary a challenge signature for a chosen message either under the real or a random public key. Clearly, the property can only hold as long as the real public key \( spk \) is not known to the adversary, as otherwise he can simply verify the challenge signature. As we want the property to hold even when the first party is corrupt, the adversary can choose the first part of the secret key and also contribute to the challenge signature. The adversary is also given oracle access to \(\mathcal {O}^\mathtt{{\mathsf {CompleteSign}}} \) again, but is not allowed to query the message used in the challenge query, as he could win trivially otherwise (by the requirement of signature-uniqueness defined below and the determinism of \(\mathsf {CompleteSign}\)). The formal experiment for our key-hiding property is given below. The oracle \(\mathcal {O}^\mathtt{{\mathsf {CompleteSign}}} \) is defined analogously as in type-1 unforgeability.

Definition 2

(Key-hiding property of \(\mathsf {SSIG}\)). We say a split signature scheme is key-hiding if for any efficient algorithm \(\mathcal {A} \) the probability that the experiment given in Fig. 5 returns 1 is negligible (as a function of \(\tau \)).

Finally, we need correctness, i.e., honestly generated signatures verify correctly, and two uniqueness properties for our split signatures. The first is key-uniqueness, which states that every signature is only valid under one public key. Second, we require signature-uniqueness, which guarantees that one can compute only a single valid signature on a certain message under a certain public key. These properties are formally defined in the full version of this paper [24].

Instantiation of split signatures (split-BLS). To instantiate split signatures, we use a modified BLS signature [12]. Let \(\mathsf {H} \) be a hash function \(\{0,1\} \rightarrow \mathbb {G}_1^*\) and the public system parameters be the description of a bilinear map, i.e., \( spar = (\mathbb {G}_1, \mathbb {G}_2, \mathbb {G}_T, g_1, g_2, e, q)\).

- \(\mathsf {PreKeyGen}( spar )\mathrm{{:}}\) :

-

Take

, set \( ppk \leftarrow g_2^{ ssk _1}\), and output \(( ppk , ssk _1)\).

, set \( ppk \leftarrow g_2^{ ssk _1}\), and output \(( ppk , ssk _1)\). - \(\mathsf {CompleteKeyGen}( spar , ppk )\mathrm{{:}}\) :

-

Check \( ppk \in \mathbb {G}_2\) and \( ppk \ne 1_{\mathbb {G}_2}\). Take

and compute \( spk \leftarrow ppk ^{ ssk _2}\). Output \(( spk , ssk _2)\).

and compute \( spk \leftarrow ppk ^{ ssk _2}\). Output \(( spk , ssk _2)\). - \(\mathsf {VerKey}( spar , ppk , spk , ssk _2)\mathrm{{:}}\) :

-

Output 1 iff \( ppk \ne 1_{\mathbb {G}_2}\) and \( spk = ppk ^{ ssk _2}\).

- \(\mathsf {PreSign}( spar , ssk _1, m)\mathrm{{:}}\) :

-

Output \(\sigma ' \leftarrow \mathsf {H} (m)^{ ssk _1}\).

- \(\mathsf {CompleteSign}( spar , ppk , ssk _2, m, \sigma ')\mathrm{{:}}\) :

-

If \(e(\sigma ', g_2) = e(\mathsf {H} (m), ppk )\), output \(\sigma \leftarrow \sigma '^{ ssk _2}\), otherwise \(\bot \).

- \(\mathsf {Vf}( spar , spk , \sigma , m)\mathrm{{:}}\) :

-

Output 1 iff \(\sigma \ne 1_{\mathbb {G}_1}\) and \(e(\sigma , g_2) = e(\mathsf {H} (m), spk )\).

The proof of the following theorem is given in the full version of this paper [24].

Theorem 1

The split-BLS signature scheme is a secure split signature scheme, satisfying correctness, unforgeability-1, unforgeability-2, key-hiding, key-uniqueness, and signature-uniqueness, under the computational co-Diffie-Hellman assumption and the DDH assumption in \(\mathbb {G}_1\), in the random oracle model.

5 Construction

This section describes our DAA protocol achieving optimal privacy. On a very high level, the protocol follows the core idea of existing DAA protocols: The platform, consisting of the TPM and a host, first generates a secret key \( gsk \) that gets blindly certified by a trusted issuer. Subsequently, the platform can use the key \( gsk \) to sign attestations and basenames and then prove that it has a valid credential on the signing key, certifying the trusted origin of the attestation.

This high-level procedure is the main similarity to existing schemes though, as we significantly change the role of the host to satisfy our notion of optimal privacy. First, we no longer rely on a single secret key \( gsk \) that is fully controlled by the TPM. Instead, both the TPM and host generate secret shares, \( tsk \) and \( hsk \) respectively, that lead to a joint public key \( gpk \). For privacy reasons, we cannot reveal this public key to the issuer in the join protocol, as any exposure of the joint public key would allow to trace any subsequent signed attestations of the platform. Thus, we let the issuer sign only an encryption of the public key, using the signature scheme for encrypted messages. When creating this membership credential \( cred \) the issuer is assured that the blindly signed key is formed correctly and the credential is strictly bound to that unknown key.

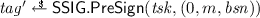

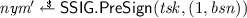

After having completed the \(\mathsf {JOIN}\) protocol, the host and TPM can together sign a message m with respect to a basename \( bsn \). Both parties use their individual key shares and create a split signature on the message and basename (denoted as \( tag \)), which shows that the platform intended to sign this message and basename, and a split signature on only the basename (denoted as \(\textit{nym}\)), which is used as a pseudonym. Recall that attestations from one platform with the same basename should be linkable. By the uniqueness of split signatures, \(\textit{nym}\) will be constant for one platform and basename and allow for such linkability. Because split signatures are key-hiding, we can reveal \( tag \) and \(\textit{nym}\) while preserving the unlinkability of signatures with different basenames.

When signing, the host proves knowledge of a credential that signs \( gpk \). Note that the host can create the full proof of knowledge because the membership credential signs a joint public key. In existing DAA schemes, the membership credential signs a TPM secret, and therefore the TPM must always be involved to prove knowledge of the credential, which prevents optimal privacy as we argued in Sect. 3.

5.1 Our DAA Protocol with Optimal Privacy \(\varPi _{\mathsf {pdaa}}\)

We now present our generic DAA protocol with optimal privacy \(\varPi _{\mathsf {pdaa}}\) in detail. Let \(\mathsf {SSIG}=(\mathsf {PreKeyGen}, \mathsf {CompleteKeyGen}, \mathsf {VerKey}, \mathsf {PreSign}, \mathsf {CompleteSign}, \mathsf {Vf})\) denote a secure split signature scheme, as defined in Sect. 4.4, and let \(\mathsf {ESIG}=(\mathsf {SigKGen}, \mathsf {EncSign}, \mathsf {DecSign}, \mathsf {Vf})\) denote a secure signature scheme for encrypted messages, as defined in Sect. 4.3. In addition, we use a CPA secure encryption scheme \(\mathsf {ENC}=(\mathsf {EncKGen}, \mathsf {Enc}, \mathsf {Dec})\). We require all these algorithms to be compatible, meaning they work with the same system parameters.

We further assume that functionalities \((\mathcal {F}_\mathsf {crs}, \mathcal {F}_\mathsf {ca}, \mathcal {F}_\mathsf {auth*})\) are available to all parties. The certificate authority functionality \(\mathcal {F}_\mathsf {ca}\) allows the issuer to register his public key, and we assume that parties call \(\mathcal {F}_\mathsf {ca}\) to retrieve the public key whenever needed. As the issuer key \(( ipk , \pi _ ipk )\) also contains a proof of well-formedness, we also assume that each party retrieving the key will verify \(\pi _ ipk \).

The common reference string functionality \(\mathcal {F}_\mathsf {crs}\) provides all parties with the system parameters \( spar \) generated via \(\mathsf {SParGen}(1^\tau )\). All the algorithms of the building blocks take \( spar \) as an input, which we omit – except for the key generation algorithms – for ease of presentation.

For the communication between the TPM and issuer (via the host) in the join protocol, we use the semi-authenticated channel \(\mathcal {F}_\mathsf {auth*}\) introduced by Camenisch et al. [25]. This functionality abstracts the different options on how to realize the authenticated channel between the TPM and issuer that is established via an unauthenticated host. We assume the host and TPM can communicate directly, meaning that they have an authenticated and perfectly secure channel. This models the physical proximity of the host and TPM forming the platform: if the host is honest an adversary can neither alter nor read their internal communication, or even notice that communication is happening.

To make the protocol more readable, we omit the explicit calls to the sub-functionalities with sub-session IDs and simply say e.g., issuer \(\mathcal {I} \) registers its public key with \(\mathcal {F}_\mathsf {ca}\). For definitions of the standard functionalities \(\mathcal {F}_\mathsf {crs}\) and \(\mathcal {F}_\mathsf {ca}\) we refer to [30, 31].

1. Issuer Setup. In the setup phase, the issuer \(\mathcal {I} \) creates a key pair of the signature scheme for encrypted messages and registers the public key with \(\mathcal {F}_\mathsf {ca}\).

-

(a)

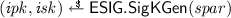

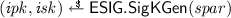

\(\mathcal {I}\) upon input \((\mathsf {SETUP}, sid )\) generates his key pair:

-

Check that \( sid = (\mathcal {I}, sid ')\) for some \( sid '\).

-

Get

and prove knowledge of the secret key via \(\pi _ ipk \leftarrow \mathsf {NIZK}\{(\underline{ isk }): ( ipk , isk ) \in \mathsf {ESIG}.\mathsf {SigKGen}( spar )\}( sid ).\)

and prove knowledge of the secret key via \(\pi _ ipk \leftarrow \mathsf {NIZK}\{(\underline{ isk }): ( ipk , isk ) \in \mathsf {ESIG}.\mathsf {SigKGen}( spar )\}( sid ).\)

-

Initiate \(\mathcal {L}_\mathsf {JOINED}\leftarrow \emptyset \).

-

Register the public key \(( ipk , \pi _ ipk )\) at \(\mathcal {F}_\mathsf {ca}\), and store \(( isk , \mathcal {L}_\mathsf {JOINED})\).

-

Output \((\mathsf {SETUPDONE}, sid )\).

-

Join Protocol. The join protocol runs between the issuer \(\mathcal {I} \) and a platform, consisting of a TPM \(\mathcal {M} _i\) and a host \(\mathcal {H} _j\). The platform authenticates to the issuer and, if the issuer allows the platform to join, obtains a credential \( cred \) that subsequently enables the platform to create signatures. The credential is a signature on the encrypted joint public key \( gpk \) to which the host and TPM each hold a secret key share. To show the issuer that a TPM has contributed to the joint key, the TPM reveals an authenticated version of his (public) key contribution to the issuer and the host proves that it correctly incorporated that share in \( gpk \). A unique sub-session identifier \( jsid \) distinguishes several join sessions that might run in parallel.

2. Join Request. The join request is initiated by the host.

-

(a)

Host \(\mathcal {H} _j\), on input \((\mathsf {JOIN}, sid , jsid , \mathcal {M} _i)\) parses \( sid = (\mathcal {I}, sid ')\) and sends \(( sid , jsid )\) to \(\mathcal {M} _i\).Footnote 1

-

(b)

TPM \(\mathcal {M} _i\), upon receiving \(( sid , jsid )\) from a party \(\mathcal {H} _j\), outputs \((\mathsf {JOIN}, sid , jsid )\).

3. \(\mathcal {M} \) -Join Proceed. The join session proceeds when the TPM receives an explicit input telling him to proceed with the join session \( jsid \).

-

(a)

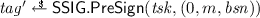

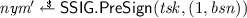

TPM \(\mathcal {M} _i\), on input \((\mathsf {JOIN}, sid , jsid )\) creates a key share for the split signature and sends it authenticated to the issuer (via the host):

-

Run

.

. -

Send \( tpk \) over \(\mathcal {F}_\mathsf {auth*}\) to \(\mathcal {I} \) via \(\mathcal {H} _j\), and store the key \(( sid , \mathcal {H} _j, tsk )\).

-

-

(b)

When \(\mathcal {H} _j\) notices \(\mathcal {M} _i\) sending \( tpk \) over \(\mathcal {F}_\mathsf {auth*}\) to the issuer, it generates its key share for the split signature and appends an encryption of the jointly produced \( gpk \) to the message sent towards the issuer.

-

Complete the split signature key as

-

Create an ephemeral encryption key pair

.

. -

Encrypt \( gpk \) under \( epk \) as

.

. -

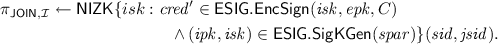

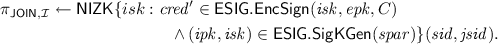

Prove that C is an encryption of a public key \( gpk \) that is correctly derived from the TPM public key share \( tpk \):

-

Append \((\mathcal {H} _j, epk , C, \pi _{\mathsf {JOIN}, \mathcal {H}})\) to the message \(\mathcal {M} _i\) is sending to \(\mathcal {I} \) over \(\mathcal {F}_\mathsf {auth*}\) and store \(( sid , jsid , \mathcal {M} _i, esk , hsk , gpk )\).

-

-

(c)

\(\mathcal {I}\), upon receiving \( tpk \) authenticated by \(\mathcal {M} _i\) and \((\mathcal {H} _j, epk , C, \pi _{\mathsf {JOIN}, \mathcal {H}})\) in the unauthenticated part, verifies that the request is legitimate:

-

Verify \(\pi _{\mathsf {JOIN}, \mathcal {H}}\) w.r.t. the authenticated \( tpk \) and check that \(\mathcal {M} _i \notin \mathcal {L}_\mathsf {JOINED}\).

-

Store \(( sid , jsid , \mathcal {H} _j, \mathcal {M} _i, epk , C)\) and output \((\mathsf {JOINPROCEED}, sid , jsid , \mathcal {M} _i)\).

-

4. \(\mathcal {I} \) -Join Proceed. The join session is completed when the issuer receives an explicit input telling him to proceed with join session \( jsid \).

-

(a)