Abstract

In urban public transport, the traffic flow prediction is a classical non-linear complicated optimization problem, which is very important for public transport system. With the rapid development of the big data, Smart card data of bus which is provided by millions of passengers traveling by bus across several days plays a more and more important role in our daily life. The issue that we address is whether the data mining algorithm and the intelligent optimization algorithm can be applied to forecast the traffic flow from big data of bus. In this paper, a novel algorithm which called mixed support vector regression with sub-space orthogonal pigeon-Inspired Optimization (SOPIO-MSVR) is used to predict the traffic flow and optimize the algorithm progress. Results show the SOPIO-MSVR algorithm outperforms other algorithms by a margin and is a competitive algorithm. And the research can make the significant contribution to the improvement of the transportation.

Similar content being viewed by others

Keywords

1 Introduction

In public transportation area, advanced public transportation collection systems are applied to collect revenue. At the same time, they also generate huge amounts of data which is stored in the database. The data is very useful not only for individual travels but also for government agencies [1]. There are still many challenges using data for transportation analysis, such as a crucial need for data fusing approaches combining data with different features, structures and resolutions, data processing and mining techniques and a suite of schemes based on cyber and physical space data [2].

The public transportation card has become the preferred payment method of the city’s transportation. The potential it holds focuses on these aspects: traffic route prediction [4], travel patterns mining [5], visual exploration of urban data [6], traffic flow prediction [7], passenger demand analysis [8] and a few of many examples based on its comprehensive categories and new applications. Traffic flow prediction is a universal problem that has aroused widespread attention. Although many models and algorithms have been applied to predict the traffic flow, simple traffic models and results somewhat don’t meet the requirements.

The booming demand for big data provides new methods of traffic flow. We hope to utilize more suitable models and more efficient algorithms by means of such rich amount of algorithms. The approaches to traffic flow prediction now are divided into three categories: parametric methods, nonparametric methods and simulations. Parametric methods consist of time-series models and Kalman filtering models, etc. The time-series models mainly indicate significant ARIMA (0,1,1) [9] and its improved models such as KARIMA [10], ARIMAX [11], SARIMA [12] models. These models have requests of estimation about a large number of parameters in multivariate space [13].

Because of stochastic and nonlinear traffic flow data, the research focuses more on nonparametric methods. The nonparametric methods consist of nonparametric regressions, Neural networks and support vector machine, etc. Davis et al. presented KNN methods for short-term traffic flow prediction [14]. Sun used a Bayesian network to forecast traffic flow [15]. And an example in supervised weighting-online learning algorithm was presented in [16] for prediction. Optimized neural networks combining with genetic approach was applied by Vlahogianni [16] to predict traffic flow under typical and untypical conditions. Zhong et al. put forward Hybrid models which they called designed regression and time delay neural network models [17]. A computational intelligence-based approach in the article [18] performed well on the effect of target values. Simulation approaches need to develop new tools to predict traffic flows.

Many research on the comparison of pros and cons about flow prediction models have been made. Chen et al. contrast the ARMA, ARIMA, SARIMA, SVR, Bayesian network, ANN, K-Nearest Neighbor, Naïve models, and pointed out different models are suitable for different time periods of the day [19]. Lippi et al. proposed that improved SVR models performed well than SARIMA models during the most congested periods [20]. Hinsbergen et al. did experiments with parametric and nonparametric models and results indicated that not one of the methods can be considered the best method in any situation [21]. More reviews can be found in [22,23,24,25].

SVM models are a type of machine learning models that can be utilized to predict the values in different fields and have effect on the accuracy of prediction [26, 27]. Due to intrinsic multi-input nature, SVM is favored among the multivariate or time-spatial models. Although SVM has drawn public attention from the research community around the world, some studies such as optimal design still deserve to be discovered and supplemented [28]. Nonparametric SVM algorithms had difficulty on parameters selection. And how to prevent local convergence when adjusting parameter is significant to some degree. Biological inspired algorithms are the valuable solution on complex optimization problems and local convergence problems. Pigeon inspired optimization was first proposed by Duan et al. and is a novel swarm intelligence algorithm on the basis of the movement of the flock of pigeons [29], the algorithm is used for Image restoration [30], three-dimensional path planning [31], Target Assignment [32], model prediction control [33]. The parameters according to pigeon inspired optimization (PIO) technology was used correctly for traffic flow prediction about travel logs (big data) of Guangzhou to serve as an input of the kernel optimal parameters in the SVM training. And comparative algorithms are conducted to present the relative dominance of the new algorithm. Solving the prediction problem need the better mathematic model and also better algorithm model.

In this paper, we solve the following problems, which are implicitly related:

-

(1)

Can we improve the performance of the algorithm model and heighten precision of prediction using modified algorithm model?

-

(2)

How should we deal with big data and do feature extraction by the tools such as SQL, Highcharts?

To answer these questions, we first introduce the algorithm model which called SOPIO-MSVM, PSO-SVM, DT-SVM, GA-SVM, ARMA. Next, we process the original data and research on the variation ruler during different time horizons. Finally, we evaluate the performance of different mathematic models and algorithm models and select the best mathematic model and algorithm model.

The structure of this paper is organized as follows. Section 1 introduces the research status of the short-term traffic flow prediction. Section 2 presents the basic method - SOPIO-SVM algorithm for traffic flow forecasting. Section 3 shows the experimental results with real data to verify the performance of the algorithm. Concluding remarks and future research opportunities are described in Sect. 4.

2 Theory of the Mathematic Model and New Algorithm Model

Principle of Basic PIO

The pigeons can find their ways back home via three methods: (1) The earth’s magnetic field. (2) The sun. (3) The landmark. And using above tools, they can select the best strategies and reach the destination through the optimal route. A novel algorithm called PIO was put forward by imitating and studying the homing pigeon’s behavior. There are two important operators in the algorithm: one is map and compass operator which is used to adjust the direction based on the magnetic field and the sun, and the other is landmark operator which is defined according to the quantitative values of the landmark (Fig. 2).

Map and Compass Operator

In the map and compass operator, Variate \( X_{i} \) is the pigeon \( i \) position, Variate \( V_{i} \) is the pigeon \( i \) velocity and \( D \) is the dimension of the search space about the pigeon \( i \). Variance \( X_{g} \) denotes the current global best position which could be obtained by comparing all the positions among all the pigeons. Variance \( R \) denotes the extent to which the velocity on next generation will vary with the former generation and is called the map and compass factor [28, 29].

The relationship between the parameters shows in Eqs. (1)–(2)

Landmark Operator

In the landmark operator, we let \( X_{c} (t) \) denote the center of pigeon’s position, \( X_{i} (t) \) denotes the position of pigeon I at the \( t \) th iteration and \( X_{c} (t) \) denotes the center position at the \( t \) th iteration. We present the strategy that half of these pigeons which are badly-behaved will be discarded and other pigeons which are unfamiliar the landmarks in the remaining pigeons will go after the nearest pigeons in the circle. In our definition, we use the formula (3)–(5) to state the behavior of the pigeons.

where \( fitness \) is the quality of the pigeon individual. For the minimum optimization problems, we can choose \( fitness(X_{i} (t)) = \frac{1}{{f(X_{i} (t)) + \varepsilon }} \) for maximum optimization problems [28].

Proposed Pigeon Inspiration Algorithm

Sub-space Division Orthogonal Design

The orthogonalization design can ensure population distribution within the feasible, and also can solve the problem of too much complexity. Let \( L_{M} (H^{n} ) \) be an orthogonal array of \( n \) factors and \( H \) levels, where \( L \) denotes a Latin square, \( H^{n} \) is the full size of the orthogonal array, and \( M \) is the number of combinations of levels. \( L_{M} (H^{n} ) \) is a matrix of numbers arranged in \( M \) rows and \( n \) columns, where each row represents a combination of levels, and each column represents a specific factor that can be changed [34].

The basic orthogonal design of space \( [l_{1} ,u_{1} ] \) about parameter a is presented in Table 1.

The scope of parameters about SVM is wide, so M is larger. It is obvious that more representative initial population which means that a higher value M is better. But \( M \) is limited by \( n \) and \( H \). The sub-space division orthogonal design is adopted to solve the problem. The sub-space division orthogonal design steps is as follows:

-

(1)

The feasible solution space \( [l,u] \) is divided into S subspaces \( [l_{1} ,u_{1} ],\,[l_{2} ,u_{2} ], \ldots \ldots ,\,[l_{s} ,u_{s} ] \);

-

(2)

Use orthogonal design showed in Table 1 to produce \( M \) individuals, and select best \( S_{size} \) bodies in every subspace \( [l_{i} ,u_{i} ](i = 1,2,3 \ldots s) \).

-

(3)

Choose the best S bodies from the \( S \times S_{size} \) [35].

Mixed Support Vector Machine

Support Vector Machine were put forward by Vladimir Vapnik and have been a statistical learning technique for regression and classification problems. The basic target of SVR is adopted a function which is shown as Eq. (6) to get predicted results \( f(x) \) by pre-determined input \( x \)

where \( w \) and \( b \) are the two parameters of SVR, which need to be optimized to fit the training dataset. \( \phi (x) \) is the nonlinear mapping about \( x \) to meet the requirement that the relationship of \( \phi (x) \) and \( y \) is linear when the relationship of \( x \) and \( y \) is nonlinear.

The goal of \( SVR \) is to minimize the expected risk \( R_{emp} \) which is defined in (7)–(8)

where \( L_{\varepsilon } \) is called \( \varepsilon \) -insensitive loss function by Vapnik.

By introducing Lagrange multipliers and converting the optimization problem into the problem, the function in (6) can be transformed into the following form:

where \( a_{i}^{*} ,a_{i} \) are the Lagrange multipliers that can be got by solving the dual problem and \( K(x_{i} ,x_{j} ) \) is the kernel function that equals the inner product of \( \phi (x_{i} ) \) and \( \phi (x_{j} ) \) [36].

The effects of the SVR algorithm model are strongly dependent on the SVM hyperparameters: the regularization factor \( C \), the hyperparameter \( \varepsilon \) that defines the \( \varepsilon \) – insensitive tube, \( \sigma \) that represents the kernel parameter about the RBF kernel. \( \zeta \), \( d \) that represents the kernel parameter about the PLOY kernel. From [33], we can see that PLOY kernel has better extrapolation abilities higher orders of degrees for good interpolation, but the RBF kernel has good interpolation abilities and fails to provide longer range extrapolation. Using mixtures of kernels can ensure having both good interpolation and extrapolation abilities. The basic convex combination of the two kernels \( K_{POLY} \) [37].

And \( K_{RBF} \) can be computed as (11):

Different kernels functions are described as follows:

The RBF function \( K_{RBF} \) is presented in (12)

The polynomial kernel \( K_{PLOY} \) is presented in (13)

where \( \sigma \), \( \xi \) and \( b \) are the parameters of description about the kernel’s behavior.

The fitness of the new SOPIO-SVM is defined as:

where \( \mathop X\limits^{ \wedge } (k) \) is the prediction value and \( X(k) \) is the real value.

The SVR-based model has the smaller performance criterion than other models that the reason is the SVR-based model can solve the traffic flow prediction problem with the nonlinearity and wave property than some model. Thus, employing the SVR model to build models about the traffic flow forecasting is appropriate.

SOPIO-SVM Algorithm

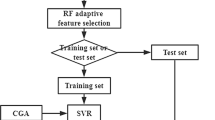

The calculation process of the new SOPIO-SVM is in Fig. 1.

The detailed procedure of SOPIO-SVM for traffic flow prediction showing in Fig. 3 is described as follows:

-

Step 1:

Set the parameters of the SOPIO algorithm model which include the dimension M and the range for values on every parameter like the input parameter \( C,\varepsilon ,\sigma ,\xi ,d \) of the mixed SVR, the population size N, the Map and compass operator iteration Nc1max, the landmark operator iteration Nc2max, Compass operator iteration factor R and the number of orthogonalization space M, the factor of every orthogonalization space H.

-

Step 2:

Generate an initial array according to the five parameters of the mixed SVR. And we choose that divided the array of the parameter into 5 sub-space. In every sub-space, we set M = 10 and then realize the orthogonal design. Finally, we choose the best values as the initial population.

-

Step 3:

Operate the map and compass operator. Calculate the velocity and update the position of each pigeon according to Eqs. (1)–(2). And the fitness is defined as the output of the MSVR. Put the initial population into MSVR to find the fitness of each pigeon and record the new best value. If Nc > Nc1max, go to step 5.

-

Step 4:

If Nc < Nc1max, renew the count of iteration with Nc = Nc + 1 and return to Step 3.

-

Step 5:

Execute the landmark operator. Calculate the fitness of each pigeon and rank the pigeons based on their fitness values. Half of the pigeons whose fitness values are worse will be abandoned. The other half is valuable for find the best pigeon. To expel influence of these better pigeon, we choose to continue to search between the range of activities about the half pigeons. So we find the center of the left half of the pigeons according to (3)–(4) as the temporary destination. The Remaining Pigeons will fly to the intermediate destination by adjusting their flying direction according to (5).

-

Step 6:

Rank the pigeons according to their new updated fitness values and find the best value. If Nc > Nc2max, go to Step 10. Otherwise, renew the count of iteration with Nc = Nc + 1 and go to Step 6, next the number remaining pigeon are one-fourth the initial number of the pigeon. With the method, the number of the remaining pigeon is \( \frac{1}{{2^{n} }}(n = 1,2,3 \ldots ) \) of the initial number of the pigeon.

-

Step 7:

Stop SOPIO and train SVM with the optimal parameters.

-

Step 8:

Predict the flow of passengers and output the result. By analyzing the error, we find the best algorithm model and mathematic model.

3 Experiments

Data Description

Compared with data from other industries, there are a few features on bus IC card data: a lot of dirty data, obvious nonlinear trend on traffic flow in weekends, workdays and holidays, difference with the significance of geographical position, road structure and weather. The row data collected is on the weekdays of the last five months of the year 2014 in Guangzhou, Guangdong province. The everyday traffic data is from 10 am to 12 pm.

Index of Performance

By analyzing the mathematical statistics theory, this paper concludes three characteristic indexes as the criterions to appraise the performance of the mathematic and improved algorithm which are measures of the deviation between actual and predicted values. Root Mean Square Error (RMSE) and Mean Square Error which evaluate the absolute error and Mean relative error (MRE) which is the performance criteria of the relative error. The smaller values of these of these indexes, the more accurate are the result of the traffic flow prediction. The calculation formulas of these indexes are shown as Eqs. (14)–(16)

where \( \mathop {m_{i} }\limits^{ \wedge } \) is the predicted value of the traffic flow, and \( m_{i} \) is the experimented value of the Traffic flow. \( n \) represents the total number of the traffic data.

Experimental Environment

Original data volume has reached 4.5 G and we store data in a Mysql database. Data statistics have been carried out in SQL programming language. The visualization chart is conducted with Highcharts in Chrome Chrome. Numerical experiments and proposed algorithm have been implemented in Matlab programming language with a 1.8 GHz Core (TM) 4 CPU personal computer (PC) and 8.0 G memory under Microsoft Window7. We adopt the static forecasting method to prevent the deviation propagating forward, which means that the forecasting value of the traffic flow at \( t + 1 \) will use the real value at \( t \) instead of the forecasting value at \( t \).

Results

The algorithm model needs to be compared with other algorithm models to show its good performance. But previous research focuses on less on the data handling techniques and have different data structures and data features. It is impossible to have a direct comparison on the results. Therefore, this paper uses the sample data operating on the existing methods. Figure 3 presents the output of the algorithm aiming at the former three models for the traffic flow prediction of the route 10 and also it has a comparison on the model predicted effect from the three characteristic indexes RMSE, MAE, MRE. Table show all the three error indexes: RMSE, MAE, MRE, can be used independently to evaluate a prediction model. To test the superiority of the SOPIO MSVM, PSO-SVM, BP Neural Network, GD-SVM, GA-SVM, PIO-SVM, ARMA algorithm is also apply to evaluate the advantages and disadvantages of these algorithms. In building these six modes, the \( MSE \) (Mean Squared Error) is used as the fitness function for these six algorithms. To reduce the influence of the parameter setting, the parameters of the PSO, DT, GA, SOPIO which are applied to optimize the parameters of the MSVR model should be treated as the same criteria, excepting the parameter is required to be determined specially.

The range of five parameters in the MSVR model are set as \( C \in [0.01,100],\,\varepsilon \in [0.01,1],\sigma \in [0.01,1],\zeta \in [0.01,10],d \in [0.01,1] \). The population size \( pop\_size \) is 30, The crossover validation fold is 5. The maximum iterations are defined as 300. Considering the randomness of the intelligent optimization algorithm, the former six algorithms are adopted to optimize the parameters of the mixed support vector machine for 10 times random, the prediction results are described and the best fitness is shown as Fig. 4 based on last 100 h’s test data.

From Table we can see that:

-

(1)

Regular passenger: The performance of the SOPIO-SVR algorithm model are better than other four algorithms on the same condition. Also, it shows that the parameters resulting from PIO algorithm model make the parameter searching about SVR easier and gets the best forecasting result. Therefore, the SOPIO is more appropriate to search the parameter of SVR.

-

(2)

Medium passenger: compared with other three models, both SOPIO-SVR and BP has a smaller error with the traffic rate. Among these two models, the BP algorithm model is more effective and promising for traffic flow forecasting.

-

(3)

Random passenger: The prediction accuracy about SOPIO-SVR is promising comparable with the reported results. According to this statistic, the SVR with the mixed kernel is the best model for estimating the traffic flow.

The all traffic flow put the three passenger’s traffic flow as an input of the BP neural network. And the result indicates that the synthesize is better than tradition model. And the RMSE, MAE, MRE of the new model is more Smaller than the traditional model (Table 2).

4 Conclusion

We propose a new SOPIO-SVM algorithm with a passenger classification prediction model for traffic flow forecasting. Unlike the previous methods that consider the overall traffic flow, we adopt the different algorithm for a different type of passenger (regular passenger, medium passenger, random passenger) and put three results of the different type of passengers into BP neural network to get the final result. Then we evaluated the effect on the results about three models and compare prediction results with the BP, PSO-SVM. GT-SVM, GA-SVM algorithm, and the results obtained in this paper reveal that the mathematic model and proposed algorithm are a valid alternative and superior to other mathematic models and algorithms under some circumstances.

For future work, it would be useful to search more appropriate methods to classify the passenger into different types and more appropriate parameter combination for SVR model. Also, we would try to apply the mathematic model and algorithm on different traffic data sets to validate the good or bad.

To sum up the conclusions we conducted as follows:

-

(1)

Through the comparison with other the usual algorithms, the propose SOPIO-MSVR algorithm model is confirmed to have good performance on the traffic flow prediction.

-

(2)

To find a proper pigeon inspired optimization for mixed support vector regression, the paper proposed sub-space division orthogonal design strategies to initialize the parameters as the basic PIO algorithm input which we called SOPIO-MSVM algorithm.

References

Pelletier, M.P., Trépanier, M., Morency, C.: Smart card data use in public transit: a literature review. Transp. Res. Part C: Emerging Technol. 19(4), 557–568 (2011)

Zheng, X., Chen, W., Wang, P., et al.: Big data for social transportation. IEEE Trans. Intell. Transp. Syst. 17(3), 620–630 (2016)

Min, Y.H., Ko, S.J., Kim, K.M., et al.: Mining missing train logs from Smart Card data. Transp. Res. Part C: Emerging Technol. 63, 170–181 (2016)

Dimond, M., Smith, G., Goulding, J.: Improving route prediction through user journey detection. In: Proceedings of the 21st ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, pp. 476–479. ACM (2013)

Ma, X., Wu, Y.J., Wang, Y., et al.: Mining smart card data for transit riders’ travel patterns. Transp. Res. Part C: Emerging Technol. 36, 1–12 (2013)

Ferreira, N., Poco, J., Vo, H.T., et al.: Visual exploration of big spatio-temporal urban data: a study of New York city taxi trips. IEEE Trans. Vis. Comput. Graph. 19(12), 2149–2158 (2013)

Jin, F., Sun, S.: Neural network multitask learning for traffic flow forecasting. In: 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), pp. 1897–1901. IEEE (2008)

Ma, Z., Xing, J., Mesbah, M., et al.: Predicting short-term bus passenger demand using a pattern hybrid approach. Transp. Res. Part C: Emerging Technol. 39, 148–163 (2014)

Levin, M., Tsao, Y.-D.: On forecasting freeway occupancies and volumes. Transp. Res. Rec. 773, 47–49 (1980)

van der Voort, M., Dougherty, M., Watson, S.: Combining Kohonen maps with ARIMA time series models to forecast traffic flow. Transp. Res. C Emerging Technol. 4(5), 307–318 (1996)

Williams, B.M.: Multivariate vehicular traffic flow prediction—evaluation of ARIMAX modeling. Transp. Res. Rec. 1776, 194–200 (2001)

Williams, B.M., Hoel, L.A.: Modeling and forecasting vehicular traffic flow as a seasonal ARIMA process: theoretical basis and empirical results. J. Transp. Eng. 129(6), 664–672 (2003)

Ghosh, B., Basu, B., O’Mahony, M.: Multivariate short-term traffic flow forecasting using time-series analysis. IEEE Trans. Intell. Transp. Syst. 10(2), 246–254 (2009)

Davis, G.A., Nihan, N.L.: Nonparametric regression and short-term reeway traffic forecasting. J. Transp. Eng. 117(2), 178–188 (1991)

Sun, S., Zhang, C., Guoqiang, Y.: A Bayesian network approach to traffic flow forecasting. IEEE Intell. Transp. Syst. Mag. 7(1), 124–132 (2006)

Jeong, Y.S., Byon, Y.J., Castro-Neto, M.M., Easa, S.M.: Supervised weighting-online learning algorithm for short-term traffic flow prediction. IEEE Trans. Intell. Transp. Syst. 14(4), 1700–1707 (2013)

Zhong, M., Sharma, S., Lingras, P.: Short-term traffic prediction on different types of roads with genetically designed regression and time delay neural network models. J. Comput. Civil Eng. 19(1), 94–103 (2005)

Zargari, S.A., Siabil, S.Z., Alavi, A.H., Gandomi, A.H.: A computational intelligence-based approach for short-term traffic flow prediction. Expert Syst. 29(2), 124–142 (2012)

Chen, C., Wang, Y., Li, L., Hu, J., Zhang, Z.: The retrieval of intra-day trend and its influence on traffic prediction. Transp. Res. C Emerging Technol. 22, 103–118 (2012)

Lippi, M., Bertini, M., Frasconi, P.: Short-term traffic flow forecasting: an experimental comparison of time-series analysis and supervised Dlearning. IEEE Trans. Intell. Transp. Syst. 14(2), 871–882 (2013)

Van Hinsbergen, C.P., Van Lint, J.W., Sanders, F.M.: Short term traffic prediction models. Presented at the ITS World Congress, Beijing, China (2007)

Karlaftis, M.G., Vlahogianni, E.I.: Statistical methods versus neural networks in transportation research: differences, similarities and some insights. Transp. Res. Part C: Emerging Technol. 19(3), 387–399 (2011)

Huang, W., Song, G., Hong, H., et al.: Deep architecture for traffic flow prediction: deep belief networks with multitask learning. IEEE Trans. Intell. Transp. Syst. 15(5), 2191–2201 (2014)

Castro-Neto, M., Jeong, Y.-S., Jeong, M.-K., Han, L.D.: Online-SVR for short-term traffic flow prediction under typical and atypical traffic conditions. Expert Syst. Appl. 36(3), 6164–6173 (2009)

Sun, S.: Traffic flow forecasting based on multitask ensemble learning. In: Proceedings of the 1st ACM/SIGEVO Summit Genetic Evolutionary Computation, pp. 961–964 (2009)

Cortes, C., Vapnik, V.: Support-vector networks. Mach. Learn. 20(3), 273–297 (1995)

Meyer, D., Wien, F.H.T.: Support vector machines. The Interface to libsvm in package e1071 (2015)

Nieto, P.J.G., Garcia-Gonzalo, E., Lasheras, F.S., et al.: Hybrid PSO–SVM-based method for forecasting of the remaining useful life for aircraft engines and evaluation of its reliability. Reliab. Eng. Syst. Saf. 138, 219–231 (2015)

Duan, H., Qiao, P.: Pigeon-inspired optimization: a new swarm intelligence optimizer for air robot path planning. Int. J. Intell. Comput. Cybern. 7(1), 24–37 (2014)

Duan, H., Wang, X.: Echo state networks with orthogonal pigeon-inspired optimization for image restoration. IEEE Trans. Neural Netw. Learn. Syst. 27, 2413–2425 (2016). doi:10.1109/TNNLS.2015.2479117

Zhang, B., Duan, H.: Three-dimensional path planning for uninhabited combat aerial vehicle based on predator-prey pigeon-inspired optimization in dynamic environment. IEEE/ACM Trans. Comput. Biol. Bioinform. 14, 97–107 (2016). doi:10.1109/TCBB.2015.2443789

Li, C., Duan, H.: Target detection approach for UAVs via improved pigeon-inspired optimization and edge potential function. Aerosp. Sci. Technol. 39, 352–360 (2014)

Dou, R., Duan, H.: Pigeon inspired optimization approach to model prediction control for unmanned air vehicle. Aircr. Eng. Aerosp. Technol. 88(1), 108–116 (2016)

Wang, Y., Liu, H., Cai, Z., et al.: An orthogonal design based constrained evolutionary optimization algorithm. Eng. Optim. 39(6), 715–736 (2007)

Li, M.W., Han, D.F., Wang, W.: Vessel traffic flow forecasting by RSVR with chaotic cloud simulated annealing genetic algorithm and KPCA. Neurocomputing 157, 243–255 (2015)

Zhang, Y., Zhang, Y., Haghani, A.: A hybrid short-term traffic flow forecasting method based on spectral analysis and statistical volatility model. Transp. Res. Part C: Emerging Technol. 43, 65–78 (2014)

Smits, G.F., Jordaan, E.M.: Improved SVM regression using mixtures of kernels. In: Proceedings of the 2002 International Joint Conference on Neural Networks, IJCNN 2002, vol. 3, pp. 2785–2790. IEEE (2002)

Acknowledgments

The research is supported by the NSFC (National Science Foundation of China) Projects (No. 61572057) in China, the National High-Tech Research and Development Plan of China under Grant No. 2015AA042101.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Cheng, X., Ren, L., Cui, J., Zhang, Z. (2017). Traffic Flow Prediction with Improved SOPIO-SVR Algorithm. In: Zhang, L., Ren, L., Kordon, F. (eds) Challenges and Opportunity with Big Data. Monterey Workshop 2016. Lecture Notes in Computer Science(), vol 10228. Springer, Cham. https://doi.org/10.1007/978-3-319-61994-1_17

Download citation

DOI: https://doi.org/10.1007/978-3-319-61994-1_17

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-61993-4

Online ISBN: 978-3-319-61994-1

eBook Packages: Computer ScienceComputer Science (R0)