Abstract

This short paper addresses the question of whether practitioners attach different importance to requirements for the cognitive effectiveness of a visual notation depending on whether that notation is used with modeling experts (i.e., developers, modelers) or novices (i.e., business stakeholders, end-users). Analyzing data from an ongoing empirical study, we show that the perceived importance of some requirements differs between expert and novice use, but that these differences are difficult to assess meaningfully without further in-depth qualitative work.


1 Introduction

It is important that the visual notations of conceptual models used in development processes communicate their intended meaning effectively and correctly, that is, that they are cognitively effective [2]. Given the multitude of stakeholders involved in a typical information system development process, many of them will likely have little to no modeling expertise. This leads to an expert–novice distinction that should be kept in mind when creating diagrams to be shown to such stakeholders [6].

This expert–novice distinction has been widely noted in the literature [4], as has the positive effect of training on correctly grasping the information contained in diagrams [1]. Theory on cognitively effective design of visual notations holds that representations ought to be tailored to different users and media [5], noting in particular the following expert–novice differences:

- Novices have more difficulty discriminating between symbols.
- Novices are more affected by complexity as they lack “chunking” strategies.
- Novices have to consciously remember what symbols mean.

This paper addresses two research questions: (i) do modeling experts weigh requirements for cognitively effective design differently if models are intended for use with non-experts? And, if so, (ii) do these differences reflect the expert–novice differences noted by [5]?

2 Empirical Study

The data used here come from an ongoing study into the requirements that practitioners who use conceptual modeling techniques in their daily practice have for visual notations [3]. The study uses a questionnaire distributed via LinkedIn professional groups, targeting practitioners working in relevant fields, e.g., software engineering/architecture, requirements engineering, enterprise engineering/architecture, and business analysis.

The questionnaire consists of three parts: (i) demographic data, (ii) qualitative elicitation of modeling purpose and foci, and (iii) quantitative weighting of requirements for cognitively effective visual notations. Here we focus on part (iii). To assess whether modeling experts weigh requirements (as given by the Physics of Notations [5]) differently when the notation is used with modeling experts or with novices, we posed the following question and then had participants rate each requirement on a 5-point Likert scale ranging from “not important at all” to “very important”: “Suppose that for your modeling efforts you would be able to have an ideal visual notation, suited especially to your purposes. You would be using this notation only among fellow modeling experts. On a scale of 1 to 5, how important would the following requirements be for this notation? It should...”

- have a 1:1 correspondence between semantic constructs and graphical symbols (semiotic clarity, SemCla)
- clearly distinguish between different symbols (perceptual discriminability, PerDis)
- use visual representations whose appearance suggests their meaning (semantic transparency, SemTra)
- have explicit mechanisms for dealing with complexity (complexity management, CogMan)
- have explicit mechanisms to support integration of information from different diagrams (cognitive integration, CogInt)
- use the full range and capacity of visual variables such as shape, color, size, etc. (visual expressiveness, VisExp)
- use text to complement graphics (dual coding, DuaCod)
- have no more than a cognitively manageable number of different graphical symbols (graphic economy, GraEco)
- use different visual dialects for different tasks and audiences (cognitive fit, CogFit)

After rating all items, participants were asked: “are there any requirements you feel are not covered by the ones you just saw, specific to the use of a visual notation among fellow modeling experts?” We then repeated the rating with the same items, but framed the question for use with modeling novices by noting: “You would be using this notation also with other stakeholders that have no expertise in modeling, such as business experts or end-users.”

3 Findings

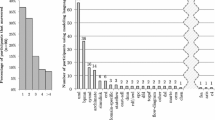

The findings are based on an initial sample of 84 participants. Fig. 1 shows the distribution of scores for each requirement, divided into use of models with (a) experts and (b) novices.

At first glance, there seem to be differences in how the participating modeling experts weigh these requirements for the two audiences. To compare the two sets of responses, Fig. 2 shows the median score for each requirement. The medians for models used with modeling experts (XP) and with modeling novices (nXP) differ for only three principles, and then only slightly (by 0.5 to 1 point): (i) semantic transparency, (ii) dual coding, and (iii) graphic economy.
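The per-requirement median comparison can be sketched in a few lines of Python. This is a minimal illustration, assuming each requirement's answers are stored as one list per audience in participant order; the dictionary layout, the function name `median_shift`, and all ratings are hypothetical toy values, not the study's data.

```python
from statistics import median

# Hypothetical paired ratings on the 1-5 Likert scale (NOT the study's data).
# Index i in each list is participant i's answer for that audience.
ratings = {
    "SemTra": {"XP": [4, 4, 3, 5, 4, 3], "nXP": [5, 4, 4, 5, 5, 4]},
    "PerDis": {"XP": [5, 4, 4, 5, 4, 5], "nXP": [5, 4, 5, 5, 4, 4]},
}


def median_shift(ratings):
    """Map each requirement to (median XP, median nXP, nXP minus XP)."""
    result = {}
    for req, by_audience in ratings.items():
        xp = median(by_audience["XP"])
        nxp = median(by_audience["nXP"])
        result[req] = (xp, nxp, nxp - xp)
    return result


for req, (xp, nxp, delta) in median_shift(ratings).items():
    print(f"{req}: XP={xp} nXP={nxp} difference={delta}")
```

With an even number of answers, `statistics.median` averages the two middle values, so a median can fall halfway between two scale points; this is how median differences of 0.5 can arise on an integer 1 to 5 scale.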

To check more rigorously whether the expert and non-expert answers differ, we applied a Wilcoxon signed-rank test to each requirement's pair of ratings. Four principles showed a significant difference (\(p<0.05\)): semantic transparency (\(p=0.0054\)), visual expressiveness (\(p=0.0477\)), graphic economy (\(p=0.04036\)), and cognitive fit (\(p=0.00142\)). However, to interpret these findings, we need to look at the actual distributions of answers for these requirements, shown in Fig. 3.
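The paper does not state which variant of the Wilcoxon signed-rank test or which software was used. The following is a minimal, dependency-free sketch of a two-sided test on one requirement's paired expert/novice ratings, assuming zero differences are dropped, tied absolute differences receive average ranks, and the p-value comes from the normal approximation with tie-corrected variance. The function name `wilcoxon_signed_rank` and the ratings are hypothetical. Library implementations such as `scipy.stats.wilcoxon` may use an exact null distribution for small samples, so their p-values can differ somewhat.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test for paired ratings x[i], y[i].

    Assumed variant (the paper does not specify one): zero differences are
    dropped, tied absolute differences receive average ranks, and the p-value
    comes from the normal approximation with tie-corrected variance and no
    continuity correction. Returns (W_plus, p_value).
    """
    diffs = [b - a for a, b in zip(x, y) if b != a]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0  # no non-zero differences: no evidence of a shift

    # Rank the absolute differences, averaging ranks within tied groups.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    sorted_abs = [abs(diffs[i]) for i in order]
    ranks = [0.0] * n
    tie_term = 0  # sum of (t^3 - t) over tie groups of size t
    start = 0
    while start < n:
        end = start
        while end + 1 < n and sorted_abs[end + 1] == sorted_abs[start]:
            end += 1
        avg_rank = (start + end) / 2 + 1  # ranks are 1-based
        for k in range(start, end + 1):
            ranks[order[k]] = avg_rank
        size = end - start + 1
        tie_term += size ** 3 - size
        start = end + 1

    # W+ is the sum of ranks of positive differences (y rated higher than x).
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w_plus, p_value


# Hypothetical paired ratings of one requirement (NOT the study's data).
xp = [4, 4, 3, 5, 4, 3, 4, 3, 4, 4]
nxp = [5, 4, 4, 5, 5, 4, 5, 4, 4, 5]
w, p = wilcoxon_signed_rank(xp, nxp)
print(f"W+ = {w}, p = {p:.4f}")
```

Because Likert ratings produce many ties and zero differences, how these are handled is a genuine design choice that can shift the resulting p-value.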

As Fig. 3 shows, although the distributions of responses differ, it is debatable whether they do so meaningfully. For example, semantic transparency, the use of visual representations whose appearance suggests their meaning, is considered important by \(\approx \)70% of participants when the notation is used only among modeling experts. This rises to \(\approx \)85% when it is used with modeling novices. Whether a difference of 15 percentage points is large enough to answer research question (i) (do modeling experts weigh requirements for cognitively effective design differently if models are intended for use with non-experts?) affirmatively requires further consideration, including qualitative research to assess how important practitioners perceive such differences to be.
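The paper does not define when a requirement counts as “considered important”. A minimal sketch, assuming a rating of 4 or 5 counts as important, computes that share per audience and contrasts the absolute change in percentage points with the relative change in percent. A shift from about 70% to about 85% is an absolute change of 15 percentage points. The function name `share_important` and the ratings are hypothetical.

```python
def share_important(answers, threshold=4):
    """Fraction of Likert answers at or above `threshold` (assumed: 4 or 5)."""
    return sum(1 for a in answers if a >= threshold) / len(answers)


# Hypothetical ratings of one requirement (NOT the study's data).
xp = [4, 5, 3, 4, 2, 5, 4, 3, 4, 5]
nxp = [5, 5, 4, 4, 3, 5, 4, 4, 4, 5]

xp_share = share_important(xp)    # 7 of 10 answers are 4 or 5
nxp_share = share_important(nxp)  # 9 of 10 answers are 4 or 5
points = 100 * (nxp_share - xp_share)                # absolute change, percentage points
relative = 100 * (nxp_share - xp_share) / xp_share   # relative change, percent
print(f"{100 * xp_share:.0f}% -> {100 * nxp_share:.0f}%: "
      f"{points:.0f} percentage points, {relative:.1f}% relative increase")
```

The same shift can therefore be reported as 20 percentage points or as a relative increase of roughly 29%. Stating which one is meant avoids overstating or understating the difference.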

For research question (ii) (do these differences reflect the expert–novice differences noted by [5]?), the data both hint at an affirmative answer and partly contradict it. The requirements in Fig. 3 are linked to the expert/novice distinction: according to [5], optimizing a visual ‘dialect’ for, e.g., novice users (cognitive fit) involves restricting the size of the visual vocabulary (graphic economy), ensuring that graphical symbols suggest their meaning (semantic transparency), and using visually expressive symbols (visual expressiveness). However, the importance of managing complexity, noted by [5] because novices lack chunking strategies, is not clearly reflected in the data, although the difference between expert and novice results for this requirement was just above the threshold of statistical significance (\(p=0.06148\)).

4 Thoughts

Looking purely at the quantitative data from this study so far, there does not seem to be a clearly meaningful difference in the perceived importance of the requirements depending on the intended audience. As noted, analysis of the qualitative data is likely required to fully assess the significance and meaning of these results. For example, when we asked participants to elaborate on any missed requirements, several responses re-emphasized what is most important to them. These responses, although individual, paint a different picture than the quantitative analysis above. For example, one participant noted:

“I cannot do the formal models without ‘artist impressions’ or rich pictures tailored to specific stakeholders or stakeholder groups, even fellow modeling insiders/experts.”

This can be interpreted in at least two ways. First, that models should indeed be designed differently depending on the stakeholder group they are used with, or on the stage of development, in line with the need to accommodate expert–novice differences in visual notation design. Second, that different representations altogether should be used for, e.g., requirements elicitation and stakeholder alignment before any information is captured in conceptual models. This would remove, or at least severely reduce, the need for expert–novice differences in visual representation, as the conceptual models would no longer be used with stakeholders who are modeling novices.

On the other hand, another participant also noted the importance of tailoring visual representations to different stakeholders, but related it specifically to modeling languages:

“Highlight how important is to have flexibility to communicate to several audiences perhaps incorporating a more complex visual design. The simplicity of the visual design of UML could be perfect for a software engineer but very cold for a Business User.”

Here, we find more of a hint towards the need for meaningful variability in the visual representation within modeling languages, not for different kinds of representations altogether.

5 Concluding Outlook

This short paper discussed the importance, as perceived by modeling practitioners, of different requirements for cognitively effective visual notation design. We noted that, while the quantitative findings show no clear link between the perceived importance of these requirements and the expert/non-expert distinction, the qualitative findings of the same study can be used to contextualize them more meaningfully.

Thus, in further work on this study we will assess the outcomes of the quantitative data specifically in the context of the findings from the qualitative data. In particular, it seems that to get clear answers on whether modeling languages ought to support variability or differentiation for the expert–novice distinction, we need to incorporate more qualitative studies, such as in-depth interviews with selected practitioners.

A possible limitation is that the limited differentiation in the scores of some requirements may reflect their perhaps ambiguous descriptions and generally counter-intuitive nature. For example, while most people will intuitively understand what it means that a graphical symbol suggests its meaning, or that adding more symbols leads to more complexity, requirements such as ‘have explicit mechanisms to support integration of information from different diagrams’ are less easy to understand at first glance. However, to elicit a large dataset from practitioners, the average answering time for the questionnaire must be kept low, which makes it difficult to present more details or examples.

References

[1] DeSanctis, G., Jarvenpaa, S.L.: Graphical presentation of accounting data for financial forecasting: an experimental investigation. Acc. Organ. Soc. 14(5–6), 509–525 (1989)

[2] Larkin, J.H., Simon, H.A.: Why a diagram is (sometimes) worth ten thousand words. Cogn. Sci. 11(1), 65–100 (1987)

[3] Linden, D., Hadar, I.: User involvement in applications of the PoN. In: Krogstie, J., Mouratidis, H., Su, J. (eds.) CAiSE 2016. LNBIP, vol. 249, pp. 109–115. Springer, Cham (2016). doi:10.1007/978-3-319-39564-7_11

[4] Lohse, G.L., Biolsi, K., Walker, N., Rueter, H.H.: A classification of visual representations. Commun. ACM 37(12), 36–50 (1994)

[5] Moody, D.L.: The “physics” of notations: toward a scientific basis for constructing visual notations in software engineering. IEEE Trans. Software Eng. 35(6), 756–779 (2009)

[6] Narayanan, N.H., Hübscher, R.: Visual language theory: towards a human-computer interaction perspective. In: Marriott, K., Meyer, B. (eds.) Visual Language Theory, pp. 87–128. Springer, New York (1998)


Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

van der Linden, D. (2017). Using Visual Notations with Modeling Experts or Novices: What do the Experts Think?. In: Metzger, A., Persson, A. (eds) Advanced Information Systems Engineering Workshops. CAiSE 2017. Lecture Notes in Business Information Processing, vol 286. Springer, Cham. https://doi.org/10.1007/978-3-319-60048-2_5


DOI: https://doi.org/10.1007/978-3-319-60048-2_5


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-60047-5

Online ISBN: 978-3-319-60048-2

eBook Packages: Computer Science, Computer Science (R0)