Abstract

In this paper we show the advantage of modeling dependencies in supervised classification. The dependencies among variables in a multivariate data set can be linear or nonlinear. For this reason, it is important to consider flexible tools for modeling such dependencies. Copula functions are able to model different kinds of dependence structures. Several bivariate copulas are studied and applied to the classification of pixels. The results show that the performance of the classifiers improves when copula functions are used.

1 Introduction

Classification is an important task in Pattern Recognition. The goal in supervised classification is to assign a new object to a category based on its features [1]. In this setting, training data are used to model the distribution of the features in each class. In this work we propose the use of bivariate copula functions to design a probabilistic model. Copula functions allow us to model dependencies among the object features, including nonlinear ones.

By using copula theory, a joint distribution can be built from a copula function and, possibly, several different marginal distributions. Copula theory has been used for modeling multivariate distributions in unsupervised learning problems [3, 5, 9, 13] as well as in supervised classification [4, 6, 7, 10, 12, 14, 15]. For instance, in [4], a challenging classification problem is solved by means of copula functions and vine graphical models. However, all marginal distributions are modeled with Gaussian distributions, and the copula parameter is calculated by inverting Kendall’s tau. In [10, 15], simulated and real data are used to solve classification problems within the framework of copula theory; no graphical models are employed, and the marginal distributions are based on parametric models. In this paper, we employ flexible marginal distributions such as Gaussian kernels, and the copula parameter is estimated by the maximum likelihood method. Moreover, the proposed classifier takes into account the most important dependencies by means of a graphical model. The reader interested in applications of copula theory in supervised classification is referred to [6, 7, 12, 14].

The paper is organized as follows: Sect. 2 is a short introduction to copula functions, Sect. 3 presents a copula-based probabilistic model for classification, Sect. 4 presents the experimental setting for classifying the pixels of an image database, and Sect. 5 summarizes the results.

2 Copula Functions

Copula theory was introduced by Sklar [11] to separate the effect of dependence from the effect of the marginal distributions in a joint distribution. Although copula functions can model both linear and nonlinear dependencies, they have rarely been used in supervised classification, where nonlinear dependencies are common and need to be represented.

Definition 1

A copula function is a joint distribution function of standard uniform random variables. That is,

\[
C(u_{1},\ldots ,u_{d}) = P(U_{1}\le u_{1},\ldots ,U_{d}\le u_{d}),
\]

where \(U_{i} \sim U(0,1)\) for \(i=1,\ldots ,d.\)

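By Sklar’s theorem, any d-dimensional density f can be represented as

\[
f(x_{1},\ldots ,x_{d}) = c\left(F_{1}(x_{1}),\ldots ,F_{d}(x_{d})\right)\prod_{i=1}^{d} f_{i}(x_{i}), \tag{1}
\]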

where c is the density of the copula C, \(F_{i}(x_{i})\) is the marginal distribution function of the random variable \(X_{i}\), and \(f_{i}(x_{i})\) is the marginal density of \(X_{i}\). Equation (1) shows that the dependence structure is modeled by the copula function: any joint density factorizes into the product of a copula density and the marginal densities. This contrasts with the usual way of modeling multivariate distributions, in which the marginal distributions are usually restricted to be of the same type. This separation between the marginal distributions and the dependence structure explains the modeling flexibility of copula functions.
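As a quick numerical illustration of Eq. (1), the sketch below evaluates its right-hand side for d = 2 with a Gaussian copula and standard normal marginals, and compares the result with the standard bivariate normal density. The function names and the choice of the Gaussian family are assumptions made for illustration; only the Python standard library (`statistics.NormalDist`) is used.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal N(0, 1)


def gaussian_copula_density(u, v, rho):
    """Bivariate Gaussian copula density c(u, v; rho), with |rho| < 1."""
    x, y = STD_NORMAL.inv_cdf(u), STD_NORMAL.inv_cdf(v)
    q = (rho**2 * (x**2 + y**2) - 2 * rho * x * y) / (2 * (1 - rho**2))
    return math.exp(-q) / math.sqrt(1 - rho**2)


def bivariate_normal_density(x, y, rho):
    """Standard bivariate normal density with correlation rho."""
    q = (x**2 - 2 * rho * x * y + y**2) / (2 * (1 - rho**2))
    return math.exp(-q) / (2 * math.pi * math.sqrt(1 - rho**2))


def sklar_density(x, y, rho):
    """Right-hand side of Eq. (1) for d = 2: c(F1(x), F2(y)) * f1(x) * f2(y)."""
    u, v = STD_NORMAL.cdf(x), STD_NORMAL.cdf(y)
    return gaussian_copula_density(u, v, rho) * STD_NORMAL.pdf(x) * STD_NORMAL.pdf(y)


# Both columns should agree up to floating-point error.
for x, y, rho in [(0.3, -1.2, 0.6), (1.0, 0.5, -0.4)]:
    print(sklar_density(x, y, rho), bivariate_normal_density(x, y, rho))
```

The agreement is exact in theory: the copula density supplies the cross term and the marginal densities supply the remaining quadratic terms of the bivariate normal exponent.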

In this paper we use two-dimensional parametric copula functions to model the dependence structure of random variables associated by a joint distribution function. The densities of these copula functions are shown in Table 1. We consider the Farlie-Gumbel-Morgenstern (FGM) copula, an elliptical copula (Gaussian) and Archimedean copulas (Independent, Ali-Mikhail-Haq (AMH), Clayton, Frank, Gumbel). These copula functions have been chosen because they cover a wide range of dependencies. For instance, the AMH, Clayton, FGM, Frank and Gaussian copulas can model both negative and positive dependence between the marginals, whereas the Gumbel copula cannot model negative dependence. The AMH and FGM copulas are adequate for marginals with modest dependence. When dependence is strong between extreme values, the Clayton and Gumbel copulas can model lower (left) and upper (right) tail association, respectively. The Frank copula is appropriate for data that exhibit weak dependence between extreme values and strong dependence between centered values, while the Gaussian copula is adequate for data that exhibit weak dependence between centered values and strong dependence between extreme values. In particular, when the Gaussian copula is combined with standard Gaussian marginals, the joint probabilistic model is a multivariate normal distribution.
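For reference, the standard closed-form densities of the seven bivariate families can be coded as below. This is a sketch, not a transcription of Table 1: the parameterizations and ranges in the docstrings are the usual textbook ones and are assumptions here (for example, Clayton is restricted to θ > 0), so they should be checked against Table 1 before reuse.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def independent(u, v, theta=None):
    """Independence copula: c(u, v) = 1."""
    return 1.0


def fgm(u, v, theta):
    """Farlie-Gumbel-Morgenstern, theta in [-1, 1]."""
    return 1.0 + theta * (1.0 - 2.0 * u) * (1.0 - 2.0 * v)


def amh(u, v, theta):
    """Ali-Mikhail-Haq, theta in [-1, 1)."""
    d = 1.0 - theta * (1.0 - u) * (1.0 - v)
    num = 1.0 + theta * ((1.0 + u) * (1.0 + v) - 3.0) + theta**2 * (1.0 - u) * (1.0 - v)
    return num / d**3


def clayton(u, v, theta):
    """Clayton, restricted here to theta > 0 (lower-tail dependence)."""
    s = u ** -theta + v ** -theta - 1.0
    return (1.0 + theta) * (u * v) ** (-1.0 - theta) * s ** (-2.0 - 1.0 / theta)


def frank(u, v, theta):
    """Frank, theta != 0."""
    a = -math.expm1(-theta)  # 1 - exp(-theta)
    num = theta * a * math.exp(-theta * (u + v))
    den = a - math.expm1(-theta * u) * math.expm1(-theta * v)
    return num / den**2


def gumbel(u, v, theta):
    """Gumbel, theta >= 1 (upper-tail dependence; theta = 1 is independence)."""
    x, y = -math.log(u), -math.log(v)
    s = x**theta + y**theta
    cdf = math.exp(-s ** (1.0 / theta))  # the copula C(u, v) itself
    return (cdf / (u * v) * (x * y) ** (theta - 1.0)
            * s ** (-2.0 + 2.0 / theta) * (1.0 + (theta - 1.0) * s ** (-1.0 / theta)))


def gaussian(u, v, theta):
    """Gaussian, theta = correlation in (-1, 1)."""
    x, y = _STD_NORMAL.inv_cdf(u), _STD_NORMAL.inv_cdf(v)
    q = (theta**2 * (x**2 + y**2) - 2.0 * theta * x * y) / (2.0 * (1.0 - theta**2))
    return math.exp(-q) / math.sqrt(1.0 - theta**2)


COPULAS = {"Independent": independent, "FGM": fgm, "AMH": amh, "Clayton": clayton,
           "Frank": frank, "Gumbel": gumbel, "Gaussian": gaussian}

# Illustrative parameter values (hypothetical), evaluated at one point.
EXAMPLE_THETA = {"Independent": None, "FGM": 0.5, "AMH": 0.5, "Clayton": 2.0,
                 "Frank": 3.0, "Gumbel": 2.0, "Gaussian": 0.6}
for name, density in COPULAS.items():
    print(name, round(density(0.3, 0.7, EXAMPLE_THETA[name]), 4))
```

Comparing, for example, `clayton(0.05, 0.05, 2.0)` with `clayton(0.95, 0.95, 2.0)` shows the lower-tail concentration described above, and the reverse comparison for `gumbel` shows its upper-tail concentration.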

The dependence parameter \(\theta \) of a bivariate copula function can be estimated by the maximum likelihood (ML) method. To do so, the one-dimensional log-likelihood function

\[
\ell (\theta ) = \sum_{i=1}^{n} \log c\left({u_{1}}_{i},{u_{2}}_{i};\theta \right) \tag{2}
\]

is maximized. Assuming the marginal distributions are known, the pseudo copula observations \(\left\{ ({u_{1}}_{i},{u_{2}}_{i})\right\} _{i=1}^{n}\) in Eq. (2) are obtained by evaluating the marginal distribution functions of \(X_{1}\) and \(X_{2}\) at the data, that is, \({u_{1}}_{i}=F_{1}({x_{1}}_{i})\) and \({u_{2}}_{i}=F_{2}({x_{2}}_{i})\). The resulting ML estimate of \(\theta \) is denoted by \({\hat{\theta }}\). It has been shown in [16] that the ML estimator \({\hat{\theta }}\) has better properties than other estimators.
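A minimal sketch of the ML step in Eq. (2) for a single pair follows. It makes two assumptions that differ from the paper's setting: the pseudo-observations are obtained from ranks (u = rank/(n + 1)) rather than from known or kernel-estimated marginals, and the one-dimensional maximization uses a golden-section search on a fixed interval [-30, 30], which presumes a unimodal log-likelihood. The Frank copula is used only as an example family, and the data are synthetic.

```python
import math
import random


def frank_log_density(u, v, theta):
    """log c(u, v; theta) for the Frank copula; theta -> 0 is independence."""
    if abs(theta) < 1e-9:
        return 0.0
    a = -math.expm1(-theta)                       # 1 - exp(-theta)
    num = theta * a * math.exp(-theta * (u + v))  # positive for any theta != 0
    den = a - math.expm1(-theta * u) * math.expm1(-theta * v)
    return math.log(num) - 2.0 * math.log(abs(den))


def pseudo_observations(xs):
    """Rank-based pseudo-observations rank / (n + 1) (assumes no ties)."""
    n = len(xs)
    order = sorted(range(n), key=xs.__getitem__)
    u = [0.0] * n
    for rank, idx in enumerate(order, start=1):
        u[idx] = rank / (n + 1)
    return u


def log_likelihood(theta, us, vs):
    """Eq. (2): sum of log copula densities over the pseudo-observations."""
    return sum(frank_log_density(u, v, theta) for u, v in zip(us, vs))


def fit_theta(us, vs, lo=-30.0, hi=30.0, tol=1e-4):
    """Golden-section search for the maximizer of Eq. (2) on [lo, hi]."""
    g = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = log_likelihood(c, us, vs), log_likelihood(d, us, vs)
    while b - a > tol:
        if fc > fd:   # maximizer lies in [a, d]
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = log_likelihood(c, us, vs)
        else:         # maximizer lies in [c, b]
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = log_likelihood(d, us, vs)
    return 0.5 * (a + b)


# Illustration on synthetic, positively dependent data (not the paper's data).
rng = random.Random(0)
z = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(500)]
xs = [a for a, _ in z]
ys = [0.7 * a + math.sqrt(1 - 0.7**2) * b for a, b in z]
theta_hat = fit_theta(pseudo_observations(xs), pseudo_observations(ys))
print(f"theta_hat = {theta_hat:.2f}")
```

Replacing `frank_log_density` with another family's log-density (and adjusting the search interval to that family's parameter range) gives the estimator for the other copulas.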

3 The Probabilistic Model for Classification

The proposed classifier explicitly considers dependencies among variables. The dependence structure for the design of the probabilistic classifier is based on a chain graphical model. Such model, for a d-dimensional continuous random vector \(\varvec{X}\), represents a probabilistic model with the following density:

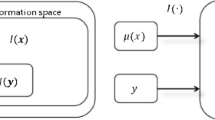

where \(\varvec{\alpha }=(\alpha _{1},\ldots ,\alpha _{d})\) is a permutation of the integers from 1 to d. Figure 1 shows an example of a chain graphical model for a three-dimensional vector. Note that the permutation need not be unique, in the sense that different permutations can yield the same density values in (3); for instance, a permutation and its reversal define the same chain.

In practice the permutation \(\varvec{\alpha }\) is unknown, and the chain graphical model must be learned from data. One way of choosing \(\varvec{\alpha }\) is based on the Kullback-Leibler divergence (\(D_{KL}\)), an information measure between two distributions. It is non-negative for any two distributions and equals zero if and only if the distributions are identical, so it can be interpreted as a measure of dissimilarity between them. The goal is therefore to choose the permutation \(\varvec{\alpha }\) that minimizes the Kullback-Leibler divergence between the true distribution \(f({\mathbf {x}})\) of the data and the distribution associated with a chain model, \(f_{\text {chain}}({\mathbf {x}})\). For instance, for a continuous random vector \({\mathbf {X}}=(X_{1},X_{2},X_{3})\), the Kullback-Leibler divergence between the joint densities f and \(f_{\text {chain}}\) is given by:

\[
D_{KL}(f\,\|\,f_{\text{chain}}) = \int f(\mathbf{x})\log \frac{f(\mathbf{x})}{f_{\text{chain}}(\mathbf{x})}\,d\mathbf{x} = -H(\mathbf{X}) - \int f(\mathbf{x})\log \left[ f_{\alpha _{1}}(x_{\alpha _{1}})\, f(x_{\alpha _{2}}\mid x_{\alpha _{1}})\, f(x_{\alpha _{3}}\mid x_{\alpha _{2}})\right] d\mathbf{x}. \tag{4}
\]

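The first term in Eq. (4), \(-H({\mathbf {X}})\), is the negative entropy of the joint distribution \(f({\mathbf {x}})\) and does not depend on the permutation \(\varvec{\alpha }\). By using copula theory and Eq. (1), each conditional density in the second term can be written as a bivariate copula density times a marginal density, \(f(x_{\alpha _{i}}\mid x_{\alpha _{i-1}}) = c\left(F_{\alpha _{i-1}}(x_{\alpha _{i-1}}),F_{\alpha _{i}}(x_{\alpha _{i}});\theta _{\alpha _{i-1}\alpha _{i}}\right) f_{\alpha _{i}}(x_{\alpha _{i}})\), so the logarithm in the second term splits into marginal and copula terms:

\[
D_{KL}(f\,\|\,f_{\text{chain}}) = -H(\mathbf{X}) + \sum_{i=1}^{3} H(X_{i}) - \mathrm{E}_{f}\left[\log c\left(F_{\alpha _{1}}(X_{\alpha _{1}}),F_{\alpha _{2}}(X_{\alpha _{2}});\theta _{\alpha _{1}\alpha _{2}}\right)\right] - \mathrm{E}_{f}\left[\log c\left(F_{\alpha _{2}}(X_{\alpha _{2}}),F_{\alpha _{3}}(X_{\alpha _{3}});\theta _{\alpha _{2}\alpha _{3}}\right)\right]. \tag{5}
\]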

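The second term of Eq. (5), the sum of the marginal entropies, does not depend on the permutation \(\varvec{\alpha }\) either. Therefore, minimizing Eq. (5) is equivalent to maximizing the sum of the two expectations in the last two terms. Given a sample \(\{{\mathbf {x}}_{j}\}_{j=1}^{n}\) drawn from the joint density f, where \(x_{\alpha _{i}j}\) denotes the \(\alpha _{i}\)-th component of \({\mathbf {x}}_{j}\), this sum can be approximated by a Monte Carlo approach:

\[
\mathrm{E}_{f}\left[\log c\left(F_{\alpha _{1}}(X_{\alpha _{1}}),F_{\alpha _{2}}(X_{\alpha _{2}});\theta _{\alpha _{1}\alpha _{2}}\right)\right] + \mathrm{E}_{f}\left[\log c\left(F_{\alpha _{2}}(X_{\alpha _{2}}),F_{\alpha _{3}}(X_{\alpha _{3}});\theta _{\alpha _{2}\alpha _{3}}\right)\right] \approx \frac{1}{n}\sum_{j=1}^{n}\left[\log c\left(F_{\alpha _{1}}(x_{\alpha _{1}j}),F_{\alpha _{2}}(x_{\alpha _{2}j});\theta _{\alpha _{1}\alpha _{2}}\right) + \log c\left(F_{\alpha _{2}}(x_{\alpha _{2}j}),F_{\alpha _{3}}(x_{\alpha _{3}j});\theta _{\alpha _{2}\alpha _{3}}\right)\right]. \tag{6}
\]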

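By Eq. (6), \(D_{KL}\) is minimized by maximizing the sum of the copula log-likelihoods, each evaluated at its ML estimate. It is worth noting that the log-likelihood thus serves both to estimate the copula parameters and to select the appropriate permutation \(\varvec{\alpha }\). Finally, by means of copula theory, a chain graphical model for a three-dimensional vector has the density

\[
f_{\text{chain}}(\mathbf{x}) = f_{1}(x_{1})\, f_{2}(x_{2})\, f_{3}(x_{3})\; c\left(F_{\alpha _{1}}(x_{\alpha _{1}}),F_{\alpha _{2}}(x_{\alpha _{2}});\theta _{\alpha _{1}\alpha _{2}}\right) c\left(F_{\alpha _{2}}(x_{\alpha _{2}}),F_{\alpha _{3}}(x_{\alpha _{3}});\theta _{\alpha _{2}\alpha _{3}}\right). \tag{7}
\]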
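Because the marginal terms do not depend on α, choosing the chain reduces to comparing sums of pairwise copula log-likelihoods over consecutive pairs. The sketch below enumerates the permutations for small d and skips reversed chains, which give the same density. The pairwise scores are hypothetical numbers standing in for maximized log-likelihoods such as those of Eq. (2), and brute-force enumeration is an assumption that is only practical for small d.

```python
from itertools import permutations


def chain_score(perm, pair_loglik):
    """Sum of pairwise copula log-likelihoods along consecutive pairs of the chain."""
    return sum(pair_loglik[frozenset(pair)] for pair in zip(perm, perm[1:]))


def best_chain(d, pair_loglik):
    """Brute-force search over permutations of range(d); practical only for small d."""
    best_perm, best_score = None, float("-inf")
    for perm in permutations(range(d)):
        if perm[0] > perm[-1]:
            continue  # a chain and its reversal define the same density
        score = chain_score(perm, pair_loglik)
        if score > best_score:
            best_perm, best_score = perm, score
    return best_perm, best_score


# Hypothetical maximized log-likelihoods for each pair of three color features.
PAIR_LOGLIK = {
    frozenset({0, 1}): 120.0,
    frozenset({1, 2}): 95.0,
    frozenset({0, 2}): 60.0,
}
print(best_chain(3, PAIR_LOGLIK))
```

Since the scores are sums rather than the averages of Eq. (6), they differ from it only by the factor n, which does not change the selected permutation.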

3.1 The Probabilistic Classifier

Here, we combine bivariate copula functions and a chain graphical model to design a probabilistic classifier.

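Bayes’ theorem states that

\[
P(K=k\mid \mathbf{X}=\mathbf{x}) = \frac{P(\mathbf{X}=\mathbf{x}\mid K=k)\,P(K=k)}{P(\mathbf{X}=\mathbf{x})}, \tag{8}
\]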

where \(P(K=k|{\mathbf {X}}={\mathbf {x}})\) is the posterior probability, \(P({\mathbf {X}}={\mathbf {x}}|K=k)\) is the likelihood function, \(P(K=k)\) is the prior probability and \(P({\mathbf {X}}={\mathbf {x}})\) is the data probability.

Equation (8) has been used as a tool in supervised classification. A probabilistic classifier can be designed by comparing the posterior probabilities that an object belongs to each class k given its features \({\mathbf {x}}\); the object is then assigned to the class with the highest posterior probability. Since the data probability \(P({\mathbf {X}}={\mathbf {x}})\) is the same for all classes, it does not need to be evaluated when comparing posterior probabilities. Furthermore, the prior probability P(K) can be replaced by a uniform distribution if no informative prior is available.
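The decision rule above can be sketched in log space: the class with the largest log-likelihood plus log-prior wins, and the normalizing constant, which plays the role of P(X = x), is only needed when calibrated posterior probabilities are wanted. The class names and log-likelihood values below are hypothetical.

```python
import math


def map_class(log_likelihoods, log_priors=None):
    """Class with the largest unnormalized log-posterior.

    With log_priors=None a uniform prior is assumed, so it drops out of the comparison.
    """
    def score(k):
        return log_likelihoods[k] + (log_priors[k] if log_priors else 0.0)
    return max(log_likelihoods, key=score)


def posteriors(log_likelihoods, log_priors=None):
    """Normalized posteriors via log-sum-exp; the normalizer is proportional to P(X = x)."""
    s = {k: v + (log_priors[k] if log_priors else 0.0) for k, v in log_likelihoods.items()}
    m = max(s.values())
    log_norm = m + math.log(sum(math.exp(v - m) for v in s.values()))
    return {k: math.exp(v - log_norm) for k, v in s.items()}


# Hypothetical class-conditional log-likelihoods for one pixel.
LOG_LIK = {"foreground": -7.2, "background": -9.1}
print(map_class(LOG_LIK), posteriors(LOG_LIK))
```

The arg max of the normalized posteriors always coincides with `map_class`, which is why the evidence can be skipped during classification.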

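The joint density in Eq. (7) can be used to model the likelihood function in Eq. (8). In this case, with the marginals and the copula parameters \(\theta ^{(k)}\) estimated separately for each class k, and omitting the class-independent factor \(P({\mathbf {X}}={\mathbf {x}})\), Bayes’ theorem can be written as:

\[
P(K=k\mid \mathbf{X}=\mathbf{x}) \propto P(K=k)\prod_{i=1}^{3} f_{i}(x_{i}\mid k)\; c\left(F_{\alpha _{1}}(x_{\alpha _{1}}\mid k),F_{\alpha _{2}}(x_{\alpha _{2}}\mid k);\theta ^{(k)}_{\alpha _{1}\alpha _{2}}\right) c\left(F_{\alpha _{2}}(x_{\alpha _{2}}\mid k),F_{\alpha _{3}}(x_{\alpha _{3}}\mid k);\theta ^{(k)}_{\alpha _{2}\alpha _{3}}\right), \tag{9}
\]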

where \(F_{i}\) are the marginal distribution functions and \(f_{i}\) the marginal densities of each feature, both estimated within class k. The function c is a bivariate copula density taken from Table 1. As can be seen in Eq. (9), each class determines its own likelihood function.
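Putting Eqs. (2), (7) and (9) together, a class-conditional model can be sketched as below. Several choices are assumptions of this sketch rather than details taken from the paper: Gaussian-kernel marginals with Silverman's rule-of-thumb bandwidth, a Frank copula on every consecutive pair, a fixed chain α = (0, 1, 2) in 0-based indices, a grid search for θ over [-20, 20], and synthetic three-feature training data. `KDEMarginal`, `ChainCopulaModel` and `classify` are hypothetical names.

```python
import math
import random
from statistics import NormalDist, stdev

STD = NormalDist()


def frank_log_density(u, v, theta):
    """log c(u, v; theta) for the Frank copula (theta = 0 is independence)."""
    if abs(theta) < 1e-9:
        return 0.0
    a = -math.expm1(-theta)
    num = theta * a * math.exp(-theta * (u + v))
    den = a - math.expm1(-theta * u) * math.expm1(-theta * v)
    return math.log(num) - 2.0 * math.log(abs(den))


class KDEMarginal:
    """Gaussian-kernel marginal density and distribution function (Silverman bandwidth)."""

    def __init__(self, xs):
        self.xs = list(xs)
        self.h = 1.06 * stdev(self.xs) * len(self.xs) ** -0.2

    def pdf(self, x):
        s = sum(STD.pdf((x - xi) / self.h) for xi in self.xs)
        return max(s / (len(self.xs) * self.h), 1e-300)  # floor avoids log(0)

    def cdf(self, x):
        s = sum(STD.cdf((x - xi) / self.h) for xi in self.xs) / len(self.xs)
        return min(max(s, 1e-10), 1.0 - 1e-10)  # keep pseudo-observations inside (0, 1)


class ChainCopulaModel:
    """Class-conditional density of Eq. (9) with Frank copulas on a fixed chain alpha."""

    THETA_GRID = [t / 2 for t in range(-40, 41)]  # hypothetical grid, theta in [-20, 20]

    def __init__(self, samples, alpha):
        self.alpha = alpha
        self.margins = [KDEMarginal(col) for col in zip(*samples)]
        pseudo = [[m.cdf(x) for m, x in zip(self.margins, s)] for s in samples]
        self.thetas = []
        for i, j in zip(alpha, alpha[1:]):
            # Grid-search ML estimate of theta for the consecutive pair (i, j), as in Eq. (2).
            best = max(self.THETA_GRID,
                       key=lambda t: sum(frank_log_density(p[i], p[j], t) for p in pseudo))
            self.thetas.append(best)

    def log_density(self, x):
        u = [m.cdf(xi) for m, xi in zip(self.margins, x)]
        out = sum(math.log(m.pdf(xi)) for m, xi in zip(self.margins, x))
        for (i, j), t in zip(zip(self.alpha, self.alpha[1:]), self.thetas):
            out += frank_log_density(u[i], u[j], t)
        return out


def classify(x, models, log_priors=None):
    """Class with the largest log prior + log likelihood (uniform prior by default)."""
    scores = {k: m.log_density(x) + (log_priors[k] if log_priors else 0.0)
              for k, m in models.items()}
    return max(scores, key=scores.get)


# Synthetic illustration: three strongly dependent "color" features per class.
rng = random.Random(1)


def synthetic_class(center, n):
    rows = []
    for _ in range(n):
        shared = rng.gauss(0, 15)  # common component induces dependence between features
        rows.append(tuple(center + shared + rng.gauss(0, 5) for _ in range(3)))
    return rows


models = {
    "foreground": ChainCopulaModel(synthetic_class(200.0, 150), (0, 1, 2)),
    "background": ChainCopulaModel(synthetic_class(60.0, 150), (0, 1, 2)),
}
print(classify((195.0, 198.0, 201.0), models), classify((55.0, 62.0, 58.0), models))
```

In a full implementation the chain α would be selected per class by comparing pairwise log-likelihoods, and the copula family could differ per pair; both are fixed here to keep the sketch short.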

4 Experiments

We use Eq. (9) and the copula functions from Table 1 to classify the pixels of 50 test images; hence, we test seven probabilistic classifiers, one for each copula. The image database was used in [2] and is available online [8]. It provides information about two classes: the foreground and the background. The training data and the test data are contained in the labelling-lasso files [8], whereas the correct classification is contained in the segmentation files. Figure 2 shows one image from the database together with its annotations and two classification results. Although the database was designed for segmentation, the aim of this work is to model dependencies in supervised classification. Only color features are considered for classifying pixels.

Fig. 2. (a) The color image. (b) The labelling-lasso image with the training data for the background (dark gray) and the foreground (white), and the test data (gray). (c) The correct classification with foreground (white) and background (black). (d) Classification under independence. (e) Classification with the Frank copula.

Three evaluation measures are used in this work: accuracy, sensitivity and specificity. These measures are described in Fig. 3. We define the positive class as the foreground and the negative class as the background, so sensitivity and specificity give the percentage of correctly classified pixels in the foreground and background classes, respectively.
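Assuming the standard definitions with the foreground as the positive class, the three measures can be computed from the confusion counts as sketched below. The label strings are hypothetical.

```python
def evaluation_measures(y_true, y_pred, positive="foreground"):
    """Accuracy, sensitivity and specificity with `positive` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),  # fraction of foreground pixels classified correctly
        "specificity": tn / (tn + fp),  # fraction of background pixels classified correctly
    }


# Toy labels for five pixels (illustrative only).
Y_TRUE = ["foreground"] * 3 + ["background"] * 2
Y_PRED = ["foreground", "foreground", "background", "background", "foreground"]
print(evaluation_measures(Y_TRUE, Y_PRED))
```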

4.1 Numerical Results

Table 2 summarizes the values of the evaluation measures reached by each classifier, according to the copula function used to model the dependencies.

To properly compare the performance of the probabilistic classifiers, we conducted an ANOVA test comparing the mean accuracy of the classifiers. The test reports a statistically significant difference between the Clayton, Frank, Gaussian and Gumbel copula functions and the Independent copula (p-value < 0.05). The largest difference in accuracy with respect to the Independent copula is obtained with the Frank copula.
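The ANOVA design is not detailed above; as a minimal sketch, assuming a plain one-way ANOVA over per-image accuracies grouped by classifier, the F statistic can be computed as below. The p-value requires the survival function of the F distribution, for example `scipy.stats.f.sf(F, df_between, df_within)`, and `scipy.stats.f_oneway` performs the whole test. The toy groups are illustrative, not the values of Table 2.

```python
def one_way_anova_f(groups):
    """One-way ANOVA F statistic and its degrees of freedom."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy accuracies for two hypothetical classifiers.
TOY_GROUPS = [[0.80, 0.82, 0.84], [0.86, 0.88, 0.90]]
print(one_way_anova_f(TOY_GROUPS))
```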

4.2 Discussion

According to Table 2, the classifier based on the Frank copula achieves the best accuracy. For sensitivity, the Frank and Gaussian copulas provide the best results. The best mean specificity is reached by the classifier based on the Clayton copula.

As can be seen, the average performance of a classifier is improved by the incorporation of copula functions; the lowest average performance corresponds to the classifier that uses the independence assumption. Figure 4 shows how the accuracy increases when dependencies are taken into account by the probabilistic classifier. The line in Fig. 4(a) represents the identity function, so points above this line correspond to a higher accuracy than that achieved by the classifier based on the independent copula. For further insight, Fig. 4(b) shows the difference in accuracy between each copula-based classifier and the naive classifier (independent copula).

Table 2 also reports the standard deviation of each evaluation measure. For accuracy, the standard deviations indicate that the classifier based on the Frank copula is more consistent than the other classifiers.

Figure 2 shows the results for one of the 50 test images. Panel (d) shows the image classified under the independence assumption, and (e) shows the same image classified with the Frank copula. The improvement that the Frank copula provides to the classifier is visually perceptible. For this image, the color data for each class are shown in Fig. 5; the dependence structure does not correspond to that of a bivariate Gaussian distribution. According to the numerical results, the Frank copula is the best model for this kind of dependence.

5 Conclusions

In this paper we have compared the performance of several copula-based probabilistic classifiers. The results show that the dependence among features provides important information for supervised classification. For the images used in this work, the Frank copula performs very well in most cases. One advantage of using a chain graphical model is that it detects the most important dependencies among variables, which can be valuable in applications where associations among variables give additional knowledge about the problem. Although accuracy is increased by the classifiers based on copula functions, the choice of copula function has relevant consequences for the performance of the classifier. For instance, Fig. 4 shows cases in which a copula-based classifier does not improve on the performance achieved by the classifier based on the independent copula. This suggests that more experiments are needed to select an adequate copula function for a given problem. Moreover, as future work, the classifier based on copula functions should be tested on other datasets and compared with other classifiers in order to gain better insight into its benefits and limitations.

References

Bishop, C.: Pattern Recognition and Machine Learning. Information Science and Statistics. Springer, New York (2007)

Blake, A., Rother, C., Brown, M., Perez, P., Torr, P.: Interactive Image Segmentation Using an Adaptive GMMRF Model. In: Pajdla, T., Matas, J. (eds.) ECCV 2004. LNCS, vol. 3021, pp. 428–441. Springer, Heidelberg (2004). doi:10.1007/978-3-540-24670-1_33

Brunel, N., Pieczynski, W., Derrode, S.: Copulas in vectorial hidden Markov chains for multicomponent image segmentation. In: Proceedings of the 2005 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2005), pp. 717–720 (2005). doi:10.1109/ICASSP.2005.1415505

Carrera, D., Santana, R., Lozano, J.: Vine copula classifiers for the mind reading problem. Prog. Artif. Intell. (2016). doi:10.1007/s13748-016-0095-z

Mercier, G., Bouchemakh, L., Smara, Y.: The use of multidimensional Copulas to describe amplitude distribution of polarimetric SAR Data. In: IGARSS 2007 (2007). doi:10.1109/IGARSS.2007.4423284

Ouhbi, N., Voivret, C., Perrin, G., Roux, J.: Real grain shape analysis: characterization and generation of representative virtual grains. application to railway ballast. In: Oñate, E., Bischoff, M., Owen, D., Wriggers, P., Zohdi, T. (eds.) Proceedings of the IV International Conference on Particle-based Methods Fundamentals and Applications (2015)

Resti, Y.: Dependence in classification of aluminium waste. J. Phys. Conf. Ser. 622(012052), 1–6 (2015). doi:10.1088/1742-6596/622/1/012052

Rother, C., Kolmogorov, V., Blake, A., Brown, M.: Image and video editing. http://research.microsoft.com/en-us/um/cambridge/projects/visionimagevideoediting/segmentation/grabcut.htm

Sakji-Nsibi, S., Benazza-Benyahia, A.: Multivariate indexing of multichannel images based on the copula theory. In: IPTA08 (2008)

Sen, S., Diawara, N., Iftekharuddin, K.: Statistical pattern recognition using Gaussian Copula. J. Stat. Theor. Pract. 9(4), 768–777 (2015). doi:10.1080/15598608.2015.1008607

Sklar, A.: Fonctions de répartition à n dimensions et leurs marges. Publications de l’Institut de Statistique de l’Université de Paris 8, 229–231 (1959)

Slechan, L., Górecki, J.: On the accuracy of Copula-based Bayesian classifiers: an experimental comparison with neural networks. In: Núñez, M., Nguyen, N.T., Camacho, D., Trawiński, B. (eds.) ICCCI 2015. LNCS, vol. 9329, pp. 485–493. Springer, Cham (2015). doi:10.1007/978-3-319-24069-5_46

Stitou, Y., Lasmar, N., Berthoumieu, Y.: Copulas based multivariate gamma modeling for texture classification. In: Proceedings of the 2009 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2009), pp. 1045–1048. IEEE Computer Society, Washington, DC (2009). doi:10.1109/ICASSP.2009.4959766

Voisin, A., Krylov, V., Moser, G., Serpico, S., Zerubia, J.: Classification of very high resolution SAR images of urban areas using copulas and texture in a hierarchical Markov random field model. IEEE Geosci. Remote Sens. Lett. 10(1), 96–100 (2013). doi:10.1109/LGRS.2012.2193869

Ščavnický, M.: A study of applying copulas in data mining. Master’s thesis, Charles University in Prague, Prague (2013)

Weiß, G.: Copula parameter estimation by maximum-likelihood and minimum-distance estimators: a simulation study. Comput. Stat. 26(1), 31–54 (2011). doi:10.1007/s00180-010-0203-7

Acknowledgments

The authors acknowledge the financial support from the National Council of Science and Technology of México (CONACyT, grant number 258033) and from the Universidad Autónoma de Aguascalientes (project number PIM17-3). The student Ángela Paulina also acknowledges CONACyT for the financial support given through scholarship number 628293.

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Salinas-Gutiérrez, R., Hernández-Quintero, A., Dalmau-Cedeño, O., Pérez-Díaz, Á.P. (2017). Modeling Dependencies in Supervised Classification. In: Carrasco-Ochoa, J., Martínez-Trinidad, J., Olvera-López, J. (eds) Pattern Recognition. MCPR 2017. Lecture Notes in Computer Science(), vol 10267. Springer, Cham. https://doi.org/10.1007/978-3-319-59226-8_12

DOI: https://doi.org/10.1007/978-3-319-59226-8_12

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-59225-1

Online ISBN: 978-3-319-59226-8

eBook Packages: Computer Science (R0)