Abstract

The classification process in the domain of brain computer interfaces (BCI) is usually carried out with simple linear classifiers, like LDA or SVM. Non-linear classifiers rarely improve the classification accuracy enough to justify their use in BCI. However, there is another type of classifier worth considering when looking for a way to increase the accuracy: boosting classifiers. These classification algorithms are not common in BCI practice, but they have proved to be very efficient in other applications.


1 Introduction

A brain-computer interface (BCI) is a control and communication system in which control commands and messages are not transmitted via the standard output pathways of the central nervous system, but are read directly from the user's brain. Many different devices for recording brain activity are available nowadays; however, due to their relatively low cost, high mobility, and non-invasiveness, EEG devices are usually used for BCIs outside the laboratory. There are three main types of EEG-BCIs: SSVEP-BCI, P300-BCI, and MI-BCI. Each of them uses different brain potentials to control the interface.

SSVEP-BCI is controlled by steady-state visually evoked potentials (SSVEPs). These potentials are recorded over the occipital cortex when a user is exposed to a visual stimulus flickering at a steady frequency. Since the fundamental frequency of the stimulus (and also its harmonics) can be observed in the EEG recording, different control commands are encoded with stimuli of different frequencies, each delivered by a separate stimulus source (usually an LED).

The control signals in P300-BCI are positive potentials that appear over the parietal cortex when a user perceives a rare and significant stimulus. The P300 potential can be detected in the brain activity approximately 300 ms after the stimulus is presented. Control in P300-BCI is based on selecting objects from a set of objects displayed on the screen [3]. The user's task is to focus attention on one of the objects. The objects are highlighted in random order, and each time the object chosen by the user is highlighted, a P300 potential appears over the parietal cortex.

While the brain potentials used in SSVEP-BCI and P300-BCI are evoked potentials (both need external stimulation to appear in the user's brain), MI-BCI is based on spontaneous potentials generated by the user. The potentials used to control MI-BCI, called motor rhythms, can be detected over the motor cortex when the user performs real or imagined movements.

Of these three types of BCI, MI-BCI is the most natural and hence the most suitable for practical applications. Its main benefit is that it does not require any external stimulation: the mental states needed to perform the actions are evoked solely at the user's will. This means that the user can use the interface at any time while performing other activities (e.g. reading a text). Hence, the interface can always stay in a stand-by state, waiting for the user's command. In theory, P300-BCI or SSVEP-BCI could also be constantly on, but in practice flickering lights or highlighted objects constantly present in the user's field of view would be extremely tiring. However, there is no free lunch: MI-BCI is the most convenient for the user, but it provides the smallest number of control states. Usually only 2–4 direct commands can be obtained with this type of interface (corresponding to motor imagery of the left hand, right hand, feet, and tongue). Moreover, the potentials related to motor imagery are difficult to extract from scalp EEG, especially for a beginner user.

In fact, MI-BCI can be used successfully in practice, but the user first has to learn how to perform motor imagery at all, and then how to perform it effectively. As Pfurtscheller et al. and Guger et al. report in [7, 8, 15], classification accuracies of 80–97% can be obtained after 6–10 twenty-minute sessions. With shorter training, the results are less impressive. In [6], Guger et al. report that after 20–30 min of training, the brain patterns related to left- and right-hand motor imagery could be distinguished with an accuracy greater than 80% in only 20% of 99 subjects. For 70% of the subjects the classification accuracy was about 60–80%, and for the remaining subjects it was below 60%.

In MI-BCI and other biometric systems, classification is usually performed with simple individual classifiers, e.g. linear discriminant analysis (LDA) [6], Fisher linear discriminant analysis (FDA) [13], the k-NN classifier [16, 17], the distinction sensitive learning vector quantization (DSLVQ) classifier [15], or the minimum Mahalanobis distance (MDA) classifier [14]. Boosting algorithms are also an effective method of producing a very accurate classification rule [9]; however, they are rarely used in the BCI domain [12]. In general, a boosting algorithm combines so-called weak classifiers. Each weak classifier is trained on examples sampled from the original learning set, and the sampling procedure is based on the weight of each example. The weights of the examples are changed in each iteration. The final decision of the boosting algorithm is made by the ensemble of classifiers obtained in the successive iterations of the algorithm.
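The weight-driven resampling scheme described above can be illustrated with a minimal discrete AdaBoost sketch in Python/NumPy. It assumes decision stumps as weak classifiers, labels in {-1, +1}, and boosting by resampling. The function names and the number of rounds are illustrative choices and do not reflect the configuration used in this paper.

```python
import numpy as np


def fit_stump(X, y):
    """Pick the (feature, threshold, polarity) stump with the lowest error on (X, y)."""
    best = (np.inf, 0, 0.0, 1)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j]):
            for polarity in (1, -1):
                pred = np.where(polarity * (X[:, j] - t) >= 0, 1, -1)
                err = np.mean(pred != y)
                if err < best[0]:
                    best = (err, j, t, polarity)
    _, j, t, polarity = best
    return j, t, polarity


def predict_stump(stump, X):
    j, t, polarity = stump
    return np.where(polarity * (X[:, j] - t) >= 0, 1, -1)


def adaboost_resampling(X, y, n_rounds=50, seed=0):
    """Discrete AdaBoost in which each weak learner is trained on a sample
    drawn (with replacement) according to the current example weights."""
    rng = np.random.default_rng(seed)
    n = len(y)
    w = np.full(n, 1.0 / n)                              # uniform initial weights
    ensemble = []
    for _ in range(n_rounds):
        idx = rng.choice(n, size=n, replace=True, p=w)   # weighted resampling
        stump = fit_stump(X[idx], y[idx])
        miss = predict_stump(stump, X) != y
        err = np.sum(w[miss])                            # weighted error on the full set
        if err >= 0.5:                                   # no better than chance: skip
            continue
        err = max(err, 1e-10)                            # guard against a perfect stump
        alpha = 0.5 * np.log((1 - err) / err)
        w = w * np.exp(np.where(miss, alpha, -alpha))    # up-weight misclassified examples
        w /= w.sum()
        ensemble.append((alpha, stump))
    return ensemble


def predict(ensemble, X):
    """Final decision: sign of the alpha-weighted vote of the weak learners."""
    score = np.zeros(X.shape[0])
    for alpha, stump in ensemble:
        score += alpha * predict_stump(stump, X)
    return np.where(score >= 0, 1, -1)
```

The resampling step, `rng.choice(..., p=w)`, is how the sketch implements the weight-based sampling mentioned in the text. Reweighting the loss directly is a common alternative.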

The aim of this paper is to compare the performance of four boosting classifiers (AdaBoost, RealAdaBoost, GentleAdaBoost, and our modification of AdaBoost formulated in Sect. 2) with three classic classification algorithms: LDA, SVM, and k-NN. We would like to find out whether the boosting scheme, in which weak classifiers are combined into a strong one, provides results at least comparable to those of the methods that have proved very successful in the BCI field. We perform our analysis on EEG data acquired during an MI-BCI session.

The rest of this paper is organized as follows. In Sect. 2 our modification of the AdaBoost algorithm is presented. The experimental studies are described in Sect. 3, and their results are presented and discussed in Sect. 4. Finally, conclusions are given in Sect. 5.

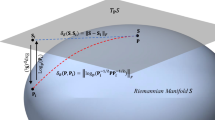

2 AdaBoost Algorithm

In recent years, many modifications of boosting-based algorithms have appeared. For example, the AdaBoost.OC algorithm [20] combines AdaBoost with the error-correcting output codes (ECOC) model, the LogitBoost algorithm [5] uses a logistic regression model, and the FloatBoost algorithm [11] removes, in subsequent iterations, those classifiers that do not satisfy the assumed quality condition. In [2], methods of modifying the weights of the examples are described.

It is worth mentioning that [1] proposed a common name for boosting-type algorithms, ARCing (an acronym for Adaptive Resampling and Combining), and also presented a new algorithm named ARC-x4. In this work we use three well-known boosting algorithms (AdaBoost, RealAdaBoost, and GentleAdaBoost) together with our modification of the classic AdaBoost algorithm.

One of the main factors affecting the behavior of the AdaBoost algorithm is the selection of the weights assigned to individual elements of the learning set. We therefore propose a modification of AdaBoost that represents imprecision in the weight values obtained in subsequent iterations of the algorithm. This imprecision is defined by two parameters, k and \(\lambda \). The parameter k shifts the error \(e_b\) up and down, and \(\lambda \) defines a linear combination of the upper and lower weight values obtained after this modification by k. The algorithm steps are presented in Table 1.

If the values \(\overline{e_b}=e_b+k\) and \(\underline{e_b}=e_b-k\) obtained in step 4c fall outside the interval [0, 1], they should be modified (step 4d). In practice this correction is needed extremely rarely, given the possible values of the error \(e_b\) and the assumed values of the parameter k. In the earlier work [2], the changes in the weight values depended on the iteration of the algorithm. In this work, they do not depend on the iteration.
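Table 1 is not reproduced in this section, so the exact B1M steps cannot be recovered here. The Python sketch below is only one hypothetical reading of the description above. It makes three assumptions. First, each of the bounded errors \(e_b+k\) and \(e_b-k\) yields a candidate weight vector through the standard AdaBoost.M1 update. Second, \(\lambda\) mixes the two candidates linearly, with \(\lambda = 0\) taken to mean the weights obtained from \(e_b-k\). Third, a small epsilon keeps the logarithm finite. The function name and these conventions are illustrative and are not the authors' implementation.

```python
import numpy as np


def imprecise_weight_update(w, miss, k=0.025, lam=0.0, eps=1e-10):
    """Hypothetical B1M-style update of example weights (conventions assumed).

    w    : current example weights (summing to 1)
    miss : boolean mask of examples misclassified by the current weak learner
    k    : imprecision added to and subtracted from the weighted error e_b
    lam  : linear-combination coefficient between the two candidate weight vectors
    """
    e = np.sum(w[miss])                           # weighted error e_b
    bounds = np.clip([e + k, e - k], 0.0, 1.0)    # keep e_b + k and e_b - k in [0, 1]
    bounds = np.clip(bounds, eps, 1 - eps)        # extra guard so the log stays finite
    candidates = []
    for e_bound in bounds:
        alpha = np.log((1 - e_bound) / e_bound)   # AdaBoost.M1 vote weight log(1/beta)
        w_new = w * np.exp(alpha * miss)          # up-weight misclassified examples
        candidates.append(w_new / w_new.sum())
    w_from_upper_error, w_from_lower_error = candidates
    # Assumed convention: lam = 0 keeps only the weights derived from e_b - k.
    return lam * w_from_upper_error + (1 - lam) * w_from_lower_error
```

With k = 0, both candidates coincide and the update reduces to the ordinary AdaBoost.M1 reweighting.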

3 Experimental Studies

The experiment was performed with a male subject, aged 32. The subject was right-handed, had normal vision and did not report any mental disorders. The experiment was conducted according to the Helsinki declaration on proper treatment of human subjects. Written consent was obtained from the subject.

The subject was seated in a comfortable chair and EEG electrodes were applied to his head. To limit the number of artifacts, the participant was instructed to stay relaxed and not to move. The start of the experiment was announced by a short sound signal. A total of 200 trials were recorded during the experiment. Each trial started with a picture of an arrow pointing to the left or right, displayed on a computer screen located about 70 cm from the subject's eyes. The subject's task was to imagine rotating the wrist of the hand indicated by the arrow (left arrow: left hand; right arrow: right hand). The arrow directions for successive trials were chosen randomly. There were no breaks between trials. The experiment was divided into 4 sessions of 50 trials each, with 3-minute breaks between sessions. The trial length was fixed at 10 s.

EEG data were recorded from two monopolar channels at a sampling frequency of 256 Hz. Four passive electrodes were used in the experiments. Two of them were attached to the subject's scalp at the C3 and C4 positions according to the International 10–20 system [10]. The reference and ground electrodes were located at Fpz and the right mastoid, respectively. The impedance of the electrodes was kept below 5 k\(\Omega \). The EEG signal was acquired with a Discovery 20 amplifier (BrainMaster) and recorded with the OpenViBE software [19].

During data recording, some restrictions were introduced into the experimental protocol to limit the number of artifacts in the recording. These restrictions, however, could not eliminate the artifacts completely. Therefore, to enhance the signal-to-noise ratio (SNR), the recorded EEG signal was preprocessed. Since most artifacts lie outside the frequency band in which motor potentials are sought (the alpha and beta bands), simple band-pass filtering in the 6–30 Hz range was used in this study. According to Fatourechi et al. [4], low-pass filtering should remove most EMG artifacts and high-pass filtering should remove EOG artifacts. Moreover, low-pass filtering also eliminates power-line interference at 50 Hz (the mains frequency in Poland). A 4th-order Butterworth band-pass filter was used to filter the EEG data.
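The preprocessing filter can be sketched with SciPy as follows. The sketch assumes zero-phase filtering via `scipy.signal.sosfiltfilt`, because the text does not say whether causal or zero-phase filtering was used. Note also that `scipy.signal.butter(4, ..., btype="bandpass")` yields a band-pass filter of overall order 8 (4 biquad sections), and the text does not state which convention its "4th order" refers to. The function name `bandpass_6_30` and the test signals are hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 256.0  # sampling frequency used in the experiment (Hz)


def bandpass_6_30(eeg, fs=FS, order=4):
    """Butterworth band-pass (6-30 Hz) applied along the last axis.

    Zero-phase filtering is an assumption; a causal sosfilt would also fit the text.
    """
    sos = butter(order, [6.0, 30.0], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)


# Usage: a 12 Hz rhythm contaminated with 50 Hz power-line interference (one 10-s trial).
t = np.arange(10 * 256) / FS
alpha_wave = np.sin(2 * np.pi * 12 * t)   # inside the pass band
mains = np.sin(2 * np.pi * 50 * t)        # removed by the low-pass edge
clean = bandpass_6_30(alpha_wave + mains)
```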

The motor imagery EEG data were classified with band-power features. Twelve frequency bands were used to extract the features: the alpha band (8–13 Hz), the beta band (13–30 Hz), five sub-bands of the alpha band (8–9 Hz; 9–10 Hz; 10–11 Hz; 11–12 Hz; 12–13 Hz), and five sub-bands of the beta band (13–17 Hz; 17–20 Hz; 20–23 Hz; 23–26 Hz; 26–30 Hz). The features were calculated separately for each second of the recording, which gave 240 features (12 bands × 2 channels × 10 one-second windows). Because of this high number of features, feature selection was performed with the LASSO algorithm [10]. Taking into account the small number of samples (200 samples), the number of classes (2 classes), and the possibility of using non-linear classifiers, we decided that no more than 8 features should be used in the classification process [18].
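The feature computation can be sketched as follows. The sketch assumes non-overlapping 1-s windows and a plain FFT periodogram as the power estimate, since the text specifies neither. With 256 samples per window the frequency resolution is 1 Hz, so each 1-Hz alpha sub-band maps to a single FFT bin. Bins are assigned to a band when lo <= f < hi, which is also an assumption. `BANDS` and `band_power_features` are illustrative names.

```python
import numpy as np

FS = 256  # samples per second

# The twelve bands listed in the text (Hz): alpha, beta, five alpha and five beta sub-bands.
BANDS = [(8, 13), (13, 30),
         (8, 9), (9, 10), (10, 11), (11, 12), (12, 13),
         (13, 17), (17, 20), (20, 23), (23, 26), (26, 30)]


def band_power_features(trial, fs=FS, bands=BANDS):
    """Band-power features for one trial of shape (n_channels, n_samples).

    Returns an array of shape (n_channels, n_seconds, n_bands); power is the
    mean periodogram value over FFT bins with lo <= f < hi (assumed definition).
    """
    n_ch, n_samples = trial.shape
    n_sec = n_samples // fs
    windows = trial[:, : n_sec * fs].reshape(n_ch, n_sec, fs)   # 1-s windows
    freqs = np.fft.rfftfreq(fs, d=1.0 / fs)                     # 0, 1, ..., 128 Hz
    psd = np.abs(np.fft.rfft(windows, axis=-1)) ** 2 / fs
    feats = np.empty((n_ch, n_sec, len(bands)))
    for b, (lo, hi) in enumerate(bands):
        mask = (freqs >= lo) & (freqs < hi)
        feats[:, :, b] = psd[:, :, mask].mean(axis=-1)
    return feats


# Usage: a two-channel (C3, C4) 10-s trial gives 2 x 10 x 12 = 240 features.
t = np.arange(10 * FS) / FS
trial = np.vstack([np.sin(2 * np.pi * 10 * t), np.zeros_like(t)])
features = band_power_features(trial)
flat = features.ravel()   # feature vector passed on to feature selection
```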

In the classification process, four different classifier types were tested: boosting classifiers, LDA, SVM, and k-NN. First, we compared the four boosting algorithms introduced in Sect. 2. Then, we moved to SVM classifiers, comparing SVMs with different kernel functions: linear, quadratic, polynomial, and RBF. Finally, we tested k-NN classifiers with different numbers of neighbors k (1, 3, 5, and 7). The performance of each classifier, regardless of its type, was evaluated with a 10-fold cross-validation scheme.
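The evaluation protocol can be sketched in plain NumPy. The example below runs shuffled 10-fold cross-validation for a k-NN classifier with k in {1, 3, 5, 7}. It is a minimal sketch that assumes Euclidean distance and non-stratified folds, and it uses synthetic Gaussian data with 8 features in place of the selected EEG features. The helper names are hypothetical. LDA or SVM models would plug into the same loop in place of `knn_predict`.

```python
import numpy as np


def knn_predict(X_train, y_train, X_test, k):
    """Majority vote among the k nearest training points (Euclidean distance)."""
    d = np.linalg.norm(X_test[:, None, :] - X_train[None, :, :], axis=-1)
    nearest = np.argsort(d, axis=1)[:, :k]
    votes = y_train[nearest]                        # labels in {0, 1}
    return (votes.mean(axis=1) > 0.5).astype(int)   # odd k avoids ties


def cross_val_accuracy(X, y, k, n_folds=10, seed=0):
    """Mean and per-fold accuracy of k-NN over a shuffled (non-stratified) k-fold split."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    accs = []
    for test_idx in folds:
        train_idx = np.setdiff1d(np.arange(len(y)), test_idx)
        pred = knn_predict(X[train_idx], y[train_idx], X[test_idx], k)
        accs.append(np.mean(pred == y[test_idx]))
    return float(np.mean(accs)), accs


# Usage on synthetic data: 200 samples, 2 classes, 8 features (not the study's EEG data).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2, 1, size=(100, 8)), rng.normal(2, 1, size=(100, 8))])
y = np.repeat([0, 1], 100)
for k in (1, 3, 5, 7):
    mean_acc, fold_accs = cross_val_accuracy(X, y, k)
    print(k, round(mean_acc, 3))
```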

4 Results

Figure 1 presents the results of the experiments with the boosting algorithms. Each algorithm was run for 200 iterations; Fig. 1(a) shows the learning results and Fig. 1(b) the testing results. Three versions of boosting algorithms were used in the research (AdaBoost: B1, RealAdaBoost: B2, GentleAdaBoost: B3), as well as the modification of AdaBoost presented in this work (B1M) with \(\lambda =0\) and \(k=0.025\). The classification accuracy presented in Fig. 1(a) clearly indicates that the classifiers were overtrained. This behavior might result from the small learning sets: each of them contained only 191 elements. The testing error, averaged over 10 repetitions, was in the range of 32–37%. The best results were obtained for the proposed modification of the AdaBoost algorithm. The results averaged over iterations 120 to 130 were used in the analysis. They are presented in Fig. 1(c), where the X axis indicates the index of the testing subset.

Figure 2 presents the classification results for the algorithms from the k-NN and SVM groups. The algorithms that gave stable classification across the different testing subsets were selected for the final analysis: SVM with the linear kernel and k-NN with 3 neighbors.

The classification error obtained with the use of the selected algorithms is shown in Fig. 3 and in Table 2.

Three of the selected algorithms (B1M, linear SVM, LDA) had similar average errors. The difference (for both the average and the median) was no higher than 2%. The proposed boosting algorithm was the most stable: the spread of its results over the individual learning subsets was no higher than 23%, while for the other two algorithms it was 38% and 33%, respectively. The 3-NN algorithm had the same spread of results (23%), but its classification accuracy was 5% lower than that of the boosting algorithm.
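The comparison above relies on three summary statistics of the per-subset errors. A minimal Python sketch of how they could be computed is given below. It assumes that "spread" means the range (maximum minus minimum) of the per-subset errors, which the text does not define explicitly. The input values in the usage line are toy numbers, not results from this study.

```python
import numpy as np


def summarize_errors(fold_errors):
    """Mean, median and spread of one classifier's per-subset errors (in %).

    "spread" is taken here as max - min, an assumed interpretation.
    """
    e = np.asarray(fold_errors, dtype=float)
    return {
        "mean": float(e.mean()),
        "median": float(np.median(e)),
        "spread": float(e.max() - e.min()),
    }


# Toy per-subset errors for illustration only (not data from this study).
print(summarize_errors([30.0, 35.0, 40.0, 25.0]))
# {'mean': 32.5, 'median': 32.5, 'spread': 15.0}
```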

5 Conclusion

In this paper we compared the performance of four boosting classifiers (AdaBoost, RealAdaBoost, GentleAdaBoost, and our modification of AdaBoost, B1M) with three classic classification algorithms: LDA, SVM, and k-NN. The comparison, performed on EEG data acquired during an MI-BCI session, showed that our modification of AdaBoost provided the best results among the four boosting algorithms and outperformed almost all other classifiers tested. The two exceptions were LDA and SVM with the linear kernel, whose performance was on the same level as that of B1M.

The analysis presented in this paper was meant as a preliminary study on the application of boosting algorithms in the BCI domain. Because our results were on the same level as those of two classifiers that lead in BCI research, we plan to extend our analysis to more subjects. Moreover, since the results obtained with the boosting algorithm proposed in this paper were the most stable, we believe that it can bring real benefits when used in on-line rather than off-line BCI mode.

References

[1] Breiman, L., et al.: Arcing classifier. Ann. Stat. 26(3), 801–849 (1998)

[2] Burduk, R.: The AdaBoost algorithm with the imprecision determine the weights of the observations. In: Nguyen, N.T., Attachoo, B., Trawiński, B., Somboonviwat, K. (eds.) ACIIDS 2014. LNCS, vol. 8398, pp. 110–116. Springer, Cham (2014). doi:10.1007/978-3-319-05458-2_12

[3] Donnerer, M., Steed, A.: Using a P300 brain-computer interface in an immersive virtual environment. Presence Teleoperators Virtual Environ. 19(1), 12–24 (2010)

[4] Fatourechi, M., Bashashati, A., Ward, R.K., Birch, G.E.: EMG and EOG artifacts in brain computer interface systems: a survey. Clin. Neurophysiol. 118(3), 480–494 (2007)

[5] Friedman, J., Hastie, T., Tibshirani, R., et al.: Additive logistic regression: a statistical view of boosting. Ann. Stat. 28(2), 337–407 (2000)

[6] Guger, C., Edlinger, G., Harkam, W., Niedermayer, I., Pfurtscheller, G.: How many people are able to operate an EEG-based brain-computer interface (BCI)? IEEE Trans. Neural Syst. Rehabil. Eng. 11(2), 145–147 (2003)

[7] Guger, C., Schlogl, A., Neuper, C., Walterspacher, D., Strein, T., Pfurtscheller, G.: Rapid prototyping of an EEG-based brain-computer interface (BCI). IEEE Trans. Neural Syst. Rehabil. Eng. 9(1), 49–58 (2001)

[8] Guger, C., Schlogl, A., Walterspacher, D., Pfurtscheller, G.: Design of an EEG-based brain-computer interface (BCI) from standard components running in real-time under Windows / Entwurf eines EEG-basierten Brain-Computer Interfaces (BCI) mit Standardkomponenten, das unter Windows in Echtzeit arbeitet. Biomedizinische Technik/Biomed. Eng. 44(1–2), 12–16 (1999)

[9] Hayashi, I., Tsuruse, S., Suzuki, J., Kozma, R.T.: A proposal for applying PDI-boosting to brain-computer interfaces. In: 2012 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), pp. 1–6. IEEE (2012)

[10] Jasper, H.H.: The ten twenty electrode system of the international federation. Electroencephalogr. Clin. Neurophysiol. 10, 371–375 (1958)

[11] Li, S.Z., Zhang, Z.: FloatBoost learning and statistical face detection. IEEE Trans. Pattern Anal. Mach. Intell. 26(9), 1112–1123 (2004)

[12] Liu, Y., Zhang, H., Zhao, Q., Zhang, L.: Common spatial-spectral boosting pattern for brain-computer interface. In: Proceedings of the Twenty-First European Conference on Artificial Intelligence, pp. 537–542. IOS Press (2014)

[13] Müller-Putz, G.R., Kaiser, V., Solis-Escalante, T., Pfurtscheller, G.: Fast set-up asynchronous brain-switch based on detection of foot motor imagery in 1-channel EEG. Med. Biol. Eng. Comput. 48(3), 229–233 (2010)

[14] Pfurtscheller, G., Brunner, C., Schlögl, A., Da Silva, F.L.: Mu rhythm (de)synchronization and EEG single-trial classification of different motor imagery tasks. NeuroImage 31(1), 153–159 (2006)

[15] Pfurtscheller, G., Neuper, C., Schlogl, A., Lugger, K.: Separability of EEG signals recorded during right and left motor imagery using adaptive autoregressive parameters. IEEE Trans. Rehabil. Eng. 6(3), 316–325 (1998)

[16] Porwik, P., Doroz, R., Orczyk, T.: The k-NN classifier and self-adaptive Hotelling data reduction technique in handwritten signatures recognition. Pattern Anal. Appl. 18(4), 983–1001 (2015)

[17] Porwik, P., Orczyk, T., Lewandowski, M., Cholewa, M.: Feature projection k-NN classifier model for imbalanced and incomplete medical data. Biocybern. Biomed. Eng. 36(4), 644–656 (2016)

[18] Raudys, S.J., Jain, A.K., et al.: Small sample size effects in statistical pattern recognition: recommendations for practitioners. IEEE Trans. Pattern Anal. Mach. Intell. 13(3), 252–264 (1991)

[19] Renard, Y., Lotte, F., Gibert, G., Congedo, M., Maby, E., Delannoy, V., Bertrand, O., Lécuyer, A.: OpenViBE: an open-source software platform to design, test, and use brain-computer interfaces in real and virtual environments. Presence Teleoperators Virtual Environ. 19(1), 35–53 (2010)

[20] Schapire, R.E.: Using output codes to boost multiclass learning problems. ICML 97, 313–321 (1997)

Acknowledgments

This work was supported in part by the statutory funds of the Department of Systems and Computer Networks, Wroclaw University of Science and Technology.


Copyright information

© 2017 IFIP International Federation for Information Processing

About this paper

Cite this paper

Rejer, I., Burduk, R. (2017). Classifier Selection for Motor Imagery Brain Computer Interface. In: Saeed, K., Homenda, W., Chaki, R. (eds) Computer Information Systems and Industrial Management. CISIM 2017. Lecture Notes in Computer Science, vol 10244. Springer, Cham. https://doi.org/10.1007/978-3-319-59105-6_11


DOI: https://doi.org/10.1007/978-3-319-59105-6_11


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-59104-9

Online ISBN: 978-3-319-59105-6

eBook Packages: Computer Science, Computer Science (R0)