Abstract

We propose a new protocol for two-party computation, secure against malicious adversaries, that is significantly faster than prior work in the single-execution setting (i.e., non-amortized and with no pre-processing). In particular, for computational security parameter \({\kappa }\) and statistical security parameter \({\rho }\), our protocol uses only \({\rho }\) garbled circuits and \(O({\rho }+ {\kappa })\) public-key operations, whereas previous work with the same number of garbled circuits required either \(O({\rho }\cdot n+{\kappa })\) public-key operations (where n is the input/output length) or a second execution of a secure-computation sub-protocol. Our protocol can be based on the decisional Diffie-Hellman assumption in the standard model.

We implement our protocol to evaluate its performance. With \({\rho }=40\), our implementation securely computes an AES evaluation in 65 ms over a local-area network using a single thread without any pre-computation, \(22\times \) faster than the best prior work in the non-amortized setting. The relative performance of our protocol is even better for functions with larger input/output lengths.

X. Wang and J. Katz—Research supported by NSF awards #1111599 and #1563722.

A.J. Malozemoff—Conducted in part with Government support through the National Defense Science and Engineering Graduate (NDSEG) Fellowship, 32 CFG 168a, awarded by DoD, Air Force Office of Scientific Research. Work done while at the University of Maryland.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Secure multi-party computation (MPC) allows multiple parties with private inputs to compute some agreed-upon function such that all parties learn the output while keeping their inputs private. Introduced in the 1980s [38], MPC has become more practical in recent years, with several companies now using the technology. A particularly important case is secure two-party computation (2PC), which is the focus of this work.

Many existing applications and implementations of 2PC assume that all participants are semi-honest, that is, they follow the protocol but can try to learn sensitive information from the protocol transcript. However, in real-world applications this assumption may not be justified. Although protocols with stronger security guarantees exist, 2PC protocols secure against malicious adversaries are relatively slow, especially when compared to protocols in the semi-honest setting. To address this, researchers have considered variants of the classical, “single-execution” setting for secure two-party computation, including both the batch setting [18, 28, 29, 33] (in which the computational cost is amortized over multiple evaluations of the same function) and the offline/online setting [28, 29, 33] (in which parties perform pre-processing when the circuit—but not the parties’ inputs—is known). The best prior result [33] (done concurrently and independently of our own work) relies on both amortization and pre-processing (as well as extensive parallelization) to achieve an overall amortized time of 6.4 ms for evaluating AES with 40-bit statistical security. Due to the pre-processing, however, it introduces a latency of 5222 ms until the first execution can be done.

In addition, existing 2PC schemes with security against malicious adversaries perform poorly on even moderate-size inputs or very large circuits. For example, the schemes of Lindell [24] and Afshar et al. [1] require a number of public-key operations at least proportional to the statistical security parameter times the sum of one party’s input length and the output length. The schemes tailored to the batch, offline/online setting [29, 33] do not scale well for large circuits due to memory constraints: the garbled circuits created during the offline phase need either to be stored in memory, in which case evaluating very large circuits is almost impossible, or else must be written/read from disk, in which case the online time incurs a huge penaltyFootnote 1 due to disk I/O (see Sect. 5).

Motivated by these issues, we design a new 2PC protocol with security against malicious adversaries that is tailored for the single-execution setting (i.e., no amortization) without any pre-processing. Our protocol uses the cut-and-choose paradigm [25] and the input-recovery approach introduced by Lindell [24], but the number of public-key operations required is independent of the input/output length. Overall, we make the following contributions.

-

Our protocol is more efficient, and often much more efficient, than the previous best protocol with malicious security in the single-execution setting (see Table 1). Concretely, our protocol takes only 65 ms to evaluate an AES circuit over a local-area network, better than the most efficient prior work in the same setting.

-

We identify and fix bottlenecks in various building blocks for secure computation; these fixes may prove useful in subsequent work. As an example, we use Streaming SIMD Extensions (SSE) to improve the performance of oblivious-transfer extension, and improve the efficiency of the XOR-tree technique to avoid high (non-cryptographic) complexity when applied to large inputs. Our optimizations reduce the cost of processing the circuit evaluator’s input by \(1000\times \) for \(2^{16}\)-bit inputs, and even more for larger inputs.

-

We release an open-source implementation, EMP-toolkit [36], with the aim of providing a benchmark for secure computation and allowing other researchers to experiment with, use, and extend our code.

1.1 High-Level Approach

Our protocol is based on the cut-and-choose paradigm. Let f be the circuit the parties want to compute. At a high level, party \(P_{1}\), also called the circuit garbler, begins by generating s garbled circuits for f and sending those to \(P_{2}\), the circuit evaluator. Some portion of those circuits (the check circuits) are randomly selected and checked for correctness by the evaluator, and the remaining circuits (the evaluation circuits) are evaluated. The outputs of the evaluation circuits are then processed in some way to determine the output.

The Input-Recovery Technique. To achieve statistical security \(2^{-{\rho }}\), early cut-and-choose protocols [26, 34, 35] required \(s \approx 3{\rho }\). Lindell [24] introduced the input-recovery technique and demonstrated a protocol requiring only \(s = {\rho }\) garbled circuits of f (plus additional, smaller garbled circuits computing another function). At a high level, the input-recovery technique allows \(P_{2}\) to obtain \(P_{1}\)’s input x if \(P_{1}\) cheats; having done so, \(P_{2}\) can then compute the function itself to learn the output. For example, in one way of instantiating this approach [24], every garbled circuit uses the same output-wire labels for a given output wire i, and moreover the labels on every output wire share the same XOR difference \(\varDelta \). That is, for every wire i, the output-wire label \(Z_{i,0}\) corresponding to ‘0’ is random whereas the output-wire label \(Z_{i,1}\) corresponding to ‘1’ is set to \(Z_{i,1}:=Z_{i,0}\oplus \varDelta \). (The protocol is set up so that \(\varDelta \) is not revealed by the check circuits.) If \(P_{2}\) learns different outputs for some output wire i in two different gabled circuits—which means that \(P_{1}\) cheated—then \(P_{2}\) recovers \(\varDelta \). The parties then run a second 2PC protocol in which \(P_{2}\) learns x if it knows \(\varDelta \); here, input-consistency checks are used to enforce that \(P_{1}\) uses the same input x as before.

Afshar et al. [1] designed an input-recovery mechanism that does not require a secondary 2PC protocol. In their scheme, \(P_{1}\) first commits to its input bit-by-bit using ElGamal encryption; that is, for each bit x[i] of its input, \(P_{1}\) sends \((g^r, h^r g^{x[i]})\) to \(P_{2}\), where \(h := g^\omega \) for some \(\omega \) known only to \(P_{1}\). As part of the protocol, \(P_{1}\) sends \(\{Z_{i,b}+\omega _b\}_{b\in {{\{0,1\}}^{}}}\) to \(P_{2}\) (where, as before, \(Z_{i,b}\) is the label corresponding to bit b for output wire i), with \(\omega =\omega _0+\omega _1\). Now, if \(P_{2}\) learns two different output-wire labels for some output wire, \(P_{2}\) can recover \(\omega \) and hence recover x. Afshar et al. use homomorphic properties of ElGamal encryption to enable \(P_{2}\) to efficiently check that the \(\{Z_{i,b}+\omega _b\}_{b\in {{\{0,1\}}^{}}}\) are computed correctly, and for this bit-by-bit encryption of the input is required. Overall, \(O({\rho }\cdot n)\) public-key operations (where \(|x|=n\)) are needed.

Our construction relies on the same general idea introduced by Afshar et al., but our key innovation is that we are able to replace most of the public-key operations with symmetric-key operations, overall using only \(O({\rho })\) public-key operations rather than \(O({\rho }\cdot n)\); see Sect. 3.1 for details.

Input Consistency. One challenge in the cut-and-choose approach with the input-recovery technique is that \(P_{2}\) needs to enforce that \(P_{1}\) uses the same input x in all the evaluation circuits, as well as in the input-recovery phase. Afshar et al. address this using zero-knowledge proofs to demonstrate (in part) that the ElGamal ciphertexts sent by \(P_{1}\) all commit to the same bit across all evaluation circuits. We observe that it is not actually necessary to ensure that \(P_{1}\) uses the same input x across all evaluation circuits and in the input-recovery step; rather, it is sufficient to enforce that the input x used in the input-recovery step is used in at least one of the evaluation circuits. This results in a dramatic efficiency improvement; see Sect. 3.1 for details.

Preventing a Selective-Failure Attack. 2PC protocols must also prevent a selective-failure attack whereby a malicious \(P_{1}\) uses one valid input-wire label and one invalid input-wire label (for one of \(P_{2}\)’s input wires) in the oblivious-transfer step. If care is not taken, \(P_{1}\) could potentially use this to learn a bit of \(P_{2}\)’s input by observing whether or not \(P_{2}\) aborts. Lindell and Pinkas [25] proposed to deal with this using the XOR-tree approach in which \(P_{2}\) replaces each bit \(y_i\) of its input by \({\rho }\) random bits that XOR to \(y_i\). By doing so, it can be shown that the probability with which \(P_{2}\) aborts is (almost) independent of its actual input. This approach increases the number of oblivious transfers by a factor of \({\rho }\), but this can be improved by using a \({\rho }\)-probe matrix [25, 35], which only increases the length of the effective input by a constant factor.

Nevertheless, this constant-factor blow-up in the number of (effective) input bits corresponds to a quadratic blow-up in the number of XOR operations required. Somewhat surprisingly (since these XORs are non-cryptographic operations), this blow-up can become quite prohibitive. For example, for inputs as small as 4096 bits, we find that the time to compute all the XORs required for a \({\rho }\)-probe matrix is over 3 s! We resolve this bottleneck by breaking \(P_{2}\)’s input into small chunks and constructing smaller \(\rho \)-probe matrices for each chunk, thereby reducing the overall processing required. See Sect. 4 for details.

Results. Combining the above ideas, as well as other optimizations identified in Sect. 4, we obtain a new 2PC protocol with provable security against malicious adversaries; see Sect. 3.2 for a full description. Implementing this protocol, we find that it outperforms prior work by up to several orders of magnitude in the single-execution setting; see Table 1 and Sect. 5.

Subsequent Work. In our extended version [37] we adopt ideas by David et al. [10] to further improve the efficiency of our protocol—especially when communication is the bottleneck—by reducing the communication required for the check circuits (as in [14]).

1.2 Related Work

Since the first implementation of a 2PC protocol with malicious security [27], many implementations with better performance (including those already discussed in the introduction) have been developed [11, 13, 23, 31, 32, 35]. Although other approaches have been proposed for two-party computation with malicious security (e.g., [9, 12, 31]), here we focus on protocols using the cut-and-choose paradigm that is currently the most efficient approach in the single-execution setting when pre-processing is not used. Lindell and Pinkas [25] first showed how to use the cut-and-choose technique to achieve malicious security. Their construction required 680 garbled circuits for statistical security \(2^{-40}\), but this has been improved in a sequence of works [1, 7, 11, 17, 24, 26, 34] to the point where currently only 40 circuits are required.

2 Preliminaries

Let \({\kappa }\) be the computational security parameter and let \({\rho }\) be the statistical security parameter. For a bit-string x, let x[i] denote the ith bit of x. We use the notation \(a := f(\cdots )\) to denote the output of a deterministic function, \(a \leftarrow f(\cdots )\) to denote the output of a randomized function, and \(a \in _R S\) to denote choosing a uniform value from set S. Let \([n] = \{1,\ldots ,n\}\). We use the notation \(({\mathsf {c}},{\mathsf {d}})\leftarrow {\mathsf {Com}} (x)\) for a commitment scheme, where \({\mathsf {c}} \) and \({\mathsf {d}} \) are the commitment and decommitment of x, respectively.

In Figs. 1 and 2, we show functionalities \(\mathcal {F}_\mathbf {\mathsf {OT}}\) and \(\mathcal {F}_\mathbf {\mathsf {cOT}}\) for parallel oblivious transfer (OT) and a weak flavor of committing OT used also by Jawurek et al. [19]. \(\mathcal {F}_\mathbf {\mathsf {cOT}}\) can be made compatible with OT extension as in [19].

Throughout this paper, we use \(P_{1}\) and \(P_{2}\) to denote the circuit garbler and circuit evaluator, respectively. We let \(n_1\), \(n_2\), and \(n_3\) denote \(P_{1}\)’s input length, \(P_{2}\)’s input length, and the output length, respectively.

Two-Party Computation. We use a (standard) ideal functionality for two-party computation in which the output is only given to \(P_{2}\); this can be extended to deliver (possibly different) outputs to both parties using known techniques [25, 34].

Building Blocks. Our implementation of garbled circuits uses all recent optimizations [5, 21, 22, 32, 39]. Our implementation uses the base OT protocol of Chou and Orlandi [8], and the OT extension protocol of Asharov et al. [4].

\({\rho }\) -probe Matrix. A \({\rho }\)-probe matrix, used to prevent selective-failure attacks, is a binary matrix \(M\in {{\{0,1\}}^{n_2\times m}} \) such that for any \(L\subseteq [n_2]\), the Hamming weight of \(\bigoplus _{i\in L}M_i\) (where \(M_i\) is the ith row of M) is at least \({\rho }\). If \(P_{2}\)’s actual input is \(y \in {{\{0,1\}}^{}} ^{n_2}\), then \(P_{2}\) computes its effective input by sampling a random \(y' \in {{\{0,1\}}^{}} ^m\) such that \(y = My'\).

The original construction by Lindell and Pinkas [25] has \(m = \max \{4n_2,8{\rho }\}\). shelat and Shen [35] improved this to \(m = n_2+O({\rho }+ \log (n_2))\). Lindell and Riva [29] proposed to append an identity matrix to M to ensure that M is full rank, and to make it easier to find \(y'\) such that \(y= My'\).

3 Our Protocol

3.1 Protocol Overview

We describe in more detail the intuition behind the changes we introduce. This description is not complete, but only illustrates the main differences from prior work. Full details of our protocol are given in Sect. 3.2.

In our protocol, the two parties first run \({\rho }\) instances of OT, where in the jth instance \(P_{1}\) sends a random key \(\mathsf {key} _j\) and a random seed \(\mathsf {seed} _j\), while \(P_{2}\) chooses whether to learn \(\mathsf {key} _j\) (thereby choosing to make the jth garbled circuit an evaluation circuit) or \(\mathsf {seed} _j\) (thereby choosing to make the jth garbled circuit a check circuit). The protocol is designed such that \(\mathsf {key} _j\) can be used to recover the input-wire labels associated with \(P_{1}\)’s input in the jth garbled circuit, whereas \(\mathsf {seed} _j\) can be used to recover all the randomness used to generate the jth garbled circuit. Thus far, the structure of our protocol is similar to that of Afshar et al. [1]. However, we differ in how we recover \(P_{1}\)’s input if \(P_{1}\) is caught cheating, and in how we ensure input consistency for \(P_{1}\)’s input.

Input Recovery. Recall that we want to ensure that if \(P_{2}\) detects cheating by \(P_{1}\), then \(P_{2}\) can recover \(P_{1}\)’s input. This is done by encoding some trapdoor in the output-wire labels of the garbled circuits such that if \(P_{2}\) learns both labels for some output wire (in different garbled circuits) then \(P_{2}\) can recover the trapdoor and thus learn \(P_{1}\)’s input. In slightly more detail, input recovery consists of the following high-level steps:

-

1.

\(P_{1}\) “commits to” its input x using some trapdoor.

-

2.

\(P_{1}\) sends garbled circuits and the input-wire labels associated with x, using an input-consistency protocol (discussed below) to enforce that consistent input-wire labels are used.

-

3.

\(P_{1}\) and \(P_{2}\) run some protocol such that if \(P_{2}\) detects cheating by \(P_{1}\), then \(P_{2}\) gets the trapdoor without \(P_{1}\) learning this fact.

-

4.

\(P_{2}\) either (1) detects cheating, recovers x using the trapdoor, and locally computes (and outputs) f(x, y), or (2) outputs the (unique) output of the evaluated garbled circuits, which is f(x, y).

In Afshar et al. [1], the above is done using ElGamal encryption and efficient zero-knowledge checks to enforce input consistency. However, this approach requires \(O({\rho }\cdot (n_1+n_3))\) public-key operations. In contrast, our protocol achieves the same functionality with only \(O({\rho })\) public-key operations.

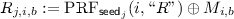

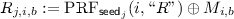

Our scheme works as follows. Assume for ease of presentation that \(P_{1}\)’s input x is a single bit and the output of the function is also a single bit. The parties run an OT protocol in which \(P_{1}\) inputs x and \(P_{2}\) inputs two random labels \(M_0,M_1\), with \(P_{1}\) receiving \(M_x\). Then, for the jth garbled circuit, \(P_{1}\) “commits” to x by computing  and sending to \(P_{2}\) an encryption of \(R_{j,x}\) under \(\mathsf {key} _j\). Note that \(P_{1}\) cannot “commit” to \(1-x\) unless \(P_{1}\) can guess \(M_{1-x}\). Also, if \(P_{1}\) is honest then x remains hidden from \(P_{2}\) because \(P_{2}\) knows either \(\mathsf {key} _j\) or \(\mathsf {seed} _j\) for each j, but not both. The value \(\mathsf {seed} _j\) for any evaluation circuit j serves as a trapdoor since, in conjunction with the value \(\mathsf {key} _j\) that \(P_{2}\) already has, it allows \(P_{2}\) to learn \(M_x\) (and hence determine x).

and sending to \(P_{2}\) an encryption of \(R_{j,x}\) under \(\mathsf {key} _j\). Note that \(P_{1}\) cannot “commit” to \(1-x\) unless \(P_{1}\) can guess \(M_{1-x}\). Also, if \(P_{1}\) is honest then x remains hidden from \(P_{2}\) because \(P_{2}\) knows either \(\mathsf {key} _j\) or \(\mathsf {seed} _j\) for each j, but not both. The value \(\mathsf {seed} _j\) for any evaluation circuit j serves as a trapdoor since, in conjunction with the value \(\mathsf {key} _j\) that \(P_{2}\) already has, it allows \(P_{2}\) to learn \(M_x\) (and hence determine x).

The next step is to devise a way for \(P_{2}\) to recover \(\mathsf {seed} _j\) if it learns inconsistent output-wire labels in two different evaluation circuits. We do this as follows. First, \(P_{1}\) chooses random \(\varDelta , \varDelta _0, \varDelta _1\) such that \(\varDelta = \varDelta _0 \oplus \varDelta _1\). Then, for all j it encrypts \(\varDelta _0\) using \(Z_{j,0}\) and encrypts \(\varDelta _1\) using \(Z_{j,1}\), where \(Z_{j,0}, Z_{j,1}\) are the two output-wire labels of the jth garbled circuit. It sends all these encryptions to \(P_{2}\). Thus, if \(P_{2}\) learns \(Z_{j_1,0}\) for some \(j_1\) it can recover \(\varDelta _0\), and if it learns \(Z_{j_2,1}\) for some \(j_2\) it can recover \(\varDelta _1\). If it learns both output-wire labels, it can then of course recover \(\varDelta \).

\(P_{1}\) and \(P_{2}\) then run a protocol that guarantees that if \(P_{2}\) knows \(\varDelta \) it recovers \(\mathsf {seed} _j\), and otherwise it learns nothing. This is done as follows. \(P_{2}\) sets \(\varOmega :=\varDelta \) if it learned \(\varDelta \), and sets \(\varOmega := 1\) otherwise. \(P_{2}\) then computes \((h, g_1, h_1) := (g^\omega , g^r, h^{r} \varOmega )\), for random \(\omega \) and r, and sends \((h, g_1, h_1)\) to \(P_{1}\). Then, for each index j, party \(P_{1}\) computes \(C_j := g^{s_j} h^{t_j}\) and \(D_j := g_1^{s_j} (h_1 / \varDelta )^{t_j}\) for random \(s_j, t_j\), and sends \(C_j\) along with an encryption of \(\mathsf {seed} _j\) under \(D_j\). Note that if \(\varOmega = \varDelta \), then \(C_j^r = D_j\) and thus \(P_{2}\) can recover \(\mathsf {seed} _j\), whereas if \(\varOmega \ne \varDelta \) then \(P_{2}\) learns nothing (in an information-theoretic sense).

Of course, the protocol as described does not account for the fact that \(P_{1}\) can send invalid messages or otherwise try to cheat. However, by carefully integrating appropriate correctness checks as part of the cut-and-choose process, we can guarantee that if \(P_{1}\) tries to cheat then \(P_{2}\) either aborts (due to detected cheating) or learns \(P_{1}\)’s input with high probability without leaking any information.

Input Consistency. As discussed in Sect. 1.1, prior schemes enforce that \(P_{1}\) uses the same input x for all garbled circuits and also for the input-recovery sub-protocol. However, we observe that this is not necessary. Instead, it suffices to ensure that \(P_{1}\) uses the same input in the input-recovery sub-protocol and at least one of the evaluated garbled circuit. Even if \(P_{1}\) cheats by using different inputs in two different evaluated garbled circuits, \(P_{2}\) still obtains the correct output: if \(P_{2}\) learns only one output then this is the correct output; if \(P_{2}\) learns multiple outputs, then the input-recovery procedure ensures that \(P_{2}\) learns x and so can compute the correct output.

We ensure the above weaker notion of consistency by integrating the consistency check with the cut-and-choose process as follows. Recall that in our input-recovery scheme, \(P_{1}\) sends to \(P_{2}\) a “commitment”  for each index j. After these commitments are sent, we now have \(P_{2}\) reveal \(M_0\oplus M_1\) to \(P_{1}\) (we use committing OT for this purpose), so \(P_{1}\) learns both \(M_0\) and \(M_1\). \(P_{1}\) then computes and sends (in a randomly permuted order) \({\mathsf {Com}} (R_{j,0}, A_{j,0})\) and \({\mathsf {Com}} (R_{j,1}, A_{j,1})\), where \(A_{j,0},A_{j,1}\) are \(P_{1}\)’s input-wire labels in the jth garbled circuit and the commitments are generated using randomness derived from \(\mathsf {seed} _j\). \(P_{1}\) also sends \({\mathsf {Enc}} _{\mathsf {key} _j}(\mathsf {Decom}({\mathsf {Com}} (R_{j,x},A_{j,x})))\). Note that (1) if \(P_{2}\) chose j as a check circuit then it can check correctness of the commitment pair, since everything is computed from \(\mathsf {seed} _j\), and (2) if \(P_{2}\) chose j as an evaluation circuit then it can open the appropriate commitment to recover \(R_{j,x}\), and check that this matches the value sent before.

for each index j. After these commitments are sent, we now have \(P_{2}\) reveal \(M_0\oplus M_1\) to \(P_{1}\) (we use committing OT for this purpose), so \(P_{1}\) learns both \(M_0\) and \(M_1\). \(P_{1}\) then computes and sends (in a randomly permuted order) \({\mathsf {Com}} (R_{j,0}, A_{j,0})\) and \({\mathsf {Com}} (R_{j,1}, A_{j,1})\), where \(A_{j,0},A_{j,1}\) are \(P_{1}\)’s input-wire labels in the jth garbled circuit and the commitments are generated using randomness derived from \(\mathsf {seed} _j\). \(P_{1}\) also sends \({\mathsf {Enc}} _{\mathsf {key} _j}(\mathsf {Decom}({\mathsf {Com}} (R_{j,x},A_{j,x})))\). Note that (1) if \(P_{2}\) chose j as a check circuit then it can check correctness of the commitment pair, since everything is computed from \(\mathsf {seed} _j\), and (2) if \(P_{2}\) chose j as an evaluation circuit then it can open the appropriate commitment to recover \(R_{j,x}\), and check that this matches the value sent before.

3.2 Protocol Details and Proof of Security

We present the full details of our protocol in Figs. 3 and 4. To aid in understanding the protocol, we also present a graphical depiction in Fig. 5. We summarize some important notations in Table 2 for reference.

Our protocol, including the optimizations detailed in Sect. 4, requires a total of \(O({\rho }\cdot (n_1+n_2+n_3+|C|))\) symmetric-key operations and \(O({\rho }+{\kappa })\) group operations. Most of the symmetric-key operations, including circuit garbling and computing the \(\text {PRF} \)s, can be accelerated using hardware AES instructions.

Theorem 1

Let \({\mathsf {Com}} \) be a computationally hiding/binding commitment scheme, let the garbling scheme satisfy authenticity, privacy, and obliviousness (cf. [6]), let H be collision-resistant, and assume the decisional Diffie-Hellman assumption holds. Then the protocol in Figs. 3 and 4 securely computes f in the \((\mathcal {F}_\mathbf {\mathsf {OT}},\mathcal {F}_\mathbf {\mathsf {cOT}})\)-hybrid model with security \(2^{-{\rho }}+\mathsf{negl}({\kappa })\).

Proof

We consider separately the case where \(P_{1}\) or \(P_{2}\) is malicious.

Malicious \(P_1\) . Our proof is based on the fact that with all but negligible probability, \(P_{2}\) either aborts or learns the output f(x, y), where x is the input \(P_{1}\) sent to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\) in Step 3 and y is \(P_{2}\)’s input. We rely on the following lemma, which we prove in Sect. 3.3.

Lemma 1

Consider an adversary \(\mathcal {A}_{}\) corrupting \(P_{1}\) and denote x as the input \(\mathcal {A}_{}\) sends to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\) in Step 3. With probability at least \(1-2^{-{\rho }} - \mathsf{negl}(\kappa )\), \(P_{2}\) either aborts or learns f(x, y).

Given this, the simulator essentially acts as an honest \(P_{2}\) using input 0, extracts \(P_{1}\)’s input x from the call to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\), and outputs f(x, y) if no party aborts.

We now proceed to the formal details. Let \(\mathcal {A}_{}\) be an adversary corrupting \(P_{1}\). We construct a simulator \(\mathcal S _{}\) that runs \(\mathcal {A}_{}\) as a subroutine and plays the role of \(P_{1}\) in the ideal world involving an ideal functionality \(\mathcal {F}\) evaluating f. \(\mathcal S _{}\) is defined as follows.

-

1–2

\(\mathcal S _{}\) interacts with \(\mathcal {A}_{}\), acting as an honest \(P_{2}\) using input 0.

-

3

\(\mathcal S _{}\) obtains the input x that \(\mathcal {A}_{}\) sends to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\). It forwards x to \(\mathcal {F}\).

-

4–6

\(\mathcal S _{}\) acts as an honest \(P_{2}\), where if \(P_{2}\) would abort then \(\mathcal S _{}\) sends \(\mathsf {abort}\) to \(\mathcal {F}\) and halts, outputting whatever \(\mathcal {A}_{}\) outputs.

-

7–8

\(\mathcal S _{}\) acts as an honest \(P_{2}\) using \(\varOmega := 1\), where if \(P_{2}\) would abort then \(\mathcal S _{}\) sends \(\mathsf {abort}\) to \(\mathcal {F}\) and halts, outputting whatever \(\mathcal {A}_{}\) outputs.

-

9

\(\mathcal S _{}\) acts as an honest \(P_{2}\), except that after the check in Step 9a, \(\mathcal S _{}\) also checks if \(\{B_{j,i,b}\}_{j\notin {\mathcal {E}},i\in [m],b\in {{\{0,1\}}^{}}}\) are correctly computed and aborts if, for at least \({\rho }\) different \(i\in [m]\), \(\{B_{j,i,b}\}_{j\notin {\mathcal {E}},b\in {{\{0,1\}}^{}}}\) contains incorrect values. If \(P_{2}\) would abort then \(\mathcal S _{}\) sends \(\mathsf {abort}\) to \(\mathcal {F}\) and halts, outputting whatever \(\mathcal {A}_{}\) outputs; otherwise, \(\mathcal S _{}\) sends \(\mathsf {continue}\) to \(\mathcal {F}\).

We now show that the joint distribution over the outputs of \(\mathcal {A}_{}\) and the honest \(P_{2}\) in the real world is indistinguishable from their joint distribution in the ideal world.

- \(\mathbf {H_{}} _{\mathbf{1}}\).:

-

Same as the hybrid-world protocol, where \(\mathcal S _{}\) plays the role of an honest \(P_{2}\) using the actual input y.

- \(\mathbf {H_{}} _{\mathbf{2}}\).:

-

\(\mathcal S _{}\) now extracts the input x that \(\mathcal {A}_{}\) sends to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\) and sends x to \(\mathcal {F}_\mathbf {}\) if no party aborts. \(\mathcal S _{}\) also performs the additional checks as described above in Step 9 of the simulator.

There are two ways \(\mathcal {A}_{}\) would cheat here, and we address each in turn. For simplicity, we let \(I\subset [m]\) denote the set of indices i such that \(B_{j,i,b}\) is not correctly computed.

- 1.:

-

\(\mathcal {A}_{}\) launches a selective-failure attack with \(|I|< {\rho }\). Lemma 1 ensures (in \(\mathbf {H_{1}}\)) that \(P_{2}\) either aborts or learns f(x, y) with probability at least \(1-2^{-{\rho }}\). In \(\mathbf {H_{2}}\), note that \(P_{2}\) either aborts or learns f(x, y) with probability 1. Further, since fewer than \({\rho }\) wires are corrupted, the probability of an abort due to the selective-failure attack is exactly the same in both hybrids. Therefore the statistical distance between \(\mathbf {H_{1}}\) and \(\mathbf {H_{2}}\) is at most \(2^{-{\rho }}\).

- 2.:

-

\(\mathcal {A}_{}\) launches a selective-failure attack with \(|I|\ge {\rho }\). By the security of the \({\rho }\)-probe matrix [29], \(\mathcal S _{}\) aborts in \(\mathbf {H_{1}}\) with probability at least \(1-2^{-{\rho }}\). (If \(\mathcal {A}_{}\) cheats elsewhere, the probability of abort can only increase.) But in \(\mathbf {H_{2}}\) in this case \(P_{2}\) aborts with probability 1, and so there is at most a \(2^{-{\rho }}\) difference between \(\mathbf {H_{1}}\) and \(\mathbf {H_{2}}\).

- \(\mathbf {H_{}} _\mathbf{3}\).:

-

Same as \(\mathbf {H_{2}}\), except \(\mathcal S _{}\) uses \(y:=0\) throughout of protocol and sets \(\varOmega :=1\) in Step 7.

In [\(\mathbf {H_{}} _\mathbf{3}\)], \(P_{2}\) sends \((h,g_1,h_1):=(g^\omega ,g^r,g^{\omega r})\), which is indistinguishable from \((g^\omega ,g^r,g^{\omega r}\varOmega )\) by the decisional Diffie-Hellman problem. Computationally indistinguishability of \(\mathbf {H_{2}}\) and \(\mathbf {H_{3}}\) follows.

As \(\mathbf {H_{3}}\) is the ideal-world execution, the proof is complete. \(\square \)

Malicious \(P_2\) . Here, we need to simulate the correct output f(x, y) that \(P_{2}\) learns. Rather than simulate the garbled circuit, as is done in most prior work, we modify the output-mapping tables \(\{T_{j,i,b}\}\) to encode the correct output. At a high level, the simulator acts as an honest \(P_{1}\) with input 0, which lets \(P_{2}\) learn the output-wire labels for f(0, y) when evaluating the garbled circuits. The simulator then “tweaks” the output mapping tables \(\{T_{j,i,b}\}\) to ensure that \(P_{2}\) reconstructs the “correct” output f(x, y).

We now proceed to the formal details. Let \(\mathcal {A}_{}\) be an adversary corrupting \(P_{2}\); we construct a simulator \(\mathcal S _{}\) as follows.

-

1

\(\mathcal S _{}\) acts as an honest \(P_{1}\) and obtains the set \({\mathcal {E}} \) that \(\mathcal {A}_{}\) sends to \(\mathcal {F}_\mathbf {\mathsf {OT}}\).

-

2

\(\mathcal S _{}\) acts as an honest \(P_{1}\), and obtains the input \(y'\) that \(\mathcal {A}_{}\) sends to \(\mathcal {F}_\mathbf {\mathsf {OT}}\). \(\mathcal S _{}\) computes y from \(y'\), sends \((\mathsf {input}, y)\) to \(\mathcal {F}_\mathbf {}\), which sends back \(z := f(x,y)\) to \(\mathcal S _{}\).

-

3

\(\mathcal S _{}\) acts as an honest \(P_{1}\) with input \(x:=0\). That is, \(\mathcal S _{}\) receives \(\{M_{i,b}\}\) labels and sends \(\{{\mathsf {Enc}} _{\mathsf {key} _j}(\{R_{j,i,0}\}_{i\in [n_1]})\}_{j\in [{\rho }]}\) to \(\mathcal {A}_{}\). If \(\mathcal {A}_{}\) send \(\mathsf {abort}\) to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\), \(\mathcal S _{}\) aborts, outputting whatever \(\mathcal {A}_{}\) outputs.

-

4

\(\mathcal S _{}\) acts as an honest \(P_{1}\) with input \(x:=0\). That is, \(\mathcal S _{}\) sends \(\{({\mathsf {c}} ^R_{j,i,0}, {\mathsf {c}} ^R_{j,i,1})\}\) (in random permuted order) and \({\mathsf {Enc}} _{\mathsf {key} _j}(\{{\mathsf {d}} ^R_{j,i,0}\}_{i\in [n_1]})\) to \(\mathcal {A}\).

-

5

\(\mathcal S _{}\) acts as an honest \(P_{1}\), except as follows. \(\mathcal S _{}\) computes \(z' := f(0, y)\) and for \(j\in {\mathcal {E}} \), \(i\in [n_3]\), and \(b\in {{\{0,1\}}^{}} \), sets \(T_{j,i,b}:={\mathsf {Enc}} _{Z_{j,i,b}}(\varDelta _{i,1-b})\) if \(z[i] \ne z'[i]\).

-

6–7

\(\mathcal S _{}\) acts as an honest \(P_1\).

We now show that the joint distribution over the outputs of the honest \(P_{1}\) and \(\mathcal {A}_{}\) in the real world is indistinguishable from their joint distribution in the ideal world.

- \(\mathbf {H_{}} _\mathbf{1}\).:

-

Same as the hybrid-world protocol, where \(\mathcal S _{}\) plays the role of an honest \(P_{1}\).

- \(\mathbf {H_{}} _\mathbf{2}\).:

-

\(\mathcal S _{}\) extracts \(P_{2}\)’s input y from \(\mathcal {F}_\mathbf {\mathsf {OT}}\) and sends \((\mathsf {input}, y)\) to \(\mathcal {F}_\mathbf {}\), receiving back z. \(\mathcal S _{}\) uses \(x:=0\) throughout the simulation and “tweaks” \(\{T_{j,i,b}\}\) as is done by the simulator using knowledge of z.

\(\mathbf {H_{1}}\) and \(\mathbf {H_{2}}\) are the same except:

- 1.:

-

In \(\mathbf {H_{1}}\), \(P_{2}\) learns \(\{R_{j,i,x[i]}\}_{j\in {\mathcal {E}}}\), while \(P_{2}\) learns \(\{R_{j,i,0}\}_{j\in {\mathcal {E}}}\) in \(\mathbf {H_{2}}\). Note that these values are computed such that

. Since \(P_{2}\) does not know any \(\{\mathsf {seed} _j\}_{j\in {\mathcal {E}}}\),

. Since \(P_{2}\) does not know any \(\{\mathsf {seed} _j\}_{j\in {\mathcal {E}}}\),  looks random to \(P_{2}\). Because only one of \(\{R_{j,i,b}\}_{b\in {{\{0,1\}}^{}}}\) is given in both \(\mathbf {H_{}}\)s, \(R_{j,i,x[i]}\) in \(\mathbf {H_{1}}\) and \(R_{j,i,0}\) in \(\mathbf {H_{2}}\) are uniformly random to \(P_{2}\).

looks random to \(P_{2}\). Because only one of \(\{R_{j,i,b}\}_{b\in {{\{0,1\}}^{}}}\) is given in both \(\mathbf {H_{}}\)s, \(R_{j,i,x[i]}\) in \(\mathbf {H_{1}}\) and \(R_{j,i,0}\) in \(\mathbf {H_{2}}\) are uniformly random to \(P_{2}\). - 2.:

-

In \(\mathbf {H_{1}}\), party \(P_{2}\) gets \(Z_{j,i,z[i]}\) and \(T_{j,i,z[i]}:={\mathsf {Enc}} _{Z_{j,i,z[i]}}(\varDelta _{i,z[i]})\), while in \(\mathbf {H_{2}}\), if \(z[i] \ne z'[i]\) then \(P_{2}\) gets \(Z_{j,i,1-z[i]}\) and \(T_{j,i,1-z[i]}:={\mathsf {Enc}} _{Z_{j,i,1-z[i]}}(\varDelta _{i,z[i]})\) instead. In both hybrids, \(P_{2}\) cannot learn any information about the other output-wire label due to the authenticity property of the garbled circuit.

By the obliviousness property of the garbling scheme, \(Z_{j,i,0}\) and \(Z_{j,i,1}\) are indistinguishable. Likewise, by the security of the encryption scheme the values \(T_{j,i,0}\) and \(T_{j,i,1}\) are indistinguishable.

As \(\mathbf {H_{2}}\) is the same as the ideal-world execution, the proof is complete.

3.3 Proving Lemma 1

We now prove a series of lemmas toward proving Lemma 1. We begin with a definition of what it means for an index \(j\in [{\rho }]\) to be “good.”

Definition 1

Consider an adversary \(\mathcal {A}_{}\) corrupting \(P_{1}\), and denote \(\{\mathsf {seed} _j\}\) as the labels \(\mathcal {A}_{}\) sent to \(\mathcal {F}_\mathbf {\mathsf {OT}}\). An index \(j\in [{\rho }]\) is good if and only if all the following hold.

-

1.

The \(B_{j,i,y'[i]}\) values \(\mathcal {A}_{}\) sent to \(\mathcal {F}_\mathbf {\mathsf {OT}}\) in Step 2 are computed honestly using \(\mathsf {seed} _j\).

-

2.

The commitments \(\{{\mathsf {c}} ^R_{j,i,b}\}_{i\in [n_1],b\in {{\{0,1\}}^{}}}\) that \(\mathcal {A}_{}\) sent to \(P_{2}\) in Step 4 are computed honestly using \(\mathsf {seed} _j\).

-

3.

\(\text {GC} _j\) is computed honestly using \(\{A_{j,i,b}\}\) and \(\{B_{j,i,b}\}\) as the input-wire labels and \(\mathsf {seed} _j\).

-

4.

The values \(C_j\) and \({\mathsf {Enc}} _{D_j}(\mathsf {seed} _j)\) are computed honestly using \(\mathsf {seed} _j\) and the \(\varDelta \) value sent by \(\mathcal {A}_{}\) in Step 7.

-

5.

The commitment \({\mathsf {c}} ^T_j\) is computed honestly using \(\varDelta _{i,b}\) and \(\mathsf {seed} _j\).

It is easy to see the following.

Fact. If an index \(j\in [{\rho }]\) is not good then it cannot pass all the checks in Step 9.

We first show that \(P_{2}\) is able to recover the correct output-wire labels for a good index.

Lemma 2

Consider an adversary \(\mathcal {A}_{}\) corrupting \(P_{1}\), and denote x as the input \(\mathcal {A}_{}\) sent to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\). If an index \(j\in {\mathcal {E}} \) is good and \(P_{2}\) does not abort, then with all but negligible probability \(P_{2}\) learns output labels \(Z_{j,i,z[i]}\) with \(z=f(x,y)\).

Proof

Since j is good, we know that \(P_{2}\) receives an honestly computed \(\text {GC} _j\) and \(T_{j,i,b}\) from \(\mathcal {A}_{}\) and honest \(B_{j,i,y'[i]}\) from \(\mathcal {F}_\mathbf {\mathsf {OT}}\). However, it is still possible that \(P_{2}\) does not receive correct input labels for \(P_{1}\)’s input that corresponds to the input x that \(\mathcal {A}_{}\) sent to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\). We will show that this can only happen with negligible probability.

Note that if j is good, then the commitments \(\{{\mathsf {c}} ^R_{j,i,b}\}\) are computed correctly. Since \(P_{2}\) obtains the \(A_{j,i,x[i]}\) labels by decommitting one of these commitments, the labels \(P_{2}\) gets are valid input-wire labels, although they may not be consistent with the input x that \(\mathcal {A}_{}\) sent to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\).

Assume that for some \(i\in [n_1]\), \(P_{2}\) receives \(A_{j,i,1-x[i]}\). This means \(P_{2}\) also receives \(R_{j,i,1-x[i]}\) from the same decommitment, since \({\mathsf {c}} _{j,i,b}\) is computed honestly. However, if \(P_{2}\) does not abort, then we know that \(P_{2}\) receives the same label \(R_{j,i,1-x[i]}\) in Step 3 since the checks pass. We also know that

Therefore \(\mathcal {A}_{}\) needs to guess \(M_{i,1-x[i]}\) correctly before \(P_{2}\) sends both labels, which happens with probability at most \(2^{-{\kappa }}\).

We next show that \(P_{2}\) can recover x if \(P_{1}\) tries to cheat on a good index.

Lemma 3

Consider an adversary \(\mathcal {A}_{}\) corrupting \(P_{1}\), and denote x as the input \(\mathcal {A}_{}\) sent to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\). If an index \(j\in {\mathcal {E}} \) is good and \(P_{2}\) learns \(\varOmega =\varDelta \), then \(P_{2}\) can recover \(x_j=x\) in Step 8 if no party aborts.

Proof

Since j is good, we know that \(C_j\) and \({\mathsf {Enc}} _{D_j}(\mathsf {seed} _j)\) are constructed correctly, where \(\mathsf {seed} _j\) is the one \(P_{1}\) sent to \(\mathcal {F}_\mathbf {\mathsf {OT}}\) in Step 1. Therefore, \(P_{2}\) can recompute \(\mathsf {seed} _j\) from them. We just need to show that \(P_{2}\) is able to recover x from a good index using \(\mathsf {seed} _j\).

Using a similar argument as the previous proof, we can show that the label \(R_{j,i,x[i]}\) that \(P_{2}\) learns in Step 4 is a correctly computed label using x that \(P_{1}\) sent to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\) in Step 3: Since j is good, the \({\mathsf {c}} ^R_{j,i,b}\) values are all good, which means that the \(R_{j,i,x[i]}\) labels \(P_{2}\) learns are valid. However, \(P_{1}\) cannot “flip” the wire label unless \(P_{1}\) guesses a random label correctly, which happens with negligible probability.

In conclusion, \(P_{2}\) has the correct  and the \(\mathsf {seed} _j\) used in the computation. Further \(P_{2}\) has \(M_{i,0},M_{i,1}\). Therefore \(P_{2}\) can recover x that \(P_{1}\) sent to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\) if \(P_{2}\) has \(\varOmega =\varDelta \).

and the \(\mathsf {seed} _j\) used in the computation. Further \(P_{2}\) has \(M_{i,0},M_{i,1}\). Therefore \(P_{2}\) can recover x that \(P_{1}\) sent to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\) if \(P_{2}\) has \(\varOmega =\varDelta \).

Note that given the above lemma, it may still be possible that a malicious \(P_{1}\) acts in such a way that \(P_{2}\) recovers different x’s from different indices. In the following we show this only happens with negligible probability.

Lemma 4

Consider an adversary \(\mathcal {A}_{}\) corrupting \(P_{1}\) and denote x as the input \(P_{1}\) sends to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\) in Step 3. If \(P_{2}\) does not abort, then \(P_{2}\) recovers some \(x'\ne x\) with negligible probability.

Proof

Our proof is by contradiction. Assume that \(P_{2}\) does not abort and recovers some \(x' \ne x\) for some \(j\in {\mathcal {E}} \). Let i be an index at which \(x'[i] \ne x[i]\); we will show in the following that \(\mathcal {A}_{}\) will have to complete some task that is information-theoretically infeasible, and thus a contradiction.

Since \(P_{2}\) does not abort at Step 4, we can denote \(R_{j,i,x[i]}\) as the label \(P_{1}\) learns in Step 3, which also equals the one decommitted to in Step 4. \(P_{2}\) recovering some \(x'\) means that

where \(\mathsf {seed} '_j\) is the seed \(P_{2}\) recovers in Step 7. Therefore we conclude that

Although \(\mathcal {A}_{}\) receives \(M_{i,x[i]}\) in Step 3, \(M_{i,1-x[i]}\) remains completely random before \(\mathcal {A}_{}\) sends \(R_{j,i,x[i]}\). Further, \(\mathcal {A}_{}\) receives \(M_{i,b}\) only after sending \(R_{j,i,x[i]}\). Therefore, the value of \(R_{j,i,x[i]}\oplus M_{i,1-x[i]}\) is completely random to \(\mathcal {A}_{}\). If \(\mathcal {A}_{}\) wants to “flip” a bit in x, \(\mathcal {A}_{}\) needs to find some \(\mathsf {seed} '_j\) such that  equals a randomly chosen string, which is information theoretically infeasible if \(n_1 > 1\).

equals a randomly chosen string, which is information theoretically infeasible if \(n_1 > 1\).

Finally, we are ready to prove Lemma 1, namely, that \(P_{2}\) either aborts or learns f(x, y), regardless of \(P_{1}\)’s behavior.

Lemma 1

Consider an adversary \(\mathcal {A}_{}\) corrupting \(P_{1}\) and denote x as the input \(P_{1}\) sends to \(\mathcal {F}_\mathbf {\mathsf {cOT}}\) in Step 3. With probability at least \(1-(2^{-{\rho }} + \mathsf{negl}(\kappa ))\), \(P_{2}\) either aborts or learns f(x, y).

Proof

Denote the set of \(P_{1}\)’s good circuits as \({\mathcal {E}} '\) and consider the following three cases:

-

\(\mathbf {\bar{{\mathcal {E}}}\cap \bar{{\mathcal {E}} '}\ne \emptyset }\). In this case \(P_{2}\) aborts because \(P_{2}\) checks some \(j\notin {\mathcal {E}} '\) which is not a good index.

-

\(\mathbf {{\mathcal {E}} \cap {\mathcal {E}} '\ne \emptyset }\). In this case, there is some \(j\in {\mathcal {E}} \cap {\mathcal {E}} '\), which means \(P_{2}\) learns \(z:=f(x,y)\) and \(Z_{j,i,z[i]}\) from the jth garbled circuit (by Lemma 2). However, it is still possible that \(P_{2}\) learns more than one valid z. If this is the case, \(P_{2}\) learns \(\varDelta \). Lemma 3 ensures that \(P_{2}\) obtains x; Lemma 4 ensures that \(P_{2}\) cannot recover any other valid \(x'\) even from bad indices.

-

\(\mathbf {{\mathcal {E}} ={\mathcal {E}} '}\). This only happens when \(\mathcal {A}_{}\) guesses \({\mathcal {E}} \) correctly, which happens with at most \(2^{-{\rho }}\) probability.

This completes the proof.

3.4 Universal Composability

Note that in our proof of security, the simulators do not rewind in any of the steps. Similarly, none of the simulators in the hybrid arguments need any rewinding. Therefore, if we instantiate all the functionalities using UC-secure variants then the resulting 2PC protocol is UC-secure.

4 Optimizations

We now discuss several optimizations we discovered in the course of implementing our protocol, some of which may be applicable to other malicious 2PC implementations.

4.1 Optimizing the XOR-tree

We noticed that when using a \({\rho }\)-probe matrix to reduce the number of OTs needed for the XOR-tree, we incurred a large performance hit when \(P_{2}\)’s input was large. In particular, processing the XOR gates introduced by the XOR-tree, which are always assumed to be free due to the free-XOR technique [22], takes a significant amount of time. The naive XOR-tree [25] requires \({\rho }n\) OTs and \({\rho }n\) XOR gates; on the other hand, using a \({\rho }\)-probe matrix of dimension \(n\times cn\), with \(c\ll {\rho }\), requires cn OTs but \(c n^2\) XOR gates. We observe that this quadratic blowup becomes prohibitive as \(P_{2}\)’s input size increases: for a 4096-bit input, it takes more than 3 s to compute just the XORs in the \({\rho }\)-probe matrix of Lindell and Riva [29] across all circuits. Further, it also introduces a large memory overhead: it takes gigabytes of memory just to store the matrix for 65,536-bit inputs.

In the following we introduce two new techniques to both asymptotically reduce the number of XOR gates required and concretely reduce the hidden constant factor in the \({\rho }\)-probe matrix.

A General Transformation to a Sparse Matrix. We first asymptotically reduce the number of XORs needed. Assuming a \({\rho }\)-probe matrix with dimensions \(n\times cn\), we need \(c{\rho }n^2\) XOR gates to process the \({\rho }\)-probe matrices across all \({\rho }\) circuits. Our idea to avoid this quadratic growth in n is to break \(P_{2}\)’s input into small chunks, each of size k. When computing the random input \(y'\), or recovering y in the garbled circuits, we process each chunk individually. By doing so, we reduce the complexity to \({\rho }\cdot \frac{n}{k} c(k)^2=ck{\rho }n\). By choosing \(k=2{\rho }\), this equates to a \(51\times \) decrease in computation even for just 4096-bit inputs. This also eliminates the memory issue, since we only need a very small matrix for any input size.

A Better \({\rho }\) -probe Matrix. After applying the above technique, our problem is reduced to finding an efficient \({\rho }\)-probe matrix for k-bit inputs for some small k, while maintaining a small blowup c. We show that a combination of the previous solutions [25, 29] with a new tighter analysis results in a better solution, especially for small k. Our solution can be written as \(A=[M \Vert I_k]\), where \(M\in {{\{0,1\}}^{k\times (c-1)k}} \) is a random matrix and \(I_k\) is an identity matrix of dimension k. The use of \(I_k\) makes it easy to find a random \(y'\) such that \(y=Ay'\) for any y, and ensures that A is full rank [29]. However, we show that it also helps to reduce c. The key idea is that the XOR of any i rows of A has Hamming weight at least i, contributed by \(I_k\), so we do not need as much Hamming weight from the random matrix as in prior work [25].

In more detail, for each \(S\subseteq [k]\), denote \(M_S:=\bigoplus _{i\in S}M_i\) and use random variable \(X_S\) to denote the number of ones in \(M_S\). In order to make A a \({\rho }\)-probe matrix, we need to ensure that \(X_S + |S| \ge {\rho }\) for any \(S\subseteq [k]\), because XORing any |S| rows from \(I_k\) gives us a Hamming weight of |S|.

\(X_S\) is a random variable with distribution \(\text {Bin}(ck-k,\frac{1}{2})\). Therefore, we can compute the probability that A is not a \({\rho }\)-probe matrix as follows:

where cdf() is the cumulative distribution function for \(\text {Bin}(ck-k, \frac{1}{2})\). Now, for each k we can find the smallest c such that \(\Pr [A\text { is bad}] \le 2^{-{\rho }}\); we include some results in Table 3. We see that our new \({\rho }\)-probe matrix achieves smaller c than prior work [25, 35]. Note that the number of XORs is \(c{\rho }kn\) and the number of OTs is cn. In our implementation we use \(k=232\) and \(c=2\) to achieve the maximum overall efficiency.

Performance Results. See Fig. 6 for a comparison between our approach and the best previous scheme [35]. When the input is large, the cost of computing the \({\rho }\)-probe matrix over all circuits dominates the overall cost. As we can see, our design is about \(10\times \) better for 1,024-bit inputs and can be \(1000\times \) better for 65,536-bit inputs. We are not able to compare beyond this point, because just storing the \({\rho }\)-probe matrix for 262,144 bits for the prior work takes at least 8.59 GB of memory.

4.2 Other Optimizations

Oblivious Transfer with Hardware Acceleration. As observed by Asharov et al. [3], matrix transposition takes a significant amount of the time during the execution of OT extension. Rather than adopting their solution using cache-friendly matrix transposition, we found that a better speedup can be obtained by using matrix transposition routines based on Streaming SIMD Extensions (SSE) instructions [30]. The use of SSE-based matrix transposition in the OT extension protocol is also independently studied in a concurrent work by Keller et al. [20] in a multi-party setting.

Comparing the cost of our \({\rho }\)-probe matrix design with the prior best scheme [35]. When used in a malicious 2PC protocol, computing the \({\rho }\)-probe matrix needs to be done \({\rho }\) times, and OT extension needs to process a cn-bit input because of the blowup of the input caused by the \({\rho }\)-probe matrix.

Given a 128-bit vector of the form \(a[0],\ldots ,a[15]\) where each a[i] is an 8-bit number, the instruction \(\mathsf {\_mm\_movemask\_epi8}\) returns the concatenation of the highest bits from all a[i]s. This makes it possible to transpose a matrix of dimension \(8\times 16\) very efficiently in 15 instructions (8 instructions to “assemble the matrix” and 7 instructions to shift the vector left by one bit). By composing such an approach, we achieve very efficient matrix transposition, which leads to highly efficient OT extension protocols; see Sect. 5.1 for performance results.

Reducing OT Cost. Although our protocol requires three instantiations of OT, we only need to construct the base OTs once. The OTs in Steps 1 and 2 can be done together, and further, by applying the observation by Asharov et al. [3] that the “extension phase” can be iterated, we can perform more random OTs along with the OTs for Steps 1 and 2 to be used in the OTs of Step 3.

Pipelining. Pipelining garbled circuits was first introduced by Huang et al. [16] to reduce memory usage and hence improve efficiency. We adopt a similar idea for our protocol. While as written we have \(P_{2}\) conduct most of the correctness checks at the end of the protocol, we note that \(P_{2}\) can do most of the checks much earlier. In our implementation, we “synchronize” \(P_{1}\) and \(P_{2}\)’s computation such that \(P_{2}\)’s checking is pipelined with \(P_{1}\)’s computation. Pipelining also enables us to evaluate virtually any sized circuit (as long as the width of the circuit is not too large). As shown in Sect. 5.4, we are able to evaluate a 4.3 billion-gate circuit without any memory issue, something that offline/online protocols [29] cannot do without using lots of memory or disk I/O.

Pushing Computation Offline. Although the focus of our work is better efficiency in the absence of pre-processing, it is still worth noting that several steps of our protocol can be pushed offline (i.e., before the parties’ inputs are known) when that is an option. Specifically:

-

1.

In addition to the base OTs, most of the remaining public-key operations can also be done offline. \(P_{2}\) can send \((h,g_1):=(g^\omega , g^r)\) before knowing the input to \(P_{1}\), who can compute the \(C_j\) values and half the \(D_j\) values. During the online phase, \(P_{1}\) and \(P_{2}\) only need to perform \({\rho }\) exponentiations.

-

2.

Garbled circuits can be computed, sent, and checked offline. \(P_{2}\) can also decommit \({\mathsf {c}} ^T_j\) to learn the output translation tables for the evaluation circuits.

5 Implementation and Evaluation

We implemented our protocol in C++ using RELIC [2] for group operations, OpenSSL libssl for instantiating the hash function, and libgarble for garbling [15]. We adopted most of the recent advances in the field [4, 5, 8, 29, 39] as well as the optimizations introduced in Sect. 4. We instantiate the commitment scheme as \((\text {SHA-1}(x, r), r) \leftarrow {\mathsf {Com}} (x)\), though when x has sufficient entropy we use \(\text {SHA-1}(x)\) alone as the commitment.

Evaluation Setup. All evaluations were performed with a single-threaded program with computational security parameter \({\kappa }=128\) and statistical security parameter \({\rho }=40\). We evaluated our system in three different network settings:

-

1.

localhost. Experiments were run on the same machine using the loopback network interface.

-

2.

LAN. Experiments were run on two c4.2xlarge Amazon EC2 instances with 2.32 Gbps bandwidth as measured by iperf and less than 1 ms latency as measured by ping.

-

3.

WAN. Experiments were run on two c4.2xlarge Amazon EC2 instances with total bandwidth throttled to 200 Mbps and 75 ms latency.

All numbers are average results of 10 runs. We observed very small variance between multiple executions.

5.1 Subprotocol Performance

Because of the various optimizations mentioned in Sect. 4, as well as a carefully engineered implementation, many parts of our system perform better than previously reported implementations. We summarize these results in Table 5.

The garbling speed is about 20 million AND gates per second. When both garbling and sending through localhost, this reduces to 14 million AND gates per second due to the overhead of sending all the data through the loopback interface. Over LAN the speed is roughly 9.03 million gates per second, reaching the theoretical upper bound of \(2.32\cdot 10^9 / 256 = 9.06 \cdot 10^6\) gates per second.

For oblivious transfer, our malicious OT extension reports 5.64 s for 8 million OTs. Our implementation takes 0.133 s for 1024 base OTs. We observe that when two machines are involved, bandwidth is the main bottleneck.

5.2 Overall Performance

We now discuss the overall performance of our protocol. Table 4 presents the running time of our protocol on several standard 2PC benchmark circuits for various network settings. For each network condition, we report a single execution running time, which includes all computation for one 2PC invocation, and an offline/online running time. In order to be comparable with Lindell and Riva [29], the offline time includes disk I/O and the online time does not; the time to preload all garbled circuits before the online stage starts is reported separately in parentheses. We tried to compare with the implementation by Rindal and Rosulek [33]; however, their implementation is inherently parallelized, making comparisons difficult. Estimations suggest that their implementation is faster than Lindell and Riva but still less efficient than ours in the single-execution setting.

In Table 6, we compare the performance of our protocol with the existing state-of-the-art implementations. The most efficient implementation for single execution of malicious 2PC without massive parallelization or GPUs we are aware of is by Afshar et al. [1]. They reported 5860 ms of computation time for AES and 7580 ms for SHA256, with disk and network I/O excluded, whereas we achieve 65 ms and 438 ms, respectively, with all I/O included. Thus our result is \(17\times \) to \(90\times \) better than their result, although ours includes network cost while theirs does not.

The performance of our protocol while modifying the input lengths, output length and the circuit size. Input and output lengths are set to 128 bits initially and circuit size is set as 16,384 AND gates. Numbers in the figure show the slope of the lines, namely the cost to process an additional bit or gate.

We also evaluated the performance of the implementation by Lindell and Riva [29] using the same hardware with one thread and parameters tuned for single execution, i.e., 40 main circuits and 132 circuits for input recovery. Their implementation is about \(3\times \) to \(4\times \) better than Afshar et al., but still \(6\times \) to \(26\times \) slower than our LAN results.

5.3 Scalability

In order to understand the cost of each component of our construction, we investigated the scalability as one modifies the input lengths, output length, and circuit size. We set input and output lengths to 128 bits and circuit size as 16,384 AND gates and increase each the variables separately. In Fig. 7, we show how the performance is related to these parameters.

Not surprisingly, the cost increases linearly for each parameter. We can thus provide a realistic estimate of the running time (in \(\mu \)s) of a given circuit of size |C| with input lengths \(n_1\) and \(n_2\) and output length \(n_3\) through the following formula (which is specific to the LAN setting):

The coefficients for other network settings can be found in Table 7, with the same constant cost of the base OTs.

5.4 Additional Examples

Finally, in Table 8 we report the performance of our implementation in the LAN setting on several additional examples. We also show the projected time calculated based on the formula in the previous section. We observe that over different combinations of input, output and circuit sizes, the projected time matches closely to the real results we get.

We further report the total communication and the percentage of the communication not spent on garbled circuits. We can see the percentage stays low except when the circuit is linear to the input lengths.

References

Afshar, A., Mohassel, P., Pinkas, B., Riva, B.: Non-interactive secure computation based on cut-and-choose. In: Nguyen, P.Q., Oswald, E. (eds.) EUROCRYPT 2014. LNCS, vol. 8441, pp. 387–404. Springer, Heidelberg (2014). doi:10.1007/978-3-642-55220-5_22

Aranha, D.F., Gouvêa, C.P.L.: RELIC is an Efficient Library for Cryptography. https://github.com/relic-toolkit/relic

Asharov, G., Lindell, Y., Schneider, T., Zohner, M.: More ecient oblivious transfer and extensions for faster secure computation. In: 20th ACM Conference on Computer and Communications Security (CCS), pp. 535–548. ACM Press (2013)

Asharov, G., Lindell, Y., Schneider, T., Zohner, M.: More efficient oblivious transfer extensions with security for malicious adversaries. In: Oswald, E., Fischlin, M. (eds.) EUROCRYPT 2015. LNCS, vol. 9056, pp. 673–701. Springer, Heidelberg (2015). doi:10.1007/978-3-662-46800-5_26

Bellare, M., Hoang, V.T., Keelveedhi, S., Rogaway, P.: Efficient garbling from a fixed-key blockcipher. In: 2013 IEEE Symposium on Security and Privacy, pp. 478–492. IEEE (2013)

Bellare, M., Hoang, V.T., Rogaway, P.: Foundations of garbled circuits. In: ACM Conference on Computer and Communications Security (CCS), pp. 784–796. ACM Press (2012)

Brandão, L.T.A.N.: Secure two-party computation with reusable bit-commitments, via a cut-and-choose with forge-and-lose technique. In: Sako, K., Sarkar, P. (eds.) ASIACRYPT 2013. LNCS, vol. 8270, pp. 441–463. Springer, Heidelberg (2013). doi:10.1007/978-3-642-42045-0_23

Chou, T., Orlandi, C.: The simplest protocol for oblivious transfer. In: Lauter, K., Rodríguez-Henríquez, F. (eds.) LATINCRYPT 2015. LNCS, vol. 9230, pp. 40–58. Springer, Cham (2015). doi:10.1007/978-3-319-22174-8_3

Damgård, I., Lauritsen, R., Toft, T.: An empirical study and some improvements of the minimac protocol for secure computation. In: Abdalla, M., Prisco, R. (eds.) SCN 2014. LNCS, vol. 8642, pp. 398–415. Springer, Heidelberg (2014). doi:10.1007/978-3-319-10879-7_23

David, B.M., Nishimaki, R., Ranellucci, S., Tapp, A.: Generalizing efficient multiparty computation. In: Lehmann, A., Wolf, S. (eds.) ICITS 2015. LNCS, vol. 9063, pp. 15–32. Springer, Heidelberg (2015). doi:10.1007/978-3-319-17470-9_2

Frederiksen, T.K., Jakobsen, T.P., Nielsen, J.B.: Faster maliciously secure two-party computation using the GPU. In: Abdalla, M., Prisco, R. (eds.) SCN 2014. LNCS, vol. 8642, pp. 358–379. Springer, Heidelberg (2014). doi:10.1007/978-3-319-10879-7_21

Frederiksen, T.K., Jakobsen, T.P., Nielsen, J.B., Nordholt, P.S., Orlandi, C.: MiniLEGO: efficient secure two-party computation from general assumptions. In: Johansson, T., Nguyen, P.Q. (eds.) EUROCRYPT 2013. LNCS, vol. 7881, pp. 537–556. Springer, Heidelberg (2013). doi:10.1007/978-3-642-38348-9_32

Frederiksen, T.K., Nielsen, J.B.: Fast and maliciously secure two-party computation using the GPU. In: Jacobson, M., Locasto, M., Mohassel, P., Safavi-Naini, R. (eds.) ACNS 2013. LNCS, vol. 7954, pp. 339–356. Springer, Heidelberg (2013). doi:10.1007/978-3-642-38980-1_21

Goyal, V., Mohassel, P., Smith, A.: Efficient two party and multi party computation against covert adversaries. In: Smart, N. (ed.) EUROCRYPT 2008. LNCS, vol. 4965, pp. 289–306. Springer, Heidelberg (2008). doi:10.1007/978-3-540-78967-3_17

Groce, A., Ledger, A., Malozemoff, A.J., Yerukhimovich, A.: CompGC: efficient offline/online semi-honest two-party computation. Cryptology ePrint Archive, Report 2016/458 (2016). http://eprint.iacr.org/2016/458

Huang, Y., Evans, D., Katz, J., Malka, L.: Faster secure two-party computation using garbled circuits. In: 20th USENIX Security Symposium. USENIX Association (2011)

Huang, Y., Katz, J., Evans, D.: Efficient secure two-party computation using symmetric cut-and-choose. In: Canetti, R., Garay, J.A. (eds.) CRYPTO 2013. LNCS, vol. 8043, pp. 18–35. Springer, Heidelberg (2013). doi:10.1007/978-3-642-40084-1_2

Huang, Y., Katz, J., Kolesnikov, V., Kumaresan, R., Malozemoff, A.J.: Amortizing garbled circuits. In: Garay, J.A., Gennaro, R. (eds.) CRYPTO 2014. LNCS, vol. 8617, pp. 458–475. Springer, Heidelberg (2014). doi:10.1007/978-3-662-44381-1_26

Jawurek, M., Kerschbaum, F., Orlandi, C.: Zero-knowledge using garbled circuits: how to prove non-algebraic statements efficiently. In: ACM Conference on Computer and Communications Security (CCS), pp. 955–966. ACM Press (2013)

Keller, M., Orsini, E., Scholl, P.: MASCOT: faster malicious arithmetic secure computation with oblivious transfer, pp. 830–842. ACM Press (2016)

Kolesnikov, V., Mohassel, P., Rosulek, M.: FleXOR: flexible garbling for XOR gates that beats free-XOR. In: Garay, J.A., Gennaro, R. (eds.) CRYPTO 2014. LNCS, vol. 8617, pp. 440–457. Springer, Heidelberg (2014). doi:10.1007/978-3-662-44381-1_25

Kolesnikov, V., Schneider, T.: Improved garbled circuit: free XOR gates and applications. In: Aceto, L., Damgård, I., Goldberg, L.A., Halldórsson, M.M., Ingólfsdóttir, A., Walukiewicz, I. (eds.) ICALP 2008. LNCS, vol. 5126, pp. 486–498. Springer, Heidelberg (2008). doi:10.1007/978-3-540-70583-3_40

Kreuter, B., Shelat, A., Shen, C.H.: Billion-gate secure computation with malicious adversaries. In: USENIX Security Symposium, pp. 285–300. USENIX Association (2012)

Lindell, Y.: Fast cut-and-choose based protocols for malicious and covert adversaries. In: Canetti, R., Garay, J.A. (eds.) CRYPTO 2013. LNCS, vol. 8043, pp. 1–17. Springer, Heidelberg (2013). doi:10.1007/978-3-642-40084-1_1

Lindell, Y., Pinkas, B.: An efficient protocol for secure two-party computation in the presence of malicious adversaries. In: Naor, M. (ed.) EUROCRYPT 2007. LNCS, vol. 4515, pp. 52–78. Springer, Heidelberg (2007). doi:10.1007/978-3-540-72540-4_4

Lindell, Y., Pinkas, B.: Secure two-party computation via cut-and-choose oblivious transfer. In: Ishai, Y. (ed.) TCC 2011. LNCS, vol. 6597, pp. 329–346. Springer, Heidelberg (2011). doi:10.1007/978-3-642-19571-6_20

Lindell, Y., Pinkas, B., Smart, N.P.: Implementing two-party computation efficiently with security against malicious adversaries. In: Ostrovsky, R., Prisco, R., Visconti, I. (eds.) SCN 2008. LNCS, vol. 5229, pp. 2–20. Springer, Heidelberg (2008). doi:10.1007/978-3-540-85855-3_2

Lindell, Y., Riva, B.: Cut-and-choose yao-based secure computation in the online/offline and batch settings. In: Garay, J.A., Gennaro, R. (eds.) CRYPTO 2014. LNCS, vol. 8617, pp. 476–494. Springer, Heidelberg (2014). doi:10.1007/978-3-662-44381-1_27

Lindell, Y., Riva, B.: Blazing fast 2PC in the offline/online setting with security for malicious adversaries. In: 22nd ACM Conference on Computer and Communications Security (CCS), pp. 579–590. ACM Press (2015)

Mischasan: What is SSE good for? Transposing a bit matrix. https://mischasan.wordpress.com/2011/07/24/what-is-sse-good-for-transposing-a-bit-matrix/. Accessed 10 Dec 2015

Nielsen, J.B., Nordholt, P.S., Orlandi, C., Burra, S.S.: A new approach to practical active-secure two-party computation. In: Safavi-Naini, R., Canetti, R. (eds.) CRYPTO 2012. LNCS, vol. 7417, pp. 681–700. Springer, Heidelberg (2012). doi:10.1007/978-3-642-32009-5_40

Pinkas, B., Schneider, T., Smart, N.P., Williams, S.C.: Secure two-party computation is practical. In: Matsui, M. (ed.) ASIACRYPT 2009. LNCS, vol. 5912, pp. 250–267. Springer, Heidelberg (2009). doi:10.1007/978-3-642-10366-7_15

Rindal, P., Rosulek, M.: Faster malicious 2-party secure computation with online/offline dual execution. In: USENIX Security Symposium, pp. 297–314. USENIX Association (2016)

Shelat, A., Shen, C.: Two-output secure computation with malicious adversaries. In: Paterson, K.G. (ed.) EUROCRYPT 2011. LNCS, vol. 6632, pp. 386–405. Springer, Heidelberg (2011). doi:10.1007/978-3-642-20465-4_22

Shelat, A., Shen, C.H.: Fast two-party secure computation with minimal assumptions. In: ACM Conference on Computer and Communications Security (CCS), pp. 523–534. ACM Press (2013)

Wang, X., Malozemoff, A.J., Katz, J.: EMP-toolkit: efficient multiparty computation toolkit. https://github.com/emp-toolkit

Wang, X., Ranellucci, S., Malozemoff, A.J., Katz, J.: Faster secure two-party computation in the single-execution setting. Cryptology ePrint Archive, Report 2016/762 (2016). http://eprint.iacr.org/2016/762

Yao, A.C.C.: Protocols for secure computations. In: 23rd Annual Symposium on Foundations of Computer Science (FOCS), pp. 160–164. IEEE (1982)

Zahur, S., Rosulek, M., Evans, D.: Two halves make a whole—reducing data transfer in garbled circuits using half gates. In: Oswald, E., Fischlin, M. (eds.) EUROCRYPT 2015. LNCS, vol. 9057, pp. 220–250. Springer, Heidelberg (2015). doi:10.1007/978-3-662-46803-6_8

Acknowledgments

The authors thank Samuel Ranellucci and Yan Huang for helpful discussions and comments.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 International Association for Cryptologic Research

About this paper

Cite this paper

Wang, X., Malozemoff, A.J., Katz, J. (2017). Faster Secure Two-Party Computation in the Single-Execution Setting. In: Coron, JS., Nielsen, J. (eds) Advances in Cryptology – EUROCRYPT 2017. EUROCRYPT 2017. Lecture Notes in Computer Science(), vol 10212. Springer, Cham. https://doi.org/10.1007/978-3-319-56617-7_14

Download citation

DOI: https://doi.org/10.1007/978-3-319-56617-7_14

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-56616-0

Online ISBN: 978-3-319-56617-7

eBook Packages: Computer ScienceComputer Science (R0)

. Since

. Since  looks random to

looks random to