Abstract

In this paper we formulate a time-optimal control problem in the space of probability measures endowed with the Wasserstein metric as a natural generalization of the corresponding classical problem in \({\mathbb {R}}^d\) where the controlled dynamics is given by a differential inclusion. The main motivation is to model situations in which we have only a probabilistic knowledge of the initial state. In particular, we first prove a Dynamic Programming Principle and then we give a Hamilton-Jacobi-Bellman equation in the space of probability measures which is solved by a generalization of the minimum time function in a suitable viscosity sense.

Acknowledgements. The first two authors have been supported by INdAM - GNAMPA Project 2015: Set-valued Analysis and Optimal Transportation Theory Methods in Deterministic and Stochastics Models of Financial Markets with Transaction Costs.

1 Introduction

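The controlled dynamics of a classical time-optimal control problem in finite dimension can be presented by means of a differential inclusion as follows:

$$\begin{aligned} {\left\{ \begin{array}{ll} \dot{x}(t)\in F(x(t)),&{}\text { for a.e. }t>0,\\ x(0)=x_0, \end{array}\right. } \end{aligned}\tag{1}$$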

where F is a set-valued map from \({\mathbb {R}}^d\) to \({\mathbb {R}}^d\). The problem in this setting is to minimize the time needed to steer \(x_0\) to a given closed target set \(S\subseteq {\mathbb {R}}^d\), \(S\ne \emptyset \), defining the minimum time function \(T:{\mathbb {R}}^d\rightarrow [0,+\infty ]\) by

$$\begin{aligned} T(x_0):=\inf \left\{ \tau \ge 0:\ \exists \,x(\cdot )\text { solving (1) such that }x(\tau )\in S\right\} . \end{aligned}\tag{2}$$

The main motivation of this work is to model situations in which the knowledge of the starting position \(x_0\) is only probabilistic (for example, when its measurement is affected by noise); this can happen even if the evolution of the system is deterministic.

We thus consider as state space the space of Borel probability measures with finite p-moment endowed with the p-Wasserstein metric \(W_p(\cdot ,\cdot )\), \(({\mathscr {P}}_p({\mathbb {R}}^d),W_p)\). In [2] the reader can find a detailed treatment of the Wasserstein distance.
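As a minimal illustration of the metric \(W_p\) (not part of the original argument), the sketch below computes \(W_p\) between two empirical measures on \({\mathbb {R}}\) with the same number of equally weighted atoms. In dimension one the monotone (sorted) coupling is optimal for \(p\ge 1\), so the distance reduces to a sum over sorted samples. The function name `wasserstein_1d` and the sample data are illustrative assumptions.

```python
def wasserstein_1d(xs, ys, p=2.0):
    """W_p between the empirical measures (1/n) sum delta_{x_i} and (1/n) sum delta_{y_i} on R.

    Assumes len(xs) == len(ys) and p >= 1. In one dimension the sorted
    (monotone) coupling is optimal, so no optimization is required.
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("need two non-empty samples of equal size")
    if p < 1:
        raise ValueError("p must be at least 1")
    xs_sorted, ys_sorted = sorted(xs), sorted(ys)
    n = len(xs_sorted)
    # Average transport cost |x - y|^p along the monotone coupling.
    cost = sum(abs(x - y) ** p for x, y in zip(xs_sorted, ys_sorted)) / n
    return cost ** (1.0 / p)


# Hypothetical data: nu is mu translated by 2, so every atom travels distance 2.
mu = [0.0, 1.0, 3.0]
nu = [x + 2.0 for x in mu]
print(wasserstein_1d(mu, nu, p=2.0))
```

In higher dimension no such closed form is available and one has to solve an optimal transport problem; the sketch is only meant to make the state space concrete.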

Following this idea we choose to describe the initial state by a probability measure \(\mu _0\in {\mathscr {P}}_p({\mathbb {R}}^d)\) and for its evolution in time we take a time-dependent probability measure on \({\mathbb {R}}^d\), \(\varvec{\mu }:=\{\mu _t\}_{t\in [0,T]}\subseteq {\mathscr {P}}_p({\mathbb {R}}^d)\), \(\mu _{|t=0}=\mu _0\). In order to preserve the total mass \(\mu _0({\mathbb {R}}^d)\) during the evolution, the process will be described by a (controlled) continuity equation

$$\begin{aligned} \partial _t\mu _t+\mathrm {div}(v_t\mu _t)=0, \end{aligned}\tag{3}$$

where the time-dependent Borel velocity field \(v_t:{\mathbb {R}}^d\rightarrow {\mathbb {R}}^d\) has to be chosen in the set of \(L^2_{\mu _t}\)-selections of F, so as to respect the classical underlying control problem (1), which is the characteristic system of (3) in the smooth case.

It is well known that if \(v_t(\cdot )\) is sufficiently regular then the solution of the continuity equation is characterized by the push-forward of \(\mu _0\) through the unique solution of the characteristic system.

The so-called Superposition Principle (Theorem 8.2.1 in [2] and Theorem 5.8 in [4]) states that, under much milder assumptions on \(v_t\), the solution \(\mu _t\) of the continuity equation can still be characterized by the push-forward \(e_t\sharp \varvec{\eta }\), where \(e_t:{\mathbb {R}}^d\times \varGamma _T\rightarrow {\mathbb {R}}^d, (x,\gamma )\mapsto \gamma (t)\), \(\varGamma _T:=C^0([0,T];{\mathbb {R}}^d)\) and \(\varvec{\eta }\) is a probability measure in the infinite-dimensional space \({\mathbb {R}}^d\times \varGamma _T\) concentrated on those pairs \((x,\gamma )\in {\mathbb {R}}^d\times \varGamma _T\) such that \(\gamma \) is an integral solution of the underlying characteristic system, i.e. of an ODE of the form \(\dot{\gamma }(t)=v_t(\gamma (t))\), with \(\gamma (0)=x\). We refer the reader to the survey [1] and the references therein for a deep analysis of this approach, which is at the basis of the present work.
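The push-forward description can be made concrete on an empirical measure. The following is a minimal sketch, assuming a smooth bounded velocity field on \({\mathbb {R}}\) and an explicit Euler discretization of the characteristic system; the names `flow_euler` and `push_forward` and the field `v` are hypothetical choices made for the illustration.

```python
import math


def flow_euler(x0, v, t_final, steps=1000):
    """Approximate gamma(t_final) for gamma'(t) = v(t, gamma(t)), gamma(0) = x0 (explicit Euler)."""
    h = t_final / steps
    x, t = x0, 0.0
    for _ in range(steps):
        x += h * v(t, x)
        t += h
    return x


def push_forward(particles, v, t_final, steps=1000):
    """Atoms of mu_t, the image of the empirical measure mu_0 under the approximate flow."""
    return [flow_euler(x, v, t_final, steps) for x in particles]


# Hypothetical bounded, Lipschitz velocity field on R (an assumption for the demo).
def v(t, x):
    return -math.tanh(x)


mu0 = [-2.0, -0.5, 0.0, 1.0, 3.0]
mu1 = push_forward(mu0, v, t_final=1.0)
# Mass is preserved: mu_1 has exactly as many equally weighted atoms as mu_0.
print(len(mu1) == len(mu0), mu1)
```

Each atom is transported along its own characteristic, which is the discrete counterpart of representing \(\mu _t\) as the image of a measure on curves under the evaluation map.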

Pursuing the goal of treating control systems involving measures, we define a generalization of the target set S by duality. We consider an observer that is interested in measuring some quantities \(\phi (\cdot )\in \varPhi \); the results of these measurements are the averages of these quantities w.r.t. the state of the system. The elements of the generalized target set \(\tilde{S}_p^\varPhi \) are the states for which the results of all these measurements are below a fixed threshold.

Once the admissible trajectories in this framework have been defined, the definition of the generalized minimum time function follows the classical one in a straightforward way.

Since the classical minimum time function can be characterized as the unique viscosity solution of a Hamilton-Jacobi-Bellman equation, it is natural to look for a similar formulation in the generalized setting. Several authors have treated a similar problem in the space of probability measures or in a general metric space, giving different definitions of sub-/superdifferentials and viscosity solutions (see e.g. [2, 3, 8, 9, 10]). For example, the theory presented in [10] is quite complete: it also includes results on time-dependent problems and comparison principles granting uniqueness of the viscosity solutions under very reasonable assumptions.

However, when we consider as metric space the space \({\mathscr {P}}_2({\mathbb {R}}^d)\), we notice that the class of equations that can be solved is quite small: the general metric space structure of [10] allows one to rely only on the metric gradient, while \({\mathscr {P}}_2({\mathbb {R}}^d)\) enjoys a much richer structure in the tangent space (which is a subset of \(L^2\)).

As for the definition of sub-/superdifferential given in [8], the main limitation is that the “perturbed” measure is assumed to be of the form \(\left( \mathrm {Id}_{{\mathbb {R}}^d}+\phi \right) \sharp \mu \), in which a (rescaled) transport plan is used. It is well known that, by Brenier’s Theorem, if \(\mu \ll \mathscr {L}^d\) then all the measures near \(\mu \) can be described in this way. However, in general this is not true. Thus, if the set of admissible trajectories contains curves whose points are not all a.c. w.r.t. Lebesgue measure (as in our case), the definition in [8] cannot be used.

In order to fully exploit the richer structure of the tangent space of \({\mathscr {P}}_2({\mathbb {R}}^d)\), and recalling that AC curves in \({\mathscr {P}}_2({\mathbb {R}}^d)\) are characterized as weak solutions of the continuity equation (Theorem 8.3.1 in [2]), we consider a definition different from the one presented in [8], based on the Superposition Principle.

The paper is structured as follows: in Sect. 2 we give the definitions of the generalized objects together with the proof of a Dynamic Programming Principle in this setting. In Sect. 3 we focus on the main result of this work, namely we outline a Hamilton-Jacobi-Bellman equation in \({\mathscr {P}}_2({\mathbb {R}}^d)\) and we solve it in a suitable viscosity sense by the generalized minimum time function, assuming some regularity on the velocity field. Finally, in Sect. 4 we illustrate future research lines on the subject.

2 Generalized Minimum Time Function

Definition 1

(Standing Assumptions). We will say that a set-valued function \(F:{\mathbb {R}}^d \rightrightarrows {\mathbb {R}}^d\) satisfies the assumption \((F_j)\), \(j=0,1,2\) if the following hold true

- \((F_0)\): \(F(x)\) is nonempty, compact and convex for every \(x\in {\mathbb {R}}^d\); moreover \(F(\cdot )\) is continuous with respect to the Hausdorff metric, i.e. given \(x\in {\mathbb {R}}^d\), for every \(\varepsilon >0\) there exists \(\delta >0\) such that \(|y-x|\le \delta \) implies \(F(y)\subseteq F(x)+ B(0,\varepsilon )\) and \(F(x)\subseteq F(y)+ B(0,\varepsilon )\).

- \((F_1)\): \(F(\cdot )\) has linear growth, i.e. there exist nonnegative constants \(L_1\) and \(L_2\) such that \(F(x)\subseteq \overline{B(0,L_1|x|+L_2)}\) for every \(x\in {\mathbb {R}}^d\).

- \((F_2)\): \(F(\cdot )\) is bounded, i.e. there exists \(M>0\) such that \(\Vert y\Vert \le M\) for all \(x\in {\mathbb {R}}^d\), \(y\in F(x)\).

Definition 2

(Generalized target). Let \(p\ge 1\), \(\varPhi \subseteq C^0({\mathbb {R}}^d,{\mathbb {R}})\) such that the following property holds

- \((T_E)\): there exists \(x_0\in {\mathbb {R}}^d\) with \(\phi (x_0)\le 0\) for all \(\phi \in \varPhi \).

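We define the generalized target \(\tilde{S}_p^{\varPhi }\) as follows

$$\begin{aligned} \tilde{S}_p^{\varPhi }:=\left\{ \mu \in {\mathscr {P}}_p({\mathbb {R}}^d):\ \phi \in L^1_{\mu }\text { and }\int _{{\mathbb {R}}^d}\phi (x)\,d\mu (x)\le 0\text { for all }\phi \in \varPhi \right\} . \end{aligned}$$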

For an analysis of the properties of the generalized target see [5] or [6] for deeper results.
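To make the duality-based target concrete, here is a small sketch that tests membership of an empirical measure. It assumes that a state belongs to the generalized target when the average of every observable \(\phi \in \varPhi \) is at most the threshold 0 suggested by \((T_E)\); the function `in_generalized_target` and the observable `phi` are hypothetical names introduced only for this example.

```python
def in_generalized_target(particles, observables, threshold=0.0):
    """True when every observable, averaged over the empirical measure, is <= threshold."""
    n = len(particles)
    if n == 0:
        raise ValueError("need at least one particle")
    return all(sum(phi(x) for x in particles) / n <= threshold for phi in observables)


# Hypothetical observable: phi(x) = |x| - 1, so phi(x) <= 0 exactly when |x| <= 1.
def phi(x):
    return abs(x) - 1.0


# Average of phi is (-1 - 0.5 + 2) / 3 > 0: not in the target.
print(in_generalized_target([0.0, 0.5, 3.0], [phi]))
# Average of phi is (-1 - 0.5 + 1) / 3 < 0: in the target.
print(in_generalized_target([0.0, 0.5, 2.0], [phi]))
```

In the second call one atom sits at 2, outside the set where \(\phi \le 0\), and the measure is still accepted: under this assumption the target constrains averages, not the support of the measure.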

Definition 3

(Admissible curves). Let \(F:{\mathbb {R}}^d\rightrightarrows {\mathbb {R}}^d\) be a set-valued function, \(I=[a,b]\) a compact interval of \({\mathbb {R}}\), \(\alpha ,\beta \in {\mathscr {P}}_p({\mathbb {R}}^d)\). We say that a Borel family of probability measures \(\varvec{\mu }=\{\mu _{t}\}_{t\in I}\subseteq {\mathscr {P}}_p({\mathbb {R}}^d)\) is an admissible trajectory (curve) defined in I for the system \(\varSigma _F\) joining \(\alpha \) and \(\beta \), if there exists a family of Borel vector fields \(v=\{v_t(\cdot )\}_{t\in I}\) such that

1. \(\varvec{\mu }\) is a narrowly continuous solution in the distributional sense of the continuity equation \(\partial _t\mu _t+\mathrm {div}(v_t\mu _t)=0\), with \(\mu _{|t=a}=\alpha \) and \(\mu _{|t=b}=\beta \).

2. \(J_F(\varvec{\mu },v)<+\infty \), where \(J_F(\cdot )\) is defined as

$$\begin{aligned} J_F(\varvec{\mu },v):={\left\{ \begin{array}{ll} \displaystyle \int _I \int _{{\mathbb {R}}^d}\left( 1+I_{F(x)}\left( v_t(x)\right) \right) \,d\mu _t(x)\,dt,&{}\text { if }\Vert v_t\Vert _{L^1_{\mu _t}} \in L^1(I),\\ &{}\\ +\infty ,&{}\text { otherwise}, \end{array}\right. } \end{aligned}\tag{4}$$

where \(I_{F(x)}\) is the indicator function of the set F(x), i.e., \(I_{F(x)}(\xi )=0\) for all \(\xi \in F(x)\) and \(I_{F(x)}(\xi )=+\infty \) for all \(\xi \notin F(x)\).

In this case, we will also shortly say that \(\varvec{\mu }\) is driven by v.

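When \(J_F(\cdot )\) is finite, the indicator term vanishes and each \(\mu _t\) is a probability measure, so \(J_F(\varvec{\mu },v)=b-a\): this value expresses the time needed by the system to steer \(\alpha \) to \(\beta \) along the trajectory \(\varvec{\mu }\) with family of velocity vector fields v.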
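A discrete analogue of the cost may help to see why a finite value is just an elapsed time. The sketch below is an illustration under stated assumptions: the measure is approximated by finitely many equally weighted particles sampled at finitely many times, and the set-valued map is encoded by a hypothetical membership test `in_F(x, xi)`.

```python
import math


def cost_J(times, positions, velocities, in_F):
    """Discrete analogue of J_F for a particle approximation.

    times[k] are sample times in I = [a, b]; positions[k][i] and velocities[k][i]
    are the position and velocity of particle i at time times[k]. in_F(x, xi)
    returns True when xi belongs to F(x). The result is b - a if every sampled
    velocity is admissible, and +inf otherwise.
    """
    for xs, vs in zip(positions, velocities):
        for x, xi in zip(xs, vs):
            if not in_F(x, xi):
                return math.inf
    return times[-1] - times[0]


# Hypothetical choice F(x) = [-1, 1] on R.
def in_F(x, xi):
    return abs(xi) <= 1.0


times = [0.0, 0.5, 1.0]
positions = [[0.0, 2.0], [0.5, 1.5], [1.0, 1.0]]
admissible = [[1.0, -1.0], [1.0, -1.0], [1.0, -1.0]]
too_fast = [[1.0, -1.0], [2.0, -1.0], [1.0, -1.0]]
print(cost_J(times, positions, admissible, in_F))
print(cost_J(times, positions, too_fast, in_F))
```

A single inadmissible velocity makes the cost infinite, mirroring the role of the indicator function in (4).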

Definition 4

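(Generalized minimum time). Given \(p\ge 1\), let \(\varPhi \subseteq C^0({\mathbb {R}}^d;{\mathbb {R}})\) and \(\tilde{S}_p^\varPhi \) be the corresponding generalized target defined in Definition 2. In analogy with the classical case, we define the generalized minimum time function \(\tilde{T}_p^{\varPhi }:{\mathscr {P}}_p({\mathbb {R}}^d)\rightarrow [0,+\infty ]\) by setting

$$\begin{aligned} \tilde{T}_p^{\varPhi }(\mu _0):=\inf \left\{ J_F(\varvec{\mu },v):\ \varvec{\mu }\text { is an admissible curve in }[0,T]\text { driven by }v,\ \mu _{|t=0}=\mu _0,\ \mu _{|t=T}\in \tilde{S}_p^\varPhi \right\} , \end{aligned}$$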

where, by convention, \(\inf \emptyset =+\infty \).

Given \(\mu _0\in {\mathscr {P}}_p({\mathbb {R}}^d)\), an admissible curve \(\varvec{\mu }=\{\mu _t\}_{t\in [0,\tilde{T}_p^{\varPhi }(\mu _0)]}\subseteq {\mathscr {P}}_p({\mathbb {R}}^d)\), driven by a time-dependent Borel vector field \(v=\{v_t\}_{t\in [0,\tilde{T}_p^{\varPhi }(\mu _0)]}\) and satisfying \(\mu _{|t=0}=\mu _0\) and \(\mu _{|t=\tilde{T}_p^{\varPhi }(\mu _0)}\in \tilde{S}_p^\varPhi \) is optimal for \(\mu _0\) if

$$\begin{aligned} \tilde{T}_p^{\varPhi }(\mu _0)=J_F(\varvec{\mu },v). \end{aligned}$$

Some interesting results concerning the generalized minimum time function together with comparisons with the classical definition are proved in the proceedings [5] and in the forthcoming paper [6].

Here we will focus our attention on the problem of finding a Hamilton-Jacobi-Bellman equation for our time-optimal control problem.

First of all we need to state and prove a Dynamic Programming Principle; to this end, we will use the gluing result for solutions of the continuity equation stated in Lemma 4.4 in [7].

Theorem 1

(Dynamic programming principle). Let \(p\ge 1\), \(0\le s\le \tau \), let \(F:{\mathbb {R}}^d\rightrightarrows {\mathbb {R}}^d\) be a set-valued function, let \(\varvec{\mu }=\{\mu _t\}_{t\in [0,\tau ]}\) be an admissible curve for \(\varSigma _F\). Then we have

$$\begin{aligned} \tilde{T}_p^\varPhi (\mu _0)\le s+\tilde{T}_p^\varPhi (\mu _s). \end{aligned}$$

Moreover, if \(\tilde{T}_p^\varPhi (\mu _0)<+\infty \), equality holds for all \(s\in [0,\tilde{T}_p^\varPhi (\mu _0)]\) if and only if \(\varvec{\mu }\) is optimal for \(\mu _0=\mu _{|t=0}\).

Proof

The proof is based on the fact that, by Lemma 4.4 in [7], the juxtaposition of admissible curves is an admissible curve. Thus, for every \(\varepsilon >0\) we consider the curve obtained by following \(\varvec{\mu }\) up to time s, and then following an admissible curve steering \(\mu _s\) to the generalized target in time \(\tilde{T}_p^\varPhi (\mu _s)+\varepsilon \). We obtain an admissible curve steering \(\mu _0\) to the generalized target in time \(s+\tilde{T}_p^\varPhi (\mu _s)+\varepsilon \), and so, by letting \(\varepsilon \rightarrow 0^+\), we have \(\tilde{T}_p^\varPhi (\mu _0)\le s+ \tilde{T}_p^\varPhi (\mu _s)\).

Assume now that \(\tilde{T}_p^\varPhi (\mu _0)<+\infty \) and equality holds for all \(s\in [0,\tilde{T}_p^\varPhi (\mu _0)]\). By taking \(s=\tilde{T}_p^\varPhi (\mu _0)\) we get \(\tilde{T}_p^\varPhi (\mu _{\tilde{T}_p^\varPhi (\mu _0)})=0\), i.e., \(\mu _{\tilde{T}_p^\varPhi (\mu _0)}\in \tilde{S}_p^\varPhi \). In particular, \(\varvec{\mu }\) steers \(\mu _0\) to \(\tilde{S}_p^\varPhi \) in time \(\tilde{T}_p^\varPhi (\mu _0)\), which is the infimum among all admissible trajectories steering \(\mu _0\) to the generalized target. So \(\varvec{\mu }\) is optimal.

Finally, assume that \(\varvec{\mu }\) is optimal for \(\mu _0\) and \(\tilde{T}_p^\varPhi (\mu _0)<+\infty \). Starting from \(\mu _0\), we follow \(\varvec{\mu }\) up to time s. Since \(\varvec{\mu }\) is still an admissible curve steering \(\mu _s\) to the generalized target in time \(\tilde{T}_p^\varPhi (\mu _0)-s\), we must have \(\tilde{T}_p^{\varPhi }(\mu _s)\le \tilde{T}_p^\varPhi (\mu _0)-s\), and so \(\tilde{T}_p^{\varPhi }(\mu _s)+s=\tilde{T}_p^\varPhi (\mu _0)\), since the reverse inequality always holds true. \(\square \)
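The inequality \(\tilde{T}_p^\varPhi (\mu _0)\le s+\tilde{T}_p^\varPhi (\mu _s)\), and the equality along optimal curves, can be observed numerically on a toy model. The sketch below rests on assumptions that are not taken from the paper: \(d=1\), \(F(x)=[-1,1]\), a single observable \(\phi (x)=|x|-r\), empirical measures with equally weighted atoms, and the minimum time taken to be the smallest \(t\ge 0\) with \(\frac{1}{n}\sum _i\max (|x_i|-t,0)\le r\), which is what steering each atom towards the origin at unit speed gives. All function names are hypothetical.

```python
import math


def min_time(particles, r, iters=80):
    """Smallest t >= 0 with mean(max(|x_i| - t, 0)) <= r, found by bisection (toy model)."""
    if r < 0:
        raise ValueError("r must be nonnegative")
    a = [abs(x) for x in particles]

    def g(t):
        return sum(max(ai - t, 0.0) for ai in a) / len(a)

    if g(0.0) <= r:
        return 0.0
    lo, hi = 0.0, max(a)  # g(lo) > r and g(hi) = 0 <= r
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if g(mid) <= r:
            hi = mid
        else:
            lo = mid
    return hi


def step_towards_origin(particles, s):
    """Follow the candidate optimal curve for time s: unit speed towards 0, then rest."""
    return [math.copysign(max(abs(x) - s, 0.0), x) for x in particles]


def step_right(particles, s):
    """Follow an admissible but non-optimal curve for time s: unit speed to the right."""
    return [x + s for x in particles]


mu0, r, s = [-3.0, -1.0, 2.0, 4.0], 0.5, 1.0
T0 = min_time(mu0, r)
print(T0, s + min_time(step_towards_origin(mu0, s), r))  # the two values coincide
print(T0, s + min_time(step_right(mu0, s), r))           # the second value is larger
```

In this toy model the curve moving every atom towards the origin realizes equality for each \(0\le s\le \tilde{T}\), while the rightward drift wastes time, in agreement with the statement of Theorem 1.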

3 Hamilton-Jacobi-Bellman Equation

In this section we will prove that, under some assumptions, the generalized minimum time functional \(\tilde{T}_2^\varPhi \) is a viscosity solution, in a sense that we will make precise, of a suitable Hamilton-Jacobi-Bellman equation on \({\mathscr {P}}_2({\mathbb {R}}^d)\). In this paper we assume the velocity field to be continuous for simplicity. In the forthcoming paper [6] we prove a result of approximation of \(L^2_\mu \)-selections of F with continuous and bounded ones in \(L^2_\mu \)-norm that allows us to treat a more general case.

We recall that, given \(T\in \,]0,+\infty ]\), the evaluation operator \(e_t:{\mathbb {R}}^d\times \varGamma _T\rightarrow {\mathbb {R}}^d\) is defined as \(e_t(x,\gamma )=\gamma (t)\) for all \(0\le t<T\). We set

where \(\mu _0\in {\mathscr {P}}_2({\mathbb {R}}^d)\).

It is not hard to prove the following result.

Lemma 1

(Properties of the evaluation operator). Assume \((F_0)\) and \((F_1)\), and let \(L_1,L_2>0\) be the constants as in \((F_1)\). For any \(\mu _0\in {\mathscr {P}}_2({\mathbb {R}}^d)\), \(T\in \,]0,1]\), \(\varvec{\eta }\in {\mathscr {T}}_F(\mu _0)\), we have:

- (i) \(|e_t(x,\gamma )|\le (|e_0(x,\gamma )|+L_2)\,e^{L_1}\) for all \(t\in [0,T]\) and \(\varvec{\eta }\)-a.e. \((x,\gamma )\in {\mathbb {R}}^d\times \varGamma _T\);

- (ii) \(e_t\in L^2_{\varvec{\eta }}({\mathbb {R}}^d\times \varGamma _T;{\mathbb {R}}^d)\) for all \(t\in [0,T]\);

- (iii) there exists \(C>0\) depending only on \(L_1,L_2\) such that for all \(t\in [0,T]\) we have

$$\begin{aligned} \left\| \dfrac{e_{t}-e_0}{t}\right\| ^2_{L^2_{\varvec{\eta }}}\le C\left( \mathrm {m}_2(\mu _0)+1\right) \!. \end{aligned}$$
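Estimate (i) is a Gronwall-type bound, and it can be checked numerically along discretized characteristics. The sketch below is only a sanity check under assumptions: a scalar field `v` with \(|v(x)|\le L_1|x|+L_2\) chosen for the demo, an explicit Euler scheme on \([0,1]\), and hypothetical constants `L1`, `L2`.

```python
import math


def euler_path(x0, v, T=1.0, steps=2000):
    """Explicit Euler samples of gamma' = v(gamma), gamma(0) = x0 on [0, T]."""
    h = T / steps
    path = [x0]
    for _ in range(steps):
        path.append(path[-1] + h * v(path[-1]))
    return path


L1, L2 = 0.7, 0.3  # hypothetical growth constants


def v(x):
    # Satisfies |v(x)| <= L1 * |x| + L2 because |cos| <= 1 and |sin| <= 1.
    return L1 * x * math.cos(x) + L2 * math.sin(3.0 * x)


for x0 in (-2.0, 0.0, 0.5, 4.0):
    bound = (abs(x0) + L2) * math.exp(L1)
    sup_norm = max(abs(x) for x in euler_path(x0, v))
    print(x0, sup_norm <= bound)
```

The Euler iterates satisfy \(|x_{k+1}|\le (1+hL_1)|x_k|+hL_2\), so the discrete paths obey the same bound as the exact ones whenever \(T\le 1\).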

In the case we are considering, where the trajectory \(t\mapsto e_t\sharp \varvec{\eta }\) is driven by a sufficiently smooth velocity field, we recover as initial velocity what we expected.

Lemma 2

(Regular driving vector fields). Let \(\varvec{\mu }=\{\mu _t\}_{t\in [0,T]}\) be an absolutely continuous solution of

where \(v\in C^0_b({\mathbb {R}}^d;{\mathbb {R}}^d)\) satisfies \(v(x)\in F(x)\) for all \(x\in {\mathbb {R}}^d\). Then if \(\varvec{\eta }\in {\mathscr {T}}_F(\mu _0)\) satisfies \(\mu _t=e_t\sharp \varvec{\eta }\) for all \(t\in [0,T]\), we have that

The proof is based on the boundedness of v and on the fact that, by hypothesis, \(\gamma \in C^1\), \(\dot{\gamma }(t)=v(\gamma (t))\). The conclusion follows by applying Lebesgue’s Dominated Convergence Theorem.
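The mechanism behind the proof, namely that the difference quotient \((e_t-e_0)/t\) approaches \(v\circ e_0\) as \(t\rightarrow 0^+\) when v is bounded and continuous, can be observed on particles. The sketch below is an illustration under assumptions: \(d=1\), the hypothetical field \(v=\cos \) (bounded, 1-Lipschitz), an empirical measure in place of \(\varvec{\eta }\), and Euler-approximated characteristics.

```python
import math


def flow_euler(x0, v, t, steps=100):
    """Approximate gamma(t) for gamma' = v(gamma), gamma(0) = x0 (explicit Euler)."""
    h = t / steps
    x = x0
    for _ in range(steps):
        x += h * v(x)
    return x


def quotient_error(particles, v, t):
    """L^2 norm, w.r.t. the empirical measure, of (gamma(t) - gamma(0)) / t - v(gamma(0))."""
    sq = [((flow_euler(x, v, t) - x) / t - v(x)) ** 2 for x in particles]
    return math.sqrt(sum(sq) / len(sq))


v = math.cos  # hypothetical bounded, 1-Lipschitz velocity field
particles = [-2.0, -0.3, 0.0, 0.7, 1.9]
for t in (0.1, 0.01, 0.001):
    print(t, quotient_error(particles, v, t))
```

For this choice of v each Euler iterate stays within distance t of its starting point, so the printed error is at most t and shrinks with it.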

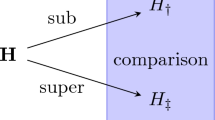

We give now the definitions of viscosity sub-/superdifferential and viscosity solutions that suit our problem. As presented in the Introduction, these concepts are different from the ones treated in [2, 3, 8,9,10], due mainly to the structure of \({\mathscr {P}}_2({\mathbb {R}}^d)\).

Definition 5

(Sub-/Super-differential in \({\mathscr {P}}_2({\mathbb {R}}^d)\) ). Let \(V:{\mathscr {P}}_2({\mathbb {R}}^d)\rightarrow {\mathbb {R}}\) be a function. Fix \(\mu \in {\mathscr {P}}_2({\mathbb {R}}^d)\) and \(\delta >0\). We say that \(p_{\mu }\in L^2_{\mu }({\mathbb {R}}^d;{\mathbb {R}}^d)\) belongs to the \(\delta \)-superdifferential \(D^+_{\delta }V(\mu )\) at \(\mu \) if for all \(T>0\) and \(\varvec{\eta }\in {\mathscr {P}}({\mathbb {R}}^d\times \varGamma _T)\) such that \(t\mapsto e_t\sharp \varvec{\eta }\) is an absolutely continuous curve in \({\mathscr {P}}_2({\mathbb {R}}^d)\) defined in [0, T] with \(e_0\sharp \varvec{\eta }=\mu \) we have

In the same way, \(q_{\mu }\in L^2_{\mu }({\mathbb {R}}^d;{\mathbb {R}}^d)\) belongs to the \(\delta \)-subdifferential \(D^-_{\delta }V(\mu )\) at \(\mu \) if \(-q_{\mu }\in D^+_{\delta }[-V](\mu )\). Moreover, \(D^\pm _{\delta }[V](\mu )\) is the closure in \(L^2_{\mu }\) of \(D^\pm _{\delta }[V](\mu )\cap C^0_b({\mathbb {R}}^d;{\mathbb {R}}^d)\).

Definition 6

(Viscosity solutions). Let \(V:{\mathscr {P}}_2({\mathbb {R}}^d)\rightarrow {\mathbb {R}}\) be a function and \(\mathscr {H}:{\mathscr {P}}_2({\mathbb {R}}^d)\times C^0_b({\mathbb {R}}^d;{\mathbb {R}}^d)\rightarrow {\mathbb {R}}\). We say that V is a

1. viscosity supersolution of \(\mathscr {H}(\mu ,DV(\mu ))=0\) if there exists \(C>0\) depending only on \(\mathscr {H}\) such that \(\mathscr {H}(\mu ,q_{\mu })\ge -C\delta \) for all \(q_{\mu }\in D^{-}_{\delta }V(\mu )\cap C^0_b\), \(\delta >0\) and \(\mu \in {\mathscr {P}}_2({\mathbb {R}}^d)\).

2. viscosity subsolution of \(\mathscr {H}(\mu ,DV(\mu ))=0\) if there exists \(C>0\) depending only on \(\mathscr {H}\) such that \(\mathscr {H}(\mu ,p_{\mu })\le C\delta \) for all \(p_{\mu }\in D^{+}_{\delta }V(\mu )\cap C^0_b\), \(\delta >0\) and \(\mu \in {\mathscr {P}}_2({\mathbb {R}}^d)\).

3. viscosity solution of \(\mathscr {H}(\mu ,DV(\mu ))=0\) if it is both a viscosity subsolution and a viscosity supersolution.

Definition 7

(Hamiltonian Function). Given \(\mu \in {\mathscr {P}}_2({\mathbb {R}}^d)\), we define the map \(\mathscr {H}_F:{\mathscr {P}}_2({\mathbb {R}}^d)\times C^0_b({\mathbb {R}}^d;{\mathbb {R}}^d)\rightarrow {\mathbb {R}}\) by setting

Theorem 2

(Viscosity solution). Assume \((F_0)\) and \((F_2)\). Then \(\tilde{T}_2^\varPhi (\cdot )\) is a viscosity solution of \(\mathscr {H}_F(\mu ,D\tilde{T}_2^\varPhi (\mu ))=0\), with \(\mathscr {H}_F\) defined as in Definition 7.

Proof

The proof is split into two claims.

Claim 1

\(\tilde{T}_2^\varPhi (\cdot )\) is a subsolution of \(\mathscr {H}_F(\mu ,D\tilde{T}_2^\varPhi (\mu ))=0\).

Proof of Claim 1

Given \(\varvec{\eta }\in {\mathscr {T}}_F(\mu _0)\), set \(\mu _t=e_t\sharp \varvec{\eta }\) for all t. By the Dynamic Programming Principle we have \(\tilde{T}_2^\varPhi (\mu _0)\le \tilde{T}_2^\varPhi (\mu _s)+s\) for all \(0< s\le \tilde{T}_2^\varPhi (\mu _0)\). Without loss of generality, we can assume \(0<s<1\). Given any \(p_{\mu _0}\in D^+_{\delta }\tilde{T}_2^\varPhi (\mu _0)\cap C^0_b\), and setting

we have \(A(s,p_{\mu _0},\varvec{\eta })\le B(s,p_{\mu _0},\varvec{\eta })\).

We recall that, since by definition \(p_{\mu _0}\in L^2_{\mu _0}\), we have that \(p_{\mu _0}\circ e_0\in L^2_{\varvec{\eta }}\). Dividing the left-hand side by \(s>0\), we observe that we can use Lemma 2: indeed, the velocity field \(v(\cdot )\) associated to \(\varvec{\eta }\in {\mathscr {T}}_F(\mu _0)\) satisfies all the hypotheses (the boundedness comes from \((F_2)\)), and so we have

Recalling that \(p_{\mu _0}\in D^+_{\delta }\tilde{T}_2^\varPhi (\mu _0)\) and using Lemma 1(iii), we have

where \(C>0\) is a suitable constant (we can take twice the upper bound on F given by \((F_2)\)).

We thus obtain for all \(\varvec{\eta }\in {\mathscr {T}}_F(\mu _0)\) that

By passing to the infimum on \(\varvec{\eta }\in {\mathscr {T}}_F(\mu _0)\) we have

so \(\tilde{T}_2^\varPhi (\cdot )\) is a subsolution, thus confirming Claim 1. \(\diamond \)

Claim 2

\(\tilde{T}_2^\varPhi (\cdot )\) is a supersolution of \(\mathscr {H}_F(\mu ,D\tilde{T}_2^\varPhi (\mu ))=0\).

Proof of Claim 2

Given \(\varvec{\eta }\in {\mathscr {T}}_F(\mu _0)\), let us define the admissible trajectory \(\varvec{\mu }=\{\mu _t\}_{t\in [0,T]}=\{e_t\sharp \varvec{\eta }\}_{t\in [0,T]}\). Given \(q_{\mu _0}\in D^-_{\delta }\tilde{T}_2^\varPhi (\mu _0)\cap C^0_b\), we have

Thus, using Lemma 2 and Lemma 1, we have

for all \(s>0\).

Then, by passing to the infimum on all admissible trajectories, we obtain

Thus

By the Dynamic Programming Principle, recalling that \(\dfrac{\tilde{T}_2^\varPhi (\mu _0)-\tilde{T}_2^\varPhi (\mu _s)}{s}-1\le 0\) with equality holding if and only if \(\varvec{\mu }\) is optimal, we obtain \(\mathscr {H}_F(\mu _0,q_{\mu _0})\ge - C'\delta \), which proves that \(\tilde{T}_2^\varPhi (\cdot )\) is a supersolution, thus confirming Claim 2. \(\square \)

4 Conclusion

In this work we have studied a Hamilton-Jacobi-Bellman equation solved by a generalized minimum time function in a regular case. In the forthcoming paper [6] an existence result is proved for optimal trajectories as well as attainability properties in the space of probability measures. Furthermore, a suitable approximation result allows us to give meaning to a Hamilton-Jacobi-Bellman equation in a more general case.

We plan to study whether it is possible to prove a comparison principle for a Hamilton-Jacobi equation solved by the generalized minimum time function, as well as to give a Pontryagin maximum principle for our problem.

References

[1] Ambrosio, L.: The flow associated to weakly differentiable vector fields: recent results and open problems. In: Bressan, A., Chen, G.Q., Lewicka, M., Wang, D. (eds.) Nonlinear Conservation Laws and Applications. The IMA Volumes in Mathematics and its Applications, vol. 153, pp. 181–193. Springer, Boston (2011). doi:10.1007/978-1-4419-9554-4_7

[2] Ambrosio, L., Gigli, N., Savaré, G.: Gradient Flows in Metric Spaces and in the Space of Probability Measures. Lectures in Mathematics ETH Zürich, 2nd edn. Birkhäuser Verlag, Basel (2008)

[3] Ambrosio, L., Feng, J.: On a class of first order Hamilton-Jacobi equations in metric spaces. J. Differ. Equ. 256(7), 2194–2245 (2014)

[4] Bernard, P.: Young measures, superpositions and transport. Indiana Univ. Math. J. 57(1), 247–276 (2008)

[5] Cavagnari, G., Marigonda, A.: Time-optimal control problem in the space of probability measures. In: Lirkov, I., Margenov, S.D., Waśniewski, J. (eds.) LSSC 2015. LNCS, vol. 9374, pp. 109–116. Springer, Cham (2015). doi:10.1007/978-3-319-26520-9_11

[6] Cavagnari, G., Marigonda, A., Nguyen, K.T., Priuli, F.S.: Generalized control systems in the space of probability measures (submitted)

[7] Dolbeault, J., Nazaret, B., Savaré, G.: A new class of transport distances between measures. Calc. Var. Partial Differ. Equ. 34(2), 193–231 (2009)

[8] Cardaliaguet, P., Quincampoix, M.: Deterministic differential games under probability knowledge of initial condition. Int. Game Theory Rev. 10(1), 1–16 (2008)

[9] Gangbo, W., Nguyen, T., Tudorascu, A.: Hamilton-Jacobi equations in the Wasserstein space. Methods Appl. Anal. 15(2), 155–184 (2008)

[10] Gangbo, W., Święch, A.: Optimal transport and large number of particles. Discret. Contin. Dyn. Syst. 34(4), 1397–1441 (2014)

Copyright information

© 2016 IFIP International Federation for Information Processing

About this paper

Cite this paper

Cavagnari, G., Marigonda, A., Orlandi, G. (2016). Hamilton-Jacobi-Bellman Equation for a Time-Optimal Control Problem in the Space of Probability Measures. In: Bociu, L., Désidéri, JA., Habbal, A. (eds) System Modeling and Optimization. CSMO 2015. IFIP Advances in Information and Communication Technology, vol 494. Springer, Cham. https://doi.org/10.1007/978-3-319-55795-3_18

DOI: https://doi.org/10.1007/978-3-319-55795-3_18

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-55794-6

Online ISBN: 978-3-319-55795-3

eBook Packages: Computer Science (R0)