Abstract

Learning deeper convolutional neural networks has become a tendency in recent years. However, many empirical evidences suggest that performance improvement cannot be attained by simply stacking more layers. In this paper, we consider the issue from an information theoretical perspective, and propose a novel method Relay Backpropagation, which encourages the propagation of effective information through the network in training stage. By virtue of the method, we achieved the first place in ILSVRC 2015 Scene Classification Challenge. Extensive experiments on two large scale challenging datasets demonstrate the effectiveness of our method is not restricted to a specific dataset or network architecture.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Convolutional neural networks (CNNs) are capable of inducing rich features from data, and have been successfully applied in a variety of computer vision tasks. Many breakthroughs obtained in recent years benefit from the advances of convolutional neural networks [2, 12, 13, 24], spurring the research of pursuing a high performing network. The importance of network depth has been revealed in these successes. For example, compared with AlexNet [13], the utilisation of VGGNet [19] brings about substantial gains of accuracy on 1000-class ImageNet 2012 dataset by virtue of deeper architectures.

Increasing the depth of network has become a promising way to enhance performance. On the downside, such a solution is in conjunction with the growth of parameter size and model complexity, thus poses great challenges for optimisation. The training of deeper networks typically encounters the risk of divergence or slower convergence, and is prone to overfitting. Besides, there are many empirical evidences [5, 19, 20] (e.g., the results reported by [19] on ImageNet dataset shown in Table 1 (Left)) to show that the improvement on accuracy cannot be trivially gained by simply adding more layers. It is in accordance with the results in our preliminary experiments on Places2 challenge dataset [29], where deeper networks even suffer from a decline on performance (in Table 1 (Right)).

To understand the phenomenon, we should be concerned with the possibility of vanishing and exploding gradient, which is the crucial reason of hampering the optimisation of very deep networks with backpropagation [14] (BP) algorithm, as gradients might be prone to become either very small or very large after going across many layers. To investigate whether the issue appears, we analyse the scale of the gradients at different convolutional layers. We take the 22-layer CNN model (in Table 1) as an example, and the results are shown in Fig. 1. The average magnitude of gradients and their relative values with respect to weights are displayed, respectively. It can be observed that the gradient magnitude of lower layers does not tend to vanish or explode, but remains roughly stable in the progression. In practice, some techniques, e.g., rectifier neuron [16, 17], refined initialisation scheme [3, 5], and Batch Normalization [9], have shown the capacity of coping with vanishing and exploding gradient.

From an information theoretical perspective [1, 11, 26], the amount of information derived from target outputs diminishes during propagation, although the gradient magnitude does not vanish. Such degradation would be amplified as a network goes deeper. In order to effectively update network parameters, the error information should not go back too many layers. However, the problem is inevitable when optimising a very deep network with standard backpropagation algorithm.

Magnitude of the gradient at each convolutional layer of the 22-layer CNN model (i.e., 19 convolutional layers and 3 fully connected layers) in Table 1. Each colour line plots the gradient magnitude of different layers at a certain number of iterations. (Left) Average magnitude of gradients. (Right) Relative magnitude of gradients, i.e., average magnitude of gradients divided by average magnitude of weights. (Color figure online)

To address the issue, in this paper we propose a novel method, Relay Backpropagation (Relay BP) for training, which encourages effective information to pass through the network. To accomplish the aim, the whole network is first divided into several segments. We introduce one or multiple interim output modules (including loss layer) after intermediate segments, and aim to minimise the ensemble of losses. More importantly, the gradients from different losses are propagated through a limited number of segments, namely, the gradient with respect to certain loss will propagate at most N consecutive layers, where N is smaller than the depth of entire network. An example framework is depicted in Fig. 2 with two auxiliary output modules. In a word, we provide an elegant way to effectively preserve relevant information by shortening the path from outputs to lower layers, and meanwhile restrain the adverse effect of less relevant information caused by propagating across too many layers.

By virtue of Relay BP, we achieved the first place in ILSVRC 2015 Scene Classification Challenge, which provided a new large scale dataset involving 401 classes and more than 8 million training images. The benefits of our method are also verified on ImageNet 2012 classification dataset with another two prevalent network architectures, demonstrating the capacity of the method is not confined to a specific architecture or dataset. We will make our models available to the research community.

(Left) VGGNet-19 network [19] with standard backpropagation algorithm. (Middle & Right) VGGNet-19 extended network with Relay Backpropagation algorithm. This is an example with two auxiliary output modules, adding two branches on traditional VGGNet-19 architecture. The black arrows denote the forward propagation of information through the network, and the colour arrows indicate the information (gradient) flows at backward propagation. This figure is best viewed on the screen. (Color figure online)

2 Related Work

Convolutional neural networks have attracted much attention over the last few years. For image classification tasks with large scale data [18, 27, 30], there is a tendency to boost network capacity by increasing the complexity of network (e.g., depth [19] and width [28]), whereas brings about difficulties on training. A range of techniques are exploited to address the issue from various angles. For example, Simonyan and Zisserman [19] propose to reduce the risk of divergence by initialising a deeper network with the aid of pre-training shallower ones. Refined initialisation schemes are adopted to train very deep networks directly by drawing the weights from properly scaled distributions [3, 5]. Moreover, the benefits of new activation functions [4, 5, 17] for training deep networks have been shown in extensive experiments. Besides, some studies are developed in the direction of finding better optimizers, such as stochastic gradient descent with momentum [21] and RMSProp [25], which are widely used and work well in practice.

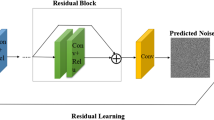

It is particularly worthy of comparing our method with the work in [15, 22], where temporary branches including classifiers are attached to intermediate layers that assist in propagating supervision information to lower layers. However, such multi-loss mechanism neglects information reduction due to long-term propagation, and the adverse effect of less relevant information for lower layers. Different from it, relevant information could be effectively preserved in our method, meanwhile the adverse effect of less relevant information is restrained from propagating to lower layers. In [7, 8, 23], some powerful networks are obtained by employing new structures, i.e., Inception module and Residual block, which are concurrent with our work, and also attend the ILSVRC 2015 Challenge. The two structures implement shortcut connections in different ways, however long-term propagation still exists when training a deeper network. Therefore, our contribution is orthogonal to these work, and network performance can be further improved benefitting from our method.

3 Standard BP and Information Reduction

Considering a feedforward neural network, which is comprised of L parameterised layers with weights W, each layer \(l \in \{1, \cdots , L\}\) is followed by a non-linear transformation on its input variables \(h_{l-1}\) to yield the output \(h_{l}\), i.e., \(h_l = f_l(h_{l-1};W_l)\), which is the input of consecutive layer \(l+1\). Different transformations could be applied in the network. The network receives sample \(x \in \mathcal {X}\) as the starting input, i.e., \(h_0\), and is learned to minimise the loss between the final output \(h_L\) and desired target \(y\in \mathcal {Y}\), \(\ell (y, h_L)\), over data \((\mathcal {X}, \mathcal {Y})\).

When training with standard BP algorithm, optimisation at each iteration is comprised of forward propagation and backward propagation (as shown in Fig. 2 (Left)). The process of forward propagation is to feed x into and forward propagate through the network. Error is then generated, and the gradients with respect to neurons h and weights W are propagated backward,

until the lowest layer (i.e., the first convolutional layer), which is backward propagation. Weights are updated according to the respective gradients on samples (i.e., batches of data). In other words, the information of error received by lower layers has flowed through many intermediate layers, whose number arises along with the growth of network depth.

From an information theoretical point of view, the flow of information through network forms a Markov chain. The gradient \(g^h_{L}\) from topmost layer preciously represents the supervision signal (loss), which is transmitted to \(g^h_{L-1}\) and \(g^h_{L-2}\) in turn. According to Data Processing Inequality [1], \(I(g^h_{L};g^h_{L-1}) \ge I(g^h_{L};g^h_{L-2})\), the amount of information about the signal is unable to increase during processing, without attenuation only when the transformation is invertible. In practice, when information flow goes across a series of layers, the amount of information is prone to reduction due to complicated transformations (e.g., ReLU and pooling), which implies less relevant information derived from loss is received by lower layers, making it difficult to leverage meaningful gradients for weight update. Such effect will amplify when information propagates deeper, ultimately hamper the performance of whole network. In order to effectively update network parameters, information flow should not go back too many layers.

4 Relay BackPropagation

The motivation of our method is to propagate effective information through the network in backward propagation. We accomplish the target by using auxiliary output modules appropriately. Take VGGNet-19 network architecture for example, as shown in Fig. 2 (right). The whole network is first divided into several segments separated with max-pooling layers. For instance, from the first convolutional layer to the first max-pooling layer is considered as a segment, and the next segment starts from the third convolutional layer and ends to the second max-pooling layer. Thus, there are totally five segments, numbered 1 to 5 from lower to higher layers.

We attach one or multiple auxiliary output modules to intermediate segments. Figure 2 is an example with two output modules (i.e., auxiliary loss 1 and loss 2), which are added after segment 3 and 4, respectively. In order to preserve the relevant information about loss, gradient flows in the whole network are blocked, and one derived from each loss is required to go across at most N consecutive layers, where N is the upper limit of the numbers of layers that we deem that can carry enough relevant information. Namely, different losses are responsible for different parts of weight layers in the network. The information flows from different losses are represented with different colours in Fig. 2. Auxiliary loss 1 (coloured with red) would be propagated until the lowest one in segment 1, and auxiliary loss 2 (coloured with green) would be propagated until the lowest one in segment 3, and the primary loss (coloured with blue) would be propagated until the lowest layer in segment 4, respectively. On the other hand, it is equivalent to apply different step sizes for gradient flows in such a framework, and size for the flow derived from primary loss can be regarded as zero at lower segments (e.g., segment 1), while it is not based on gradient magnitude modulated in adaptive optimisation methods (e.g., ADAM and RMSprop).

More importantly, there is overlapping between information flows at intermediate segments, such as segment 4 receives the information from primary loss and auxiliary loss 2. As our optimisation objective is to minimise the sum of the three losses, updating on segment 4 would fuse the information derived from the two losses. Consequently, segment 4 plays a role of transition between the two information flows of primary loss and auxiliary loss 2, not only the transition between lower and higher layers trivially, that is why we call the method as Relay Backpropagation. The back-forward step can be interpreted as update with multiple gradient flows respectively across shorten networks, whereas jointly passing through the entire one. Lower layers seems to be isolated from the topmost loss, however, other information flows with identical target would affect them.

In summary, our method is characterised with two points: (1) Each loss (including the main and auxiliary ones) is responsible for the update of different layers, i.e., a shorten sub-network, rather than the overall ensemble of layers below. Such mechanism is helpful to reduce the degradation of relevant information about loss and restrain the adverse effect of less relevant information due to long-term propagation. It is distinctively different from traditional multi-loss with standard BP algorithm [15, 22], where lower layers would be affected by diffuse flows. (2) Information flows from different losses exist overlapping at intermediate segments, guaranteeing to coordinate information propagation in a very deep network.

In forward propagation step, information transmission follows the manner from input to output layers, where the activations generated at one layer are fed into its adjacent layer in turn. The black arrows in Fig. 2 (middle) indicate the directions of information flows through network. It is consistent with standard BP for the network with auxiliary branches.

When testing an image, a prediction is made without considering auxiliary branches, as auxiliary supervision is introduced only to enhance the training of network. Consequently, there is no extra cost (parameter size and time expense) brought in testing stage, ensuring the test efficiency of model.

One might be concerned with: Where to add auxiliary output module? And which segments (or convolutional layers) should belong to the scope of certain loss? We apply a heuristic scheme based on empirical evidences in this work. Nevertheless, some intuitive rules can be used directly for reference. One insight is that it is inadvisable to add auxiliary output modules at too lower layers, since the patterns captured at these layers lack of sufficient discrimination for recognising a high-level concept (e.g., object or scene). Moreover, network depth is an important factor to be considered. Adding an auxiliary branch might be enough if network is not too deep. In general, the design can be adjusted flexibly according to specific requirements and practical experience.

5 Experiments

In this section, we evaluate Relay BP on Places2 challenge [29] and ImageNet 2012 classification dataset [18], and also investigate it on four different network architectures. We show Relay BP outperforms baselines significantly. The baseline methods are briefly introduced below:

-

Standard BP: Given the network, information forward and backward propagation follow the rule of traditional backpropagation algorithm (e.g., in Fig. 2(Left)).

-

Multi-loss + standard BP: One auxiliary output module (branch) is attached to parts of intermediate layers.

For a fair comparison, the network architecture in training stage (i.e., the architecture with temporary branches) is identical for our method and the baseline of multi-loss with standard BP, while the difference lies in the scheme of information backward propagation. In the experiments, we only add one auxiliary branch for each architecture, as they are not too deep to tackle. Moreover, the increment of branch also brings about training computation cost. Therefore, the principle is adding the branches as few as possible. We intend to train extremely deeper networks by aid of multiple branches in future work.

5.1 Places2 Challenge

We evaluate our method on Places2 challenge dataset [29], which is used in ILSVRC 2015 Scene Classification Challenge. This dataset includes images belonging to 401 scene categories, with 8.1M images for training, 20K images for validation and 381K images for testing. To mimic the real-world frequencies of scene occurrence, there is a non-uniform distribution of images per category for training, ranging from 4,000 to 30,000. The classification performance of the challenge is evaluated with top-5 error, which allows an algorithm to identify multiple scene categories for an image, because a scene is likely to be described with different words.

Network Architectures. Relay BP is independent on the network architectures used. We investigate two types of deep convolutional neural network architectures on the dataset, as shown in Table 2. Model A is based on VGGNet-19 [19], while simply adding 3 convolutional layers on the three smaller feature maps (56, 28, 14). A \(7\times 7\) convolutional layer and a modified inception module is used as building block in model B. We also incorporate spatial pyramid pooling (spp) [6] into the models, where the pyramid configuration is \(7\times 7\), \(3\times 3\), \(2\times 2\) and \(1\times 1\). Dropout regularization is applied to the first two fully-connected (fc) layers, with the dropout ratio 0.5. We use Rectified Linear Unit (ReLU) as nonlinearity and do not use Batch Normalization [9] in the two networks. The experiments involving Batch Normalization will be seen in Sect. 5.2. The auxiliary classifier  is used in multi-loss standard BP and Relay BP, rather than standard BP. The loss weight of the auxiliary classifier is set to 0.3. The “gradient” in Table 2 shows the details of backward propagation in Relay BP.

is used in multi-loss standard BP and Relay BP, rather than standard BP. The loss weight of the auxiliary classifier is set to 0.3. The “gradient” in Table 2 shows the details of backward propagation in Relay BP.

and

and  indicate which layers the gradients propagate to.

indicate which layers the gradients propagate to.Class-Aware Sampling. The Places2 challenge dataset has more than 8M training images in total. The numbers of images in different classes are imbalanced, ranging from 4,000 to 30,000 per class. The large scale data and non-uniform class distribution pose great challenges for model learning.

To address this issue, we apply a sampling strategy, named “class-aware sampling”, during training. We aim to fill a mini-batch as uniform as possible with respect to classes, and prevent the same example and class from always appearing in a permanent order. In practice, we use two types of lists, one is class list, and the other is per-class image list, i.e., 401 per-class image lists in total. When getting a training mini-batch in an iteration, we first sample a class X in the class list, then sample an image in the per-class image list of class X. When reaching the end of the per-class image list of class X, a shuffle operation is performed to reorder the images of class X. When reaching the end of class list, a shuffle operation is performed to reorder the classes. We leverage such a class-aware sampling strategy to effectively tackle the non-uniform class distribution, and the gain of accuracy on the validation set is about 0.6 %.

Training and Testing. Our implementation is based on the publicly available library Caffe [10], where function “BackwardFromTo” is called for each loss. Compared to standard BP, weight gradient blobs are accumulated thus there is no extra memory cost, and data gradient blob can be reused by different flows by simply modifying “split_layer”, which has no extra memory cost. Overall, like multi-loss method, a bit memory cost is needed for auxiliary branches compared to standard BP.

We train models on the provided Places2 challenge training set, do not use any additional training data. The image is resized isotropically so that its shorter side is 256. To augment the training set, a 224 \(\times \) 224 crop is randomly sampled from a training image, with per-pixel mean subtracted. Random horizontal flipping and standard colour shift in [13] are used. We initialise the weights using [5] and train all networks from scratch by applying stochastic gradient descent (SGD) with mini-batch size of 256 and a fixed momentum of 0.9. The learning rate is initialised to 0.01, and is annealed by a factor of 10 when the error plateaus. The training is regularised by weight decay (set to 0.0002). We train all models up to \(80\times 10^4\) iterations. In testing, we take the standard “single crop (centre crop)” protocol in [22]. Furthermore, we use the fully-convolutional testing [19] to report the performance of single model. The image is resized isotropically so that its shorter side is in {224, 256, 320, 384, 448}, and the scores are averaged at multiple scales.

Comparisons of Results. Table 3 lists the results of the three methods with “single crop” testing strategy. Compared with standard BP, the baseline “Multi-loss + standard BP” shows better performance by introducing auxiliary supervision on intermediate layers, however the superiority is marginal, even negligible with regard to model B. In contrast, our method achieves significant improvement over standard BP, as well as consistently outperforms “Multi-loss + standard BP” (approximately \(1.0\,\%\) on model A and \(0.8\,\%\) on model B based on top-5 measure). It is notable that the improvement on model B is less than the one on model A. The shortcut connections in modified Inception modules make it possible to propagate information with shortcuts, somewhat alleviates the information reduction. This is also the reason of ineffectiveness of “Multi-loss + standard BP” on model B. Nevertheless, our method is capable of improving the performance on model B. It confirms our insight that restraining the adverse effect of less relevant information is helpful for training deep neural networks.

For a comprehensive comparison, we also report the model performance with “single model” testing strategy in Table 4. Clear advantage can be observed in our method compared to the baselines. It is worthy of mentioning that the improvement of single model over centre crop is less, about \(1.5\,\%\) top-5 error diminished from \(17.83\,\%\) (single crop) to \(16.33\,\%\), while empirical results on ImageNet 2012 classification dataset suggest the performance gain is approximately \(3.0\,\%\) [5, 9]. To display further details about the result, we list top-5 error rates of example classes on validation set in Fig. 3 (left), which are based on the architecture of model B. Distinguished superiority can be observed compared to standard BP, e.g., the improvements on concept “basement”, “living room” are 12 % and 6 %.

ILSVRC 2015 Scene Classification Challenge. By virtue of Relay BP, our “WM” team won the 1st place in ILSVRC 2015 Scene Classification task. Table 5 shows the results of this challenge. We combine five models of different architectures and input scales, and achieve 15.74 % top-5 error on validation set. Figure 3 (right) displays the per-class results of our method, which are reordered in ascend. We can observe that the model is capable of distinguishing most of concepts, i.e., less than 20 % error rates on more than 75 % classes. Meanwhile, few concepts suffer from poor results, e.g., only 12 % accuracy on “library/outdoor”, which typically lacks of distinctive characters apart from the others with similar appearance. For the final result, our top-5 error is 16.87 % on the testing set, which is roughly 1.1 % worse than the validation result. We conjecture that there might be a distribution gap between validation and testing data, because similar degradation has also been observed by other teams [29]. Such phenomenon also implies the difficulty of task, as scene concepts are typically associated with large intra-class divergence and sample amount plays a crucial role for the distribution of classes. Compared to single model, the improvement of model ensemble over the one with architecture B is 0.6 %. From single crop to single model, and further to model ensemble, the improvement is consistently lower than expected. We conjecture that training with large scale data enhances the capability of single view, leading to the difficulties of further improvement with model ensemble.

Figure 4 shows some exemplars in Places2 challenge validation set, which are successfully classified by our method. The predicted labels are in descending order of confidence. Even though many high-level scene concepts exist large variance on intra-class appearance, our method could still recognise them easily. On the other hand, we also show some exemplars incorrectly classified in Fig. 5. These predictions seem to be reasonable, although they fail in context of evaluation measure. A scene image might be typically described by multi-labels. Moreover, composing a scene is mostly complicated, such as a place is comprised of multiple objects and similar object is likely to appear in different places. Loose connections between scene and object concepts increase the difficulties of scene recognition.

5.2 ImageNet 2012 Classification

We evaluate our method on ImageNet 2012 classification dataset [18], which has become one of the benchmarks to assess the progress of image classification. This dataset includes images belonging to 1000 classes, with 1.2M images for training, 50K images for validation and 100K images for testing. Classification performance is measured by top-1 and top-5 error rates. We use the provided data for training models, do not use any additional data.

Configurations. Recently, residual networks [7] introduce shortcut connections, and has achieved state-of-the-art performance on ImageNet 2012 classification dataset. Moreover, [23] yields comparable classification accuracy by exploiting “Inception-v3” architectures. We use the 50-layer residual network (ResNet-50) [7] and the Inception-v3 architectures [23] to evaluate Relay BP. For both architectures, we do not use scale jitter augmentation [19] during training. Standard SGD is applied to train the networks. Other configurations (including data augmentation, network architectures, and training/testing methodology) remain unchanged as [7, 23]. More details about the configurations can be found in [7, 23]. For Relay BP, we add one auxiliary branch with the loss weight set to 0.3. The gradient overlapping segments of primary and auxiliary loss range from “conv4_1” to “conv4_4” (ResNet-50), and “inception4a” to “inception4d” (Inception-v3), respectively. As the scheme of multi-loss has been included in Inception-v3, we omit the baseline “Multi-loss + standard BP” in Table 6.

Results Analysis and Discussion. Table 6 lists the classification errors achieved in single model. The results in the first row are the ones reported in [7] and [23], respectively. And the second row displays the results by our re-implementation. There is slight difference between the two rows, mainly because of the diversity of details in implementation, which has been described in the section of “Configurations”.

The models trained with Relay BP achieve better classification performance compared to the ones trained with standard BP. The accuracy improvement is \(0.44\,\%\) on top-5 measure, and \(0.91\,\%\) on top-1 measure based on ResNet-50 network. Besides, there are \(0.46\,\%\) and \(0.66\,\%\) improvement on top-5 and top-1 measure based on Inception-v3 architecture. The common characteristic of the two architectures is the utilisation of shortcut connections, although the implementations are different. As we have mentioned in above sections, shortcuts make the gradient of final outputs easily reach lower layers, thus are able to prevent the information reduction due to long-term propagation. This is also the evidence of only adding one auxiliary branch in Relay BP. Nevertheless, the network performance can be enhanced by aid of our method, which further demonstrates the promise of our insight that restraining the adverse effect of less relevant information is effective for improving network performance. Because of the high baselines, the improvement is so difficult, which highlights the effectiveness of our method. Moreover, we also report the results on test dataset (submitted to test server) to verify that the obtained results are not overfitting to the dataset. We only submitted the two results in the last half year, and the result \(4.03\,\%\) outperforms the best single model reported in ILSVRC 2015 ImageNet Classification task [7, 23].

6 Conclusion

In this paper, we proposed the method Relay Backpropagation, which encourages the flows of informative gradient in backward propagation when training deep convolutional neural networks. Relevant information can be effectively preserved, and the adverse effect of less relevant information can be restrained. The experiments with four different network architectures on two challenging large scale datasets demonstrate the effectiveness of our method is not restricted to certain network architecture or specific dataset. As a future direction, we are interested in theoretical and mathematical support for the method.

References

Cover, T.M., Thomas, J.A.: Elements of Information Theory. Wiley-Interscience, Hoboken (2006)

Girshick, R., Donahue, J., Darrell, T., Malik, J.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: CVPR (2014)

Glorot, X., Bengio, Y.: Understanding the difficulty of training deep feedforward neural networks. In: ICAIS (2010)

Goodfellow, I.J., Warde-Farley, D., Mirza, M., Courville, A., Bengio, Y.: Maxout networks. arXiv:1302.4389 (2013)

He, K., Zhang, X., Ren, S., Sun, J.: Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In: ICCV (2015)

He, K., Zhang, X., Ren, S., Sun, J.: Spatial pyramid pooling in deep convolutional networks for visual recognition. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8691, pp. 346–361. Springer, Heidelberg (2014). doi:10.1007/978-3-319-10578-9_23

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: CVPR (2016)

He, K., Zhang, X., Ren, S., Sun, J.: Identity mappings in deep residual networks. arXiv:1603.05027 (2016)

Ioffe, S., Szegedy, C.: Batch normalization: Accelerating deep network training by reducing internal covariate shift. In: ICML (2015)

Jia, Y., Shelhamer, E., Donahue, J., Karayev, S., Long, J., Girshick, R., Guadarrama, S., Darrell, T.: Caffe: convolutional architecture for fast feature embedding. arXiv:1408.5093 (2014)

Kamimura, R.: Information Theoretic Neural Computation. World Scientific, New York (2002)

Karpathy, A., Toderici, G., Shetty, S., Leung, T., Sukthankar, R., Fei-Fei, L.: Large-scale video classification with convolutional neural networks. In: CVPR (2014)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. In: NIPS (2012)

LeCun, Y.A., Bottou, L., Orr, G.B., Müller, K.-R.: Efficient BackProp. In: Montavon, G., Orr, G.B., Müller, K.-R. (eds.) Neural Networks: Tricks of the Trade. LNCS, vol. 7700, pp. 9–48. Springer, Heidelberg (2012). doi:10.1007/978-3-642-35289-8_3

Lee, C.Y., Xie, S., Gallagher, P., Zhang, Z., Tu, Z.: Deeply-supervised nets. In: AISTATS (2015)

Maas, A.L., Hannun, A.Y., Ng, A.Y.: Rectified nonlinearities improve neural network acstic models. In: ICML (2013)

Nair, V., Hinton, G.: Rectified linear units improve restricted Boltzmann machines. In: ICML (2010)

Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M., Berg, A.C., Fei-Fei, L.: Imagenet large scale visual recognition challenge. IJCV 115, 211–252 (2015)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. In: ICLR (2015)

Srivastava, R.K., Greff, K., Schmidhuber, J.: Highway networks. In: ICML Deep Learning Workshop (2015)

Sutskever, I., Martens, J., Dahl, G.E., Hinton, G.E.: On the importance of initialization and momentum in deep learning. In: ICML (2013)

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., Rabinovich, A.: Going deeper with convolutions. In: CVPR (2015)

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., Wojna, Z.: Rethinking the inception architecture for computer vision. arXiv:1512.00567 (2015)

Taigman, Y., Yang, M., Ranzato, M., Wolf, L.: Deepface: closing the gap to human-level performance in face verification. In: CVPR (2014)

Tieleman, T., Hinton, G.: Divide the gradient by a running average of its recent magnitude. COURSERA Neural Netw. Mach. Learn. (2012)

Tishby, N., Zaslavsky, N.: Deep learning and the information bottleneck principle. In: IEEE Information Theory Workshop (2015)

Xiao, J., Ehinger, K., Hays, J., Torralba, A., Oliva, A.: Sun database: exploring a large collection of scene categories. IJCV 119, 3–22 (2014)

Zeiler, M.D., Fergus, R.: Visualizing and understanding convolutional neural networks. In: ECCV (2014)

Zhou, B., Khosla, A., Lapedriza, A., Torralba, A., Oliva, A.: Places2: A large-scale database for scene understanding (2015). http://places2.csail.mit.edu/

Zhou, B., Lapedriza, A., Xiao, J., Torralba, A., Oliva, A.: Learning deep features for scene recognition using places database. In: NIPS (2014)

Acknowledgments

Z. Lin is supported by China 973 Program (grant no. 2015CB352502), NSF China (grant nos. 61272341 and 61231002), and Microsoft Research Asia Collaborative Research Program. Q. Huang is supported by China 973 Program: 2012CB316400 and 2015CB351800, and NSF China: 61332016.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Shen, L., Lin, Z., Huang, Q. (2016). Relay Backpropagation for Effective Learning of Deep Convolutional Neural Networks. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds) Computer Vision – ECCV 2016. ECCV 2016. Lecture Notes in Computer Science(), vol 9911. Springer, Cham. https://doi.org/10.1007/978-3-319-46478-7_29

Download citation

DOI: https://doi.org/10.1007/978-3-319-46478-7_29

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46477-0

Online ISBN: 978-3-319-46478-7

eBook Packages: Computer ScienceComputer Science (R0)