Abstract

While the Matrix Generalized Inverse Gaussian (\(\mathcal {MGIG}\)) distribution arises naturally in some settings as a distribution over symmetric positive semi-definite matrices, certain key properties of the distribution and effective ways of sampling from it have not been carefully studied. In this paper, we show that the \(\mathcal {MGIG}\) is unimodal, and that the mode can be obtained by solving an Algebraic Riccati Equation (ARE) [7]. Based on this property, we propose an importance sampling method for the \(\mathcal {MGIG}\) where the mode of the proposal distribution matches that of the target. The proposed sampling method is more efficient than existing approaches [32, 33], which use proposal distributions whose mode may be far from the \(\mathcal {MGIG}\)’s mode. Further, we illustrate that the posterior distribution in latent factor models, such as probabilistic matrix factorization (PMF) [24], when marginalized over one latent factor, has the \(\mathcal {MGIG}\) distribution. This characterization leads to a novel Collapsed Monte Carlo (CMC) inference algorithm for such latent factor models. We illustrate that CMC has a lower log loss or perplexity than MCMC, and needs fewer samples.


Keywords

- Posterior Distribution

- Markov Chain Monte Carlo

- Importance Sampling

- Proposal Distribution

- Algebraic Riccati Equation

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Matrix Generalized Inverse Gaussian (\(\mathcal {MGIG}\)) distributions [3, 10] are a family of distributions over the space of symmetric positive definite matrices and have recently been applied as priors for covariance matrices [20, 32, 33]. The \(\mathcal {MGIG}\) is a flexible prior since it contains the Wishart and Inverse Wishart distributions as special cases. We anticipate that the usage of \(\mathcal {MGIG}\) as a prior for statistical machine learning models will grow, with potential applications in Bayesian dimensionality reduction and Bayesian matrix completion (Sect. 4).

Some properties of the \(\mathcal {MGIG}\) distribution and its connection with the Wishart distribution have been studied in [10, 26, 27]. However, to the best of our knowledge, it is not yet known whether the distribution is unimodal and, if it is, how to obtain the mode of the \(\mathcal {MGIG}\). Besides, it is difficult to analytically calculate the mean of the distribution and to sample from the \(\mathcal {MGIG}\) distribution. Monte Carlo methods such as importance sampling can in principle be applied to infer the mean of the \(\mathcal {MGIG}\), but one needs to design a suitable proposal distribution [21, 23].

There is only one importance sampling procedure for estimating the mean of the \(\mathcal {MGIG}\) [32, 33]. In this approach, the \(\mathcal {MGIG}\) is viewed as a product of the Wishart and Inverse Wishart distributions and one of them is used as the proposal distribution. However, we illustrate that the mode of the proposal distribution in [32, 33] may be far away from the \(\mathcal {MGIG}\)’s mode. As a result, the proposal density is small in a region where the \(\mathcal {MGIG}\) density is large, yielding an ineffective sampler and a drastically wrong estimate of the mean (Figs. 1 and 2).

In this paper, we first show that the \(\mathcal {MGIG}\) distribution is unimodal and that its mode can be obtained by solving an Algebraic Riccati Equation (ARE) [7]. This characterization leads to an effective importance sampler for the \(\mathcal {MGIG}\) distribution. More specifically, for estimating the expectation \(\mathbb {E}_{X\sim \mathcal {MGIG}}[g(X)]\), we select a proposal distribution over the space of symmetric positive definite matrices, such as the Wishart or Inverse Wishart distribution, whose mode matches the mode of the \(\mathcal {MGIG}\). As a result, unlike the current sampler [32, 33], the shape of the proposal is aligned with that of the \(\mathcal {MGIG}\): the proposal density takes higher values in the high-density regions of the target, yielding a good approximation of \(\mathbb {E}_{X\sim \mathcal {MGIG}}[g(X)]\).

Further, we discuss a new application of the \(\mathcal {MGIG}\) distribution in latent factor models such as probabilistic matrix factorization (PMF) [24] or Bayesian PCA (BPCA) [4]. In these settings, the given matrix \(X \in \mathbb {R}^{N \times M}\) is approximated by a low-rank matrix \(\hat{X} = U V^T\) where \(U \in \mathbb {R}^{N \times D} \) and \(V \in \mathbb {R}^{M \times D}\), with Gaussian priors over the latent matrices U and V. We show that after analytically marginalizing one of the latent matrices in PMF (or BPCA), the posterior over the other matrix has the \(\mathcal {MGIG}\) distribution. This result yields a novel Collapsed Monte Carlo (CMC) inference algorithm for PMF. In particular, we marginalize one of the latent matrices, say V, and propose direct Monte Carlo sampling from the posterior of the other matrix, say U. Through extensive experimental analysis on synthetic, SNP, gene expression, and MovieLens datasets, we show that CMC has lower log loss or perplexity with fewer samples than the Markov Chain Monte Carlo (MCMC) inference approach for PMF [25].

The rest of the paper is organized as follows. In Sect. 2, we cover background materials. In Sect. 3, we show that \(\mathcal {MGIG}\) is unimodal and give a novel importance sampler for \(\mathcal {MGIG}\). We provide the connection of \(\mathcal {MGIG}\) with PMF in Sect. 4, present the results in Sect. 5, and conclude in Sect. 6.

2 Background and Preliminary

In this section, we provide some background on the relevant topics and tools that will be used in our analysis. We start with an introduction to importance sampling and the \(\mathcal {MGIG}\) distribution, followed by a brief overview of the ARE.

Notations: Let \(\mathbb {S}^N_{++}\) and \(\mathbb {S}^N_{+}\) denote the spaces of symmetric (\(N \times N\)) positive definite and positive semi-definite matrices, respectively. Let |.| denote the matrix determinant and \({{\mathrm{Tr}}}(.)\) the matrix trace. A matrix \(\varLambda \in \mathbb {S}^N_{++}\) has a Wishart distribution denoted as \(\mathcal {W}_N(\varLambda | \varPhi , \tau )\) where \(\tau > N-1\) is the degrees of freedom and \(\varPhi \in \mathbb {S}^N_{++}\) is the scale matrix [31]. A matrix \(\varLambda \in \mathbb {S}^N_{++}\) has an Inverse Wishart distribution denoted as \(\mathcal {IW}_N(\varLambda | \varPsi , \alpha )\) where \(\alpha > N-1\) and \(\varPsi \in \mathbb {S}^N_{++}\) is the scale matrix. We denote \(\mathbf{{x}}_{:m}\) as the \(m^{th}\) column of matrix \(X \in \mathbb {R}^{N \times M}\) and \(\mathbf{{x}}_n\) as the \(n^{th}\) row of X.

2.1 Importance Sampling

Consider a distribution \(p(x) = \frac{1}{Z_p} p^*(x)\) where \(Z_p\) is the partition function, which plays the role of a normalizing constant. Importance sampling is a general technique for estimating \(\mathbb {E}_{x\sim p(x)}[g(x)]\) when sampling from p(x) (the target distribution) is difficult but we can evaluate \(p^*(x)\) at any given x [21]. The idea is to draw S samples \(\{x_i \}_{i=1}^S\) from a similar but easier distribution, called the proposal distribution, \(q(x) = \frac{1}{Z_q} q^*(x)\). Define \(w(x_i) = \frac{p^*(x_i)}{q^*(x_i)}\) as the weight of sample i. Then, we calculate the expected value as follows

\[ \mathbb {E}_{x\sim p(x)}[g(x)] \approx \frac{\sum _{i=1}^S w(x_i)\, g(x_i)}{\sum _{j=1}^S w(x_j)}. \]

The efficiency of importance sampling depends on how closely the proposal approximates the target in shape. One way of monitoring the efficiency of importance sampling is the effective sample size \(ESS = \frac{(\sum _{i=1}^S w(x_i))^2}{\sum _{i=1}^S w^2(x_i)}\) [15]. A very small ESS indicates a large discrepancy between the proposal and the target (for example, when the mode of the proposal distribution is far away from the target’s mode), leading to a drastically wrong estimate of \(\mathbb {E}_{x\sim p}[g(x)]\) [21].
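The estimator and the ESS diagnostic can be sketched in a few lines. The following is a minimal illustration on a toy one-dimensional problem, not the sampler used in the paper: the Gaussian target, the two Gaussian proposals, and all function names are assumptions chosen only to show how a shifted proposal mode reduces the ESS.

```python
import math
import random

def self_normalized_is(log_p_star, sample_q, log_q_star, g, num_samples, rng):
    """Return (self-normalized estimate of E_p[g], effective sample size)."""
    xs = [sample_q(rng) for _ in range(num_samples)]
    log_w = [log_p_star(x) - log_q_star(x) for x in xs]
    shift = max(log_w)  # subtract the max so exp() cannot overflow
    w = [math.exp(lw - shift) for lw in log_w]
    total = sum(w)
    estimate = sum(wi * g(x) for wi, x in zip(w, xs)) / total
    ess = total ** 2 / sum(wi ** 2 for wi in w)
    return estimate, ess

# Toy target (assumption): unnormalized N(2, 1).
def log_p_star(x):
    return -0.5 * (x - 2.0) ** 2

# Toy proposals (assumption): N(mu_q, sd_q^2), also unnormalized.
def make_proposal(mu_q, sd_q=2.0):
    def sample(rng):
        return rng.gauss(mu_q, sd_q)
    def log_q_star(x):
        return -0.5 * ((x - mu_q) / sd_q) ** 2
    return sample, log_q_star

rng = random.Random(0)
sample_near, log_q_near = make_proposal(2.0)  # proposal mode equals target mode
sample_far, log_q_far = make_proposal(8.0)    # proposal mode far from target mode

mean_near, ess_near = self_normalized_is(
    log_p_star, sample_near, log_q_near, lambda x: x, 5000, rng)
mean_far, ess_far = self_normalized_is(
    log_p_star, sample_far, log_q_far, lambda x: x, 5000, rng)
print(f"mode-matched: mean={mean_near:.3f}, ESS={ess_near:.0f}")
print(f"shifted mode: mean={mean_far:.3f}, ESS={ess_far:.0f}")
```

Because the weights enter only through ratios, rescaling them by a constant (here, the maximum weight) changes neither the estimate nor the ESS.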

2.2 \({\mathcal {MGIG}}\) Distribution

The \(\mathcal {MGIG}\) distribution was first introduced in [3] as a distribution over the space of symmetric (\(N \times N\)) positive definite matrices, defined as follows.

Definition 21

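A matrix-variate random variable \(\varLambda \in \mathbb {S}^N_{++}\) is \(\mathcal {MGIG}\) distributed [3, 10] and is denoted as \(\varLambda \sim \mathcal {MGIG}_N(\varPsi , \varPhi , \nu )\) if the density of \(\varLambda \) is

\[ p(\varLambda ) = \frac{|\varLambda |^{\nu - \frac{N+1}{2}}}{\left| \frac{\varPsi }{2}\right| ^{\nu } B_\nu \left( \frac{\varPhi }{2} \frac{\varPsi }{2}\right) } \exp \left\{ {\mathrm{Tr}}\left( -\frac{1}{2} \varPsi \varLambda ^{-1} - \frac{1}{2} \varPhi \varLambda \right) \right\} , \]

where \(B_\nu (.)\) is the matrix Bessel function [13] defined as

\[ B_\nu \left( \frac{\varPhi }{2} \frac{\varPsi }{2}\right) = \left| \frac{\varPsi }{2}\right| ^{-\nu } \int _{\mathbb {S}^N_{++}} |S|^{\nu - \frac{N+1}{2}} \exp \left\{ {\mathrm{Tr}}\left( -\frac{1}{2} \varPsi S^{-1} - \frac{1}{2} \varPhi S\right) \right\} dS. \]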

When \(N=1\), the \(\mathcal {MGIG}\) is the generalized inverse Gaussian distribution \(\mathcal {GIG}\) [14] which is often used as the prior in several domains [6, 12]. If \(\varPsi =0\), the \(\mathcal {MGIG}\) distribution reduces to the Wishart, and if \(\varPhi =0\), it becomes the Inverse Wishart distribution.

Proposition 1

[32, Proposition 2] If matrix \(\varLambda \sim \mathcal {MGIG}_N(\varPsi , \varPhi , \nu )\), then \(\varLambda ^{-1} \sim \mathcal {MGIG}_N(\varPhi , \varPsi , -\nu )\).
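For \(N=1\), Proposition 1 can be checked directly with a change of variables. The sketch below assumes the scalar kernel \(\lambda ^{\nu -1} \exp \{-\frac{1}{2}(\psi /\lambda + \varphi \lambda )\}\) (normalizing constants are ignored), and the function name `log_gig_kernel` is hypothetical. If \(\mu = 1/\lambda \), the density of \(\mu \) is the density of \(\lambda \) evaluated at \(1/\mu \) times the Jacobian \(\mu ^{-2}\), and this should coincide with the kernel with \(\psi \) and \(\varphi \) swapped and \(\nu \) negated.

```python
import math

def log_gig_kernel(lam, psi, phi, nu):
    """Unnormalized log-density of the N = 1 case (assumed kernel form)."""
    return (nu - 1.0) * math.log(lam) - 0.5 * (psi / lam + phi * lam)

psi, phi, nu = 35.0, 10.0, 10.0  # illustrative parameter values
gaps = []
for mu in (0.1, 0.3, 1.0, 2.5, 7.0):
    # density of mu = 1 / lam: kernel at 1 / mu times the Jacobian mu^(-2)
    transformed = log_gig_kernel(1.0 / mu, psi, phi, nu) - 2.0 * math.log(mu)
    swapped = log_gig_kernel(mu, phi, psi, -nu)
    gaps.append(abs(transformed - swapped))
print(max(gaps))
```

The two expressions agree up to floating-point rounding for every test point, which is the scalar version of the statement in Proposition 1.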

Sampling Mean of \(\varvec{\mathcal {MGIG}}\) : The sufficient statistics of \(\mathcal {MGIG}\) are \(\log |\varLambda |\), \(\varLambda \), and \(\varLambda ^{-1}\). It is, however, difficult to analytically calculate the expectations \(\mathbb {E}_{\varLambda \sim \mathcal {MGIG}}[\varLambda ]\) and \(\mathbb {E}_{\varLambda \sim \mathcal {MGIG}}[\varLambda ^{-1}]\). Importance sampling can be applied to approximate those quantities. Note that, by Proposition 1, an importance sampling procedure for estimating the mean of the \(\mathcal {MGIG}\), i.e., \(\mathbb {E}_{\varLambda \sim \mathcal {MGIG}}[\varLambda ]\), can also be applied to infer the reciprocal mean, i.e., \(\mathbb {E}_{\varLambda \sim \mathcal {MGIG}}[\varLambda ^{-1}]\).

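An importance sampling procedure is proposed in [32, 33], where the \(\mathcal {MGIG}\) is viewed as a product of Inverse Wishart and Wishart distributions and one of the multiplicands is used as the natural choice of the proposal distribution. In particular, in [32, 33], the \(\mathcal {MGIG}\) is viewed as

\[ \mathcal {MGIG}_N(\varLambda | \varPsi , \varPhi , \nu ) \propto \underbrace{e^{{\mathrm{Tr}}(-\frac{1}{2} \varPhi \varLambda )}}_{T_1} \, \underbrace{\mathcal {IW}_N(\varLambda | \varPsi , -2\nu )}_{T_2} \propto \underbrace{e^{{\mathrm{Tr}}(-\frac{1}{2} \varPsi \varLambda ^{-1})}}_{T_3} \, \underbrace{\mathcal {W}_N(\varLambda | \varPhi ^{-1}, 2\nu )}_{T_4}. \]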

In [32, 33], the authors advocate using \(T_2\) (or \(T_4\)) as the proposal distribution, which simplifies the weight calculation to the evaluation of \(T_1\) (or \(T_3\)). However, it is not studied how close \(T_2\) (or \(T_4\)) is to the \(\mathcal {MGIG}\) distribution in shape. For example, consider the 1-dimensional distribution \(\mathcal {MGIG}_1(\varLambda \, | \,35, 10, 10)\).

In [32, 33], \(T_4: \mathcal {W}_1 (\varLambda \, | \,10, 20)\) is considered as the proposal distribution, but the mode of \(T_4\) is far away from the mode of \(\mathcal {MGIG}_1(\varLambda \, | \,35, 10, 10)\) (Fig. 2(a)). As a result, samples drawn from \(T_4\) fall in the tail of the target \(\mathcal {MGIG}_1\) distribution and receive low weights. Such a sampling procedure is wasteful, since most samples come from the tails of the target \(\mathcal {MGIG}_1\) distribution, leading to a very low ESS. Similar behavior is observed with several different choices of parameters for the \(\mathcal {MGIG}\); due to lack of space, we only show three of them in Fig. 2.

Fig. 2. (a, b) Comparison of different proposal distributions, (a) Wishart (\(\mathcal {W}\)) and (b) Inverse Wishart (\(\mathcal {IW}\)), for sampling the mean of \(\mathcal {MGIG}_{1}(\varPsi , \varPhi , \nu )\), where \(\varLambda ^{*}\) is the mode of the \(\mathcal {MGIG}\). The blue curves are the proposal distributions defined in [32, 33], which cannot recover the mode of the \(\mathcal {MGIG}\) distribution. (c) The density of \(\mathcal {MGIG}_2(\varPsi , \varPhi , \nu )\) evaluated at 1000 samples generated by each proposal distribution. More than \(90\,\%\) of the samples generated by the previous proposal distribution in [32, 33] (\(\mathcal {IW}(\psi , -2\nu )\)) have zero \(\mathcal {MGIG}\) density, leading to \(ESS=40\), whereas the new proposal distribution \(\mathcal {IW}(23\varLambda ^*, 20)\), which has a very similar shape to the target \(\mathcal {MGIG}\), achieves \(ESS=550\). (Color figure online)

2.3 Algebraic Riccati Equation

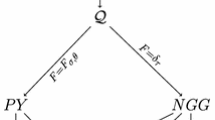

An algebraic Riccati equation (ARE) is

\[ A^T X + X A + X R X + Q = 0, \tag{3} \]

where \(A \in \mathbb {R}^{N \times N}, Q \in \mathbb {S}_{+}^N\), and \(R \in \mathbb {S}_{+}^N\). We associate a \(2N \times 2N\) matrix called the Hamiltonian matrix H with the ARE (3) as \(H = \begin{bmatrix} A&R \\ -Q&-A^T \end{bmatrix}\). The ARE (3) has a unique positive definite solution if and only if the associated Hamiltonian matrix H has no imaginary eigenvalues (Section 5.6.3 of [7]).

Various numerical methods have been proposed to solve the ARE; see [1] for a review. The key idea of numerical techniques for solving ARE (3) is to convert the problem into a stable invariant subspace problem for the Hamiltonian matrix, i.e., finding the invariant subspace corresponding to the eigenvalues of H with negative real parts. The usual ARE solvers, such as the Schur vector method [16], SR methods [9], and the matrix sign function [2, 11], require in general \(O(n^3)\) flops [19]. For special cases, faster algorithms such as [19] can be applied, which solve such an ARE with 20k dimensions in seconds. In this paper, we use the Matlab ARE solver (care) to find the solution of the ARE.

3 \(\mathcal {MGIG}\) Properties

Some properties of the \(\mathcal {MGIG}\) and its connection with the Wishart distribution have been studied in [10, 26, 27]. However, to the best of our knowledge, it is not yet known whether the distribution is unimodal and how to obtain the mode of the \(\mathcal {MGIG}\). In the following lemma, we show that the \(\mathcal {MGIG}\) distribution is unimodal.

Lemma 1

Consider the \(\mathcal {MGIG}\) distribution \(\mathcal {MGIG}_N(\varLambda | \varPsi , \varPhi , \nu )\). The mode of \(\mathcal {MGIG}\) is the solution of the following Algebraic Riccati Equation (ARE)

\[ -2 \alpha \varLambda + \varLambda \varPhi \varLambda - \varPsi = 0, \tag{4} \]

where \(\alpha = (\nu - \frac{N+1}{2})\). ARE in (4) has a unique positive definite solution, thus the \(\mathcal {MGIG}\) distribution is a unimodal distribution.

Proof

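The \(\log \)-density of \(\mathcal {MGIG}_N(\varLambda | \varPsi , \varPhi , \nu )\) is

\[ \log p(\varLambda ) = \alpha \log |\varLambda | - \frac{1}{2} {\mathrm{Tr}}(\varPsi \varLambda ^{-1}) - \frac{1}{2} {\mathrm{Tr}}(\varPhi \varLambda ) + C, \tag{5} \]

where \(\alpha = (\nu - \frac{N+1}{2})\), and C is a constant which does not depend on \(\varLambda \). The mode of \(\mathcal {MGIG}_N\) is obtained by setting the derivative of (5) to zero as follows

\[ \alpha \varLambda ^{-1} + \frac{1}{2} \varLambda ^{-1} \varPsi \varLambda ^{-1} - \frac{1}{2} \varPhi = 0 \quad \Rightarrow \quad -2 \alpha \varLambda + \varLambda \varPhi \varLambda - \varPsi = 0, \tag{6} \]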

which is a special case of ARE (3). The associated Hamiltonian matrix for (6) is \({H} = \begin{bmatrix} - \alpha \mathbb {I}_N&\varPhi \\ \varPsi&\alpha \mathbb {I}_N \end{bmatrix}\). Recall that the ARE has a unique positive definite solution if and only if the associated Hamiltonian matrix H has no imaginary eigenvalues (Section 5.6.3 of [7]). Thus, to show the unimodality of \(\mathcal {MGIG}\), it is enough to show that the characteristic equation \(| {H} - \lambda \mathbb {I}_{2N} | = 0\) has no imaginary solution. Since the diagonal blocks of \(H - \lambda \mathbb {I}_{2N}\) are scalar multiples of the identity, the determinant factorizes as

\[ | H - \lambda \mathbb {I}_{2N} | = \left| (\lambda ^2 - \alpha ^2) \mathbb {I}_N - \varPhi \varPsi \right| = 0, \tag{7} \]

which yields \(\lambda ^2 = \tilde{\lambda }_i + \alpha ^2\) where \(\tilde{\lambda }_i\) is the \(i^{th}\) eigenvalue of \(\varPhi \varPsi \). Note that \(\tilde{\lambda }_i > 0\) since \(\varPhi \) and \(\varPsi \) are positive definite and the product of two positive definite matrices has positive eigenvalues. Hence \(\lambda ^2 > 0\), so every solution of (7) is real and nonzero, and H does not have any imaginary eigenvalue. As a result, the ARE in (6) has a unique positive definite solution. This completes the proof. \(\square \)
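The eigenvalue relation used in the proof can be checked numerically. The following sketch builds the Hamiltonian matrix H from the proof for randomly generated positive definite \(\varPsi \) and \(\varPhi \) (the random construction, the dimension, and the value of \(\nu \) are arbitrary assumptions) and compares the squared eigenvalues of H with \(\tilde{\lambda }_i + \alpha ^2\).

```python
import numpy as np

rng = np.random.default_rng(0)
N, nu = 4, 3.0
alpha = nu - (N + 1) / 2.0

def random_spd(n, rng):
    """Random symmetric positive definite matrix (illustrative helper)."""
    B = rng.standard_normal((n, n))
    return B @ B.T + n * np.eye(n)

Psi, Phi = random_spd(N, rng), random_spd(N, rng)

# Hamiltonian matrix from the proof of Lemma 1
H = np.block([[-alpha * np.eye(N), Phi],
              [Psi, alpha * np.eye(N)]])

eig_H = np.linalg.eigvals(H)
eig_prod = np.linalg.eigvals(Phi @ Psi).real  # positive in theory

# Each value tilde_lambda_i + alpha^2 should appear twice (as +/- its square root).
lhs = np.sort(eig_H.real ** 2)
rhs = np.sort(np.repeat(eig_prod + alpha ** 2, 2))
print(np.max(np.abs(lhs - rhs)), np.max(np.abs(eig_H.imag)))
```

All eigenvalues of H come out real and bounded away from zero, in agreement with the argument that H has no imaginary eigenvalues.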

Importance Sampling for \(\varvec{\mathcal {MGIG}}\) : Since \(\mathcal {MGIG}\) is a unimodal distribution, we propose an efficient importance sampling procedure for \(\mathcal {MGIG}\) by mode matching. We select a proposal distribution over the space of positive definite matrices by matching the proposal’s mode to the mode of \(\mathcal {MGIG}\), which aligns the shapes of the proposal and the \(\mathcal {MGIG}\). As a result, the proposal q(x) is large in a region where the target distribution \(\mathcal {MGIG}\) is large, leading to a good approximation of \(\mathbb {E}_{\varLambda \sim \mathcal {MGIG}}[g(\varLambda )]\). Examples of such proposal distributions are the Inverse Wishart and Wishart distributions.
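Mode matching requires the mode itself. The paper obtains it from an ARE solver; the sketch below is an alternative route for illustration only, and it rests on two assumptions: that the mode equation has the form \(-2\alpha \varLambda + \varLambda \varPhi \varLambda - \varPsi = 0\) (the form implied by the Hamiltonian matrix in the proof of Lemma 1), and that \(\varPhi \) is positive definite. Substituting \(Y = \varPhi ^{1/2} \varLambda \varPhi ^{1/2}\) turns that equation into \((Y - \alpha \mathbb {I})^2 = \alpha ^2 \mathbb {I} + \varPhi ^{1/2} \varPsi \varPhi ^{1/2}\), which has a closed-form positive definite root. The helper names `sym_sqrt` and `mgig_mode` are hypothetical.

```python
import numpy as np

def sym_sqrt(M):
    """Symmetric square root of a symmetric positive definite matrix."""
    vals, vecs = np.linalg.eigh(M)
    return (vecs * np.sqrt(vals)) @ vecs.T

def mgig_mode(Psi, Phi, nu):
    """Positive definite root of -2*alpha*L + L @ Phi @ L - Psi = 0 (assumed form)."""
    N = Psi.shape[0]
    alpha = nu - (N + 1) / 2.0
    Phi_half = sym_sqrt(Phi)
    Phi_half_inv = np.linalg.inv(Phi_half)
    inner = alpha ** 2 * np.eye(N) + Phi_half @ Psi @ Phi_half
    Y = alpha * np.eye(N) + sym_sqrt(inner)  # Y = Phi^{1/2} L Phi^{1/2}
    mode = Phi_half_inv @ Y @ Phi_half_inv
    return 0.5 * (mode + mode.T), alpha      # symmetrize against round-off

rng = np.random.default_rng(0)
B1, B2 = rng.standard_normal((3, 3)), rng.standard_normal((3, 3))
Psi = B1 @ B1.T + 3 * np.eye(3)
Phi = B2 @ B2.T + 3 * np.eye(3)

mode, alpha = mgig_mode(Psi, Phi, nu=4.0)
residual = -2 * alpha * mode + mode @ Phi @ mode - Psi
# Gradient of the log-kernel alpha*log|L| - Tr(Psi L^-1)/2 - Tr(Phi L)/2 at the mode
mode_inv = np.linalg.inv(mode)
gradient = alpha * mode_inv + 0.5 * mode_inv @ Psi @ mode_inv - 0.5 * Phi
print(np.max(np.abs(residual)), np.linalg.eigvalsh(mode).min())
```

A general-purpose ARE solver remains the safer choice when \(\varPhi \) is ill-conditioned, since the closed form inverts \(\varPhi ^{1/2}\) explicitly.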

Let \(\varLambda ^*\) be the mode of \(\mathcal {MGIG}_N(\varLambda | \varPsi , \varPhi , \nu )\), which can be found by solving the ARE (6). The mode of the \(\mathcal {W}_N(\varLambda | \Sigma , \rho )\) distribution is \(\Sigma ^* = (\rho -N -1)\Sigma \). To match the mode of \(\mathcal {W}_N(\varLambda | \Sigma , \rho )\) with that of \(\mathcal {MGIG}_N(\varLambda | \varPsi , \varPhi , \nu )\), we choose the scale parameter \(\Sigma \) of the Wishart distribution by setting \(\Sigma ^* = \varLambda ^*\). In particular,

\[ \Sigma = \frac{\varLambda ^*}{\rho - N - 1}. \]

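Thus, we suggest using \(\mathcal {W}_N(\frac{\varLambda ^*}{\rho -N-1}, \rho )\) as the proposal distribution. At each iteration, we draw a sample \(\varLambda _i \sim \mathcal {W}_N(\frac{\varLambda ^*}{\rho -N-1}, \rho )\), and calculate \(w(\varLambda _i)\). More specifically, the density of the Wishart distribution is

\[ \mathcal {W}_N(\varLambda | \Sigma , \rho ) = \frac{|\varLambda |^{\frac{\rho - N - 1}{2}} \exp \left\{ -\frac{1}{2} {\mathrm{Tr}}(\Sigma ^{-1} \varLambda ) \right\} }{2^{\frac{\rho N}{2}} |\Sigma |^{\frac{\rho }{2}} \Gamma _N(\frac{\rho }{2})}. \]

Then, the importance weight can be calculated as

\[ w(\varLambda _i) = \frac{p^*(\varLambda _i)}{q^*(\varLambda _i)} = |\varLambda _i|^{\nu - \frac{\rho }{2}} \exp \left\{ -\frac{1}{2} {\mathrm{Tr}}(\varPsi \varLambda _i^{-1}) - \frac{1}{2} {\mathrm{Tr}}\left( (\varPhi - \Sigma ^{-1}) \varLambda _i \right) \right\} . \]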

As a result, we approximate \(\mathbb {E}_{\varLambda \sim \mathcal {MGIG}}[g(\varLambda )] \approx \frac{\sum _{i=1}^S w(\varLambda _i) g(\varLambda _i)}{\sum _{j=1}^S w(\varLambda _j)}.\) A similar argument holds when the proposal distribution is an Inverse Wishart distribution.
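The mode-matching idea is easy to try in the scalar case \(N=1\), where the Wishart \(\mathcal {W}_1(\sigma , \rho )\) is a Gamma distribution with shape \(\rho /2\) and scale \(2\sigma \). The sketch below is an illustration under stated assumptions, not the experiment reported in the paper: the target kernel is taken to be \(\lambda ^{\nu -1}\exp \{-\frac{1}{2}(\psi /\lambda + \varphi \lambda )\}\), the value \(\rho = 20\) is arbitrary, and the comparison proposal simply reuses the Wishart-shaped factor of the target, which may not coincide with the exact parameterization in [32, 33].

```python
import math
import random

PSI, PHI, NU = 35.0, 10.0, 10.0  # illustrative MGIG_1 parameters
ALPHA = NU - 1.0                 # alpha = nu - (N + 1) / 2 with N = 1

def log_target(lam):
    """Unnormalized log-density of the scalar target (assumed kernel form)."""
    return ALPHA * math.log(lam) - 0.5 * (PSI / lam + PHI * lam)

# Scalar mode: positive root of PHI * lam^2 - 2 * ALPHA * lam - PSI = 0
mode = (ALPHA + math.sqrt(ALPHA ** 2 + PHI * PSI)) / PHI

def importance_sample(shape, scale, num_samples, rng):
    """Self-normalized IS with a Gamma(shape, scale) proposal; returns (mean, ESS)."""
    draws = [rng.gammavariate(shape, scale) for _ in range(num_samples)]
    log_w = [log_target(x) - ((shape - 1.0) * math.log(x) - x / scale)
             for x in draws]
    top = max(log_w)
    w = [math.exp(v - top) for v in log_w]
    total = sum(w)
    mean = sum(wi * x for wi, x in zip(w, draws)) / total
    ess = total ** 2 / sum(wi * wi for wi in w)
    return mean, ess

rng = random.Random(1)
RHO = 20.0                   # arbitrary degrees of freedom (assumption)
sigma = mode / (RHO - 2.0)   # Sigma = mode / (rho - N - 1) with N = 1
mean_matched, ess_matched = importance_sample(RHO / 2.0, 2.0 * sigma, 5000, rng)
# Comparison proposal: the Gamma-shaped factor lam^(nu-1) * exp(-PHI * lam / 2)
mean_factor, ess_factor = importance_sample(NU, 2.0 / PHI, 5000, rng)

# Reference mean from a fine grid (the weights use the same unnormalized target)
grid = [0.001 * k for k in range(1, 20001)]
dens = [math.exp(log_target(x)) for x in grid]
mean_grid = sum(x * d for x, d in zip(grid, dens)) / sum(dens)
print(f"grid={mean_grid:.3f} matched={mean_matched:.3f} ESS={ess_matched:.0f}")
print(f"factor proposal: mean={mean_factor:.3f} ESS={ess_factor:.0f}")
```

In this toy setting the mode-matched proposal retains a much larger share of the 5000 draws as effective samples than the factor-based proposal.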

Note that the weight calculation requires computing the inverse and determinant of the sampled matrix \(\varLambda _i\). However, as illustrated in Algorithm 1, the random sample generator for \(\mathcal {W}\) [28] returns the upper triangular matrix R where \(\varLambda = R^TR\). Hence the inverse and determinant of \(\varLambda \) can be calculated efficiently from the inverse and the diagonal of the triangular matrix R, respectively. Therefore, the cost of the weight calculation is reduced to the cost of solving a triangular linear system and a triangular matrix product at each iteration.
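The triangular-factor shortcut can be sketched as follows. The generator below uses the standard Bartlett decomposition as a stand-in, which is an assumption: the generator of [28] and Algorithm 1 may differ in detail. The function names are hypothetical, and `np.linalg.inv` is used on R only for brevity where a dedicated triangular solver would normally be preferred.

```python
import numpy as np

def sample_wishart_factor(Sigma, rho, rng):
    """Upper-triangular R with R.T @ R ~ Wishart_N(Sigma, rho) (Bartlett decomposition)."""
    N = Sigma.shape[0]
    L = np.linalg.cholesky(Sigma)  # Sigma = L @ L.T, L lower triangular
    A = np.zeros((N, N))
    for i in range(N):
        A[i, i] = np.sqrt(rng.chisquare(rho - i))  # chi-square with rho - i dof
        A[i, :i] = rng.standard_normal(i)          # strictly lower part ~ N(0, 1)
    return (L @ A).T               # transpose of a lower-triangular product

def logdet_and_inverse_from_factor(R):
    """log|Lambda| and Lambda^{-1} for Lambda = R.T @ R, using only R."""
    logdet = 2.0 * np.sum(np.log(np.abs(np.diag(R))))
    R_inv = np.linalg.inv(R)       # a triangular solve would be cheaper
    return logdet, R_inv @ R_inv.T

rng = np.random.default_rng(0)
Sigma = np.array([[2.0, 0.5, 0.0],
                  [0.5, 1.0, 0.3],
                  [0.0, 0.3, 1.5]])
R = sample_wishart_factor(Sigma, rho=7.0, rng=rng)
Lam = R.T @ R
logdet, Lam_inv = logdet_and_inverse_from_factor(R)
print(logdet, np.linalg.slogdet(Lam)[1])
```

The determinant comes from the diagonal of R alone, and the inverse from a single triangular inversion, so no factorization of \(\varLambda \) itself is needed per sample.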

Figure 2 illustrates that the proposed importance sampling outperforms the one in [32, 33] for three examples of \(\mathcal {MGIG}\). In particular, more than \(90\,\%\) of the samples drawn from the proposal distribution \(T_2\) in [32, 33] have zero weights, leading to \(ESS=40\) (Fig. 2 (c)), whereas our proposal distribution achieves \(ESS=550\), leading to a better approximation of the mean of \(\mathcal {MGIG}\). Similar behavior is observed with several different choices of parameters for the \(\mathcal {MGIG}\).

4 Connection of \({\mathcal {MGIG}}\) and Bayesian PCA

In this section, we illustrate that the mapping matrix V in Bayesian PCA can be marginalized or ‘collapsed’, yielding a Matrix Generalized Inverse Gaussian (\(\mathcal {MGIG}\)) [3, 10] posterior distribution over the latent matrix U, which we refer to as the marginalized posterior distribution. Then, we explain the derivation of the marginalized posterior for data with missing values, followed by a collapsed Monte Carlo inference algorithm for PMF.

4.1 PMF, PPCA, and Bayesian PCA

First, we give a review of PMF [24], Probabilistic PCA (PPCA) [29], and Bayesian PCA (BPCA) [4], to illustrate the similarities and differences between the existing ideas and our approach. A related discussion appears in [18]. All these models focus on a (partially) observed data matrix \(X \in \mathbb {R}^{N \times M}\). Given latent factors \(U \in \mathbb {R}^{N \times D}\) and \(V \in \mathbb {R}^{M \times D}\), the columns of X are assumed to be generated according to \(\mathbf {x}_{:m} = U \mathbf {v}_m^T + \epsilon \), where \(\epsilon \in \mathbb {R}^N\). The different models vary depending on how they handle distributions or estimates of the latent factors U, V. Without loss of generality, in this paper, we consider a fat matrix X where \(M > N\).

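PMF and BPMF: In PMF [24], one assumes independent Gaussian priors for all latent vectors \(\mathbf {u}_n\) and \(\mathbf {v}_m\), i.e., \(\mathbf {u}_n \sim \mathcal{N}(0, \sigma ^2_u \mathbb {I}), [n]_1^N\) and \(\mathbf {v}_{m} \sim \mathcal{N}(0, \sigma ^2_v \mathbb {I}), [m]_1^M\). Then, one obtains the following posterior over (U, V)

\[ p(U, V | X, \delta ) \propto \prod _{n=1}^N \prod _{m=1}^M \left[ \mathcal {N}(x_{nm} | \langle \mathbf {u}_n, \mathbf {v}_m \rangle , \sigma ^2) \right] ^{\delta _{nm}} \prod _{n=1}^N \mathcal {N}(\mathbf {u}_n | 0, \sigma _u^2 \mathbb {I}) \prod _{m=1}^M \mathcal {N}(\mathbf {v}_m | 0, \sigma _v^2 \mathbb {I}), \]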

where \(\delta _{nm}= 0\) if \(x_{nm}\) is missing and \(\delta =\{\sigma ^2, \sigma _u^2, \sigma _v^2\}\). PMF obtains point estimates \((\hat{U},\hat{V})\) by maximizing the posterior (MAP), based on alternating optimization over U and V [24].

Bayesian PMF (BPMF) [25] considers independent Gaussian priors over latent factors with full covariance matrices, i.e., \(\mathbf {u}_n \sim \mathcal{N}(0, \Sigma _u), [n]_1^N\) and \(\mathbf {v}_{m} \sim \mathcal{N}(0, \Sigma _v), [m]_1^M\). Inference is done using Gibbs sampling to approximate the posterior P(U, V | X). At each iteration t, \(U^{(t)}\) is sampled from the conditional probability of \(p(U | V^{(t-1)}, X)\), followed by sampling V from \(p(V | U^{(t)}, X)\).

Probabilistic PCA: In PPCA [29], one assumes an independent Gaussian prior over \(\mathbf {u}_n\), i.e., \(\mathbf {u}_n \sim \mathcal{N}(0,\sigma _u^2 \mathbb {I})\), but V is treated as a parameter to be estimated. In particular, V is chosen so as to maximize the marginalized likelihood of X

\[ p(X | V) = \prod _{n=1}^N \mathcal {N}\left( \mathbf {x}_n \, | \,0, ~ \sigma _u^2 V V^T + \sigma ^2 \mathbb {I} \right) . \tag{10} \]

Interestingly, as shown in [29], the estimate \(\hat{V}\) can be obtained in closed form. For such a fixed \(\hat{V}\), the posterior distribution over \(U|X,\hat{V}\) can be obtained as

where \(\Gamma = \hat{V}^T\hat{V} + \sigma _u^{-2} \sigma ^{-2} \mathbb {I}\). Note that the posterior of the latent factor U in (11) depends on both X and \(\hat{V}\). For applications of PPCA in visualization, embedding, and data compression, any point \(x_n\) in the data space can be summarized by its posterior mean \(E[\mathbf {u}_n | \mathbf {x}_n, \hat{V}]\) and covariance \(Cov(\mathbf {u}_n | \hat{V})\) in the latent space.

Bayesian PCA: In Bayesian PCA [4], one assumes independent Gaussian priors for all \(\mathbf {u}_n\) and \(\mathbf {v}_m\), i.e., \(\mathbf {u}_n \sim \mathcal{N}(0, \sigma ^2_u \mathbb {I})\), \([n]_1^N\) and \(\mathbf {v}_{m} \sim \mathcal{N}(0, \sigma ^2_v \mathbb {I})\), \([m]_1^M\). Bayesian posterior inference by Bayes’ rule considers \(p(U,V|X) = p(X|U,V) p(U) p(V)/p(X)\), which includes the intractable partition function

\[ p(X) = \int _U \int _V p(X | U, V) \, p(U) \, p(V) \, dV \, dU. \]

The literature has considered approximate inference methods, such as variational inference [5], gradient descent optimization [18], MCMC [25], or Laplace approximation [4, 22].

While PPCA and Bayesian PCA were originally considered in the context of embedding and dimensionality reduction, PMF and BPMF have been widely used in the context of matrix completion, where the observed matrix X has many missing entries. Nevertheless, as seen from the above exposition, the structures of the models are closely related (also see [17, 18]).

4.2 Closed Form Posterior Distribution in Bayesian PCA

The key challenge in models such as Bayesian PCA or BPMF is that joint marginalization over both latent factors U, V is intractable. PPCA gets around the problem by considering one of the variables, say V, to be a constant. In this section, we show that one can marginalize or ‘collapse’ one of the latent factors, say V, and obtain the marginalized posterior P(U|X) over the other variable. In fact, we obtain the posterior with respect to the covariance structure \(\varLambda _u = \beta _u \mathbb {I}+ UU^T\), for a suitable constant \(\beta _u\), which is sufficient to do Bayesian inference on new test points \(x_{\text {test}}\). Note that

and, based on the posterior over U, one can obtain the probability on a new point as \(p(x_{\text {test}}|X) = \int _U p(x_{\text {test}}|U) p(U|X) dU.\)

Next, we show that the posterior over U as in (13), rather the distribution over \(\varLambda _u = \beta _u \mathbb {I}+ UU^T\), can be derived analytically in closed form. The distribution is the Matrix Generalized Inverse Gaussian (\(\mathcal {MGIG}\)) distribution.

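Similar to (10), marginalizing V gives

\[ p(X | U) = \prod _{m=1}^M \mathcal {N}\left( \mathbf {x}_{:m} \, | \,0, ~ \sigma _v^2 \varLambda _u \right) , \tag{14} \]

where \(\varLambda _u = \beta _v \mathbb {I} + UU^T \) and \(\beta _v = \frac{\sigma ^2}{\sigma ^2_v}\). Then, the marginalized posterior of U is

\[ p(U | X) \propto p(X | U) \, p(U) \propto |\varLambda _u|^{-\frac{M}{2}} \exp \left\{ -\frac{1}{2} {\mathrm{Tr}}(\varPsi _u \varLambda _u^{-1}) - \frac{1}{2} {\mathrm{Tr}}(\varPhi _u \varLambda _u) \right\} \tag{15} \]

\[ \sim \mathcal {MGIG}_N(\varLambda _u | \varPsi _u, \varPhi _u, \nu _u), \tag{16} \]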

where \(\varPsi _u = \frac{1}{\sigma _v^2} {X} {X}^T\), \(\varPhi _u = \frac{1}{\sigma _u^2} \mathbb {I}\), and \(\nu _u = \frac{N-M+1}{2}\).

Therefore, by marginalizing or collapsing V, the posterior over \(\varLambda _u = \beta _v \mathbb {I} + UU^T \) corresponding to the latent matrix U can be characterized exactly with a \(\mathcal {MGIG}\) distribution with parameters depending only on X. Note that this is in sharp contrast with (11) for PPCA, where the posterior covariance of \(\mathbf {u}_n\) is \(\sigma ^{-2} \Gamma \) which in turn depends on the point estimate for \(\hat{V}\).
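The correspondence between the collapsed model and the \(\mathcal {MGIG}\) parameters can be sanity-checked numerically. The sketch below assumes the collapsed likelihood \(p(X|U) = \prod _m \mathcal {N}(\mathbf {x}_{:m} | 0, \sigma _v^2 \varLambda _u)\), the prior \(\mathbf {u}_n \sim \mathcal {N}(0, \sigma _u^2 \mathbb {I})\), and the kernel \(|\varLambda |^{\nu - \frac{N+1}{2}} \exp \{-\frac{1}{2}{\mathrm{Tr}}(\varPsi \varLambda ^{-1}) - \frac{1}{2}{\mathrm{Tr}}(\varPhi \varLambda )\}\); the problem sizes and function names are arbitrary. If the parameters \(\varPsi _u\), \(\varPhi _u\), \(\nu _u\) given above are right, then \(\log p(X|U) + \log p(U)\) and the log-kernel evaluated at \(\varLambda _u\) should differ only by a constant that does not depend on U.

```python
import numpy as np

def lambda_u(U, s2, s2v):
    """Covariance structure beta_v * I + U U^T with beta_v = s2 / s2v."""
    return (s2 / s2v) * np.eye(U.shape[0]) + U @ U.T

def log_joint(U, X, s2, s2u, s2v):
    """log p(X | U) + log p(U) under the collapsed model (assumed form)."""
    N, M = X.shape
    C = s2v * lambda_u(U, s2, s2v)
    _, logdet = np.linalg.slogdet(C)
    quad = np.trace(np.linalg.solve(C, X @ X.T))
    log_lik = -0.5 * (M * N * np.log(2 * np.pi) + M * logdet + quad)
    log_prior = -0.5 * (U.size * np.log(2 * np.pi * s2u) + np.sum(U ** 2) / s2u)
    return log_lik + log_prior

def log_mgig_kernel(U, X, s2, s2u, s2v):
    """MGIG log-kernel at Lambda_u with the parameters stated in the text."""
    N, M = X.shape
    Lam = lambda_u(U, s2, s2v)
    Psi_u = X @ X.T / s2v
    Phi_u = np.eye(N) / s2u
    nu_u = (N - M + 1) / 2.0
    _, logdet = np.linalg.slogdet(Lam)
    return ((nu_u - (N + 1) / 2.0) * logdet
            - 0.5 * np.trace(np.linalg.solve(Lam, Psi_u))
            - 0.5 * np.trace(Phi_u @ Lam))

rng = np.random.default_rng(0)
N, M, D = 4, 9, 2
s2, s2u, s2v = 0.01, 0.05, 0.05
X = rng.standard_normal((N, M))
gaps = []
for _ in range(3):
    U = rng.standard_normal((N, D))
    gaps.append(log_joint(U, X, s2, s2u, s2v) - log_mgig_kernel(U, X, s2, s2u, s2v))
print(gaps)
```

The printed gaps coincide across the three random choices of U, so the two expressions share the same dependence on U through \(\varLambda _u\).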

4.3 Posterior Distribution with Missing Data

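In this section, we consider the matrix completion setting, where the observed matrix X has missing values. In the presence of missing values, the likelihood of the observed sub-vector in any column of X is given as

\[ p\left( \mathbf {x}_{n_m, m} \, | \,U, \mathbf {v}_m \right) = \mathcal {N}\left( \mathbf {x}_{n_m, m} \, | \,\tilde{U}_m \mathbf {v}_m^T, ~ \sigma ^2 \mathbb {I} \right) , \]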

where \(n_m\) is a vector of size \(\tilde{N}_m\) containing the indices of the non-missing entries in column m of X, and \(\tilde{U}_m\) is a sub-matrix of U of size \(\tilde{N}_m \times D\) where each row corresponds to a non-missing entry in the \(m^{th}\) column of X. The marginalized likelihood corresponding to (14) is \(p\left( X \, | \,U \right) = \prod _{m=1}^M ~ \mathcal {N}\left( \mathbf {x}_{n_m, m} \, | \,0, ~ \sigma _v^2 \varLambda _{um} \right) ,\) where \(\varLambda _{um}= \beta _v \mathbb {I}+\tilde{U}_m\tilde{U}_m^T\) and \(\beta _v = \frac{\sigma ^2}{\sigma _v^2}\). The marginalized posterior is given by

\[ p(U | X) \propto p(U) \prod _{m=1}^M \mathcal {N}\left( \mathbf {x}_{n_m, m} \, | \,0, ~ \sigma _v^2 \varLambda _{um} \right) . \]

Thus, in the presence of missing values, the posterior cannot be factorized as in (15) because each column \(\mathbf {x}_{:m}\) contributes to a different block \(\varLambda _{um}\) of \(\varLambda \).

We propose to address the missing value issue by gap-filling. In particular, if one can obtain a good estimate of the covariance structure in X, so that \(\varPsi _u = \frac{1}{\sigma _v^2} XX^T\) in (16) can be approximated well, one can use the \(\mathcal {MGIG}\) posterior to do approximate inference. We consider two simple approaches to approximate the covariance structure of X: (i) by zero-padding the missing value matrix X (assuming \(E[X]=0\) or centering the data in practice), and estimating the covariance structure based on the zero-padded matrix [30], and (ii) by using a suitable matrix completion method, such as PMF, to get point estimates of the missing entries in X, and estimating the covariance structure based on the completed matrix. We experiment with both approaches in Sect. 5, and the zero-padded version seems to work quite well.

4.4 Collapsed Monte Carlo Inference for PMF

Given that \({\varLambda }_u \sim \mathcal {MGIG}_N\), we predict the missing values as follows. Let \(\mathbf {x} = [\mathbf {x}^{o}, \mathbf {x}^{*}] \sim \mathcal {N} (0, {\varLambda })\), where \(\mathbf {x}^o \in \mathbb {R}^p\) is the observed partition of \(\mathbf {x} \in \mathbb {R}^N\) and \(\mathbf {x}^* \in \mathbb {R}^{N-p}\) is missing. Accordingly, partition \({\varLambda }\) as \(\varLambda = \begin{bmatrix} \varLambda _{oo}&\varLambda _{o*} \\ \varLambda _{*o}&\varLambda _{**} \end{bmatrix}\). Then the conditional probability of \(\mathbf {x}^{*}\) given \(\mathbf {x}^{o}\) and \(\varLambda \) is

\[ p(\mathbf {x}^{*} | \mathbf {x}^{o}, \varLambda ) = \mathcal {N}\left( \mathbf {x}^{*} \, | \,\mathbf {y} \mathbf {x}^{o}, ~ \varLambda _{**} - \mathbf {y} \varLambda _{o*} \right) , \]

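where \(\mathbf {y} = \varLambda _{*o} \varLambda _{oo}^{-1}\) is the solution of the linear system \(\varLambda _{oo} \mathbf {y}^T = \varLambda _{*o}^T\) and can be calculated efficiently. Since sampling from \(\mathcal {MGIG}\) is difficult, we propose to use importance sampling to infer the missing values as

\[ p(\mathbf {x}^{*} | \mathbf {x}^{o}) = \int p(\mathbf {x}^{*} | \mathbf {x}^{o}, \varLambda ) \, p(\varLambda ) \, d\varLambda = \mathbb {E}_{\varLambda \sim q}\left[ p(\mathbf {x}^{*} | \mathbf {x}^{o}, \varLambda ) \frac{p(\varLambda )}{q(\varLambda )} \right] , \]

where q is the proposal distribution as discussed above, and sampling \(\varLambda ^{(t)}\) from q yields the estimate

\[ p(\mathbf {x}^{*} | \mathbf {x}^{o}) \approx \frac{\sum _{t=1}^T w^t \, p(\mathbf {x}^{*} | \mathbf {x}^{o}, \varLambda ^{(t)})}{\sum _{t=1}^T w^t}. \]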

Algorithm 2 illustrates the summary of the collapsed Monte Carlo (CMC) inference for predicting the missing values. A practical approximation to avoid the calculations in Lines 7–8 of Algorithm 2 at each iteration is to simply estimate the mean of the posterior \(\bar{\varLambda } = \frac{\sum _{t=1}^T \varLambda ^{(t)} w^t}{\sum _{t=1}^T w^t} \) with samples drawn from the proposal distribution (line 6), then do the inference based on \(\bar{\varLambda }\).
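The two ingredients of the mean sampler can be sketched as follows: a weighted average of sampled covariance matrices, and Gaussian conditioning of the missing block on the observed block. This is a minimal illustration with hypothetical function names and a hand-picked \(2 \times 2\) example; it is not a transcription of Algorithm 2, and the weights here are placeholders for the importance weights \(w^t\).

```python
import numpy as np

def weighted_mean_matrix(samples, weights):
    """Self-normalized weighted average of sampled matrices."""
    w = np.asarray(weights, dtype=float)
    return np.tensordot(w, np.asarray(samples), axes=1) / w.sum()

def conditional_gaussian(Lam, obs_idx, mis_idx, x_obs):
    """Mean and covariance of x_missing given x_observed for x ~ N(0, Lam)."""
    L_oo = Lam[np.ix_(obs_idx, obs_idx)]
    L_mo = Lam[np.ix_(mis_idx, obs_idx)]
    L_mm = Lam[np.ix_(mis_idx, mis_idx)]
    # Y = L_mo @ inv(L_oo), obtained from a linear solve instead of an inverse
    Y = np.linalg.solve(L_oo, L_mo.T).T
    return Y @ x_obs, L_mm - Y @ L_mo.T

# Placeholder samples and weights standing in for draws from the proposal
samples = [np.array([[1.0, 0.5], [0.5, 1.0]]),
           np.array([[3.0, 1.5], [1.5, 3.0]])]
Lam_bar = weighted_mean_matrix(samples, [1.0, 1.0])

cond_mean, cond_cov = conditional_gaussian(
    Lam_bar, obs_idx=[0], mis_idx=[1], x_obs=np.array([2.0]))
print(Lam_bar, cond_mean, cond_cov)
```

With equal weights the averaged matrix is `[[2, 1], [1, 2]]`, so the conditional mean of the missing entry given an observed value of 2 is 1 and its conditional variance is 1.5.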

5 Experimental Results

We compared the performance of MCMC and CMC on both log loss and running time. We evaluated the models on 4 datasets: (1) SNP: single nucleotide polymorphism (SNP) data is important for identifying gene-disease associations, where the data usually has 5 to 20 % of genotypes missing [8]. We used the phased SNP dataset for chromosome 13 of the CEU population (footnote 1). We randomly dropped 20 % of the entries. (2) Gene Expression: DNA microarrays provide measurements of thousands of genes under a certain experimental condition, where suspicious values are usually regarded as missing values. Here we used the gene expression dataset for Breast Cancer (BRCA) (footnote 2). We randomly dropped 20 % of the entries. (3) MovieLens: we used the MovieLens (footnote 3) dataset with 1M ratings represented as a fat matrix \(X \in \mathbb {R}^{N \times M}\) where \(M=3900\) movies and \(N=6040\) users. (4) Synthetic: first the latent matrices U and V are generated by randomly choosing each \(\{\mathbf {u}_n\}_{n=1}^N\) and \(\{\mathbf {v}_m\}_{m=1}^M\) from \(\mathcal {N}(0, \sigma _u^2 \mathbb {I})\) and \(\mathcal {N} (0, \sigma _v^2 \mathbb {I})\), respectively. Then, matrix X is built by sampling each \(x_{nm}\) from \(\mathcal {N} ( \langle \mathbf {u}_n, \mathbf {v}_m \rangle , \sigma ^2)\). The parameters are set to \(N=100\), \(M=6000\), \(\sigma _u^2=\sigma _v^2=0.05\), and \(\sigma ^2 = 0.01\). We dropped random entries using Bernoulli distributions with \(\delta = 0.1, 0.2\).

Fig. 3. Log loss (LL) of CMC and MCMC for different log loss percentiles on different datasets, presented in log scale (\(\delta \) denotes the missing proportion). CMC consistently achieves lower LL compared to MCMC. LL of MCMC increases exponentially (linearly in log scale) as data points with higher log loss are added. The proposal in [32, 33] achieved infinite LL for MovieLens. An empty bar represents infinite LL (e.g., the 90 % and 100 % percentiles in (d)).

5.1 Methodology

We compared CMC with MCMC inference for PMF. Gibbs sampling with a diagonal covariance prior over the latent matrices is used for MCMC. For the model evaluation, the average log loss (LL) is reported over 5-fold cross-validation. LL measures how well a probabilistic model q predicts the test samples and is defined as \(LL = {-\frac{1}{T} \sum _{i=1}^N \sum _{j=1}^M \delta _{ij} \log q(x_{ij})}\) where \(q(x_{ij})\) is the inferred probability and T is the total number of observed values. A better model q assigns higher probability \(q(x_{ij})\) to observed test data, and has a smaller value of LL.
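The log loss definition translates directly into code. The sketch below is illustrative only: the function name `log_loss`, the nested-list layout, and the example probabilities are assumptions, with `mask[i][j]` playing the role of \(\delta _{ij}\).

```python
import math

def log_loss(probabilities, mask):
    """Average negative log-probability over entries whose mask value is 1."""
    total, count = 0.0, 0
    for prob_row, mask_row in zip(probabilities, mask):
        for prob, observed in zip(prob_row, mask_row):
            if observed:
                total -= math.log(prob)
                count += 1
    return total / count

# Inferred probabilities q(x_ij) and the indicator of observed test entries
q = [[0.5, 0.1],
     [1.0, 0.25]]
delta = [[1, 0],
         [1, 1]]
ll = log_loss(q, delta)
print(ll)
```

Here the three observed entries contribute \(\log 2\), 0, and \(2\log 2\), so the average equals \(\log 2\); the masked entry with probability 0.1 is ignored.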

LL Percentile: For any posterior model q(x), a test data point \(x_{\text {test}}\) with low \(q(x_{\text {test}})\) has large log loss, and high \(q(x_{\text {test}})\) has low log loss. To comparatively evaluate the posteriors obtained from MCMC and CMC, we consider their log loss percentile plots. For any posterior, we sort all the test data points in ascending order of their log loss, and plot the mean log loss in 10 percentile batches. More specifically, the first batch corresponds to the top 10 % of data points with the lowest log loss, the second batch corresponds to the top 20 % of data points with the lowest log loss (including the first 10 % percentile), and so on.
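The percentile batches described above are cumulative, which the following sketch makes explicit. The function name `log_loss_percentiles` and the rounding rule for batch boundaries (rounding up) are assumptions, since the text does not specify how ties or fractional cutoffs are handled.

```python
def log_loss_percentiles(losses, num_batches=10):
    """Mean log loss of the lowest k/num_batches fraction of points, k = 1..num_batches."""
    ordered = sorted(losses)
    n = len(ordered)
    means = []
    for k in range(1, num_batches + 1):
        cutoff = max(1, -(-k * n // num_batches))  # integer ceiling of k * n / num_batches
        batch = ordered[:cutoff]                   # cumulative: includes earlier batches
        means.append(sum(batch) / len(batch))
    return means

per_point_losses = [10.0, 1.0, 9.0, 2.0, 8.0, 3.0, 7.0, 4.0, 6.0, 5.0]
batches = log_loss_percentiles(per_point_losses)
print(batches)
```

For the ten losses 1 through 10, the first batch contains only the smallest loss and the last batch is the overall mean, so the batch means rise from 1.0 to 5.5 in steps of 0.5.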

5.2 Results

We summarize the results from different aspects:

Log loss: CMC has a small log loss across all percentile batches, whereas the log loss of MCMC increases exponentially (linear increase in the log scale) for percentile batches with higher log loss, i.e., smaller predictive probability (Fig. 3). Thus, MCMC assigned extremely low probability to several test points as compared to CMC. Figure 4(a) illustrates that the log loss of MCMC continues to decrease with growing sample size up to 2000 samples, implying that MCMC has not yet converged to the equilibrium distribution. Note that the log loss of CMC with 200 samples (Fig. 4(b)) is 10 times lower than the log loss of MCMC with 2000 samples. We also compared the results with the previous proposal [32, 33], and observed that for MovieLens its results are worse than ours, as it achieved infinite LL on the last batch.

Effective number of samples: For the synthetic, SNP, and gene expression datasets, we generated 10,000 samples using MCMC, with a burn-in period of 500 and a lag of 10, yielding 1000 effective samples. For MovieLens, we generated 5,000 samples using MCMC, with a burn-in period of 1000 and a lag of 2, yielding 2000 effective samples. To help MCMC converge, we initialized the latent matrices U and V with the factors estimated by PMF. The sample size in the CMC procedure is set to 1,000 for all datasets. Note that MCMC alternately samples the latent matrices U and V from a Markov chain, and the quality of the posterior improves with an increasing number of samples. In the proposed CMC procedure, the larger matrix V is marginalized out, and only the smaller matrix U is sampled, directly from the true posterior distribution. Hence, CMC makes considerably better use of its samples.
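To make the burn-in and lag bookkeeping concrete, here is a minimal sketch using the MovieLens setting above. It assumes the common convention of discarding the burn-in draws and then keeping every lag-th draw; `thin_chain` is a hypothetical helper, and integers stand in for the actual MCMC draws.

```python
def thin_chain(samples, burn_in, lag):
    """Drop the first `burn_in` draws, then keep every `lag`-th remaining draw."""
    return samples[burn_in::lag]


chain = list(range(5000))  # stand-in for 5,000 MCMC draws
kept = thin_chain(chain, burn_in=1000, lag=2)
n_kept = len(kept)  # (5000 - 1000) / 2 retained draws
```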

Initialization: As discussed in Sect. 4.3, using the \(\mathcal {MGIG}\) posterior for inference requires an estimate of the covariance structure of the matrix X. Here we evaluate two approaches to approximating the covariance structure of X: (i) zero-padding the missing values in X, and (ii) computing point estimates of the missing entries in X with PMF. CMC with zero-padded initialization shows log loss behavior similar to that with PMF point-estimate initialization (Fig. 3(d-f)).

Full sampler vs Mean sampler: Figure 3(f) shows the results of the full sampler (Algorithm 2) and the mean sampler (which approximates the inference by estimating \(\bar{\varLambda } = \mathbb {E}_{\varLambda \sim \mathcal {MGIG}} [\varLambda ]\), as discussed in Sect. 4.4) on gene expression data. Since the log losses of the two samplers are similar, and this behavior is typical, we present log loss results on the other datasets based only on the mean sampler, which is around 100 times faster.

Comparison of inferred posterior distributions: To emphasize the importance of choosing the right measure for comparison, e.g., log loss vs RMSE, we illustrate in Fig. 5 the posterior distributions inferred by MCMC and CMC over several missing entries/ratings in MovieLens. Note that the scales for CMC (red) and MCMC (blue) are different. Overall, the posterior from CMC tends to be more conservative (not highly peaked) and obtains lower log loss across a range of test points. Interestingly, as shown in Fig. 5(a), MCMC can make mistakes with high confidence, e.g., predicting 5 stars with a peaked posterior when the true rating is 3 stars. Such troublesome behavior is captured by log loss but not by RMSE, since RMSE does not consider the confidence in the prediction. As shown in Fig. 5(d), for some test points, MCMC and CMC infer similar posterior distributions that differ by a bias, with the mean of CMC closer to the true value.
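The difference between the two measures can be illustrated with a small toy example. The two rating distributions below are invented for illustration and are not taken from Fig. 5; the sketch also assumes that RMSE is computed from the posterior mean. Both distributions have the same mean, so they incur the same squared error, but the peaked one assigns far less probability to the true rating and is penalized much more heavily by log loss.

```python
import math


def posterior_mean(dist):
    """Mean rating under a discrete predictive distribution {rating: prob}."""
    return sum(rating * p for rating, p in dist.items())


def squared_error(dist, truth):
    """Squared error of the posterior-mean prediction (the quantity inside RMSE)."""
    return (posterior_mean(dist) - truth) ** 2


def neg_log_prob(dist, truth):
    """Log loss contribution of a single test point."""
    return -math.log(dist[truth])


peaked = {3: 0.01, 4: 0.98, 5: 0.01}  # confident, hypothetical posterior
broad = {3: 0.30, 4: 0.40, 5: 0.30}   # conservative, hypothetical posterior
truth = 3

same_error = abs(squared_error(peaked, truth) - squared_error(broad, truth)) < 1e-9
loss_gap = neg_log_prob(peaked, truth) - neg_log_prob(broad, truth)
```

Here `same_error` holds because both posterior means equal 4, while `loss_gap` is positive because the peaked posterior places only 1% of its mass on the true rating.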

Time Comparison: We compared running times in both serial and parallel settings over 1000 steps, yielding 200 and 1000 samples for MCMC and CMC, respectively. We implemented the algorithms in MATLAB. Computation time was measured on a PC with a 3.40 GHz quad-core CPU and 16.0 GB of memory. The average run times are reported in Table 1. For the synthetic, SNP, and gene expression datasets, MCMC converges very slowly. For the MovieLens dataset, the running times of the two methods are very close, but note that MCMC requires more samples for convergence than CMC (Fig. 4).

6 Conclusion

We studied the \(\mathcal {MGIG}\) distribution, established certain key properties, and presented a novel technique for sampling from the distribution, along with its connection to latent factor models such as PMF or BPCA. By showing that the \(\mathcal {MGIG}\) distribution is unimodal and that the mode can be obtained by solving an ARE, we proposed a new importance sampling approach to infer the mean of the \(\mathcal {MGIG}\). The new sampler, unlike the existing sampler [32, 33], chooses the proposal distribution to have the same mode as the \(\mathcal {MGIG}\). This leads to a far more effective sampler than [32, 33], since the new sampler aligns the shape of the proposal with the target distribution. Although the \(\mathcal {MGIG}\) distribution has recently been applied in Bayesian models as a prior for the covariance matrix, here we introduced a novel application of the \(\mathcal {MGIG}\) in PMF or BPCA. We showed that the posterior distribution in PMF or BPCA, when marginalized over one latent factor, has the \(\mathcal {MGIG}\) distribution. This characterization yields a new CMC inference algorithm for PMF.

References

Anderson, B., Moore, J.: Optimal control: linear quadratic methods (2007)

Bai, Z., Demmel, J.: Using the matrix sign function to compute invariant subspaces. SIAM J. Matrix Anal. Appl. 19(1), 205–225 (1998)

Barndorff-Nielsen, O., Blæsild, P., et al.: Exponential transformation models. Proc. Roy. Soc. London Ser. A 379(1776), 41–65 (1982)

Bishop, C.M.: Bayesian PCA. NIPS 11, 382–388 (1999)

Bishop, C.M.: Variational principal components. In: ICANN (1999)

Blei, D., Cook, P., Hoffman, M.: Bayesian nonparametric matrix factorization for recorded music. In: ICML, pp. 439–446 (2010)

Boyd, S., Barratt, C.: Linear controller design: limits of performance (1991)

Brevern, A.D., Hazout, S., Malpertuy, A.: Influence of microarrays experiments missing values on the stability of gene groups by hierarchical clustering. BMC Bioinform. 5(1), 114 (2004)

Bunse-Gerstner, A., Mehrmann, V.: A symplectic QR like algorithm for the solution of the real algebraic Riccati equation. IEEE Trans. Autom. Control 31, 1104–1113 (1986)

Butler, R.W.: Generalized inverse Gaussian distributions and their Wishart connections. Scand. J. Statist. 25(1), 69–75 (1998)

Byers, R.: Solving the algebraic Riccati equation with the matrix sign function. Linear Algebra Appl. 85, 267–279 (1987)

Eberlein, E., Keller, U.: Hyperbolic distributions in finance. Bernoulli 1, 281–299 (1995)

Herz, C.: Bessel functions of matrix argument. Ann. Math. 23, 77–87 (1955)

Jørgensen, B.: Statistical properties of the generalized inverse Gaussian distribution

Kong, A., Liu, J., Wong, W.: Sequential imputations and Bayesian missing data problems. JASA 89(425), 278–288 (1994)

Laub, A.: A Schur method for solving algebraic Riccati equations. IEEE Trans. Autom. Control 24(6), 913–921 (1979)

Lawrence, N.: Probabilistic non-linear principal component analysis with Gaussian process latent variable models. JMLR 6, 1783–1816 (2005)

Lawrence, N., Urtasun, R.: Non-linear matrix factorization with Gaussian processes. In: ICML (2009)

Li, T., Chu, E., et al.: Solving large-scale continuous-time algebraic Riccati equations by doubling. J. Comput. Appl. Math. 237(1), 373–383 (2013)

Li, Y., Yang, M., Qi, Z., Zhang, Z.: Bayesian multi-task relationship learning with link structure. In: ICDM (2013)

MacKay, D.: Information theory, inference, and learning algorithms (2003)

Minka, T.P.: Automatic choice of dimensionality for PCA. In: NIPS (2000)

Owen, A.: Monte Carlo theory, methods and examples (2013)

Salakhutdinov, R., Mnih, A.: Probabilistic matrix factorization. In: NIPS (2007)

Salakhutdinov, R., Mnih, A.: Bayesian probabilistic matrix factorization using Markov chain Monte Carlo. In: ICML (2008)

Seshadri, V.: Some properties of the matrix generalized inverse Gaussian distribution. Stat. Methods Pract. 69, 47–56 (2003)

Seshadri, V., Wesołowski, J.: More on connections between Wishart and matrix GIG distributions. Metrika 68(2), 219–232 (2008)

Smith, W., Hocking, R.: Algorithm AS 53: Wishart variate generator. Appl. Statist. 21, 341–345 (1972)

Tipping, M.E., Bishop, C.M.: Probabilistic principal component analysis. J. Roy. Statist. Soc. Ser. B 61(3), 611–622 (1999)

Wang, H., Fazayeli, F., et al.: Gaussian copula precision estimation with missing values. In: AISTATS (2014)

Wishart, J.: The generalised product moment distribution in samples from a normal multivariate population. Biometrika 20A, 32–52 (1928)

Yang, M., Li, Y., Zhang, Z.: Multi-task learning with Gaussian matrix generalized inverse Gaussian model. In: ICML (2013)

Yoshii, K., Tomioka, R.: Infinite positive semidefinite tensor factorization for source separation of mixture signals. In: ICML (2013)

Acknowledgements

The research was supported by NSF grants IIS-1447566, IIS-1447574, IIS-1422557, CCF-1451986, CNS-1314560, IIS-0953274, IIS-1029711, NASA grant NNX12AQ39A, and gifts from Adobe, IBM, and Yahoo. F.F. acknowledges the support of DDF (2015–2016) from the University of Minnesota.

© 2016 Springer International Publishing AG

Cite this paper

Fazayeli, F., Banerjee, A. (2016). The Matrix Generalized Inverse Gaussian Distribution: Properties and Applications. In: Frasconi, P., Landwehr, N., Manco, G., Vreeken, J. (eds) Machine Learning and Knowledge Discovery in Databases. ECML PKDD 2016. Lecture Notes in Computer Science(), vol 9851. Springer, Cham. https://doi.org/10.1007/978-3-319-46128-1_41

DOI: https://doi.org/10.1007/978-3-319-46128-1_41

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46127-4

Online ISBN: 978-3-319-46128-1

eBook Packages: Computer Science (R0)