Abstract

Virtual reality has received a lot of attention lately due to a new wave of affordable HMD devices arriving in the consumer market. These new display devices – along with the availability of fast wireless networking, comprehensive wearable technologies, and robust context-aware devices – are enabling the emergence of a new type of mixed-reality system for games and digital entertainment. In this paper we name this new situation “pervasive virtuality”, which we define as a virtual environment that is extended by incorporating physical environments, physical objects as “proxy” elements, and context information. This new mixed-reality paradigm is not well understood by either industry or academia. Therefore, we propose an extension to Milgram and Colquhoun’s well-known taxonomy to cope with this new mixed-reality situation. Furthermore, we identify fundamental aspects and features that help designers and developers of this new type of application. We present these features as a two-level map of conceptual characteristics (i.e. quality requirements). This paper also presents a brief case study using these characteristics.

1 Introduction

Nowadays we are witnessing a growing interest in VR technologies, as several manufacturers have announced the development and shipping of high-quality head-mounted display (HMD) devices at affordable prices – examples include the Facebook Oculus Rift, Samsung VR, HTC Vive, and Sony PlayStation VR. The availability of these devices – along with fast wireless networking, comprehensive wearable technologies, and robust context-aware devices (e.g. sensor devices and the internet of things) – brings exciting potential to develop new digital entertainment applications.

Due to these advances, some initiatives have recently emerged that explore the following pattern in mixed-reality environments: players wear mobile HMD devices (seeing only virtual content) and move freely in a physical environment, touching physical walls and interacting with physical objects while immersed in the simulation. On the industry side, The VOID [1] and Artanim Interactive’s VR system [2] are notable examples. On the academic side, we developed a similar experience by creating an indoor navigation system for “live-action virtual reality games” [3, 4]. These three examples share similarities that we believe will become a trend in digital entertainment.

To help conceptualize this new kind of entertainment application more precisely, this paper presents the concept of “pervasive virtuality (PV)”. Essentially, PV means “a virtual environment that is extended by the incorporation of physical environments and objects as proxy elements”. At first glance, PV seems to be another name for a well-known mixed-reality situation. However, this is not the case. Observing The VOID and Artanim’s systems [1, 2] reveals a new mixed-reality situation (imprecisely named “real virtuality” by Artanim [2]), which is not well understood by either industry or academia. Therefore, firstly we argue that “pervasive virtuality” is a more adequate term for this type of mixed reality. Secondly, we claim that Milgram and Colquhoun’s well-known mixed-reality taxonomy [5] should be extended to accommodate this new type of situation. Thirdly, we believe that pervasive virtuality (which is the situation found in [1, 2]) needs a more precise and useful definition. Our contribution stems from shedding some light on this new type of mixed reality, by creating a common vocabulary and identifying important aspects and features for designers and developers. Research on conceptual characteristics that help the design of mixed-reality applications can be found in other related areas, such as pervasive games [6–9]. Some conceptual characteristics presented in this paper have been inspired by the work in [9], whose authors discuss non-functional requirements (qualities) for pervasive mobile games and provide checklists to assess and introduce these qualities in game projects.

The present paper is organized as follows. Firstly, Sect. 2 defines and characterizes “pervasive virtuality”. Next, Sect. 3 proposes a two-level map of characteristics (i.e. quality requirements), which can describe pervasive virtuality in a more precise way and help the design of new applications. Section 4 presents a brief case study using these characteristics. Finally, Sect. 5 presents conclusions and future work.

2 Pervasive Virtuality

Pervasive virtuality comprises a mixed-reality environment that is constructed and enriched using real-world information sources. This new type of mixed reality can be achieved by using non-see-through HMD devices, wireless networking, and context-aware devices (e.g. sensors and wearable technology). In PV, the user moves through a virtual environment by actually walking in a physical environment (exposed to sounds, heat, humidity, and other environmental conditions). In this mixed environment, the user can touch, grasp, carry, move, and collide with physical objects. However, he/she can only see virtual representations of these objects. Even when a user is physically shaking hands with another user, he/she has no idea about the real characteristics of this other user (such as gender, appearance, and physical characteristics).

This new type of mixed-reality application has emerged recently, with several similar initiatives appearing almost simultaneously in the industry (“The VOID” [1] and “real virtuality” [2]) and academia (“live-action virtual reality game” [4]). An earlier similar academic initiative was “virtual holodeck” [10]. The experience in this new type of mixed reality is extremely intense and immersive. However, because the area is new, the literature lacks proper definitions, design principles, and methods to guide designers and researchers. This paper helps to shed more light on these issues. We start by defining PV considering the traditional reality-virtuality continuum [5].

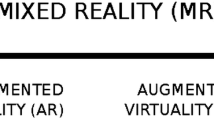

2.1 A New Reality-Virtuality Continuum Axis

Milgram and Colquhoun [5] proposed a mixed-reality taxonomy with visual displays in mind. Yet, most mixed-reality applications found in the literature simply juxtapose real and virtual objects through the projection of visual artefacts. For instance, a common example of augmented virtuality is the video of a real human face projected on a 3D mesh of an avatar’s head in a virtual world. Essentially, in these applications, “augmented virtuality” consists of a virtual world augmented with the mapping of an image or video from the real world onto virtual objects, and “augmented reality” is the same process the other way round. Therefore, we need to extend Milgram and Colquhoun’s taxonomy to accommodate other forms of mixed reality.

We need a taxonomy that can cope with situations where real physical objects are transformed into virtual objects, and vice-versa (i.e. virtual objects become real objects). We propose to identify the first situation as “pervasive virtuality” and the latter situation as “ubiquitous virtuality”. These situations represent a better fusion of reality and virtuality, which goes beyond a simple mapped visual projection. In these new environments, transformed objects should work like a proxy. Figure 1 illustrates a proposal to extend Milgram and Colquhoun’s taxonomy with our new concepts.

Augmented virtuality is different from pervasive virtuality: in the former, real-world objects are projected onto virtual content and the HMDs that the user wears (if any) are necessarily see-through devices. Examples of augmented virtuality can be found in [11, 12].

Pervasive virtuality is different from the mixed-reality found in “pervasive games” [9], although they may share similarities (e.g. context-awareness). Essentially, pervasive games are based on the idea of a real-world augmented with virtual content (left of Fig. 1) through context-awareness, made possible through sensor devices placed in the physical environment and carried by players (in mobile and wearable devices) while they move in the real-world.

Our concept of ubiquitous virtuality (UV) is aligned with well-known definitions of this term in the literature [13], which means that this type of mixed reality integrates virtual objects seamlessly in the real environment and preserves as many human senses as possible. However, transforming virtual objects into real ones is a much more complicated affair and we have almost no examples to offer. Computational holography is a potential technology in this regard, but this research area is still in its infancy. Smart materials that can change their shape in particular magnetic fields or change their texture in response to a voltage change could also be used, but they are at an experimental stage. In the realm of science fiction, UV would be the Star Trek Holodeck. Figure 1 illustrates that PV enhances visual virtual environments towards what we consider the true “virtual reality”, that is, an environment with a strong sensation of immersion and presence due to the involvement of several human senses. On the other hand, UV tends to evolve towards what we call “real virtuality”, a virtual world so convincing that we cannot easily distinguish it from the real world. As a last observation about Fig. 1, we can expect that a continuum of mixed-reality situations connects its two ends (Real Virtuality and Virtual Reality).

PV transforms physical objects into virtual equivalents (also named “proxy objects” in [10]) by representing their geometry in the virtual environment and tracking them with wireless networking systems. Furthermore, pervasive virtuality maps the real environment into the virtual environment through the compression and gain factors that apply to a user during his/her immersion in virtual environments. For example, the user’s real movements always occur over smaller (and curved) paths when compared to his/her virtual paths (Fig. 2). These compression factors allow a user, for instance, to walk through a long and intricate virtual map by walking in circles over a small physical area. Steinicke et al. [10] present several variables to which these factors apply, such as the user’s head rotation, path curvature, scaling of translational movements, and scaling of objects (and/or the entire environment).

Fig. 2. Mapping real movements into virtual ones (inspired by [10])
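The gain factors described in this section can be pictured with a small sketch. The code below is only an illustrative model, not the formulation used in [10]: the names `Pose` and `apply_gains`, the parameter names, and the sign convention for `curvature` are all assumptions made for this example. It maps one real step and one real turn into a virtual pose update using a translation gain, a rotation gain, and a curvature term that cancels the turning caused by walking along a physical arc.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float        # metres
    y: float        # metres
    heading: float  # radians, 0 points along the +x axis


def apply_gains(virtual: Pose, real_step: float, real_turn: float,
                translation_gain: float = 1.0,
                rotation_gain: float = 1.0,
                curvature: float = 0.0) -> Pose:
    """Advance the virtual pose from one real step (metres) and real turn (radians).

    Hypothetical model: `curvature` is 1/radius of the physical arc the user
    is steered along; the turn produced by walking that arc is removed from
    the virtual heading, so the virtual path can stay straight.
    """
    # Scale the real rotation, then subtract the rotation induced by the arc.
    heading = virtual.heading + rotation_gain * real_turn - curvature * real_step
    # Scale the real translation before moving along the virtual heading.
    distance = translation_gain * real_step
    return Pose(virtual.x + distance * math.cos(heading),
                virtual.y + distance * math.sin(heading),
                heading)


# Usage: the user walks one full physical circle of radius 2 m.
radius, steps = 2.0, 360
pose = Pose(0.0, 0.0, 0.0)
for _ in range(steps):
    pose = apply_gains(pose,
                       real_step=2 * math.pi * radius / steps,
                       real_turn=2 * math.pi / steps,
                       curvature=1 / radius)
```

Under these assumptions the user ends up where he/she started physically, while the virtual pose has advanced in a straight line by the circumference of the circle (about 12.57 m), which is the "walking in circles over a small physical area" situation described above.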

2.2 Defining and Characterizing PV

In the context of the previous section, we define pervasive virtuality (PV) as follows. PV is a mixed reality where real, physical objects are transformed into virtual objects by using real-world information sources through direct physical contact and context-aware devices (e.g. sensors and wearable technology). In PV users wear non-see-through HMDs all the time, which means that they do not see any real-world content. PV usually requires intensive use of compression and gain factors on external world variables to adjust the transformation between reality and virtuality.

PV can be better understood by exploring its characteristics (as Sect. 3 describes), which are essentially “quality requirements” (or “non-functional requirements”). In this section (2.2) we summarize the concepts underlying those requirements. While doing so, we refer to subsections of Sect. 3 to correlate descriptions with specific characteristics/requirements and to make the new concepts easier to understand.

All content that a user experiences in PV is virtual – the simulation uses digital content and generates virtual content based on real world information sources. These information sources are: (1) the physical environment architecture; (2) physical objects that reside in the environment; and (3) context information (see Sects. 3.1 and 3.6).

PV takes place in a “simulation stage” (or “game stage”, in the case of games), which consists of a physical environment (e.g. a room, school floor, or museum) equipped with infrastructure to support the activities (e.g. wireless networking, sensors, and physical objects). The simulation (or game) uses these elements to create the mixed reality.

In PV, a user wears a non-see-through HMD device and walks in the physical environment (Sect. 3.3), being able to touch physical walls and other elements. The user sees a 3D virtual world through the HMD and does not see the real world. The simulation constructs a virtual world based on the physical environment architecture (i.e. the first information source, as a 1:1 matching), keeping these two worlds superimposed (Sects. 3.1, 3.7). The simulation detects physical objects (e.g. furniture, portable objects, and users’ bodies – the second information source) and maps them into virtual representations, which are then displayed to the user (Sect. 3.1). Users touch, grasp, carry, and move these physical objects, but they only see their virtual representation (Sects. 3.1, 3.5).

The third information source is context information [14] (Sect. 3.6), which the simulation may use to generate virtual content and to change the rules or simulation behaviour (i.e. unpredictable game experiences and emergent gameplay). Examples of context information include: (1) player information (e.g. physiological state, personal preferences, personality traits); (2) physical environment conditions (e.g. temperature, humidity, lighting conditions, weather); (3) information derived from the ways a player interacts with physical objects and input devices; and (4) information derived from relationships and interactions among players in the game (the social context).

A PV application may respond back to the user through various channels and various types of media (Sect. 3.4). Some of these channels may be worn or carried by users (Sect. 3.1), and some of them correspond to physical objects that are spread in the physical space (e.g. smart objects and environment devices, Sects. 3.4, 3.6). Finally, users may interact in PV through multiple modalities (e.g. voice, body movements, and gestures), ordinary physical objects, and context-aware devices, supporting tangible, body-based, and context-aware interaction paradigms (Sect. 3.5).

3 Pervasive Virtuality Characteristics

Figure 3 illustrates a map with two levels of characteristics, which can be understood as quality requirements to use for PV system design. Each one of the seven first-level characteristics is subdivided into further sub-aspects, which represent more specific issues. In future work, we intend to explore the interdependences among these characteristics, similarly to [9]. This section presents a brief description of these characteristics (i.e. quality requirements).

3.1 Virtuality

Virtuality (Vir) regards handling the virtual aspects in PVs, including Virtual world generation (VWG) and Virtual content presentation (VCP). Virtual world generation regards procedures to generate the 3D virtual world based on physical world structure, which may be a real-time process or may be a pre-configuration step (i.e. mapping the environment before the simulation runs), for example. Virtual content presentation concerns issues about how the simulation presents the virtual world and virtual content to users. For example, PV may present content through HMDs, wearable devices (capable of providing haptic feedback), the underlying physical structure, and physical objects (e.g., touching physical walls, tables, and holding small objects). In case of HMDs, there are issues that the simulation must address properly, such as adverse effects on users (e.g. nausea, motion sickness). Hearing may be stimulated by isolating or non-isolating headphones and smart objects (Sect. 3.4). VCP also relates to presence in virtual environments [15].

3.2 Sociality

Sociality (Soc) refers to social aspects and social implications of the simulation. Social presence (SP) concerns “how people experience their interactions with others and refers to conditions that should be met in order to experience a sense of co-presence (i.e. mutual awareness)” [16]. Social presence may happen among real people and/or among users and virtual characters. For example, a simulation may foster social relations by providing team play activities that require collaboration. A stronger possibility in this example may be activities that require collaboration due to complementary user roles.

Finally, Ethical concerns (Eth) regards topics related to the well-being of users and ethical issues. For example, Madary and Metzinger [17] discuss how virtual environments influence users’ psychological states while they use the system, as well as psychological effects that last after the simulation is over (e.g. long-term immersion effects, lasting effects of the illusion of embodiment, undesired behaviour change).

3.3 Spatiality

Spatiality (Spa) regards aspects related to the physical space usage. In particular, Mobility concerns issues related to the free movement of players in the physical environment. Some examples are providing adequate network support that covers the entire simulation stage, requirements of user movement due to physical space size and interaction with physical objects, and how to simulate a large virtual environment in a confined space (e.g. redirected walking techniques [18] as in Fig. 2).

3.4 Communicability

Communicability (Comm) concerns aspects about the infrastructure that the simulation uses to communicate with users and other simulation components. Connectivity (Con) refers to the networking infrastructure that is required to support activities in the simulation (and associated issues). For example, PVs may require wireless local networking with specific requirements (e.g. low latency).

Game stage communication (GSC) refers to the communication channels that the game uses to exchange information with players in the game stage. For example, environment devices [9] are objects placed in the physical environment that may output information (e.g. audio) or generate effects in the physical world (e.g. smells, wind, heat, cold, water spray, opening doors, and moving elevators).

3.5 Interaction

Interaction (Int) refers to interaction paradigms in PV. So far we identify these important paradigms that contribute to create the “live-action play” aspect of PV: Tangible interaction (TI), Body-based interaction (BI), Multimodal interaction (MI), and Sensor-based interaction (SI). These interaction paradigms may be facilitated through wearable devices (e.g. smart bands, motion sensors) and dedicated infrastructure (e.g. motion-capture cameras).

Multimodal interaction (MI) corresponds to interaction through multiple modalities such as voice input, audio, and gestures. Tangible interaction (TI) represents the “tangible object metaphor” as first defined by Ishii and Ullmer [19]. In TI, a user interacts with the simulation by manipulating context-aware mobile devices (e.g. portable devices equipped with sensors) and ordinary physical objects (e.g. wood sticks, rocks). In PV, the simulation tracks all these devices and displays a corresponding virtual representation to the user through an HMD. For example, a real wood stick could become a virtual sword in the simulation. Body-based interactions (BI) represent interactions through body movements such as jumping, spinning, walking, running, and gestures. Sensor-based interaction (SI) represents interactions of implicit nature [20] based on sensor devices. An example is “proximity interaction”, where the simulation triggers events when a sensor detects the presence of a user.
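The proxy idea behind tangible interaction (a tracked wood stick shown as a virtual sword) can be sketched as a lookup from a tracked physical object to the virtual model that replaces it. This is a minimal illustration under stated assumptions: the class names, the `PROXY_MODELS` table, the object identifiers, and the 1:1 reuse of the tracked position are all hypothetical and do not describe any particular PV system.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class TrackedObject:
    object_id: str   # identifier reported by the (hypothetical) tracker
    position: Vec3   # metres, in simulation-stage coordinates


@dataclass(frozen=True)
class VirtualEntity:
    model: str       # name of the virtual model shown in the HMD
    position: Vec3   # same coordinates, assuming a 1:1 stage mapping


# Hypothetical designer-authored table: physical proxy -> virtual appearance.
PROXY_MODELS: Dict[str, str] = {
    "wood_stick": "sword",
    "rock": "gemstone",
}


def to_virtual(obj: TrackedObject,
               proxy_models: Dict[str, str] = PROXY_MODELS,
               default_model: str = "generic_prop") -> VirtualEntity:
    """Return the virtual stand-in for a tracked physical object."""
    model = proxy_models.get(obj.object_id, default_model)
    # The user touches the real object but only ever sees `model`.
    return VirtualEntity(model=model, position=obj.position)


stick = TrackedObject("wood_stick", (1.0, 0.5, 2.0))
sword = to_virtual(stick)
```

Keeping the appearance table separate from the tracking data is one possible design choice: the same physical prop can then be re-skinned per game without touching the tracking code.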

3.6 Context-Awareness

Context-awareness (CA) refers to acquiring and using context information in simulation activities. The simulation is able to sense context information through several means, such as: (1) The physical place where the game happens may host physical objects that are equipped with sensors (e.g. smart objects in [9]). These devices may be connected to other similar objects and to game servers; (2) The player carries or wears devices that sense context from the environment and/or from the player; and (3) The game queries remote information about the player based on the player identity (e.g. social network profiles).

In this regard, Dynamic Content Generation (DCG) refers to creating game content dynamically through context. The simulation may also use context to adapt rules, change simulation behaviour, and modify virtual world content (Gameplay adaptability – GpA).

3.7 Resilience

Resilience (Res) refers to how a game is able to cope with technology uncertainties (i.e. in sensors and networking) to prevent them from breaking immersion and the simulation experience. These uncertainties stem from inherent technology component limitations such as accuracy, precision, response time, and dependability. For example, in PV the tracking problem is a key issue because: (1) sensor technologies ignore physical world boundaries; and (2) sensor technologies may not be able to track objects moving above a certain speed threshold. We identify three important aspects for resilience: Uncertainty handling policy (UHP), Game activity pacing (GAP), and Mixed-reality consistency (MRC).

Uncertainty handling policy (UHP) refers to specific strategies a simulation may use to handle uncertainties. Valente et al. [9] discussed five general strategies to approach these issues (hide, remove, manage, reveal, and exploit). Game activity pacing (GAP) refers to how the pacing of activities might interfere with the operations of specific technologies (e.g. sensors), and vice-versa, and what to do about this issue.

Mixed-reality consistency (MRC) refers to how a game keeps the physical and virtual worlds superimposed and synchronized (in real-time) without negative side-effects that a player might perceive. For example, a key element in the PV pipeline is tracking of physical objects and physical elements (e.g. architecture). PV may use different approaches, such as sensors or computer vision. A PV simulation can process tracking through the mobile user equipment (e.g. “inside processing” in portable HMDs, cameras, and computers) or through a dedicated infrastructure (e.g. “outside processing” using cameras fixed in the physical infra-structure and dedicated computers).
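One consistency concern can be made concrete with the situation the case study in Sect. 4 reports: position estimates built from relative marker offsets accumulate error, and markers that carry an absolute position are used to correct the estimate. The sketch below is a deliberately simplified 2D illustration of that idea; the function name `track`, the observation format, and the choice to overwrite the estimate outright (rather than blend or filter it) are assumptions for this example, not the method used in [4].

```python
from typing import Iterable, Optional, Tuple

Vec2 = Tuple[float, float]


def track(start: Vec2,
          observations: Iterable[Tuple[Vec2, Optional[Vec2]]]) -> Vec2:
    """Estimate a position from (relative_offset, absolute_fix_or_None) pairs."""
    x, y = start
    for (dx, dy), absolute_fix in observations:
        # Relative update: any measurement error here adds to earlier errors.
        x, y = x + dx, y + dy
        if absolute_fix is not None:
            # An absolute marker is in view: discard the accumulated drift.
            x, y = absolute_fix
    return (x, y)


# The second and third offsets are slightly wrong; the absolute marker seen
# on the third observation resets the estimate before the final step.
estimate = track((0.0, 0.0), [
    ((1.0, 0.0), None),
    ((1.1, 0.0), None),
    ((0.9, 0.0), (3.0, 0.0)),
    ((1.0, 0.0), None),
])
```

A real system would more likely fuse the two sources (for example with a filter) instead of snapping to the absolute fix, since a sudden jump in the virtual camera is itself a side-effect the player might perceive.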

4 Case Study

This section describes briefly how the aspects that Sect. 3 describes apply to a proof-of-concept PV demo that we developed in previous works [4] (as a “live-action virtual reality game”). In this game demo the player carries a smart object that simulates a gun. The player wanders around a small room freely, shooting virtual aliens that try to invade the environment. The physical room is equipped with several infrared markers that are used in the mapping (Virtual world generation) and tracking processes (Mixed-reality consistency). The tracking hardware consists of a portable computer and an Oculus Rift device equipped with two regular cameras (one for each eye), which have been modified to capture infrared light only.

Virtuality.

Considering Virtual world generation (VWG), the application requires a pre-processing step to build the virtual world. In this process, a user (non-player) walks in the physical environment wearing the tracking hardware to scan all infrared markers fixed on the room walls. The system uses this information to create the first version of the 3D world. Later, a designer tweaks this 3D model manually. Considering Virtual content presentation (VCP), the demo provides information through the HMD and headphones. During our preliminary tests, one player (out of twelve) experienced adverse effects such as headaches and disorientation.

Sociality.

Considering Social presence, in the current version there is only one active avatar (the player character) in the game stage, which means that Social presence does not apply. Regarding Ethical concerns, we plan to create an evaluation plan to assess how these experiences might affect players when we test this application with a broader audience.

Spatiality.

Considering Mobility, the current version takes place in a 5 m × 5 m room. The demo does not use redirected walking techniques to simulate a larger environment.

Communicability.

Considering Connectivity, in the current version the simulation does not use networking as the mobile player equipment is self-contained (i.e. performs all processing). However, we are experimenting with environment devices and smart objects spread in the room that communicate with the player equipment using radio frequency modules and Wi-Fi. Game stage communication does not apply to the current version.

Interaction.

The demo uses tangible interaction – the player manipulates a smart object as a gun.

Resilience.

As a proof-of-concept, the demo does not implement an Uncertainty handling policy. However, we are working on “manage” strategies [9] as “fallback options” for networking operation: the player equipment communicates with smart objects through RF modules (the fastest option); if this option does not work adequately, the game tries Wi-Fi; and if this also fails, the game tries Bluetooth. Considering Game activity pacing, the speed at which the player moves the gun object has not affected how the game operates. However, we are still experiencing issues when the player equipment loses sight of the infrared markers. Considering Mixed-reality consistency, tracking errors accumulate as the player moves in the environment because the system uses the relative position of some markers in the tracking process. To solve this issue, the environment contains special markers that carry their absolute physical position, which the tracking system uses to correct tracking information.
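The fallback order described above (RF modules first, then Wi-Fi, then Bluetooth) can be sketched as an ordered list of channels tried in turn. This is a minimal illustration only: `send_with_fallback`, the channel names, and the convention that a sender returns `False` or raises `OSError` on failure are assumptions, and the stand-in senders below replace real radio code.

```python
from typing import Callable, Optional, Sequence, Tuple

# A channel is a display name plus a function that tries to deliver a payload
# and reports success. Real senders would wrap RF, Wi-Fi, or Bluetooth code.
Channel = Tuple[str, Callable[[bytes], bool]]


def send_with_fallback(payload: bytes,
                       channels: Sequence[Channel]) -> Optional[str]:
    """Try each channel in priority order; return the name of the one that worked."""
    for name, send in channels:
        try:
            if send(payload):
                return name
        except OSError:
            # Treat a transport error like a failed delivery and move on.
            continue
    return None  # every option failed; the caller decides what to do next


# Stand-in senders used only to exercise the fallback order.
def rf_send(payload: bytes) -> bool:
    return False                      # pretend the RF link is out of range


def wifi_send(payload: bytes) -> bool:
    raise OSError("network unreachable")


def bluetooth_send(payload: bytes) -> bool:
    return True


used = send_with_fallback(b"door:open", [
    ("rf", rf_send),
    ("wifi", wifi_send),
    ("bluetooth", bluetooth_send),
])
```

Returning the name of the channel that succeeded lets the game log or react to degraded connectivity, which fits a "manage" strategy; what "does not work adequately" means (timeouts, latency limits) is left to the individual senders here.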

Context-Awareness.

Dynamic content generation does not apply to the demo. Considering Gameplay adaptability, the current version does not use it. However, we are experimenting with temperature sensors to change virtual world colours according to physical temperature (Fig. 4). In Fig. 4, the laptop displays what the player sees through the HMD. To the left of the player’s hand, there is an environment device (a heater) that becomes a fireplace in the virtual world. In this example the player equipment contains a temperature sensor. The demo senses the current temperature and modifies the fireplace animation according to this information: if the temperature is higher, then the virtual fireplace gets bigger.
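The temperature-driven fireplace can be illustrated with a simple mapping from a sensed temperature to an animation scale. The paper only states that a higher temperature makes the virtual fireplace bigger; the linear mapping, the clamping, the function name `fireplace_scale`, and every numeric range below are assumptions chosen for this sketch.

```python
def fireplace_scale(temp_c: float,
                    min_temp_c: float = 15.0,
                    max_temp_c: float = 35.0,
                    min_scale: float = 0.5,
                    max_scale: float = 2.0) -> float:
    """Map a sensed temperature (Celsius) to a fireplace size multiplier."""
    if max_temp_c <= min_temp_c:
        raise ValueError("max_temp_c must be greater than min_temp_c")
    # Normalise the reading to [0, 1], clamping values outside the range
    # so that a faulty sensor cannot produce an absurd animation size.
    t = (temp_c - min_temp_c) / (max_temp_c - min_temp_c)
    t = max(0.0, min(1.0, t))
    # Linear interpolation: warmer readings give a bigger virtual fire.
    return min_scale + t * (max_scale - min_scale)


cool = fireplace_scale(18.0)
warm = fireplace_scale(30.0)
```

Clamping is one small way to apply the Resilience concerns of Sect. 3.7 to context data: out-of-range sensor readings degrade gracefully instead of breaking the scene.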

5 Conclusions

In this paper we started to conceptualize a new paradigm that has emerged recently through the new trend of affordable HMD devices coming to the market, as well as the availability of fast wireless networking, wearable technology, and context-aware devices. In previous work [3], we started exploring this new entertainment paradigm as “live-action virtual reality games”. However, instead of providing formal definitions about this kind of application, in this paper we set out to identify an initial set of characteristics (i.e. quality requirements) that may contribute to implementing this new paradigm. We believe that this approach may lead to more practical results (e.g. helping to design and develop these applications) than trying to formulate abstract definitions. This approach echoes other works about fuzzy definitions in game research, such as “pervasiveness” [21].

The initial set of aspects that we presented is not a complete set, but it is a starting point to foster discussion and further research agendas. Also, these aspects do not exist in isolation, as several of them have interdependences and specific game features may require several aspects. For example, in a work in progress we are experimenting with ultrasonic sensors to detect player presence, so as to open a door in a game activity and play a sound through a loudspeaker. This is a simple example of Game stage communication that requires Context-awareness and Interaction (e.g. proximity interaction).
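The ultrasonic-sensor example can be sketched as a proximity trigger that fires a list of actions when a player comes within range. This is an illustrative sketch only: the class `ProximityTrigger`, the distance threshold, and the use of plain callables in place of real door and loudspeaker drivers are assumptions. The trigger fires on entry only, so a player standing near the sensor does not reopen the door on every reading.

```python
from typing import Callable, List, Sequence


class ProximityTrigger:
    """Fire actions once each time a player enters the sensor's range."""

    def __init__(self, threshold_m: float,
                 actions: Sequence[Callable[[], None]]) -> None:
        self.threshold_m = threshold_m
        self.actions = list(actions)
        self._present = False  # was a player in range at the last reading?

    def update(self, distance_m: float) -> bool:
        """Process one distance reading; return True if the actions fired."""
        present = distance_m <= self.threshold_m
        fired = present and not self._present  # rising edge only
        if fired:
            for action in self.actions:
                action()
        self._present = present
        return fired


# Stand-ins for an environment device (door) and a loudspeaker.
log: List[str] = []
trigger = ProximityTrigger(1.0, [lambda: log.append("open_door"),
                                 lambda: log.append("play_sound")])

# The player approaches, lingers, walks away, and comes back.
results = [trigger.update(d) for d in (2.5, 0.8, 0.7, 1.6, 0.9)]
```

Here the sensor reading is the context (Context-awareness), the implicit entry into range is the interaction (proximity interaction), and the door and loudspeaker callbacks stand for Game stage communication, which mirrors the interdependence noted above.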

Future work includes elaborating questions to assess the characteristics, refining this initial set, discovering interdependences, discovering more characteristics, developing applications based on this material, analysing other applications that share similar concerns, and feeding all this material back into this research process.

References

1. The VOID: The vision of infinite dimensions. https://thevoid.com/

2. Artanim: Real Virtuality. http://artaniminteractive.com/real-virtuality/

3. Valente, L., Clua, E., Ribeiro Silva, A., Feijó, B.: Live-action virtual reality games. Departamento de Informática. PUC-Rio, Rio de Janeiro (2015)

4. Silva, A.R., Clua, E., Valente, L., Feijó, B.: An indoor navigation system for live-action virtual reality games. In: Proceedings of SBGames 2015, pp. 84–93. SBC, Teresina (2015)

5. Milgram, P., Colquhoun Jr., H.: A taxonomy of real and virtual world display integration. In: Ohta, Y., Tamura, H. (eds.) Mixed Reality, pp. 1–26. Springer, Berlin (1999)

6. Walther, B.K.: Notes on the methodology of pervasive gaming. In: Kishino, F., Kitamura, Y., Kato, H., Nagata, N. (eds.) ICEC 2005. LNCS, vol. 3711, pp. 488–495. Springer, Heidelberg (2005)

7. Guo, B., Zhang, D., Imai, M.: Toward a cooperative programming framework for context-aware applications. Pers. Ubiquit. Comput. 15, 221–233 (2010)

8. Nevelsteen, K.J.L.: A Survey of Characteristic Engine Features for Technology-Sustained Pervasive Games. Springer International Publishing, Cham (2015)

9. Valente, L., Feijó, B., do Prado Leite, J.C.S.: Mapping quality requirements for pervasive mobile games. Requirements Eng. 1–29 (2015)

10. Steinicke, F., Ropinski, T., Bruder, G., Hinrichs, K.: The holodeck construction manual. In: ACM SIGGRAPH 2008 Posters, pp. 97:1–97:3. ACM, New York (2008)

11. Bruder, G., Steinicke, F., Valkov, D., Hinrichs, K.: Augmented virtual studio for architectural exploration. In: Proceedings of Virtual Reality International Conference (VRIC 2010), pp. 43–50. Citeseer, Laval (2010)

12. Paul, P., Fleig, O., Jannin, P.: Augmented virtuality based on stereoscopic reconstruction in multimodal image-guided neurosurgery: methods and performance evaluation. IEEE Trans. Med. Imaging 24, 1500–1511 (2005)

13. Kim, S., Lee, Y., Woo, W.: How to realize ubiquitous VR? In: Pervasive: TSI Workshop, pp. 493–504 (2006)

14. Dey, A.K.: Understanding and using context. Pers. Ubiquit. Comput. 5, 4–7 (2001)

15. Sanchez-Vives, M.V., Slater, M.: From presence to consciousness through virtual reality. Nat. Rev. Neurosci. 6, 332–339 (2005)

16. Wolbert, M., Ali, A.E., Nack, F.: CountMeIn: evaluating social presence in a collaborative pervasive mobile game using NFC and touchscreen interaction. In: Proceedings of the 11th Conference on Advances in Computer Entertainment Technology, pp. 5:1–5:10. ACM, New York (2014)

17. Madary, M., Metzinger, T.K.: Real virtuality: a code of ethical conduct. Recommendations for good scientific practice and the consumers of VR-technology. Front. Robot. AI 3 (2016)

18. Steinicke, F., Bruder, G., Jerald, J., Frenz, H., Lappe, M.: Estimation of detection thresholds for redirected walking techniques. IEEE Trans. Vis. Comput. Graph. 16, 17–27 (2010)

19. Ishii, H., Ullmer, B.: Tangible bits: towards seamless interfaces between people, bits and atoms. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 234–241 (1997)

20. Rogers, Y., Muller, H.: A framework for designing sensor-based interactions to promote exploration and reflection in play. Int. J. Hum. Comput. Stud. 64, 1–14 (2006)

21. Nieuwdorp, E.: The pervasive discourse: an analysis. Comput. Entertain. 5, 13 (2007)

Acknowledgments

The authors thank CAPES, FINEP, CNPq, FAPERJ, and NVIDIA for the financial support to this paper.

© 2016 IFIP International Federation for Information Processing

Cite this paper

Valente, L., Feijó, B., Ribeiro, A., Clua, E. (2016). The Concept of Pervasive Virtuality and Its Application in Digital Entertainment Systems. In: Wallner, G., Kriglstein, S., Hlavacs, H., Malaka, R., Lugmayr, A., Yang, HS. (eds) Entertainment Computing - ICEC 2016. ICEC 2016. Lecture Notes in Computer Science, vol 9926. Springer, Cham. https://doi.org/10.1007/978-3-319-46100-7_16

DOI: https://doi.org/10.1007/978-3-319-46100-7_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46099-4

Online ISBN: 978-3-319-46100-7

eBook Packages: Computer Science, Computer Science (R0)