Abstract

System-on-Chip (SoC) technology enables integrating all the functionality required to control and process science data delivered by space instruments in a single silicon chip (e.g., microprocessor + programmable logic). This chapter discusses the implications of using this technology in deep-space exploration avionics, namely in the next generation of NASA science instruments that will be used to explore our Solar System. We present our experience at the NASA Jet Propulsion Laboratory (JPL) using Xilinx Zynq SoC devices to implement the data processing of a Fourier transform spectrometer, namely the Compositional InfraRed Imaging Spectrometer (CIRIS). We also discuss the fault-tolerance techniques that have been implemented in the CIRIS controller SoC to deal with the harsh radiation conditions prevailing in deep-space environments.

Xabier Iturbe was also affiliated with the NASA Jet Propulsion Laboratory, California Institute of Technology, when conducting this research.

Patrick Yiu was affiliated with the California Institute of Technology when conducting this research.

1 Introduction

Hybrid System-on-Chip (SoC) devices that embed the most energy-efficient processors (ARM cores [1]) and the latest and most powerful FPGA architecture (Xilinx 7-series [2]) into a single chip (Xilinx Zynq [3]) promise new opportunities due to the performance, power consumption, weight and volume benefits they bring. This is especially relevant for building more capable space avionics. Currently, most of these systems combine programmable logic and processors as separate components distributed across one or several PCBs, which results in power consumption overheads and a larger volume to be put into space [4, 5]. In addition, existing space-grade processors (e.g., RAD750 [6]) are not suitable for the next-generation spacecraft computing platforms because they do not provide sufficient performance and energy efficiency [7]. As a result, NASA and other space agencies have turned to ARM and SoC technology, hoping to pave the way for future space exploration missions that are becoming ever more performance demanding.

Despite the fact that currently there are no space-qualified SoC parts, NASA is testing commercial Xilinx Zynq SoC devices in the International Space Station (ISS) as well as in precursor CubeSats operating in Low Earth Orbit (LEO), where the exposure to radiation is limited.

In view of a potential radiation-hardened SoC device that might be ready to fly in deep-space missions in the near to mid future, JPL and ARM have partnered together to develop a SoC platform to be used as a research vehicle for powering next-generation flight instruments intended to be used in NASA deep-space missions. Presently this platform, called APEX-SoC (APEX stands for Advanced Processor core for space EXploration), is being prototyped using a commercial Xilinx Zynq device. The APEX-SoC includes a generic and adaptable infrastructure that provides support for hardware and software based science processing. More specifically, the data acquisition and processing proper to each science instrument is to be implemented as a collection of “custom software and hardware applications” that are encapsulated by the APEX-SoC infrastructure and run on the Zynq’s on-chip ARM processor and reside on the Zynq’s FPGA fabric. Besides the infrastructure itself, the APEX-SoC includes a set of Radiation Hardened By Design (RHBD) features to protect the instrument-dependent modules implemented on the FPGA fabric from harsh space radiation. In connection with this, we are currently carrying out two research efforts to create a space-grade ARM processor that could potentially replace commercial ARM processors embedded in future radiation-tolerant SoC devices. First, we are conducting a thorough soft-error analysis of the ARM Cortex-R5 microprocessor, which is currently used in terrestrial safety-critical real-time applications, to identify the most vulnerable parts in the micro-architecture of this processor, analyze what level of protection is required for these vulnerable parts (e.g., detection only, correction only or hybrid), and then decide how to achieve this level of protection. Secondly, we are designing a Cortex-R5 based fail-operational Triple Core Lock-Step ARM processor (TCLS-ARM) with the capability to recover from errors within microseconds [8].

This chapter describes the first prototype of the APEX-SoC platform implemented on the Zynq SoC and presents an illustrative case-study drawn from the JPL Compositional Infrared Imaging Spectrometer (CIRIS) [11], which has been proposed for use on icy moons, such as Jupiter’s moon Europa [12]. The remainder of this chapter is organized as follows. Section 2 introduces SoC technology and its use in space missions so far. Section 3 describes the APEX-SoC platform, and Sect. 4 presents a case-study where the JPL CIRIS spectrometer data processing is implemented on this platform. Section 5 summarizes the implementation, performance and irradiation results that have been collected so far and, finally, Sect. 6 concludes the chapter and points to future work.

2 System-on-Chip Technology and Its Use in Space Exploration Avionics

Although miniaturized SoC technology is very convenient for space, where every gram of mass launched involves enormous costs, it is currently designed for and used in consumer terrestrial applications, where a single device with very low power consumption has found a niche in the network and telecommunication markets. Commercial SoCs have developed very advanced computation capabilities, driven by a consumer electronics market that continuously demands more powerful devices and applications. One example of the degree of sophistication achieved by commercial SoCs is the Xilinx Zynq-UltraScale+ MPSoC, which is scheduled for release in early 2016 [13]. It will include an ARM Cortex-A53 high-performance 64-bit processor, an ARM Cortex-R5 real-time processor and a Xilinx UltraScale FPGA architecture.

Current SoC devices available in the market typically include at least one processor and an FPGA fabric. Since ARM cores are the standard processors used in all SoCs, the difference between them comes from the FPGA fabric they use. This fabric embeds routing resources, programmable logic, DSP and RAM blocks together with the memory cells to store their configuration.

Although the current use of SoCs is largely limited to terrestrial applications, space agencies consider that this technology could be an alternative to overcome the current performance crisis seen in the space sector [7], as long as it develops an adequate degree of reliability to operate in harsh space environments. Indeed, when used in space, both the ARM cores and the FPGA fabric embedded in SoCs are vulnerable to radiation-induced soft-errors [9, 10], which pose a greater reliability threat to SRAM-based FPGAs, such as those from Xilinx and Altera. In these FPGAs, charged particles and radiation in general can alter the configuration information stored in SRAM-based memory cells, resulting in undesired logic functions implemented in the programmable logic and/or wrong interconnections between the components. Microsemi, on the other hand, uses flash memory in its FPGA fabric, which is more resilient to radiation-induced soft-errors but allows for lower integration density, thus delivering more modest computation capabilities. Scrubbing is a classical method to protect the configuration memory in SRAM-based FPGAs. This technique consists of periodically checking the Error Correction Codes (ECCs) associated with the configuration information stored in the FPGA configuration memory and correcting any errors that might have occurred by rewriting the correct value, which is typically stored in an external rad-hard non-volatile flash memory. That said, Xilinx has released several generations of radiation-hardened FPGAs (e.g., Virtex-5QV [14]) and software tools for making designs fault-tolerant (e.g., Xilinx TMR Tool [15]) that are used in a number of space systems. The Xilinx roadmap includes the development of a radiation-tolerant SoC technology as well as the necessary software tools for creating fault-tolerant designs on it.

Current space instrument payload systems typically include either a flash-based FPGA (e.g., Microsemi ProASIC3 [16]) or a rad-hard SRAM-based FPGA (e.g., Xilinx Virtex5-QV) for implementing data acquisition, synchronization and processing, and an antifuse-based FPGA (e.g., Microsemi RTAX [17]) for implementing data communications with spacecraft main computer, internal bus handling, housekeeping data collection and management of the configuration of the SRAM-based FPGA. In applications that are critical for spacecraft mission, such as Guidance Navigation and Control (GNC), the antifuse FPGA is replaced by a rad-hard processor such as a BAE Systems RAD750 [6] or a Cobham Gaisler Leon3 [18]. A couple of recent NASA instruments that use this classic architecture are the ChemCAM on the Mars Curiosity rover [4] and the Goddard Space Flight Center (GSFC) SpaceCube [5]. Hence, a SoC that includes these two components (processor + programmable logic) into a single chip is perfectly suited for space instrument payload systems.

Two reasons led us to choose the Xilinx Zynq SoC to prototype our APEX-SoC platform. First, Xilinx is one of the vendors with the most advanced SoC technology roadmap, which also addresses radiation-hardened FPGAs. Second, and most important, NASA has recently adopted Xilinx technology within the scope of its CubeSat Launch Initiative (CSLI), as described in the paragraph below. Xilinx Zynq SoCs integrate a dual-core ARM Cortex-A9 centric Processing System (PS) and a 28 nm Xilinx 7-Series (Artix-7 or Kintex-7) Programmable Logic (PL) fabric. The chip includes abundant on-chip AXI ports with low power rails to connect the PS with the PL, which results in substantially lower power consumption, considerably higher bandwidth and lower latency compared with discrete processor and FPGA components.

The Xilinx Zynq SoC is at the heart of the Computer Space Processor (CSP) designed by the National Science Foundation (NSF) Center for High-performance Reconfigurable Computing (CHREC) and licensed for fabrication to Space Micro Inc. [19–21]. The CSP uses a combination of commercial and rad-hard components, where commercial devices perform critical computations and are supervised by the rad-hard devices (e.g., reset and watchdog circuits). This Zynq-based processor will be part of future NASA missions such as the Space Test Program-Houston-ISS-5 SpaceCube experiment [22] and the Compact Radiation bElt Explorer (CeREs) heliophysics CubeSat [23]. PlanetiQ Inc. will also integrate 3 CSPs on each of the 12 LEO weather satellites scheduled to be launched in 2017. In addition to these space missions, the CSP has been tested under neutron and heavy-ion radiation by Brigham Young University [24]. JPL, Xilinx and Swift LLC have also tested the Xilinx SoC part and other Xilinx 7-series FPGAs under heavy-ion radiation [25–27].

3 The APEX-SoC Platform and Infrastructure

The APEX-SoC platform is currently prototyped on a ZedBoard mini-ITX board, which is populated with a Xilinx Zynq 7Z100 SoC device. An FMC board containing an ADC is attached to the ZedBoard to deal with the analog electrical signals that are typically delivered by space science instruments. The APEX-SoC platform is also coupled with two external DDR memories to enable its use with instruments that generate large amounts of data: the PS-DDR is solely dedicated to the ARM processor in the Zynq, while the PL-DDR is used as scratchpad memory by the data processing modules implemented on the FPGA fabric and is also accessible by the ARM processor to retrieve the intermediate results computed by these. The last external component connected to the APEX-SoC is a SATA Solid State Device (SSD). This is used to temporarily store the (likely large amounts of) science results produced by the APEX-SoC until a downlink communication window with Earth is available, allowing for creating independent and stand-alone instruments avionics subsystems. The typical data-flow in the APEX-SoC is thus as follows: (1) the instrument data is acquired and processed by the FPGA logic, (2) the computed intermediate results by the FPGA logic are DMA-transferred to the DDR memory dedicated to the ARM processor for final processing, and (3) the final results are copied to the SSD prior to being downloaded to Earth.

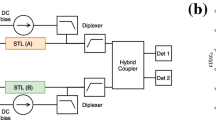

The APEX-SoC provides support for integrating multiple identical data processing stages that can be used to process different science data in parallel to increase performance, or to detect computation errors by comparing their results when they process the same science data. This flexibility is needed when the requirements might change during the mission.

Figure 1 shows a block diagram of the APEX-SoC architecture. The following subsections describe the major aspects related to this architecture as well as the main fault-tolerance mechanisms that are implemented on it.

3.1 ARM-Centric Processing System

The ARM-centric PS includes all the peripherals that are typically required by flight science instruments, including: DMA support, GPIOs, Ethernet, SATA, interrupt controller and a memory-mapped register bank to exchange state and configuration data with the FPGA processing logic. As previously mentioned, process data are exchanged with the FPGA logic through the DMA-accessible PL-DDR memory. In order to speed-up the development of APEX-SoC-based instruments avionics, one of the ARM cores runs a standard Linux-based operating system, which provides Ethernet protocol to communicate with the spacecraft’s main computer and a file system to ease the management of science results stored in the SSD. The second ARM core can be dedicated for software-based processing of instrument data. One scenario where software processing is convenient is when dealing with floating-point intensive algorithms, which can be easily computed using the NEON Floating Point Unit (FPU) [28] available in the ARM processor. A Real-Time Operating System (RTOS) can be deployed in this core to use software multitasking to extend the hardware parallel processing carried out in the FPGA fabric while ensuring a sustainable use of CPU by all of the tasks [29].

3.2 Data-Flow Infrastructure

Each data processing module in the FPGA fabric is assigned a private data segment in the PL-DDR, with its size depending on the computing needs of that particular module. In order to exploit the full bandwidth delivered by the PL-DDR memory (6.4 GB/s) and to support the parallel/redundant execution of the hardware modules, the APEX-SoC implements eight 32-bit DDR access ports at 200 MHz using Xilinx-provided AXI-Stream Data Movers, which act as DMA controllers for the FPGA processing logic [30]. One of the DDR ports is dedicated to the instrument data acquisition logic (shown in blue color), another one is assigned to the ARM DMA, and the remaining six ports are connected to a crossbar that multiplexes them among the instrument data processing stages. The objective of this crossbar is thus to create as many communication channels as needed by the instrument-dependent modules using the physically available DDR ports. A data-flow controller drives the connections in the crossbar and schedules the PL-DDR accesses to maximize performance. For each data transfer, it specifies the memory address and size of the data segment to be read or written to the corresponding Data Mover. The data-flow controller is based on a tiny Xilinx 8-bit PicoBlaze processor [31], which consumes only 26 LUTs in the Zynq FPGA fabric, and implements a collection of reusable assembler routines that provide the required flexibility to deal with a wide range of instruments. Most of the HDL code used to describe the APEX-SoC infrastructure is also parameterizable and can be easily customized to the needs of any instrument.
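The 6.4 GB/s figure quoted above follows directly from the port configuration. The short Python check below reproduces the arithmetic; it assumes that every port transfers one 32-bit word per clock cycle without stalls, and the constant and function names are ours, chosen only for this illustration.

```python
# Aggregate PL-DDR bandwidth implied by the port configuration in the text.
PORTS = 8                     # DDR access ports implemented by the APEX-SoC
PORT_WIDTH_BITS = 32          # width of each port
PORT_CLOCK_HZ = 200_000_000   # 200 MHz port clock


def aggregate_bandwidth(ports, width_bits, clock_hz):
    """Bytes per second, assuming one word per port per cycle (no stalls)."""
    return ports * (width_bits // 8) * clock_hz


total = aggregate_bandwidth(PORTS, PORT_WIDTH_BITS, PORT_CLOCK_HZ)
per_port = total // PORTS
print(f"total: {total / 1e9:.1f} GB/s, per port: {per_port / 1e6:.0f} MB/s")
```

Under the same assumption, each individual port can sustain at most 800 MB/s, which is the budget the data-flow controller has to share among the channels multiplexed onto that port.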

3.3 Fault-Tolerance Features

The temperature on the Zynq die is continuously monitored using an on-chip sensor (see XADC in Fig. 1) [32] to identify and prevent overheat situations that could lead to the eventual destruction of the chip. Excessive noise situations in the power supply are also detected with this sensor. These may indicate that there is a problem with the voltage regulators, power lines in the PCB or even in the spacecraft power subsystem. Finally, the PL-DDR AXI Stream ports are continuously monitored to detect stuck-at situations and errors in memory data transfers. All storage resources in the APEX-SoC platform are protected with ECCs. The Xilinx ECC solution built in the silicon of the Zynq is used for the PS-DDR, whereas a custom ECC logic for the PL-DDR is implemented on the FPGA fabric. This ECC logic uses Hamming (32, 26) codes to protect the data words transferred through each of the PL-DDR ports and is pipelined to maximize performance. It allows for detecting and automatically correcting single bit flips (e.g., radiation-induced SEUs) in a PL-DDR data word and detecting, but not correcting, double bit errors. Note that the possibility that multiple bit errors are accumulated in the same data word is small, as the ECC logic corrects every single bit flip that might have occurred in the short period of time data remains stored in the PL-DDR memory between consecutive write accesses. Data words affected by uncorrectable double bit errors can be either replaced by zeros or with the interpolated value of the two neighboring samples. All the finite state machines in the APEX-SoC are implemented using “one-hot” encoding, in such a way that radiation-provoked upsets in state flip-flops result in the state machine flow being redirected to an “illegal” state that signals the ARM processor the error situation. The correctness of the configuration data stored in the Zynq configuration memory is periodically checked by a Xilinx Single Event Mitigation (SEM) controller [33]. 
Single-bit upsets are automatically fixed by the Xilinx SEM, and in the event of a double bit upset, the ARM processor carries out a full reconfiguration of the FPGA fabric.
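To illustrate the single-error-correcting, double-error-detecting behaviour of the PL-DDR protection described above, the following Python sketch models a (32, 26) extended Hamming code in software. It is not the pipelined HDL used in the APEX-SoC: the bit layout (check bits at positions 1, 2, 4, 8 and 16, overall parity at position 0) and the names `encode` and `decode` are assumptions made for this example.

```python
# Software model of a (32, 26) extended Hamming (SECDED) code.
# Assumed layout: bit position 0 holds the overall parity, positions
# 1, 2, 4, 8, 16 hold the Hamming check bits, the other 26 hold data.
PARITY_POS = (1, 2, 4, 8, 16)
DATA_POS = [p for p in range(1, 32) if p not in PARITY_POS]


def encode(data):
    """Encode a 26-bit integer into a 32-bit codeword."""
    if not 0 <= data < (1 << 26):
        raise ValueError("data must fit in 26 bits")
    word = 0
    for i, pos in enumerate(DATA_POS):
        if (data >> i) & 1:
            word |= 1 << pos
    # Each check bit makes the parity of the positions it covers even.
    for p in PARITY_POS:
        parity = 0
        for pos in range(1, 32):
            if pos & p and (word >> pos) & 1:
                parity ^= 1
        if parity:
            word |= 1 << p
    # The overall parity bit makes the total number of ones even.
    if bin(word).count("1") % 2:
        word |= 1
    return word


def decode(word):
    """Return (data, status); status is 'ok', 'corrected' or 'uncorrectable'."""
    syndrome = 0
    for pos in range(1, 32):
        if (word >> pos) & 1:
            syndrome ^= pos
    odd_parity = bin(word).count("1") % 2 == 1
    if odd_parity:
        # Single-bit error; the syndrome is its position (0 = parity bit).
        word ^= 1 << syndrome
        status = "corrected"
    elif syndrome:
        # Even overall parity with a non-zero syndrome: double-bit error.
        return None, "uncorrectable"
    else:
        status = "ok"
    data = 0
    for i, pos in enumerate(DATA_POS):
        if (word >> pos) & 1:
            data |= 1 << i
    return data, status


codeword = encode(0x2ABCDEF)
print(decode(codeword))                  # clean word
print(decode(codeword ^ (1 << 13)))      # one bit flipped
print(decode(codeword ^ 0b101))          # two bits flipped
```

A word reported as uncorrectable would then be handled by the policy mentioned in the text, i.e., replaced by zero or by a value interpolated from its neighbours.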

3.4 Reliability Mode

As previously introduced, the APEX-SoC can increase system reliability by using multiple identical data processing stages in an N-out-of-M scheme. The number M of redundant stages that can be implemented is limited only by the amount of FPGA resources available on the fabric and the energy budget; however, Dual Modular Redundancy (DMR) or Triple Modular Redundancy (TMR) are typically used. In all cases, three redundant copies of the same science data are kept in the PL-DDR memory and replicated majority voters are connected both at the input and output of the M redundant processing stages as shown in Fig. 2. The input voters do not consider corrupted data that cannot be recovered using ECCs. When any of the output voters detects that all of its input results are different, a computation error is assumed and the processing of that science dataset is repeated. Computation errors can occur when radiation affects data registers and/or FPGA configuration [10]. While upsets in the data registers cannot be detected by the Xilinx SEM controller, these are automatically corrected when the data are reloaded for re-processing. The Xilinx SEM is still needed to deal with the corrupted configuration bits, as described in Sect. 3.3. The data-flow controller coordinates the access by the voters to the redundant data in the PL-DDR in a ping-pong fashion, so that the voted results do not overwrite the source data, in case the computation needs to be repeated.
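The voting and re-processing behaviour described above can be sketched in software as follows. This is a simplified word-level model rather than the replicated hardware voters of the APEX-SoC: the names `vote` and `run_redundant`, the use of `None` to mark a copy that the ECC logic flagged as uncorrectable, the retry limit, and the choice to accept a single surviving valid copy are all assumptions made for this example.

```python
from collections import Counter


def vote(copies):
    """Majority vote over redundant copies of one value.

    A copy equal to None is treated as flagged uncorrectable by the ECC
    logic and is ignored. Returns (value, True) on agreement and
    (None, False) when all valid copies differ or none is valid.
    """
    valid = [c for c in copies if c is not None]
    if not valid:
        return None, False
    value, count = Counter(valid).most_common(1)[0]
    if len(valid) > 1 and count == 1:
        return None, False  # every valid copy disagrees with the others
    return value, True


def run_redundant(stages, dataset, max_attempts=3):
    """Run all redundant stages on the same data; repeat on disagreement."""
    for _ in range(max_attempts):
        result, agreed = vote([stage(dataset) for stage in stages])
        if agreed:
            return result
    raise RuntimeError("redundant stages did not agree")


print(vote([5, 5, 7]))      # TMR with one faulty copy
print(vote([5, None, 5]))   # one copy discarded by ECC
print(vote([1, 2]))         # DMR mismatch: error detected, not corrected
```

The sketch makes the difference between the two schemes visible: with three stages a single faulty result is outvoted, whereas with two stages a mismatch can only be detected and the dataset has to be processed again.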

4 Case-Study: APEX-SoC-Based Controller of the JPL CIRIS Spectrometer

This section describes a proof-of-concept SoC implementation of a controller for the JPL CIRIS spectrometer using the APEX-SoC platform.

4.1 The JPL CIRIS Spectrometer

CIRIS is one of the new generation JPL instruments proposed to search for life indicators in icy moons, such as Europa [12]. It is based on the COTS instrument prototype described in [34], and it is a small, rugged and lightweight Fourier Transform Spectrometer (FTS) with a high Signal-to-Noise Ratio (SNR) in the near-IR to thermal-IR region (2–12 µm) where the strongest and most diagnostic vibrational bands of the compounds of interest in Europa are found (e.g., ‘CHNOPS’ functional groups). CIRIS can work at cryogenic temperatures from 70 to 130 K with the use of passive cooling methods while onboard a spacecraft. More importantly, as opposed to related instruments such as grating spectrometers (e.g., Galileo NIMS [35]), CIRIS has intrinsic immunity from radiation-induced noise, enabling it to perform mid-IR solar reflectance and thermal emission spectroscopy with limited interference from the radiation environment in Europa.

The major structural novelty introduced by CIRIS is the constant-velocity rotating refractor it uses to vary the optical path difference of the two rays in which incoming light is divided by a beam splitter at the entrance of the instrument (red and green rays in Fig. 3). The reflected rays in the rotating refractor recombine after travelling through the instrument, resulting in a fringe interference light pattern (interferogram) that is measured with a photo-detector (purple ray in Fig. 3). There are up to four regions over the course of a revolution of the refractor where the optical interference between the input light rays can be measured with a photo-detector. These regions are located at approximately 16\(^{\circ }\) arcs around the four positions where the refractor is parallel or perpendicular to the beam splitter. Note that the interferogram amplitude value is maximum in these four positions as all of the light rays travel the same distance along the spectrometer and recombine in phase at its output. This is why these positions are called Zero Path Difference (ZPD) positions. An optical incremental encoder mounted on the servomotor that drives the refractor’s rotation is used on the ground prototype of CIRIS to identify these regions. As the CIRIS refractor performs 6.5 revolutions per second, each interferogram spans over a period of 13.6 ms every 24.8 ms. The optics and functioning of CIRIS result in an interferogram with the high-amplitude values assembled in a narrow central burst, and small-amplitude values spanning the vast majority of the tail positions and carrying the spectral resolution information (see Fig. 4). The interferogram signal delivered by the photo-detector is conditioned, filtered and amplified to ±5V range prior to being digitized at 1 MSPS using the ADC available in the APEX-SoC. 
The interferogram samples are then processed via a Fast Fourier Transform (FFT) to produce a spectrum that shows the intensity of the wavelengths present in the light beam. This in turn makes it possible to determine the chemical composition of the sample or body under study by looking at the absorption lines in the spectrum. However, spectral leakage (e.g., “picket-fence” effect) and noise are also present in the spectrum because the interferograms are sampled digitally over a limited time, and need to be properly handled by the instrument electronics to produce meaningful results [36].

Although radiation has a small impact on the spectral content of CIRIS data, FTS data processing allows increasing the SNR of the instrument even further [37]. As shown in Fig. 4, the shape of the CIRIS interferogram allows the data processing to detect (and remove) most of the radiation hits that induce large current pulses (i.e., significantly greater than the nominal value) in the instrument’s photo-detectors. In Fig. 4, note there are two radiation hits at \(-266\) and \(-500\) µs.

At the moment there is a single photo-detector in the ground prototype of CIRIS; however, the flight version of CIRIS will be equipped with an array of up to 25 photo-detectors to increase the instrument’s spatial resolution and sensitivity in different IR bands. This will also increase the computation burden, as more interferograms will need to be processed within the same span of time (24.8 ms).

4.2 CIRIS Data Processing

This section describes the different processing stages that must be applied to the CIRIS interferogram data in order to produce meaningful spectroscopy results that can be interpreted by the scientists on Earth [36]. Figure 5 shows a block diagram of these stages as well as their interfaces with the APEX-SoC infrastructure. In this figure, note the two superposed main blocks that represent the dual data processing solution adopted in the CIRIS APEX-SoC to increase the performance and reliability.

The first stage prepares the digitized interferogram samples for subsequent processing by selecting 8,192 samples centered around the ZPD positions. This is done to deal with any temporal shift that might have occurred while sampling the interferogram.
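A minimal software sketch of this alignment step is given below. It locates the ZPD sample with one of the three detection methods that the chapter lists as configurable (most-negative, most-positive or most-magnitude) and cuts a window centred on it. The function names, the default method and the clamping of the window at the buffer edges are assumptions made for this example, not details of the FPGA core.

```python
def find_zpd(samples, method="most-magnitude"):
    """Index of the ZPD sample according to the selected detection method."""
    scores = {
        "most-positive": lambda v: v,
        "most-negative": lambda v: -v,
        "most-magnitude": abs,
    }
    score = scores[method]
    return max(range(len(samples)), key=lambda i: score(samples[i]))


def align_to_zpd(samples, window=8192, method="most-magnitude"):
    """Return `window` samples centred on the detected ZPD position."""
    if len(samples) < window:
        raise ValueError("not enough samples for the requested window")
    start = find_zpd(samples, method) - window // 2
    # Assumed policy: shift the window back inside the buffer if needed.
    start = max(0, min(start, len(samples) - window))
    return samples[start:start + window]


raw = [0, 1, 0, -9, 0, 2, 0, 0]
print(align_to_zpd(raw, window=4))                          # centred on -9
print(align_to_zpd(raw, window=4, method="most-positive"))  # centred on 2
```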

The second stage removes the DC offset in the ZPD aligned interferogram by subtracting its average value, which is computed using a Cumulative Moving Average (CMA).
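The DC removal can be illustrated with the usual cumulative moving average recurrence, \(\mathrm{CMA}_n = \mathrm{CMA}_{n-1} + (x_n - \mathrm{CMA}_{n-1})/n\), which needs only one accumulator per interferogram. The Python sketch below is a floating-point model with function names of our choosing; the fixed-point details of the hardware stage are not covered.

```python
def cumulative_moving_average(samples):
    """Mean of the samples computed incrementally, one sample at a time."""
    cma = 0.0
    for n, x in enumerate(samples, start=1):
        cma += (x - cma) / n
    return cma


def remove_dc(samples):
    """Subtract the average value from every sample of the interferogram."""
    mean = cumulative_moving_average(samples)
    return [x - mean for x in samples]


print(remove_dc([1.0, 2.0, 3.0, 6.0]))  # mean is 3.0
```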

The third stage implements a radiation hit filter to detect and remove the outliers in the interferogram provoked by radiation striking the CIRIS photo-detector. The radiation pulses at the output of the CIRIS transconductance amplifier, with a bandwidth of 100 kHz, are about 10 µs full width at half maximum and are easily recognized in the small-amplitude tail samples using statistics, namely the mean and variance (shown by triangles in Fig. 6) [37]. Radiation hit samples are then replaced by zeros without significantly modifying the spectral content of the interferogram. This property comes from the fact that interferogram points outside the central burst mainly carry redundant resolution information, and hence, removing a few points out of 8,192 leads to indistinguishable changes in the spectrum. In effect, each of the interferogram samples contributes only about 0.1 % to the spectrum. Note here that the undetectable radiation hits that are at or below the un-irradiated noise level spread their energy over all wavelengths and therefore average to a constant DC offset in the spectrum, which is removed in the second stage. The mean and variance statistics are computed on the tail samples of the interferogram using the Knuth algorithm [38].
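The following Python sketch illustrates one way such a filter could work. We assume that the "Knuth algorithm" refers to the single-pass mean and variance recurrence presented by Knuth and commonly attributed to Welford. The detection rule (a sample further than `k_sigma` standard deviations from the tail mean), the default threshold and the way the central burst is excluded are hypothetical choices made for this example; the chapter does not state the actual criterion.

```python
import math


def welford(values):
    """Single-pass mean and sample variance (Welford/Knuth recurrence)."""
    n, mean, m2 = 0, 0.0, 0.0
    for x in values:
        n += 1
        delta = x - mean
        mean += delta / n
        m2 += delta * (x - mean)
    variance = m2 / (n - 1) if n > 1 else 0.0
    return mean, variance


def filter_radiation_hits(samples, burst_half_width, k_sigma=5.0):
    """Zero tail samples that deviate strongly from the tail statistics.

    Samples within `burst_half_width` of the centre are treated as the
    central burst: they are excluded from the statistics and never zeroed.
    Returns the filtered samples and the indices of the detected hits.
    """
    centre = len(samples) // 2
    lo, hi = centre - burst_half_width, centre + burst_half_width

    def is_tail(i):
        return i < lo or i > hi

    mean, variance = welford(x for i, x in enumerate(samples) if is_tail(i))
    limit = k_sigma * math.sqrt(variance)
    filtered, hits = list(samples), []
    for i, x in enumerate(samples):
        if is_tail(i) and abs(x - mean) > limit:
            filtered[i] = 0.0
            hits.append(i)
    return filtered, hits


# Synthetic interferogram: small alternating tail, large central burst,
# and one injected "radiation hit" at index 20.
signal = [(-1.0) ** i for i in range(256)]
signal[128] = 500.0
signal[20] = 100.0
cleaned, hit_indices = filter_radiation_hits(signal, burst_half_width=8)
print(hit_indices)
```

Because the hit itself inflates the tail variance, a practical filter may need a second pass or a more robust statistic; the sketch keeps a single pass for clarity.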

The fourth stage (STAT Inter.) computes the variance and performs a CMA on successive interferograms detected around the same ZPD positions with the objective of estimating and increasing the SNR by removing the effect of high frequency and random noise. As in the third stage, the Knuth algorithm is used to calculate these statistics.
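The same single-pass recurrence can be applied sample by sample across successive interferograms, so that only a running mean and a running sum of squared deviations need to be kept per sample position. The class below is a software sketch of this idea, again assuming that the "Knuth algorithm" denotes the Welford recurrence; the class and method names are ours.

```python
class RunningStats:
    """Per-sample running mean and variance over successive interferograms."""

    def __init__(self, length):
        self.n = 0
        self.mean = [0.0] * length
        self.m2 = [0.0] * length

    def update(self, interferogram):
        """Fold one more interferogram into the running statistics."""
        if len(interferogram) != len(self.mean):
            raise ValueError("interferogram length mismatch")
        self.n += 1
        for i, x in enumerate(interferogram):
            delta = x - self.mean[i]
            self.mean[i] += delta / self.n
            self.m2[i] += delta * (x - self.mean[i])

    def variance(self):
        """Sample variance at each position (zeros until two trials exist)."""
        if self.n < 2:
            return [0.0] * len(self.mean)
        return [m / (self.n - 1) for m in self.m2]


stats = RunningStats(2)
for trial in ([1.0, 10.0], [3.0, 10.0], [5.0, 10.0]):
    stats.update(trial)
print(stats.mean, stats.variance())
```

For uncorrelated random noise, averaging N interferograms in this way reduces the noise standard deviation by roughly a factor of \(\sqrt{N}\), which is the SNR gain this stage is after.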

The fifth stage apodizes the averaged interferograms at the edges of the sampled regions to minimize the effects of spectral leakage.
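As an illustration only, the sketch below tapers both ends of an interferogram with a raised-cosine ramp and leaves the central samples untouched. The chapter states that the apodizing function is configurable but does not name the one used, so the raised-cosine shape, the `taper_len` parameter and the function names are assumptions made for this example.

```python
import math


def edge_taper(length, taper_len):
    """Window equal to 1 in the middle with raised-cosine ramps at both ends."""
    if 2 * taper_len > length:
        raise ValueError("taper regions overlap")
    window = [1.0] * length
    for i in range(taper_len):
        gain = 0.5 * (1.0 - math.cos(math.pi * i / taper_len))
        window[i] = gain
        window[length - 1 - i] = gain
    return window


def apodize(samples, taper_len):
    """Multiply the interferogram by the edge taper."""
    window = edge_taper(len(samples), taper_len)
    return [s * g for s, g in zip(samples, window)]


print(apodize([1.0] * 8, taper_len=2))
```

Smoothing the edges in this way avoids the abrupt truncation of the sampled region, which is the source of the spectral leakage that the stage is meant to reduce.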

The sixth stage computes the FFT on the interferogram. To increase the spectral resolution, this stage adds 4,096 zeros to each of the tails of the interferogram to obtain 8,192 additional interpolated spectrum points in-between the original nonzero-filled spectrum data, that is, 16,384 total spectrum points. This zero padding allows us to reduce the erroneous signal due to the “picket-fence” effect by up to 36 % [39].
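The effect of zero filling can be reproduced with a small software model. The sketch below pads an interferogram symmetrically and computes its spectrum with a textbook recursive radix-2 FFT written in pure Python; it is meant to show the doubling of spectrum points, not to reflect the FFT core used on the FPGA. The function names are ours, and the input length is assumed to be a power of two.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def zero_fill(samples, pad_each_side):
    """Append `pad_each_side` zeros to each tail of the interferogram."""
    pad = [0.0] * pad_each_side
    return pad + list(samples) + pad


def spectrum_magnitude(samples, pad_each_side=0):
    """Magnitude spectrum of the (optionally zero-filled) interferogram."""
    return [abs(c) for c in fft(zero_fill(samples, pad_each_side))]


# 8,192 samples plus 4,096 zeros per tail give 16,384 spectrum points.
print(len(zero_fill([0.0] * 8192, 4096)))

# Small demonstration: one cosine cycle over 8 samples, padded to 16.
tone = [math.cos(2 * math.pi * n / 8) for n in range(8)]
plain = spectrum_magnitude(tone)
padded = spectrum_magnitude(tone, pad_each_side=4)
print(len(plain), len(padded))
```

In the small demonstration, bin 1 of the unpadded spectrum reappears at bin 2 of the padded one with the same magnitude, and the odd-numbered bins are the interpolated points that fall in-between the original ones.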

The seventh stage relies on the Knuth algorithm to compute the variance and CMA on the spectra resulting from the successive interferograms detected around the same ZPD positions.

The eighth and ninth stages are intended to correct the deviations caused by the variation of the CIRIS refractor’s refractive index with wavelength and by minor dissimilarities in the CIRIS optics between the four interferogram acquisition regions.

All of the processing stages described in this section are runtime configurable from Earth to adapt to potentially unexpected conditions when exploring distant planetary bodies. Some of the parameters that can be configured include: (1) the method to detect the position of the ZPD sample (e.g., most-negative, most-positive or most-magnitude) in the ZPD alignment core, (2) the number of trials to be averaged and the requirement to compute or not the variance in the STAT cores, (3) the apodizing function in the apodization core, and (4) the requirement for zero-filling (16,384 spectrum points) or not (8,192 spectrum points) in the FFT core.

It is important to note here that the interferograms detected in the photo-detector array that will be available in the flight version of CIRIS are independent of each other, and hence, the processing stages presented above are suitable for a parallel implementation on the APEX-SoC. Currently we simulate the photo-detector array by copying multiple times (to different PL-DDR memory segments) the same interferogram samples digitized by the single ADC in the system.

4.3 CIRIS Data Processing Integration into the APEX-SoC Infrastructure

All of the processing stages presented in Sect. 4.2 are implemented on the FPGA fabric, except stages 7 and 8, which run as software routines in the ARM processor because they involve floating-point operations. Two instances of the whole CIRIS data processing are integrated into the APEX-SoC infrastructure, as shown in Fig. 5. As previously mentioned, these can be used to boost performance or to improve reliability (i.e., DMR scheme), depending on the mission’s requirements at each time. For this specific scenario, a crossbar with 13 communication channels is created and the associated assembler routines in the data-flow controller are appropriately tailored for the data transfers required by the CIRIS processing stages.

5 Results

This section summarizes the implementation, performance and irradiation results we have collected so far in the APEX-SoC-based CIRIS controller.

5.1 Implementation

The amount and type of resources consumed by the APEX-SoC infrastructure and the CIRIS data acquisition and processing modules when implemented on a Zynq 7Z100 device are detailed in Table 1. The most important implementation aspect to note here is the spatial isolation of the CIRIS modules within the FPGA fabric, which has been carried out following the Xilinx Isolation Design Flow (IDF) [40]. This increases the availability of the system by preventing the situation where a single charged particle corrupts multiple processing stages in the FPGA fabric. As shown in Fig. 7, the crossbar and the data-flow controller are mapped in-between the two processing stages, forming a fence. It is also important to note the small footprint of the voters compared to the processing stages, in the range of hundreds of LUTs and flip-flops, which minimizes the chances of their being corrupted by radiation.

The power consumption reported by Xilinx Vivado design tool for the whole APEX-SoC-based CIRIS controller is approximately 5 W, which is about 3 W less than that of an equivalent board based controller.

The APEX-SoC uses up to 256 MB in the PL-DDR memory (approximately 25 % of the total DDR memory capacity) to process 25 interferograms simultaneously. The memory is arranged into several data segments across different categories, each containing a given type of data (e.g., raw interferogram, interferogram mean/variance, spectrum amplitude mean/variance or spectrum phase mean/variance) related to the information detected by a given photo-detector when the rotating refractor was in a given position. In addition, as explained in Sect. 3.4, each data item is stored three times in the PL-DDR memory in different TMR data pools to increase reliability, and each TMR pool is itself replicated two times (A and B) to allow for data re-processing, if needed.

5.2 Performance

Table 2 shows the performance results measured when the FPGA processing logic is clocked at 200 MHz and both DDR memories and ARM Cortex-A9 processor run at 800 MHz. As shown in Fig. 8, the parallelism provided by the dual data processing channels in the APEX-SoC and the efficient PL-DDR memory access schedule allow for processing two interferograms every 460 µs, with a latency of 867 µs. Up to 4.6 GB/s of the total PL-DDR bandwidth (approx. 85 %) are allocated to processing and the long latency introduced by the radiation hit filter and the FFT computation is hidden by overlapping parallel processing and PL-DDR data transfers. As a result, the APEX-SoC almost quadruples the processing requirements of the flight CIRIS spectrometer as it is able to process about a hundred interferograms within the time span the refractor in the instrument takes to get between consecutive ZPD positions (24.8 ms). On the other hand, when using the two redundant data processing channels to increase reliability (i.e., DMR scheme), the APEX-SoC-based CIRIS controller is still able to fulfill the processing requirement for the next-generation of CIRIS, requiring up to 900 µs to process each interferogram.
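The throughput margins quoted above can be checked with a few lines of arithmetic. The Python sketch below is a steady-state estimate that ignores the 867 µs pipeline latency and counts only complete processing periods within the 24.8 ms window; the constant and function names are ours.

```python
WINDOW_US = 24_800        # time between consecutive ZPD positions
FLIGHT_DETECTORS = 25     # interferograms per window in the flight CIRIS


def interferograms_per_window(batch_size, batch_period_us, window_us=WINDOW_US):
    """Interferograms completed in one window at a steady processing rate."""
    return batch_size * (window_us // batch_period_us)


parallel = interferograms_per_window(2, 460)  # two channels in parallel
dmr = interferograms_per_window(1, 900)       # two channels in DMR

print(parallel, round(parallel / FLIGHT_DETECTORS, 2))
print(dmr, dmr >= FLIGHT_DETECTORS)
```

With these figures the parallel mode completes 106 interferograms per window, a little over four times the 25 required, while the DMR mode completes 27, which still covers the flight requirement with a small margin.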

5.3 Robustness Against Radiation

A radiation test was conducted at JPL using a \(^{60}\)Co \(\gamma \)-ray source (1 rad/s) directed toward the CIRIS photo-detector, which was operated at 77 K to bring the detector noise below the level of the radiation hit pulses. The hit rate in this test was approximately 3,400 hits per second, exceeding what is expected in the Europa mission by at least a factor of three. Figure 9 shows the results obtained, where the blue line represents the values measured without radiation, the purple line represents the values measured with radiation and the yellow line represents the values measured with radiation when the radiation hit filter was enabled. As shown in Fig. 9a, the radiation hit filter stage reduces the distortion of the line shape and spectrum caused by radiation while leaving all other spectral components unaltered. Note that the high artificial “emission” peaks in this spectrum come from electrical noise generated by the vacuum pumps in the laboratory. These results are an important step towards building an instrument that can cope with Europa-like radiation, which deteriorated the spectroscopy data collected by NASA’s previous-generation NIMS spectrometer aboard the Galileo spacecraft more than a decade ago [35]. In addition, as shown in Fig. 9b, the adopted mitigation solution increases the instrument SNR by eliminating the noise due to radiation hit pulses.

We have not yet conducted any specific experiment to test the implemented fault-tolerance features, as these techniques are well known and proven to be effective. We plan to port the APEX-SoC-based CIRIS instrument described in this chapter to a radiation-hardened Xilinx SoC as soon as this technology is available, and to test the design in a simulated Europa-like thermal and radiation environment.

6 Conclusions and Future Work

This chapter has presented ongoing research conducted by NASA’s Jet Propulsion Laboratory (JPL) and ARM to develop a SoC platform (APEX-SoC) to power instrument avionics in future space exploration missions. This platform significantly reduces the size and power consumption of the instrument avionics, as most of the electronics required for science processing of the instrument data fit in a single chip. At the moment this platform is prototyped using a commercial Xilinx Zynq SoC, in which a number of fault-tolerance mechanisms have been implemented. The expectation is to port this design to a radiation-tolerant SoC part that might become available in the near future. The chapter has presented a case study in which the APEX-SoC prototype is used to process data delivered by a JPL spectrometer (CIRIS). Finally, the chapter has discussed the implications of using SoC technology in future space missions.

Future work at ARM will focus on making the processor more resilient to radiation. Specifically, a thorough study of the ARM Cortex-R5 microarchitecture will be conducted to identify the parts that are most vulnerable and to choose the most suitable fault-tolerance technique for each of these parts without compromising the area and power efficiency of the ARM architecture. At the processor architecture level, a fail-operational Triple Cortex-R5 Core Lock-Step (TCLS) processor will be developed [8]. JPL looks forward to using the APEX-SoC platform with other space instruments.

In addition to the research described in this chapter on the design of next-generation space instrument avionics, JPL is also working in collaboration with the Goddard Space Flight Center (GSFC) and the Air Force Research Laboratory (AFRL) on designing a next-generation high-performance spaceflight processor based on a dual quad-core ARM Cortex-A53 [41].

References

Blem, E., Menon, J., Sankaralingam, K.: A detailed analysis of contemporary ARM and x86 architectures. Technical report, University of Wisconsin - Madison (2013)

Mehta, N.: Xilinx 7 Series FPGAs: the logical advantage. Xilinx WP405 (2012)

Xilinx Inc.: Zynq-7000 All Programmable SoC: Technical Reference Manual, UG585 (2015)

Wiens, R.C., et al.: The ChemCam instrument suite on the Mars Science Laboratory (MSL) Rover: body unit and combined system tests. Space Sci. Rev. 170, 167–227 (2012). Springer

Petrick, D., Gill, N., Hassouneh, M., Stone, R., Winternitz, L., Thomas, L., Davis, M., Sparacino, P., Flatley, T.: Adapting the SpaceCube v2.0 data processing system for mission-unique application requirements. In: Proceedings of the NASA/ESA Conference on Adaptive Hardware and Systems (AHS 2015) (2015)

BAE Systems Plc.: RAD750 Radiation-Hardened PowerPC Microprocessor (2008)

Doyle, R., Some, R., Powell, W., Mounce, G., Goforth, M., Horan, S., Lowry, M.: High performance spaceflight computing (HPSC) next-generation space processor (NGSP): a joint investment of NASA and AFRL. In: Proceedings of the Workshop on Spacecraft Flight Software (2013)

Iturbe, X., Venu, B., Ozer, E., Das, S.: A triple core lock-step (TCLS) ARM Cortex-R5 microprocessor for safety-critical and ultra-reliable applications. In: Proceedings of the IEEE/IFIP International Conference on Dependable Systems and Networks (2016)

Ebrahimi, M., Evans, A., Tahoori, M.B., Costenaro, E., Alexandrescu, D., Chandra, V., Seyyedi, R.: Comprehensive analysis of sequential and combinational soft errors in an embedded processor. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 34(10) (2015)

Kastensmidt, F.L., Carro, L., Reis, R.: Fault-Tolerance Techniques for SRAM-Based FPGAs. Springer, Heidelberg (2006)

Berisford, D.F., Hand, K.P., Younse, P.J., Keymeulen, D., Carlson, R.W.: Thermal testing of the compositional infrared imaging spectrometer (CIRIS). In: Proceedings of the International Conference on Environmental Systems (2012)

Carlson, R.W., Hand, K.P., Berisford, D.F., Keymeulen, D.: The compositional infrared interferometric spectrometer (CIRIS) for assessing the habitability of Europa. In: Proceedings of the American Geophysical Union Fall Meeting (2013)

Xilinx Inc.: UltraScale Architecture and Product Overview, DS890 (2015)

Xilinx Inc.: Radiation-Hardened, Space Grade Virtex5-QV FPGA Data Sheet: DC and AC Switching Characteristics, DS692 (2015)

Microsemi Inc.: Radiation-Tolerant ProASIC3 Low Power Spaceflight Flash FPGAs with Flash Freeze Technology (2012)

Microsemi Inc.: RTAX-S/SL and RTAX-DSP Radiation-Tolerant FPGAs (2015)

Cobham Gaisler: LEON3-FT SPARC V8 Processor Data Sheet and User’s Manual (2013)

Rudolph, D., Wilson, C., Stewart, J., Gauvin, P., George, A., Lam, H., Crum, G., Wirthlin, M., Wilson, A., Stoddard, A.: CHREC space processor: a multifaceted hybrid architecture for space computing. In: Proceedings of the AIAA/USU Conference on Small Satellites (2014)

Mandl, D.: Intelligent payload module update. In: Proceedings of the HyspIRI Symposium (2015)

Wilson, C., Stewart, J., Gauvin, P., MacKinnon, J., Coole, J., Urriste, J., George, A., Crum, G., Timmons, E., Beck, J., Flatley, T., Wirthlin, M., Wilson, A., Stoddard, A.: CSP hybrid space computing for STP-H5/ISEM on ISS. In: Proceedings of the AIAA/USU Conference on Small Satellites (2014)

Kanekal, S., Jones, A., Randol, B., Patel, D., Summerlin, E., Gorman, E., Crum, G., Nolfo, G.D., Paschalidis, N., Heyward, S., Riall, S.: CeREs: a Compact Radiation bElt Explorer (2014)

Wirthlin, M.: Neutron radiation test results of the Linux operating system executing within the CHREC space processor (CSP). In: Proceedings of the Military and Aerospace Programmable Logic Device International Conference (MAPLD 2015) (2015)

Amrbar, M., Irom, F., Guertin, S.M., Allen, G.: Heavy Ion single event effect measurements of Xilinx Zynq-7000 FPGA. In: Proceedings of the Radiation Effects Data Workshop (REDW 2015) (2015)

Swift, G.: Investigation of high current events in 28 nm 7-series FPGAs. In: Proceedings of the Military and Aerospace Programmable Logic Device International Conference (MAPLD 2015) (2015)

Koszek, W.A., Lesea, A., Steiner, G., White, D., Maillard, P.: Challenges in assessing single event upset impact on processor systems. In: Proceedings of the Workshop on Silicon Errors in Logic and System Effects (SELSE 2015) (2015)

ARM Ltd.: Cortex-A9 NEON Media Processing Engine: Technical Reference Manual (2011)

Xilinx Inc.: Zynq All Programmable SoC Linux-FreeRTOS AMP Guide, UG978 (2013)

Xilinx Inc.: AXI DataMover User Guide, PG022 (2014)

Chapman, K.: PicoBlaze for Spartan-6, Virtex-6, 7-Series, Zynq and UltraScale Devices (KCPSM6) (2014)

Xilinx Inc.: LogiCORE IP AXI XADC v1.00a Product Guide, PG019 (2012)

Xilinx Inc.: Soft Error Mitigation Controller, PG036 (2014)

Wadsworth, W., Dybwad, J.P.: Rugged high-speed rotary imaging Fourier transform spectrometer for industrial use. In: Proceedings of the International Society for Optics and Photonics Conference on Environmental and Industrial Sensing (2002)

Carlson, R.W., Weissman, P., Smythe, W., Mahoney, J.: Near-infrared mapping spectrometer experiment on Galileo. Space Sci. Rev. 60, 457–502 (1992). Springer

Saptari, V.: Fourier Transform Spectroscopy Instrumentation Engineering, vol. 61. SPIE Press, Bellingham (2004)

Yiu, P., Iturbe, X., Keymeulen, D., Berisford, D., Hand, K.P., Carlson, R.W., Wadsworth, W., Levy, R.: Adaptive controller for a Fourier transform spectrometer with space applications. In: Proceedings of the IEEE Aerospace Conference (2015)

Knuth, D.E.: The Art of Computer Programming: Seminumerical Algorithms, vol. 2. Addison-Wesley, Boston (1998)

Gronholz, J., Herres, W.: Understanding FT-IR data processing. Instrum. Comput. 3(10), 1–23 (1985)

Hallett, E.: Isolation Design Flow for Xilinx 7 Series FPGAs or Zynq-7000 AP SoCs (ISE Tools), Xilinx XAPP1086 (2015)

Statement of Work (SOW) for the Development of the High Performance Space Computing (HPSC) Processor. http://prod.nais.nasa.gov/eps/eps_data/167836-DRAFT-001-001.pdf

Acknowledgment

The research described in this chapter was carried out at the Jet Propulsion Laboratory, California Institute of Technology, under a contract with the National Aeronautics and Space Administration (NASA). Xabier Iturbe is funded by the European Commission’s FP7 Marie-Curie International Outgoing Fellowship Program under Project No. 627579.

Copyright information

© 2016 IFIP International Federation for Information Processing

About this paper

Cite this paper

Iturbe, X. et al. (2016). On the Use of System-on-Chip Technology in Next-Generation Instruments Avionics for Space Exploration. In: Shin, Y., Tsui, C., Kim, JJ., Choi, K., Reis, R. (eds) VLSI-SoC: Design for Reliability, Security, and Low Power. VLSI-SoC 2015. IFIP Advances in Information and Communication Technology, vol 483. Springer, Cham. https://doi.org/10.1007/978-3-319-46097-0_1

DOI: https://doi.org/10.1007/978-3-319-46097-0_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46096-3

Online ISBN: 978-3-319-46097-0

eBook Packages: Computer Science (R0)