Abstract

Uncertainty affects many crucial issues the world is facing today, from climate change prediction to scientific modelling and the interpretation of medical data. Decisions typically rely on data that can be aggregated from different sources and further transformed using a variety of algorithms and models. Such data processing pipelines involve different types of uncertainty. As visual data representations can mediate between human cognition and computational models, a trustworthy conveyance of data characteristics requires effective representations of uncertainty that take productivity and cognitive abilities, as important human factors, into account. We summarize findings from prior work on interactive uncertainty visualizations. Subsequently, we present an evaluation study that investigates the effect of different visualizations of uncertain data on users’ efficiency (time, error rate) and subjectively perceived cognitive load. A table, a static graphic, and an interactive graphic containing uncertain data were compared. The results of an online study (N = 146) showed a significant difference in task completion time between visualization types, while there were no significant differences in error rate. A non-parametric Kruskal-Wallis test found a significant difference in subjective cognitive load [H(2) = 7.39, p < 0.05]. Subjectively perceived cognitive load was lower for the static and interactive graphics than for the numerical table. Given that the shortest task completion time was achieved with the static graphic representation, we recommend it for use cases in which uncertain data must be used time-efficiently.


1 Introduction

Exploring and analyzing data is often realized with the help of algorithms. Information and data visualization can help users interpret the results these algorithms produce, e.g., by reducing information overload and helping people understand their data. While many types of data are visualized to make them more understandable, uncertainty measures are often excluded from these visualizations to limit visual complexity and clutter, which may lead to misunderstandings, false interpretations, or further information overload. However, uncertainty is an essential component of the data, and a trustworthy representation requires it as an integral part of data visualization. Visualizing uncertain data requires us first to look at different kinds of visual representation and different types of uncertainty. While the graphical attributes are assigned by the visualization developer, the type of uncertainty is often inherent in the data as a predefined feature. Common types of uncertainty include errors within data, accuracy level, credibility of the information source, subjectivity, non-specificity, and noise [1]. Pang et al. use a threefold distinction into statistical uncertainty, error, and range, caused by data acquisition, measurements, numerical models, and data entry [2], while Skeels et al.’s domain-independent classification of uncertainties includes (1) inference uncertainty resulting from the imprecision of modelling methods such as probabilistic modelling, hypothesis testing, or diagnosis, (2) completeness uncertainty due to missing values, sampling, or aggregation errors, and (3) measurement uncertainty caused by imprecise measurement [3].

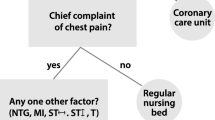

Like all data, uncertain data can be represented by mapping numerical values to graphical attributes, so that an abstract concept can be expressed in a visualization. Uncertainty values can, for example, be represented in tables, by glyphs, by geometric attributes, or by colour [2] (Fig. 1).

Fig. 1. A table was the baseline in our experiment (left). It was compared to the static visualization condition (right) and the interactive visualization condition (Fig. 2).

In addition, uncertainty measures can be represented by error bars, boxplots, or Tufte quartile plots, for example. In principle, any graphical attribute can be selected to represent an uncertain value. This so-called graphical mapping is often driven by the personal preferences and data experience of the developer or designer [4], by the search for an innovative solution [5], or by user requirements. While user-requirement studies lead to domain-specific and task-dependent visualizations, domain-independent studies of how distinct visualizations influence human aspects could enable visualization developers and researchers to base their design decisions on empirical evidence. Typical design questions include “Should I use a static or an interactive visualization?”, “Should I use a table instead of a graphic?”, and “Is cognitive effort lower if I use a bar chart or a line graph?”. We believe that such design decisions can be supported by general recommendations resulting from visualization evaluation studies. Unlike domain-specific, user-centered design studies, evaluation studies aim at domain-spanning results, thus permitting conclusions about the fundamental effects of distinct visualization characteristics. This paper illustrates both approaches. First, we derive human aspects relevant to domain-specific visualizations of a marine ecology video retrieval system. Subsequently, we present an example of a more general visualization evaluation that aims to provide general recommendations supporting design decisions in different application fields.

This paper is structured as follows: Sect. 2 summarizes human aspects relevant during the domain-specific development of uncertainty visualizations (marine ecology), Sect. 3 outlines the problem and research questions, followed by a method description in Sect. 4 and experimental results in Sect. 5. We end with a discussion and a conclusion in Sect. 6.

2 Related Work

A user study on trust and data provenance revealed that, to be accepted for scientific use, the video analysis tool must provide data provenance information describing the data collection methods (e.g., sampling methods) as well as the data processing methods and their potential errors (e.g., computer vision software and its confusion matrices). This would allow results to be compared with traditional statistical methods and increase trust in the results [6]. A related study revealed, however, that a high level of user confidence can be subjectively influenced by the visualization. Over-simplifying the visualization could lead to adverse effects such as attention tunneling, memory loss, induced misinterpretations, and unawareness of crucial information, which can resemble the effects of uncertainty [7]. Another study [8] argued that simplifying expert-oriented visualizations of uncertainty makes misinterpretations of computer vision errors less likely. It remains unclear whether these findings apply to other scenarios and how visualization characteristics influence user confidence. It can be stated, however, that usability evaluation criteria such as efficiency, effectiveness, intuitiveness, intelligibility, and trust, which are regularly considered in domain-specific research, are pivotal as a basis for evaluations supporting design decisions. Designing visualization characteristics according to human cognitive characteristics is expected to improve usability [9]. As cognitive processes are complex to analyze and measure, a more feasible approach is to measure subjectively perceived cognitive load. Cognitive load is strongly related to effectiveness, error rates, and learning times [10]. Measured either by EEG or by subjective evaluation, it has been found to differ between visualization techniques. It remains unclear whether different visualizations of uncertain data also lead to differences in cognitive load [11, 12].

The difference between user-centered design and an ergonomic evaluation is that the former aims at interface development, while the latter produces general recommendations supporting design decisions. Results of evaluation studies can reduce the effort of user-centered development activities without completely replacing them. General recommendations for the selection of visualization types require ergonomic evaluations of common visualization types, which usually apply different measures to obtain a true understanding of the strengths and weaknesses of each visualization in the context of a specific task. Performance data provides evidence of the user’s ability to use the visualization. Subjective data reflects the user’s opinion and complements the performance data, as higher subjective ratings often correlate with better performance. Data visualization research focuses on the development of tools for specific tasks or specific user types, and research on domain-independent recommendations for representations of uncertain data is rare. Ergonomics has mainly been involved at an a posteriori stage [13–15], while a large part of the work focuses on creating new visual representations and interaction techniques [16], data representations [17, 18], or mathematical foundations [19, 20].

3 Research Question and Hypotheses

The following study therefore aims to generate recommendations for selecting among distinct uncertainty visualization types in terms of task completion time, error rate, and cognitive effort by testing the following hypotheses: H1: There is a significant difference in task completion time per visualization type. H2: There is a significant difference in error rate per visualization type. H3: There is a significant difference in cognitive load per visualization type.

4 Method

To test our hypotheses, we chose a between-groups design with visualization type as the independent variable and task completion time, error rate, and cognitive effort as the dependent variables. Participants accessed an experiment website that provided a task description and a task page on which they had to make a decision with the help of a visualization. Afterwards, they filled in a survey documenting their subjectively perceived cognitive load and demographic data.

Procedure. In the first phase of the experiment, participants had to accomplish a task using a visualization of uncertain data within the interface of a website. After participants had read an introduction, the visualization was shown. Participants had to look at the visualization and make a decision based on the information they could derive from it. The time each participant took to reach a decision, as well as the decision itself, was recorded. In the second phase, participants filled in a questionnaire examining cognitive effort.

Task. Weather prediction was chosen as the task topic because it provides uncertain data that is likely to be easily understood by all participants. We expected it to deliver the most domain-independent results because it concerns a major part of the population to which we want to generalize our results. The type of uncertainty studied was inference uncertainty [3]. The task required participants to choose one out of fourteen days to go sailing. Using one of the three visualizations, they were asked to select the day with the highest estimated wind force and the lowest uncertainty. Daily wind force and uncertainty values were provided, together with one radio button per day as selection options (see Fig. 2).

Data Set. Weather forecasts usually cover the next five to seven days and rarely contain explicit uncertainty values or intervals; if they do, only the likelihood of a weather phenomenon is reported (e.g., 60 % chance of wind). The 10-day ensemble weather predictions published by the KNMI [21] were therefore more suitable for our purpose. The original ensemble predictions show one expected wind value per day together with the minimum and maximum values within that day. The wider the interval between minimum and maximum estimated wind force, the more uncertain the estimated wind value. We created an artificial data set because, in real predictions, uncertainty increases the further ahead a predicted value lies, which would make any uncertainty-related decision too easy. The data kept the structure of the ensemble data (minimum, maximum, and estimated wind value) and were shaped according to different possible solution scenarios. To make the task more difficult, the original data were extended to fourteen days. Ten days were given a low (2–4 m/s) to medium (4–6 m/s) wind force, serving as distracting noise. Wind forces in the upper third (6–8.5 m/s; days 4, 7, 13, and 14) were designed to increase the attention participants had to invest to solve the task. These scenarios formed the basis for the error rate computation.
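The trade-off built into the task can be sketched in Python. This is a hypothetical illustration, not the study's material: the names `DayForecast`, `dominates` and `non_dominated`, and all wind values below are invented. It uses the width of the min-max interval as the uncertainty measure described above and lists the days that no other day beats on both criteria at once (a higher or equal estimate together with a narrower or equal interval).

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DayForecast:
    """One forecast day; all wind values in m/s (hypothetical structure)."""

    day: int
    estimate: float  # expected wind force
    minimum: float  # lowest predicted wind force for the day
    maximum: float  # highest predicted wind force for the day

    @property
    def interval(self) -> float:
        # A wider min-max interval means a more uncertain estimate.
        return self.maximum - self.minimum


def dominates(a: DayForecast, b: DayForecast) -> bool:
    """True if day a is no worse than day b on both criteria and better on one."""
    no_worse = a.estimate >= b.estimate and a.interval <= b.interval
    better = a.estimate > b.estimate or a.interval < b.interval
    return no_worse and better


def non_dominated(days: List[DayForecast]) -> List[int]:
    """Days that no other day dominates, i.e. the candidate trade-off answers."""
    return [d.day for d in days if not any(dominates(o, d) for o in days)]


# Invented values loosely following the scenario design; NOT the study data.
forecasts = [
    DayForecast(1, 3.0, 2.0, 4.0),
    DayForecast(2, 5.0, 4.0, 6.5),
    DayForecast(4, 7.5, 6.8, 8.2),
    DayForecast(7, 7.0, 5.8, 8.6),
    DayForecast(13, 8.3, 5.5, 9.9),
    DayForecast(14, 6.5, 4.0, 9.0),
]
print(non_dominated(forecasts))  # [4, 13]
```

With these toy values, a narrow-interval day (4) and a highest-estimate but wide-interval day (13) both survive, which illustrates why a day like 13 can be argued to be an acceptable second answer even though the task asks for both high wind and low uncertainty.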

Visualizations. Based on our artificially created data set, three visualizations were designed. If a visualization is defined as a visually structured representation that supports the perception of its content, a table is the simplest form of visualization. The table was designed without any colour or graphics in order to distinguish it as much as possible from the graphics. In the graphic visualization, the estimated wind value was represented by a horizontal line. A dotted horizontal line style was used for the minimum and maximum estimated wind values, creating a contrast with the line for the estimate. The interactive visualization differed from the static one only by its interface control elements; all graphic attributes were retained. Each of the dimensions (time, estimated wind force, and maximum and minimum estimated wind force) was given a control component, even where this did not contribute to the answer. Each wind value could be restricted by sliders, and particular days could be selected with checkboxes.
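The slider and checkbox behaviour of the interactive condition can be sketched as a simple filter. This is a hypothetical Python sketch, not the study's implementation; the names `Row` and `apply_filters`, the range dictionary, and the values are assumptions for illustration. Each slider restricts one attribute to a closed range, ticked checkboxes restrict the set of days, and a row stays visible only if it passes every active control.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple


@dataclass(frozen=True)
class Row:
    """One day of a hypothetical forecast table, values in m/s."""

    day: int
    estimate: float
    minimum: float
    maximum: float


def apply_filters(
    rows: List[Row],
    ranges: Dict[str, Tuple[float, float]],
    selected_days: Optional[Set[int]] = None,
) -> List[Row]:
    """Return the rows that satisfy every slider range and the day selection.

    `ranges` maps an attribute name ("estimate", "minimum", "maximum") to a
    (low, high) slider position; attributes without an entry are unrestricted.
    `selected_days` is None when no checkbox is ticked.
    """
    visible = []
    for row in rows:
        if selected_days is not None and row.day not in selected_days:
            continue  # day not ticked
        if all(low <= getattr(row, attr) <= high for attr, (low, high) in ranges.items()):
            visible.append(row)
    return visible


rows = [Row(4, 7.5, 6.8, 8.2), Row(7, 7.0, 5.8, 8.6), Row(13, 8.3, 5.5, 9.9)]
print([r.day for r in apply_filters(rows, {"estimate": (7.2, 10.0)})])  # [4, 13]
print(apply_filters(rows, {"maximum": (0.0, 5.0)}))  # [] (nothing left to show)
```

An over-restrictive combination of controls returns an empty list, which corresponds to the empty screen some participants reported as the only feedback; a real interface would probably need to explain such a state.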

Questionnaire. Cognitive effort was examined using the definition of cognitive effort from the SWAT (Subjective Workload Assessment Technique). This questionnaire tool considers cognitive effort as the amount of concentration (Q8) and automatism (Q13) required by a certain task [4]. The following items of the SWAT “cognitive load” subscale were included: Q7: “The visualization caused low cognitive effort.” Q8: “Very little concentration was required to come to a decision.” Q13: “Coming to a decision was quite automatic.” Answers were given on a 5-point Likert scale (1 = “totally agree”). Additionally, participants answered demographic and usability questions.
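Scoring such a subscale and checking its internal consistency can be sketched as below. This is a hypothetical Python sketch with invented responses; the function names are ours. Since all three items are worded so that agreement (1) indicates low load, we assume no item needs reverse coding, so a lower mean means lower perceived cognitive load. Cronbach's α is computed as k/(k - 1) · (1 - Σ item variances / variance of the total score).

```python
from statistics import mean, variance
from typing import List


def subscale_score(answers: List[int]) -> float:
    """Mean of one participant's answers to Q7, Q8 and Q13 (1 = totally agree)."""
    return mean(answers)


def cronbach_alpha(responses: List[List[int]]) -> float:
    """Cronbach's alpha for a participants-by-items matrix of Likert scores.

    Uses sample variances for both the items and the summed total score.
    """
    k = len(responses[0])
    item_variances = [variance([r[i] for r in responses]) for i in range(k)]
    total_variance = variance([sum(r) for r in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Invented answers (Q7, Q8, Q13) from five hypothetical participants.
responses = [[1, 2, 1], [2, 2, 3], [4, 3, 4], [3, 4, 3], [5, 4, 5]]
print([round(subscale_score(r), 2) for r in responses])
print(round(cronbach_alpha(responses), 3))
```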

Participants. Participants (N = 146) were recruited in two ways. One part was randomly selected from research and non-research environments at 5 different locations on 5 different days; the arbitrary moment at which participants walked into the experiment situation determined their assignment, which was thus effectively random. The other part was recruited by distributing the link to the experiment website on research and non-research platforms on the internet (Facebook, Twitter, ResearchGate, LinkedIn, Xing) and by sending it to 4 mailing lists at the University of Amsterdam and at the national research institute for mathematics and computer science in the Netherlands (CWI). 107 participants (interactive: n = 40, static: n = 33, table: n = 34) also filled in the questionnaire. Of these, 68 % were male and 32 % female, and they were highly educated (77 % with a university degree or PhD). Based on self-reports, 56 participants were identified as data experts and 45 as data novices. The mean age was 29.3 years (SD = 4.3 years). Excluding defective entries and incomplete answers led to a reduced sample size for some of the dependent variables.

5 Results

We define an effective and efficient visualization of uncertain data as a visualization leading to a correct decision in the shortest amount of time with the least cognitive effort. We analyzed the task completion time, task error rate, and cognitive effort for the table (TAB), static graphic (SG), and interactive graphic (IG).

Task Time. Average task time differed by visualization: with IG, participants took 2:19 min on average to reach a decision, compared with only 1:18 min with SG and 1:19 min with TAB. Overall, experts (including students working in research institutions and familiar with visualizations) completed the task faster than novices (1:28 min vs. 1:59 min).

A Kolmogorov-Smirnov (K-S) test after log transformation showed that the completion times for the interactive visualization [D(48) = 0.08, p > 0.05], the static visualization [D(50) = 0.12, p > 0.05], and the table [D(47) = 0.07, p > 0.05] did not deviate significantly from normality. A one-way ANOVA was conducted to compare the effect of visualization type on task completion time. There was a significant effect of visualization type on task completion time for the three conditions [F(2, 142) = 5.15, p < 0.05]. This confirms our first hypothesis that task completion time differs significantly among the uncertainty visualization types.
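The analysis steps above (log-transforming completion times, then comparing the groups with a one-way ANOVA) can be sketched in plain Python. This is a hypothetical illustration, not the authors' analysis script: the function names are ours, and it computes only the F statistic and its degrees of freedom; a p-value would additionally require an F-distribution routine, for example from a statistics library. With group sizes of 48, 50 and 47, the degrees of freedom come out as (2, 142), matching the reported F(2, 142).

```python
import math
from statistics import mean
from typing import List, Tuple


def to_seconds(mmss: str) -> int:
    """Convert a 'm:ss' time such as '2:19' to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def log_times(times_in_seconds: List[float]) -> List[float]:
    """Natural-log transform applied before the normality checks and the ANOVA."""
    return [math.log(t) for t in times_in_seconds]


def one_way_anova(groups: List[List[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for independent samples."""
    values = [x for g in groups for x in g]
    grand_mean = mean(values)
    k, n = len(groups), len(values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# The reported mean times per condition, converted to seconds.
print({name: to_seconds(t) for name, t in {"IG": "2:19", "SG": "1:18", "TAB": "1:19"}.items()})
# {'IG': 139, 'SG': 78, 'TAB': 79}
```

The logarithm base does not matter here: changing it only rescales every value by the same constant, which leaves the F statistic unchanged.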

A K-S test after log transformation showed that the task completion times of the expert [D(56) = 0.08, p > 0.05] and non-expert groups [D(45) = 0.12, p > 0.05] did not deviate significantly from normality. A one-way between-subjects ANOVA was conducted to compare the effect of group (expert/non-expert) on task completion time (Figs. 3 and 4). There was no significant effect of group on task completion time [F(1, 99) = 0.85, p > 0.05]. Because the subgroups formed by expertise per visualization type were small, we did not test interaction effects.

Error Rate (ER). Error rates were computed for a strict definition of a right answer (only day 4) as well as for a definition that also accepts days 13 and 7 as satisfactory right answers (see Table 1). Day 4 was designed as the day combining a high wind force with the lowest interval between minimum and maximum estimated wind force. If day 4 is considered the only right answer, 93 of 145 valid answers (one missing value) were wrong, giving an overall ER of 93/145 = 0.64. Most errors were made with the interactive visualization (IG, ER = 0.74), while the fewest were made with the table (TAB, 28 errors/47 answers, ER = 0.59). The static visualization led to 30 errors in 50 valid answers (SG, ER = 0.6). The interactive graphic thus led to slightly more errors than the other two. Experts, including students in research (57 participants), made 38 errors (ER = 0.66), while 23 of 45 novices gave the wrong answer (ER = 0.51). To make the task more difficult, day 13 was introduced with a higher wind force but larger uncertainty. According to the original task definition it would be a wrong answer, but when it is considered an acceptable second solution, the error rate is much lower for all visualizations: the interactive visualization would then have an ER of 0.14, the static graphic the highest ER of 0.22, and the table an ER of 0.19. In this scenario, experts make fewer errors (ER = 0.14) than novices (ER = 0.22). To test whether error rates differed when only day 4 counts as correct, a chi-square test was conducted. There was no significant association between the type of visualization and whether or not the right answer was given [χ²(2) = 2.19, p > 0.05]. Likewise, there was no significant association between group (expert/novice) and whether or not the right answer was given [χ²(1) = 2.93, p > 0.05]. Even when days 13 and 7 were also considered right answers, no significant difference was found. Therefore, hypothesis 2, stating that there is a significant difference in errors between different uncertainty visualizations, was rejected.
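The error-rate and chi-square computations described above can be sketched as follows. This is a hypothetical Python sketch; the function names are ours, and the 2 x 2 table in the example is invented rather than taken from the study. For a chi-square distribution with exactly 2 degrees of freedom, the upper-tail probability has the closed form exp(-x/2), which allows the reported visualization test to be checked without a statistics library.

```python
import math
from typing import List, Tuple


def error_rate(errors: int, valid_answers: int) -> float:
    """Share of wrong answers among valid answers."""
    return errors / valid_answers


def chi_square(table: List[List[int]]) -> Tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c table
    of observed counts (e.g. rows = visualizations, columns = wrong/right)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


def p_value_df2(stat: float) -> float:
    """Upper-tail p-value of a chi-square statistic with 2 degrees of freedom."""
    return math.exp(-stat / 2)


print(error_rate(30, 50))                 # 0.6 (static graphic, day 4 only)
print(chi_square([[20, 10], [10, 20]]))   # invented 2 x 2 table, df = 1
print(round(p_value_df2(2.19), 2))        # 0.33 for the reported chi-square(2) = 2.19
```

A p-value of about 0.33 is well above the 0.05 level, so the visualization test is clearly non-significant.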

Cognitive Load. Although we used only a 3-item subscale of the SWAT questionnaire, these items (see Table 2) showed moderate reliability, with Cronbach’s α = 0.691. A K-S test showed that all three items deviated significantly from normality [Q7: D(97) = 0.19, p < 0.001; Q8: D(97) = 0.18, p < 0.001; Q13: D(97) = 0.21, p < 0.001]. A non-parametric Kruskal-Wallis test found a significant difference in subjective cognitive load between visualization types [Q7: H(2) = 7.39, p < 0.05]. The effect of visualization type on subjectively perceived concentration was non-significant [Q8: H(2) = 4.81, p > 0.05], as was its effect on automatism [Q13: H(2) = 2.04, p > 0.05]. Because all three SWAT items together measure cognitive load and two of them show no significant difference, we reject hypothesis 3, which assumes a significant difference in cognitive effort between the different types of visualizations.
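A Kruskal-Wallis test on 5-point Likert items involves many tied values, so the tie correction matters. The sketch below is a hypothetical plain-Python illustration (function names and example data are ours, not the authors' script); it computes H with the standard tie correction and, because the test here has 2 degrees of freedom, uses the chi-square approximation p = exp(-H/2). For the reported Q7 result, H(2) = 7.39, this gives p of about 0.025, consistent with p < 0.05.

```python
import math
from typing import Dict, List, Tuple


def average_ranks(values: List[float]) -> List[float]:
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def kruskal_wallis(groups: List[List[float]]) -> Tuple[float, int]:
    """Tie-corrected Kruskal-Wallis H and its degrees of freedom (k - 1).

    Undefined (division by zero) if every observation has the same value.
    """
    values = [x for g in groups for x in g]
    n = len(values)
    ranks = average_ranks(values)
    rank_term, offset = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[offset:offset + len(g)])
        rank_term += rank_sum ** 2 / len(g)
        offset += len(g)
    h = 12 / (n * (n + 1)) * rank_term - 3 * (n + 1)
    tie_counts: Dict[float, int] = {}
    for v in values:
        tie_counts[v] = tie_counts.get(v, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in tie_counts.values()) / (n ** 3 - n)
    return h / correction, len(groups) - 1


print(round(math.exp(-7.39 / 2), 3))  # 0.025, p for the reported Q7 result H(2) = 7.39
```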

Qualitative Feedback. With the interactive visualization, most participants used the average wind force slider to find the highest values and then selected the day with the lowest uncertainty interval without using other control elements (see Fig. 2). The following quote describes the most common procedure: “I sub-selected the three highest wind forces to see them more detailed, compared them and finally choose the one with the highest amount and possible highest prediction.” Participants stated that not all controls were really useful for the decision, but that the ones they used made the decision easier. Some would have preferred more explanation and feedback, especially when making unusual selections: the only feedback given for an unusual selection was an empty screen without values. Some participants also based their decision on the highest possible maximum value, which showed that they did not take uncertainty into account or did not understand the meaning of the different values. This was more often the case for novice users. Unfortunately, the questionnaire only examined participants’ reasoning, not their interactions, so in some cases it was not clear which control elements, if any, were used. Colour, interactivity, and filtering functions were perceived as positive attributes of the visualization, while the amount of data was a negative aspect. A few people indicated that they found it difficult to perform the task without sailing experience. A common thinking process reported by users of the static visualization is similar to that reported for the interactive one: participants first try to understand the meaning of the graphical elements by reading the legend. Then they seek out the highest mean values and examine the interval sizes. In most cases, this process leads to the right answer. Most participants based their decision on comparing the mean and maximum values of different days, which usually led to the answer day 13.
Most errors occurred as a result of comparing mean, minimum, and maximum values independently of each other. Experts often brought in their existing knowledge that the uncertainty of predictions increases the further ahead they lie. Especially when using the table visualization, participants tended to ignore the relationship between minimum and maximum values as an uncertainty interval. Often they started by selecting the highest minimum values and then searched for maximum values that were not too high, or based their decision solely on the maximum value. In the table group, notably fewer participants described the interval as influencing their decision, and the typical cognitive process caused by the interactive and static visualizations (searching for the highest means and then deciding on interval size) was rarely documented. The table apparently emphasizes the independence of values and hides their relation to each other. Overall, it became clear that a majority of participants, especially from the non-researcher group, had problems understanding the concept of uncertainty, even though it was briefly described in the introduction.

6 Summary and Future Work

We investigated the influence of three uncertainty visualizations on performance measures and cognitive load. The findings show that task completion time differs significantly per visualization. We found no significant differences in error rates depending on visualization type. The significant difference found for the item explicitly referring to cognitive load (Q7) requires further investigation. Considering Q7 as the single indicator of cognitive load (which would not be in line with our initial hypothesis) would show that data visualizations produce lower subjectively perceived cognitive load than a numerical table representation. We would then recommend data visualizations instead of numeric tables for all cases where data comprehension should involve low cognitive effort. Using the interactive visualization was the most time-consuming and also led to a slightly higher error rate than the static visualization and the table. Participants perceived the cognitive effort induced by the interactive visualization as slightly higher than for the SG, but still lower than for the table. Users rated the required concentration and automatism during decision making as neutral for IG; we assume that these attributes are difficult for participants to perceive. Participants completed the task most rapidly with the SG, while making about as few errors as with the table and reporting the lowest cognitive effort. The amount of concentration needed with SG was the lowest. With the table, slightly more time had to be spent than with the static visualization (Table 3).

While error rates were similar, the table led to the highest cognitive effort and concentration and the lowest automatism. We also observed descriptive differences between experts and novices. Surprisingly, novices completed the task much faster with the numerical table than with the SG, whereas experts were faster with a graphical visualization and much slower with the numeric table. Experts made the fewest mistakes with the interactive and the most with the static graphic visualization. Novices made the fewest mistakes in the day 4 scenario with the static visualization. In the day 4 and 13 scenario, this surprisingly shifts to the table visualization, while novices again perform best with the static one when day 7 is also considered a right answer. These results must be interpreted in light of the number of participants per condition, which also applies to the comparison of cognitive effort between subjects. Experts rated the table as requiring the most cognitive effort and concentration and as allowing the least automatism. The static visualization scored best in all three categories. Novices showed similar but less distinct results. On the basis of our results, the best visualization for data experts in terms of task completion time, error rate, and cognitive effort would be a static graphical visualization. Even if experts complete tasks faster with a table, this leads to higher error rates and cognitive effort (assuming that it is more worthwhile to make the right decisions than to make them in the shortest possible time).

Our results concern the visualization of uncertainty, which can be represented as a table, an interactive visualization, or a static visualization. However, as these are general visualization characteristics rather than features specific to uncertainty, the findings might also apply to data visualizations without uncertainty. Future studies are required to find out whether our findings also hold for data visualizations without uncertainty, or whether the results change with an interactive visualization that has improved usability and is more closely related to the decision. Moreover, since we found that a static visualization of uncertainty can be recommended to experts, it would be interesting to investigate its detailed attributes. It would also be interesting to see whether our findings apply to other types of uncertainty, and to complement the cognitive load measurements with objective measures such as eye tracking, pupil dilation, or EEG.

Generalizing the findings to the point where designers can confidently rely on them during design decisions would require many more experiments with several datasets, user profiles, and visualization designs, including different design variants (e.g., colours, sizes, bar charts or line charts, interactive features, tables with or without heat maps, or other graphical features). Further work is also needed to investigate how these features should be designed for different user types, such as elderly people, whose perception and cognitive functions change over the life span. The present work is thus only one of the first steps toward ergonomic evaluations that generate general recommendations to support design decisions in data and information visualization.

References

1. Griethe, H., Schumann, H.: The visualization of uncertain data: methods and problems. In: Proceedings of Simulation and Visualization, Magdeburg (2006)

2. Pang, A.T., Wittenbrink, C.M., Lodha, S.K.: Approaches to uncertainty visualization. Vis. Comput. 13, 370–390 (1997)

3. Skeels, M., Lee, B., Smith, G., Robertson, G.G.: Revealing uncertainty for information visualization. Inf. Vis. 9(1), 70–81 (2010)

4. Bigelow, A., Drucker, S., Fisher, D., Meyer, M.: Reflections on how designers design with data. In: Proceedings of the 2014 International Working Conference on Advanced Visual Interfaces, pp. 17–24. ACM, May 2014

5. Chen, C.: Top 10 unsolved information visualization problems. IEEE Comput. Graph. Appl. 25(4), 12–16 (2005)

6. Beauxis-Aussalet, E., Arslanova, E., Hardman, L., van Ossenbruggen, J.: A case study of trust issues in scientific video collections. In: Proceedings of the 2nd ACM International Workshop on Multimedia Analysis for Ecological Data (2013)

7. Beauxis-Aussalet, E., Arslanova, E., Hardman, L.: Supporting non-experts’ awareness of uncertainty: negative effects of simple visualizations in multiple views. In: Proceedings of the European Conference on Cognitive Ergonomics 2015, p. 20. ACM, Warsaw (2015)

8. Beauxis-Aussalet, E., Hardman, L.: Simplifying the visualization of confusion matrix. In: BNAIC, November 2014

9. Ware, C.: Information Visualization: Perception for Design. Elsevier, Amsterdam (2012)

10. Sweller, J.: Cognitive load theory, learning difficulty, and instructional design. Learn. Instr. 4(4), 295–312 (1994)

11. Anderson, E.W., Potter, K.C., Matzen, L.E., Shepherd, J.F., Preston, G.A., Silva, C.T.: A user study of visualization effectiveness using EEG and cognitive load. Comput. Graph. Forum 30, 791–800 (2011). Blackwell Publishing Ltd.

12. Huang, W., Eades, P., Hong, S.-H.: Measuring effectiveness of graph visualizations: a cognitive load perspective. doi:10.1057/ivs.2009.10

13. Rogers, Y., Sharp, H., Preece, J.: Interaction Design: Beyond Human-Computer Interaction. Wiley, Hoboken (2011)

14. Nielsen, J.: The usability engineering life cycle. Computer 25(3), 12–22 (1992)

15. Tory, M., Möller, T.: Human factors in visualization research. IEEE Trans. Vis. Comput. Graph. 10, 72–84 (2004)

16. Badam, S.K., Fisher, E., Elmqvist, N.: Munin: a peer-to-peer middleware for ubiquitous analytics and visualization spaces. IEEE Trans. Vis. Comput. Graph. XX(c), 1 (2014)

17. Kang, H., Shneiderman, B.: Visualization methods for personal photo collections: browsing and searching in the PhotoFinder. In: ICME 2000, 2000 IEEE International Conference on Multimedia and Expo, vol. 3, pp. 3:1539–3:1542 (2000)

18. Müller, W., Schumann, H.: Visualization methods for time-dependent data - an overview. In: Proceedings of the 2003 Winter Simulation Conference, pp. 1:737–1:745. IEEE (2003)

19. Purchase, H.C., Andrienko, N., Jankun-Kelly, T.J., Ward, M.: Theoretical foundations of information visualization. In: Kerren, A., Stasko, J.T., Fekete, J.-D., North, C. (eds.) Information Visualization. LNCS, vol. 4950, pp. 46–64. Springer, Heidelberg (2008)

20. Wilkinson, L.: The Grammar of Graphics. Springer Science & Business Media, Berlin (2006)

21. KNMI: KNMI Verwachtingen, cited 13 July 2011. http://www.knmi.nl/waarschuwingen_en_verwachtingen/

Acknowledgements

This publication is part of the research project “TECH4AGE”, funded by the German Federal Ministry of Education and Research (BMBF, Grant No. 16SV7111) supervised by the VDI/VDE Innovation + Technik GmbH.


Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Theis, S. et al. (2016). Ergonomic Considerations for the Design and the Evaluation of Uncertain Data Visualizations. In: Yamamoto, S. (ed.) Human Interface and the Management of Information: Information, Design and Interaction. HIMI 2016. Lecture Notes in Computer Science, vol 9734. Springer, Cham. https://doi.org/10.1007/978-3-319-40349-6_19

DOI: https://doi.org/10.1007/978-3-319-40349-6_19

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-40348-9

Online ISBN: 978-3-319-40349-6

eBook Packages: Computer Science, Computer Science (R0)