Abstract

Head-mounted virtual reality and augmented reality displays (a.k.a. VR/AR goggles) have created a revolutionary multimedia genre that is seeking ever-broadening applications and novel natural human interfaces. Adding neuromonitoring and neurofeedback to this genre is expected to introduce a new dimension to user interaction with the cyber-world. This study presents the development of a Neuromonitoring VR/AR Goggle armed with electroencephalogram and electrooculogram sensors, programmable milliampere current stimulators and wireless fog/cloud computing support. Besides its potential use in mitigating cybersickness, this device may find applications in augmented cognition ranging from feedback-controlled perceptual training to on-line learning and virtual social interactions. A prototype of the device has been built from a Samsung Gear VR for S6. This study explains its technical design for precise data sampling, synchronous event marking, real-time signal processing and big data cloud computing support. It also demonstrates the device's effectiveness in measuring event-related potentials during a visual oddball experiment.



Keywords

- Virtual reality

- Mixed reality

- Augmented cognition

- Cybersickness

- Electroencephalography

- Electrooculography

- Oculo-vestibular recoupling

1 Introduction

Head-mounted 3D displays, encased in wearable eye goggles and adapted to users’ head and body movements, have succeeded in producing virtual reality (VR) and mixed/augmented reality (MR/AR) images that rival real-world scenery in complexity and immensity. These devices can also produce images that would be impossible or unsafe to capture in actual environments. Hence, VR/AR goggles have a wide range of emerging applications not only in entertainment but also in learning, training, neurodiagnostics, psychotherapy and many other areas [1, 2]. Amid the rapid development of this new medium, natural user interfaces are urgently needed to enhance interactions between users and the virtual reality it creates. Motion and gesture detection as well as gaze tracking are regarded as essential. Some visual artists further regard users’ neurological responses as necessary for characterizing the affective components of this new medium [3].

This study presents the development of a Neuromonitoring VR/AR Goggle armed with multi-modal electrophysiological sensors, milliampere current stimulators and wireless IoT-cloud computing support. The system can capture and analyze electroencephalogram (EEG) and electrooculogram (EOG) signals in near real time to provide users with brain state estimates as well as oculo-vestibular recoupling (OVR) feedback. The Goggle has potential applications not merely in mitigating cybersickness [4] but also in enhancing users’ sense of engagement in on-line learning and virtual social interactions. Its EEG and EOG measurements can be used to evaluate users’ responses to different presentation forms [5] and to assess users’ perception of different syntactic elements in virtual reality [3].

The rest of this paper is divided into four sections: Sect. 2 reviews the potential use of EEG/EOG monitoring and OVR stimulation in VR/AR applications; Sect. 3 introduces the architecture and functional components of the Neuromonitoring Goggle; Sect. 4 discusses the results of a pilot visual oddball experiment; and Sect. 5 gives a conclusion based on the engineering tests and experimental results.

2 Contribution of EEG/EOG and OVR to VR/AR Applications

EOG measured around the perimeters of subjects’ eyes can be used to monitor the dynamics of saccades and fixations. These features have long been exploited in eye-based human-computer interfaces [6]. Recent studies have shown that saccade durations and movement patterns can be used to estimate subjects’ attention levels during study sessions, while fixation points and mean fixation durations can be used to assess the accuracy of their responses in learning [7]. In addition, the electromyogram (EMG) captured from the same electrodes can be used to monitor eyelid movements. Specifically, eye blink durations and intervals, eyelid closure delays and re-opening speeds are useful in estimating subjects’ fatigue levels [8].
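To make the blink features above concrete, the following minimal sketch extracts blink onsets, durations and inter-blink intervals from a vertical EOG trace by simple amplitude thresholding. The function names, the 100 μV threshold and the assumption that blinks appear as positive deflections are all hypothetical; the paper does not specify how blinks are detected, and practical detectors usually add filtering and minimum-duration checks.

```python
def detect_blinks(veog_uv, fs_hz, threshold_uv=100.0):
    """Return (onset_s, duration_s) for each run of samples above threshold.

    Hypothetical sketch: assumes blinks appear as positive vertical-EOG
    deflections exceeding `threshold_uv` microvolts.
    """
    blinks = []
    start = None
    for i, v in enumerate(veog_uv):
        if v > threshold_uv and start is None:
            start = i  # upward threshold crossing: blink onset
        elif v <= threshold_uv and start is not None:
            blinks.append((start / fs_hz, (i - start) / fs_hz))
            start = None
    if start is not None:  # blink still in progress at the end of the record
        blinks.append((start / fs_hz, (len(veog_uv) - start) / fs_hz))
    return blinks


def inter_blink_intervals(blinks):
    """Onset-to-onset intervals (s) between consecutive blinks."""
    onsets = [onset for onset, _ in blinks]
    return [b - a for a, b in zip(onsets, onsets[1:])]
```

Blink durations and intervals computed this way could then be tracked over a session as candidate fatigue indicators.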

EEG measured over the frontal, parietal and occipital regions of the human brain is known to correlate with subjects’ cognitive and emotional states. Spectral components in the theta (4–7 Hz), alpha (8–12 Hz), and beta (13–30 Hz) bands have long been used to assess subjects’ attention and alertness levels [9–11]. EEG generated by the anterior cingulate cortex is known to be a biomarker of rational cognitive functions such as reward anticipation, decision making and empathy [12]. Moreover, differential asymmetry measurements from 12 EEG electrode pairs can accurately classify four emotional states (joy, anger, sadness, and pleasure) while subjects listen to music [13].
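The band definitions above can be illustrated with a small band-power computation. This sketch uses a naive discrete Fourier transform (O(N²), for clarity only) and sums unnormalized periodogram power within each band; the function names are illustrative, and a real pipeline would use an FFT library and a proper PSD estimator such as Welch's method or the multitaper method used in Sect. 4.

```python
import math

# Frequency bands as given in the text (Hz, inclusive edges).
BANDS = {"theta": (4, 7), "alpha": (8, 12), "beta": (13, 30)}


def periodogram(x, fs_hz):
    """Naive one-sided DFT power spectrum as a list of (freq_hz, power).

    Power is |X[k]|^2 / N, unnormalized, which is adequate for
    comparing bands with each other.
    """
    n = len(x)
    spectrum = []
    for k in range(n // 2 + 1):
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        spectrum.append((k * fs_hz / n, (re * re + im * im) / n))
    return spectrum


def band_powers(x, fs_hz, bands=BANDS):
    """Sum periodogram power inside each frequency band."""
    spec = periodogram(x, fs_hz)
    return {name: sum(p for f, p in spec if lo <= f <= hi)
            for name, (lo, hi) in bands.items()}
```

For a one-second, 10 Hz sinusoid sampled at 500 sps, nearly all of the power falls in the alpha band; ratios such as alpha/theta are the kind of spectral features the cited alertness studies build on.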

Cybersickness remains a “sore thumb” that may hinder the widespread use of VR technology [14]. Conflicting signals from users’ oculomotor and vestibular systems are a main cause of this sickness. The Neuromonitoring Goggle enables oculo-vestibular recoupling (OVR) [4] by applying low-power transmastoidal direct-current or random-noise galvanic vestibular stimulation (GVS) [15], modulated according to in-situ EOG and 9-DOF motion measurements.

3 Development of Neuromonitoring VR/AR Goggle

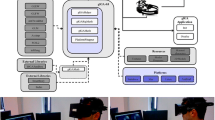

The Neuromonitoring VR/AR Goggle (Fig. 5(a)) is a pervasive adaptive IoT platform that adds neuromonitoring and neurofeedback to VR/AR applications. As shown in Fig. 1, the system consists of four components: a commercial VR/AR Goggle, a proprietary Neuromonitor, a smartphone/tablet Operating Console and IoT-cloud computing service support. Bluetooth 4 communication among the Goggle, the Neuromonitor and the Console allows the system to be used completely untethered, anytime and anywhere. The Wi-Fi Internet connections of the Neuromonitor and the Goggle, acting as IoT devices, support firmware-over-the-air (FOTA) programming, Linked Data mining and cloud-based machine learning. These capabilities enable rapid personalization as well as progressive refinement of brain state prediction models using transfer learning techniques.

VR/AR Goggle. Commercial products (current choice: Samsung Gear VR) are used to offer users an immersive experience of virtual/augmented reality while the Neuromonitor unit monitors their EEG/EOG signals and head movements. The Oculus Mobile SDK [16] and OpenGL ES 2.0 [17] were used to produce the VR/AR content and augmentation. A distinctive way to use the Neuromonitoring Goggle is to embed special visual patterns and motion sequences into 3D/VR scenes and use these stimuli to evoke neurophysiological/psychological responses. Potential applications in neurodiagnostics and perceptual learning can all take advantage of this immersive form of visual stimulation [Sect. 5].

Neuromonitor. This miniature device (3 cm × 4.5 cm × 1.5 cm excluding the Li battery) functions as an add-on to the VR/AR Goggle. The current prototype can simultaneously capture six EEG channels (Fig. 5(b)) and two EOG/EMG channels (Fig. 5(c)) at 500 sps, with microsecond timestamps synchronized to Internet standard time. Its programmable current sources can inject 250 μA (rms) DC/AC/Gaussian random waveforms at 1000 sps. Equipped with a multi-core embedded processor, a Bluetooth + Wi-Fi radio and micro-SD storage, the device is well positioned to serve as a wearable data hub for multi-modal electrophysiological signals.

Cloud Computing Support. The Neuromonitoring Goggle is merely an IoT frontend to a pervasive fog-cloud computing platform developed in the NCTU PET Lab [18]. A BCI Ontology [19] aligned with the W3C Semantic Sensor Network Ontology [20] was devised to capture the semantic structure of real-world brain-computer interactions. An on-line repository of BCI data conforming to Linked Data practice [21] was deployed and can be accessed via the CerebraWeb API [22]. This Cloud Computing platform can couple with smartphones or laptops connected to the Neuromonitor and recruit them to perform real-time signal processing and brain state prediction.

Operating Console. This Android mobile app performs “housekeeping” functions such as remote control of the Neuromonitor and VR visualization, real-time display of captured signals, and management of the Linked Data repository. Aided by the DC lead-off detection capability of the data acquisition unit, the real-time waveform display can alert the operator to loose electrode contacts or poor signal quality.

3.1 Hardware Function Modules

The Neuromonitor consists of the following hardware modules (Fig. 2).

Embedded Dual-Core Processor. An advanced embedded processor with ample RAM can capture data and perform time-bounded signal processing simultaneously.

Wi-Fi and Bluetooth Radios. The Bluetooth radio allows the neuromonitoring unit to be controlled in a locally deployed environment via a mobile application GUI. The Wi-Fi radio, on the other hand, enables the module to serve both as a data collection hub and as an Internet of Things gateway, offering web services to, and accessing web services from, other Internet nodes.

MicroSD Card. An on-board 32 GB micro-SD card allows the Neuromonitoring Goggle to store at least eight hours of recorded data.
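As a back-of-the-envelope check of the storage claim, the sketch below estimates raw recording size. It assumes each 24-bit sample is stored in 3 bytes and, optionally, an 8-byte timestamp per sample frame; the actual file format is not described in the paper, and the function name is illustrative.

```python
def recording_bytes(channels, bytes_per_sample, rate_sps, hours, timestamp_bytes=0):
    """Raw storage (bytes) needed for a continuous recording.

    Assumes each sample frame holds `channels` samples of `bytes_per_sample`
    bytes plus an optional per-frame timestamp; file-system and container
    overhead are ignored.
    """
    frame_bytes = channels * bytes_per_sample + timestamp_bytes
    return frame_bytes * rate_sps * hours * 3600
```

Under these assumptions, eight hours of eight-channel data at 500 sps with timestamps needs 460,800,000 bytes (about 0.46 GB), and about 1.84 GB at the ADC's 2000 sps maximum, so a 32 GB card leaves a wide margin.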

Eight-Channel Biosensors. An eight-channel biosensing frontend equipped with low-noise pre-amplifiers and a 24-bit sigma-delta analog-to-digital converter (ADC) can synchronously collect 2000 samples per second. Each channel has lead-off detection capability to check whether its electrodes make good contact with the subject’s scalp.

Two-Channel Programmable Current Sources. Two 256-step software-programmable bidirectional current sources can optionally inject DC, AC or Gaussian random noise currents with a maximum amplitude of ±1 mA or an rms value of 250 μA, and a bandwidth of [0.5 Hz, 50 Hz], through four electrodes at Fz, Oz, and the left and right mastoids. These weak currents can deliver sub-threshold galvanic vestibular stimulation (GVS) based on stochastic resonance in an attempt to recouple vestibular responses with moving-image perception. The authors are currently working with Martin J. McKeown’s Pacific Parkinson’s Research Center at the University of British Columbia to investigate its effectiveness in improving patients’ motor control. We have also tried a similar approach to mitigate cybersickness. Because the current waveforms are known, the current-induced artifacts can possibly be largely removed from the EEG/EOG signals using QR decomposition followed by independent component analysis [23].
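The noise stimulation waveform described above can be approximated in software as follows. This is a minimal sketch, not the device firmware: the first-order high-pass and low-pass filters, the random seed, and the mapping of the 256 steps evenly onto ±1 mA are all assumptions; only the 250 μA rms target, the ±1 mA limit, the [0.5 Hz, 50 Hz] band, the 256 steps and the 1000 sps rate come from the text.

```python
import math
import random


def gvs_noise_uA(n_samples, fs_hz=1000.0, rms_uA=250.0, band_hz=(0.5, 50.0),
                 peak_uA=1000.0, steps=256, seed=0):
    """Band-limited Gaussian noise current (uA), scaled, clipped, quantized.

    Hypothetical sketch: first-order RC-style filters stand in for
    whatever band-limiting the hardware actually uses.
    """
    rng = random.Random(seed)
    dt = 1.0 / fs_hz
    rc_hp = 1.0 / (2 * math.pi * band_hz[0])
    rc_lp = 1.0 / (2 * math.pi * band_hz[1])
    a_hp = rc_hp / (rc_hp + dt)
    a_lp = dt / (rc_lp + dt)
    hp_prev_in = hp_prev_out = lp_out = 0.0
    filtered = []
    for _ in range(n_samples):
        x = rng.gauss(0.0, 1.0)
        hp_out = a_hp * (hp_prev_out + x - hp_prev_in)  # attenuates < 0.5 Hz
        hp_prev_in, hp_prev_out = x, hp_out
        lp_out += a_lp * (hp_out - lp_out)               # attenuates > 50 Hz
        filtered.append(lp_out)
    # Rescale so the waveform has the requested rms value.
    rms = math.sqrt(sum(v * v for v in filtered) / n_samples)
    scaled = [v * rms_uA / rms for v in filtered]
    # Clip to the +/-1 mA limit and quantize onto `steps` evenly spaced levels.
    step = 2 * peak_uA / (steps - 1)
    out = []
    for v in scaled:
        v = max(-peak_uA, min(peak_uA, v))
        out.append(-peak_uA + round((v + peak_uA) / step) * step)
    return out
```

With a 250 μA rms target, the ±1 mA limit sits at four standard deviations, so clipping is rare and the quantization step of about 7.8 μA adds little error.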

3.2 Software Functional Modules

The Neuromonitor not only operates with precise timing and real-time response; it also connects with other IoT frontends and fog/cloud servers through standard M2M communication. Figure 3 illustrates its software functional modules.

Embedded Real-Time Linux Operating System. The Neuromonitor runs a standard Yocto-based embedded Linux [24] with the Preempt-RT extension to meet its real-time data processing and I/O requirements.

Machine-to-Machine Publish/Subscribe I/O. The Neuromonitor exchanges raw or processed data autonomously with other M2M devices using the MQTT publish/subscribe standard [25]. These demand-driven data transfers form the communication fabric of pervasive fog-cloud computing.
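MQTT routes each published message to every client whose subscription filter matches the message's topic, where `+` matches exactly one topic level and `#` matches the remaining levels. The toy in-process broker below illustrates only that routing rule; it is not an MQTT client, the class and function names are illustrative, and topic names such as `neuromonitor/dev01/eeg` are hypothetical. A real deployment would use an MQTT broker and client library.

```python
from collections import defaultdict


def topic_matches(topic_filter, topic):
    """MQTT-style topic filter matching ('+' = one level, '#' = the rest).

    Simplified: ignores the special handling of topics starting with '$'.
    """
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    for i, level in enumerate(f_levels):
        if level == "#":
            return True  # matches this level and everything below it
        if i >= len(t_levels):
            return False
        if level != "+" and level != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)


class ToyBroker:
    """In-process stand-in for an MQTT broker, for illustration only."""

    def __init__(self):
        self.subscriptions = defaultdict(list)

    def subscribe(self, topic_filter, callback):
        self.subscriptions[topic_filter].append(callback)

    def publish(self, topic, payload):
        # Deliver to every subscriber whose filter matches the topic.
        for topic_filter, callbacks in self.subscriptions.items():
            if topic_matches(topic_filter, topic):
                for cb in callbacks:
                    cb(topic, payload)
```

Because publishers never address subscribers directly, a cloud service subscribed to `neuromonitor/+/eeg` would receive EEG blocks from any device without reconfiguring the devices.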

NTP Time Synchronization. Using the Network Time Protocol (NTP), the VR/AR Goggle, the Neuromonitor and the Operating Console are all time-locked to atomic clocks on the Internet with 1 μs resolution. All components synchronize to the same NTP server to keep timing offsets within 1 ms. The synchronized clocks then serve as the timing reference for timestamping all data samples and events.
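NTP estimates a client's clock offset from four timestamps: the client's transmit time t1, the server's receive time t2, the server's transmit time t3 and the client's receive time t4. The standard on-wire calculation is sketched below; the function name is illustrative and timestamps are assumed to be integer microseconds.

```python
def ntp_offset_delay(t1, t2, t3, t4):
    """Return (offset, delay) from the four NTP timestamps.

    t1: client transmit, t2: server receive,
    t3: server transmit, t4: client receive.
    A positive offset means the server clock is ahead of the client.
    If the network paths are asymmetric, the true offset can differ
    from the estimate by up to delay / 2.
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2
    delay = (t4 - t1) - (t3 - t2)
    return offset, delay


print(ntp_offset_delay(0, 15_000, 16_000, 11_000))  # (10000.0, 10000)
```

The delay / 2 bound is one reason a low-latency path to the shared NTP server matters when the goal is to keep offsets within 1 ms.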

Synchronous Multi-channel Data Sampling. The analog-to-digital converter (ADC) samples the eight-channel biosensor at 500 sps, while the data acquisition software, driven by the Linux system clock, polls the ADC at 2048 sps. Figure 4(a) shows the time trace of the 2 ms sampling intervals, with timing offsets within [−0.25 ms, +0.20 ms].
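A timing check like the one in Fig. 4(a) can be reproduced from logged sample timestamps by comparing each inter-sample interval with the nominal 2 ms period at 500 sps. The sketch assumes timestamps in integer microseconds; the function name is hypothetical. For reference, polling at 2048 sps corresponds to a period of about 0.49 ms.

```python
def interval_offsets(timestamps_us, nominal_us=2000):
    """Deviation of each inter-sample interval from the nominal period.

    At 500 sps the nominal interval is 2000 us (2 ms).
    Returns (intervals, offsets), both in microseconds.
    """
    intervals = [b - a for a, b in zip(timestamps_us, timestamps_us[1:])]
    offsets = [iv - nominal_us for iv in intervals]
    return intervals, offsets


_, offs = interval_offsets([0, 2000, 3900, 6100, 8000])
print(min(offs), max(offs))  # -100 200
```

The minimum and maximum of `offsets` give the kind of range reported in Fig. 4(a).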

Synchronous Event Marking. Visual stimuli displayed on the VR/AR Goggle are refreshed at 60 fps, and their timing is again sampled at 2048 sps. Figure 4(b) shows the time trace of the 16.692 ms frame intervals, with timing offsets within [−2 ms, +0.5 ms].
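Because display frames and EEG samples share NTP-referenced timestamps, an event marker can be attached to the EEG sample closest in time to the frame onset. The sketch below assumes both streams carry timestamps in microseconds and that sample timestamps are sorted; the function name is hypothetical, and this is an illustration rather than the Neuromonitor's actual event-marking code.

```python
from bisect import bisect_left


def nearest_sample_index(sample_times_us, event_time_us):
    """Index of the EEG sample whose timestamp is closest to an event.

    `sample_times_us` must be sorted in ascending order. Ties go to
    the earlier sample.
    """
    i = bisect_left(sample_times_us, event_time_us)
    if i == 0:
        return 0
    if i == len(sample_times_us):
        return len(sample_times_us) - 1
    before, after = sample_times_us[i - 1], sample_times_us[i]
    return i if after - event_time_us < event_time_us - before else i - 1
```

At 500 sps, snapping to the nearest sample adds at most ±1 ms of quantization on top of the clock offsets.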

4 Pilot Experiments

A binocular visual oddball experiment, preceded by open- and closed-eye resting EEG recordings, was performed on subjects with normal or corrected-to-normal vision to evaluate the effectiveness of the Neuromonitoring Goggle in acquiring EEG signals as well as visual evoked responses in real-world environments.

Participants. Six healthy subjects (five male, one female; age: 21.2–58.3, median: 23.45, standard error: 5.81) participated in the oddball experiment, which was carried out at National Chiao Tung University (NCTU), Hsinchu, Taiwan. All subjects had previous experience with EEG recording. Informed consent was obtained from all of them. The NCTU ethics committee approved the study protocol, and the procedures were carried out in accordance with the approved protocol.

Method. Each subject in turn wore the Neuromonitoring Goggle. A foam pad with EEG sensors was applied at the back of the subject’s head over the Goggle’s back pad to relieve the pressure. A small amount of electrically conductive gel was applied to the tips of the electrodes to improve conductivity. Four channels (Pz, Oz, O3, and O4) over the parietal and occipital regions were used to record ERP signals.

Each subject first went through a resting EEG recording and then a visual oddball experiment, with an intermission of approximately two minutes in between. During the resting EEG recording, subjects were instructed to relax and keep their eyes first open and then closed, without blinking, for one minute each. During the oddball experiment, two white crosses (a crisscrossed one as the target and an upright one as the non-target) were flashed for 100 ms over a black background in both eyes through the Goggle. Subjects were asked to tap their fingers on the desk when they saw a target and to remain still when they saw a non-target. The probability of target occurrence was set at 20 %, and no two targets could directly follow each other. Target and non-target stimuli were presented in random order with randomized inter-stimulus intervals of 1400–2400 ms. These intervals are long enough to ensure no overlap between the event-related potentials (ERPs) evoked by successive visual stimuli, and they were deliberately randomized to avoid habituation of neural responses. To reduce visual fatigue, targets and non-targets were grouped into sequences of ten stimuli with a five-second rest period before each sequence. A small round dot appeared steadily for four seconds in both eyes at the beginning of the rest period, during which subjects were allowed to blink and relax briefly. Five such sequences made up a session. Each subject was asked to go through ten sessions (again with two-minute intermissions in between) to accumulate a total of one hundred targets.
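The stimulus schedule described above can be generated as follows. This is a minimal sketch under stated assumptions: exactly two targets per ten-stimulus sequence (which yields the 20 % rate and one hundred targets over ten sessions of five sequences), rejection sampling to keep targets non-adjacent within a sequence, and inter-stimulus intervals drawn uniformly from 1400–2400 ms. The function names are hypothetical, and adjacency across the rest period between sequences is not checked.

```python
import random


def oddball_sequence(rng, n_stimuli=10, n_targets=2, isi_ms=(1400, 2400)):
    """One sequence of (stimulus label, inter-stimulus interval in ms) pairs.

    Hypothetical sketch: places exactly `n_targets` targets so that no two
    are adjacent, and draws each ISI uniformly from `isi_ms`.
    """
    while True:
        positions = sorted(rng.sample(range(n_stimuli), n_targets))
        if all(b - a > 1 for a, b in zip(positions, positions[1:])):
            break  # rejection sampling: retry until no two targets adjoin
    labels = ["target" if i in positions else "non-target"
              for i in range(n_stimuli)]
    return [(label, rng.uniform(*isi_ms)) for label in labels]


def oddball_session(rng, n_sequences=5):
    """Five sequences; each would be preceded by a five-second rest period."""
    return [oddball_sequence(rng) for _ in range(n_sequences)]
```

Ten calls to `oddball_session` produce 500 stimuli containing exactly 100 targets, matching the totals in the protocol.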

Results. Data collected from the first subject (#0) were discarded because the visual stimuli had been set to low intensity. Among the remaining datasets, alpha waves were observed in all resting EEG data recorded with the subjects’ eyes closed. Figure 6 plots the mean ± one standard deviation, \( [\mu , \mu \pm 1\sigma ] \), of the open-eye (blue) and closed-eye (red) EEG power spectral density (PSD) of subject #4, obtained by applying multitaper analysis (NW = 4) to five 50 %-overlapped twenty-second segments of EEG data band-pass filtered to [1 Hz, 50 Hz]. A spectral peak of 17 dB appears clearly in the [8 Hz, 12 Hz] alpha band. Both the open- and closed-eye PSDs decreased gradually with increasing frequency.
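The segment count quoted above is consistent with the one-minute resting recordings: at 500 sps, five 20 s segments with 50 % overlap span exactly 60 s. The sketch below shows only this segmentation step (the multitaper estimate itself is omitted) together with the decibel-to-power-ratio conversion; the function names are illustrative.

```python
def segment_bounds(n_samples, seg_len, overlap=0.5):
    """(start, end) sample indices of fixed-length, overlapping segments."""
    hop = int(seg_len * (1 - overlap))
    return [(start, start + seg_len)
            for start in range(0, n_samples - seg_len + 1, hop)]


def db_to_power_ratio(db):
    """Convert a level difference in dB to a power ratio."""
    return 10 ** (db / 10)


fs = 500
segs = segment_bounds(60 * fs, 20 * fs)  # one minute, 20 s segments
print(len(segs), segs[-1])               # 5 (20000, 30000)
```

A 17 dB difference corresponds to a power ratio of 10^1.7, roughly 50.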

Synchronous averaging and ERP map construction [26] were applied to the ERP epochs collected from both target and non-target events during the visual oddball experiment. Each epoch was taken from 200 ms before to 1200 ms after the onset of each target/non-target stimulus, excluding the five-second rest periods. The first 200 ms of each epoch served as the baseline, and its mean value was subtracted from the remaining samples. Epochs with amplitudes exceeding ±100 μV were removed from the dataset before further processing. Among the remaining five subjects, the average ERP waveforms of Subjects #1–#4 showed sharp target and non-target N2 pulses at all channels, while the waveforms of Subject #5 seemed to show involuntary eye blinks in response to the stimuli. Only the ERPs of Subjects #1 and #4 exhibited observable P3 pulses. Figure 7 displays the average ERP waveforms (see Note 1) as well as the target/non-target ERP maps of Subjects #1 and #4. The ERP maps all showed clear P1 and N2 pulses in response to the visual stimuli; however, the P3 pulses of Subject #1 were smeared as he tapped his fingers while counting the target stimuli. The EEG signals captured from channel Pz also seemed to be of better quality than those from Oz.
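The epoch extraction, baseline correction, artifact rejection and synchronous averaging steps can be sketched for a single channel as below. Assumptions: onsets are given as sample indices at 500 sps, the baseline mean is subtracted from the whole epoch (which leaves post-onset samples identical to subtracting it from the remaining samples only), and the ±100 μV rejection threshold is applied after baseline correction; the paper does not state the order of these steps, and the function name is hypothetical.

```python
def average_erp(eeg_uv, onsets, fs_hz=500, pre_ms=200, post_ms=1200,
                reject_uv=100.0):
    """Baseline-corrected, artifact-rejected average ERP for one channel.

    eeg_uv: continuous single-channel EEG in microvolts
    onsets: stimulus-onset sample indices
    Returns (average_waveform, number_of_epochs_kept).
    """
    pre = int(pre_ms * fs_hz / 1000)    # 100 samples at 500 sps
    post = int(post_ms * fs_hz / 1000)  # 600 samples at 500 sps
    kept = []
    for onset in onsets:
        if onset - pre < 0 or onset + post > len(eeg_uv):
            continue  # epoch would run past the recording
        epoch = eeg_uv[onset - pre:onset + post]
        baseline = sum(epoch[:pre]) / pre
        epoch = [v - baseline for v in epoch]
        if max(abs(v) for v in epoch) > reject_uv:
            continue  # reject epochs exceeding +/-100 uV
        kept.append(epoch)
    if not kept:
        return [], 0
    n = len(kept)
    # Synchronous average: mean across epochs at each time point.
    return [sum(samples) / n for samples in zip(*kept)], n
```

Running this separately on target and non-target onsets gives the two average waveforms that are compared per channel.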

5 Conclusions

The detection of alpha waves in the open/closed-eye resting EEG and of P3 pulses in the ERPs evoked by the oddball target stimuli gave clear evidence that the Neuromonitoring Goggle is capable of capturing users’ EEG signals and monitoring their brain responses to visual stimulation. In addition, the engineering tests performed on the device demonstrated the precise and consistent timing of its data sampling and event marking based on the Network Time Protocol (NTP) and the Linux system clock. These empirical results support the claim that the Neuromonitoring Goggle can be used for pervasive brain state monitoring in VR/AR goggle-based applications.

Notes

1. The averaged ERP waveforms of channels Pz, Oz, O3 and O4 were successively shifted by −20 μV to separate their traces.

References

1. Stein, J.: Inside the box. TIME Mag. 186(6), 30–39 (2015)

2. Virtual Reality: Grand Illusions. The Economist 416(8953) (2015)

3. Brown, S.: Interactive Neurological Methods for Characterizing Syntactic Elements of Virtual Reality. Personal communication (2015)

4. Cevette, M.J., Stepanek, J., Cocco, D., Galea, A.M., Pradhan, G.N., Wagner, L.S., Oakley, S.R., Smith, B.E., Zapala, D.A., Brookler, K.H.: Oculo-vestibular recoupling using galvanic vestibular stimulation to mitigate simulator sickness. Aviat. Space Environ. Med. 83(6), 549–555 (2012)

5. Yeh, K.H., She, H.C.: On-line synchronous scientific argumentation learning: nurturing students’ argumentation ability and conceptual change in science context. Comput. Educ. 55(2), 586–602 (2010)

6. Bulling, A., Gellersen, H.: Toward mobile eye-based human-computer interaction. IEEE Pervasive Comput. 9(4), 8–12 (2010)

7. Chen, S.C., She, H.C., Chuang, M.H., Wu, J.Y., Tsai, J.L., Jung, T.P.: Eye movements predict students’ computer-based assessment performance of physics concepts in different presentation modalities. Comput. Educ. 74(1), 61–72 (2014)

8. Schleicher, R., Galley, N., Briest, S., Galley, L.: Blinks and saccades as indicators of fatigue in sleepiness warnings: looking tired? Ergonomics 51(7), 982–1010 (2008)

9. Jung, T.P., Makeig, S., Stensmo, M., Sejnowski, T.J.: Estimating alertness from the EEG power spectrum. IEEE Trans. Biomed. Eng. 44(1), 60–69 (1997)

10. Jap, B.T., Lal, S., Fischer, P., Bekiaris, E.: Using EEG spectral components to assess algorithms for detecting fatigue. Expert Syst. Appl. 36(2), 2352–2359 (2009)

11. Wang, Y.T., Huang, K.C., Wei, C.S., Huang, T.Y., Ko, L.W., Lin, C.T., Cheng, C.K., Jung, T.P.: Developing an EEG-based on-line closed-loop lapse detection and mitigation system. Front. Neurosci. 8, 321 (2014)

12. Decety, J., Jackson, P.L.: The functional architecture of human empathy. Behav. Cogn. Neurosci. Rev. 3(2), 71–100 (2004)

13. Lin, Y.P., Wang, C.H., Jung, T.P., Wu, T.L., Jeng, S.K., Duann, J.R., Chen, J.H.: EEG-based emotion recognition in music listening. IEEE Trans. Biomed. Eng. 57(7), 1798–1806 (2010)

14. LaViola Jr., J.J.: A discussion of cybersickness in virtual environments. ACM SIGCHI Bull. 32(1), 47–56 (2000)

15. Kim, D.J., Yogendrakumar, V., Chiang, J., Ty, E., Wang, Z.J., McKeown, M.J.: Noisy galvanic vestibular stimulation modulates the amplitude of EEG synchrony patterns. PLoS One 8(7), e69055 (2013)

16. Oculus Mobile SDK. Oculus. https://developer.oculus.com/documentation/mobilesdk/latest/. Accessed Mar 2016

17. OpenGL ES. Khronos Group. https://www.khronos.org/opengles/. Accessed 2016

18. Zao, J.K., Gan, T.T., You, C.K., Chung, C.E., Wang, Y.T., Méndez, S.J.R., Mullen, T., Yu, C., Kothe, C., Hsiao, C.T., Chu, S.L.: Pervasive brain monitoring and data sharing based on multi-tier distributed computing and linked data technology. Front. Hum. Neurosci. 8, 370 (2014)

19. Rodríguez Méndez, S., Zao, J.K.: https://w3id.org/BCI-ontology. Accessed Mar 2016

20. Semantic Sensor Network Ontology. W3C. https://www.w3.org/2005/Incubator/ssn/ssnx/ssn. Accessed 2009–2011

21. Linked Data. http://linkeddata.org. Accessed 2009

22. CerebraWeb.net. NCTU Pervasive Embedded Technology (PET) Laboratory. http://cerebraweb.net. Accessed 2015

23. Kim, D.J., Yogendrakumar, V., Chiang, J., Ty, E., Wang, Z.J., McKeown, M.J.: Noisy galvanic vestibular stimulation modulates the amplitude of EEG synchrony patterns. PLoS One 8(7), e69055 (2013)

24. Yocto Project. Linux Foundation Collaborative Project. https://www.yoctoproject.org/

25. Message Queuing Telemetry Transport. http://mqtt.org/. Accessed 1999

26. Makeig, S., Debener, S., Onton, J., Delorme, A.: Mining event-related brain dynamics. Trends Cogn. Sci. 8(5), 204–210 (2004)


Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Zao, J.K. et al. (2016). Augmenting VR/AR Applications with EEG/EOG Monitoring and Oculo-Vestibular Recoupling. In: Schmorrow, D., Fidopiastis, C. (eds) Foundations of Augmented Cognition: Neuroergonomics and Operational Neuroscience. AC 2016. Lecture Notes in Computer Science, vol. 9743. Springer, Cham. https://doi.org/10.1007/978-3-319-39955-3_12


DOI: https://doi.org/10.1007/978-3-319-39955-3_12


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-39954-6

Online ISBN: 978-3-319-39955-3

eBook Packages: Computer Science, Computer Science (R0)