Abstract

In medical imaging applications, endoscope images are used to observe the interior of the human body. The VBW (Vogel-Breuß-Weickert) model has been proposed as a method to recover 3-D shape under point light source illumination and perspective projection. However, the VBW model recovers relative, not absolute, shape. Here, shape modification is applied to the output of the VBW model to recover the absolute shape. First, a local brightest point is used to estimate the reflectance parameter from two images obtained with movement of the endoscope camera in depth. A Lambertian sphere image is generated using the estimated reflectance parameter, and the VBW model is applied to this sphere. Then Radial Basis Function Neural Network (RBF-NN) learning is applied so that the NN implements the shape modification. The NN input is the gradient parameters produced by the VBW model for the generated sphere. The NN output is the true gradient parameters of the generated sphere. Regression analysis is introduced for comparison with the NN. Depth can then be recovered using the modified gradient parameters. Performance of shape modification by the NN and by regression analysis was evaluated via computer simulation and real experiments. The results suggest that the NN gives better performance than regression analysis in improving the absolute size and shape of a polyp.

1 Introduction

Endoscopy allows medical practitioners to observe the interior of hollow organs and other body cavities in a minimally invasive way. Sometimes, diagnosis requires assessment of the 3-D shape of the observed tissue. For example, the pathological condition of a polyp often is related to its geometrical shape. Medicine is an important area of application of computer vision technology. Specialized endoscopes with a laser light beam head [1] or with two cameras mounted in the head [2] have been developed. Many approaches are based on stereo vision [3]. However, the size of the endoscope becomes large and this imposes a burden on the patient. Here, we consider a general purpose endoscope, of the sort still most widely used in medical practice.

Shape recovery from endoscope images is considered. Shape from shading (SFS) [4] and an SFS approach [6] based on the Fast Marching Method (FMM) [5] have been proposed. These approaches assume orthographic projection. An extension of FMM to perspective projection is proposed in [7]. A further extension of FMM to both point light source illumination and perspective projection is proposed in [8]. Recent extensions include generating a Lambertian image from the original multiple color images [9, 10]. Applications of FMM include a solution [11] under oblique illumination using neural network learning [12]. Most of the previous approaches treat the reflectance parameter as a known constant. The problem is that it is impossible to estimate the reflectance parameter from only one image. Further, it is also difficult to apply point light source based photometric stereo [13] in the context of endoscopy.

Iwahori et al. [14] developed Radial Basis Function Neural Network (RBF-NN) photometric stereo, exploiting the fact that an RBF-NN is a powerful technique for multi-dimensional non-parametric functional approximation.

Recently, the Vogel-Breuß-Weickert (VBW) model [15], based on solving the Hamilton-Jacobi equation, has been proposed to recover shape from an image taken under the conditions of point light source illumination and perspective projection. However, the result recovered by the VBW model is relative. VBW gives smaller values for surface gradient and height distribution compared to the true values. That is, it is not possible to apply the VBW model directly to obtain exact shape and size.

This paper proposes a new approach to improve the accuracy of polyp shape determination as absolute size. The proposed approach estimates the reflectance parameter from two images with small camera movement in the depth direction. A Lambertian sphere model is synthesized using the estimated reflectance parameter. The VBW model is applied to the synthesized sphere and shape then is recovered. An RBF-NN is used to improve the accuracy of the recovered shape, where the input to the NN is the surface gradient parameters obtained with the VBW model and the output is the corresponding, corrected true values. Regression analysis is another candidate for the modification of surface gradients.

Performance of shape modification by the NN and by regression analysis was evaluated via computer simulation and real experiments. The NN gives better performance than regression analysis in improving the absolute size and shape of a polyp.

2 VBW Model

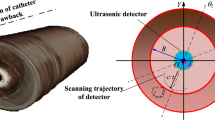

The VBW model [15] is proposed as a method to calculate depth (distance from the viewer) under point light source illumination and perspective projection. The method solves the Hamilton-Jacobi equations [16] associated with the models of Faugeras and Prados [17, 18]. Lambertian reflectance is assumed.

The following processing is applied to each point of the image. First, the initial value for the depth \(Z_{default}\) is given using Eq. (1) as in [19].

where I represents the normalized image intensity and f is the focal length of the lens.

Next, the combination of gradient parameters which gives the minimum gradient is selected from the difference of depths for neighboring points. The depth, Z, is calculated from Eq. (2) and the process is repeated until the Z values converge. Here, (x, y) are the image coordinates, \(\varDelta t\) is the change in time, (m, n) is the minimum gradient for (x, y) directions, and \(Q=\frac{f}{\sqrt{x^{2}+y^{2}+f^{2}}}\) is the coefficient of the perspective projection.
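As a small illustration of the perspective geometry used here, the following Python sketch evaluates the coefficient Q for an image point and back-projects that point, with a given depth Z, to world coordinates. The function names, the numerical values, and the relation r = Z/Q for the distance from the origin are our own reading of a pinhole camera placed at the origin; they are not taken from the VBW implementation.

```python
import numpy as np

def perspective_coefficient(x, y, f):
    """Q = f / sqrt(x^2 + y^2 + f^2) for image coordinates (x, y)."""
    return f / np.sqrt(x**2 + y**2 + f**2)

def back_project(x, y, Z, f):
    """World point (X, Y, Z) seen at image point (x, y) with depth Z (pinhole at origin)."""
    return np.array([x * Z / f, y * Z / f, Z])

# Hypothetical values chosen only to give round numbers.
f, x, y, Z = 12.0, 3.0, 4.0, 12.0
Q = perspective_coefficient(x, y, f)
P = back_project(x, y, Z, f)
r = np.linalg.norm(P)  # distance from the origin; equals Z / Q for this geometry
```

Under these assumptions, Q converts between the depth Z along the optical axis and the distance r along the viewing ray.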

Here, it is noted that the shape obtained with the VBW model is given in a relative scale, not an absolute one. The obtained result gives smaller values for surface gradients than the actual gradient values.

3 Proposed Approach

3.1 Estimating Reflectance Parameter

When uniform Lambertian reflectance and point light source are assumed, image intensity depends on the dot product of surface normal vector and the light source direction vector subject to the inverse square law for illuminance.

Measured intensity at each surface point is determined by Eq. (3).

$$\begin{aligned} E = C\frac{(\mathbf{s}\cdot \mathbf{n})}{r^{2}} \end{aligned}$$(3)

where E is image intensity, C is the reflectance parameter, \(\mathbf{s}\) is a unit vector towards the point light source, \(\mathbf{n}\) is a unit surface normal vector, and r is the distance between the light source and surface point.
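The reflectance model described above (dot product of the unit vectors, scaled by a reflectance parameter C and attenuated by the inverse square of r) can be sketched in Python as follows. This is a minimal sketch under the assumption that the light source sits at the origin; the function name and the sample values are hypothetical.

```python
import numpy as np

def lambertian_intensity(point, normal, C):
    """Intensity E = C * (s . n) / r^2 for a point light source at the origin."""
    point = np.asarray(point, dtype=float)
    normal = np.asarray(normal, dtype=float)
    r = np.linalg.norm(point)              # distance from light source to surface point
    s = -point / r                         # unit vector from the surface towards the light
    n = normal / np.linalg.norm(normal)    # unit surface normal
    return C * max(float(s @ n), 0.0) / r**2

# A surface point 10 units away, once facing the light and once tilted by 45 degrees.
E_front = lambertian_intensity([0, 0, 10], [0, 0, -1], C=854.0)
E_tilted = lambertian_intensity([0, 0, 10], [1, 0, -1], C=854.0)
```

The facing point receives the largest intensity for its distance, which is the property exploited later at the local brightest point.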

The proposed approach estimates the value of the reflectance parameter, C, using two images acquired with a small camera movement in the depth direction. It is assumed that C is constant for all points on the Lambertian surface. Regarding geometry, it is assumed that both the point light source and the optical center of lens are co-located at the origin of the (X, Y, Z) world coordinate system. Perspective projection is assumed.

Actual endoscope images contain color textures and specular reflectance. Using the approach proposed in [20], the original input endoscope image is converted into one that satisfies the assumptions of a uniform Lambertian gray scale image.

The procedure to estimate C is as follows.

- Step 1. If the value of C is given, depth Z is uniquely calculated and determined at the point with the local maximum intensity [21]. At this point, the surface normal vector and the light source direction vector are aligned and produce the local maximum intensity for that value of C.

- Step 2. For camera movement, \(\varDelta Z\), in the Z direction, two images are used and the difference in Z, \(Z_{diff}\), at the local maximum intensity points in each image is calculated. Here the camera movement, \(\varDelta Z\), is assumed to be known.

- Step 3. Let f(C) be the error between \(\varDelta Z\) and \(Z_{diff}\). f(C) represents an objective function to be minimized to estimate the correct value of C. That is, the value of C is the one that minimizes f(C) given in Eq. (4).

$$\begin{aligned} f(C) = (\varDelta Z - Z_{diff}(x,y))^2 \end{aligned}$$(4)
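Steps 1 to 3 can be sketched as a simple grid search over candidate values of C. The sketch assumes that, at the local brightest point, the normal and light direction are aligned so that E = C / r^2, and that depth follows from Z = Q r with Q the perspective coefficient. The function names, the candidate range, and the convention that the second image is the farther one are assumptions made for illustration.

```python
import numpy as np

def depth_at_brightest_point(E, x, y, f, C):
    """Depth Z at a local intensity maximum, assuming s . n = 1 so that E = C / r^2."""
    Q = f / np.sqrt(x**2 + y**2 + f**2)
    r = np.sqrt(C / E)          # distance from the light source / lens center
    return Q * r                # Z = Q * r under perspective projection

def estimate_C(E1, xy1, E2, xy2, f, delta_Z, candidates):
    """Grid search for the C that minimizes f(C) = (delta_Z - Z_diff)^2."""
    best_C, best_cost = None, np.inf
    for C in candidates:
        Z1 = depth_at_brightest_point(E1, xy1[0], xy1[1], f, C)
        Z2 = depth_at_brightest_point(E2, xy2[0], xy2[1], f, C)
        cost = (delta_Z - (Z2 - Z1)) ** 2   # image 2 is assumed to be the farther one
        if cost < best_cost:
            best_C, best_cost = C, cost
    return best_C, best_cost

# Synthetic check: true C = 120, brightest point on the optical axis at Z = 12 and Z = 15.
C_true, f = 120.0, 10.0
E1, E2 = C_true / 12.0**2, C_true / 15.0**2
C_hat, cost = estimate_C(E1, (0.0, 0.0), E2, (0.0, 0.0), f, delta_Z=3.0,
                         candidates=np.arange(1.0, 301.0))
```

A grid search is used here because it mirrors plotting f(C) over a range of C; any one-dimensional minimizer could be substituted.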

3.2 NN Learning for Modification of Surface Gradient

The size and shape recovered by the VBW model are relative. VBW gives smaller values for surface gradient and depth compared to the true values. Here, modification of surface gradient and improvement of the recovered shape are considered. First, the surface gradient at each point is modified by a neural network. Then the depth is modified using the estimated reflectance parameter, C, and the modified surface gradient, \((p,q)=(\frac{\partial Z}{\partial X}, \frac{\partial Z}{\partial Y})\). A Radial Basis Function Neural Network (RBF-NN) [12] is used to learn the modification of the surface gradient obtained by the VBW model.

Using the estimated C, a sphere image is synthesized with uniform Lambertian reflectance.

The VBW model is applied to this synthesized sphere. Surface gradients, (p, q), are obtained using forward difference of the Z values obtained from the VBW model.
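The forward-difference step can be sketched as below. For simplicity the sketch assumes the depth map is sampled on a regular grid with known spacing in the world X and Y directions; under perspective projection the actual spacing depends on depth, so this is an approximation, and the function name is hypothetical.

```python
import numpy as np

def forward_difference_gradients(Z, dx, dy):
    """Return (p, q) = (dZ/dX, dZ/dY) by forward differences on a regular grid.

    Z[i, j] is the depth at row i (Y direction) and column j (X direction).
    The last column of p and the last row of q are copied from their neighbors.
    """
    p = np.empty_like(Z, dtype=float)
    q = np.empty_like(Z, dtype=float)
    p[:, :-1] = (Z[:, 1:] - Z[:, :-1]) / dx
    p[:, -1] = p[:, -2]
    q[:-1, :] = (Z[1:, :] - Z[:-1, :]) / dy
    q[-1, :] = q[-2, :]
    return p, q

# Plane Z = 2X + 3Y + 10 sampled with spacing 0.5: the gradients should be (2, 3).
X, Y = np.meshgrid(np.arange(0, 4, 0.5), np.arange(0, 3, 0.5))
Z = 2.0 * X + 3.0 * Y + 10.0
p, q = forward_difference_gradients(Z, dx=0.5, dy=0.5)
```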

The estimated gradients, (p, q), and the corresponding true gradients for the synthesized sphere, (p, q), are given respectively as input vectors and output vectors to the RBF-NN. NN learning is applied.

After NN learning, the RBF-NN can be used to modify the recovered shape for other images.

Two endoscope images, (a) and (b), and the images assuming Lambertian reflectance, (c) and (d), generated using [20], are shown in Fig. 1.

An example of the objective function, f(C), is shown in Fig. 2.

The synthesized sphere image used in NN learning is shown in Fig. 3(a). Surface gradients obtained by the VBW model are shown in Fig. 3(b) and (c) and the corresponding true gradients for this sphere are shown in Fig. 3(d) and (e). Points are sampled from the sphere as input for NN learning, except for points with large values of (p, q). The procedure for NN learning is shown in Fig. 4.
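To make the learning step concrete, the following Python sketch trains a small Gaussian RBF network that maps estimated gradients to true gradients. It is not the implementation used in the experiments: it places one basis function on every training sample and solves for the output weights directly, and the class name, spread value, ridge term, and the toy "40 % too small" training data are all assumptions made for illustration.

```python
import numpy as np

class RBFNetwork:
    """Gaussian RBF network with one basis function per training sample."""

    def __init__(self, spread, ridge=1e-8):
        self.spread = spread      # width of each Gaussian basis function
        self.ridge = ridge        # small regularizer for numerical stability

    def _design(self, X):
        # Squared distances between every input row and every center.
        d2 = ((X[:, None, :] - self.centers[None, :, :]) ** 2).sum(axis=-1)
        return np.exp(-d2 / (2.0 * self.spread ** 2))

    def fit(self, X, Y):
        self.centers = np.asarray(X, dtype=float)
        Phi = self._design(self.centers)
        A = Phi + self.ridge * np.eye(len(self.centers))
        self.weights = np.linalg.solve(A, np.asarray(Y, dtype=float))
        return self

    def predict(self, X):
        return self._design(np.asarray(X, dtype=float)) @ self.weights

# Hypothetical training data: true gradients on a grid, and "VBW-like"
# estimates that are uniformly 40 % too small (a made-up stand-in).
g = np.linspace(-1.0, 1.0, 9)
P, Qg = np.meshgrid(g, g)
true_pq = np.column_stack([P.ravel(), Qg.ravel()])
vbw_pq = 0.6 * true_pq

net = RBFNetwork(spread=0.15).fit(vbw_pq, true_pq)
corrected = net.predict(np.array([[0.2, -0.1]]))  # toy mapping's true value: (1/3, -1/6)
```

Because the network only maps a gradient pair to a corrected gradient pair, the same trained object can be applied point by point to any other surface.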

3.3 NN Generalization and Modification of Z

The trained RBF-NN allows generalization to other test objects. Modification of estimated gradients, (p, q), is applied to the test object and its depth, Z, is calculated and updated using the modified gradients, (p, q).

In the case of endoscope images, preprocessing is used to remove specularities and to generate a uniform Lambertian image based on [20].

Next, the VBW model is applied to this Lambertian image and the gradients, (p, q), are estimated from the obtained Z distribution.

The estimated gradients, (p, q), are input to the NN and modified estimates of (p, q) are obtained as output from the NN.

Recall that the reflectance parameter, C, is estimated from f(C), based on two images obtained by small movement of endoscope in the Z direction.

The depth, Z, is calculated and updated by Eq. (5) using the modified gradients, (p, q), and the estimated C, where Eq. (5) also is the original equation developed in [8].

Again, \((p,q)=(\frac{\partial Z}{\partial X}, \frac{\partial Z}{\partial Y})\), E represents image intensity, f represents the focal length of the lens and \(V=\frac{f^2}{(x^{2}+y^{2}+f^{2})^{\frac{3}{2}}}\).

A flow diagram of the processing described above is shown in Fig. 5.

3.4 Regression Analysis for Shape Modification

Here, modification of the surface gradient and improvement of the recovered shape are considered using another approach, for comparison. The surface gradient at each point is modified by regression analysis. The depth is then modified using the estimated reflectance parameter, C, and the surface gradient parameters modified by regression analysis. The regression analysis estimates the linear coefficients of a regression model and modifies each of the surface gradient parameters recovered by the VBW model. Using the estimated C, a sphere image is synthesized with uniform Lambertian reflectance. The VBW model is applied to this synthesized sphere. The sphere is treated as a special case of an ellipsoid. The height z of the ellipsoid is differentiated with respect to x and y, respectively, to derive the equations for p and q. Using n points on the sphere, p and q are calculated from these equations. These ideal values are used as the true p and q.

The estimated gradients, (p,q), and the corresponding true gradients for the synthesized sphere, (p,q), are given respectively as input vectors and output vectors to the regression analysis. Assuming a 3rd-order polynomial for the relation between the original gradient parameter and the corresponding true gradient parameter, the regression model becomes Eq. (6), and the four coefficients a to d are obtained by linear least squares using Eqs. (7) to (8) with n sample points on the Lambertian sphere.

Here x denotes either gradient parameter, p or q, and y denotes the corresponding modified gradient parameter, p or q. That is, the mapping of p by VBW to the true p and that of q by VBW to the true q are considered.

Substituting the coefficients a to d into Eq. (6) gives the modified gradient parameters p or q. The gradient parameters p and q are obtained by taking numerical differences of the z recovered by VBW. For a real endoscope image, these parameters are input to this regression model for modification. In the case of endoscope images, preprocessing is used to remove specular points and to generate a Lambertian image based on [20]. After p and q are modified, z is updated with Eq. (5) using the modified p and q.
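The regression-based modification can be sketched with a cubic least-squares fit. The true gradient along one row of a sphere is taken from the derivative of z = z0 - sqrt(R^2 - x^2 - y^2); the "VBW-like" input is an invented stand-in whose inverse is exactly cubic, so that the fit can be checked. Function names and the stand-in relation are assumptions, and `np.polyfit` returns coefficients from the highest power down, which may differ from the ordering of a to d in Eq. (6).

```python
import numpy as np

def fit_gradient_correction(estimated, true, order=3):
    """Least-squares polynomial mapping an estimated gradient to the true one.

    Returns coefficients from highest to lowest power (np.polyfit convention).
    """
    return np.polyfit(estimated, true, order)

def apply_gradient_correction(coeffs, estimated):
    """Evaluate the fitted polynomial at the estimated gradient values."""
    return np.polyval(coeffs, estimated)

# True gradient p of a sphere z = z0 - sqrt(R^2 - x^2 - y^2) along the row y = 0.
R = 5.0
x = np.linspace(-3.0, 3.0, 61)
p_true = x / np.sqrt(R**2 - x**2)

# Hypothetical "VBW-like" underestimate; the cube-root form is invented so
# that the inverse mapping is exactly cubic and the fit can be checked.
p_vbw = 0.5 * np.cbrt(p_true)

coeffs = fit_gradient_correction(p_vbw, p_true, order=3)
p_fixed = apply_gradient_correction(coeffs, p_vbw)
```

The same two functions would be applied separately to p and to q, since the mappings for the two gradient components are fitted independently.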

4 Experimental Results

4.1 NN Learning

A sphere was synthesized with radius 5 mm and with center located at (0, 0, 15). The focal length of the lens was 10 mm. The image size was 9 mm \(\times \) 9 mm with pixel size 256 \(\times \) 256 pixels.
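A synthesized Lambertian sphere image of this kind can be produced by intersecting each viewing ray with the sphere and evaluating the point-source Lambertian model at the hit point. The sketch below assumes the light source and lens center are both at the origin and uses the value C = 854 reported later in this section; the function name and the use of pixel centers spanning the full image width are assumptions.

```python
import numpy as np

def synthesize_lambertian_sphere(C, f, radius, center_z, width, n):
    """Render E = C (s . n) / r^2 for a sphere centered at (0, 0, center_z).

    The light source and lens center are both at the origin; `width` is the
    image side length and `n` the number of pixels per side.
    Returns (E, Z) with E = 0 and Z = nan where no surface is visible.
    """
    coords = np.linspace(-width / 2, width / 2, n)
    x, y = np.meshgrid(coords, coords)
    norm = np.sqrt(x**2 + y**2 + f**2)
    d = np.stack([x / norm, y / norm, f / norm], axis=-1)   # unit viewing rays
    c = np.array([0.0, 0.0, center_z])

    dc = d @ c                                              # d . c per pixel
    disc = dc**2 - (c @ c - radius**2)                      # ray-sphere discriminant
    hit = disc >= 0
    t = np.where(hit, dc - np.sqrt(np.where(hit, disc, 0.0)), np.nan)  # nearest hit

    P = d * t[..., None]                                    # surface points
    normal = (P - c) / radius                               # outward unit normals
    cos_theta = np.clip(np.sum(-d * normal, axis=-1), 0.0, None)  # s = -d
    E = np.where(hit, C * cos_theta / t**2, 0.0)
    return E, P[..., 2]

E, Z = synthesize_lambertian_sphere(C=854.0, f=10.0, radius=5.0,
                                    center_z=15.0, width=9.0, n=256)
```

The returned depth map gives the true Z of the sphere, from which the true gradients used as training targets can be derived.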

The VBW model was used to recover the shape of this sphere. The resulting gradient estimates, (p, q), are shown in Fig. 3(b) and (c), respectively.

These estimated gradients, (p, q), are used as NN input and the corresponding true gradients, (p, q), output from the NN, are shown in Fig. 3(d) and (e). Learning was done under the conditions: error goal 1.0e-1, spread constant of the radial basis function 0.00001, and maximum number of learning epochs 500. Learning was complete by about 400 epochs with stable status.

The results of learning are shown in Fig. 6.

The reflectance parameter, C, was estimated as 854 from f(C). The difference in depth, Z, was 0.5 [mm] for the known camera movement.

As shown in Fig. 6, NN learning was complete at 428 epochs. The square error goal reached the specified value. Processing time for NN learning was around 30 s.

A sphere contains a wide variety of surface gradients, which is why it is used for NN learning. After the NN is trained on a sphere, it can be applied in the generalization process not only to spheres but also to objects of other shapes, including convex and concave surfaces. This is because the NN modifies the surface gradient at each point, and this modification does not depend on the shape of the target object.

4.2 Order Number in Polynomial with Regression Analysis

Other polynomial orders for the regression analysis were also investigated via simulation. The mean error in the height z was evaluated in each case by substituting the modified (p,q) into Eq. (5). The results for VBW and for regression analysis of 1st to 5th order are shown in Table 1, where the percentages are relative to the radius r = 5 mm of the sphere.

Table 1 shows that regression analysis with every polynomial order improves on the VBW result. The 1st- and 2nd-order regressions differed somewhat, but the 3rd-, 4th- and 5th-order regressions differed little. From these observations, 3rd-order regression analysis is used below for comparison with NN-based modification.

4.3 Computer Simulation

Computer simulation was performed for a second pair of synthesized images to confirm the performance of NN generalization. Synthesized cosine curved surfaces were used, one with center located at coordinates (0, 0, 12) and the other with center at (0, 0, 15). Common to both, the reflectance parameter, C, is 120, the focal length, f, is 10 mm and the waveform cycle is 4 mm and the \(\pm \) amplitude is 1 mm. Image size is 5 mm \(\times \) 5 mm and pixel size is 256 \(\times \) 256 pixels.

The synthesized image whose center is located at (0, 0, 12) is shown in Fig. 7(a) and the one with center located at (0, 0, 15) is shown in Fig. 7(b).

The reflectance parameter, C, was estimated according to the proposed method. Using the learned NN, the gradients, (p, q), obtained from the VBW model were input and generalized. The gradients, (p, q), were modified and the depths, Z, were updated using Eq. (5).

The graph of the objective function, f(C), is shown in Fig. 8 and the true depth is shown in Fig. 9(a). The estimated C was 119 (compared to the true value of 120). The estimated \(Z_{diff}\) was 2.9953 (compared to the true value of 3).

The result recovered by VBW for Fig. 7(a) is shown in Fig. 9(b). The modified values of depth, using the NN and Eq. (5), are shown in Fig. 9(c) and those by the regression analysis and Eq. (5), are shown in Fig. 9(d).

Table 2 gives the mean errors in surface gradient and depth estimation. The percentages given in the Z column represent the error relative to the amplitude of maximum\(-\)minimum depth (=4 mm) of the cosine synthesized function. In Table 2, the original VBW results have a mean error of around 3.8 degrees for the surface gradient while the proposed approach reduced the mean error to about 0.1 degree. Depth estimation also improved to a mean error of 8.3 % from 43.1 %. NN generalization improved estimation of shape for an object with different size and shape. It took 9 s to recover the shape while it took 61 s for NN learning with 428 learning epochs, that is, it took 70 s in total. Although regression analysis also improves the depth Z, the Z modified by the NN has a smaller mean error than the Z modified by regression analysis.
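Error measures of the kind reported in the tables can be sketched as follows. The text does not state exactly how the error in degrees is computed; the sketch assumes it is the mean angle between the surface normals built from the estimated and true gradients, and that the depth error is the mean absolute difference expressed as a percentage of a reference depth range. Both function names are hypothetical.

```python
import numpy as np

def mean_angular_error_deg(p_est, q_est, p_true, q_true):
    """Mean angle in degrees between normals built from (p, q, -1)."""
    def normals(p, q):
        n = np.stack([p, q, -np.ones_like(p)], axis=-1)
        return n / np.linalg.norm(n, axis=-1, keepdims=True)
    cos = np.sum(normals(p_est, q_est) * normals(p_true, q_true), axis=-1)
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))).mean())

def mean_depth_error_percent(Z_est, Z_true, depth_range):
    """Mean absolute depth error as a percentage of a reference depth range."""
    return float(100.0 * np.mean(np.abs(Z_est - Z_true)) / depth_range)

# Toy check: a 45-degree tilt against a flat surface, and a 0.4 mm offset on a 4 mm range.
ang = mean_angular_error_deg(np.array([1.0]), np.array([0.0]),
                             np.array([0.0]), np.array([0.0]))
dep = mean_depth_error_percent(np.full(4, 12.4), np.full(4, 12.0), depth_range=4.0)
```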

Another experiment was performed under the following assumptions. The reflectance factor, C, is 590, the focal length, f, is 10 mm and the object is a sphere with radius 5 mm. The centers for two positions of the sphere were set at (0, 0, 15) and (0, 0, 17) respectively, as shown in Fig. 10. The image size was 9 mm \(\times \) 9 mm with pixel size 360 \(\times \) 360 pixels. Here, 4 % Gaussian noise (mean 0, variance 0.02, standard deviation 0.14142) is added to each of the two input images. The graph of the objective function, f(C), is shown in Fig. 11 and the true depth is shown in Fig. 12(a). The result recovered by VBW is shown in Fig. 12(b). The improved result is shown in Fig. 12(c). The mean errors in surface gradient and depth are shown in Table 3. Evaluations for 6 % (mean 0, variance 0.03, standard deviation 0.17320) and 10 % (mean 0, variance 0.03, standard deviation 0.17320) Gaussian noise are included in Table 4, as well.
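Adding zero-mean Gaussian noise with a given variance can be sketched as below, using the 4 % case (variance 0.02). The sketch assumes image intensities are normalized to [0, 1] and clipped after the noise is added; neither detail is stated in the text, and the function name, seed, and mid-gray test image are hypothetical.

```python
import numpy as np

def add_gaussian_noise(image, variance, rng):
    """Add zero-mean Gaussian noise to an image scaled to [0, 1], then clip."""
    noise = rng.normal(loc=0.0, scale=np.sqrt(variance), size=image.shape)
    return np.clip(image + noise, 0.0, 1.0)

rng = np.random.default_rng(0)
clean = np.full((360, 360), 0.5)            # hypothetical mid-gray test image
noisy = add_gaussian_noise(clean, variance=0.02, rng=rng)
sigma = float(np.sqrt(0.02))                # standard deviation for variance 0.02
```

The standard deviation is simply the square root of the variance, which is how the paired values quoted for each noise level are related.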

Learning epochs for Gaussian noise 4 %, 6 % and 10 % were 212, 212 and 210. Processing time was around 40 s for every case of different Gaussian noises.

The reflectance parameter, C, estimated from Fig. 11, was 591. Improvement in the estimated results is shown in Fig. 12(a), (b) and (c) and Table 3.

In all three cases, Gaussian noise of 4 %, 6 % and 10 %, the proposed approach reduced the mean error in Z significantly compared to the original VBW model. Table 3 also suggests that modification by the NN gives better performance than modification by regression analysis.

This suggests that generalization using the RBF-NN is robust to noise and is applicable to real imaging situations, including endoscopy. The VBW model gives smaller errors when noise is present, but this arises because its recovered shape is in a relative scale and is sensitive to the change in intensity at each point caused by the Gaussian noise, whereas the proposed approach gives a much better shape with absolute size. Although the error increases slightly with the level of Gaussian noise, the approach remains robust and gives stable results.

4.4 Real Image Experiments

Two endoscope images obtained with camera movement in the Z direction are used in the experiments.

The reflectance parameter, C, was estimated and an RBF-NN was trained using a sphere synthesized with the estimated C. VBW was applied to one of the images, which was first converted to a uniform Lambertian image.

Surface gradients, (p, q), were modified with the NN, and then depth, Z, was calculated and updated at each image point. The focal length, f = 10 mm, the image size, 5 mm \(\times \) 5 mm, and the camera movement, \(\varDelta Z\) = 3 mm, were set to the same known values as in the computer simulation. The error goal was set to 0.1.

The two endoscope images are shown in Fig. 13(a) and (b). The generated Lambertian images are shown in Fig. 13(c) and (d), respectively.

The objective function, f(C), is shown in Fig. 14.

The result from the VBW model is shown in Fig. 15(a) and the modified result is shown in Fig. 15(b).

The estimated value of the reflectance parameter, C, was 1141. The difference in depth, Z, at the local maximum point was 1 [mm] for the camera movement \(Z_{diff}\) between two images. In Fig. 13(c) and (d), specularities were removed compared to Fig. 13(a) and (b). The converted images are gray scale with the appearance of uniform reflectance. Figure 15(b) gives a larger depth range than Fig. 15(a). This suggests depth estimation is improved. The size of the polyp was 1 cm and the processing time for shape modification was 9 s. As it took 117 s for NN learning with 540 epochs, the total processing time was 126 s.

Although quantitative evaluation is difficult, medical doctors with experience in endoscopy qualitatively evaluated the result to confirm its correctness. Different values of the reflectance parameter, C, were estimated in different experimental environments. The absolute size of a polyp is estimated based on the estimated value of C. Accurate values of C lead to accurate estimation of the size of the polyp. The estimated polyp sizes were seen as reasonable by the medical doctor. This qualitatively confirms that the proposed approach is effective in real endoscopy.

Another experiment was done for the endoscope images shown in Fig. 16(a) and (b). The generated gray scale Lambertian images are shown in Fig. 16(c) and (d), respectively. Here the focal length is 10 mm, image size is 5 mm \(\times \) 5 mm, pixel size is 256 \(\times \) 256 and \(\varDelta Z\) was set to be 10 mm.

The graph of f(C) is shown in Fig. 17. The VBW result for Fig. 16(d) is shown in Fig. 18(a), while that for the proposed approach is shown in Fig. 18(b).

C was estimated as 4108 from Fig. 17. Figure 18(b) shows greater depth amplitude compared to Fig. 18(a).

The estimated size of the polyp was about 5 mm. This corresponds to the convex and concave shape estimation based on a stain solution. It took 60 s for NN learning with 420 epochs and the total processing time was 70 s.

4 % Gaussian noise was added to a real image and the corresponding results are shown in Fig. 19(a). Shape was estimated from the generated Lambertian image, shown in Fig. 19(b). The corresponding results are shown in Fig. 19(c) and Fig. 19(d). Here the focal length is 10 mm, image size is 5 mm \(\times \) 5 mm, pixel size is 256 \(\times \) 256 and \(\varDelta Z\) was 3 mm.

In this paper, it is assumed that movement of the endoscope is constrained to be in the depth, Z, direction only. Here, it is seen that the result is acceptable even when camera movement is in another direction, provided rotation is minimal and the overall camera movement is still small.

The reflectance parameter, C, was estimated as 13244 and Fig. 19(b) shows the final estimated shape. The total processing time was 90 s, including 80 s for NN learning with 480 epochs. The estimated size of this polyp was about 1 cm. Although Gaussian noise was added, shape recovery remained robust.

5 Conclusion

This paper proposed a new approach to improve the accuracy of absolute size and shape determination of polyps observed in endoscope images.

An RBF-NN was used to modify surface gradient estimates based on training with data from a synthesized sphere. The VBW model was used to estimate a baseline shape. However, VBW gives a relative shape and cannot recover shape with absolute size. This paper therefore proposed modification of the gradients with the RBF-NN to recover the size and shape of the object. After the gradient parameters are modified, the depth map is updated using the reflectance parameter, C. The paper also proposed that this reflectance parameter, C, can be estimated under the assumption that two images are acquired via small camera movement in the depth, Z, direction. The RBF-NN is non-parametric in that no parametric functional form is assumed for gradient modification. The modification performance of the RBF-NN was compared with that of regression analysis, and the NN was shown to give a better modification of the original VBW result. The approach was evaluated both in computer simulation and with real endoscope images. Results confirm that the approach improves the accuracy of recovered shape to within error ranges that are practical for polyp analysis in endoscopy.

References

Nakatani, H., Abe, K., Miyakawa, A., Terakawa, S.: Three-dimensional measurement endoscope system with virtual rulers. J. Biomed. Opt. 12(5), 051803 (2007)

Mourgues, F., Devernay, F., Coste-Maniere, E.: 3D reconstruction of the operating field for image overlay in 3D-endoscopic surgery. In: Proceedings of the IEEE and ACM International Symposium on Augmented Reality (ISAR), pp. 191–192 (2001)

Thormaehlen, T., Broszio, H., Meier, P.N.: Three-dimensional endoscopy. In: Falk Symposium, pp. 199–212 (2001)

Horn, B.K.P.: Obtaining Shape from Shading Information. MIT Press, Cambridge (1989)

Sethian, J.A.: A fast marching level set method for monotonically advancing fronts. Proc. Nat. Acad. Sci. U.S.A. (PNAS U.S.) 93(4), 1591–1593 (1996)

Kimmel, R., Sethian, J.A.: Optimal algorithm for shape from shading and path planning. J. Math. Imaging Vis. (JMIV) 14(3), 237–244 (2001)

Yuen, S.Y., Tsui, Y.Y., Chow, C.K.: A fast marching formulation of perspective shape from shading under frontal illumination. Pattern Recogn. Lett. 28(7), 806–824 (2007)

Iwahori, Y., Iwai, K., Woodham, R.J., Kawanaka, H., Fukui, S., Kasugai, K.: Extending fast marching method under point light source illumination and perspective projection. In: ICPR 2010, pp. 1650–1653 (2010)

Ding, Y., Iwahori, Y., Nakamura, T., He, L., Woodham, R.J., Itoh, H.: Shape recovery of color textured object using fast marching method via self-calibration. In: EUVIP 2010, pp. 92–96 (2010)

Neog, D.R., Iwahori, Y., Bhuyan, M.K., Woodham, R.J., Kasugai, K.: Shape from an endoscope image using extended fast marching method. In: Proceedings of IICAI 2011, pp. 1006–1015 (2011)

Iwahori, Y., Shibata, K., Kawanaka, H., Funahashi, K., Woodham, R.J., Adachi, Y.: Shape from SEM image using fast marching method and intensity modification by neural network. In: Tweedale, J.W., Jain, L.C. (eds.) Recent Advances in Knowledge-based Paradigms and Applications. AISC, vol. 234, pp. 73–86. Springer, Heidelberg (2014)

Ding, Y., Iwahori, Y., Nakamura, T., Woodham, R.J., He, L., Itoh, H.: Self-calibration and image rendering Using RBF neural network. In: Velásquez, J.D., Ríos, S.A., Howlett, R.J., Jain, L.C. (eds.) KES 2009, Part II. LNCS, vol. 5712, pp. 705–712. Springer, Heidelberg (2009)

Iwahori, Y., Sugie, H., Ishii, N.: Reconstructing shape from shading images under point light source illumination. In: ICPR 1990, vol. 1, pp. 83–87 (1990)

Iwahori, Y., Woodham, R.J., Ozaki, M., Tanaka, H., Ishii, N.: Neural network based photometric stereo with a nearby rotational moving light source. IEICE Trans. Info. and Syst. E80–D(9), 948–957 (1997)

Vogel, O., Breuß, M., Weickert, J.: A direct numerical approach to perspective shape-from-shading. In: Vision Modeling and Visualization (VMV), pp. 91–100 (2007)

Benton, S.H.: The Hamilton-Jacobi Equation: A Global Approach, vol. 131. Academic Press, New York (1977)

Prados, E., Faugeras, O.: Unifying approaches and removing unrealistic assumptions in shape from shading: mathematics can help. In: Pajdla, T., Matas, J.G. (eds.) ECCV 2004. LNCS, vol. 3024, pp. 141–154. Springer, Heidelberg (2004)

Prados, E., Faugeras, O.: A mathematical and algorithmic study of the Lambertian SFS problem for orthographic and pinhole cameras. Technical report 5005, INRIA 2003 (2003)

Prados, E., Faugeras, O.: Shape from shading: a well-posed problem? In: CVPR 2005, pp. 870–877 (2005)

Shimasaki, Y., Iwahori, Y., Neog, D.R., Woodham, R.J., Bhuyan, M.K.: Generating Lambertian image with uniform reflectance for endoscope image. In: IWAIT 2013, 1C–2 (Computer Vision 1), pp. 60–65 (2013)

Tatematsu, K., Iwahori, Y., Nakamura, T., Fukui, S., Woodham, R.J., Kasugai, K.: Shape from endoscope image based on photometric and geometric constraints. KES 2013 Procedia Comput. Sci. 22, 1285–1293 (2013). Elsevier

Acknowledgement

Iwahori’s research is supported by Japan Society for the Promotion of Science (JSPS) Grant-in-Aid for Scientific Research (C) (26330210) and Chubu University Grant. Woodham’s research is supported by the Natural Sciences and Engineering Research Council (NSERC). The authors would like to thank Kodai Inaba for his experimental help and the related members for useful discussions on this paper.

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Iwahori, Y., Tsuda, S., Woodham, R.J., Bhuyan, M.K., Kasugai, K. (2015). Modification of Polyp Size and Shape from Two Endoscope Images Using RBF Neural Network. In: Fred, A., De Marsico, M., Figueiredo, M. (eds) Pattern Recognition: Applications and Methods. ICPRAM 2015. Lecture Notes in Computer Science, vol 9493. Springer, Cham. https://doi.org/10.1007/978-3-319-27677-9_15

DOI: https://doi.org/10.1007/978-3-319-27677-9_15

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-27676-2

Online ISBN: 978-3-319-27677-9

eBook Packages: Computer Science, Computer Science (R0)