Abstract

This paper describes the LuminUs - a device that we designed in order to explore how new technologies could influence the interpersonal aspects of co-present musical collaborations. The LuminUs uses eye-tracking headsets and small wireless accelerometers to measure the gaze and body motion of each musician. A small light display then provides visual feedback to each musician, based either on the gaze or the body motion of their co-performer. We carried out an experiment with 15 pairs of music students in order to investigate how the LuminUs would influence their musical interactions. Preliminary results suggest that visual feedback provided by the LuminUs led to significantly increased glancing between the two musicians, whilst motion-based feedback appeared to lead to a decrease in body motion for both participants.

Keywords

- Musical interaction

- Computer-supported cooperative work

- Groupware

- Eye-tracking

- Social signals

- Non-verbal communication

1 Introduction

There has been much focus recently on the use of wearable sensors for lifestyle, sports and activity tracking. Our research concerns the use of wearable devices as tools for understanding and enhancing interactions between musicians during co-present performances. In a previous study we assessed the suitability of various sensors for providing continuous information on the affective and behavioural aspects of collaborative music making [1]. Following on from this work, we decided to design and test a device that would enable musicians to receive real-time sensor-based feedback on aspects of their collaborative interactions. We chose to focus specifically on two non-verbal interaction features that have important expressive and communicative roles in musical performance - eye gaze and body motion.

Studies have shown that gaze has a variety of functions in non-verbal communication, including the expression of emotional and interpersonal information such as liking, attentiveness, competence, attraction, and dominance [2, 3]. It may also be used as a means of directing attention towards shared references and surveying activity in the external environment [4]. Schutz notes that visual co-presence during collaborative music making allows each performer to share “in vivid present the Other’s stream of consciousness in immediacy” [5, p. 176]. Despite its apparent significance, surprisingly few studies have been conducted on the use of gaze during collaborative music making. Davidson and Good [6] observed that members of a string quartet used “conversations with the eyes” to convey important information while playing. A study with a string duet found that visual contact had a positive influence on timing synchrony between musicians for certain types of music [7].

Eye-tracking technology makes it possible to automatically track where someone is looking. Existing studies have predominantly investigated how eye-tracking might be used to support and evaluate the success of computer-supported collaborative work [8, 9]. Music-related studies have generally focused on the potential for eye-tracking to be used as a control medium (e.g. selecting notes by glancing at specific markers - for a review see [10]). We are not aware of any studies that have used eye-tracking to investigate or support the interpersonal and affective functions of gaze during collaborative musical interactions.

As an intrinsic feature of non-verbal communication, body motions are closely tied to gestural [11] and emotional expression [2]. Humans are able to successfully identify emotions from simple point-light representations of body motion [12, 13]. More specifically, studies have found evidence for a link between measures of body motion activity and the arousal/activation aspects of emotion [14, 15]. Body motion analysis has also been applied to the study of affect in musicians [16]. Motion measurements can be obtained using accelerometers worn at positions of interest. Many consumer electronic devices - such as smartphones, smart watches, and activity trackers - already contain accelerometers, from which data can be accessed using custom-built applications.

In this paper we describe the design of the LuminUs - a device that provides musicians with real-time visual feedback on either the gaze or body motion of their co-performers. We also report preliminary results from a study that investigated the impact that the LuminUs has on dyadic improvised performances.

2 The LuminUs

We designed the LuminUs with the aim of exploring how technology might be used to provide performing musicians with an increased awareness of each other, especially in situations where their mutual attention might be hindered by complex musical interfaces and physical obstructions. The LuminUs consists of a strip of 16 coloured LEDs that can be controlled individually to provide dynamic visual information (see Fig. 1(a)). The device is mounted on a flexible arm so that it can be positioned in front of the musician. Control of the lights is provided via USB connection to a computer. The design was chosen based upon three criteria for the way in which feedback should be presented to the musician: visual (as opposed to haptic or audio); minimal (so as not to be overly distracting); and dynamic (to represent time varying signals).

The LuminUs has two modes of operation - motion feedback and gaze feedback. In the motion feedback mode we measure the body movements of each musician using small wireless accelerometers worn around the waist. These movements are then processed and displayed on the LuminUs. The greater the movement, the more lights are illuminated - with the colours of the lights ranging from green (low) to red (high). In the gaze feedback mode we use eye-tracking glasses to detect when one musician is glancing towards the other. The LuminUs then visually notifies the musician who is being glanced at. In this case, more lights become illuminated as the duration of the glance increases. For gaze feedback the colours of the lights range from blue (short) to purple (long).
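The mapping from a measured signal to the light strip can be illustrated with a minimal sketch. The paper specifies 16 LEDs, more lights for greater movement or longer glances, and the two colour ranges; everything else here is an assumption made for illustration, including the function names, the linear scaling, the per-LED colour gradient, and the `max_seconds` ceiling for glance duration.

```python
from typing import List, Tuple

NUM_LEDS = 16  # strip length stated in the paper

RGB = Tuple[int, int, int]

# Colour endpoints named in the paper; the exact RGB values are assumptions.
GREEN, RED = (0, 255, 0), (255, 0, 0)
BLUE, PURPLE = (0, 0, 255), (128, 0, 128)


def lerp_colour(start: RGB, end: RGB, t: float) -> RGB:
    """Linearly blend two RGB colours; t=0 gives start, t=1 gives end."""
    return tuple(round(s + (e - s) * t) for s, e in zip(start, end))


def led_frame(level: float, low: RGB, high: RGB) -> List[RGB]:
    """Return 16 RGB values for a normalised level in [0, 1].

    LEDs below the level are lit, with colour graded along the strip
    from `low` to `high`; the remaining LEDs are off.
    """
    level = min(max(level, 0.0), 1.0)  # clamp out-of-range input
    lit = round(level * NUM_LEDS)
    frame = []
    for i in range(NUM_LEDS):
        if i < lit:
            frame.append(lerp_colour(low, high, i / (NUM_LEDS - 1)))
        else:
            frame.append((0, 0, 0))
    return frame


def motion_frame(motion: float, max_motion: float) -> List[RGB]:
    """Motion feedback mode: green (low) to red (high)."""
    if max_motion <= 0:
        raise ValueError("max_motion must be positive")
    return led_frame(motion / max_motion, GREEN, RED)


def gaze_frame(glance_seconds: float, max_seconds: float = 3.0) -> List[RGB]:
    """Gaze feedback mode: blue (short glance) to purple (long glance)."""
    if max_seconds <= 0:
        raise ValueError("max_seconds must be positive")
    return led_frame(glance_seconds / max_seconds, BLUE, PURPLE)
```

Grading the colour by LED position is only one reading of the description; the whole strip could equally change colour as the level rises.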

For eye-tracking we used the Pupil headset, which is an open-source hardware and software project [17]. The headset tracks the movement of the right eye using a single infrared camera, and simultaneously captures the wearer’s point-of-view (POV) using a separate forward-facing video camera. Following a short calibration (\(\sim\)20 s), the software is able to map the gaze point of the wearer onto the live POV video. We modified the Pupil software so that it could recognise 2D markers within the POV images. Consequently, by placing markers on the headsets of each musician (see Fig. 1(b)), we are able to automatically register the moments when one person is glancing at the other.
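One way to turn per-frame gaze points and marker detections into glance events is sketched below. This is not the Pupil API or the authors' modification: the `Frame` structure, the bounding-box test, the `margin` tolerance, and the `min_duration` threshold are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# (x_min, y_min, x_max, y_max) in normalised POV image coordinates (assumed)
Box = Tuple[float, float, float, float]


@dataclass
class Frame:
    t: float                   # timestamp in seconds
    gaze: Tuple[float, float]  # gaze point mapped onto the POV image
    marker: Optional[Box]      # co-performer's marker, None if not detected


def gaze_on_marker(frame: Frame, margin: float = 0.05) -> bool:
    """True if the gaze point lies within the marker box plus a tolerance."""
    if frame.marker is None:
        return False
    x, y = frame.gaze
    x0, y0, x1, y1 = frame.marker
    return (x0 - margin) <= x <= (x1 + margin) and (y0 - margin) <= y <= (y1 + margin)


def find_glances(frames: List[Frame], min_duration: float = 0.1) -> List[Tuple[float, float]]:
    """Group consecutive on-marker frames into (start, end) glance intervals.

    Runs shorter than `min_duration` seconds are discarded as noise.
    """
    glances = []
    start = None
    last_hit = None
    for f in frames:
        if gaze_on_marker(f):
            if start is None:
                start = f.t
            last_hit = f.t
        elif start is not None:
            if last_hit - start >= min_duration:
                glances.append((start, last_hit))
            start = None
    # Close a glance that is still open when the recording ends.
    if start is not None and last_hit - start >= min_duration:
        glances.append((start, last_hit))
    return glances
```

The number of glances per session is then `len(find_glances(frames))`, and each interval's length could drive the duration-based light display in gaze feedback mode.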

3 The Experiment

Our hypotheses concerning the way in which the LuminUs would influence musical interactions were as follows:

- H1. Providing gaze feedback would increase the overall amount of gaze during collaborative interaction.

- H2. Providing motion feedback would stimulate increased awareness of the other participant, as indicated through more glancing at the other.

- H3. Providing motion feedback would increase the overall amount of motion during collaborative interaction.

To test these hypotheses we designed an experiment in which pairs of pianists and percussionists were asked to create live improvised accompaniments to a silent animation. This task was chosen for a number of reasons. Firstly, the improvisational nature of the task encouraged the musicians to work collaboratively and heightened the need for mutual awareness. Secondly, the animation meant that the musicians had more to attend to than simply their instrument and co-performer - as would often be the case in real-world performances. In addition to this, it provided a visual stimulus to guide and influence the improvisations created by each pair.

Four two-minute animations were short-listed for use in the study. We then asked a separate group of 24 musicians to watch and rate the animations based upon their suitability for improvised musical accompaniment. The animation that received the highest average rating was chosen for use in the study.

3.1 Method

15 percussionists and 15 pianists were recruited from music colleges in London. The participants comprised 7 females and 23 males aged between 18 and 38 (\(M = 22.7\), \(SD = 4.7\)). Their playing experience ranged from 3 to 33 years (\(M = 11.6\), \(SD = 5.6\)). The participants were assigned to percussionist-pianist pairs such that the individuals in each pair did not know one another.

There were seven experimental conditions, coded as: G-G, G-X, X-G, M-M, M-X, X-M, and X-X. The first letter represents the feedback that the LuminUs provided to the percussionist, whilst the second letter represents the feedback provided to the pianist - either gaze feedback (G), motion feedback (M), or no feedback (X).

The experiment was held in a performance space, with overhead stage lighting and black curtains surrounding the performance area. The setup consisted of a large screen, which showed the animation and provided instructions to the participants. The two participants were then positioned facing the screen, but angled slightly towards one another. The percussionist was provided with two electronic drum pads (snare and floor tom) and an electronic ride cymbal, which they played standing up. The pianist was provided with a 61-note keyboard and sustain pedal, which they also played standing up. Both instruments were connected to a computer via MIDI, and the audio was output through speakers positioned either side of the screen. Each participant’s LuminUs was positioned on a stand in front of them, such that it was just below their line of sight to the screen. The devices were positioned such that each participant could only see their own device, and the brightness of the lights was adjusted so that no reflections were visible. Figure 1(b) shows the experimental setup as seen from below the screen.

Upon arriving for the experiment the participants were given a couple of minutes to play the instruments together. This gave them a chance to make brief introductions and familiarise themselves with the instrumental setup. The participants were then fitted with the accelerometers and eye-tracking headsets, which were subsequently calibrated for each participant. All of the eye-tracking and motion data was time-stamped and saved. The experiment began with a warm-up session, where participants watched the animation twice without playing their instruments and then twice whilst playing along. Following this, the participants were given roughly one minute to discuss ideas. This was the last opportunity for them to verbally interact during the experiment. The remainder of the experiment consisted of seven improvisation sessions, each randomly assigned to one of the seven conditions discussed above. Prior to each session each participant’s LuminUs would show either green-red (motion feedback), blue-purple (gaze feedback), or no lights (no feedback) to indicate which type of feedback they would receive in that session. This was done so that neither participant would know what kind of feedback the other was receiving. In each session the participants had two attempts to play along to the animation, with a 10 s gap between. After each session the participants completed a short questionnaire to rate aspects of their experience and subjective opinions relating to that particular session. The analysis of our questionnaire results is out of the scope of this paper.
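The condition coding and the random assignment of conditions to sessions described above could be scripted along the following lines. This is a sketch only: the paper does not say how the randomisation was performed, and the function names and seeding scheme are hypothetical.

```python
import random
from typing import Dict, List

# Condition codes from the paper: first letter is the percussionist's
# feedback, second letter is the pianist's feedback.
CONDITIONS = ["G-G", "G-X", "X-G", "M-M", "M-X", "X-M", "X-X"]
FEEDBACK = {"G": "gaze", "M": "motion", "X": "none"}


def decode(condition: str) -> Dict[str, str]:
    """Expand a code such as 'G-X' into the feedback each musician receives."""
    percussionist, pianist = condition.split("-")
    return {"percussionist": FEEDBACK[percussionist], "pianist": FEEDBACK[pianist]}


def session_order(pair_id: int, base_seed: int = 2015) -> List[str]:
    """Return a reproducible random ordering of the seven conditions for one pair.

    The seeding scheme is an assumption; any per-pair seed would do.
    """
    rng = random.Random(base_seed * 1000 + pair_id)
    order = CONDITIONS.copy()
    rng.shuffle(order)
    return order
```

Seeding per pair keeps each ordering reproducible for later analysis while still varying the order across pairs.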

3.2 Results and Analyses

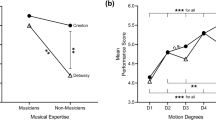

To test H1 and H2 we decided to look at the average number of glances that each participant made towards their co-performer within each condition. This information was extracted from our eye-tracking data. Figure 2(a) shows the mean and standard error for the number of glances averaged over participants within each condition. We see that the mean values for both the pianist and percussionist are lowest in the conditions where they are not receiving any feedback from the LuminUs. We can also see that, on average, the pianist tended to glance more than the percussionist.
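The per-condition summary described above (mean and standard error over participants) can be computed as in the following sketch. The data layout, a mapping from condition code to a list of per-participant glance counts, is an assumption made for illustration.

```python
import math
from typing import Dict, List, Tuple


def mean_and_sem(values: List[float]) -> Tuple[float, float]:
    """Return the mean and the standard error of the mean (sample SD / sqrt(n))."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values to estimate a standard error")
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / (n - 1)  # unbiased sample variance
    return mean, math.sqrt(variance / n)


def summarise(counts_by_condition: Dict[str, List[float]]) -> Dict[str, Tuple[float, float]]:
    """Apply mean_and_sem to each condition's list of per-participant counts."""
    return {cond: mean_and_sem(counts) for cond, counts in counts_by_condition.items()}
```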

To test H3 we extracted the mean body motion for each participant within each condition. The results are shown in Fig. 2(b). We can see that the percussionist moved more than the pianist, as might be expected. More interestingly, it appears that the body motion values tend to be lower when the participants are receiving motion feedback, compared to gaze feedback.
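The paper does not define how body motion was derived from the accelerometer signal. As one plausible measure, assumed here purely for illustration, the sketch below takes the mean absolute deviation of the acceleration magnitude from its session average, which removes the roughly constant contribution of gravity.

```python
import math
from typing import List, Tuple

Sample = Tuple[float, float, float]  # (ax, ay, az), assumed to be in units of g


def motion_activity(samples: List[Sample]) -> float:
    """Mean absolute deviation of acceleration magnitude from its own mean.

    A stationary sensor reads a near-constant magnitude (gravity), so this
    value is close to zero at rest and grows with movement.
    """
    if not samples:
        raise ValueError("no accelerometer samples")
    magnitudes = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in samples]
    baseline = sum(magnitudes) / len(magnitudes)
    return sum(abs(m - baseline) for m in magnitudes) / len(magnitudes)
```

Averaging this value over each session would give one number per participant per condition, comparable in form to the values plotted in Fig. 2(b).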

We used the Wilcoxon signed-rank test to perform statistical analyses of some of the relationships in Fig. 2(a) and (b). In particular, we looked at the differences between the mean values obtained in the 6 conditions where the LuminUs was active, relative to the equivalent means for the inactive LuminUs condition (X-X). The results are shown in Table 1. The results for the mean number of glances indicate that there were significant differences between all of the conditions and the X-X condition. This effect is greatest for condition 1, where both participants had gaze feedback (\(r = 0.7, p < .001\)). The second strongest effect is for condition 2, where only the percussionist had gaze feedback (\(r = 0.57, p = .002\)). The third strongest is for condition 4, where both participants had motion feedback (\(r = 0.52, p = .005\)).
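For readers unfamiliar with the test, the following is a minimal pure-Python sketch of a Wilcoxon signed-rank comparison between an active-feedback condition and X-X. It uses the normal approximation with tie correction and reports an effect size \(r = Z/\sqrt{N}\). The paper does not state how \(r\) was computed or which variant of the test was used; both the formula and the choice of \(N\) as the number of non-zero paired differences are assumptions.

```python
import math
from typing import List, Tuple


def wilcoxon_signed_rank(x: List[float], y: List[float]) -> Tuple[float, float, float]:
    """Return (z, two-sided p, r) for paired samples x and y.

    Uses the normal approximation, which is reasonable for moderate n;
    exact tables are preferable for very small samples.
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    diffs = [a - b for a, b in zip(x, y) if a != b]  # zero differences are dropped
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1

    w_plus = sum(rank for rank, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48  # tie-corrected variance
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the standard normal
    r = z / math.sqrt(n)                  # assumed effect-size convention
    return z, p, r
```

With `x` holding each participant's value in one active condition and `y` the same participants' values in X-X, a positive `r` indicates higher values under feedback and a negative `r` lower values.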

For the body motion results we see that none of the differences are significant. However, of potential interest is the fact that a mild negative effect is observed for condition 4 (\(r = -0.29, p = .106\)), where both participants had motion feedback. In addition to this, the only other weak negative effects correspond to the other conditions involving motion feedback. For the conditions involving gaze feedback (1–3) the effect sizes are small (\(<\!0.2\)).

3.3 Discussion

H1 and H2 are supported by our findings, which indicate that when participants were receiving feedback from the LuminUs they glanced towards each other significantly more than when no feedback was provided. The size of this effect was greatest for the condition where both participants had gaze feedback. However, it is possible that the main effects were simply due to the LuminUs serving as a reminder of the other musician’s presence. We intend to carry out further analyses to investigate the nature of these effects in more detail. In particular, we will look at how the specific timings of glances relate to the light output of the LuminUs. This will allow us to see whether musicians responded directly to the feedback, or whether it had a more general influence on their behaviour during the sessions.

Regarding H3, our results were less conclusive, but appeared to indicate that motion feedback led to decreased body motion. We envisaged that providing feedback on the motion of a co-performer would, if anything, encourage participants to move more. This hypothesis was partly influenced by studies on behavioural mimicry, which suggest that the actions or emotions displayed by one person can cause congruent behaviour in another person [18]. The fact that an opposite trend was observed is a potentially interesting finding. Unfortunately there is an absence of existing research on the behavioural effects of providing real-time motion feedback, so at this stage we can only speculate as to any causality. A possible explanation is that the musicians felt self-conscious when they knew their movements were being displayed on the LuminUs. However, if this was the case then we might expect a similar effect for the gaze feedback. Furthermore, none of the musicians indicated feelings of self-consciousness when we informally interviewed them after the experiments.

The results presented in this paper indicate that the LuminUs had an influence upon the objective aspects of the musicians’ behaviours. However, at this stage it is not possible for us to say whether these were detrimental or beneficial to the musical interaction as a whole. Our ongoing analyses will investigate whether the use of the LuminUs influenced the subjective experiences of the musicians; the quality of their coordination; and the musical outcomes of their performances. In order to investigate this we will use the self-report data collected from the questionnaires alongside the audio and video recordings of the performances. An additional consideration is that the short duration of the study may not have provided the musicians with sufficient opportunity to familiarise themselves with the LuminUs and make effective use of its feedback. In future work it would be interesting to investigate how musicians appropriate the LuminUs over a more sustained period of usage.

4 Conclusion

This paper provides a brief introduction to the LuminUs, together with preliminary results from an experiment in which we tested it with trained musicians. Our initial findings suggest that providing real-time co-performer gaze and motion feedback can have a measurable impact on aspects of non-verbal communication and behaviour during collaborative musical performances. Our continued analyses will attempt to ascertain whether or not the LuminUs also influenced the quality of the interactions between musicians and the accompaniments they created. Furthermore, through detailed analysis of our eye-tracking, motion, and video data, we hope to gain a better understanding of how musicians use non-verbal communication during live performances.

References

1. Morgan, E., Gunes, H., Bryan-Kinns, N.: Using affective and behavioural sensors to explore aspects of collaborative music making. Int. J. Hum.-Comput. Stud. 82, 31–47 (2015)

2. Kendon, A.: Some functions of gaze-direction in social interaction. Acta Psychologica 26, 22–63 (1967)

3. Kleinke, C.L.: Gaze and eye contact: a research review. Psychol. Bull. 100(1), 78–100 (1986)

4. Argyle, M., Graham, J.A.: The central Europe experiment: looking at persons and looking at objects. Environ. Psychol. Nonverbal Behav. 1(1), 6–16 (1976)

5. Schutz, A.: Making music together. Collected papers II (1976)

6. Davidson, J.W., Good, J.M.M.: Social and musical co-ordination between members of a string quartet: an exploratory study. Psychol. Music 30(2), 186–201 (2002)

7. Vera, B., Chew, E., Healey, P.: A study of ensemble performance under restricted line of sight. In: Proceedings of the International Conference on Music Information Retrieval, Curitiba, Brazil (2013)

8. Vertegaal, R.: The GAZE groupware system: mediating joint attention in multiparty communication and collaboration. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems - CHI 1999, pp. 294–301. ACM Press, New York (1999)

9. Chanel, G., Bétrancourt, M., Pun, T., Cereghetti, D., Molinari, G.: Assessment of computer-supported collaborative processes using interpersonal physiological and eye-movement coupling. In: Affective Computing and Intelligent Interaction (ACII 2013), Geneva, Switzerland (2013)

10. Hornof, A.J.: The prospects for eye-controlled musical performance. In: International Conference on New Interfaces for Musical Expression (NIME 2014), pp. 461–466 (2014)

11. Goldin-Meadow, S.: Beyond words: the importance of gesture to researchers and learners. Child Dev. 71(1), 231–239 (2000)

12. Walk, R.D., Homan, C.P.: Emotion and dance in dynamic light displays. Bull. Psychon. Soc. 22(5), 437–440 (1984)

13. Clarke, T.J., Bradshaw, M.F., Field, D.T., Hampson, S.E., Rose, D.: The perception of emotion from body movement in point-light displays of interpersonal dialogue. Perception 34(10), 1171–1180 (2005)

14. Castellano, G., Villalba, S.D., Camurri, A.: Recognising human emotions from body movement and gesture dynamics. In: Paiva, A.C.R., Prada, R., Picard, R.W. (eds.) ACII 2007. LNCS, vol. 4738, pp. 71–82. Springer, Heidelberg (2007)

15. Kleinsmith, A., Bianchi-Berthouze, N.: Affective body expression perception and recognition: a survey. IEEE Trans. Affect. Comput. 4(1), 15–33 (2012)

16. Dahl, S., Friberg, A.: Visual perception of expressiveness in musicians’ body movements. Music Percept.: Interdisc. J. 24(5), 433–454 (2007)

17. Kassner, M., Patera, W., Bulling, A.: Pupil: an open source platform for pervasive eye tracking and mobile gaze-based interaction, April 2014. CoRR abs/1405.0006

18. Chartrand, T.L., Lakin, J.L.: The antecedents and consequences of human behavioral mimicry. Annu. Rev. Psychol. September 2012

Acknowledgements

This research is supported by the Media and Arts Technology Programme, an RCUK Doctoral Training Centre in the Digital Economy.

Copyright information

© 2015 IFIP International Federation for Information Processing

About this paper

Cite this paper

Morgan, E., Gunes, H., Bryan-Kinns, N. (2015). The LuminUs: Providing Musicians with Visual Feedback on the Gaze and Body Motion of Their Co-performers. In: Abascal, J., Barbosa, S., Fetter, M., Gross, T., Palanque, P., Winckler, M. (eds) Human-Computer Interaction – INTERACT 2015. INTERACT 2015. Lecture Notes in Computer Science, vol 9297. Springer, Cham. https://doi.org/10.1007/978-3-319-22668-2_4

DOI: https://doi.org/10.1007/978-3-319-22668-2_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-22667-5

Online ISBN: 978-3-319-22668-2

eBook Packages: Computer Science (R0)