Abstract

Face image super resolution, also referred to as face hallucination, is aiming to estimate the high-resolution (HR) face image from its low-resolution (LR) version. In this paper, a novel two-layer face hallucination method is proposed. Different from the previous SR methods, by applying global similarity selecting, the proposed approach can narrow the scope of samples and boost the reconstruction speed. And the local similarity representation step make the method have better ability to suppress noise for applications under severe condition. As a general framework, other useful algorithms can also be incorporated into it conveniently. Experiments on commonly used face database demonstrate our scheme has better performance, especially for noise face image.

This research was supported in part by the National Nature Science Foundation, P. R. China. (No. 61071166, 61172118, 61071091, 61471201), Jiangsu Province Universities Natural Science Research Key Grant Project (No. 13KJA510004), Natural Science Foundation of Jiangsu Province (BK20130867), the Six Kinds Peak Talents Plan Project of Jiangsu Province(2014-DZXX-008), and the “1311” Talent Plan of NUPT.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Rapid development of video and image services call for high resolution face images. But existing condition of image and video equipment often can’t meet the requirement. Therefore, many single image super-resolution researches, targeting on sensitive regions such as vehicle plates and faces, attract much attention. Face hallucination refers to the technique of reconstructing the latent high-resolution (HR) face from a single low-resolution (LR) face. Recent state-of-the-art face hallucination methods are mostly learning-based. These learning-based methods [1] utilize HR and LR dictionary pair to obtain the similar local geometry between HR and LR samples, and achieve satisfactory result under stationary condition.

Freeman et al. [2] firstly proposed a patch-based Markov network to model the local geometry relationship between LR patches and their HR counterparts which is time consuming. Baker and Kanade [4] employed a Bayesian model to estimate the latent high-frequency components. Inspired by locally linear embedding (LLE), Chang et al. [7] obtained high-resolution image through neighbor embedding (NE) method, but the fixed number of neighbors is unstable and difficult to estimate. Considering that face images have stable structure, Ma et al. [8] took it as prior and proposed a position-patch based method, which only use the patches in the same position. But when the number of training faces is much larger than the LR patch’s dimension, unconstrained least square estimation (LSE) [8, 9] will lead to inaccurate solutions. Yang et al. [12] and Jung et al. [13] introduced sparse representation (SR) method and convex optimization to address this problem, respectively. Although these SR based methods have achieved good results, their performance are strictly limited by the noise level and degradation process. Figure 1 shows the framework of these typical position-patch based methods. To better adapt to real situation, constrained SR methods are proposed. By affixing similarity constrained factor to optimization coefficients [15, 16], adding illumination compensation for constrained factor [17], and introducing adaptive ℓ-q norm to sparse representation [18], these constrained SR methods improved the ability to resist noise. But the computational load of the commonly used SLEP toolbox [23] which solves the optimization problem is significant.

In this paper, we prove that the common face database used to face hallucination is over-complete and not all the samples are necessary. We also establish a novel two-layer framework which has a natural parallel structure to infer HR patch. Inspired by the constrained sparse representation methods, we think part of the samples in training set are very different from the input face, which corresponding to the nearly-zero coefficients during optimization. These faces are less important and can even be directly ignored to improve robustness and also reduce computation. We find that reducing the number of trained samples according to their similarities with the input LR face hardly affect the result but save a lot of time, which proves our assumption above. So a sample selection strategy is needed. Considering that the samples in database are all well-aligned faces and almost under the same condition, we intuitively choose eigenface to present the sample reducing operation. Finally, by using global similarity selection via eigenface to speed up the reconstruction process, and using local similarity representation between the same position patches and neighbor patches to synthesize HR patch, our proposed similarity selection and representation (SSR) method get better performance when the input image is corrupted by noise and different blur kernels. As a general framework, the global comparison and local representation algorithm can also be replaced by other useful face recognition and representation methods conveniently. The proposed method has the following features.

-

When the surrounding (neighbor) patches are considered to improve robustness against face position changing, about ten times of calculation will be increased. Our two-layer parallel framework can boost the reconstruction speed.

-

By excluding insignificant or dissimilar samples in the training set before reconstruction, the algorithm is further accelerated with no decrease on the quality.

-

Using constrained exponential distance as coefficients can guarantee good structure similarities between patches, and meanwhile solve the over-fitting problem caused by noise in sparse representation, which make the method very robust to noise and blur kernel changing in real scenarios.

2 Existing Position-Patch Based Approaches

Let \( N \) be the dimension of a patch (usually a patch with size \( \sqrt N \times \sqrt N \)) and \( M \) be the number of samples in the training set. Given a test patch \( {\mathbf{x}}{ \in }R^{N \times 1} \), the patches at the same position in LR training set is represented as \( {\mathbf{Y}}{ \in }R^{N \times M} \), with mth column \( {\mathbf{Y}}^{\text{m}} \) (m = 1,…M) being the patch in sample m. Then the input patch can be represented by the sample patches as:

where \( {\mathbf{w}}{ \in }R^{M \times 1} \) is the coefficients vector with entry \( w_{m} \) and \( {\mathbf{e}} \) is the reconstruction error vector.

Obviously, solving the coefficients \( {\mathbf{w}} \) is the key problem in patch-based methods. In [7–9], reconstruction coefficients are solved by constrained least square estimation (LSE) as

This least square problem’s closed-form solution can be solved with the help of Gram matrix, but it becomes unstable when the number of samples M is much bigger than the patch dimension N. Jung et al. [13] introduce sparse representation into face hallucination and convert (2) into a standard SR problem:

where \( \ell_{0} \) norm counts the non-zero entries number in \( {\mathbf{w}} \) and \( \upvarepsilon \) is error tolerance. Yang et al. [12] and Zhang et al. [14] respectively use squared \( \ell_{2} \) norm \( \left\| {\mathbf{w}} \right\|_{2}^{2} \) and \( \ell_{1} \) norm \( \left\| {\mathbf{w}} \right\|_{1} \) to replace \( \ell_{0} \) norm, which means the statistics of coefficients are constrained by Gaussian and Laplacian distribution.

Similarity constrained SR methods proposed in [15–18] can be formulated as

where \( {\mathbf{D}}{ \in }R^{M \times M} \) is the diagonal matrix which controls the similarity constrains placed on coefficients. The entries \( d_{mm} \) on the main diagonal of \( {\mathbf{D}} \) represent the Euclidean distance with gain factor \( {\text{g}} \). Furthermore, in [15–17], \( q \) is set to 1 and 2 respectively. In [18], an adaptively selected \( \ell_{q} \) norm scheme is introduced to improve its robustness against different conditions.

After the coefficients are obtained, the reconstructed HR test patch \( {\mathbf{x}}_{\text{H}} \) can be represented as

with coefficients being directly mapped to the patches of HR samples \( {\mathbf{Y}}_{\text{H}} \).

3 Proposed Method

The methods introduced in Sect. 2 have shown impressive results for experimental noise free faces. But when the noise level, blur kernel and degradation process change, the performance will drop dramatically. It’s mainly due to the under-sparse nature of noise, and the local geometry between the high dimension and the low dimension manifolds are no longer coherent since the degradation process has changed. To overcome this problem, we propose global similarity selection and local similarity representation to improve its robustness.

3.1 Global Similarity Selection

In SR based methods, a test LR patch is represented by a large number of LR sample patches through coefficients with sparse. Therefore, heavy calculation is cost on the coefficients with sparsity even the corrupted face is not sparse. So we think not all the samples in the over-complete face database are necessary. Some faces in training set are very different from the input face, which corresponding to very tiny weights during optimization. These faces are not worth occupying so much calculation because they have very limited impact on the results.

Therefore, for the noise corrupted faces, we no longer look for the representation with sparsity but use similarity representation to represent LR patch directly. In order to exclude the dissimilar samples which corresponding to the nearly-zero coefficients, a similarity comparison strategy is needed. And we choose global similarity selection scheme, instead of local (patch) similarity comparison before the reconstruction. This is mainly because the face databases we used are all same size, well aligned and under same lighting condition, global comparison can be reliable enough and very fast.

We intuitively apply Turk and Pentlad’s [10] eigenface method, which projects the test face image into the eigenface space, and selects the most similar \( M \) faces according to Euclidean distance. Given the LR face database \( {\mathbf{F}} \), with mth column being sample \( {\mathbf{F}}^{\text{m}} \). After being normalized by subtracting its mean value, the covariance matrix \( {\mathbf{C}} \) can be obtained by (6), where \( {\text{M}} \) is the number of samples in \( {\mathbf{F}} \). Then the eigenface \( {\mathbf{P}} \) is easy to compute by singular value decomposition, and the projected database \( {\mathbf{F^{\prime}}} \) is given in (7). Before reconstruction, the input LR face \( {\mathbf{x}} \) is firstly projected to the eigenface space by (8), and the similar faces can be selected between the samples and \( {\mathbf{x}} \) through Euclidean distance, according to \( {\mathbf{F^{\prime}}}_{m} - {\mathbf{x^{\prime}}}_{2}^{2} \).

Results shown in Fig. 3 in Sect. 4 demonstrate our assumption perfectly. This global similarity selection method have saved about half of the traditional method’s calculation before reconstruction.

3.2 Local Similarity Representation

After picking out the similar faces we need from the entire training set. To make full use of the information in neighbor patches, we establish a framework with parallel two-layer structure which integrates the information of patches surrounding the test LR patch, as shown in Fig. 2. Instead of using all the sample patches at the same position to estimate the test patch (as in Fig. 1), we change the traditional structure into a two layer mode which every sample outputs a middle-layer HR patch before synthesizing the final HR patch.

In Fig. 2, we can notice that the test LR patch’s neighbor patches in training samples are marked with dark lines. Let M be the number of samples we picked out and S (S is 9 in Fig. 2) be the number of neighbor patches we used surrounding the center patch. The test LR patch is still \( {\mathbf{x}}{ \in }R^{N \times 1} \), and all its neighbor patches in sample \( {\text{m}} \) (m = 1,…M) is represented as \( {\mathbf{Y}}_{\text{m}}^{S} { \in }R^{N \times S} \) with sth column being \( {\mathbf{Y}}_{\text{m}}^{\text{s}} \) (s = 1,…S).

For every sample in the LR training set, a weight vector \( {\mathbf{w}}_{\text{m}} { \in }R^{S \times 1} \) which represents the similarity between the test patch and its neighbor patches is obtained, the entries \( {\text{w}}_{\text{m}}^{\text{s}} \) are computed as

The function \( {\text{D}}\left( \cdot \right) \) calculates the patch distance from the current patch to the center patch. Then the middle-layer HR patch of sample m can be represented as

After every sample output its corresponding middle-layer HR patch, the final HR patch can be synthesized simply by

Finally, by assembling all reconstructed HR patches to the corresponding position and averaging the pixels in overlapped regions. The estimated HR face is obtained. The entire similarity selection and representation face hallucination method is summarized as following steps.

4 Experiments and Results

In this section, we conduct face hallucination experiments on the proposed SSR method to testify its performance under inconsistent degradation conditions between the test face image and training face images. The face database we apply is FEI face database [24], which contains 400 faces from 200 adults (100 men and 100 women). Among them, we randomly select 380 faces for training and the rest 20 faces for testing. All the samples are well-aligned and in the same size \( 360 \times 260 \). The LR samples are smoothed and down-sampled with factor of 4. The LR patch size is set to \( 3\times 3 \) pixels with overlap of 1 pixel and the HR patch size is \( 12 \times 12 \) pixels with 4 overlapped pixels. The smooth kernel we use in the training phase is fixed by \( 20 \times 20 \) Gaussian lowpass filter with standard deviation of 4.

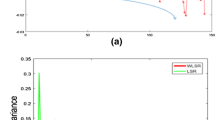

In Fig. 3, we show how the number of similar faces M in the global similarity selection stage affect the quality of reconstruction. We can see that the second half of two curves are almost flat, which means the reconstruction quality is basically unchanged even the number of faces we use to reconstruct is reduced to half of the entire set. Therefore, we set M to 190 (half of the entire set) without affecting the quality in the following tests, while other methods still use the entire set with 380 training faces.

We conduct the following experiments under two unconformity degradation conditions: noise corrupted and smoothed by different kernels. NE [7], LSR [8] and LcR [15] methods are tested for better comparison.

4.1 Robustness Against Noise

We add a zero-mean Gaussian noise (\( \upsigma = 1,2 \ldots 15 \)) to test face to get the simulated noisy face. The smooth kernel is the same with the one in the training phase. Some randomly selected objects’ results are shown in Figs. 4 and 5 when \( \upsigma \) is set to 5 and 10. The corresponding average PSNR and SSIM values are listed in Table 1. We can see that with the increase of noise level, the input face is no longer sparse. So the traditional SR methods can’t distinguish the noise component from original images, the performance drop dramatically, and there are many artificial texture due to gaussian noise. But our SSR method is much less affected by noise and is able to restore a much clearer face. Relative to the second best LcR, PSNR gains of SSR approach 0.23 dB and 1.83 dB respectively for noise level 5 and 10. More results under different noise levels are shown in Fig. 6. As we can see, with the noise level continues to grow, SSR method will continue to widen the gap with the traditional methods.

4.2 Robustness Against Kernel Changes

We find the performance of SR methods decrease significantly even the kernel in reconstruction phase is slightly changed, compared to the one in training phase. However, this situation is the most common one in practical applications. Therefore, we decide to test different methods under the condition of inconformity kernels in training and reconstruction phase. As mentioned above, a fixed \( 20 \times 20 \) gaussian lowpass filter with standard deviation of 4 is used in training phase. We change the standard deviation to 8 from 4 to make the input face more blurred than the faces in LR set.

According to Table 1, despite SSR method can’t beat LSR and LcR in PSNR and SSIM values, results in Fig. 7 intuitively demonstrate that SSR method can create more details and sharper edges than others, which is more valuable in practical use. Performances of the first three methods are influenced a lot due to the different kernel, only the eye’s region is artificially enhanced while other region is basically the same blurred as input face. But SSR can generate much clear facial edges like eyes, nose and facial contours, which proves its superiority in subjective visual quality.

5 Conclusion

In this paper, we have proposed a general parallel two-layer face hallucination framework to boost reconstruction speed and improve robustness against noise. Our method can exclude the unnecessary samples from over-complete training set using global similarity selection without quality loss. Then the local similarity representation stage can make the method output satisfactory result under severe conditions. Experiments on FEI face database demonstrate this method can achieve better results under heavy noise conditions and gain good visual quality when the degradation process is changed. As a general framework, many other existing face hallucination schemes can also be incorporated into SSR method conveniently.

References

Wang, N., Tao, D., Gao, X., Li, X., Li, J.: A comprehensive survey to face hallucination. Int. J. Comput. Vis. 106, 9–30 (2014)

Freeman, W., Pasztor, E., Carmichael, O.: Learning low-level vision. Int. J. Computer. Vis. 40(1), 25–47 (2000)

William, T.F., Thouis, R.J., Egon, C.P.: Example-based super-resolution. IEEE Comput. Graph. Appl. 22(2), 56–65 (2002)

Baker, S., Kanade, T.: Limits on super-resolution and how to break them. IEEE Trans. Pattern Anal. Mach. Intell. 24(9), 1167–1183 (2002)

Dalley, G., Freeman, B., Marks, J.: Single-frame text super-resolution: a bayesian approach. In: Proceedings of IEEE Conference on Image Processing, vol. 5, pp. 3295–3298 (2004)

Yang, C., Liu, S., Yang, M.: Structured face hallucination. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 1099–1106 (2013)

Chang, H., Yeung, D., Xiong, Y.: Super-resolution through neighbor embedding. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 275–282 (2004)

Ma, X., Zhang, J., Qi, C.: Position-based face hallucination method. In: Proceedings of IEEE Conference on Multimedia and Expo, pp. 290–293 (2009)

Ma, X., Zhang, J., Qi, C.: Hallucinating face by position-patch. Pattern Recogn. 43(6), 3178–3194 (2010)

Matthew, A.T., Alex P.P.: Face recognition using eigenfaces. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 586–591 (1991)

Wright, J., Yang, A.Y., Ganesh, A., Shankar Sastry, S., Ma, Y.: Robust face recognition via sparse representation. IEEE Trans. PAMI. 31(2), 210–227 (2009)

Yang, J., Tang, H., Ma, Y., Huang, T.: Image super-resolution via sparse representation. IEEE Trans. Image Process. 19(11), 2861–2873 (2010)

Jung, C., Jiao, L., Liu, B., Gong, M.: Position-patch based face hallucination using convex optimization. IEEE Signal Process. Lett. 18(6), 367–370 (2011)

Zhang, J., Zhao, C., Xiong, R., Ma, S., Zhao, D.: Image super-resolution via dual-dictionary learning and sparse representation. In: Proceedings of IEEE International Symposium on Circuits Systems, pp. 1688–1691 (2012)

Jiang, J., Hu, R., Han, Z., Lu, T., Huang, K.: Position-patch based face hallucination via locality-constrained representation. In: Proceedings of IEEE International Conference on Multimedia and Expo (ICME), pp. 212–217 (2012)

Jiang, J., Hu, R., Wang, Z., Han, Z.: Noise robust face hallucination via locality-constrained representation. IEEE Trans. Multimedia 16, 1268–1281 (2014)

Wang, Z., Jiang, J., Xiong, Z., Hu, R., Shao, Z.: Face hallucination via weighted sparse representation. In: Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 2198–2201 (2013)

Wang, Z., Hu, R., Wang, S., Jiang, J.: Face hallucination via weighted adaptive sparse regularization. IEEE Trans. Circ. Syst. Video Technol. 24, 802–813 (2013)

Weiss, Y., Freeman, W.T.: What makes a good model of natural images? In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–8 (2007)

Roth, S., Black, M.J.: Fields of experts. Int. J. Comput. Vis. 82(2), 205–229 (2009)

Schmidt, U., Gao, Q., Roth, S.: A generative perspective on MRFs in low-level vision. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 1751–1758 (2010)

Zhang, H., Zhang, Y., Li, H., Huang, T.S.: Generative bayesian image super resolution with natural image prior. IEEE Trans. Image Process. 21(9), 4054–4067 (2012)

Liu, J., Ji, S., Ye, J.: SLEP: sparse learning with efficient projections (2010). http://www.public.asu.edu/jye02/Software/SLEP

FEI face database. http://fei.edu.br/cet/facedatabase.html

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Liu, F., Yin, R., Gan, Z., Chen, C., Tang, G. (2015). Robust Face Hallucination via Similarity Selection and Representation. In: Zhang, YJ. (eds) Image and Graphics. Lecture Notes in Computer Science(), vol 9219. Springer, Cham. https://doi.org/10.1007/978-3-319-21969-1_22

Download citation

DOI: https://doi.org/10.1007/978-3-319-21969-1_22

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-21968-4

Online ISBN: 978-3-319-21969-1

eBook Packages: Computer ScienceComputer Science (R0)