Abstract

Nowadays, touchscreen smartphones are the most common kind of mobile device. However, gesture-based interaction is difficult for most visually impaired people, and even more so for blind people. This difficulty is compounded by the lack of standard gestures and by the differences between the main screen reader platforms available on the market. Therefore, our goal is to investigate the differences and preferences in touch gesture performance on smartphones among visually impaired people. During our study, we implemented a web-based wireless system to facilitate the capture of participants’ gestures. In this paper we present an overview of both the study and the system used.

1 Introduction

Smartphones are the most common kind of mobile device; in the third quarter of 2014 they accounted for 66% of the global mobile market [1]. Most smartphones use touchscreen technology, so touch-based user interfaces have become the main mobile interaction paradigm. However, touch-based interfaces are a challenge for most visually impaired people, particularly for blind users [2]. The accessibility features built into smartphones to overcome the issues of visually based touchscreen interaction rely mainly on voice interaction, as in Apple’s VoiceOver or Android’s TalkBack. Voice interaction, however, is not always accurate, is difficult to use in noisy environments [3], and can be undesirable due to privacy or etiquette concerns [4]. We therefore believe that usable touch gestures can significantly enrich the mobile user experience of visually impaired people, despite the inherent difficulties of touch-based interaction.

In a previous study on touch-based interaction with smartphones, we noted that people with different types of visual impairment performed some gestures with more or less difficulty [5]. This motivated us to study further how visually impaired people perform touch gestures. Touch gestures are characterized by a set of attributes called descriptors, which can be geometric or kinematic (e.g., number of fingers, path length, velocity) and are used by gesture recognition systems [6]. Differences in these descriptors influence qualitative aspects of the gestures, such as discoverability, ease of use, performance, memorability, and reliability [7]. For instance, finger count, stroke count, and synchronicity have an important effect on perceived difficulty [8].

However, creating accessible computer-based interfaces across the myriad of available platforms is a complex challenge [9], particularly because of the difficulty of recruiting users with disabilities for research studies [10]. For this reason, studies sometimes use sighted participants who are blindfolded or otherwise prevented from seeing the screen [11, 12]. Furthermore, blind people may have difficulty learning touch gestures [13]. Accordingly, a study with blind people suggests guidelines to apply when performing user tests with them: avoiding print symbols, reducing the demand for location accuracy, using familiar layouts, favoring screen landmarks, and limiting time-based gestures [14].
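To make the notion of geometric and kinematic descriptors mentioned in this section more concrete, the following is a minimal Python sketch of how a few of them could be computed from sampled touch points. The `TouchPoint` structure, its field names, and the units are assumptions made for illustration; they are not the data format used in the study.

```python
import math
from dataclasses import dataclass


@dataclass
class TouchPoint:
    """One sampled touch position (hypothetical format, not the study's schema)."""
    x: float      # horizontal position in pixels
    y: float      # vertical position in pixels
    t: float      # timestamp in seconds
    pointer: int  # id of the finger that produced the sample


def path_length(points):
    """Geometric descriptor: summed distance between consecutive samples.

    Expects the time-ordered samples of a single pointer.
    """
    return sum(
        math.hypot(b.x - a.x, b.y - a.y)
        for a, b in zip(points, points[1:])
    )


def mean_velocity(points):
    """Kinematic descriptor: path length divided by elapsed time."""
    if len(points) < 2:
        return 0.0
    duration = points[-1].t - points[0].t
    return path_length(points) / duration if duration > 0 else 0.0


def finger_count(points):
    """Number of distinct pointers that contributed samples."""
    return len({p.pointer for p in points})


# A short one-finger stroke made of two 50-pixel segments.
stroke = [
    TouchPoint(0.0, 0.0, 0.00, 0),
    TouchPoint(30.0, 40.0, 0.10, 0),
    TouchPoint(60.0, 80.0, 0.20, 0),
]
print(path_length(stroke), mean_velocity(stroke), finger_count(stroke))
```

Descriptors of this kind are cheap to compute per capture, which is one reason they are convenient inputs for gesture recognizers and for comparing gestures across participant groups.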

2 Methodology

For our study, we recruited 36 participants (14 female, 22 male) from four different local centers for blind and low-vision people in Tuscany. The mean age of the participants was 45 years for females (SD = 14.3) and 50 years for males (SD = 16.8). We classified the 36 participants into four categories: severe low vision (11), blind since birth (7), blind since adolescence (6), and blind since adulthood (12). Twenty-six of the participants had previously used some kind of mobile touchscreen device, such as a smartphone, tablet, or music player. In addition, more than half of the participants reported having used iOS, with the rest using mostly Android.

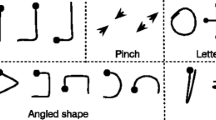

We selected 25 gesture types, mostly from those used with Android’s screen reader, TalkBack, and the iOS screen reader, VoiceOver. The authors proposed the remaining gestures in the set. Gesture selection was based on three main characteristics: pointer count (or finger count), stroke count, and direction. Based on the same characteristics, we classified the gestures into seven groups: swipes, letterlike shapes, taps, rotors, angled shapes, and to and fro swipes (Fig. 1). When we described a gesture to the participants, we described it by its shape or by how it is performed; we did not assign any semantic meaning to the gestures.

We experienced difficulties capturing the participants’ gestures with touchscreen smartphones. We needed to capture a set of gestures performed by visually impaired participants who were on tight schedules and were spread across geographically distributed local centers. Given the project’s time and budget constraints as well, we needed to optimize the capture sessions. Therefore, we decided to work with multiple participants at the same time, using identical Android smartphones. In addition, to facilitate data collection we developed a web-based system for capturing the users’ gestures (Fig. 2).

3 The Gesture Capture System

To capture the participants’ gestures, we used three identical Nexus 5 smartphones with a 4.9-inch display, all running Android v4.4. We developed a web-based client-server architecture in which up to three clients (smartphones) can connect simultaneously to a web server (a laptop) through a local Wi-Fi network. The connection used WebSockets, which allowed interactive sessions between the server and the smartphones. Gesture data and capture parameters were transmitted as JSON and stored in an SQLite database.
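As an illustration of the data path described above (JSON messages persisted in SQLite), here is a minimal Python sketch. The message fields, the `captures` table, and the function names `open_store` and `store_capture` are hypothetical; the paper does not specify the actual schema or server implementation, and the WebSocket transport is omitted.

```python
import json
import sqlite3

# Hypothetical capture message, as a smartphone client might send it.
message = json.dumps({
    "participant": "P01",
    "gesture_type": "swipe_right",
    "attempt": 1,
    "points": [
        {"x": 10, "y": 200, "t": 0, "pointer": 0},
        {"x": 180, "y": 205, "t": 120, "pointer": 0},
    ],
})


def open_store(path=":memory:"):
    """Open the database and create the (assumed) captures table if needed."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS captures (
               id INTEGER PRIMARY KEY,
               participant TEXT NOT NULL,
               gesture_type TEXT NOT NULL,
               attempt INTEGER NOT NULL,
               points_json TEXT NOT NULL
           )"""
    )
    return conn


def store_capture(conn, raw_message):
    """Parse one JSON message and insert it as a row; return the new row id."""
    data = json.loads(raw_message)
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(
            "INSERT INTO captures (participant, gesture_type, attempt, points_json) "
            "VALUES (?, ?, ?, ?)",
            (
                data["participant"],
                data["gesture_type"],
                data["attempt"],
                json.dumps(data["points"]),  # keep the raw samples as JSON text
            ),
        )
    return cur.lastrowid


conn = open_store()
row_id = store_capture(conn, message)
row = conn.execute(
    "SELECT gesture_type, points_json FROM captures WHERE id = ?", (row_id,)
).fetchone()
print(row[0], len(json.loads(row[1])))
```

Keeping the sampled points as a JSON text column is one simple design choice; a normalized table with one row per sample would be an equally plausible alternative.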

From the server dashboard, we could select the gesture type and start or cancel a given capture session. These commands would be sent to all the connected smartphones, so the participants could perform their gestures with minimal interruptions. We could also visualize each gesture as it was performed, using the canvas API. In addition, we integrated automated and manual mechanisms to mark a participant’s gesture in case their gesture did not match the gesture type characteristics (e.g., number of fingers, strokes, direction, etc.).
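The command flow from the dashboard to the connected smartphones can be sketched as follows. This is an in-memory stand-in written in Python, not the authors' implementation: the class `CaptureDashboard`, the `action` and `gesture_type` message fields, and the use of plain callables in place of WebSocket connections are all assumptions made for illustration.

```python
import json


class CaptureDashboard:
    """In-memory stand-in for the server dashboard (hypothetical design)."""

    MAX_CLIENTS = 3  # the paper reports up to three simultaneous smartphones

    def __init__(self):
        # client id -> callable that delivers one text message to that client
        self._clients = {}

    def connect(self, client_id, send):
        """Register a client, refusing new ones once all slots are taken."""
        is_new = client_id not in self._clients
        if is_new and len(self._clients) >= self.MAX_CLIENTS:
            raise RuntimeError("all capture slots are in use")
        self._clients[client_id] = send

    def broadcast(self, action, gesture_type=None):
        """Send the same start/cancel command to every connected client."""
        if action not in ("start", "cancel"):
            raise ValueError(f"unknown action: {action}")
        payload = json.dumps({"action": action, "gesture_type": gesture_type})
        for send in self._clients.values():
            send(payload)
        return len(self._clients)


# Each "phone" is modeled as a list that collects the messages it receives.
inboxes = {name: [] for name in ("phone-1", "phone-2", "phone-3")}
dashboard = CaptureDashboard()
for name, inbox in inboxes.items():
    dashboard.connect(name, inbox.append)

delivered = dashboard.broadcast("start", "swipe_right")
print(delivered, inboxes["phone-1"][0])
```

In a real deployment, each `send` callable would wrap a WebSocket connection, so that one click on the dashboard reaches every participant's device at once.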

4 Procedure

We arranged four different sets of capture sessions, one for each local association for blind people. In each location, we had one, two, or three participants per session, according to their availability. Additionally, we sorted the set of gesture types by increasing difficulty, according to our own perception, to avoid frustrating the participants. Each session lasted approximately 75 min and consisted of two parts. The first part consisted of capturing the gestures themselves, and the second part consisted of a questionnaire in which we collected data about the profile, mobile device use, and gesture preferences of the participants.

The 36 participants were asked to perform each of the 25 gestures six times, with the goal of obtaining 5400 gesture samples (36 × 25 × 6). For every gesture type, we first told the participants the name of the gesture and how to perform it, and then recorded whether they already knew or had previously performed the gesture. We also asked them to rate its difficulty on a five-point scale, from 1 (very easy) to 5 (very difficult). We had cardboard cutouts of most of the gestures in case any of the participants needed a tactile representation. To manage the session, a researcher used the web-based server to visualize each participant’s capture and record the data for each gesture. We also implemented an automated mechanism to mark captures with an incorrect number of simultaneous pointers or consecutive strokes, according to the reference gesture.
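The automated marking described above can be sketched as a comparison between a capture and its reference gesture. The following Python example is an illustrative assumption: the `ReferenceGesture` structure, the representation of a capture as a list of strokes (each a list of pointer ids), and the function `check_capture` are hypothetical and not taken from the study's system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReferenceGesture:
    """Expected characteristics of a gesture type (hypothetical structure)."""
    name: str
    pointers: int  # expected number of simultaneous fingers
    strokes: int   # expected number of consecutive strokes


def check_capture(reference, strokes):
    """Return a list of problems; an empty list means the capture matches.

    `strokes` is a list of strokes, each given as the list of pointer ids
    observed during that stroke.
    """
    problems = []
    if len(strokes) != reference.strokes:
        problems.append(
            f"expected {reference.strokes} stroke(s), got {len(strokes)}"
        )
    for index, pointer_ids in enumerate(strokes, start=1):
        count = len(set(pointer_ids))
        if count != reference.pointers:
            problems.append(
                f"stroke {index}: expected {reference.pointers} pointer(s), got {count}"
            )
    return problems


two_finger_swipe = ReferenceGesture("two_finger_swipe", pointers=2, strokes=1)

print(check_capture(two_finger_swipe, [[0, 1]]))          # matching capture
print(check_capture(two_finger_swipe, [[0]]))             # one finger missing
print(check_capture(two_finger_swipe, [[0, 1], [0, 1]]))  # one stroke too many
```

A capture with a non-empty problem list would be marked for review rather than silently discarded, leaving the final decision to the researcher managing the session.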

5 Results and Discussion

In general, participants perceived a low level of difficulty with most of the gesture types (mean = 1.49, median = 1). The most difficult gestures, as perceived by the participants, were to and fro swipes, and rotor, while the easiest gestures were simple swipes with one finger, one stroke, and one direction. However, we noted a slight increase in perceived difficulty in longer gestures with similar descriptors. Concerning gesture shape preference, half of the participants preferred rounded gestures, six steep-angled gestures, two right-angled gestures, and ten reported having no preference. In addition, the majority of the participants also preferred gestures with one finger (22 participants), and one stroke (19 participants). Regarding differences among the visually impaired groups, we found noteworthy variations, and in one gesture, the vertical chevron, the difference in sharpness was significant.

We would like to note that, despite the issues solved by our solution, we encountered other issues in capturing the gestures. For instance, the average age of our participants, 48 years (SD = 15.8), means that younger visually impaired people are underrepresented. In addition, participants would sometimes perform a gesture outside the boundaries of the screen due to the lack of tactile edges. At other times, participants’ fingernails would make contact with the display and the gesture was not recorded as intended, especially for women with long fingernails. In the first location, we faced an issue inherent to the wireless capture system: multipath propagation [15]. This kind of interference occurred when the signals sent by the mobile devices to the web server, and vice versa, arrived by two or more paths or canceled each other. The construction materials used in the room where we performed the sessions caused this interference. Therefore, we relocated the equipment and participants, and in subsequent locations we ran a preliminary signal strength test. Despite these issues, our capture solution ultimately allowed us to make effective use of our limited time with the participants.

6 Conclusions

More research is needed on accessible mobile touch-based interaction for visually impaired people, especially for those who are blind. In this paper we presented an overview of a study on gesture preferences and differences among visually impaired people. We also showed how wireless and web-based technologies can solve some of the problems that might arise during such studies. Given that we mainly used web technologies, similar research tools could be implemented across different mobile platforms with relative ease, compared to native solutions [16].

References

1. Rivera, J., van der Meulen, R.: Gartner Says Sales of Smartphones Grew 20 Percent in Third Quarter of 2014. Gartner, Egham, UK (2014)

2. Kane, S.K., Bigham, J.P., Wobbrock, J.O.: Slide rule: making mobile touch screens accessible to blind people using multi-touch interaction techniques. In: Proceedings of the 10th International ACM SIGACCESS Conference on Computers and Accessibility, pp. 73–80. ACM (2008)

3. Leporini, B., Buzzi, M.C., Buzzi, M.: Interacting with mobile devices via VoiceOver: usability and accessibility issues. In: Proceedings of 24th Australian Computer-Human Interaction Conference, pp. 339–348 (2012)

4. Kane, S.K., Jayant, C., Wobbrock, J.O., Ladner, R.E.: Freedom to roam: a study of mobile device adoption and accessibility for people with visual and motor disabilities. In: Proceedings of 11th International ACM SIGACCESS (2009)

5. Buzzi, M.C., et al.: Designing a text entry multimodal keypad for blind users of touchscreen mobile phones. In: Proceedings of the 16th International ACM SIGACCESS Conference on Computers and Accessibility, pp. 131–136. ACM, New York (2014)

6. Rubine, D.: Specifying gestures by example. In: Proceedings of the 18th Annual Conference on Computer Graphics and Interactive Techniques, pp. 329–337. ACM (1991)

7. Ruiz, J., Li, Y., Lank, E.: User-defined motion gestures for mobile interaction. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM, Vancouver (2011)

8. Rekik, Y., Vatavu, R.-D., Grisoni, L.: Understanding users’ perceived difficulty of multi-touch gesture articulation. In: Proceedings of the 16th International Conference on Multimodal Interaction. ACM (2014)

9. Cerf, V.G.: Why is accessibility so hard? Commun. ACM 55(11), 7 (2012)

10. Sears, A., Hanson, V.: Representing users in accessibility research. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 2235–2238. ACM, Vancouver (2011)

11. Oh, U., Kane, S.K., Findlater, L.: Follow that sound: using sonification and corrective verbal feedback to teach touchscreen gestures. In: 15th International ACM SIGACCESS, pp. 13:1–13:8. ACM, New York (2013)

12. Sandnes, F., et al.: Making touch-based kiosks accessible to blind users through simple gestures. Univ. Access Inf. Soc. 11(4), 421–431 (2012)

13. Schmidt, M., Weber, G.: Multitouch haptic interaction. In: Stephanidis, C. (ed.) UAHCI 2009, Part II. LNCS, vol. 5615, pp. 574–582. Springer, Heidelberg (2009)

14. Kane, S.K., Wobbrock, J.O., Ladner, R.E.: Usable gestures for blind people: understanding preference and performance. In: SIGCHI Conference, pp. 413–422 (2011)

15. Bose, A., Foh, C.H.: A practical path loss model for indoor WiFi positioning enhancement. In: 6th International Conference on Information, Communications and Signal Processing, 2007. IEEE (2007)

16. Charland, A., Leroux, B.: Mobile application development: web vs. native. Commun. ACM 54(5), 49–53 (2011)

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Buzzi, M.C., Buzzi, M., Leporini, B., Trujillo, A. (2015). Design of Web-Based Tools to Study Blind People’s Touch-Based Interaction with Smartphones. In: Stephanidis, C. (eds) HCI International 2015 - Posters’ Extended Abstracts. HCI 2015. Communications in Computer and Information Science, vol 528. Springer, Cham. https://doi.org/10.1007/978-3-319-21380-4_2

DOI: https://doi.org/10.1007/978-3-319-21380-4_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-21379-8

Online ISBN: 978-3-319-21380-4

eBook Packages: Computer Science (R0)