Abstract

To reduce prostate cancer disparities among African American men, the American Cancer Society recommends that men make an informed decision with their healthcare provider about whether prostate cancer screening is right for them. Technology can facilitate the informed decision-making process by teaching men about prostate cancer and providing activities that build their self-efficacy. However, such tools may be most effective when they are developed using a set of validated design principles, such as the Usability Engineering Lifecycle, in conjunction with a community-based participatory research (CBPR) process. CBPR can be especially useful when designing tools for minority communities such as African American men, who have the highest prostate cancer incidence and mortality. This paper describes the author's process for using CBPR principles to develop a prostate cancer education program for African American men and discusses the value of applying these principles within an existing usability framework.

1 Background

With the pronounced burden of prostate cancer (PrCA) among men of all races and the disparity in incidence and mortality between African American (AA) and European American (EA) men [1, 2], there is a critical need to develop technological interventions that can assist men with informed decision making [3]. In 2015, an estimated 220,800 men were expected to be diagnosed with PrCA and 27,540 to die from the disease [2]. Moreover, PrCA incidence is 60% higher in AA men, and AA men are two and a half times more likely to die from PrCA [2]. The CDC describes informed decision making as occurring when an individual understands the disease; is familiar with the risks, benefits, and uncertainties of a screening or treatment; actively participates in the decision-making process at the level he or she desires; and either makes a decision at the time of service or defers the decision to a later date [4]. The American Cancer Society recommends informed decision making as a way to reduce the PrCA mortality rate because findings on the efficacy of prostate-specific antigen (PSA) screening, a blood test used to detect PrCA, remain unclear [3, 5, 6]. The two most recent large randomized trials of PrCA screening, the European Randomized Study of Screening for Prostate Cancer and the Prostate, Lung, Colorectal, and Ovarian (PLCO) Cancer Screening Trial (which included few AA men), concluded that the PSA test was either not effective at reducing PrCA mortality or led to over-detection of PrCA [5, 6]. Over-detection is a serious concern because it can lead to treatment of indolent forms of PrCA, and in some cases this treatment causes lifelong side effects such as incontinence and/or impotence [7].

In addition to possessing a thorough knowledge of PrCA and its screenings and treatments, an individual must also believe that he has the capacity to engage in the informed decision-making process with a doctor or other healthcare provider (i.e., self-efficacy) [8, 9]. Multiple studies have demonstrated that computer-based education programs can help prepare men for the informed decision-making process [10–12], but most of these and other studies on technology design do not report involving the target population in the intervention or technology design process. Applying community-based participatory research (CBPR) principles, which are used primarily in public health, to systems design can potentially enhance the impact of interventions by identifying the user's specific needs and any foreseeable barriers to implementation [13–15]. This paper uses Nielsen's Usability Engineering Lifecycle [16] as a framework for discussing the design of a computer-based PrCA education program, but focuses on how CBPR principles can enhance this framework. CBPR strategies are a promising way to address cancer disparities because they leverage community involvement in each phase of the research process, from conceptualization to intervention, to help researchers make optimal decisions [17–19]. Developing authentic partnerships with the target audience and stakeholders increases the cultural and contextual relevance of interventions [18, 20]. This, in turn, increases the likelihood that an intervention will improve knowledge and preventive behavior, resulting in better health outcomes [18, 21, 22].

1.1 Community-Based Participatory Research Principles

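There are eight CBPR principles [14, 15, 23–25]. These principles, articulated by Israel et al. (1998) [14], state that CBPR:

1. Recognizes the community as a unit of identity.
2. Builds on strengths and resources within the community.
3. Facilitates collaborative partnerships in all phases of the research.
4. Integrates knowledge and action for the mutual benefit of all partners.
5. Promotes a co-learning and empowering process that attends to social inequalities.
6. Involves a cyclical and iterative process.
7. Addresses health from both positive and ecological perspectives.
8. Disseminates findings and knowledge gained to all partners.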

CBPR approaches have emerged as a critical strategy for engaging stakeholders and identifying culturally and geographically appropriate methods to overcome health and cancer disparities [17, 18, 26]. Operationalizing the CBPR principles was key to the success of our PrCA education program design. We operationalized all of the principles except principle 7, and principle 3 was only partially operationalized. This success stemmed from multiple interactive and iterative forums in which AA men in the targeted community actively collaborated with researchers to develop a resource for enhancing their ability to make informed decisions about PrCA screening.

1.2 Usability Engineering Lifecycle

The Usability Engineering Lifecycle (UEL) is an approach to systems design that emphasizes nine core principles that, when followed chronologically, can lead to an interface with maximum usability [16]. Nielsen defines usability in terms of learnability, efficiency of use once the system has been learned, the ability of casual users to return to the system without having to relearn it, frequency of errors, and subjective user satisfaction [16]. The UEL (see Fig. 1) has been applied to projects such as designing systems that allow gesture-controlled interaction with virtual 3D content [27]. Some of the UEL's principles are similar in nature to CBPR principles (e.g., iterative design), but they do not emphasize community involvement throughout the entire design process. When the CBPR principles above are employed within the UEL, the combined approach may lead to a better interface for community-specific digital interventions such as a computer-based PrCA education program. The discussion below uses the UEL design principles as a chronological framework while highlighting how CBPR principles can be employed within a UEL-guided intervention development process.

2 Design Process

2.1 Know the User

Within the UEL, Nielsen suggests that developers study the users to assess their individual characteristics (e.g., age) and the environment in which the product will be used [16]. He also describes a competitive analysis, in which existing products similar to a planned product are empirically tested among members of the target population [16]. In CBPR it is customary, following an in-depth literature review of a problem, to recognize the target community for an intervention as a unit of identity (principle 1). A community's identity extends beyond the demographics suggested by Nielsen and can be characterized by norms, values, customs, language, sexual orientation, and so on [14]. For example, our literature review on PrCA revealed that South Carolina has one of the highest PrCA mortality disparities between AA and EA men in the country [28]. In addition, the American Cancer Society recommends that men in high-risk groups begin making an informed decision about PrCA screening at age 45 (age 40 and older for AA men with a family history) [3]. Therefore, a developer seeking to create an ideal computer-based education program for preparing AA men to make an informed PrCA screening decision must identify a defined community or subset of AA men who can help determine what the system should include and the environment in which it should be housed. In a CBPR process, the researcher investigates the community's cultural practices, shared needs, and self-constructed and social representations of identity. Becoming familiar with the community's identity, which can be separated into multiple social and geographic subgroups, can contribute to a system design that is customized to the needs of the target community. In addition, the formative nature of a CBPR approach gives the community more involvement in and control over product development.
Beyond race, the most prominent community of identity for the men in our study was the faith community (i.e., churches). We focused on churches because AAs' spiritual needs, along with other sociocultural and psychological needs, can influence their participation and trust in health research [29, 30]. Churches in AA communities have also been influential partners with universities in offering health-related programming [31–34], including PrCA prevention [35–37].

Recruitment for Study Participation. AAs are significantly less likely than other racial groups to participate in health-related research [38], which can pose a problem for developers relying solely on UEL processes, since those processes depend on studying representative users. There are multiple barriers to AA participation, including mistrust and time constraints [38, 39]. Our recruitment was guided by Vesey's framework for the recruitment and retention of minority groups, which involves a series of strategies such as leveraging community partnerships to assist researchers throughout planning and implementation (Vesey, 2002). These strategies are congruent with CBPR principles, particularly principle 3, which involves facilitating collaborative partnerships in all phases of the research. The specific strategies from Vesey's framework used in this study were (1) conceptualizing, planning, and developing the recruitment plan and promotional materials in collaboration with community partners (i.e., church leaders), (2) recruiting the study sample with partners and stakeholders, and (3) reporting findings to the community at various stages of the research process. Furthermore, knowing someone who has established relationships in the community of interest, and allowing some flexibility in your recruitment and research implementation plan, can be critical to reaching a recruitment goal.

Knowing Someone Who Knows Someone. Reaching out to a colleague or an existing community partner can be an effective way to recruit in minority communities. For example, churches connected to your academic colleagues are more likely to be open to working with researchers than a church with no history of partnering with university researchers. During recruitment for our study to develop a computer-based PrCA education program, three academic colleagues provided the research team with the names of churches with which they had relationships. These churches not only helped recruit their members for our study (in conjunction with the research team) but also scheduled dates and times (e.g., after their midweek Bible study) when focus groups could be conducted. Recruitment lasted two months and yielded 39 of the 40 men targeted for the study. Almost all of these men were recruited through word of mouth within churches, many of which had been recommended by colleagues.

Other Important Things to Know When Approaching Communities of Identity With Your Research. When approaching communities of identity (particularly churches) to gain support for your research, keep two points in mind. (1) The timeliness and relevance of the research or system are not always consistent with the community's priorities. For example, our research team approached one church that questioned the impact of the proposed PrCA education program and elected not to participate in our study. In addition, some communities are already aware of a specific problem such as PrCA informed decision making and are capable of providing their members with solutions (e.g., health education or decision support). Such communities may underestimate how your research could enhance their current goals. In this scenario, you must decide whether to explain how your research could further enhance their existing efforts or simply contact another community. (2) Be flexible and prepared to work around the community's schedule (e.g., you may be invited to conduct your research before or at the beginning of an event and asked to shorten your intended implementation time).

2.2 Competitive Analysis

Competitive analysis is not a key component of CBPR, but it is necessary for determining whether existing products may already be appropriate for your users. In our study, competing products were analyzed (as suggested by Nielsen) through an Internet search and a literature review of computer-based PrCA education programs, but most available products either had not been empirically tested among AA men or could not be customized based on our formative research findings. These formative findings revealed the specific PrCA information that AA men in the study population needed to make an informed decision about PrCA screening, as well as the essential functions and aesthetics of an ideal computer-based PrCA program. Therefore, the research team decided to develop an original PrCA intervention.

2.3 Setting Usability Goals, Participatory/Coordinated Design

Nielsen recommends setting usability goals based on five constructs: learnability, efficiency of use once the system has been learned, the ability of casual users to return to the system without having to relearn it, frequency of errors, and subjective user satisfaction. He also explains that the priority of each usability goal, and any additional important system attributes, will depend on the targeted user. He then recommends a participatory design process in which users provide input on a specific prototype, and a coordinated design in which all aspects associated with the interface (e.g., documentation, tutorials, future releases) share consistent elements. In CBPR, product usability goals are partly determined by the community, because the community is considered a collaborative partner in all phases of the research. These usability goals should also build on the strengths and resources within the community (principle 2). For example, men in our study attend church often, so it could be advantageous to consider how the product might fit into the church environment, where the system could be more accessible than in the home. Furthermore, support for the system from social networks within churches (e.g., clergy, men's ministry) may increase the likelihood of system use [40].

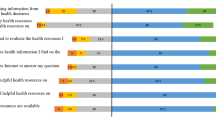

In our formative approach, the research team examined the literature relevant to PrCA, informed decision-making behavior among AAs, and technology use and acceptance (both in general and for health decision making). We then convened multiple focus groups to determine AA men's (1) current PrCA risk and screening knowledge, (2) decision-making processes for PrCA screening, (3) usage of, attitudes toward, and access to interactive communication technologies (e.g., computers, ATMs, kiosks), and (4) preferences for and desired characteristics of a computer-based PrCA education program. These discussions helped the research team determine what information a PrCA education program should include based on knowledge and decision-making needs, such as facts about PrCA screening. Learning which technologies AA men use frequently, and which aspects of those technologies make them easy to use, also helped the research team identify usability elements that could be incorporated into a digital PrCA education program. Finally, we gathered additional input on the men's openness to using a computer for PrCA decision making, which we used to set goals for designing the program. All of the input gained through these groups was used to create a paper prototype (i.e., a storyboard) in PowerPoint and a short animated clip displaying an avatar that could potentially be used in the program. A more detailed description of the results can be found elsewhere [41].

The community was invited to participate in a second phase of focus groups to thoroughly review the storyboard, the accompanying character script, and the clip of the AA male avatar who would provide users with PrCA information throughout the 12-minute education module. Before these focus groups, and consistent with CBPR principle 8, findings and knowledge gained through the first focus groups were disseminated to study participants. We also explained how these findings had informed the development of a storyboard and script that captured the intended content and functionality of the future intervention, and the community was invited to ask questions before participating. In the second phase of focus groups, participants were specifically asked to critique the script's content to ensure that it was appropriate for users with diverse literacy levels, and to share their thoughts on navigation elements for users with lower levels of computer fluency. Participants also provided input on the avatar's appearance and on whether users would accept it. The focus groups provided a forum for co-learning and empowerment (principle 5) because the exchange between participants and researchers left both parties with useful information. For example, while the participants gained additional knowledge about PrCA and the development process, the research team gained knowledge about decision-making behavior among AA men. In addition, the research team learned about participants' specific technology needs, while the participants learned more about what was technologically possible. The feedback received was then used to revise the storyboard and script and to develop a full prototype of a PrCA education program.
The design of this prototype (based on significant community input) represented the integration of knowledge and action for the mutual benefit of our partners (i.e., the AA faith-based community).

2.4 Prototyping/Heuristic Analysis

In the UEL, prototyping is suggested after a heuristic analysis is performed, but our research team developed a prototype before the heuristic analysis, using a series of usability guidelines [42] and significant community feedback. Developing the prototype first allowed experts to evaluate a working system early in the process. Deferring the prototype until after the heuristic analysis could be more costly when a product is difficult to develop or modify.

The research team solicited assistance from an animator to help translate the storyboard (created in PowerPoint) into a full prototype. Co-learning and empowerment also applied to the relationship between the animator and the research team, because both parties were actively involved in the development process, which led to an exchange of information and skills (e.g., the PI learned basic animation skills; the animator learned about PrCA). The prototyping process used motion-capture software with a Microsoft Kinect camera, and the captured motion was applied to a custom-designed avatar. The rendered video clips were then uploaded to learning software capable of playing clips based on user decisions and administering quizzes throughout the user's educational experience. The resulting PrCA prototype was designed to run on any computer, but the community preferred that the final product be administered on a large touch-screen monitor to accommodate aging users who may also have lower levels of computer fluency.
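The paper does not name the learning software, so its internals are unknown. As a rough illustration only, the Python sketch below models the behavior described above (avatar clips played according to user decisions, with quizzes embedded along the way) as a small graph of clips. The names `Clip`, `QuizItem`, and `run_module`, the file names, and the sample quiz items are hypothetical. The function replays pre-recorded choices instead of reading touch-screen input so that it can run as-is.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class QuizItem:
    """Hypothetical multiple-choice check shown after a clip."""
    question: str
    options: list[str]
    correct: int  # index of the correct option


@dataclass
class Clip:
    """Hypothetical rendered avatar segment plus the choices offered after it."""
    clip_id: str
    video_file: str  # placeholder name for a rendered video clip
    choices: dict[str, str] = field(default_factory=dict)  # choice label -> next clip_id
    quiz: Optional[QuizItem] = None


def run_module(clips: dict[str, Clip], start: str,
               decisions: list[str], answers: list[int]) -> tuple[list[str], int]:
    """Walk the clip graph from `start`, replaying recorded decisions and quiz answers.

    Returns the clip IDs in the order played and the number of correct quiz answers.
    """
    played: list[str] = []
    score = 0
    decision_iter, answer_iter = iter(decisions), iter(answers)
    current: Optional[str] = start
    while current is not None:
        clip = clips[current]
        played.append(clip.clip_id)  # a real player would show the video here
        # Quiz answers are consumed only for clips that carry a quiz.
        if clip.quiz is not None and next(answer_iter) == clip.quiz.correct:
            score += 1
        # A clip with no choices ends the module; otherwise follow the user's choice.
        current = clip.choices[next(decision_iter)] if clip.choices else None
    return played, score


CLIPS = {
    "intro": Clip("intro", "intro.mp4", {"learn_more": "risk", "skip": "screening"}),
    "risk": Clip("risk", "risk.mp4", {"next": "screening"},
                 QuizItem("Which group has higher PrCA incidence?",
                          ["AA men", "EA men"], correct=0)),
    "screening": Clip("screening", "screening.mp4",
                      quiz=QuizItem("Is the PSA test a blood test?",
                                    ["Yes", "No"], correct=0)),
}

if __name__ == "__main__":
    played, score = run_module(CLIPS, "intro", ["learn_more", "next"], [0, 0])
    print(played, score)  # ['intro', 'risk', 'screening'] 2
```

Keeping clips and branching rules as data rather than code would let a team revise content after each round of community feedback without rewriting the player, which suits an iterative design process; this is a design suggestion, not a description of the study's software.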

The PrCA education program was then mailed, along with a heuristic evaluation instrument, to six experts with experience and knowledge of digital health intervention design. The evaluation instrument was based on Nielsen's 10 usability heuristics for interface design [43]. Most of the recommended changes concerned aesthetics rather than the usability of the education program. The program then underwent empirical testing through 10 in-depth interviews, which were intended to validate the usability of the prototype through observation of system use and follow-up interviews with community members. These interviews focused on system design constructs similar to those in our expert heuristic evaluation (e.g., how similarly does this system function compared with other technologies you have used before?). The interviews exemplified involving the community in all phases of the research process. Details regarding the prototyping process and the results from the second phase of focus groups and in-depth interviews can be found elsewhere [44].
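The paper does not report how the six experts' responses were compiled. One common convention, assumed here, is for each expert to log problems against Nielsen's ten heuristics with a severity rating from 0 (not a problem) to 4 (usability catastrophe), where 1 denotes a cosmetic problem only. Tallying ratings per heuristic, as in the Python sketch below, is one way to separate aesthetic changes from genuine usability problems. The function `summarize` and the sample findings are hypothetical and are not the study's data.

```python
from collections import defaultdict
from statistics import mean

# Nielsen's ten usability heuristics, in their conventional 1-10 order.
NIELSEN_HEURISTICS = (
    "Visibility of system status",
    "Match between system and the real world",
    "User control and freedom",
    "Consistency and standards",
    "Error prevention",
    "Recognition rather than recall",
    "Flexibility and efficiency of use",
    "Aesthetic and minimalist design",
    "Help users recognize, diagnose, and recover from errors",
    "Help and documentation",
)


def summarize(findings):
    """Aggregate (expert_id, heuristic_number, severity) tuples per heuristic.

    Severity uses a 0-4 scale on which 1 means a cosmetic problem only.
    Heuristics are returned in numeric order.
    """
    by_heuristic = defaultdict(list)
    for expert_id, number, severity in findings:
        if not 1 <= number <= len(NIELSEN_HEURISTICS):
            raise ValueError(f"unknown heuristic number: {number}")
        if not 0 <= severity <= 4:
            raise ValueError(f"severity must be 0-4, got {severity}")
        by_heuristic[number].append((expert_id, severity))

    summary = {}
    for number in sorted(by_heuristic):
        ratings = by_heuristic[number]
        severities = [s for _, s in ratings]
        summary[NIELSEN_HEURISTICS[number - 1]] = {
            "experts": len({e for e, _ in ratings}),  # distinct experts citing it
            "mean_severity": round(mean(severities), 2),
            "cosmetic_only": all(s <= 1 for s in severities),
        }
    return summary


if __name__ == "__main__":
    # Made-up findings for illustration only.
    sample = [("E1", 8, 1), ("E2", 8, 1), ("E3", 8, 1), ("E4", 1, 2)]
    for heuristic, stats in summarize(sample).items():
        print(heuristic, stats)
```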

2.5 Iterative Design

CBPR, much like the UEL, supports developing interventions and systems through a cyclical and iterative process (principle 6) in which any stage of the design process can be revisited to produce the most usable system. In developing the PrCA education program, the design from conception to prototype was iteratively refined through multiple focus groups, in-depth interviews, and a heuristic evaluation, with the community involved in each of these phases of research. Before the research team's planned field testing of the system, the program will be further revised based on changes recommended during the heuristic evaluation and in-depth interviews described above. After this field testing (the next step), the research team will revisit the design again to make any changes that could improve the intervention.

2.6 Collect Feedback from Field Use (Next Steps)

Based on findings from the heuristic evaluation and empirical testing, the research and development team will make the changes necessary to increase the usability and professional quality of the PrCA education program. The research team will then pilot the program among AA men who did not participate in its development. For the pilot, the program will be administered on both tablets and all-in-one touch-screen computers. Men from the design phase will, however, be invited to participate in this phase of research by helping recruit other participants for the pilot. Using pre- and post-surveys, the research team can assess the system's effect on the hypothesized knowledge and behavioral outcomes (e.g., PrCA knowledge, informed decision-making self-efficacy) and the system's usability, based on both general heuristics and overall satisfaction with the user experience. At the conclusion of our study, we will disseminate findings and knowledge gained to all partners through local forums with study participants and stakeholders, who will be invited to discuss where the system would be most accessible to AA men within and beyond the AA faith-based community (Table 1).
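The paper does not specify how the pre- and post-survey data will be analyzed. One common option, assumed here for illustration, is a paired comparison of each participant's pre- and post-test scores (for example, a PrCA knowledge score). The Python sketch below computes the mean change and the paired t statistic, t = mean(d) / (sd(d) / sqrt(n)), where d is each participant's post-minus-pre difference. The function name and the scores are hypothetical. A p-value would additionally require the t distribution with n - 1 degrees of freedom (e.g., from SciPy), which is omitted to keep the example dependency-free.

```python
from math import sqrt
from statistics import mean, stdev


def paired_change(pre, post):
    """Return (mean change, paired t statistic) for matched pre/post scores.

    pre[i] and post[i] must belong to the same participant.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    if len(pre) < 2:
        raise ValueError("need at least two participants")
    diffs = [b - a for a, b in zip(pre, post)]  # post minus pre, per participant
    d_bar = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = d_bar / (sd / sqrt(len(diffs)))
    return d_bar, t


if __name__ == "__main__":
    # Hypothetical knowledge scores for four participants (not study data).
    pre_scores = [5, 6, 4, 7]
    post_scores = [8, 7, 6, 9]
    change, t_stat = paired_change(pre_scores, post_scores)
    print(f"mean change = {change:.2f}, t = {t_stat:.3f}")  # mean change = 2.00, t = 4.899
```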

3 Summary/Conclusions

UEL and CBPR principles share multiple strengths and similarities, and using them in concert could lead to stronger computer-based intervention designs for minority populations, who may be far less likely to participate in a non-targeted effort to solicit feedback on a product or system design. CBPR emphasizes an equitable partnership between the developer and the community, which is not central to the UEL. Conversely, CBPR has not been used extensively in studies focused on developing computer-based education interventions and, for system design, should not be used without a set of usability guidelines. Further research is warranted to assess the impact of combining UEL and CBPR principles to develop technologies that help diverse populations prevent a range of diseases.

References

1. Siegel, R., et al.: Cancer statistics, 2014. CA Cancer J. Clin. 64(1), 9–29 (2014)

2. American Cancer Society: Cancer Facts and Figures 2015. American Cancer Society, Atlanta (2015)

3. American Cancer Society: Prostate Cancer: Early Detection. American Cancer Society, Atlanta (2013)

4. Briss, P., et al.: Promoting informed decisions about cancer screening in communities and healthcare systems. Am. J. Prev. Med. 26(1), 67–80 (2004)

5. Schröder, F.H., et al.: Screening and prostate-cancer mortality in a randomized European study. N. Engl. J. Med. 360(13), 1320–1328 (2009)

6. Andriole, G.L., et al.: Mortality results from a randomized prostate-cancer screening trial. N. Engl. J. Med. 360(13), 1310–1319 (2009)

7. Welch, H.G., Albertsen, P.C.: Prostate cancer diagnosis and treatment after the introduction of prostate-specific antigen screening: 1986–2005. J. Natl Cancer Inst. 101(19), 1325–1329 (2009)

8. O'Connor, A.M.: User Manual: Decision Self-Efficacy Scale (1995)

9. Bass, S.B., et al.: Relationship of internet health information use with patient behavior and self-efficacy: experiences of newly diagnosed cancer patients who contact the National Cancer Institute's Cancer Information Service. J. Health Commun. 11(2), 219–236 (2006)

10. Wakefield, C.E., et al.: Development and pilot testing of an online screening decision aid for men with a family history of prostate cancer. Patient Educ. Couns. 83(1), 64–72 (2011)

11. Allen, J.D., et al.: A computer-tailored intervention to promote informed decision making for prostate cancer screening among African American men. Am. J. Mens Health 3(4), 340–351 (2009)

12. Evans, R., et al.: Supporting informed decision making for prostate specific antigen (PSA) testing on the web: an online randomized controlled trial. J. Med. Internet Res. 12(3), e27 (2010)

13. Patton, M.Q.: Qualitative Research and Evaluation Methods. Sage Publications, Thousand Oaks (2002)

14. Israel, B.A., et al.: Review of community-based research: assessing partnership approaches to improve public health. Annu. Rev. Public Health 19, 173–202 (1998)

15. Schulz, A.J., et al.: A community-based participatory planning process and multilevel intervention design toward eliminating cardiovascular health inequities. Health Promot. Pract. 12(6), 900–911 (2011)

16. Nielsen, J.: The usability engineering life cycle. Computer 25(3), 12–22 (1992)

17. Braun, K.L., et al.: Operationalization of community-based participatory research principles across NCI's community networks programs. Am. J. Public Health 102(6), 1195–1203 (2012)

18. Wallerstein, N., Duran, B.: Community-based participatory research contributions to intervention research: the intersection of science and practice to improve health equity. Am. J. Public Health 100(Suppl. 1), S40–S46 (2010)

19. Hebert, J., et al.: Interdisciplinary, translational, and community-based participatory research: finding a common language to improve cancer research. Cancer Epidemiol. Biomark. Prev. 18(4), 1213–1217 (2009)

20. Letcher, A.S., Perlow, K.M.: Community-based participatory research shows how a community initiative creates networks to improve well-being. Am. J. Prev. Med. 37(6, suppl 1), S292–S299 (2009)

21. Kerner, J.F.: Integrating research, practice, and policy: what we see depends on where we stand. J. Public Health Manage. Pract. 14(2), 193–198 (2008)

22. Kerner, J.F., et al.: Translating research into improved outcomes in comprehensive cancer control. Cancer Causes Control 16(Suppl. 1), 27–40 (2005)

23. Israel, B.A., et al.: Community-based participatory research: policy recommendations for promoting a partnership approach in health research. Educ. Health 14(2), 182–197 (2001)

24. Israel, B.A., et al.: Critical issues in developing and following community-based participatory research principles. In: Wallerstein, N., Minkler, M. (eds.) Community-Based Participatory Research for Health, pp. 53–79. Jossey-Bass, San Francisco (2003)

25. Strong, L.L., et al.: Piloting interventions within a community-based participatory research framework: lessons learned from the Healthy Environments Partnership. Prog. Community Health Partnerships Res. Educ. Action 3(4), 327 (2009)

26. Friedman, D.B., et al.: Developing partnerships and recruiting dyads for a prostate cancer informed decision making program: lessons learned from a community-academic-clinical team. J. Cancer Educ. 27(2), 243–249 (2012)

27. Hackenberg, G., McCall, R., Broll, W.: Lightweight palm and finger tracking for real-time 3D gesture control. In: Virtual Reality Conference (VR). IEEE (2011)

28. Hebert, J.R., et al.: Mapping cancer mortality-to-incidence ratios to illustrate racial and gender disparities in a high-risk population. Cancer 115(11), 2539–2552 (2009)

29. Holt, C.L., et al.: A comparison of a spiritually based and non-spiritually based educational intervention for informed decision making for prostate cancer screening among church-attending African-American men. Urol. Nurs. 29(4), 249–258 (2009)

30. Holt, C.L., Wynn, T.A., Darrington, J.: Religious involvement and prostate cancer screening behaviors among southeastern African American men. Am. J. Mens Health 3(3), 214–223 (2009)

31. Corbie-Smith, G., et al.: Partnerships in health disparities research and the roles of pastors of black churches: potential conflict, synergy, and expectations. J. Natl Med. Assoc. 102(9), 823–831 (2010)

32. Allicock, M., et al.: Promoting fruit and vegetable consumption among members of black churches, Michigan and North Carolina, 2008–2010. Prev. Chronic Dis. 10, E33 (2013)

33. Wilcox, S., et al.: The Faith, Activity, and Nutrition (FAN) Program: design of a participatory research intervention to increase physical activity and improve dietary habits in African American churches. Contemp. Clin. Trials 31(4), 323–335 (2010)

34. Kaplan, S.A., et al.: Stirring up the mud: using a community-based participatory approach to address health disparities through a faith-based initiative. J. Health Care Poor Underserved 20(4), 1111–1123 (2009)

35. Drake, B.F., et al.: A church-based intervention to promote informed decision-making for prostate cancer screening among African-American men. J. Natl Med. Assoc. 102(3), 164–171 (2010)

36. Holt, C.L., et al.: Development of a spiritually based educational intervention to increase informed decision making for prostate cancer screening among church-attending African American men. J. Health Commun. 14(6), 590–604 (2009)

37. Holt, C.L., et al.: A comparison of a spiritually based and non-spiritually based educational intervention for informed decision making for prostate cancer screening among church-attending African-American men. Urol. Nurs. 29(4), 249–258 (2009)

38. Ford, J.G., et al.: Barriers to recruiting underrepresented populations to cancer clinical trials: a systematic review. Cancer 112(2), 228–242 (2008)

39. Owens, O.L., et al.: African American men's and women's perceptions of clinical trials research: focusing on prostate cancer among a high-risk population in the South. J. Health Care Poor Underserved 24(4), 1784–1800 (2013)

40. Venkatesh, V., et al.: User acceptance of information technology: toward a unified view. MIS Q. 27(3), 425–478 (2003)

41. Owens, O.L., et al.: Digital solutions for informed decision making: an academic-community partnership for the development of a prostate cancer decision aid for African-American men. Am. J. Mens Health (in press, 2014)

42. Keeker, K.: Improving Website Usability and Appeal. Microsoft Corporation, Redmond (2007)

43. Nielsen, J., Mack, R.L.: Usability Inspection Methods. Wiley, Hoboken (1994)

44. Owens, O.L., et al.: An iterative process for developing and evaluating a computer-based prostate cancer decision aid for African-American men. Health Promot. Pract. (in press, 2014)

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Owens, O.L. (2015). Principles for Developing Digital Health Interventions for Prostate Cancer: A Community-Based Design Approach with African American Men. In: Zhou, J., Salvendy, G. (eds) Human Aspects of IT for the Aged Population. Design for Everyday Life. ITAP 2015. Lecture Notes in Computer Science, vol 9194. Springer, Cham. https://doi.org/10.1007/978-3-319-20913-5_13

DOI: https://doi.org/10.1007/978-3-319-20913-5_13

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-20912-8

Online ISBN: 978-3-319-20913-5

eBook Packages: Computer Science, Computer Science (R0)