Abstract

The question addressed here is whether and how an increase in research funding in higher education leads to higher scientific productivity and to greater impact, as measured by current procedures (i.e. Hirsch and Egghe index score distributions). Recently collected data from Romanian universities show that increased financial resources are associated with an inflated number of publications that have rather low impact. We therefore follow the idea that higher research productivity and impact are determined not only by growing funding, but also by appropriate policy incentives. Consequently, we analyze the institutional arrangements brought about by the recent reforms in Romanian higher education and suggest that their institutional incentives could also lead to an increase in the impact of research productivity. In the context of economic crisis and scarce public resources, we argue that higher research productivity and impact may be better delivered by providing institutional incentives that generate responsible and efficient resource allocation and spending in university research centers.

1 Introduction

Some recently introduced institutional arrangements in the Romanian higher education system aimed to increase the quality of both research and teaching, while also giving Romanian universities incentives to connect better to the international stream of research and ideas. These arrangements were designed to move the Romanian higher education system away from the traditionally praised Humboldtian model, in which research and teaching are harmoniously combined within each and every university, towards a model differentiated by a division of labour among universities, that is, research-oriented versus teaching-oriented universities (Shin and Toutkoushian 2011). The key rationale for this change was the need for universities to develop specialized competences so as to spend the rather scarce public resources effectively and efficiently, while relying on existing and prospective faculty.

We build on Schwarz and Teichler's (2002) view that the institutional framework largely determines the theoretical and methodological standards that higher education research strives for or achieves. Moreover, as Mace (1995) showed, new funding mechanisms are expected to change the behaviour of academics in both teaching and research. Consequently, our view is that the quality of research productivity is affected not only by funding levels, but also by the incentives conveyed by the institutional arrangements governing the higher education system. As argued by Estermann and Pruvot (2011), diversity in the funding structure is an important condition for universities to achieve financial sustainability. While public funding is an important income source for Romanian universities, the recently introduced incentives are expected to lead universities to seek additional funding sources. However, we show that income diversification and higher levels of funding are not the only drivers of higher research quality. The introduction of performance criteria in the allocation of public funding should act as a driving force towards increasing research productivity and its impact.

In the first section of this paper, we briefly analyze, from an institutional analysis perspective, the legal arrangements recently introduced in Romanian higher education. We stress the most important changes in several areas, notably academic career, quality assurance and funding. In the second section, we briefly discuss the idea of scientific productivity, suggesting that citations and citation-based formulae (i.e. the H-index and G-index) are acceptable tools for measuring research impact. Finally, we report and discuss the findings of our analysis of the scientific productivity of two types of Romanian university departments (i.e. Sociology; Political Science and International Relations). We conclude by suggesting that an increase in the quality of research productivity results from combining income diversification and funding growth with institutional incentives that stress performance criteria.

2 Institutional Arrangements Within Romanian Higher Education

2.1 The Problem of Increasing Research Productivity

When approaching issues related to academic quality and research productivity in higher education systems similar to the Romanian one, at least two streams of ideas can be identified. On the one hand, a dominant stream builds on the idea that public expenditure or public funding necessarily yields enhanced academic quality and research productivity; on this view, the best way to grow research productivity and academic quality is to increase the flow of financial resources. On the other hand, a rather marginal stream assumes that research productivity and impact could be improved by spending public funding more efficiently. In other words, academic quality and research productivity could be increased by holding public funding constant while improving the mechanisms for exploiting existing resources more efficiently. From this perspective, specific incentives and institutional arrangements are needed to bring about a significant increase in the efficiency with which the same quantity of financial resources is spent.

These two streams of ideas may be considered as complementary. From such a perspective, we put forward a model in which we merge the need for increasing public funding with those incentives that would lead to an increase in the efficiency of spending input resources (such as funding).

To test the model, we present a case study focused on the recent reforms in the Romanian higher education system. The reforms introduced after 2011 were legally designed to increase the efficiency of spending the public funding made available for academic research and teaching (see the Law of Education no. 1/2011).

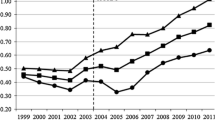

Increasing expenditure trends can be identified within the Romanian tertiary education and R&D sectors (as shown in Fig. 1), even if these are particularly small compared with other EU countries, and despite the provisions of the current Romanian Law of Education (according to which a minimum of 6 % of GDP ought to have been allocated in 2012 to tertiary education and at least 1 % of GDP to R&D).

Over the same period, reported scientific productivity also increased, as illustrated by the growing number of publications in Romanian sociology (Fig. 2). The data plotted in Fig. 2 were collected using the Publish or Perish software tool (Harzing 2007) and refer to the scientific productivity of academics working full-time in Romanian departments of sociology.

The Law of Education (no. 1/2011) provided the legal basis for important reforms in the Romanian education system: new institutions for the selection and recruitment of academic staff, a new mechanism of university funding, a new philosophy of higher education quality assurance and evaluation, a new arrangement for enhancing the institutional capacity of universities.

2.2 The Academic Career

Recognizing the need to audit institutional policies focused on academic and research staff, the Council on University Qualifications and Degrees (CNATDCU) formally introduced new quality evaluation mechanisms and schemes. The new staff development policy thus marked a shift from a traditional policy to a post-traditional one. While the traditional approach in Romania was based on inbreeding, localism and academic gerontocracy, treating age/seniority as the key element in recruitment and appointment, the post-traditional approach relies entirely on peer-reviewed academic performance and scientometric outputs. The post-traditional approach is meant to be meritocratic, emphasizing internationally acknowledged knowledge productivity. For instance, under the 2011 reforms, the recruitment and promotion of academic and research staff must take into account individual performance measured by specific criteria, such as publication impact (e.g. number of citations, G-index and H-index scores) and the number of publications (e.g. papers, books, book chapters) included in internationally indexed databases. This post-traditional approach to moving up the academic career ladder ignores age and considers the ability of academics and researchers to connect, through excellence in research, to international flows of ideas and research communities. In this context, the policy expectation is that the new selection procedures will filter occupational mobility and orient the academic career ladder towards fostering internationally recognized performance and originality.

2.3 The Quality Assurance Process

Concerning quality assurance procedures, the Romanian Agency for Quality Assurance in Higher Education (ARACIS), using the ACADEMIS project as a vehicle, has made several proposals for redefining quality assurance criteria and indicators. These proposals aimed to change the traditional philosophy of quality assurance and evaluation from an input-oriented approach to one that is highly standardized and relies mostly on results, particularly learning outcomes. The new philosophy is process-oriented and, especially, output/outcome-oriented, matching European and international trends (Stensaker 2011). Stressing the process and output/outcome features of higher education has been seen as an adequate means of increasing the efficiency and efficacy of the educational process, while also providing wider and better opportunities for exploiting the existing inputs into the process. Given the new quality assurance framework, some resistance is expected from universities that lack the institutional capacity to adjust their internal processes to the new institutional requirements. How to contain this resistance in the near future remains an open question, and lessons learned from other reported cases (Beach 2013) might become relevant.

2.4 The University Classification Exercise and the Introduction of Performance Criteria

The most radical change produced by the 2011 Law of Education was the University Classification and Study Program Ranking Exercise. This national evaluation exercise aimed (a) to break the systemically built-in institutional isomorphism that hid basic information on the differentiation of universities from stakeholders, and (b) to provide opportunities for the emergence of a more institutionally diverse higher education system. There was also an instrumental objective, in the sense that the exercise sought to differentiate among universities based on their institutional mission and performance. Consequently, Romanian universities were classified into three distinct classes: advanced research universities, research and teaching universities, and teaching-oriented universities. Furthermore, all university study programs were ranked. For instance, within the sociology ranking domain, the mathematics ranking domain and so on, all the corresponding study programs in the Romanian higher education system were ranked into five ordinal classes (from class A to class E).

These two outputs, institutional classification and study program ranking, are intended to be highly flexible over time, so as to allow universities and study programs to move both within and between classes and ranks according to their academic and research outcomes. They were intended to facilitate the institutional development of universities, enhance their quality assurance mechanisms, increase the quality of internal university operations and processes, and correct the informational asymmetry between universities and prospective students or other stakeholders. The evaluation exercise thus also sought to accomplish a substantial objective: providing universities with the necessary and accurate mechanisms for better understanding and establishing their own institutional mission and strategic development.

Both objectives were expected to have salient consequences for Romanian higher education. Accomplishing the instrumental objective was expected to correct the informational asymmetry (e.g. with respect to prospective students' awareness of the quality of the educational services provided by universities). Put more simply, different types of beneficiaries (e.g. prospective students, alumni, employers) were expected to have the proper means to evaluate university study programs in terms of performance and, consequently, to make informed choices. Moreover, universities themselves were expected to gain a clear and sound picture of their own scientific and teaching performance. Accomplishing the substantial objective, in turn, would enhance universities' capacity for reflexivity, which is expected to raise awareness of their institutional mission, strategic action and community involvement.

2.5 The New Public Funding Mechanism

Under the reform's institutional arrangements, public funding streams are to be correlated with the results of the university evaluation exercise (i.e. university classification and study program ranking). For instance, public funding should take into consideration how university study programs perform (i.e. their position within each ranking domain). This principle of funding performance in both teaching and research rests on the idea of spending public resources more efficiently. Public funding streams should concentrate especially on those universities and study programs that demonstrate higher quality in teaching and research (e.g. study programs holding top positions within their ranking domain, in teaching and/or research, are expected to receive more public funding, whereas poorly ranked study programs either lose their public funding or receive less financing for research and/or teaching). This mechanism is expected to produce competition among universities and specialized study programs across the higher education system, and to create the incentives/payoffs required to better structure university organizational environments. Within this type of institutional landscape, universities are predicted to orient their human resource strategies towards appointing highly competitive academic staff and to consider improving, or even closing, poorly performing study programs (i.e. to cut their losses). Though criticized, competitive funding seems to be a common feature of many higher education reforms worldwide (Marginson 2013).
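The allocation principle described above can be illustrated with a small Python sketch. It is only a toy under stated assumptions: the class weights are hypothetical numbers chosen to express "better-ranked programs get a larger share, class E gets nothing", not the coefficients actually used in Romania, and the function name `allocate_budget` is our own.

```python
# Hypothetical weights per ranking class (A = best, E = worst). These are NOT the
# official Romanian coefficients; they only encode "better rank, larger share".
CLASS_WEIGHTS = {"A": 1.5, "B": 1.2, "C": 1.0, "D": 0.7, "E": 0.0}


def allocate_budget(budget, program_classes, weights=CLASS_WEIGHTS):
    """Split a fixed budget among study programs in proportion to their class weight."""
    raw = {name: weights[rank_class] for name, rank_class in program_classes.items()}
    total_weight = sum(raw.values())
    if total_weight == 0:
        raise ValueError("no study program is eligible for funding")
    return {name: budget * w / total_weight for name, w in raw.items()}


shares = allocate_budget(100.0, {"program_1": "A", "program_2": "C", "program_3": "E"})
print(shares)  # → {'program_1': 60.0, 'program_2': 40.0, 'program_3': 0.0}
```

Holding the budget fixed while changing only the weights is what makes this a sketch of the efficiency argument rather than of a funding increase: the same money is redistributed towards better-ranked programs.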

Such reform policies, introduced in 2011 with respect to academic staff recruitment and promotion, quality assurance, university classification, study program ranking and public financing, are expected to lead the Romanian higher education system to spend public funding more efficiently.

3 Methodology

3.1 Research Productivity and Its Impact

We focus here on research, leaving teaching for later work. Various scientometric tools are available for measuring research productivity and impact. Firstly, one may measure the number of journal papers, books, book chapters, patents and so on produced by academics and departments. The emphasis is thus on the quantity of publications, from which one may estimate a scholar's or department's research productivity (Johnes 1988): a larger number of scientific items published in a given unit of time indicates a higher level of research productivity. However, this approach has many drawbacks. The most important is that a scholar could publish a large number of items without any real scientific impact, because they are of low quality or of little interest to other researchers. This drawback might be compensated by measuring the impact of a scholar's work in terms of citations (Shin and Toutkoushian 2011). In this paper, we consider citations an acceptable measure of the quality and impact of research productivity, despite the controversies and discussions about their pros and cons (Toutkoushian 1994; Toutkoushian et al. 2003).

To illustrate this approach, we consider the relationship between research productivity and its impact by looking at G-index and H-index scores (Egghe 2006a, b; Egghe and Rousseau 2008; Hirsch 2005; Woeginger 2008). Although the G-index, H-index and other similar indices were developed as tools for ranking journals, scholars or university departments, in this paper we use these formulae for other purposes. We treat G-index and H-index scores as proxies for estimating the impact of funding on academics' research productivity in two specific Romanian fields: Sociology and Political Science & International Relations. Citations, G-index and H-index scores are measured at both individual and departmental level, within the same discipline, building on previous similar studies (Becher 1994; Feldman 1987). We keep the model simple, ignoring other factors that determine or affect scientific productivity, such as personal career preferences, human capital and teaching workload (Porter and Umbach 2001; Webber 2011).
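For readers unfamiliar with the two indices, the Python sketch below implements their standard definitions: the H-index is the largest h such that h items have at least h citations each (Hirsch 2005), and the G-index is the largest g such that the g most-cited items together have at least g² citations (Egghe 2006a, b). It is an illustration under our own assumptions, not the Publish or Perish implementation, and the citation counts are hypothetical.

```python
def h_index(citations):
    """Largest h such that h items have at least h citations each (Hirsch 2005)."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites < rank:
            break  # citations only decrease from here, so no larger h is possible
        h = rank
    return h


def g_index(citations):
    """Largest g such that the g most-cited items have at least g**2 citations in total.

    g is capped at the number of items; some tools pad the list with uncited
    'fictitious' items so that g can exceed it, which this sketch does not do.
    """
    ranked = sorted(citations, reverse=True)
    g = 0
    running_total = 0
    for rank, cites in enumerate(ranked, start=1):
        running_total += cites
        if running_total >= rank * rank:
            g = rank
    return g


# Hypothetical citation counts for one scholar's five publications.
cites = [10, 8, 5, 4, 3]
print(h_index(cites), g_index(cites))  # → 4 5
```

The G-index is never smaller than the H-index: if h items each have at least h citations, they sum to at least h², so extra citations to the top items can only raise g.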

3.2 Methods

We used the Publish or Perish software (Harzing 2007) to measure the impact of Romanian academics' research productivity in two disciplinary domains. We collected G-index and H-index scores for all scholars working in the Romanian university departments of Political Science and International Relations (hereafter PS&IR) and of Sociology (Table 1). The staff composition of the 40 university departments was established using official data reports provided by ARACIS.

In considering the G-index and H-index scores, our emphasis was not on their ranking potential but on their descriptive use, exploring possible relationships between these results and the public funding mechanisms. We were thus interested in whether increasing research funding is positively associated with an increase in research productivity and its impact.

3.3 Data Analysis and Results

The distributions of individual G-index and H-index scores, split by ranking domain (i.e. Sociology and PS&IR), are shown in Figs. 3 and 4. The box-and-whisker plots show that 50 % of the academic staff working in the departments of PS&IR and Sociology have a G-index and an H-index score of zero. Put differently, half of the academics in the Romanian departments of Sociology and PS&IR have publications that almost no one has ever cited (or, at least, no item indexed by Google Scholar, such as a paper, book or magazine article, cites their scientific work). For the top 25 % of academics, G-index and H-index scores span a range of three, from two to five. Outliers in the score distributions point to some scholars whose work is more influential and has greater impact (e.g. there is a G-index outlier of 22 for PS&IR and of 27 for Sociology).
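The reading of the box plots rests on a simple point: because index scores cannot be negative, a median of zero means that at least half of the scores are zero. The Python sketch below makes this concrete with hypothetical scores (not the study's data), using the "inclusive" quartile method of the standard `statistics` module and Tukey's 1.5 × IQR rule for outliers; the software behind Figs. 3 and 4 may compute quartiles differently.

```python
from statistics import median, quantiles


def box_plot_summary(scores):
    """Quartiles, Tukey outliers (above Q3 + 1.5 x IQR) and the share of zero scores."""
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    # Only the upper fence matters here: scores are non-negative and Q1 is often 0.
    upper_fence = q3 + 1.5 * (q3 - q1)
    return {
        "q1": q1,
        "median": median(scores),
        "q3": q3,
        "outliers": [s for s in scores if s > upper_fence],
        "share_zero": sum(1 for s in scores if s == 0) / len(scores),
    }


# Hypothetical individual G-index scores for one ranking domain (illustrative only).
scores = [0, 0, 0, 0, 0, 0, 0, 1, 2, 3, 4, 5, 22]
summary = box_plot_summary(scores)
print(summary["median"], summary["outliers"])  # → 0 [22]
```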

Male scholars have higher G-index and H-index scores than female scholars, irrespective of ranking domain (median = 1 for males and median = 0 for females), and also greater variation (S.D. = 2.1 for males and 1.4 for females). Although the difference between the two medians is extremely small, it could be explained by the fact that male scholars have spent longer in the system than women: in the university departments of Sociology and of PS&IR, male scholars tend to occupy higher academic ranks (professors and associate professors). The relationship between sex and academic rank is supported by Pearson's chi-square test: for the Sociology ranking domain, χ² = 18, df = 4, p = 0.00, and for PS&IR, χ² = 38, df = 4, p = 0.00. We also found a positive correlation (Kendall's τ) between academic rank (a proxy for the time spent within the higher education system since, until recently, promotion up the academic ladder in Romania was gerontocratic) and H-index scores (τ = 0.475, p = 0.01, for Sociology, and τ = 0.450, p = 0.01, for PS&IR) or G-index scores (τ = 0.469, p = 0.01, for Sociology, and τ = 0.443, p = 0.01, for PS&IR). Taken together, these results indicate that male scholars score higher than female scholars and hold most of the highest academic positions (i.e. professors and associate professors).
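As a check on how the reported test statistics translate into p-values, the Python sketch below computes Pearson's chi-square statistic for a contingency table and, for an even number of degrees of freedom, its exact upper-tail probability. With sex coded in two categories, df = 4 corresponds to five rank categories. The table counts are hypothetical; only χ² = 18 with df = 4 comes from the text, and it gives p ≈ 0.0012, consistent with the reported p = 0.00 after rounding.

```python
import math


def pearson_chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    degrees_of_freedom = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, degrees_of_freedom


def chi_square_sf_even_df(x, df):
    """P(X >= x) for a chi-square variable with an even number of degrees of freedom.

    Closed form exp(-x/2) * sum_{i < df/2} (x/2)**i / i!, valid only for even df.
    """
    if df <= 0 or df % 2:
        raise ValueError("this closed form only holds for positive, even df")
    half = x / 2.0
    term = total = 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


# Hypothetical 2 x 5 table (rows: male, female; columns: five rank categories).
counts = [
    [12, 15, 10, 6, 3],
    [4, 9, 14, 12, 9],
]
stat, df = pearson_chi_square(counts)
p_value = chi_square_sf_even_df(stat, df)
print(df)  # → 4
print(round(chi_square_sf_even_df(18, 4), 5))  # → 0.00123
```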

We also wanted to control for the influence of time on the G-index and H-index score distributions. To do so, we took into account only scientific items published after 2006. We did this for the Sociology ranking domain, and the empirical findings suggest two things. Firstly, overall G-index scores are positively related to G-index scores computed only for publications after 2006 (τ = 0.806, p = 0.01), and overall H-index scores are likewise positively related to H-index scores computed only for publications after 2006 (τ = 0.821, p = 0.01). Secondly, there is also a positive relationship between academic title and G-index scores computed for publications after 2006 (τ = 0.371, p = 0.01). Although this relationship is weaker than the correlation between academic title and overall G-index scores (without time restriction), it shows that professors and associate professors still produce scientific work with greater impact (Table 2).
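The τ values reported here are rank correlations between ordinal variables with many ties (academic titles, and index scores with many zeros). Assuming they are Kendall's tau-b, which corrects for ties, a minimal standard-library Python sketch is given below; the rank coding and scores are hypothetical. If SciPy is available, `scipy.stats.kendalltau` computes tau-b by default and also returns a p-value, which this sketch does not.

```python
import math
from itertools import combinations


def kendall_tau_b(x, y):
    """Kendall's tau-b for two paired sequences, corrected for ties (O(n^2) pair count)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired sequences of length >= 2")
    concordant = discordant = tied_x_only = tied_y_only = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue  # tied on both variables: excluded from both denominator terms
        if dx == 0:
            tied_x_only += 1
        elif dy == 0:
            tied_y_only += 1
        elif (dx > 0) == (dy > 0):
            concordant += 1
        else:
            discordant += 1
    untied_x = concordant + discordant + tied_y_only  # pairs not tied on x
    untied_y = concordant + discordant + tied_x_only  # pairs not tied on y
    if untied_x == 0 or untied_y == 0:
        raise ValueError("tau-b is undefined when one variable is constant")
    return (concordant - discordant) / math.sqrt(untied_x * untied_y)


# Hypothetical ordinal ranks (1 = lowest title ... 4 = professor) and H-index scores.
ranks = [1, 1, 2, 2, 3, 3, 4, 4]
h_scores = [0, 0, 0, 1, 1, 3, 2, 6]
print(round(kendall_tau_b(ranks, h_scores), 3))  # → 0.792
```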

The differences among academic titles are smaller when G-index and H-index scores are computed only for publications after 2006 than when they are computed without time restriction. This is shown in Figs. 5 and 6, which display the differences among academic titles in G-index and H-index mean scores within the Romanian Sociology ranking domain.

Using the individual (nominal) G-index scores calculated for each member of the Romanian departments of Sociology and PS&IR, we were able to apply Tol’s formula to compute the successive G-index for each department (Tol 2008).
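Tol's (2008) successive G-index applies the G-index rule a second time, treating each department member's own G-index as if it were a citation count: a department scores g if its g best members have G-indices summing to at least g². The Python sketch below is our reading of that integer version (Tol also proposes a rational refinement that we do not reproduce), and it caps g at the number of members; the member scores are hypothetical.

```python
def g_index(values):
    """Largest g such that the g largest values sum to at least g**2 (capped at len(values))."""
    ranked = sorted(values, reverse=True)
    g = 0
    running_total = 0
    for rank, value in enumerate(ranked, start=1):
        running_total += value
        if running_total >= rank * rank:
            g = rank
    return g


def successive_g_index(member_g_indices):
    """Department-level (second-order) g-index computed from members' individual g-indices."""
    return g_index(member_g_indices)


# Hypothetical individual G-index scores of the members of one department.
department = [22, 6, 4, 2, 1, 0, 0, 0]
print(successive_g_index(department))  # → 5
```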

Fig. 7 shows the distribution of successive G-index scores for all 17 Romanian departments of Sociology and all 23 Romanian departments of PS&IR. The great majority of departments have successive G-index scores between one and three, while the top departments (designated by empty triangles and squares) have successive G-index scores between five and eleven. This distribution indicates at least one cleavage, between top and ordinary departments.

Analyzing the raw data reported by the 17 departments of Sociology during the official University Classification and Study Program Ranking Exercise (2011), we obtained some useful results for investigating the impact of research funding on research productivity and its quality. Firstly, there is a positive correlation between the total funding (Footnote 1) reported by the departments and the total number of publications (e.g. papers, books, book chapters) published between 2006 and 2011 (τ = 0.391, p (2-tailed) = 0.05). There is also a positive correlation between the total number of publications between 2006 and 2011 and public funding (Footnote 2) (τ = 0.477, p (2-tailed) = 0.05) or private (non-state) funding streams (τ = 0.510, p (2-tailed) = 0.01).

When measuring the impact of scientific productivity, however, we found no relationship between the total, public or private funding streams reported by the Sociology departments and either the number of citations (Footnote 3) or the departments' H and G scores (Footnote 4) (Table 3).

4 Discussion

Our investigation yielded three key results. Firstly, irrespective of gender or academic title, half of the scholars in the two disciplinary domains subject to ranking (i.e. Sociology and PS&IR) published research items (e.g. papers, books, book chapters) without any scientific impact (i.e. H and G index scores of zero). In every academic category except professors (i.e. among associate professors, lecturers and assistants), the modal individual G and H index score was zero. Inspecting the data, we detected an extremely small modal difference between male and female scholars in terms of G and H index scores. We suspect, however, that this difference is caused by the time spent within the system rather than by other factors (note, for instance, that male scholars tend to occupy higher positions on the academic ladder than women).

Secondly, in each of the two disciplinary domains a few top departments significantly outscore the rest. Departmental G index scores allowed us to discriminate only between top departments and ordinary departments (Fig. 7). For instance, 32 of the 40 departments are extremely similar, with departmental G index scores within a range of three (minimum one and maximum four). Moreover, the mean departmental G-index of the ordinary departments is only 19 % of the best departmental G-index score and only 29 % of the mean successive G-index of the top departments.

These two results show that, at least in the fields of Sociology and PS&IR, only a few departments publish scientific research items that have some impact (e.g. the maximum departmental G-index score is 12 for the Sociology ranking domain and 10 for PS&IR). Unfortunately, we cannot assess the intensity of this impact, as no comparable studies of Romanian higher education exist. It is nevertheless striking that half of the scholars in each of the two fields have G and H index scores of zero.

Thirdly, there is no statistically significant relationship between research funding and the impact of published research items. In this case, research funding seems to act mainly as an incentive that inflates the number of publications without increasing their specific or overall impact. Given a poor Romanian state and an underfunded higher education system, the question of how to use the few available financial resources efficiently deserves to be explored. For instance, one may consider whether public funding mechanisms should be coupled with specific institutional arrangements so as to competitively direct funds towards those departments that publish high-impact research items (Fig. 7). In fact, this is the policy option implemented after 2011 in the Romanian higher education system. It is now expected that the new institutional arrangements will bring incentives and procedures that might, at least potentially, enhance the efficient exploitation of public financial resources.

Although we have shown that the impact of scientific research items published by Romanian scholars in Sociology and PS&IR is of low intensity, our results must be approached with due care. Firstly, our data are representative of only two domains of study, so we cannot extend our interpretations and findings to other fields, let alone to the whole system. Secondly, the impact of research items published by scholars could also be measured and assessed using other tools and criteria. Thirdly, we used one-dimensional measures (H-index and G-index scores) as proxies for scientific impact.

Furthermore, one may question the key assumption adopted in our analysis. We consider that rankings may prove to be a tool not only for correcting informational asymmetry, but also for addressing the need to increase efficiency in spending public money. We adopted this assumption for two reasons: (a) to see how rankings would work within two of the most recently expanded disciplinary areas of the Romanian higher education system, and (b) to explore the possible policy consequences of rankings in terms of public funding and the efficient use of funds in higher education institutions. Both issues are academically sensitive. Turning rankings into, inter alia, funding criteria may have unintended consequences that are questionable from the perspective of an equitable distribution of public resources, while the same option may help produce the intended consequences of decreasing informational asymmetry for students and increasing economic efficiency in the use of public funds. The two types of consequences are not necessarily consistent, which adds to the wide range of critiques of using rankings to assess higher education (e.g. different ranking criteria generate different rankings; ranking methodologies are systematically biased, produce significant errors, and are deemed to ignore creative thinking and teaching while referring mainly to research results). Despite all this, it seems that only by changing the institutional arrangements within the higher education system and introducing different incentives will the quality of research and academics' research productivity stand a good chance of improving and of facing the competitive arena of today's higher education.

Instead of opting either for more public funds or for financial efficiency decoupled from existing levels of academic performance, a better policy option would be an institutional arrangement that combines financial incentives with the demand for increasing research productivity and quality.

5 Conclusion

We have argued that, at least in the disciplinary fields of Romanian sociology and political science, research productivity is strongly influenced by additional financial resources. Furthermore, we have suggested that the quality of research productivity (defined by its impact) could be increased by a mixture of two factors: institutional arrangements (that carry specific incentives) and additional financial resources.

We claimed that there are two streams of ideas and policies within the Romanian higher education system: (a) a traditional policy approach according to which research quality can be improved by a higher rate of public expenditure on research and teaching; and (b) a post-traditional policy according to which research quality and productivity may be improved by increasing the level of efficiency in spending public money.

We aimed to question the traditional policy (or philosophy), arguing that an increase in funding is not sufficient to increase academic quality automatically. Moreover, this traditional way of thinking is hard to sustain during periods of economic crisis, when governments, more often than not, do not increase their expenditure on higher education.

In the context of a poor Romanian economy and a heavily underfunded higher education system, introducing specific institutional arrangements to increase the efficiency of resource use could, at least theoretically, improve the quality of academic research. As shown, the reforms introduced in 2011 were meant to create new institutional arrangements in areas such as quality assurance, public funding, academic career, university classification and study program ranking. Their goal was to bring about a Matthew effect: top university departments receive more money.

An illustrative example of how the two philosophies described above operate is the relationship between public funding and the quality and productivity of research. The quality of the research output of university departments could be increased by embracing the principles of the post-traditional philosophy that underpinned the 2011 reforms. Accordingly, after university departments were ranked into five classes, only the better-positioned departments in each ranking domain were to receive public money. This incentive was expected to push poorly performing departments to increase the quality and productivity of their research: ordinary departments would either improve their departmental G-index scores so as to catch up with the top departments, or identify alternative funding streams so as to avoid demise. The incentive was thus meant to introduce competition among university departments toward improving the quality of their teaching and research and their research productivity. When university departments differ in quality and research productivity, funding all of them publicly and equally, irrespective of their performance (e.g. departmental G-index scores), is a clear case of wasting critical public resources (see Note 5), especially where the impact of research productivity is zero. Changing the institutional arrangements and introducing new incentives could drive organizational change toward higher academic quality.
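The departmental G-index invoked above can be illustrated with a short Python sketch. It is not the authors' procedure: it assumes that a department's score is obtained by pooling the citation counts of all publications its academics produced after 2006 (Notes 3 and 4), which is only one possible aggregation (Tol 2008, for instance, proposes a successive G-index). It follows Hirsch's (2005) definition of the H-index and Egghe's (2006a) definition of the G-index, in the variant that caps g at the number of publications. The helper `departmental_scores` and the toy records are hypothetical.

```python
from typing import Iterable, Sequence, Tuple


def h_index(citations: Iterable[int]) -> int:
    """Largest h such that h publications have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites < rank:
            break
        h = rank
    return h


def g_index(citations: Iterable[int]) -> int:
    """Largest g such that the top g publications together have >= g**2 citations.

    Assumed variant: g is capped at the number of publications
    (no padding with fictitious zero-citation items).
    """
    ranked = sorted(citations, reverse=True)
    g = 0
    running_total = 0
    for rank, cites in enumerate(ranked, start=1):
        running_total += cites
        if running_total >= rank * rank:
            g = rank
    return g


def departmental_scores(
    publications: Sequence[Tuple[int, int]], after_year: int = 2006
) -> Tuple[int, int]:
    """Hypothetical helper: pool a department's (year, citations) records,
    keep only publications strictly after `after_year`, return (h, g)."""
    recent = [cites for year, cites in publications if year > after_year]
    return h_index(recent), g_index(recent)


if __name__ == "__main__":
    # Invented toy records for illustration only; not the chapter's data.
    toy = [(2005, 50), (2007, 10), (2009, 8), (2010, 5), (2011, 4), (2012, 3)]
    print(departmental_scores(toy))  # (4, 5)
```

With the toy records, the 2005 item is excluded, leaving citation counts 10, 8, 5, 4 and 3: four items have at least four citations each (h = 4), while all five together total 30 ≥ 5² citations (g = 5), reflecting the G-index's greater sensitivity to highly cited items.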

Our findings also indirectly touch on some additional topics that may require further work and empirical investigation in the future. Firstly, Romanian academics, at least in sociology and political science, have a considerable non-academic productivity (e.g. newspaper columns, ideas shared through the media). Is this non-academic productivity relevant to various publics, including political elites? Should it be taken into account in assessing academic work? Secondly, there is considerable confusion regarding the mission of university departments. On the one hand, academics are required to publish in high-impact scientific journals if they are to climb the academic ladder. Consequently, measures like the ones discussed in this paper are highly relevant and should be used as assessment tools by university management. On the other hand, public funding is allocated on the basis of unclear criteria, which are also expected to stress the importance of teaching. While research criteria are being reviewed, the need for appropriate teaching criteria is pressing. From this point of view, there appears to be a clear mismatch of interests between policy-makers and academics (Scott 2010).

Notes

- 1.

Official raw data on Romanian university research funding are extremely hard to obtain for academic purposes. However, we constructed the scale variable total funding by aggregating all the research funding streams that the 17 sociology departments reported receiving between 2006 and 2011. By all funding streams we mean state funding, national funding from sources other than the state, and international funding. Moreover, the total number of publications was computed at the level of all Romanian sociology departments (there are 19 sociology departments, of which ARACIS accredited only 17).

- 2.

The correlation was computed after excluding the private departments that do not receive public funding.

- 3.

Using the Publish or Perish software (Harzing 2007), we computed the total number of citations of the publications that academics from the 17 Romanian sociology departments published after 2006.

- 4.

We computed departmental H-index and G-index scores using only publications published after 2006. We treat the number of citations and the departments' G-index and H-index scores as proxies for the impact of scientific productivity.

- 5.

Notwithstanding the provisions of the Law of Education, in 2013 the Romanian Ministry of Education continued to finance university departments according to student numbers rather than the quality of their research and teaching.
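The procedure described in Notes 1 and 2 can be sketched in Python as follows. The sketch is illustrative only: the `Department` record, its field names and the toy values are hypothetical, and since the chapter does not state which correlation coefficient was used, Pearson's r is assumed here. Total funding is the sum of the three funding streams listed in Note 1, and private departments are excluded before the coefficient is computed, as in Note 2.

```python
from dataclasses import dataclass
from math import sqrt
from typing import List


@dataclass
class Department:
    # Hypothetical record layout; not the authors' dataset.
    name: str
    is_private: bool
    state_funding: float
    other_national_funding: float  # national funding from non-state sources
    international_funding: float
    publications: int

    @property
    def total_funding(self) -> float:
        # Note 1: aggregate all reported research funding streams.
        return self.state_funding + self.other_national_funding + self.international_funding


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson product-moment correlation of two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length samples with at least two points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / sqrt(var_x * var_y)


def funding_publication_correlation(departments: List[Department]) -> float:
    # Note 2: drop private departments, which receive no public funding.
    public = [d for d in departments if not d.is_private]
    return pearson(
        [d.total_funding for d in public],
        [float(d.publications) for d in public],
    )


if __name__ == "__main__":
    # Invented toy values for illustration only; not the chapter's data.
    toy = [
        Department("A", False, 0.5, 0.25, 0.25, 2),
        Department("B", False, 1.0, 0.5, 0.5, 4),
        Department("C", False, 2.0, 0.5, 0.5, 6),
        Department("D", True, 0.0, 10.0, 0.0, 0),
    ]
    print(funding_publication_correlation(toy))  # 1.0
```

In the toy data, total funding and publication counts of the three public departments are exactly proportional, so r = 1.0; keeping the private department in the sample would turn the coefficient negative, which shows why the filtering step in Note 2 matters.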

References

Beach, D. (2013). Changing higher education: Converging policy-packages and experiences of changing academic work in Sweden. Journal of Education Policy, 28(4), 517–533.

Becher, T. (1994). The significance of disciplinary differences. Studies in Higher Education, 19(2), 151–161.

Egghe, L. (2006a). An improvement of the H-index: The G-index. ISSI Newsletter, 2(1), 8–9.

Egghe, L. (2006b). How to improve the H-index. The Scientist, 20(3), 14.

Egghe, L., & Rousseau, R. (2008). An H-index weighted by citation impact. Information Processing and Management, 44, 770–780.

Estermann, T., & Pruvot, E. B. (2011). Financially sustainable universities II. European universities diversifying income streams. Brussels: The European University Association.

Feldman, K. A. (1987). Research productivity and scholarly accomplishment of college teachers as related to their instructional effectiveness: A review and exploration. Research in Higher Education, 26(3), 227–298.

Harzing, A. W. (2007). Publish or Perish (Version 4.6) [Computer software]. http://www.harzing.com/pop.htm.

Hirsch, J. (2005). An index to quantify an individual’s scientific research output. Proceedings of the National Academy of Sciences, 102(46), 16569–16572.

Johnes, G. (1988). Research performance indicators in the university sector. Higher Education Quarterly, 42(1), 54–71.

Mace, J. (1995). Funding matters: A case study of two universities’ response to recent funding changes. Journal of Education Policy, 10(1), 57–74.

Marginson, S. (2013). The impossibility of capitalist markets in higher education. Journal of Education Policy, 28(3), 353–370.

Porter, S., & Umbach, P. (2001). Analyzing faculty workload data using multilevel modelling. Research in Higher Education, 42(2), 171–196.

Schwarz, S., & Teichler, U. (Eds.). (2002). The institutional basis of higher education research. Experiences and perspectives. New York: Kluwer Academic Publishers.

Scott, P. (2010). The research-policy gap. Journal of Education Policy, 14(3), 317–337.

Shin, J. C., & Toutkoushian, R. K. (2011). The past, present, and future of university rankings. In J. C. Shin, R. K. Toutkoushian, & U. Teichler (Eds.), University rankings. Theoretical basis, methodology and impact on global higher education (pp. 1–19). Dordrecht Heidelberg: Springer.

Stensaker, B. (2011). Accreditation of higher education in Europe—Moving towards the US model? Journal of Education Policy, 26(6), 757–769.

Tol, R. (2008). A rational, successive G-index applied to economics departments in Ireland. Journal of Informetrics, 2(2), 149–155.

Toutkoushian, R. (1994). Using citations to measure sex discrimination in faculty salaries. The Review of Higher Education, 18(1), 61–82.

Toutkoushian, R., Porter, S., Danielson, C., & Hollis, P. (2003). Using publication counts to measure an institution's research productivity. Research in Higher Education, 44(2), 121–148.

Webber, K. L. (2011). Measuring faculty productivity. In J. C. Shin, R. K. Toutkoushian, & U. Teichler (Eds.), University rankings. Theoretical basis, methodology and impact on global higher education (pp. 105–121). Dordrecht Heidelberg: Springer.

Woeginger, G. J. (2008). An axiomatic analysis of Egghe's G-index. Journal of Informetrics, 2(4), 364–368.

Acknowledgements

We wish to thank Adrian Miroiu, Professor of Political Science at the National University of Political Studies and Public Administration, Bucharest, Romania, and his research team for providing us with the raw data on the H-index and G-index scores of the full-time academics working within the 23 Romanian Political Science and International Relations Departments.

We also thank ARACIS for supporting this research by providing official statistics and reports on the academic personnel working in the 17 Romanian departments of sociology and the 23 Romanian departments of political science and international relations.

Rights and permissions

Open Access This chapter is distributed under the terms of the Creative Commons Attribution Noncommercial License, which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Copyright information

© 2015 The Author(s)

About this chapter

Cite this chapter

Vlăsceanu, L., Hâncean, MG. (2015). Policy Incentives and Research Productivity in the Romanian Higher Education. An Institutional Approach. In: Curaj, A., Matei, L., Pricopie, R., Salmi, J., Scott, P. (eds) The European Higher Education Area. Springer, Cham. https://doi.org/10.1007/978-3-319-20877-0_13

DOI: https://doi.org/10.1007/978-3-319-20877-0_13

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-18767-9

Online ISBN: 978-3-319-20877-0

eBook Packages: Humanities, Social Sciences and Law; Education (R0)