Abstract

In this paper, we improve the named-entity recognition (NER) capabilities of an existing text-based dialog system (TDS) in Spanish. Our solution is twofold: first, we developed a hidden Markov model part-of-speech (POS) tagger trained on frequency counts from over 120 million words; second, we obtained 2,283 real-world conversations from the interactions between users and a TDS. All interactions occurred through a natural-language text-based chat interface. The TDS was designed to help users decide which product from a well-defined catalog best suited their needs. The conversations were manually tagged using the classical Penn Treebank tag set, with the addition of an ENTITY tag for all words relating to a brand or product. The proposed system uses a hybrid approach to NER: first, it looks up each word in a previously defined catalog; if the word is not found, it uses the tagger to assign the appropriate POS tag. When tested on an independent conversation set, our solution achieved higher accuracy and higher recall than a current industry development.

Víctor R. Martínez and Luis Eduardo Pérez—These authors contributed equally to the work.


1 Introduction

Text-based Dialog Systems (TDS) help people accomplish a task using written language [24]. A TDS can provide services and information automatically. For example, many systems have been developed to provide information and manage flight ticket booking [22] or train tickets [11], to filter automobile classified ads according to user preferences [9], or to report the weather in a certain area [25]. Normally, these kinds of systems are composed of a dialog manager, a component that manages the state of the dialog, and a dialog strategy [12]. To provide a satisfactory user experience, the dialog manager is responsible for continuing the conversation appropriately, that is, reacting to the user's requests and staying on topic [16]. To ensure this, the system must be capable of resolving ambiguities in the dialog, in particular identifying which words refer to entities such as products or brands; this task is known as Named Entity Recognition (NER) [10].

Named Entity Recognition consists of detecting the most salient and informative elements in a text, such as names, locations and numbers [14]. The term was first coined for the Sixth Message Understanding Conference (MUC-6) [10]; since then, most work has been done for the English language (for a survey, please refer to [17]). Other well-represented languages include German, Spanish and Dutch [17].

Feasible solutions to NER employ either lexicon-based approaches or machine learning techniques, with a wide range of success [14, 17]. Some of the surveyed algorithms use Maximum Entropy classifiers [3], AdaBoost [4], Hidden Markov Models [1], and Memory-based Learning [21]. Most recent approaches employ deep learning models to improve accuracy and speed over previous taggers [5]. Unfortunately, all these models rely on access to large quantities (on the order of billions) of labeled examples, which are normally difficult to acquire, especially for the Spanish language.

Furthermore, in real-world conversations, perfect grammar cannot be expected from the human participant. Errors such as missing letters, missing punctuation marks, or misspelled entity names could easily cripple any catalog-based approach to NER. Several algorithms could be employed to circumvent these problems. For example, one could spell-check and automatically replace every error, or treat every word within a certain string distance of a correct entity name as a negligible error. However, these solutions do not cover the whole range of possible human errors, and they can be quite complicated to maintain as the catalog grows.

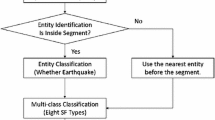

In this work, we present a semi-supervised model for tagging entities in natural-language conversation. By aggregating the information obtained from a POS tagger with that obtained from a catalog lookup, we aim to combine the strengths of both approaches in accuracy and recall. Our solution works as follows: first, we look up each word coming from the user in our catalog of entities. If the word is not present, we then use a POS tagger to find the best possible tag for each word in the user's sentence. We use the POS tag set defined by the Penn Treebank [15], with the addition of an ENTITY tag. We trained our POS tagger with 120 million tagged words from the Spanish Wikicorpus [20] and thousands of real-world conversations, which we manually tagged.

Our approach solves a specific problem in a rather specific situation. We aimed to develop a tool that is both easy to deploy and easy to understand. In the near future, this model could provide NLP-novice programmers and companies with a module that can be quickly incorporated as an agile enhancement in the development of conversational agents that provide information on products or services with a well-defined catalog. Examples of this scenario include the list of movies shown in a theater, or the different brands, models and contracts offered by a mobile phone company. To the best of the authors' knowledge, no similar solution has been proposed for the Spanish language. Further work could also incorporate our methodology into a Maximum Entropy classifier, AdaBoost, or even a deep learning technique to further improve entity detection.

The paper is structured as follows: in Sect. 2, we present the POS tagging problem and a brief background on POS taggers using hidden Markov models. In Sect. 3, we present the corpus and data used throughout this work. Section 4 presents our methodology, while Sect. 5 shows our main results. Section 6 describes the real-world implementation, Sect. 7 compares our work against the industry's current development, and Sect. 8 discusses our findings.

2 Formal Background

2.1 Part of Speech Tagger

In any spoken language, words can be grouped into equivalence classes called parts of speech (POS) [12]. In English and Spanish, some examples of POS are nouns, verbs and articles. Part-of-speech tagging is the process of marking up a word in a text as corresponding to a particular part of speech, based on both its definition and its context (i.e., its relationship with adjacent and related words in a phrase or sentence) [12]. A POS tagger is a program that takes as input the set of all possible tags in the language (e.g., nouns, verbs, adverbs, etc.) and a sentence. Its output is a single best tag for each word [12]. Because the same word can take different parts of speech depending on its context (e.g., Spanish "vino" can be the noun "wine" or a form of the verb "venir", "came"), this problem is non-trivial; POS tagging is therefore a problem of disambiguation.

The first stage of our solution follows the classical approach of using an HMM trained on a previously tagged corpus to find the best relation between words and tags. The formal definitions used in our work follow.

Hidden Markov Models. Loosely speaking, a Hidden Markov Model (HMM) is a Markov chain observed in noise [19]. A discussion of Markov chains is beyond the scope of this work; the reader is referred to [13, 18] for further information. The underlying Markov chain, denoted by \(\{X_k\}_{k\ge 0}\), is assumed to take values in a finite set. As the name states, it is hidden, that is, it is not observable. What is available to the observer is another stochastic process \(\{Y_k\}_{k\ge 0}\), linked to the Markov chain in that \(X_k\) governs the distribution of \(Y_k\) [19]. A simple definition is given by Cappé et al. [19] as follows:

Definition 1

A hidden Markov Model is a bivariate discrete time process \(\{X_k, Y_k\}_{k\ge 0}\) where \(\{X_k\}\) is a Markov chain and, conditional on \(\{X_k\}\), \(\{Y_k\}\) is a sequence of independent random variables. The state space of \(\{X_k\}\) is denoted by X, while the set in which \(\{Y_k\}\) takes its values is denoted by Y.

We use this definition as it works for our purpose and has the additional benefit of not overwhelming the reader with overly complex mathematical terms. Following this definition, the problem of assigning a sequence of tags to a sequence of words can be formulated as:

Definition 2

Let Y be the set of all possible POS tags in the language, and \(w_1,w_2,w_3,\ldots ,w_n\) a sequence of words. The POS-tagging problem can be expressed as the task of finding, for each word \(w_i\), the best tag \(\hat{y}_i\) among all possible tags \(y_i \in Y\). That is,

\[ \hat{y}_i = \arg\max_{y_i \in Y} P(y_i \mid w_i). \tag{1} \]

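Given a sequence of words \(w_1,w_2,\ldots ,w_T\), an alternative formulation of the POS tagging problem, which takes context into account, is finding the sequence \(y_1,y_2,\ldots ,y_T\) that maximizes the joint probability. That is,

\[ (\hat{y}_1,\ldots,\hat{y}_T) = \arg\max_{y_1,\ldots,y_T \in Y} P(w_1,\ldots,w_T,\, y_1,\ldots,y_T). \tag{2} \]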

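We can then assume that the joint probability in Eq. (2) is of the form

\[ P(w_1,\ldots,w_T,\, y_1,\ldots,y_T) = \prod_{i=1}^{T+1} q(y_i \mid y_{i-2}, y_{i-1}) \prod_{i=1}^{T} e(w_i \mid y_i), \tag{3} \]

with \(y_0 = y_{-1} = *\) and \(y_{T+1} = STOP\).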

The function q gives the probability of observing the tag \(y_i\) after having observed the tags \(y_{i-2}\) and \(y_{i-1}\). The function e gives the probability of observing the word \(w_i\) given that its tag is \(y_i\). The tag sequence that maximizes the product of these terms therefore maximizes the joint probability P. We now explain how both q and e can be estimated from a previously tagged corpus.
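To make the factorization in Eq. (3) concrete, the following Python sketch scores a single candidate tag sequence. It is an illustration, not the authors' code. The dictionaries `q` and `e` are a hypothetical representation, the padding symbols follow the conventions above, and any probabilities used with it are toy values.

```python
from math import prod

START, STOP = "*", "STOP"  # padding symbols y_{-1} = y_0 = * and y_{T+1} = STOP


def joint_probability(words, tags, q, e):
    """Score P(w_1..w_T, y_1..y_T) with the trigram factorization of Eq. (3).

    Hypothetical representation: q[(u, v, s)] = q(s | u, v) and
    e[(w, s)] = e(w | s); missing entries are treated as probability 0.
    """
    padded = [START, START] + list(tags) + [STOP]
    # One transition term for each of y_1 .. y_{T+1}; the last one is STOP.
    transitions = prod(
        q.get((padded[i - 2], padded[i - 1], padded[i]), 0.0)
        for i in range(2, len(padded))
    )
    # One emission term per word.
    emissions = prod(e.get((w, y), 0.0) for w, y in zip(words, tags))
    return transitions * emissions
```

Consider the toy values q(ENTITY | *, *) = 0.5, q(ENTITY | *, ENTITY) = 0.4, q(STOP | ENTITY, ENTITY) = 0.5, e(Samsung | ENTITY) = 0.1 and e(Galaxy | ENTITY) = 0.2. With these, the sequence Samsung/ENTITY Galaxy/ENTITY scores 0.5 · 0.4 · 0.5 · 0.1 · 0.2 = 0.002.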

Equation (3) captures the concept of using tag trigrams for tagging [7]: computing q depends on the two previous tags. Using the definition of conditional probability, q is the ratio between the number of times \(y_i\) is observed after \(y_{i-2}, y_{i-1}\) and the number of times the bigram \(y_{i-2}, y_{i-1}\) is observed in the corpus:

\[ q(y_i \mid y_{i-2}, y_{i-1}) = \frac{c(y_{i-2}, y_{i-1}, y_i)}{c(y_{i-2}, y_{i-1})}, \tag{4} \]

where \(c(\cdot)\) denotes the number of occurrences of a tag sequence in the corpus.

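Similarly, the probability that the tag \(y_i\) emits the word \(w_i\) can be obtained as the relative frequency observed in the corpus:

\[ e(w_i \mid y_i) = \frac{c(w_i, y_i)}{c(y_i)}, \tag{5} \]

where \(c(w_i, y_i)\) is the number of times \(w_i\) appears tagged as \(y_i\), and \(c(y_i)\) is the number of times the tag \(y_i\) appears.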
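The maximum-likelihood estimates in Eqs. (4) and (5) reduce to counting. The following is a minimal sketch. It assumes the tagged corpus is available in memory as a list of sentences of (word, tag) pairs, which is a hypothetical format; the authors worked from an aggregated frequency file.

```python
from collections import Counter

START, STOP = "*", "STOP"


def estimate_q_e(tagged_sentences):
    """Maximum-likelihood estimates of q (Eq. 4) and e (Eq. 5).

    tagged_sentences: list of sentences, each a list of (word, tag) pairs.
    Returns dicts q[(u, v, s)] = q(s | u, v) and e[(w, s)] = e(w | s).
    """
    trigrams, contexts = Counter(), Counter()
    tag_counts, word_tag_counts = Counter(), Counter()
    for sentence in tagged_sentences:
        tags = [START, START] + [tag for _, tag in sentence] + [STOP]
        for i in range(2, len(tags)):
            trigrams[(tags[i - 2], tags[i - 1], tags[i])] += 1
            # c(y_{i-2}, y_{i-1}): the bigram counted as a trigram context.
            contexts[(tags[i - 2], tags[i - 1])] += 1
        for word, tag in sentence:
            tag_counts[tag] += 1
            word_tag_counts[(word, tag)] += 1
    q = {tri: n / contexts[tri[:2]] for tri, n in trigrams.items()}
    e = {(w, t): n / tag_counts[t] for (w, t), n in word_tag_counts.items()}
    return q, e
```

The sketch counts each bigram only as the context of a trigram. This keeps the denominator of q consistent with its numerator, so for every context (u, v) the values q(· | u, v) sum to one.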

Viterbi Algorithm. The Viterbi algorithm, proposed in 1966 and published the following year by Andrew J. Viterbi, is a dynamic programming algorithm designed to find the most likely sequence of hidden states, called the Viterbi path, that could have produced an observed output [23]. It processes the sequence of outputs step by step, keeping for each state only the most probable path that ends in it and discarding all other hypotheses. The best overall path is then recovered by tracing back from the final step.

The algorithm was originally intended for decoding convolutional codes in digital communication. In such codes, redundancy is added to a message so that the message is not lost if the signal is corrupted; this is called error-correcting coding. Today, the algorithm is used in a wide variety of areas and situations [8]. Formally, the Viterbi algorithm is defined as follows.

Definition 3

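Let \(\lambda = \{X_k, Y_k\}_{k \ge 0}\) be an HMM with initial state probabilities \(\pi_k\), emission probabilities \(P(y \mid k)\), and a sequence of observed outputs \(y_1,y_2,\ldots ,y_T\). The most likely sequence of states \(x_1,x_2,\ldots ,x_T\) that produced these outputs can be found using the following recurrence relation:

\[ V_{1,k} = P(y_1 \mid k)\,\pi_k, \tag{6} \]

\[ V_{t,k} = \max_{s \in X}\left( P(y_t \mid k)\, A_{s,k}\, V_{t-1,s} \right), \tag{7} \]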

where \(V_{t,k}\) is the probability of the most likely sequence of states that produces the first t observations and has k as its final state, and \(A = (A_{s,k})\) is the transition matrix of \(\{X_k\}\), with \(A_{s,k}\) the probability of moving from state s to state k.

The Viterbi path can be found by following back pointers, which keep a reference to each state s used in the second equation. Let Ptr(k, t) be a function that returns the value of s used in the calculation of \(V_{t,k}\) if \(t > 1\), or k if \(t = 1\). Then:

\[ x_T = \arg\max_{s \in X} V_{T,s}, \tag{8} \]

\[ x_{t-1} = \mathrm{Ptr}(x_t, t). \tag{9} \]
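The recurrence and back pointers of Definition 3 can be sketched in Python as follows. This is a generic first-order Viterbi decoder written for illustration, not the authors' implementation. The dictionary-based parameters are an assumed representation: `start_p` holds \(\pi_k\), `trans_p` holds \(A_{s,k}\), and `emit_p` holds \(P(y \mid k)\).

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Most likely hidden state path for a first-order HMM (Definition 3).

    start_p[k] = pi_k, trans_p[s][k] = A_{s,k}, emit_p[k][y] = P(y | k).
    Returns (path, probability of that path).
    """
    # V[t][k]: probability of the best path ending in state k at step t.
    V = [{k: start_p[k] * emit_p[k].get(observations[0], 0.0) for k in states}]
    ptr = [{k: k for k in states}]  # Ptr(k, 1) = k
    for t in range(1, len(observations)):
        V.append({})
        ptr.append({})
        for k in states:
            # P(y_t | k) does not depend on s, so the argmax uses V * A only.
            best_s = max(states, key=lambda s: V[t - 1][s] * trans_p[s][k])
            V[t][k] = (V[t - 1][best_s] * trans_p[best_s][k]
                       * emit_p[k].get(observations[t], 0.0))
            ptr[t][k] = best_s
    # x_T = argmax_s V_{T,s}, then x_{t-1} = Ptr(x_t, t).
    last = max(states, key=lambda s: V[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(ptr[t][path[-1]])
    path.reverse()
    return path, V[-1][last]
```

As a check, take the textbook two-state example, which is unrelated to this work: states Healthy and Fever, observations normal, cold, dizzy. On that example the decoder returns the path Healthy, Healthy, Fever with probability 0.01512.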

3 Data

We propose an implementation of the Viterbi algorithm to tag every word in a conversation with a real-world dialog system. The resulting HMM corresponds to an observed output \(w_1,w_2,\ldots ,w_T\) (the words in a conversation), and the states \(y_1,y_2,\ldots ,y_T\) (the POS-tags for each word). Some simplifications and assumptions allowed us to implement the Markov model starting from a set of data associating each word (or tuple of words) with one (or more) tags, often called a tagged corpus.
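For POS tagging, the hidden states are the tags. Because the factorization in Eq. (3) conditions each tag on the two previous ones, the decoder runs over pairs of tags. The sketch below is a standard trigram Viterbi decoder under that model, given as an illustration rather than the authors' implementation. It works in log space to avoid underflow on long sentences, and the dictionary format for q and e is an assumption.

```python
from math import log

START, STOP = "*", "STOP"
NEG_INF = float("-inf")


def _log(p):
    """Log-probability, with log(0) = -inf."""
    return log(p) if p > 0 else NEG_INF


def viterbi_trigram(words, tagset, q, e):
    """Return (best tag sequence, its log-probability) under Eq. (3).

    Hypothetical inputs: q[(u, v, s)] = q(s | u, v), e[(w, s)] = e(w | s).
    """
    n = len(words)
    if n == 0:
        return [], NEG_INF

    def tags_at(i):
        # Positions -1 and 0 are the padding symbol '*'.
        return [START] if i <= 0 else list(tagset)

    # pi[(i, u, v)]: best log-prob of a prefix ending with tags u, v at i.
    pi = {(0, START, START): 0.0}
    bp = {}  # back pointers: the best tag w at position i - 2
    for i in range(1, n + 1):
        word = words[i - 1]
        for u in tags_at(i - 1):
            for v in tags_at(i):
                best, arg = NEG_INF, None
                for w in tags_at(i - 2):
                    score = (pi[(i - 1, w, u)]
                             + _log(q.get((w, u, v), 0.0))
                             + _log(e.get((word, v), 0.0)))
                    if arg is None or score > best:
                        best, arg = score, w
                pi[(i, u, v)] = best
                bp[(i, u, v)] = arg
    # Close the sentence with the STOP transition.
    best, last = NEG_INF, None
    for u in tags_at(n - 1):
        for v in tags_at(n):
            score = pi[(n, u, v)] + _log(q.get((u, v, STOP), 0.0))
            if last is None or score > best:
                best, last = score, (u, v)
    # Follow back pointers: y_{i-2} = bp[(i, y_{i-1}, y_i)].
    tags = list(last)
    for i in range(n, 2, -1):
        tags.insert(0, bp[(i, tags[0], tags[1])])
    return tags[-n:], best
```

For a sentence of T words the cost is O(T · |Y|³), so the size of the tag set dominates the running time.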

For this work, we used the Spanish Wikicorpus [20], a database of more than 120 million words obtained from Spanish Wikipedia articles and annotated with lemma and part-of-speech information using the open-source library FreeLing. The words have also been sense-annotated with the UKB word sense disambiguation algorithm. The tags used and the number of corresponding words in the corpus are given in Table 1. Note that the least common tag in this corpus is dates (W), with no examples in the texts used. We suspect this was due to an error on our side while parsing the corpus.

3.1 Rare Words

Even with a corpus of 120 million words, conversations with users commonly contain words that never appeared in the training set. We looked into two possible ways of handling these words: replacing every word that matches a rule in Table 2 with its corresponding token, and replacing only rare words with their respective tokens. A word is considered rare if its total frequency (the number of times it appears in the corpus, regardless of its tag) is less than 5. For this work, we used only 5 tokens (Table 2).
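The two replacement strategies can be sketched as follows. The five tokens of Table 2 are not reproduced in this text. The four patterns and the catch-all `_RARE_` token below are therefore hypothetical stand-ins; only the frequency threshold of 5 comes from the text.

```python
import re
from collections import Counter

RARE_THRESHOLD = 5  # from the text: a word is rare if its total frequency < 5
RARE_TOKEN = "_RARE_"
# Hypothetical stand-ins for the rules of Table 2 (checked in order).
PATTERNS = [
    ("_NUMERIC_", re.compile(r"\d+")),
    ("_NUMBER_LIKE_", re.compile(r"\d[\d.,:/-]*")),
    ("_ALL_CAPS_", re.compile(r"[A-ZÁÉÍÓÚÑ]+")),
    ("_INIT_CAP_", re.compile(r"[A-ZÁÉÍÓÚÑ]\w+")),
]


def pattern_token(word):
    """Return the token of the first matching pattern, or None."""
    for token, regex in PATTERNS:
        if regex.fullmatch(word):
            return token
    return None


def replace_with_tokens(tagged_sentences, mode, threshold=RARE_THRESHOLD):
    """mode="rare": replace only rare words (VM1-style).
    mode="all": also replace any word matching a pattern (VM2-style)."""
    freq = Counter(word for sentence in tagged_sentences for word, _ in sentence)
    result = []
    for sentence in tagged_sentences:
        new_sentence = []
        for word, tag in sentence:
            token = pattern_token(word)
            if freq[word] < threshold or (mode == "all" and token):
                word = token or RARE_TOKEN
            new_sentence.append((word, tag))
        result.append(new_sentence)
    return result
```

In "rare" mode, only words seen fewer than five times are replaced. In "all" mode, any word matching a pattern is replaced as well, so every replacement made in "rare" mode is also made in "all" mode.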

4 Methodology

We downloaded the Spanish Wikicorpus in its entirety and transformed its files into a frequency count file using a MapReduce scheme [6]. Our aggregated file contains the number of times each word appears, and the number of times each word appears with a certain tag (e.g., the number of times “light” appears as a noun). We then applied the rules for rare words, as discussed in Sect. 3.1, which yielded two new corpora and frequency files upon which we trained our HMMs.
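The counting job maps naturally onto MapReduce. The map step emits one key per word and one per (word, tag) pair, and the reduce step sums the values for each key. The single-process sketch below simulates both steps. It is not the authors' pipeline, and the key layout is an assumption.

```python
from collections import defaultdict
from itertools import chain


def map_sentence(sentence):
    """Map step: emit (key, 1) for every word and every (word, tag) pair."""
    for word, tag in sentence:
        yield (word,), 1       # total frequency of the word
        yield (word, tag), 1   # frequency of the word with this tag


def reduce_counts(pairs):
    """Reduce step: sum the values emitted for each key."""
    totals = defaultdict(int)
    for key, value in pairs:
        totals[key] += value
    return dict(totals)


def frequency_counts(tagged_sentences):
    """Run map then reduce in one process (the dict grouping plays the shuffle)."""
    return reduce_counts(chain.from_iterable(map(map_sentence, tagged_sentences)))
```

In a distributed run, the mapper output would be partitioned by key across reducers. Because summation is associative, the same `reduce_counts` logic could also serve as a combiner.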

Each of these two models was tested using five rounds of cross-validation over the whole Wikicorpus of 120 million words. In each round, our program read the frequency count file and calculated the most likely tag for each word in the training set. The result of each round was a confusion matrix, from which we obtained the model's precision, recall, and F-score for each tag.
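Per-tag precision, recall and F-score follow directly from the confusion matrix of each round. The following is a minimal sketch, assuming gold and predicted tags are given as parallel lists (hypothetical inputs).

```python
from collections import Counter


def confusion_matrix(gold, predicted):
    """Counts keyed by (gold_tag, predicted_tag)."""
    return Counter(zip(gold, predicted))


def per_tag_scores(matrix):
    """Return {tag: (precision, recall, F-score)} from a confusion matrix."""
    tags = {g for g, _ in matrix} | {p for _, p in matrix}
    scores = {}
    for tag in tags:
        tp = matrix[(tag, tag)]
        predicted = sum(c for (_, p), c in matrix.items() if p == tag)
        actual = sum(c for (g, _), c in matrix.items() if g == tag)
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[tag] = (precision, recall, f)
    return scores
```

Averaging these per-tag values over the five rounds gives figures of the kind reported in Table 3.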

5 Results

5.1 Replacing only Rare Words with Tokens (VM1)

For words labeled as nouns or names (N), the algorithm had an average precision of 12.15% and recall of 46.87%. Of the total of 21,651,297 names, it found only 10,147,962. Of the words the algorithm tagged as nouns or names, only about 3 out of every 25 were, in fact, nouns or names. The left side of Table 3 shows the results for this algorithm.
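As a quick consistency check, these figures agree with each other. The recall is the ratio of names found to names present, and a precision of 12.15% corresponds to roughly 3 correct names in every 25 words tagged N:

\[ \mathrm{recall}_N = \frac{10{,}147{,}962}{21{,}651{,}297} \approx 0.4687, \qquad \mathrm{precision}_N = 0.1215 \approx \frac{3}{25} = 0.12. \]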

5.2 HMM Replacing Any Word in Table 2 with a Token (VM2)

This model stands out because of an increase in precision and recall in the classification of all tags. This comes as no surprise, since the last rule in our translation table (Table 2) already covers the whole set of words replaced in the previous model. The results are shown on the right side of Table 3.

6 Implementing in a Real-World Scenario

Considering the results described in the previous section, we decided to compare and improve model VM2 in a real-world scenario. Such an opportunity was provided by BlueMessaging Mexico's text-based dialog systems.

BlueMessaging is a private initiative whose sole objective is to connect businesses and brands with customers anytime, anywhere [2]. It has developed several TDSs, one of which, named CPG, was designed to help users decide which product best fits their needs. CPG works with a well-defined product catalog in a specific domain, and interacts with the user through a natural-language text-based chat interface. For example, CPG could help a user select among hundreds of cell phone options by guiding a conversation about the products' details (see Table 4).

During the course of this work, we collected 2,283 real-world conversations from CPG. These conversations were manually tagged with the same tag set as the Wikicorpus. Words that were part of a product's name or brand were tagged as ENTITY. For example, Samsung Galaxy S3 was labeled as Samsung/ENTITY Galaxy/ENTITY S3/ENTITY. After this process, we had a new corpus containing 11,617 words (1,566 unique), of which 1,604 represent known entities.

We then tested three approaches. The baseline was set by CPG's current catalog approach. We then tested our VM2 model, which had scored best across the tests in the previous section. Finally, we used a hybrid approach that combines the POS tagger and the catalog to identify named entities in the dialog. Each approach was tested with 5 rounds of cross-validation on the conversation corpus. Here we present the results for each of the three.

6.1 Catalog-Based Tag Replacement (DICT)

We tested a simple model consisting only of tag substitution using a previously known catalog of entities. Each entry in this dictionary is a word or phrase that identifies an entity, and an entity can be identified by multiple entries. This is useful for compound product names that could be abbreviated by the user. For example: “galaxy fame”, “samsung fame” and “samsung galaxy fame” are all references to the same product. In this case, we used a catalog with 148 entries, identifying 45 unique entities.

To make the substitutions, the sentence to be labeled is compared against every entry in the catalog. If the sentence has an entry as a sub-sequence, the words in that sub-sequence are tagged as ENTITY, overriding any tags they might have had.
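A minimal sketch of this substitution follows. It assumes whitespace-tokenized input, case-insensitive matching, and contiguous sub-sequences; the text does not specify these details. The function name `catalog_tag` is hypothetical.

```python
def catalog_tag(words, catalog, tags=None):
    """Tag as ENTITY every contiguous run of words matching a catalog entry.

    words: the tokenized sentence; catalog: phrases such as "samsung galaxy fame";
    tags: optional existing tags, which matched words override.
    """
    tags = list(tags) if tags is not None else [None] * len(words)
    lowered = [w.lower() for w in words]
    for entry in catalog:
        phrase = entry.lower().split()
        n = len(phrase)
        for start in range(len(words) - n + 1):
            if lowered[start:start + n] == phrase:
                tags[start:start + n] = ["ENTITY"] * n
    return tags
```

Take the three catalog entries from the example above. With them, “quiero el Samsung Galaxy Fame” has its last three words tagged ENTITY, whereas the misspelled “samsung galaxi fame” matches nothing. This second case illustrates the brittleness discussed in the introduction.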

Using only this approach to tagging sentences, we reached a precision of 93.56% and recall of 64.04% for entities. However, this process gives no information about any other tag: it never labels words as anything other than ENTITY (Table 5).

6.2 Model VM2 Applied to Real-World Conversations (VM2-CPG)

Having tested this model on another corpus, we can compare those results with its performance on the corpus of conversations and see whether the model is too specific to the first corpus. Once again, we tested it using 5 rounds of cross-validation, in each round training on a subset of the corpus and using the rest as the test set. The results for this model are shown on the left part of Table 6. Compared with the previous corpus, the VM2 model in this instance had lower recall, but better precision for nouns, determiners, interjections, pronouns, and adverbs.

6.3 VM2 Model with Catalog-Based Tag Replacement (VM2-DICT)

Lastly, we present the results for the model combining three elements: the HMM, the replacement of words with the appropriate tokens, and tag replacement using a catalog of known entities. These results are shown on the right-hand side of Table 6. This method is capable of identifying 24 out of every 25 entities.
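The combination can be sketched as follows: words covered by a catalog entry are tagged ENTITY, and every other word receives the tag proposed by the POS tagger. This is an illustrative sketch under our own assumptions, not the production code. The tagger is passed in as a hypothetical callable (for instance, a VM2-style Viterbi decoder). It is run over the whole sentence, since a trigram model needs the neighbouring words as context.

```python
from typing import Callable, Iterable, List


def hybrid_tag(words: List[str], catalog: Iterable[str],
               pos_tagger: Callable[[List[str]], List[str]]) -> List[str]:
    """Catalog lookup first; the POS tagger labels every word the catalog misses."""
    lowered = [w.lower() for w in words]
    is_entity = [False] * len(words)
    for entry in catalog:
        phrase = entry.lower().split()
        n = len(phrase)
        for start in range(len(words) - n + 1):
            if lowered[start:start + n] == phrase:
                is_entity[start:start + n] = [True] * n
    # Hypothetical tagger interface: one tag per input word.
    pos_tags = pos_tagger(words)
    return ["ENTITY" if ent else tag for ent, tag in zip(is_entity, pos_tags)]
```

The tagger is trained on conversations that include the ENTITY tag. It can therefore, in principle, still label misspelled product names that the catalog misses, which is one plausible source of the extra recall.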

7 Comparison with Current System

As a final validation of our models, we compared their performance against the system currently in use on BlueMessaging Mexico's platform. We collected an additional 2,280 actual user conversations. Again, we manually tagged the sentences according to the Wikicorpus tag set with the inclusion of the ENTITY tag. For both systems, we measured precision and recall. For the VM2-DICT model, the error was reported as two standard deviations of the results of the 5-fold cross-validation. Figure 1 shows the results obtained for each system. We found a highly significant difference in precision and recall between the two systems (t-test \(t = 23.0933, \, p < 0.0001\) and \(t = 4.8509, \, p < 0.01\), respectively), with VM2-DICT performing better in both.

8 Discussion

We presented a semi-supervised approach to named entity recognition for the Spanish language. We noted that previous works on Spanish entity recognition used either a catalog-based approach or machine learning models, with a wide range of success. Our model leverages both approaches, improving both the accuracy and the recall of a real-world implementation.

With a real-world implementation in mind, where solutions are measured by their tangible results and by how easily they can be adapted to existing production schemes, we designed our system as an assemblage of well-studied techniques requiring only minor modifications. We believe that our solution would allow for quick development and deployment of text-based dialog systems in Spanish. In future work, this assemblage of simple techniques could evolve into more robust solutions, for example by exploring conditional random fields to replace some of the hidden Markov model assumptions. Moreover, deep learning implementations for the Spanish language might become possible in the future, as more researchers work on developing tagged corpora.

References

[1] Bikel, D.M., Miller, S., Schwartz, R., Weischedel, R.: Nymble: a high-performance learning name-finder. In: Proceedings of the Fifth Conference on Applied Natural Language Processing, pp. 194–201. Association for Computational Linguistics (1997)

[2] BlueMessaging: About BlueMessaging. http://bluemessaging.com/about/

[3] Borthwick, A.: A maximum entropy approach to named entity recognition. Ph.D. thesis, New York University (1999)

[4] Carreras, X., Màrquez, L., Padró, L.: Named entity extraction using AdaBoost. In: Proceedings of the 6th Conference on Natural Language Learning, vol. 20, pp. 1–4. Association for Computational Linguistics (2002)

[5] Collobert, R., Weston, J., Bottou, L., Karlen, M., Kavukcuoglu, K., Kuksa, P.: Natural language processing (almost) from scratch. J. Mach. Learn. Res. 12, 2493–2537 (2011)

[6] Dean, J., Ghemawat, S.: MapReduce: simplified data processing on large clusters. Commun. ACM 51(1), 107–113 (2008)

[7] Figueira, A.P.F.: 5. The Viterbi algorithm for HMMs - part I (2013). Available online at http://www.youtube.com/watch?v=sCO2riwPUTA

[8] Forney Jr., G.D.: The Viterbi algorithm: a personal history, April 2005. arXiv:cs/0504020, http://arxiv.org/abs/cs/0504020

[9] Goddeau, D., Meng, H., Polifroni, J., Seneff, S., Busayapongchai, S.: A form-based dialogue manager for spoken language applications. In: Proceedings of the Fourth International Conference on Spoken Language ICSLP 1996, vol. 2, pp. 701–704. IEEE (1996)

[10] Grishman, R., Sundheim, B.: Message understanding conference-6: a brief history. In: Proceedings of the 16th Conference on Computational Linguistics COLING 1996, vol. 1, pp. 466–471. Association for Computational Linguistics, Stroudsburg, PA, USA (1996)

[11] Hurtado, L.F., Griol, D., Sanchis, E., Segarra, E.: A stochastic approach to dialog management. In: IEEE Workshop on Automatic Speech Recognition and Understanding 2005, pp. 226–231. IEEE (2005)

[12] Jurafsky, D., Martin, J.H.: Speech and Language Processing. Pearson Education India, Noida (2000)

[13] Karlin, S., Taylor, H.M.: A First Course in Stochastic Processes. Academic Press, New York (2012)

[14] Kozareva, Z.: Bootstrapping named entity recognition with automatically generated gazetteer lists. In: Proceedings of the Eleventh Conference of the European Chapter of the Association for Computational Linguistics: Student Research Workshop, pp. 15–21. Association for Computational Linguistics (2006)

[15] Marcus, M.P., Marcinkiewicz, M.A., Santorini, B.: Building a large annotated corpus of English: the Penn Treebank. Comput. Linguist. 19(2), 313–330 (1993)

[16] Misu, T., Georgila, K., Leuski, A., Traum, D.: Reinforcement learning of question-answering dialogue policies for virtual museum guides. In: Proceedings of the 13th Annual Meeting of the Special Interest Group on Discourse and Dialogue, pp. 84–93. Association for Computational Linguistics (2012)

[17] Nadeau, D., Sekine, S.: A survey of named entity recognition and classification. Lingvist. Investig. 30(1), 3–26 (2007)

[18] Norris, J.R.: Markov Chains. Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge University Press, New York (1999)

[19] Cappé, O., Moulines, E., Rydén, T.: Inference in Hidden Markov Models. Springer Series in Statistics. Springer, New York (2005)

[20] Reese, S., Boleda, G., Cuadros, M., Padró, L., Rigau, G.: Wikicorpus: a word-sense disambiguated multilingual Wikipedia corpus. In: Proceedings of the 7th Language Resources and Evaluation Conference (LREC 2010), Valletta, Malta, May 2010

[21] Tjong Kim Sang, E.F.: Memory-based named entity recognition. In: Proceedings of the 6th Conference on Natural Language Learning, vol. 20, pp. 1–4. Association for Computational Linguistics (2002)

[22] Seneff, S.: Response planning and generation in the Mercury flight reservation system. Comput. Speech Lang. 16(3–4), 283–312 (2002)

[23] Viterbi, A.: Error bounds for convolutional codes and an asymptotically optimum decoding algorithm. IEEE Trans. Inf. Theory 13(2), 260–269 (1967)

[24] Williams, J.D., Young, S.: Partially observable Markov decision processes for spoken dialog systems. Comput. Speech Lang. 21(2), 393–422 (2007)

[25] Zue, V., Seneff, S., Glass, J.R., Polifroni, J., Pao, C., Hazen, T.J., Hetherington, L.: Jupiter: a telephone-based conversational interface for weather information. IEEE Trans. Speech Audio Process. 8(1), 85–96 (2000)

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable comments and suggestions. We would also like to thank the Consejo Nacional de Ciencia y Tecnología (CONACYT), BlueMessaging Mexico S.A.P.I. de C.V., and the Asociación Mexicana de Cultura A.C. for all their support. In particular, we would like to thank Andrés Rodriguez, Juan Vera and David Jiménez for their wholehearted assistance.


Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Martínez, V.R., Pérez, L.E., Iacobelli, F., Bojórquez, S.S., González, V.M. (2015). Semi-Supervised Approach to Named Entity Recognition in Spanish Applied to a Real-World Conversational System. In: Carrasco-Ochoa, J., Martínez-Trinidad, J., Sossa-Azuela, J., Olvera López, J., Famili, F. (eds) Pattern Recognition. MCPR 2015. Lecture Notes in Computer Science, vol. 9116. Springer, Cham. https://doi.org/10.1007/978-3-319-19264-2_22


DOI: https://doi.org/10.1007/978-3-319-19264-2_22


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-19263-5

Online ISBN: 978-3-319-19264-2
