Abstract

In recent years, sparse classification problems have emerged in many fields of study. Finite mixture models have been developed to facilitate Bayesian inference where parameter sparsity is substantial. Classification with finite mixture models is based on the posterior expectation of latent indicator variables. These quantities are typically estimated using the expectation-maximization (EM) algorithm in an empirical Bayes approach or Markov chain Monte Carlo (MCMC) in a fully Bayesian approach. MCMC is limited in applicability where high-dimensional data are involved because its sampling-based nature leads to slow computations and hard-to-monitor convergence. In this chapter, we investigate the feasibility and performance of variational Bayes (VB) approximation in a fully Bayesian framework. We apply the VB approach to fully Bayesian versions of several finite mixture models that have been proposed in bioinformatics, and find that it achieves desirable speed and accuracy in sparse classification with finite mixture models for high-dimensional data.

This is a preview of subscription content, log in via an institution.

Buying options

Tax calculation will be finalised at checkout

Purchases are for personal use only

Learn about institutional subscriptionsReferences

Alon U, Barkai N, Notterman D, Gish K, Ybarra S, Mack D, Levine A (1999) Broad patterns of gene expression revealed by clustering analysis of tumor and normal colon tissues probed by oligonucleotide arrays. Proc Natl Acad Sci 96(12):6745–6750

Attias H (2000) A variational Bayesian framework for graphical models. Adv Neural Inf Process Syst 12(1–2):209–215

Bar H, Schifano E (2010) Lemma: Laplace approximated EM microarray analysis. R package version 1.3-1. http://CRAN.R-project.org/package=lemma

Bar H, Booth J, Schifano E, Wells M (2010) Laplace approximated EM microarray analysis: an empirical Bayes approach for comparative microarray experiments. Stat Sci 25(3):388–407

Beal M (2003) Variational algorithms for approximate Bayesian inference. PhD thesis, University of London

Bishop C (1999) Variational principal components. In: Proceedings of ninth international conference on artificial neural networks, ICANN’99, vol 1. IET, pp 509–514

Bishop C (2006) Pattern recognition and machine learning. Springer Science+ Business Media, New York

Bishop C, Spiegelhalter D, Winn J (2002) VIBES: a variational inference engine for Bayesian networks. Adv Neural Inf Proces Syst 15:777–784

Blei D, Jordan M (2006) Variational inference for Dirichlet process mixtures. Bayesian Anal 1(1):121–143

Booth J, Eilertson K, Olinares P, Yu H (2011) A Bayesian mixture model for comparative spectral count data in shotgun proteomics. Mol Cell Proteomics 10(8):M110-007203

Boyd S, Vandenberghe L (2004) Convex optimization. Cambridge University Press, Cambridge

Callow M, Dudoit S, Gong E, Speed T, Rubin E (2000) Microarray expression profiling identifies genes with altered expression in HDL-deficient mice. Genome Res 10(12):2022–2029

Christensen R, Johnson WO, Branscum AJ, Hanson TE (2011) Bayesian ideas and data analysis: an introduction for scientists and statisticians. CRC, Boca Raton

Consonni G, Marin J (2007) Mean-field variational approximate Bayesian inference for latent variable models. Comput Stat Data Anal 52(2):790–798

Corduneanu A, Bishop C (2001) Variational Bayesian model selection for mixture distributions. In: Jaakkola TS, Richardson TS (eds) Artificial intelligence and statistics 2001. Morgan Kaufmann, Waltham, pp 27–34

Cowles MK Carlin BP (1996) Markov chain Monte Carlo convergence diagnostics: a comparative review. J Am Stat Assoc 91(434):883–904

De Freitas N, Højen-Sørensen P, Jordan M, Russell S (2001) Variational MCMC. In: Breese J, Koller D (eds) Proceedings of the seventeenth conference on uncertainty in artificial intelligence. Morgan Kaufmann, San Francisco, pp 120–127

Efron B (2008) Microarrays, empirical Bayes and the two-groups model. Stat Sci 23(1):1–22

Faes C, Ormerod J, Wand M (2011) Variational Bayesian inference for parametric and nonparametric regression with missing data. J Am Stat Assoc 106(495):959–971

Friston K, Ashburner J, Kiebel S, Nichols T, Penny W (2011) Statistical parametric mapping: the analysis of functional brain images. Academic, London

Gelman A, Carlin JB, Stern HS, Rubin DB (2003) Bayesian data analysis. Chapman & Hall/CRC, London/Boca Raton

Ghahramani Z, Beal M (2000) Variational inference for Bayesian mixtures of factor analysers. Adv Neural Inf Proces Syst 12:449–455

Goldsmith J, Wand M, Crainiceanu C (2011) Functional regression via variational Bayes. Electr J Stat 5:572

Grimmer J (2011) An introduction to Bayesian inference via variational approximations. Polit Anal 19(1):32–47

Honkela A, Valpola H (2005) Unsupervised variational Bayesian learning of nonlinear models. In: Saul LK, Weiss Y, Bottou L (eds) Advances in neural information processing systems, vol 17. MIT, Cambridge, pp 593–600

Jaakkola TS (2000) Tutorial on variational approximation methods. In: Opper M, Saad D (eds) Advanced mean field methods: theory and practice. MIT, Cambridge, pp 129–159

Li Z, Sillanpää M (2012) Estimation of quantitative trait locus effects with epistasis by variational Bayes algorithms. Genetics 190(1):231–249

Li J, Das K, Fu G, Li R, Wu R (2011) The Bayesian lasso for genome-wide association studies. Bioinformatics 27(4):516–523

Logsdon B, Hoffman G, Mezey J (2010) A variational Bayes algorithm for fast and accurate multiple locus genome-wide association analysis. BMC Bioinf 11(1):58

Luenberger D, Ye Y (2008) Linear and nonlinear programming. International series in operations research & management science, vol 116. Springer, New York

Marin J-M, Robert CP (2007) Bayesian core: a practical approach to computational Bayesian statistics. Springer, New York

Martino S, Rue H (2009) R package: INLA. Department of Mathematical Sciences, NTNU, Norway. Available at http://www.r-inla.org

McGrory C, Titterington D (2007) Variational approximations in Bayesian model selection for finite mixture distributions. Comput Stat Data Anal 51(11):5352–5367

McLachlan G, Peel D (2004) Finite mixture models. Wiley, New York

Minka T (2001a) Expectation propagation for approximate Bayesian inference. In: Breese J, Koller D (eds) Proceedings of the seventeenth conference on uncertainty in artificial intelligence. Morgan Kaufmann, San Francisco, pp 362–369

Minka T (2001b) A family of algorithms for approximate Bayesian inference. PhD thesis, Massachusetts Institute of Technology

Ormerod J (2011) Grid based variational approximations. Comput Stat Data Anal 55(1):45–56

Ormerod J, Wand M (2010) Explaining variational approximations. Am Stat 64(2):140–153

Rue H, Martino S, Chopin N (2009) Approximate Bayesian inference for latent Gaussian models by using integrated nested Laplace approximations. J R Stat Soc Ser B 71(2):319–392

Salter-Townshend M, Murphy T (2009) Variational Bayesian inference for the latent position and cluster model. In: NIPS 2009 (Workshop on analyzing networks & learning with graphs)

Sing T, Sander O, Beerenwinkel N, Lengauer T (2007) ROCR: visualizing the performance of scoring classifiers. R package version 1.0-2. http://rocr.bioinf.mpi-sb.mpg.de/ROCR.pdf/

Smídl V, Quinn A (2005) The variational Bayes method in signal processing. Springer, Berlin

Smyth G (2004) Linear models and empirical Bayes methods for assessing differential expression in microarray experiments. Stat Appl Genet Mol Biol 3(1):1–25. Article 3

Smyth G (2005) Limma: linear models for microarray data. In: Bioinformatics and computational biology solutions using R and bioconductor. Springer, New York, pp 397–420

Teschendorff A, Wang Y, Barbosa-Morais N, Brenton J, Caldas C (2005) A variational Bayesian mixture modelling framework for cluster analysis of gene-expression data. Bioinformatics 21(13):3025–3033

Tzikas D, Likas A, Galatsanos N (2008) The variational approximation for Bayesian inference. IEEE Signal Process Mag 25(6):131–146

Wand MP, Ormerod JT, Padoan SA, Frührwirth R (2011) Mean field variational Bayes for elaborate distributions. Bayesian Anal 6(4):1–48

Wang B, Titterington DM (2005) Inadequacy of interval estimates corresponding to variational Bayesian approximations. In: Cowell RG, Ghahramani Z (eds) Proceedings of the tenth international workshop on artificial intelligence and statistics. Society for Artificial Intelligence and Statistics, pp 373–380

Zhang M, Montooth K, Wells M, Clark A, Zhang D (2005) Mapping multiple quantitative trait loci by Bayesian classification. Genetics 169(4):2305–2318

Acknowledgements

We would like to thank John T. Ormerod who provided supplementary materials for GBVA implementation in Ormerod (2011), and Haim Y. Bar for helpful discussions.

Professors Booth and Wells acknowledge the support of NSF-DMS 1208488 and NIH U19 AI111143.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix: The VB-LEMMA Algorithm

Appendix: The VB-LEMMA Algorithm

1.1 The B-LEMMA Model

We consider a natural extension to the LEMMA model in Bar et al. (2010): a fully Bayesian three-component mixture model, B-LEMMA:

where (b 1g, b 2g) takes values (1, 0), (0, 1), or (0, 0), indicating that gene g is in non-null group 1, non-null group 2, or null group, respectively. p 1 and p 2 are proportions of non-null group 1 and non-null group 2 genes. Hence, the non-null proportion is p 1 + p 2. Each of τ and ψ g represents the same quantity as in the B-LIMMA model.

1.1.1 Algorithm

The VB-LEMMA algorithm was derived based on an equivalent model to B-LEMMA. In the equivalent model, the gene-specific treatment effect is treated as the combination of a fixed global effect and a random zero-mean effect. That is, conditional distribution of d g and that of ψ g are replaced with

The set of observed data and the set of unobserved data are identified as

where b g = (b 1g, b 2g) and p = (p 1, p 2).

Because of the similarities of the B-LEMMA model to the B-LIMMA model, derivation of VB-LEMMA was achieved by extending the derivation of VB-LIMMA that involves a gene-specific zero-mean random effect parameter. The VB algorithm based on the exact B-LEMMA model was also derived for comparison. However, little discrepancy in performance between the VB algorithm based on the exact model and VB-LEMMA was observed. Therefore, VB-LEMMA based on the equivalent model was adopted.

The product density restriction

leads to q-densities

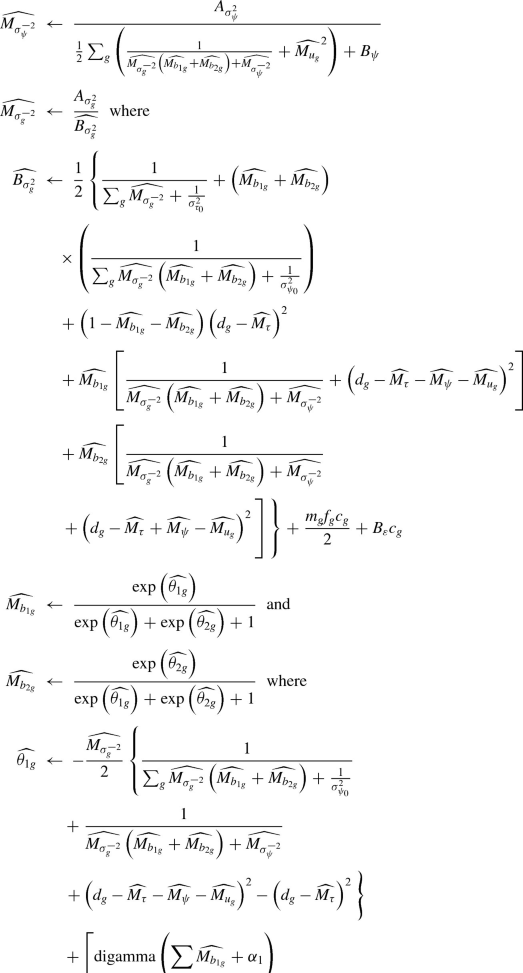

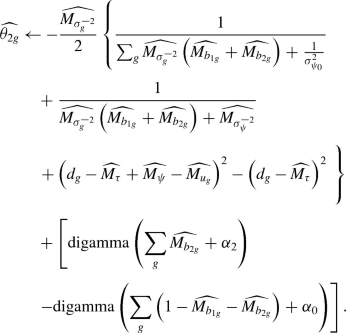

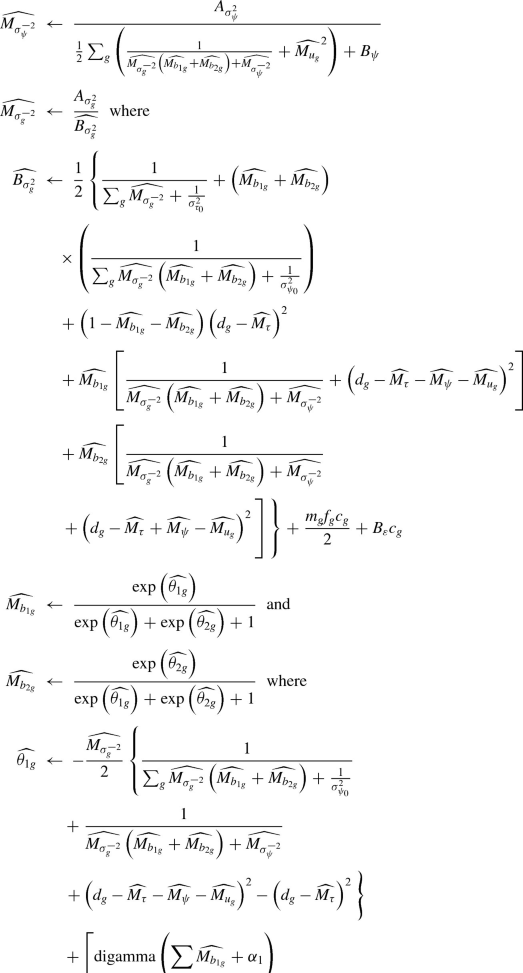

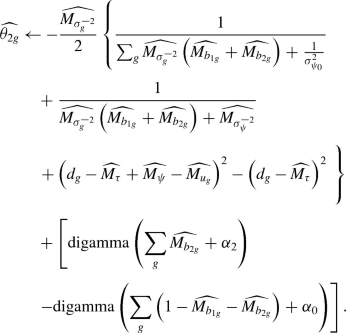

It is only necessary to update the variational posterior means \(\hat {M_\cdot }\) in VB-LEMMA. Upon convergence, the other variational parameters are computed based on the converged value of those involved in the iterations. The iterative scheme is as follows:

-

1.

Initialize

$$\displaystyle \begin{aligned} \widehat{M_{\sigma_\psi^{-2}}} &=1\\ \widehat{M_{\sigma_g^{-2}}} &=\frac{1}{c_g}\;\forall\: g \\ \widehat{M_{b_g}} &= \left\{ \begin{array}{l l} (1,0,0) & \quad \text{if rank{$(d_g) \geq (1-0.05)G$}}\\ (0,1,0) & \quad \text{if rank{$(d_g) \leq 0.05G$}}\\ (0,0,1) & \quad \text{otherwise} \end{array} \right. \text{ for each } g \\ \widehat{M_\psi} &= \frac{1}{2}\left(\bigg \vert \sum_{\{g: \text{rank}(d_g) \geq (1-0.05)G\}}{d_g} - \sum_{g=1}^G{d_g}\bigg \vert+\bigg \vert \sum_{g=1}^G{d_g} - \sum_{\{g: \text{rank}(d_g) \leq 0.05G\}}{d_g} \bigg \vert \right) \\ \widehat{M_{u_g}} &= 0\;\forall\: g \\ \text{Set } A_{\sigma_\psi^2}&=\frac{G}{2}+A_\psi \quad \text{and}\quad A_{\sigma_g^2}=\frac{1+f_g}{2}+A_\varepsilon \;\text{for each}\;g. \end{aligned} $$ -

2.

Update

$$\displaystyle \begin{aligned} \widehat{M_\tau} \; & \leftarrow\; \left\{ \sum_{g} \widehat{M_{\sigma_g^{-2}}}\left[ \left(1-\widehat{M_{b_{1g}}}-\widehat{M_{b_{2g}}} \right)d_g + \widehat{M_{b_{1g}}}\left(d_g-\widehat{M_\psi}-\widehat{M_{u_g}}\right) \right. \right. \\ & \qquad \left. \left. + \widehat{M_{b_{2g}}} \left(d_g+\widehat{M_\psi}-\widehat{M_{u_g}}\right)\right] + \frac{\mu_{\tau_0}}{\sigma^2_{\tau_0}}\right\} \times \dfrac{1}{ \sum_{g}\widehat{M_{\sigma_g^{-2}}} + \frac{1}{\sigma^2_{\tau_0}}} \\ \widehat{M_{\psi}} \; & \leftarrow\; \left\{ \sum_{g} \widehat{M_{\sigma_g^{-2}}} \left( \widehat{M_{b_{1g}}} - \widehat{M_{b_{2g}}} \right) \left(d_g-\widehat{M_\tau}-\widehat{M_{u_g}}\right) + \frac{\mu_{\psi_0}}{\sigma^2_{\psi_0}}\right\} \\ & \qquad \times \dfrac{1}{ \sum_{g}\widehat{M_{\sigma_g^{-2}}}\left(\widehat{M_{b_{1g}}}+\widehat{M_{b_{2g}}}\right)+\frac{1}{\sigma^2_{\psi_0}}} \\ \widehat{M_{u_g}} \; & \leftarrow\; \widehat{M_{\sigma_g^{-2}}}\left[ \widehat{M_{b_{1g}}}\left(d_g-\widehat{M_\tau}-\widehat{M_\psi}\right) + \widehat{M_{b_{2g}}} \left(d_g-\widehat{M_\tau}+\widehat{M_\psi}\right)\right]\\ & \qquad \times \dfrac{1}{\widehat{M_{\sigma_g^{-2}}}\left(\widehat{M_{b_{1g}}}+\widehat{M_{b_{2g}}}\right)+\widehat{M_{\sigma_\psi^{-2}}} } \end{aligned} $$

-

3.

Repeat (2) until the increase in

$$\displaystyle \begin{aligned} & \log\underline{p} \left(\mathbf{y};\mathbf{q}\right) \\ &\quad = \frac{-G}{2}\times\log{\left(2\pi\right)} -\sum_g \left[\widehat{M_{b_{1g}}}\log{\widehat{M_{b_{1g}}}}+\widehat{M_{b_{2g}}}\log{\widehat{M_{b_{2g}}}} \right.\\ &\qquad \left. +\left(1-\widehat{M_{b_{1g}}}-\widehat{M_{b_{2g}}}\right)\log{\left(1-\widehat{M_{b_{1g}}}-\widehat{M_{b_{1g}}}\right)}\right] \\ &\qquad +\log{\left( {\mathrm{Beta}} \left(\sum_{g}\widehat{M_{b_{1g}}}+\alpha_1,\sum_{g}\widehat{M_{b_{2g}}}+\alpha_2,\sum_{g}\left(1-\widehat{M_{b_{1g}}}-\widehat{M_{b_{2g}}}\right)+\alpha_0\right)\right)} \\ & \qquad - \log{\left( {\mathrm{Beta}} \left(\alpha_1,\alpha_2,\alpha_0\right)\right)} \\ & \qquad +\left[\log{\left(\frac{1}{ \sum_{g}\widehat{M_{\sigma_g^{-2}}} + \frac{1}{\sigma^2_{\tau_0}}}\right)}\right.\\ &\qquad \left.-\log{{\sigma^2_{\tau_0}}}+1-\dfrac{\frac{1}{ \sum_{g}\widehat{M_{\sigma_g^{-2}}} + \frac{1}{\sigma^2_{\tau_0}}}+\left(\widehat{M_\tau}-\mu_{\tau_0}\right)^2}{\sigma^2_{\tau_0}}\right]\times\frac{1}{2}\\ & \qquad +\left[\log{\left(\frac{1}{\sum_{g}\widehat{M_{\sigma_g^{-2}}}\left(\widehat{M_{b_{1g}}}+\widehat{M_{b_{2g}}}\right)+\frac{1}{\sigma^2_{\psi_0}}} \right)}-\log{{\sigma^2_{\psi_0}}}\right]\times\frac{1}{2} \\ & \qquad +\left[1-\dfrac{\left(\frac{1}{\sum_{g}\widehat{M_{\sigma_g^{-2}}}\left(\widehat{M_{b_{1g}}}+\widehat{M_{b_{2g}}}\right)+\frac{1}{\sigma^2_{\psi_0}}} \right)+\left(\widehat{M_\psi}-\mu_{\psi_0}\right)^2}{\sigma^2_{\psi_0}}\right]\times\frac{1}{2} \\ &\qquad +G\times{A_\varepsilon}\log{B_\varepsilon}+\sum_g \left[\left(\frac{f_g}{2}+A_\varepsilon\right)\log{c_g}\log{\Gamma{\left(A_{\sigma_g^{2}}\right)}}-\log{\Gamma{\left(A_\varepsilon\right)}}\right. \\ &\qquad +\left. -\frac{f_g}{2}\log{2}-\log{\Gamma{\left(\frac{f_g}{2}\right)}}+\left(\frac{f_g}{2}-1\right)\log{m_g}+\frac{f_g}{2}\log{f_g}\right] \\ &\qquad +\sum_g \left\{ \frac{A_{\sigma_g^{2}}}{\widehat{B_{\sigma_g^{2}}}}\times \left[ -\frac{1}{2}{m_g}{f_g}{c_g}-{B_\varepsilon}{c_g} \right. \right. \\ &\qquad - \left. \left. {\frac{1}{2}}\left(\frac{1}{ \sum_{g}\widehat{M_{\sigma_g^{-2}}} + \frac{1}{\sigma^2_{\tau_0}}}+\left(1-\widehat{M_{b_{1g}}}-\widehat{M_{b_{2g}}}\right)\left({d_g}-\widehat{M_\tau}\right)^2\right)\right. \right. \\ &\qquad - \left. \left. \frac{\widehat{M_{b_{1g}}} + \widehat{M_{b_{1g}}}}{2}\left(\frac{1}{\sum_{g}\widehat{M_{\sigma_g^{-2}}}\left(\widehat{M_{b_{1g}}}+\widehat{M_{b_{2g}}}\right)+\frac{1}{\sigma^2_{\psi_0}}}\right)\right. \right. \\ & \qquad - \left. \left.\frac{\widehat{M_{b_{1g}}}}{2}\left(\frac{1}{\widehat{M_{\sigma_g^{-2}}}\left(\widehat{M_{b_{1g}}}+\widehat{M_{b_{2g}}}\right)+\widehat{M_{\sigma_\psi^{-2}}} }+\left({d_g}-\widehat{M_\tau}-\widehat{M_\psi}-\widehat{M_{u_g}}\right)^2\right) \right. \right. \\ & \qquad - \left. \left.\frac{\widehat{M_{b_{2g}}}}{2}\left(\frac{1}{\widehat{M_{\sigma_g^{-2}}}\left(\widehat{M_{b_{1g}}}+\widehat{M_{b_{2g}}}\right)+\widehat{M_{\sigma_\psi^{-2}}} }+\left({d_g}-\widehat{M_\tau}+\widehat{M_\psi}-\widehat{M_{u_g}}\right)^2\right) \right] \right\} \\ &\qquad + \sum_g{{A_{\sigma_g^{2}}\left(-\log{\widehat{B_{\sigma_g^{2}}}}+1\right) }} \\\noalign{} &\qquad + \frac{1}{2}\sum_g{\left[\log\left( \frac{1}{\widehat{M_{\sigma_g^{-2}}}\left(\widehat{M_{b_{1g}}}+\widehat{M_{b_{2g}}}\right) +\widehat{M_{\sigma_\psi^{-2}}} } \right)+1\right]} \\ & \qquad +{A_\psi}\log{B_\psi}-\log{\Gamma{\left(A_\psi\right)}} + {A_{\sigma_\psi^{2}}\left(-\log{\widehat{B_{\sigma_\psi^{2}}}}\right) }+\log{\Gamma{\left(A_{\sigma_\psi^{2}}\right)}} \end{aligned} $$from previous iteration becomes negligible.

-

4.

Upon convergence, the remaining variational parameters are computed:

$$\displaystyle \begin{aligned} \widehat{V_\tau} &\leftarrow\; \dfrac{1}{ \sum_{g}\widehat{M_{\sigma_g^{-2}}} + \frac{1}{\sigma^2_{\tau_0}}} \\ \widehat{V_{\psi}} &\leftarrow\; \dfrac{1}{\sum_{g}\widehat{M_{\sigma_g^{-2}}}\left(\widehat{M_{b_{1g}}}+\widehat{M_{b_{2g}}}\right)+\frac{1}{\sigma^2_{\psi_0}}} \\ \widehat{V_{u_g}} &\leftarrow\; \dfrac{1}{\widehat{M_{\sigma_g^{-2}}}\left(\widehat{M_{b_{1g}}}+\widehat{M_{b_{2g}}}\right)+\widehat{M_{\sigma_\psi^{-2}}} } \\ \widehat{B_{\sigma_\psi^2}} &\leftarrow \; \dfrac{1}{2}\sum_{g}\left[ \dfrac{1}{ \widehat{M_{\sigma_g^{-2}}}\left(\widehat{M_{b_{1g}}}+\widehat{M_{b_{2g}}}\right)+\widehat{M_{\sigma_\psi^{-2}}} }+\widehat{M_{u_g}}^2\right] + B_\psi \end{aligned} $$$$\displaystyle \begin{aligned} \widehat{\alpha_{p_1}}&\leftarrow\; \sum_{g}\widehat{M_{b_{1g}}}+\alpha_1 \\ \widehat{\alpha_{p_2}}&\leftarrow\; \sum_{g}\widehat{M_{b_{2g}}}+\alpha_2 \\ \widehat{\alpha_{p_0}}&\leftarrow\; \sum_{g}\left(1-\widehat{M_{b_{1g}}}-\widehat{M_{b_{2g}}}\right)+\alpha_0. \end{aligned} $$

1.2 The VB-Proteomics Algorithm

1.2.1 The Proteomics Model

As pointed out in Sect. 7.4.1.3, the fully Bayesian model in Booth et al. (2011) is as follows:

where y ij is the spectral count of protein i, i = 1, …, p, and replicate j, j = 1, …, n. L i is the length of protein i, N j is the average count for replicate j over all proteins, and

In fact, conjugacy in this Poisson GLMM is not sufficient for a tractable solution to be computed by VB. Therefore, a similar Poisson–Gamma HGLM where the parameters β m, m = 0, 1, 2 and the latent variables b ki, k = 0, 1 are transformed is used for the VB implementation:

As before classification is inferred from the posterior expectations of the latent binary indicators I i, i = 1, …, p.

1.2.2 Algorithm

The set of observed data and the set of unobserved data are identified as

The complete likelihood is

in which the mixture density is

The log densities that comprise the log complete likelihood are

The product density restriction

leads to the following q-densities:

-

Derivation of \(q_{\lambda _0}(\lambda _0)\):

$$\displaystyle \begin{aligned} q_{\lambda_0}(\lambda_0) & \propto \exp \text{E}_{-\lambda_0} \left\{ \log p(\mathbf{y},H) \right\} \\ & \propto \exp \text{E}_{-\lambda_0} \left\{ \sum_{i,j} \log p(y_{ij}|\lambda_0,\lambda_1,\lambda_2,\phi_{0i},\phi_{1i},I_i) + \log p(\lambda_0) \right\} \\ &\propto \exp \left\{ \sum_{i,j} \left[ (1-T_j)({y_{ij}} \log \lambda_0^{-1} - \lambda_0^{-1}\widehat{M_{\phi_{0i}^{-1}}}L_i{N_j}) \right. \right. \\ &\quad \left. \left. + (1-\widehat{M_{I_i}})T_j ({y_{ij}}\log \lambda_0^{-1} - \lambda_0^{-1}\widehat{M_{\phi_{0i}^{-1}}}\widehat{M_{\lambda_1^{-1}}}L_i{N_j}) \right. \right. \\ &\quad \left. \left. + \widehat{M_{I_i}}T_j({y_{ij}}\log \lambda_0^{-1} - \lambda_0^{-1}\widehat{M_{\phi_{0i}^{-1}}}\widehat{M_{\lambda_1^{-1}}}\widehat{M_{\phi_{1i}^{-1}}}\widehat{M_{\lambda_2^{-1}}}L_i{N_j}) \right] \right. \\ &\quad \left. + (A_{\lambda_0} +1)\log \lambda_0^{-1} - \frac{B_{\lambda_0}}{\lambda_0} \right\} \end{aligned} $$The kernel of an Inverse-Gamma density is identified on the right hand side. Therefore, it can be deduced that

$$\displaystyle \begin{aligned} q_{\lambda_0}(\lambda_0) &= {\mathrm{IG}}(\widehat{A_{\lambda_0}}, \widehat{B_{\lambda_0}}) \end{aligned} $$with

$$\displaystyle \begin{aligned} \widehat{A_{\lambda_0}} &= \sum_{i,j} y_{ij} + A_{\lambda_0} \\ \widehat{B_{\lambda_0}} &= \sum_{i,j} L_i{N_j}\left[(1-T_j)\widehat{M_{\phi_{0i}^{-1}}}+(1-\widehat{M_{I_i}})T_j \widehat{M_{\phi_{0i}^{-1}}}\widehat{M_{\lambda_1^{-1}}} \right.\\ & \quad \left.+\widehat{M_{I_i}}T_j \widehat{M_{\phi_{0i}^{-1}}}\widehat{M_{\lambda_1^{-1}}}\widehat{M_{\phi_{1i}^{-1}}}\widehat{M_{\lambda_2^{-1}}}\right]+B_{\lambda_0}. \end{aligned} $$Moreover, the posterior mean and posterior expected log of \(\lambda _0^{-1}\) are

$$\displaystyle \begin{aligned} \widehat{M_{\lambda_0^{-1}}} &= \dfrac{\widehat{A_{\lambda_0}}}{\widehat{B_{\lambda_0}}}\\ \widehat{\log \lambda_0^{-1}} &= \text{digamma}(\widehat{A_{\lambda_0}}) -\log{\widehat{B_{\lambda_0}}}. \end{aligned} $$ -

Derivation of \(q_{\lambda _1}(\lambda _1)\) and \(q_{\lambda _2}(\lambda _2)\) is similar to that of \(q_{\lambda _0}(\lambda _0)\):

$$\displaystyle \begin{aligned} q(\lambda_1) &\propto \exp \text{E}_{-\lambda_1} \left\{ \sum_{i,j} \log p(y_{ij}|\lambda_0,\lambda_1,\lambda_2,\phi_{0i},\phi_{1i},I_i) + \log p(\lambda_1) \right\} \\ \Rightarrow q(\lambda_1) &= {\mathrm{IG}}(\widehat{A_{\lambda_1}}, \widehat{B_{\lambda_1}})\\ \widehat{A_{\lambda_1}} &= \displaystyle\sum_{i,j}T_j y_{ij} + A_{\lambda_1} \\ \widehat{B_{\lambda_1}} &= \displaystyle\sum_{i,j} L_i{N_j}[(1-\widehat{M_{I_i}})T_j \widehat{M_{\lambda_0^{-1}}}\widehat{M_{\phi_{0i}^{-1}}}+\widehat{M_{I_i}}T_j \widehat{M_{\lambda_0^{-1}}}\widehat{M_{\phi_{0i}^{-1}}}\widehat{M_{\phi_{1i}^{-1}}}\widehat{M_{\lambda_2^{-1}}} ] \\ &\quad +B_{\lambda_1}, \end{aligned} $$and

$$\displaystyle \begin{aligned} \widehat{M_{\lambda_1^{-1}}} &= \dfrac{\widehat{A_{\lambda_1}}}{\widehat{B_{\lambda_1}}}\\ \widehat{\log \lambda_1^{-1}} &= \text{digamma}(\widehat{A_{\lambda_1}}) -\log{\widehat{B_{\lambda_1}}}. \end{aligned} $$$$\displaystyle \begin{aligned} q_{\lambda_2}(\lambda_2) &\propto \exp \text{E}_{-\lambda_2} \left\{ \sum_{i,j} \log p(y_{ij}|\lambda_0,\lambda_1,\lambda_2,\phi_{0i},\phi_{1i},I_i) + \log p(\lambda_2) \right\} \\ \Rightarrow q_{\lambda_2}(\lambda_2) &= {\mathrm{IG}}(\widehat{A_{\lambda_2}}, \widehat{B_{\lambda_2}})\\ \widehat{A_{\lambda_2}} &= \displaystyle\sum_{i,j}\widehat{M_{I_i}}T_j y_{ij} + A_{\lambda_2}\\ \widehat{B_{\lambda_2}} &= \displaystyle\sum_{i,j} L_i{N_j}[\widehat{M_{I_i}}T_j \widehat{M_{\lambda_0^{-1}}}\widehat{M_{\phi_{0i}^{-1}}}\widehat{M_{\lambda_1^{-1}}}\widehat{M_{\phi_{1i}^{-1}}} ]+B_{\lambda_2}, \end{aligned} $$and

$$\displaystyle \begin{aligned} \widehat{M_{\lambda_2^{-1}}} &= \dfrac{\widehat{A_{\lambda_2}}}{\widehat{B_{\lambda_2}}}\\ \widehat{\log \lambda_2^{-1}} &= \text{digamma}(\widehat{A_{\lambda_2}}) -\log{\widehat{B_{\lambda_2}}}. \end{aligned} $$ -

Derivation of \(q_{\boldsymbol {\phi _0}}( \boldsymbol {\phi _0})\) and \(q_{\boldsymbol {\phi _1}}( \boldsymbol {\phi _1})\) is also similar to that of \(q_{\lambda _0}(\lambda _0)\), with induced factorizations:

$$\displaystyle \begin{aligned} q_{\boldsymbol{\phi_0}}( \boldsymbol{\phi_0}) &\propto \exp \text{E}_{- \boldsymbol{\phi_0}} \left\{ \sum_{i,j} \log p(y_{ij}|\lambda_0,\lambda_1,\lambda_2,\phi_{0i},\phi_{1i},I_i) \right. \\ & \quad \left. + \sum_i \log p(\phi_{0i}|\delta_0) \right\}\\ \Rightarrow \quad q_{\boldsymbol{\phi_0}}(\boldsymbol{\phi_0}) &= \prod_i q_{\phi_{0i}}(\phi_{0i}) \text{ and}\\ q_{\phi_{0i}}(\phi_{0i}) &\propto \exp \text{E}_{-\boldsymbol{\phi_0}} \left\{ \sum_{j} \log p(y_{ij}|\lambda_0,\lambda_1,\lambda_2,\phi_{0i},\phi_{1i},I_i)+ \log p(\phi_{0i}|\delta_0) \right\}. \end{aligned} $$Therefore, for each i,

$$\displaystyle \begin{aligned} q_{\phi_{0i}}(\phi_{0i}) &= {\mathrm{IG}}(\widehat{A_{\phi_{0i}}}, \widehat{B_{\phi_{0i}}})\\ \widehat{A_{\phi_{0i}}} &= \displaystyle \sum_{j} y_{ij} + \widehat{M_{\delta_0}}\\ \widehat{B_{\phi_{0i}}} &= \displaystyle \sum_{j} L_i{N_j}\widehat{M_{\lambda_0^{-1}}}[(1-T_j) + (1-\widehat{M_{I_i}})T_j \widehat{M_{\lambda_1^{-1}}} \\ & \qquad + \widehat{M_{I_i}}T_j \widehat{M_{\lambda_1^{-1}}}\widehat{M_{\phi_{1i}^{-1}}} \widehat{M_{\lambda_2^{-1}}}]+\widehat{M_{\delta_0}} \\ \widehat{M_{{\phi_{0i}}^{-1}}} &= \dfrac{\widehat{A_{\phi_{0i}}}}{\widehat{B_{\phi_{0i}}}}\\ \widehat{\log {\phi_{0i}}^{-1}} &= \text{digamma}(\widehat{A_{\phi_{0i}}}) -\log{\widehat{B_{\phi_{0i}}}}. \end{aligned} $$$$\displaystyle \begin{aligned} q_{\boldsymbol{\phi_1}}(\boldsymbol{\phi_1}) &\propto \exp \text{E}_{-\boldsymbol{\phi_1}} \left\{ \sum_{i,j} \log p(y_{ij}|\lambda_0,\lambda_1,\lambda_2,\phi_{0i},\phi_{1i},I_i) \right. \\ & \quad \left. + \sum_i \log p(\phi_{1i}|\delta_1) \right\} \\ \Rightarrow \quad q_{\boldsymbol{\phi_1}}(\boldsymbol{\phi_1}) &= \prod_i q_{\phi_{1i}}(\phi_{1i}) \text{ and} \\ q_{\phi_{1i}}(\phi_{1i}) &\propto \exp \text{E}_{-\boldsymbol{\phi_1}} \left\{ \sum_{j} \log p(y_{ij}|\lambda_0,\lambda_1,\lambda_2,\phi_{0i},\phi_{1i},I_i) + \log p(\phi_{1i}|\delta_1) \right\}. \end{aligned} $$Therefore, for each i,

$$\displaystyle \begin{aligned} q_{\phi_{1i}}(\phi_{1i}) &= {\mathrm{IG}}(\widehat{A_{\phi_{1i}}}, \widehat{B_{\phi_{1i}}})\\ \widehat{A_{\phi_{1i}}} &= \displaystyle \sum_{j} \widehat{M_{I_i}}T_j y_{ij} + \widehat{M_{\delta_1}}\\ \widehat{B_{\phi_{1i}}} &= \displaystyle \sum_{j} L_i{N_j}\widehat{M_{I_i}}T_j \widehat{M_{\lambda_0^{-1}}}\widehat{M_{\phi_{0i}^{-1}}} \widehat{M_{\lambda_1^{-1}}} \widehat{M_{\lambda_2^{-1}}}+\widehat{M_{\delta_1}} \\ \widehat{M_{{\phi_{1i}}^{-1}}} &= \dfrac{\widehat{A_{\phi_{1i}}}}{\widehat{B_{\phi_{1i}}}}\\ \widehat{\log {\phi_{1i}}^{-1}} &= \text{digamma}(\widehat{A_{\phi_{1i}}}) -\log{\widehat{B_{\phi_{1i}}}}. \end{aligned} $$ -

Derivation of \(q_{\delta _0}(\delta _0)\):

$$\displaystyle \begin{aligned} q_{\delta_0}(\delta_0) &\propto \exp \text{E}_{-\delta_0} \left\{ \sum_i \log p(\phi_{0i}|\delta_0) + \log p(\delta_0) \right\}\\ & \propto \exp \left\{ \sum_i \left[ \delta_0 \log \delta_0 - \log\Gamma(\delta_0) + (\delta_0+1)\widehat{\log \phi_{0i}^{-1}} - \delta_0 \widehat{M_{\phi_{0i}^{-1}}} \right] \right.\\ & \quad \left. + - A_{\delta_0} \log B_{\delta_0} - \log\Gamma(A_{\delta_0}) + (A_{\delta_0}-1)\log \delta_0 - \dfrac{\delta_0}{B_{\delta_0}}\right\}. \end{aligned} $$The right-hand side does not contain the kernel of any standard distribution. Therefore, an approximation to \(\log \Gamma (\delta _0)\) is used.

For complex number z with large Re(z), because Γ(z + 1) = z! = z Γ(z),

$$\displaystyle \begin{aligned} \log\Gamma(z) &= \log\Gamma(z+1) - \log z\\ &=\log z! - \log z\\ &\approx \left(\frac{1}{2}\log(2\pi z)+z\log z - z\right) -\log z\\ & \quad \text{by Stirling's approximation } n! \approx \sqrt{2\pi n}\left(\frac{n}{e}\right)^n \\ &\approx (z-\frac{1}{2})\log z - z + \frac{1}{2}\log(2\pi). \end{aligned} $$Hence,

$$\displaystyle \begin{aligned} \delta_0 \log \delta_0 - \log\Gamma(\delta_0) \approx \frac{1}{2}\log \delta_0 + \delta_0 - \frac{1}{2}\log(2\pi) \text{ for large } \delta_0 >0. \end{aligned} $$Substituting the above on the right-hand side of the formula for \(q_{\delta _0}(\delta _0)\) leads to the kernel of a Gamma density. Therefore,

$$\displaystyle \begin{aligned} q_{\delta_0}(\delta_0) &\approx {\mathrm{Gamma}}(\widehat{A_{\delta_0}}, \widehat{B_{\delta_0}})\\ \widehat{A_{\delta_0}} &= \frac{p}{2} + A_{\delta_0}\\ \widehat{B_{\delta_0}} &= \dfrac{1}{-p- \sum_i \widehat{\log \phi_{0i}^{-1}} + \sum_i \widehat{M_{\phi_{0i}^{-1}}} + \frac{1}{B_{\delta_0}} }, \end{aligned} $$and

$$\displaystyle \begin{aligned} \widehat{M_{\delta_0}} &= \widehat{A_{\delta_0}}\widehat{B_{\delta_0}}. \end{aligned} $$ -

Derivation of \(q_{\delta _1}(\delta _1)\) is similar to that of \(q_{\delta _0}(\delta _0)\), with the same approximation employed:

$$\displaystyle \begin{aligned} q_{\delta_1}(\delta_1) &\approx {\mathrm{Gamma}}(\widehat{A_{\delta_1}}, \widehat{B_{\delta_1}})\\ \widehat{A_{\delta_1}} &= \frac{p}{2} + A_{\delta_1}\\ \widehat{B_{\delta_1}} &= \dfrac{1}{-p- \sum_i \widehat{\log \phi_{1i}^{-1}} + \sum_i \widehat{M_{\phi_{1i}^{-1}}} + \frac{1}{B_{\delta_1}} }, \end{aligned} $$and

$$\displaystyle \begin{aligned} \widehat{M_{\delta_1}} &= \widehat{A_{\delta_1}}\widehat{B_{\delta_1}}. \end{aligned} $$ -

Derivation of q I(I):

$$\displaystyle \begin{aligned} q_{\mathbf{I}}(\mathbf{I}) &\propto \exp \text{E}_{-\mathbf{I}} \left\{ \sum_{i,j} \log p(y_{ij}|\lambda_0,\lambda_1,\lambda_2,\phi_{0i},\phi_{1i},I_i) + \sum_i \log p(I_i|\pi_1) \right\}\\ \Rightarrow \quad q_{\mathbf{I}}(\mathbf{I}) &= \prod_i q_{I_i}(I_i) \text{ and}\\ q_{I_i}(I_i) &\propto \exp \text{E}_{-\mathbf{I}} \left\{ \sum_{j} \log p(y_{ij}|\lambda_0,\lambda_1,\lambda_2,\phi_{0i},\phi_{1i},I_i) + \log p(I_i|\pi_1) \right\}. \end{aligned} $$Therefore, for each i,

$$\displaystyle \begin{aligned} q_{I_i}(I_i) &= {\mathrm{Bernoulli}}\left(\frac{\exp(\widehat{\theta_i})} {\exp(\widehat{\theta_i}) + 1}\right)\\ \widehat{\theta_i} &= \sum_j y_{ij} T_j \left(\widehat{\log \phi_{1i}^{-1}} +\widehat{\log \lambda_2^{-1}} \right) \\ & \qquad + L_i \widehat{M_{\phi_{0i}^{-1}}}\left(\sum_j N_j T_j\right)\widehat{M_{\lambda_0^{-1}}}\widehat{M_{\lambda_1^{-1}}}\left(1-\widehat{M_{\phi_{1i}^{-1}}}\widehat{M_{\lambda_2^{-1}}}\right) \\ & \qquad + \widehat{\log \pi_1} - \widehat{\log(1-\pi_1)}, \end{aligned} $$and

$$\displaystyle \begin{aligned} \widehat{M_{I_i}} &= \frac{\exp(\widehat{\theta_i})} {\exp(\widehat{\theta_i}) + 1}. \end{aligned} $$ -

Derivation of \(q_{\pi _1}(\pi _1)\):

$$\displaystyle \begin{aligned} q_{\pi_1}(\pi_1) &\propto \exp \text{E}_{-\pi_1} \left\{ \sum_i \log p(I_i|\pi_1) + \log p(\pi_1) \right\}\\ \Rightarrow q_{\pi_1}(\pi_1) &= {\mathrm{Beta}}(\widehat{\alpha_{\pi_1}},\widehat{\beta_{\pi_1}})\\ \widehat{\alpha_{\pi_1}} &= \sum_i \widehat{M_{I_i}} + \alpha \\ \widehat{\beta_{\pi_1}} &= \sum_i (1-\widehat{M_{I_i}}) + \beta, \end{aligned} $$and

$$\displaystyle \begin{aligned} \widehat{\log \pi_1} &= \text{digamma}(\widehat{\alpha_{\pi_1}}) - \text{digamma}(\widehat{\alpha_{\pi_1}}+\widehat{\beta_{\pi_1}})\\ \widehat{\log(1-\pi_1)} &= \text{digamma}(\widehat{\beta_{\pi_1}}) - \text{digamma}(\widehat{\alpha_{\pi_1}}+\widehat{\beta_{\pi_1}}) \end{aligned} $$

Updating the q-densities in an iterative scheme boils down to updating the variational parameters in the scheme. Convergence is monitored via the scalar quantity C q(y), the lower bound on the log of the marginal data density:

The VB-proteomics algorithm consists of the following steps:

-

Step 1: Initialize \(\widehat {B_{\lambda _0}}, \widehat {B_{\lambda _1}}, \widehat {B_{\delta _0}}, \widehat {B_{\delta _1}}\), and \( \widehat {A_{\phi _{0i}}}, \widehat {A_{\phi _{1i}}}, \widehat {B_{\phi _{0i}}}, \widehat {B_{\phi _{1i}}}\) for each i.

-

Step 2: Cycle through \(\widehat {A_{\lambda _2}}, \widehat {B_{\lambda _2}}, \widehat {B_{\lambda _0}}, \widehat {B_{\lambda _1}}, \widehat {B_{\delta _0}}, \widehat {B_{\delta _1}}, \widehat {A_{\phi _{0i}}}, \widehat {B_{\phi _{0i}}}, \widehat {A_{\phi _{1i}}}, \widehat {B_{\phi _{1i}}}, \widehat {M_{I_i}}\) iteratively, until the increase in C q(y) computed at the end of each iteration is negligible.

-

Step 3: Compute \(\widehat {\alpha _{\pi _1}}\) and \(\widehat {\beta _{\pi _1}}\) using converged variational parameter values.

The values of the model parameters were chosen to form non-informative priors: The shape and scale parameters for the Inverse-Gamma priors were set to be 0.1, the shape and scale parameters for the Gamma priors were set to be 0.1 and 100 respectively, and the parameters for the Beta prior were both 1. Because a log-Normal distribution is approximately a Gamma distribution, the variance of \( e^{b_{ki}}, k=0,1\) which follows a log-Normal distribution in the Poisson GLMM roughly equals to the variance of \( \phi ^{-1}_{ki}, k=0,1\) which follows a Gamma distribution in the Poisson–Gamma HGLM. That is, \((e^{\sigma _k^2}-1)e^{\sigma _k^2} \approx 1/{\delta _k}, \; k=0,1\). Based on this we determined parameter values in the \({\mathrm{Gamma}}(A_{\delta _k}, B_{\delta _k})\) prior for δ k, k = 0, 1.

Starting values of posterior mean of the latent indicator were \(\widehat {M_{I_i}}=1\) for proteins that are associated with the 20% smallest p-values from one protein at a time Score tests and \(\widehat {M_{I_i}}=0\) otherwise.

An approximation to the digamma function, \(\text{digamma}(z) \approx \log z - \frac {1}{2z}\), was used wherever z was too small in VB implementation.

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this chapter

Cite this chapter

Wan, M., Booth, J.G., Wells, M.T. (2018). Variational Bayes for Hierarchical Mixture Models. In: Härdle, W., Lu, HS., Shen, X. (eds) Handbook of Big Data Analytics. Springer Handbooks of Computational Statistics. Springer, Cham. https://doi.org/10.1007/978-3-319-18284-1_7

Download citation

DOI: https://doi.org/10.1007/978-3-319-18284-1_7

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-18283-4

Online ISBN: 978-3-319-18284-1

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)