Abstract

In this chapter, we summarize some theoretical fundamentals. We assume that the reader is already familiar with these basic facts. The main purpose of this chapter is to introduce the notation that is used in this book and to provide a reference. Therefore, the explanations are brief, and no proofs are given.

You have full access to this open access chapter, Download chapter PDF

In this chapter, we summarize some theoretical fundamentals. We assume that the reader is already familiar with these basic facts. The main purpose of this chapter is to introduce the notation that is used in this book and to provide a reference. Therefore, the explanations are brief, and no proofs are given.

2.1 Fourier Analysis and Application to Beam Signals

In this section, several formulas for Fourier series and the Fourier transform are summarized. However, we do not discuss the properties of a function that are necessary for the existence of the transformation. For those foundations, the reader should consult the references cited here.

2.1.1 Fourier Series

A real-valued periodic function f(t) with period T may be decomposed into Fourier components according to the Fourier series

where the complex coefficients cn are determined by

or by

where we made the substitution \(\varphi =\omega t\).

With the substitution \(x = t + T\), we obtain

Due to \(\omega = \frac{2\pi } {T}\), the last exponential function equals 1. Furthermore, we have \(f(x - T) = f(x)\), so that

holds. Therefore, we may use

instead of Eq. (2.2).

2.1.2 Spectrum of a Dirac Comb

In this book, the Dirac delta distribution is used in a heuristic way without the foundations of distribution theory. Therefore, the reader should be aware that the results presented still have to be proven mathematically. For example, we use the formula

even though it does not have any meaning in the scope of classical analysis.

A strongly bunched beam may be approximated by a sum of Dirac delta pulses

which is called Dirac comb. For this special sum of Dirac pulses, one obtains the following Fourier coefficients (only the Dirac pulse with k = 0 is located inside the interval \(-T/2 \leq t \leq +T/2\)):

Hence, all coefficients are equal. According to Eq. (2.1), we get

This can also be written as

2.1.3 Different Representations of the Fourier Series

The general definition of the Fourier series shows that the cn are defined in such a way that both positive and negative frequencies occur. If only positive frequencies are to be allowed, one may write Eq. (2.1) as follows:

We obtain the result

By means of the definition

and

one obtains

Taking a0 = 2c0 and b0 = 0 into account, one may calculate the coefficients cn if an and bn are known:

For the special case that \(c_{n} = 1/T\) holds for all n (Dirac comb; see Sect. 2.1.2), one obtains \(a_{n} = 2/T\) and bn = 0. According to Eq. (2.7), this means that the average, i.e., the DC component, of a strongly bunched beam is exactly one-half the fundamental harmonic:

Now we return to the general case. Instead of using an and bn, one may also use amplitudes and phases:

A comparison with Eq. (2.7) shows that

This leads to the following conditions:

According to Eq. (2.8), we therefore have

and

Due to

one obtains

as the physical amplitudes (peak values). By inserting Eqs. (2.11) and (2.12) into Eq. (2.8), one gets

The same result is obtained by combining Eqs. (2.13)–(2.15).

2.1.4 Discrete Fourier Transform

The discrete Fourier transform is a powerful tool for spectral analysis of signals that are given in digital form, e.g., on a computer. Therefore, we briefly discuss some important features here.

2.1.4.1 Motivation of the Transformation Formula

Let us now assume that a real-valued periodic function f(t) with period \(T = \frac{2\pi } {\omega }\) is discretized according to

where k is an integer. The period T is divided into \(N \in \mathbb{N}\) time intervals

such that f0 = fN holds. Therefore, the N samples \(f_{0},f_{1},\ldots,f_{N-1}\) are sufficient to describe the function f(t), provided that N is large enough. We now replace the integral in Eq. (2.3) by the Riemann sum

This formula is used to define the discrete Fourier transform (DFT)

This obviously yields an approximation of the Fourier coefficients cn of the periodic function f(t), provided that the number N of samples \(x_{k} = f(k\Delta t)\) is large enough.

2.1.4.2 Symmetry Relations

Based on Eq. (2.17), we find that

Therefore, all Xn are known if those for 0 ≤ n ≤ N − 1 are specified. One sees that for a sample \((x_{0},x_{1},\ldots,x_{N-1})\), one obtains a sample \((X_{0},X_{1},\ldots,X_{N-1})\) as the spectrum.

Since we have assumed that the signal f(t) is real-valued and periodic, the same is true for the samples xk. Based on Eq. (2.17), it is then obvious that the symmetry relation

holds. We may also combine these two symmetry relations to obtain

Therefore, only about one-half of the coefficients Xn with 0 ≤ n ≤ N − 1 have to be calculated.

2.1.4.3 Interpretation of the Spectral Components

According to Eq. (2.1), the sample X0 belongs to the DC component of the signal. The sample X1 obviously belongs to the angular frequency

Therefore, the spectrum \((X_{0},X_{1},\ldots,X_{N-1})\) has a resolution of \(f = 1/T\), where T is the total time that passes between the samples x0 and xN. It is obvious that XN−1 belongs to the frequency

This approximation is, of course, valid only for large samples with N ≫ 1. Hence we conclude that the frequency resolution is given by the inverse of the total time T, whereas the maximum frequency is determined by the sampling frequency \(f_{\mathrm{sampl}} = 1/\Delta t\). However, due to \(X_{N-n} = X_{n}^{{\ast}}\), only one-half of this frequency range between 0 and fmax actually contains information. In other words, and in compliance with the Nyquist–Shannon sampling theorem, sampling has to take place with at least twice the signal bandwidth.

These properties are visualized in Table 2.1.

If one makes sure that the N equidistant samples xn of the periodic function represent an integer number of periods (so that duplicating \((x_{0},x_{1},\ldots,x_{N-1})\) does not introduce any severe discontinuities), one may obtain good results even without sophisticated windowing techniques.

For the interpretation of the spectrum, please note that the DC component is equal to

i.e., to the first value of the DFT.

According to Eq. (2.16), the amplitude (peak value) at the frequency p∕T is given by

The discussion above shows that the sample \((X_{0},X_{1},\ldots,X_{N-1})\) contains all the information about the spectrum, but that the DFT spectrum is infinite. It does not even decrease with increasing frequencies. At first glance, this looks strange, but in our introduction to the DFT, we assumed only that the integral over \(\Delta t\) may approximately be replaced by a product with \(\Delta t\). We made no assumption as to how the function f(t) varies in the interval \(\Delta t\). This explains the occurrence of the high-frequency components.

It should be clear from the Nyquist–Shannon sampling theorem that the spectrum for frequencies larger than fmax∕2 cannot contain any relevant information, since the sampling frequency is fixed at \(\Delta t \approx 1/f_{\mathrm{max}}\).

Therefore, in the next section, we filter out those frequencies to obtain the inverse transform.

2.1.4.4 Inverse DFT

As mentioned above, the Nyquist–Shannon sampling theorem tells us that we should consider only frequencies fx with

This corresponds to

or

For the sake of simplicity, we assume that N ≥ 3 is an odd number. If we have a look at Eq. (2.1),

it becomes clear that only those n with

lead to the aforementioned frequencies \(\omega _{x} = 2\pi f_{x} = n\omega\). Therefore, we expect to be able to reconstruct the signal based on

We now apply the discretization

and obtain

Here we introduced the new summation index \(l = n - N\). The last formula leads to

On the right-hand side, we may now rename l as n again. This shows that the sum from \(-(N - 1)/2\) to − 1 included in Eq. (2.18) may be replaced by the sum from \((N + 1)/2\) to N − 1:

This defines the formula for the inverse DFT (not only for odd N):

Please note that in the literature, the factor 1∕N is sometimes not included in the definition of the DFT, but it appears in that of the inverse DFT. Our choice was determined by the close relationship to the Fourier series coefficients discussed above. Apart from the factor 1∕N, the DFT and the inverse DFT differ only by the sign in the argument of the exponential function.

2.1.4.5 Conclusion

We have summarized only a few basic facts that will help the reader to interpret the DFT correctly. There are many other properties that cannot be mentioned here.

For large sample sizes equal to a power of 2, the so-called fast Fourier transform(FFT) algorithm may be used, which is a dramatically less time-consuming implementation of the DFT.

2.1.5 Fourier Transform

The Fourier transformX(ω) of a real-valued function x(t) depending on the time variable t is given by

the inverse transform by

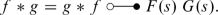

This relation is visualized by the correspondence symbol

The Fourier transform is a linear transformation. It is used to determine the frequency spectrum of signals, i.e., it transforms the signal x(t) from the time domain into the frequency domain. It is possible to generalize the definition of the Fourier transform to generalized functions (i.e., distributions), which also include the Dirac function [1, 2].

Please note that various definitions for the Fourier transform and for its inverse transform exist in the literature. The factor \(\frac{1} {2\pi }\) may be distributed among the original transformation and the inverse transformation in a different way, and even the sign of the argument of the exponential function may be defined in the opposite way.

Some common Fourier transforms are summarized in Table A.3 on p. 417. Further relations can also be found using symmetry properties of the Fourier transform. Consider the Fourier transform

If the time t in x(t) is replaced by ω, and x(ω) is regarded as a Fourier transform, its inverse transform is given by

In other words, the inverse transform of x(ω) is obtained by replacing ω in the function X(ω) by − t.

2.1.5.1 Fourier Transform of a Single Cosine Pulse

Let

define a single cosine pulse. This leads to

In the last equation, we used the definition

For the sake of uniqueness, we call this function si(x) instead of sinc(x).

2.1.5.2 Convolution

The convolution is given by

and one obtains

We consider the special case that

is a sequence of Dirac pulses. This leads to

Hence, by convolution with a sequence of Dirac pulses, we may produce a repetition of the function x(t) at the locations of the delta pulses.

2.1.5.3 Relation to the Fourier Series

We consider the special case

According to Eq. (2.20), this leads to

If we set

we obtain the correspondence

which is an ordinary Fourier series, as Eq. (2.1) shows.

Hence, if we calculate the Fourier transform of a periodic function with period \(T_{0} = \frac{2\pi } {\omega _{0}}\), we get a sum of Dirac pulses that are multiplied by 2π and the Fourier coefficients. The factor 2π is obvious because of the correspondence

2.1.6 Consequences for the Spectrum of the Beam Signal

We first model an idealized beam signal h(t) as a periodic sequence of Dirac pulses. Even if the bunches oscillate in the longitudinal direction, periodicity may be satisfied if the beam signal repeats itself after one synchrotron oscillation period. The sequence of delta pulses will be defined by

as above. Thus, we get a realistic beam signal by convolution with the time function x(t), which represents a single bunch:

Since h(t) is to be periodic, it may be represented by a Fourier series. As shown in the previous section, this leads to the Fourier transform

The function x(t) describes a single pulse and is therefore equal to zero outside a finite interval. Therefore, the spectrum X(ω) will be continuous. This shows that

is a Fourier series whose Fourier coefficients are

As an example and as a test of the results obtained so far, we analyze the convolution of a Dirac comb

with a single cosine pulse. According to Eq. (2.4), the Fourier coefficients of the Dirac comb are

Here T0 denotes the time span between the pulses. For the single cosine pulse with time span \(T = \frac{2\pi } {\Omega }\) that was defined in Eq. (2.21), one obtains—based on Eq. (2.22)—the Fourier transform

According to Eq. (2.23), the Fourier coefficients of the convolution function y(t) = h(t) ∗ x(t) are therefore

We will now analyze this result for several special cases.

-

Constant beam current: In this first case, we assume that the different single-cosine pulses overlap according to T = 2T0, which is equivalent to \(\omega _{0} = 2\Omega \). In this case, we obtain cky(t) = 0 for k ≠ 0. For c0y(t), which corresponds to the DC component, one obtains

$$\displaystyle{c_{0}^{y(t)} = \frac{1} {T_{0}}\; \frac{2\pi } {\Omega } = 2,}$$which is the expected result for a constant function that equals 2.

-

Continuous sine wave: In this case, we make use of the simplification \(\Omega =\omega _{0}\), so that y(t) corresponds to a simple cosine function that is shifted upward:

$$\displaystyle{c_{k}^{y(t)} = \frac{\mathrm{si}(\pi k)} {1 - k^{2}}.}$$We obviously have

$$\displaystyle{c_{0}^{y(t)} = 1.}$$For k = ±1, we may use l’Hôpital’s rule:

$$\displaystyle{c_{\pm 1}^{y(t)} =\lim _{ k\rightarrow \pm 1} \frac{\mathrm{si}(\pi k)} {1 - k^{2}} =\lim _{k\rightarrow \pm 1} \frac{\sin (\pi k)} {\pi \;(k - k^{3})} =\lim _{k\rightarrow \pm 1} \frac{\pi \;\cos (\pi k)} {\pi \;(1 - 3k^{2})} = \frac{1} {2}.}$$All other coefficients are zero. Thus we obtain

$$\displaystyle{y(t) =\sum _{ k=-\infty }^{+\infty }c_{ k}^{y(t)}e^{jk\omega _{0}t} = 1 + \frac{1} {2}\;e^{j\omega _{0}t} + \frac{1} {2}\;e^{-j\omega _{0}t} = 1 +\cos (\omega _{ 0}t),}$$which is in accordance with our expectation.

-

Dirac comb: For this last case, we first observe that the area under each single-cosine pulse defined in Eq. (2.21) is T. If we want to have an area of 1 instead, we have to divide the function y(t) by T:

$$\displaystyle{\tilde{y}(t) = \frac{y(t)} {T}.}$$Hence, the Fourier coefficients in Eq. (2.24) also have to be divided by T:

$$\displaystyle{c_{k}^{\tilde{y}(t)} = \frac{1} {T_{0}}\; \frac{\mathrm{si}\left (\pi k\; \frac{\omega _{0}} {\Omega }\right )} {1 -\left (k\; \frac{\omega _{0}} {\Omega }\right )^{2}}.}$$We now consider the case T → 0 while assuming a fixed value of T0. Hence \(\omega _{0}/\Omega \rightarrow 0\), and we obtain

$$\displaystyle{c_{k}^{\tilde{y}(t)} = \frac{1} {T_{0}},}$$which is the expected result for a Dirac comb.

Finally, our simple beam signal model that was constructed by a combination of single-cosine pulses is able to describe all states between unbunched beams and strongly bunched beams. In the case of long bunches (continuous sine wave), the DC current equals the RF current amplitude. As the bunches become shorter (\(\omega _{0} < \Omega \)), Eq. (2.24) can be used to determine the ratio between RF current amplitude and DC current.

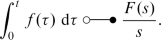

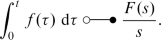

2.2 Laplace Transform

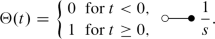

The Laplace transform is one of the standard tools used to analyze closed-loop control systems. In the scope of the book at hand, we deal only with the one-sided Laplace transform [3, 4], which is useful because processes can be described whereby signals are switched on at t = 0. Hence, the name “Laplace transform” will be used as a synonym for “one-sided Laplace transform.” Such a one-sided Laplace transform of a function f(t) with f(t) = 0 for t < 0 is given by

Here \(s =\sigma +j\omega\) is a complex parameter. It is obvious that the Laplace transform has a close relationship to the Fourier transform that is obtained for σ = 0 if only functions with f(t) = 0 for t < 0 are allowed. The real part of s is usually introduced to obtain convergence for a larger class of functions (please note that the Fourier transform of a sine or cosine function already leads to nonclassical Dirac pulses, as we saw in Sect. 2.1.5.3).

The Laplace transform F(s) of a function f(t) is an analytic function, and there is a unique correspondence between f(t) and F(s) if the classes of functions/distributions that are considered in the time domain and the Laplace domain are chosen accordingly [1, 4]. Since the integral in Eq. (2.25) exists only in some region of the complex plane, the Laplace transform is initially defined in only this region as well. If, however, a closed-form expression is obtained for the Laplace transform, e.g., a rational function, it is possible to extend the domain of definition by means of analytic continuation (cf. [5, Sect. 2.1]; [6, Sect. 10-9]; [7, Sect. 5.5.4]). Therefore, the Laplace transform F(s) should be defined as the analytic continuation of the function defined by Eq. (2.25). Apart from poles, a Laplace transform F(s) may thus be defined in the whole complex plane.

Like the Fourier transform, the Laplace transform is a linear transformation. If according to

we use the correspondence symbol again, the Laplace transform has the following properties (n is a positive integer, and a is a real number):

-

Laplace transform of a derivativeFootnote 1:

-

Derivative of a Laplace transform:

-

Laplace transform of an integral:

-

Shift theorems:

(2.26)

(2.26) -

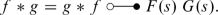

Convolution:

(2.27)

(2.27) -

Scaling (a > 0):

-

Limits:

$$\displaystyle{ f(0+) =\lim _{s\rightarrow \infty }\left (s\;F(s)\right ), }$$$$\displaystyle{ f(\infty ):=\lim _{t\rightarrow \infty }f(t) =\lim _{s\rightarrow 0}\left (s\;F(s)\right ). }$$(2.28)Here f and its derivative must satisfy further requirements [4]. Before using the final-value theorem (2.28), for example, one should verify that the function actually converges for t → ∞.

Like the Fourier transform, the Laplace transform may also be generalized in order to cover distributions (i.e., generalized functions) [1]. Some common Laplace transforms are summarized in Table A.4 on p. 417.

2.3 Transfer Functions

Some dynamical systemsFootnote 2 may be described by the equation

In this case, X(s) and Y (s) are the Laplace transforms of the input signal x(t) and the output signal y(t), respectively. The Laplace transform H(s) is called the transfer function of the system. We discuss two specific input signals:

-

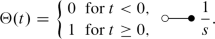

Let us assume that the input function x(t) is a Heaviside step function

In this case, the output is

$$\displaystyle{Y (s) = \frac{H(s)} {s}.}$$If we now apply Eq. (2.28), we obtain

$$\displaystyle{y(\infty ) =\lim _{s\rightarrow 0}H(s)}$$as the long-term (unit-)step response of the system.

-

If generalized functions are allowed, we may use x(t) = δ(t) as an input signal. In this case, the correspondence

leads to

$$\displaystyle{Y (s) = H(s),}$$which means that the transfer function H(s) corresponds to the impulse responseh(t) of the system. The final value of the response y(t) = h(t) is then given by

$$\displaystyle{y(\infty ) =\lim _{s\rightarrow 0}\left (s\;H(s)\right ).}$$

Let us assume that a system component is specified by the transfer function H(s). If we calculate the phase responseFootnote 3of this component according to \(\varphi (\omega ) = \measuredangle H(j\omega )\), the group delay can be defined by

Taking a dead-time element with \(H(s) = e^{-sT_{\mathrm{dead}}}\) (see shift theorem (2.26)) as an example, one obtains the frequency-independent, i.e., constant group delay

Hence, the dead-time element is an example of a device with linear phase response.

2.4 Mathematical Statistics

The results summarized in this chapter can be read in more detail in [8].

2.4.1 Gaussian Distribution

The Gaussian distribution (also called the normal distribution) is given by the probability density function

where \(\mu,\sigma \in \mathbb{R}\) with σ > 0 are specified. In order to ensure that f(x) is in fact a valid probability distribution, the equation

must hold. We show this by substituting

This leads to

By means of standard methods of mathematical analysis, one may show that

which actually leads to the result

For a given measurement curve that has the shape of a Gaussian distribution, one may use curve-fitting techniques to determine the parameters μ and σ. A simpler method is to determine the FWHM (full width at half maximum) value. According to Eq. (2.29), one-half of the maximum value is obtained for

The FWHM value equals twice this distance (one to the left of the maximum and one to the right of the maximum):

This formula may, of course, lead to less-accurate results than those obtained by the curve-fitting concept if zero line or the maximum cannot be clearly identified in the measurement data.

2.4.2 Probabilities

We now consider the area below the curve f(x) that is located to the left of \(x =\mu +\Delta x\), where \(\Delta x > 0\) holds. This area will be denoted by \(\Phi \):

It obviously specifies the probability that the random variableX is less than \(\mu +\Delta x\). By applying the same substitution as that mentioned above, one obtains

According to Fig. 2.1 we set

and get

The area D that is enclosed between \(\mu -\Delta x\) and \(\mu +\Delta x\) (see Fig. 2.2) can be calculated as follows:

Due to symmetry, we have

which leads to

Often, the area \(\Phi _{0}\) is considered, which is located between μ and \(\mu +\Delta x\):

This shows that D may also be written in the form

Some examples for these quantities are summarized in Table 2.2.

As an example, the table shows that the random variable is located in the confidence interval between μ − 2σ and μ + 2σ with a probability of 95. 45%.

2.4.3 Expected Value

Let X be a random variable with probability density function f(x). Then the expected value of the function g(X) is given by

It is obvious that the expected value is linear:

For g(X) = Xk, one obtains the kth moment:

By definition, the first moment is the mean of the random variable X. For the Gaussian distribution, we obtain

The term σ u in the parentheses leads to an odd integrand, so that this part of the integral vanishes.

Using Eq. (2.30), one obtains the mean

which is geometrically obvious.

If we always (not only for the Gaussian distribution) denote the mean by μ, then the kth central moment is given by

The second central moment is called the variance. For the Gaussian distribution, we obtain

With

an integration by parts yields

The first term on the right-hand side vanishes, and we get

The remaining integral is known from Eq. (2.30):

Hence we obtain

The variance is generally denoted by σ2 (not only for the Gaussian distribution), and its square root, the value σ, is called the standard deviation.

For a random sample with m values x1, x2, …, xm, one defines the sample mean

and the sample variance

For large samples, this value does not deviate much from \(\Delta x_{\mathrm{rms}}^{2}\), where the root mean square (rms) is definedFootnote 4 as

2.4.4 Unbiasedness

The individual values xk of a sample are the observed realizations of the random variables Xk that belong to the same distribution. Also,

is a random variable for which one may calculate the expected value. From E(Xk) = μ we obtain

which means that \(\bar{X}\) is an unbiased estimator of the mean value μ of the population. We now check whether the sample variance

is unbiased as well. We have

First of all, we need an expression for E(Xk2). For this purpose, we point out that all the random variables Xk belong to the same distribution, so that

holds. From E(Xk) = μ, we obtain

and

Now we analyze the second expression in Eq. (2.33), i.e., the expected value of

For independent random variablesX and Y, we have the equation

In our case, this is satisfied only for k ≠ l, which means for m − 1 terms. The term with k = l leads to the expected value E(Xk2) derived above. Therefore, we have

Finally, we calculate the expected value of

in an analogous way, obtaining

The results (2.34)–(2.36) may now be used in Eq. (2.33):

This shows that the sample variance is an unbiased estimator of the population variance. This is obviously not true for rms values. For large samples, however, this difference is no longer important.

We now calculate the variance of the sample mean \(\bar{X}\):

This shows that an estimate of the population mean from the sample mean becomes better as the sample size becomes larger.

2.4.5 Uniform Distribution

According to

we now calculate the variance of a uniform distribution:

In the last step, we substituted \(u = x-\mu\) to obtain

For large samples, we get

2.5 Bunching Factor

Let us consider a beam signal Ibeam(t) of a bunched beam as shown, for example, in Fig. 1.3 on p. 7. The bunching factor is defined as

i.e., it is the ratio of the average beam current to the maximum beam current (cf. Chao [9, Sect. 2.5.3.2, p. 131] or Reiser [10, Sect. 4.5.1, p. 263]). Obviously, the equation

holds, where TRF denotes the period.

Now one may replace the true shape of the beam current pulse by a rectangular one with the same maximum value. For \(-T_{\mathrm{RF}}/2 < t < T_{\mathrm{RF}}/2\), we then have

where we have assumed that the bunch is centered at t = 0. In this case, one has to choose a pulse width τ in such a way that the same average beam current is obtained:

Under these conditions, we obtain the expression

for the bunching factor.

We now assume that the beam current pulse has the shape of a Gaussian distribution. This is, of course, possible only if the pulses are significantly shorter than the period time TRF. Under this condition, the beam current will be close to zero before the next pulse starts.

Making use of Eq. (2.29), one may write Ibeam(t) in the form

We have

The average beam current is obtained using the above-mentioned approximation:

For the maximum current, we obtain

so that the bunching factor

is obtained. The equivalent length τ of a rectangular pulse is therefore

The two slopes of the rectangular pulse are therefore located at about ± 1. 25 σ. This leads to the conversion between the Gaussian bunch and the rectangular signal that is visualized in Fig. 2.3.

2.6 Electromagnetic Fields

We summarize in this section a few basic formulas that may be found in standard textbooks (cf. [11–17]). We begin with Maxwell’s equations in their integral form.

In the following, A denotes a two-dimensional domain, and V a three-dimensional domain. For a domain D (two- or threedimensional), ∂ D denotes its boundary (with mathematically positive orientation, if applicable).

Maxwell’s first equation (Ampère’s law) in the time domain is

where \(\vec{H}\) is the magnetizing field, \(\vec{J}\) the current density, and \(\vec{D}\) the electric displacement field.

Maxwell’s second equation in the time domain (Faraday’s law) reads

Here \(\vec{E}\) is the electric field, and \(\vec{B}\) is the magnetic field.

Maxwell’s third equation states that no magnetic charge exists:

The electric charge Q inside a three-dimensional domain V is determined by Maxwell’s fourth equation (Gauss’s law):

Here, ρq denotes the charge density.

The current through a certain region A is given by

and the voltage along a curve C is defined by

Please note that we use the same symbol for voltage and for threedimensional domains, but according to the context this should not lead to confusion.

In material bodies, the simplest relationships (linear isotropic media with relaxation times that are much smaller than the minimum time intervals of interest) between the field vectors are

The material parameters are the permittivity ε, the permeability μ, and the conductivity κ. In vacuum, and approximately also in air, we have

At least for fixed nonmoving domains A, we can write Eq. (2.39) in the form

where

is the magnetic flux through the domain A. This form is suitable for induction problems.

Based on the integral form of Maxwell’s equations presented above, one may derive their differential form if integral theorems are used:

Taking Eq. (2.44) into account, the divergence of Eq. (2.42) leads to the continuity equation

We will discuss the physical meaning of this equation in Sect. 2.9.

In certain cases (here we assume that domains are filled homogeneously with linear isotropic material), Maxwell’s equations may be solved by means of the vector potentialFootnote 5\(\vec{A}\), defined by

and the scalar potential\(\Phi \), defined by

both connected by the Lorenz gauge condition

Using these definitions, one obtains the wave equations

Here

denotes the speed of light in the material under consideration. The speed of light in vacuum is

For static problems, there is no time dependence of the fields, and according to Maxwell’s equations, electric and magnetic fields are therefore decoupled. In this case, the vector potential and the scalar potential also do not depend on time.

Equations (2.48) and (2.51) for homogeneous media thus reduce to

and the Poisson equation

respectively. This equation has to be solved for electrostatic problems.

2.7 Special Relativity

The primary objective of this section is to introduce the nomenclature that is used in this book. This nomenclature is close to that of the introductory text [17] (in German). In any case, the reader should consult standard textbooks on special (and general) relativity (cf. [11, 13, 18–20] in English or [14, 21–28] in German) for an extensive introduction. However, the remainder of the book can also be understood if the formulas presented in this section are regarded as given.

The speed of light c0 in vacuum has the same value in every inertial frame. Therefore, the equation of the wave front

in one inertial frame S (e.g., light flash at t = 0 at the origin of S) is transformed into a wave front equation

that has the same form in a different inertial frame \(\bar{S}\). Such transformation behavior is satisfied by the general Lorentz transformation. If one restricts generality in such a way that at t = 0, the origins of the two inertial frames are at the same position and that one frame \(\bar{S}\) moves with constant velocity v in the z-direction relative to the other frame S, then one obtains the special Lorentz transformation

The inverse transformation can be generated if the quantities with a bar (e.g., \(\bar{y}\)) are replaced by the same quantities without the bar (e.g., y) and vice versa. In that case, \(\bar{v} = -v\) has to be used (if \(\bar{S}\) moves with respect to S with velocity v in the positive z direction, S will move with respect to \(\bar{S}\) in the negative z direction), and c0 remains the same. This concept for generating inverse transformation formulas may also be applied to electromagnetic field quantities, whose transformation behavior is discussed below.

The square root in the denominator of Eqs. (2.58) and (2.59) is typical of expressions in special relativity. Therefore, the so-called Lorentz factors are defined:

Special relativity may be built up by defining so-called four-vectors and four-tensors. For example, the space coordinates are combined with the time “coordinate” in order to define the components of a four-vector that specifies the position in space-time:

Specific values of this four-vector can be interpreted as events. In combination with the special choice (signature)

for the metric tensor (i, k ∈ { 1, 2, 3, 4}), one obtains the desired transformation behavior of the wave front equation, because

which reproduces Eq. (2.55), is a tensor equation with a tensor of rank 0 (scalar) on the right-hand side. Here we use the Ricci calculus and Einstein’s summation convention. The special Lorentz transformation given above can now be reproduced by

which corresponds to the matrix equation

if the transformation coefficients

are chosen (i = row, k = column).

Similarly to the construction of the position four-vector, the vector potential and the scalar potential in electromagnetic field theory may be combined to form the electromagnetic four-potential \(\mathcal{A}\) according to

This, for example, allows one to write the Lorenz gauge condition (2.49) for free space in the form

of a tensor equation, where the vertical line indicates a covariant derivative, which—in special relativity—corresponds to the partial derivative because the metric coefficients are constant.

The four-current density\(\mathcal{J}\) is defined by

so that the tensor equation

represents the continuity equation (2.46). The transformation law obviously yields

With \(\vec{v} = v\vec{e}_{z}\) defining the parallel direction ∥ , this may be written in the generalized form

The electromagnetic field tensor may be defined as

while its counterpart for the other field components in Maxwell’s equations may be defined as

where i specifies the row, and k the column. The introduction of these four-vectors and four-tensors allows one to write Maxwell’s equations asFootnote 6

so that their form remains the same if a Lorentz transformation from one inertial frame to a different one is performed. This form invariance of physical laws is called covariance. The covariance of Maxwell’s equations implies the constancy of c0 in different inertial frames, since c0 is a scalar quantity, a tensor of rank 0. Because \(\mathcal{B}^{ik}\) and \(\mathcal{H}^{ik}\) are tensors of rank 2, they are transformed according to the transformation rule

Taking the second transformation rule as an example, this may be translated into the matrix equation

A long but straightforward calculation then leads to the transformation laws for the corresponding field components:

The generalized form is

The remaining transformation laws are obtained analogously:

In the scope of this book, there is no need to develop the theory further. Nor do we discuss such standard effects as time dilation, Lorentz contraction, and the transformation of velocities. However, we need some relativistic formulas for mechanics.

The definition of the Lorentz force (1.1) is valid in special relativity—it corresponds to a covariant equation (the charge Q is invariant; it is a scalar quantity).

Also, the equation

with the momentum definition

based on the velocity

still holds. However, the mass m is not invariant. Only the rest massm0 is a tensor of rank zero, i.e., a scalar:

Please note that we strictly distinguish between the velocities v and u and also between the related Lorentz factors. The velocity \(\vec{v} = v\;\vec{e}_{z}\) is defined as the relative velocity of the inertial frame \(\bar{S}\) with respect to the inertial frame S, i.e., the velocity between these two reference frames. The velocity \(\vec{u}\) is the velocity of a particle measured in the first inertial frame S. Consequently, \(\vec{\bar{u}}\) is the velocity of the same particle in the inertial frame \(\bar{S}\). If it is clear what is meant by a certain Lorentz factor, one may, of course, omit the subscript.

The total energy of a particle with velocity \(\vec{u}\) is given by

Consequently, the rest energy is obtained for \(\vec{u} = 0\), which leads to γu = 1:

Therefore, the kinetic energy is

Using the Lorentz factors, one may write the momentum in the form

leading to the absolute value

Here we used the definition

If we have a look at Eqs. (2.89)–(2.91), we observe that γ is related to the energy, the product β γ to the momentum, and β corresponds to the velocity. It is often helpful to keep this correspondence in mind when complicated expressions containing a large number of Lorentz factors are evaluated. One should also keep in mind that when one of the expressions β, γ, β γ is known, the others are automatically fixed as well.

This is why we can also convert expressions for relative deviations into each other. For example, we may calculate the time derivative of

as follows:

Here we can use the relation

which follows directly from Eq. (2.92):

Expressions of this type are very helpful, because they can be translated as follows:

This conversion is possible if the relative change in the quantities is sufficiently small. In the example presented here, one can see directly that a velocity deviation of 1% is transformed into an energy deviation of 3% if γ = 2 holds.

As a second example, we can calculate the time derivative of Eq. (2.93):

This can be translated into

Relations like these are summarized in Table 2.3.

In accelerator physics and engineering, specific units that contain the elementary charge e are often used to specify the energy of the beam. This is due to the fact that the energy that is gained by a chargeFootnote 7Q = zqe is given by formula (1.2),

An electron that passes a voltage of V = 1 kV will therefore lose or gain an energy of 1 keV, depending on the orientation of the voltage. We have only to insert the quantities into the formula without converting e into SI units. In order to convert an energy that is given in eV into SI units, one simply has to insert \(e = 1.6022 \cdot 10^{-19}\,\mathrm{C}\), so that \(1\,\mathrm{eV} = 1.6022 \cdot 10^{-19}\,\mathrm{J}\) holds.

Also, the rest energy of particles is often specified in eV. For example, the electron rest mass \(m_{\mathrm{e}} = 9.1094 \cdot 10^{-31}\,\mathrm{kg}\) corresponds to an energy of 510. 999 keV.

As we saw above, the energy directly determines the Lorentz factors and the velocity. Therefore, it is desirable to specify the energy in a unit that directly corresponds to a certain velocity. Due to

a kinetic energy of 1 MeV leads to different values for γ if different particle rest masses m0 are considered. This is why one introduces another energy unit for ions. An ion with mass number A has rest mass

where \(m_{\mathrm{u}} = 1.66054 \cdot 10^{-27}\,\mathrm{kg}\) denotes the unified atomic mass unit (as mentioned below, Ar differs slightly from A). Therefore, one obtains

If the value on the right-hand side is specified now, γ is determined in a unique way, since mu and c0 are global constants. As an example, an ion beam with a kinetic energy ofFootnote 8 11. 4 MeV∕u corresponds to γ = 1. 0122386 and β = 0. 15503. We do not need to specify the ion species.

Ions are usually specified by the notation

Here A is the (integer) mass number, i.e., the number of nucleons (protons plus neutrons); Z is the atomic number, which equals the number of protons and identifies the element. For example,

indicates a uranium ion that has \(A - Z = 146\) neutrons. Different uranium isotopesFootnote 9 exist with a different number of neutrons. The number of protons however, is the same for all these isotopes. Therefore, Z is redundant information that is already included in the element name. In the last example, the uranium atom has obviously lost 28 of its 92 electrons, leading to the charge number zq = 28.

The unified atomic mass unit mu is defined as 1∕12 of the mass of the atomic nucleus612C. For different ion species and isotopes, the mass is not exactly an integer multiple of mu (reasons: different mass of protons and neutrons, relativistic mass defect due to binding energy). For238U, for example, one has Ar = 238. 050786, which approximately equals A = 238.

2.8 Nonlinear Dynamics

A continuous dynamical system of first order may be described by the following first-order ordinary differential equation (ODE):

The state of a dynamical system of order n is represented by the values of n variables x1, x2, …, xn, which may be combined into a vector \(\vec{r} = (x_{1},x_{2},\ldots,x_{n})\). Hence, a dynamical system of order n is described by the system of ordinary differential equations

One should note that the system of ODEs is still of order 1, but of dimension n. Such a system is called autonomous when \(\vec{v}(\vec{r},t)\) does not depend on the timeFootnote 10t, i.e., when

holds. The next sections will show that Eq. (2.94), which may look very simple at first sight, includes a huge variety of problems.

2.8.1 Equivalence of Differential Equations and Systems of Differential Equations

Let us consider the nth-order linear ordinary differential equation

with dimension 1. One sees that by means of the definitions

it may be converted into the form

which is equivalent to the standard form

If b and all the ak do not depend on time (ODE of order n with constant coefficients), then \(\vec{v}\) will also not depend on time explicitly, so that an autonomous system is present.

Although the vector field \(\vec{v}\) is called a velocity function, it does not always correspond to a physical velocity. As already mentioned, the variable t is not necessarily the physical time. However, we will use this notation because the reader may always interpret these variables in terms of the mechanical analogy, which may help to understand the physical background.

The above-mentioned equivalence is also valid for nonlinear ODEs of the form

whereFootnote 11F ∈ C1. Also here, we may use

to obtain the standard form

An autonomous system results when F and \(\vec{v}\) do not explicitly depend on the time variable t.

2.8.2 Autonomous Systems

Hereinafter, we will consider only autonomous systems if a time dependence is not stated explicitly.

2.8.2.1 Time Shift

An advantage of autonomous systems is the fact that if a solution y(t) of

is known, then \(z(t) = y(t - T)\) will also be a solution if T is a constant time shift. This can be shown as follows:

The solution y(t) is the first component of the vector \(\vec{r}(t)\) that satisfies the differential equationFootnote 12

Therefore, z(t) is the first component of the vector

We obtain

One sees that \(\vec{r}_{\mathrm{shift}}(t)\) satisfies the system of ODEs in the same way as \(\vec{r}(t)\) does. Due to the equivalence with the differential equation of order n, z(t) will be a solution as well.

This explains, for instance, why sin(ω t) must be a solution of the homogeneous differential equation

if one knows that cos(ω t) is a solution. This ODE is autonomous, and these two solutions differ only by a time shift.

2.8.2.2 Phase Space

The phase space may be defined as the continuous space of all possible states of a dynamical system. In our case, the dynamical system is described by an autonomous system of ordinary differential equations.

The graphs of the solutions \(\vec{r}(t)\) of the differential equation are the integral curves or solution curves in the n-dimensional phase space. Such an integral curve contains the dependence on the parameter t (which is usually but not necessarily the time). A different parameterization therefore leads to a different integral curve.

The set of all image points of the map \(t\mapsto \vec{r}(t)\) is called the orbit. An orbit does not contain dependence on the parameter t. A different parameterization therefore leads to the same orbit, since the same image points are obtained simply by a different value of the parameter t.

Different orbits of an autonomous system are often drawn in a phase portrait, which may be defined as the set of all orbits.

2.8.3 Existence and Uniqueness of the Solution of Initial Value Problems

The standard form

has the advantage that it can be solved numerically according to the (explicit) Euler method:

It is obvious that by defining the initial condition

the states

of the system at different times can be derived iteratively for k > 0 (\(k \in \mathbb{N}\)). The states of the system may be calculated for both future times t > t0 and past times t < t0 in a unique way by selecting the sign of \(\Delta t\). However, this is possible only in a certain neighborhood around t0, as we will see in the next sections.

It is obvious that by defining \(\vec{r}_{0}\), n scalar initial conditions are required to make the solution unique.

2.8.3.1 Existence of a Local Solution

The existence of a solution is ensured by the following theorem:

Theorem 2.1 (Peano).

Consider an initial value problem

with a continuous \(\vec{v}: D \rightarrow \mathbb{R}^{n}\) on an open set \(D \subset \mathbb{R}^{n+1}\) . Then there exists \(\alpha (\vec{r}_{0},t_{0}) > 0\) such that the initial value problem has at least one solution in the interval \([t_{0}-\alpha,t_{0}+\alpha ]\) .

(See Aulbach [29, Theorem 2.2.3].)

Remark.

We may easily see that \(\vec{v}\) must be continuous. If we choose \(v = \Theta (t)\) (Heaviside step function) in the one-dimensional case, we immediately see that the derivative \(\frac{\mathrm{d}r} {\mathrm{d}t}\) is not defined at t = 0. Therefore, in the scope of classical analysis, we have to exclude functions that are not continuous. In the scope of distribution theory, the solution \(r = t\;\Theta (t)\) is obvious.

2.8.3.2 Uniqueness of a Local Solution

Uniqueness can be ensured if the vector field \(\vec{v}\) satisfies a Lipschitz condition or if it is continuously differentiable.

Definition 2.2.

The vector function \(\vec{v}(\vec{r},t): D \rightarrow \mathbb{R}^{n}\) (\(D \subset \mathbb{R}^{n+1}\) open) is said to satisfy a global Lipschitz condition on D with respect to \(\vec{r}\) if there is a constant K > 0 such that for all \((\vec{r}_{1},t),(\vec{r}_{2},t) \in D\), the condition

holds. Instead of saying that a function satisfies a global Lipschitz condition, one also speaks of a function that is Lipschitz continuous.

(Cf. Aulbach [29, Definition 2.3.5] and Perko [30, p. 71, Definition 2].)

Definition 2.3.

The vector function \(\vec{v}(\vec{r},t): D \rightarrow \mathbb{R}^{n}\) (\(D \subset \mathbb{R}^{n+1}\) open) is said to satisfy a local Lipschitz condition on D with respect to \(\vec{r}\) if for each \((\vec{r}_{0},t_{0}) \in D\), there exist a neighborhood \(U_{(\vec{r}_{0},t_{0})} \subset D\) of \((\vec{r}_{0},t_{0})\) and a constant K > 0 such that for all \((\vec{r}_{1},t),(\vec{r}_{2},t) \in U_{(\vec{r}_{0},t_{0})}\), the condition

holds. Instead of saying that a function satisfies a local Lipschitz condition, one also speaks of a function that is locally Lipschitz continuous.

(Cf. Aulbach [29, Definition 2.3.5], Wirsching [31, Definition 3.4], and Perko [30, p. 71, Definition 2].)

In other words, the function satisfies a local Lipschitz condition if for every point, we can find a neighborhood such that a “global” Lipschitz condition holds in that neighborhood.

Example.

The function f(x) = x2 is locally Lipschitz continuous, but it is not Lipschitz continuous.

Theorem 2.4 (Picard–Lindelöf).

Consider the initial value problem

with continuous \(\vec{v}: D \rightarrow \mathbb{R}^{n}\) ( \(D \subset \mathbb{R}^{n+1}\) open). Suppose that the vector function \(\vec{v}(\vec{r},t)\) is locally Lipschitz continuous with respect to \(\vec{r}\) . Then there exists \(\alpha (\vec{r}_{0},t_{0}) > 0\) such that the initial value problem has a unique solution in the interval \([t_{0}-\alpha,t_{0}+\alpha ]\) .

(See Aulbach [29, Theorem 2.3.7].)

Every locally Lipschitz continuous function is also continuous.

Every continuously differentiable function satisfies a local Lipschitz condition, i.e., is locally Lipschitz continuous (Aulbach [29, p. 77], Arnold [32, p. 279], Perko [30, lemma on p. 71]).

Therefore, the Picard–Lindelöf theorem may simply be rewritten for continuously differentiable functions instead of locally Lipschitz continuous functions (Perko [30, p. 74]: “The Fundamental Existence-Uniqueness Theorem,” Guckenheimer/Holmes [33, Theorem 1.0.1]).

2.8.3.3 Maximal Interval of Existence

One may try to make the solution interval larger by using the endpoint of the solution interval as a new initial condition. If this strategy is executed iteratively, one obtains the maximal interval of existence. It is an open interval (cf. [30, p. 89, Theorem 1]). The maximal interval of existence does not necessarily correspond to the full real time axis. Further requirements are necessary to ensure this.

2.8.3.4 Global Solution

A continuously differentiable vector field \(\vec{v}\) is called complete if it induces a global flow,Footnote 13 i.e., if its integral curves are defined for all \(t \in \mathbb{R}\).

Every differentiable vector field with compact support is complete.

The following theorem shows that certain restrictions on the “velocity” \(\vec{v}(\vec{r})\) are sufficient for completeness:

Theorem 2.5.

Let the vector function \(\vec{v}(\vec{r})\) with \(\vec{v}: D \rightarrow \mathbb{R}^{n}\) ( \(D \subset \mathbb{R}^{n}\) open) be continuously differentiable and linearly bounded with K,L ≥ 0:

Then the initial value problem

has a global flow.

(Cf. Zehnder [34, Proposition IV.3, p. 130], special form of Theorem 2.5.6, Aulbach [29].)

According to Amann [35, Theorem 7.8], the solution will then be bounded for finite time intervals.

Like many other authors, Perko [30, p. 188, Theorem 3] requires that \(\vec{v}(\vec{r})\) satisfy a global Lipschitz condition

for arbitrary \(\vec{r}_{1},\vec{r}_{2} \in \mathbb{R}^{n}\). For \(\vec{r}_{2} = 0\), this leads to linear boundedness, as one may show by means of the reverse triangle inequality, but it is a stronger condition.

Example.

The ODE

is obviously satisfied for

This solution may be found by separation of variables. An arbitrary initial condition y(0) = y0 may be satisfied if the shifted solution

is considered. In any case, however, the solution curve reaches infinity while t is still finite. The “vector” field \(v(y) = 1 + y^{2}\) is not complete, and it is obviously not linearly bounded.

If we simplify the results of this section, we may summarize them as follows:

-

The existence of a local solution is ensured by continuity of \(\vec{v}\).

-

Local Lipschitz continuity ensures uniqueness of the solution. If \(\vec{v}\) is continuously differentiable, uniqueness is also guaranteed.

-

If linear boundedness of \(\vec{v}\) is required in addition, a global solution/global flow exists.

For the sake of simplicity, we will consider only complete vector fields in the following.

2.8.3.5 Linear Systems of Ordinary Differential Equations

For linear systems of differential equations with

where A is a quadratic matrix with real constant elements, we may use the matrix norm:

Therefore, the conditions of Theorem 2.5 are satisfied, and a unique solution with a global flow exists. One may specifically use the Frobenius norm

which is compatible with the Euclidean norm

of a vector, so that

holds.

2.8.4 Orbits

Two distinct orbits of an autonomous system do not intersect. In order to prove this, we assume the contrary. Suppose that two distinct orbits defined by \(\vec{r}_{1}(t)\) and \(\vec{r}_{2}(t)\) intersect according to

Please note that the intersection point may be reached for different values t1 and t2 of the parameter t, since we require only that the orbits (i.e., the images of the solution curves) intersect. As shown in Sect. 2.8.2.1,

is also a solution of the differential equation. Therefore, we have

Hence \(\vec{r}_{\mathrm{shift}}(t)\) and \(\vec{r}_{2}(t)\) satisfy the same initial conditions at the time t2. This means that the solution curves \(\vec{r}_{\mathrm{shift}}(t)\) and \(\vec{r}_{2}(t)\) are identical.

Since \(\vec{r}_{1}(t)\) is simply time-shifted with respect to \(\vec{r}_{\mathrm{shift}}(t) =\vec{ r}_{2}(t)\), the images, i.e., the orbits, will be identical. This means that two orbits are completely equal if they have one point in common.

In other words, each point of phase space is crossed by only one orbit.

2.8.5 Fixed Points and Stability

Vectors \(\vec{r} =\vec{ r}_{\mathrm{F}}\) for which

holds are called fixed points (or equilibrium points or stationary points or critical points) of the dynamical system given by

This nomenclature is obvious, since a particle that is initially located at

will stay there forever:

Definition 2.6.

A fixed point of an autonomous dynamical system is called an isolated fixed point or a nondegenerate fixed point if an environment of the fixed point exists that does not contain any other fixed points.

(Cf. Sastry [36, Definition 1.4, p. 13], Perko [30, Definition 2, p. 173].)

We now define the stability of fixed points according to Lyapunov.

Definition 2.7.

A fixed point is called stable if for every neighborhood U of \(\vec{r}_{\mathrm{F}}\), another neighborhood V ⊂ U of \(\vec{r}_{\mathrm{F}}\) exists such that a trajectory starting in V at t = t0 will remain in U for all t ≥ t0 (see Fig. 2.4). Otherwise, the fixed point is called unstable.

Please note that it is usually necessary to choose V smaller than U, because the shape of the orbit may cause the trajectory to leave U for some starting points in U even if \(\vec{r}_{\mathrm{F}}\) is stable.

Definition 2.8.

A stable fixed point \(\vec{r}_{\mathrm{F}}\) is called asymptotically stable if a neighborhood U of \(\vec{r}_{\mathrm{F}}\) exists such that for every trajectory that starts at t = t0 in U, the following equation holds:

(See, e.g., Perko [30, Definition 1, p. 129].)

Definition 2.9.

A function \(L(\vec{r})\) with L ∈ C1 and \(L: U \rightarrow \mathbb{R}\) (\(U \subset \mathbb{R}^{n}\) open) is called a Lyapunov function for the fixed point \(\vec{r}_{\mathrm{F}}\) of the autonomous system

if

and

hold in a neighborhood U ⊂ D of \(\vec{r}_{\mathrm{F}}\).

A Lyapunov function is called a strict Lyapunov function if

holds.

(Cf. Perko [30, p. 131, Theorem 3], La Salle/Lefschetz [37, Sect. 8], Guckenheimer/Homes [33, Theorem 1.0.2].)

Theorem 2.10.

If a Lyapunov function for a fixed point \(\vec{r}_{\mathrm{F}}\) of an autonomous system exists, then this fixed point \(\vec{r}_{\mathrm{F}}\) is stable. If a strict Lyapunov function exists, then this fixed point \(\vec{r}_{\mathrm{F}}\) is asymptotically stable.

(Cf. Perko [30, p. 131, Theorem 3].)

It is easy to see that this theorem is valid. For two-dimensional systems with the particle trajectory \(\vec{r}(t) = x(t)\;\vec{e}_{x} + y(t)\;\vec{e}_{y}\), we obtain, for example,Footnote 14

If this expression is negative, the strict Lyapunov function will decrease while the particle continues on its path. Since the minimum of the Lyapunov function is obtained for \(\vec{r}_{\mathrm{F}}\), it is clear that the particle will move toward the fixed point.

Similar reasoning applies for a Lyapunov function that is not strict. In this case, the particle cannot move away from the fixed point, because the Lyapunov function does not increase. However, it will not necessarily get closer to the fixed point.

2.8.6 Flows of Linear Autonomous Systems

Having shown above that a linear autonomous system possesses a global flow, we shall now compute this flow. If an autonomous system of order n is linear, we may describe it by

with

where A is a quadratic n × n matrix with real constant elements. The ansatz

leads to

or

For nontrivial solutions \(\vec{w}\neq 0\), the condition

is necessary, which determines the eigenvaluesλ. For the sake of simplicity, we now assume that all n eigenvalues are distinct and that there is one eigenvector belonging to each eigenvalue (A is diagonalizable in this case). The overall solution of the homogeneous system of ODEs may then be written in the form

where \(\vec{w}_{k}\) denotes the eigenvector that belongs to the eigenvalue λ = λk and where the Ck are constants. For the initial condition at t = 0, we therefore have

According to Eq. (2.95), the solution is obviously asymptotically stable if and only if

holds for all \(k \in \{ 1,2,\ldots,n\}\), since only then does

hold for arbitrary initial conditions. In this case, \(\vec{r} = 0\) is an asymptotically stable fixed point.

Now we raise the question whether further fixed points exist. This is the case for

with

i.e., only for

In Sect. 2.8.8, we will see that this is the condition for a degenerate, i.e., nonisolated, fixed point (see Definition 2.6, p. 59).

Let us now determine a map that transforms an initial value \(\vec{r}_{0}\) into a vector \(\vec{r}(t)\) that satisfies the linear autonomous system of ODEs

The overall solution

with eigenvectors

may, due to

be written as the matrix equation

We define

(matrix of the eigenvectors),

and

Hence we have

For t = 0, we obtain

Due to

the definition

makes sense. Finally, we obtain

Using the matrix exponential function

one also writes

This equation obviously determines the global flow (Guckenheimer [33, Eqn. (1.1.9), p. 9]) if the following definition is used:

Definition 2.11.

A (global) flow is a continuous map \(\Phi: \mathbb{R} \times D \rightarrow D\), which transforms each initial value \(\vec{r}(0) =\vec{ r}_{0} \in D\) (\(D \subset \mathbb{R}^{n}\) open) into a vector \(\vec{r}(t)\) (\(t \in \mathbb{R}\)) satisfying the following conditions:

Here we have defined \(\Phi _{t}(\vec{r}):= \Phi (t,\vec{r})\).

(Cf. Wiggins [38, Proposition 7.4.3, p. 93], Wirsching [31, Definition 8.6], Amann [39, p. 123/124].)

The interpretation of this definition is simple: If no time passes, one remains at the same point. Instead of moving from a first point to a second one in the time span t2 and then from this second one to a third one in a time span t1, one may go directly from the first to the third in the time span t1 + t2.

Remark.

-

A flow (also called a phase flow) is called a global flow if it is defined for all \(t \in \mathbb{R}\) (as in Definition 2.11), a semiflow if it is defined for all \(t \in \mathbb{R}^{+}\), and a local flow if it is defined for t ∈ I (open interval I with 0 ∈ I).

-

For semiflows and local flows, Definition 2.11 has to be modified.

-

In the modern mathematical literature, a dynamical system is defined as a flow. In our introduction, however, the dynamical system was initially described by an ODE, and the corresponding velocity vector field induced the flow.

Final remark: If the matrix A does not possess n linearly independent eigenvectors, then no diagonalization is possible, in which this case generalized eigenvectors may be used (cf. Guckenheimer [33, p. 9]). These are defined by

and may be used to transform any quadratic matrix A into Jordan canonical form (cf. Burg et al. [40, vol. II, p. 293] or Perko [30, Sect. 1.8]). As the formula shows, eigenvectors are also generalized eigenvectors (for p = 1).

2.8.7 Topological Orbit Equivalence

In this section, we shall define what it means to say that two vector fields are topologically orbit equivalent. Of course, the term topological orbit equivalence will include the case that the two vector fields can be transformed into each other by a simple rotation.

In order to simplify the situation even further, we assume that the two vector fields are given by

and

where A and B are quadratic matrices.

If one vector field can be obtained as a result of rotating the other one, there must be a rotation matrix M such that

holds. Now \(\vec{v}_{2}\) depends on \(\vec{r}_{1}\). In order to make \(\vec{v}_{2}\) dependent on \(\vec{r}_{2}\), we must rotate the coordinates in the same way as the vector field (see Fig. 2.5):

Hence, we obtain

Since M is invertible as a rotation matrix, the matrix

describes the well-known similarity transformation that may also be written in the form

A similarity transformation, however, is usually written in the form

so that we have to define \(\tilde{M} = M^{-1}\) here.

Now we observe that two orbits can be identical even though the corresponding solution curves are parameterized in a different way.

A flow for Eq. (2.100) will be denoted by

and a flow for Eq. (2.101) by

In order to transform the orbits of these flows into each other, the starting points must be mapped first:

Our requirement that different parameterizations be allowed for both solution curves may be translated as follows: For every time t1, there is a time t2 such that

and therefore

holds. This formula must be included in the general definition of topological orbit equivalence if rotations are to be allowed as topologically equivalent transformations.

The previous considerations make the following definition transparent:

Definition 2.12.

Two C1 vector fields \(\vec{v}_{1}(\vec{r}_{1})\) and \(\vec{v}_{2}(\vec{r}_{2})\) are called topologically orbit equivalentFootnote 15 if a homeomorphism h exists such that for every pair \(\vec{r}_{10}\), t1, there exists t2 such that

holds. Here, the orientation of the orbits must be preserved. If in addition, the parameterization by time is preserved, the vector fields are called topologically conjugate. In this definition, \(\Phi _{t}^{\vec{v}}\) denotes the flow that is induced by the vector field \(\vec{v}\).

(Cf. Sastry [36, Definition 7.18, p. 303], Wiggins [38, Definition 19.12.1, p. 346], Guckenheimer [33, p. 38, Definition 1.7.3].)

Remark.

A homeomorphism is a continuous map whose inverse map exists and is also continuous. The fact that a homeomorphism is used as a generalization of the rotation matrix, used as an example above, leads to the following features:

-

Not only linear maps are allowed, but also nonlinear ones.

-

The requirement that the map be continuous guarantees that neighborhoods of a point are mapped to neighborhoods of its image point. Therefore, the orbits are deformed but not torn apart. Two examples for topological orbit equivalence are shown in Fig. 2.6.

The fact that the validity of Eq. (2.104) is required for each initial point ensures that all orbits are transformed into each other. Hence, the entire phase portraits will be equivalent.

The preservation of the orientation may be checked by means of a continuously differentiable function \(t_{2}(\vec{r}_{10},t_{1})\) with \(\frac{\partial t_{2}} {\partial t_{1}} > 0\) (cf. Perko [30, Sect. 3.1, Remark 2, p. 183/184]).

Please note that different authors use slightly different definitions. In cases of doubt, one should therefore check the relevant definitions thoroughly.

Let us now consider the case that a vector field \(\vec{v}(\vec{r}) = A \cdot \vec{ r}\) is given by a real n × n matrix A and that we want to check whether this vector field is topologically orbit equivalent to a simpler vector field. Often, diagonalization is possible. This case will be discussed in the following.

Remark.

-

In case diagonalization is not possible, it is always possible to transform the matrix into Jordan canonical form.

-

Diagonalization of an n × n matrix is possible if and only if for each eigenvalue, the algebraic multiplicity (multiplicity of the zeros of the characteristic polynomial) equals the geometric multiplicity (number of linearly independent eigenvectors).

-

Diagonalization of an n × n matrix is possible if and only if it possesses n linearly independent eigenvectors.

-

Diagonalization is possible for every symmetric matrix with real elements.

Consider a matrix A for which diagonalization is possible. We will show now that the diagonal matrixFootnote 16

does in fact lead to a topologically orbit equivalent vector field. Here, \(\tilde{M} = M^{-1} = X_{A}(0)\) denotes the matrix of the n eigenvectors of A. These are linearly independent, since diagonalization of A is possible (cf. Burg/Haf/Wille [40, vol. II, p. 280, Theorem 3.52]).

According to Eqs. (2.98) and (2.99), the flows are given by

for A and by

for B. In our case, the homeomorphism h is given by the matrix M. We therefore obtain

On the other hand,

holds. We see that these expressions are equal for every initial vector \(\vec{r}_{10}\) if

or

is valid. Due to Eq. (2.97), we know that

and

hold, so that the equation

has to be verified. Since B is a diagonal matrix, the Cartesian unit vectors are eigenvectors, so that

is valid. Therefore, we have only to check whether

is true. Since the eigenvalues of A and B are equal due to diagonalization, we obtain

Here we had only to set t2 = t1. We have shown that diagonalization leads to topologically orbit equivalent vector fields.

2.8.8 Classification of Fixed Points of an Autonomous Linear System of Second Order

The considerations presented above indicate that a similarity transformation

always leads to topologically orbit equivalent vector fields. Every similarity transformation leaves the eigenvalues unchanged (cf. Burg/Haf/Wille [40, vol. II, p. 272, 3.17]). This leads us to the assumption that the eigenvalues of a matrix at least influence the topological properties of the related vector field. Therefore, the eigenvalues are now used to characterize the fixed points.

We calculate the eigenvalues of a two-dimensional matrix

with constant real elements. This leads to

Hence, we obtain

with

We now try to distinguish as many cases as possible:

-

1.

Both eigenvalues are real (C ≥ 0).

-

(a)

Both are positive

-

(i)

λ1 > λ2 > 0

-

(ii)

λ1 = λ2 > 0

-

(i)

-

(b)

Both are negative

-

(i)

λ1 < λ2 < 0

-

(ii)

λ1 = λ2 < 0

-

(i)

-

(c)

One is positive, one is negative: λ1λ2 < 0

-

(d)

One equals 0 (λ1 = 0):

-

(i)

λ2 > 0

-

(ii)

λ2 < 0

-

(iii)

λ2 = 0

-

(i)

-

(a)

-

2.

Imaginary eigenvalues: C < 0, B = 0, \(\lambda _{2} = -\lambda _{1}\)

-

3.

Complex eigenvalues: C < 0, λ1 = λ2∗

-

(a)

B = Re{λ} < 0

-

(b)

B = Re{λ} > 0

-

(a)

Hence we have found 11 distinct cases. If one eigenvalue is zero, then Eq. (2.106) leads to

As we will show now, this means in general that one row is a multiple of the other row, which contains the special case that one or both rows are zero.

If we now assume that the second row is a multiple of the first one,

we obtain the following condition for the eigenvalues:

This shows that at least one eigenvalue is zero. The same can be shown for the case that the first equation is a multiple of the second one. Since one eigenvalue is 0, the relation

leads to

In conclusion, the following statements for our two-dimensional case are equivalent:

-

det A = 0.

-

One row of A is a multiple of the other row.

-

At least one eigenvalue is 0.

The following general theorem holds:

Theorem 2.13.

The following statements for a quadratic matrix A are equivalent:

-

The quadratic matrix A is regular.

-

All row vectors (or column vectors) of A are linearly independent.

-

det A ≠ 0.

-

All eigenvalues of A are nonzero.

-

A is invertible.

In our two-dimensional case, the statement that for det A = 0, one row is a multiple of the other one means that the equation \(\vec{v}(\vec{r}) = A \cdot \vec{ r} = 0\) is satisfied along a line through the origin or even everywhere. Hence, we have an infinite number of fixed points that are not separated from each other. In this case, we speak of degenerate fixed points (see Definition 2.6 on p. 59). This case will henceforth be excludedFootnote 17 (case 1.d), so that the number of relevant cases is reduced from 11 to 8. According to the behavior of the vector field in the vicinity of the fixed point \(\vec{r}_{\mathrm{F}} = 0\), the fixed points are named as follows:

-

1.

Both eigenvalues are real (C ≥ 0).

-

(a)

Both positive

-

(i)

λ1 > λ2 > 0: unstable node

-

(ii)

λ1 = λ2 > 0: unstable improper node or unstable star

-

(i)

-

(b)

Both negative

-

(i)

λ1 < λ2 < 0: stable node

-

(ii)

λ1 = λ2 < 0: stable improper node or stable star

-

(i)

-

(c)

One positive, one negative: λ1λ2 < 0: saddle point

-

(a)

-

2.

Imaginary eigenvalues: C < 0, B = 0, \(\lambda _{2} = -\lambda _{1}\): center or elliptic fixed point

-

3.

Complex eigenvalues: C < 0, λ1 = λ2∗

-

(a)

Re{λ} < 0: stable spiral point or stable focus

-

(b)

Re{λ} > 0: unstable spiral point or unstable focus

-

(a)

The classification of fixed points is summarized in Table 2.4 on p. 74 (cf. [29, 42, 43]). As the table shows, not only the eigenvalues can be used to characterize the fixed points. In the column “Topology”, the numbers in parentheses denote the quantity of eigenvalues with positive real part. The column “Topology” also contains the so-called index in brackets. In order to calculate the index, one considers a closed path around the fixed point with an orientation that is mathematically positive. Now one checks how many revolutions the vectors of the vector field perform while “walking” on the path. If, e.g., the vectors of the vector field also perform one revolution in mathematically positive orientation, the index is + 1. If the vector field rotates in opposite direction, the index is − 1.

Figures 2.7, 2.8, 2.9, 2.10, 2.11, and 2.12 on p. 75 and 76 show how orbits in the vicinity of the fixed point look in principle for each type of fixed point. In case the fixed point is a stable node, star, or spiral point, the orientation of the solution curves will be towards the fixed point in the middle; in case of an unstable node, star, or spiral point, all solution curves will be directed outwards. Each picture is just an example; in the specific case under consideration the orbits may of course be deformed significantly.

2.8.9 Nonlinear Systems

Consider the nonlinear autonomous system

with the initial condition

where \(\vec{v}(\vec{r}) \in C^{2}\) has a fixed point \(\vec{r}_{\mathrm{F}}\) with

As the results summarized in this section show, the linearization

of the system (2.109), where \(A = D\vec{v}(\vec{r}_{\mathrm{F}})\) is the Jacobian matrixFootnote 18 at \(\vec{r} =\vec{ r}_{\mathrm{F}}\), is a powerful tool for analyzing a nonlinear system in the vicinity of its fixed points. Please note that \(\vec{r} = 0\) in Eq. (2.110) corresponds to \(\vec{r} =\vec{ r}_{\mathrm{F}}\) in Eq. (2.109), i.e., the fixed point of the nonlinear system was shifted to the origin of the linearized system.

Theorem 2.14.

Consider the nonlinear system (2.109) with the linearization (2.110) at a fixed point\(\vec{r}_{\mathrm{F}}\). If A is nonsingular, then the fixed point\(\vec{r}_{\mathrm{F}}\)is isolated (i.e., nondegenerate).

(See Sastry [36, Proposition 1.5, p. 13], Perko [30, Definition 2, p. 173].)

If one or more eigenvalues of the Jacobian matrix are zero, the fixed point is a degenerate fixed point. This is the generalization of the linear case.

Definition 2.15.

A fixed point is called a hyperbolic fixed point if no eigenvalue of the Jacobian matrix has zero real part.

Theorem 2.16.

If the fixed point \(\vec{r}_{\mathrm{F}}\) is a hyperbolic fixed point, then there exist two neighborhoods U of \(\vec{r}_{\mathrm{F}}\) and V of \(\vec{r} = 0\) and a homeomorphism h: U → V, such that h transforms the orbits of Eq. (2.109) into orbits of

Orientation and parameterization by time are preserved.

(Cf. Guckenheimer [33, Theorem 1.3.1 (Hartman-Grobman), p. 13], Perko [30, Theorem Sect. 2.8, p. 120], and Bronstein [44, Sect. 11.3.2].)

In other words, we may state the following theorem.

Theorem 2.17 (Hartman–Grobman).

Let \(\vec{r}_{\mathrm{F}}\) be a hyperbolic fixed point. Then the nonlinear problem

and the linearized problem

with

are topologically conjugate in a neighborhood of \(\vec{r}_{\mathrm{F}}\) .

(See Wiggins [38, Theorem 19.12.6, p. 350].)

If the fixed point is not hyperbolic, i.e., a center (elliptic fixed point), then the smallest nonlinearities are sufficient to create a stable or an unstable spiral point. This is why the theorem refers to hyperbolic fixed points only.

If the real parts of all eigenvalues of \(D\vec{v}(\vec{r}_{\mathrm{F}})\) are negative, then \(\vec{r}_{\mathrm{F}}\) is asymptotically stable. If the real part of at least one eigenvalue is positive, then \(\vec{r}_{\mathrm{F}}\) is unstable:

Theorem 2.18.

Let \(D \subset \mathbb{R}^{n}\) be an open set, \(\vec{v}(\vec{r})\)continuously differentiable on D, and\(\vec{r}_{\mathrm{F}}\)a fixed point of

If the real parts of all eigenvalues of\(D\vec{v}(\vec{r}_{\mathrm{F}})\)are negative, then\(\vec{r}_{\mathrm{F}}\)is asymptotically stable. If\(\vec{r}_{\mathrm{F}}\)is stable, then no eigenvalue has positive real part.

(Cf. Bronstein [44, Sect. 11.3.1], Perko [30, Theorem 2, p. 130].)

A saddle point has the special property that two trajectories exist that approach the saddle point for t → ∞, whereas two different trajectories exist that approach the saddle point for t → −∞ (cf. [30, Sect. 2.10, Definition 5]). These four trajectories define a separatrix. Loosely speaking, a separatrix is a trajectory that “meets” the saddle point.

2.8.10 Characteristic Equation

Consider the autonomous linear homogeneous nth-order ordinary differential equation

As usual (see Sect. 2.8.1), we define the vector \(\vec{r} = (x_{1},x_{2},\ldots,x_{n})^{\mathrm{T}}\) by

which leads to

in order to obtain the standard form

This may be written as

if the following n × n matrix is defined:

According to Sect. 2.8.6, Eq. (2.96), we know that asymptotic stability is reached if all eigenvalues of this system matrix have negative real part. Therefore, we now describe how to find the eigenvalues based on the requirement that the determinant

equal zero. Let us begin with n = 2 as an example:

For n = 3, we obtain

These two results lead us to the assumption that

holds in general. In Appendix A.11, it is shown that this is indeed true. The requirement that the polynomial in Eq. (2.114) equal zero is called the characteristic equation of the ODE (2.111). One easily sees that the characteristic equation is also obtained if the Laplace transform is applied to the original ODE (2.111):

The matrix A is called the Frobenius companion matrix of the polynomial. Please note that instead of finding the zeros (roots) of the polynomial, one may also determine the eigenvalues of the companion matrix A and vice versa.

Hence, asymptotic stability of the dynamical system defined by the ODE (2.111) is equivalently shown

-

if all zeros of the characteristic equation have negative real part.

-

if all eigenvalues of the system matrix have negative real part.

In case of asymptotic stability, one also calls the system matrix a strictly or negative stable matrix (cf. [45, Definition 2.4.2]) or a Hurwitz matrix.

2.9 Continuity Equation

Consider a particle density ρ in space and a velocity field \(\vec{v}\) that moves the particles. We will now calculate how the particle density in a fixed volume V changes due to the velocity field.

For this purpose, we consider a small volume element \(\Delta V\) at the surface of the three-dimensional domain V. As shown in Fig. 2.13, this contains in total

particles. During the time interval \(\Delta t\), this quantity of

particles will leave the domain V, where \(v_{n} = \Delta h/\Delta t\) denotes the normal component of the velocity vector \(\vec{v}\) with respect to the surface of the domain V. Hence, we have

As a limit for \(\Delta t \rightarrow 0\), one therefore obtains

According to Gauss’s theorem,

one concludes by setting \(\vec{V } =\rho \;\vec{ v}\):

Since this equation must be valid for arbitrary choices of the domain V, one obtains

This is the continuity equation, for which we only assumed that no particles disappear and no particles are generated. Instead of the particle density, one could have considered different densities, such as the mass density, assuming mass conservation in that case. If we take the charge density as an example, charge conservation leads to

which we already know as Eq. (2.46) and where \(\vec{J} =\rho _{q}\vec{v}\) is the convection current density.

Remark.

If \(\dot{\rho }_{q} = 0\) holds, then the density will remain constant at every location; one obtains a stationary flow with

This equation is known in electromagnetism for steady currents.

2.10 Area Preservation in Phase Space

In this section, we discuss how an area or a volume that is defined by the contained particles is modified when the particles are moving.

2.10.1 Velocity Vector Fields

Consider an arbitrary domain A in \(\mathbb{R}^{2}\) at time t. Particles located inside the domain and on its boundary at time t will move a bit farther during the time span \(\Delta t\). This movement is determined by the velocity field \(\vec{v}(x,y)\).

Let us define a parameterization of the domain such that x(α, β) and y(α, β) are given depending on the parameters α and β. This leads to the area