Abstract

The first purpose of this chapter is to provide a deep intuition of formal notions like the metric tensor, the Christoffel symbols, the Riemann tensor, vector fields, the covariant derivative, and so on: an intuition based on the consideration of surfaces in the three dimensional real space. We switch next to the study of geodesics, geodesic curvature and systems of geodesic coordinates. As an example of a Riemann surface, we develop the study of the Poincaré half plane and prove that it is a model of non-Euclidean geometry. We conclude with the Gauss–Codazzi–Mainardi equations and the related question of the embeddability of Riemann surfaces in the three dimensional real space.

As the title indicates, the purpose of this chapter is not to develop Riemannian geometry as such. The idea is to first revisit the theory of surfaces in \(\mathbb{R}^{3}\), as studied in Chap. 5, adopting a different point of view and different notation. One of the objectives of this somewhat unusual exercise, besides proving some important new theorems, is to provide a good intuition for the basic notions and techniques involved in general Riemannian geometry.

The idea of Riemannian geometry is to consider a surface as a universe in itself, not as a part of a “bigger universe”, for example as a part of \(\mathbb{R}^{3}\). Thus Riemannian geometry is interested in the study of those properties of the surface which can be established by measures performed directly “within” the surface, without any reference to the possible surrounding space. The “key” to performing measures on the surface will be the consideration of its first fundamental form (see Sect. 5.4), called the metric tensor in Riemannian geometry.

In Chap. 5, our main concern regarding surfaces in \(\mathbb {R}^{3}\) has been the study of their curvature. The normal curvature of a given curve on the surface is the orthogonal projection of its “curvature” vector on the normal vector to the surface (see Sect. 5.8). This normal curvature cannot be determined by measures performed on the surface, but the geodesic curvature can: the geodesic curvature is “the other component” of the curvature vector, that is, the length of the orthogonal projection of the curvature vector on the tangent plane to the surface. When you have the impression of “moving without turning” on a given surface—like when you follow a great circle on the surface of the Earth—you are in fact following a curve with zero geodesic curvature. Such a curve is called a geodesic: we pay special attention to the study of these geodesics.

In Sect. 5.16 we also studied the Gaussian curvature, which provides less precise information than the normal curvature. But in Riemannian geometry—where the normal curvature no longer makes sense—the Gaussian curvature assumes its full importance: the Gaussian curvature can be determined by measures performed on the surface itself. This is the famous Theorema Egregium of Gauss.

We characterize—in terms of the coefficients E, F, G, L, M, N of their two fundamental quadratic forms—those Riemann surfaces which arise from a surface embedded in \(\mathbb{R}^{3}\), as studied in Chap. 5. As an example of a Riemann surface not obtained from a surface in \(\mathbb{R}^{3}\), we describe the so-called Poincaré half plane, which is a model of non-Euclidean geometry.

We conclude with a first discussion of what a tensor is and a precise definition of a Riemann surface. This last definition refers explicitly to topological notions: the reader not familiar with them is invited to consult Appendix A.

Let us close this introduction with an observation which, in this chapter, will play an important role in supporting our intuition. Consider a surface represented by

$$f\colon U \longrightarrow\mathbb{R}^3,\qquad(u,v)\mapsto f(u,v) $$

and suppose that f is injective, not just locally injective. Consider a point P=f(u,v), for (u,v)∈U. The injectivity of f allows us to speak equivalently of the point P of the surface or the point with parameters (u,v) on the surface. In this case, the points of the open subset U describe precisely the points of the surface: f is a bijection between these two sets of points.

6.1 What Is Riemannian Geometry?

Turning through the pages of Chap. 5, we find many pictures of surfaces, as if we had taken photographs of these surfaces. But of course when you take a photograph of a surface, you do not put the lens of the camera on the surface itself: you stay outside the surface, sufficiently far, at some point from which you have a good view of the shape of the surface. Doing this, you study your surface from the outside, taking full advantage of the fact that the surface is embedded in \(\mathbb{R}^{3}\) and that you are able to move in \(\mathbb{R}^{3}\), outside the surface.

Let us proceed to a completely different example. We are three dimensional beings living in a three-dimensional world. We are interested in studying the world in which we are living. Of course if we are interested in only studying our solar system, we can take \(\mathbb{R}^{3}\) as a reliable mathematical model of our universe and use the rules of classical mechanics to study the trajectories of the planets. But we know that if we are interested in cosmology and the theory of the expansion of the universe, the very “static” model \(\mathbb{R}^{3}\) is no longer appropriate to the question. Physicists perform a lot of experiments to study our universe: they use large telescopes to capture very remote information. But these telescopes are inside our universe and take pictures of things that are inside our universe. This time—we have no other choice—we study our universe from the inside. From this study inside the universe itself physicists try to determine—for example—the possible curvature of our universe.

The topic of Riemannian geometry is precisely this: the study of a universe from the inside, from measures taken inside that universe. In this book we shall focus on Riemann surfaces, that is, two dimensional universes. Thus we imagine that we are very clever two-dimensional beings, living in a two-dimensional universe and knowing a lot of geometry. We do our best to study our universe from the inside, since of course there is no way for us to escape and look at it from the outside.

Our first challenge is to mathematically model this idea. For that, we shall rely on our study of surfaces embedded in \(\mathbb {R}^{3}\) in order to guess what it can possibly mean to study these surfaces from the inside. The above discussion suggests a first answer:

A Riemannian property of a surface in \(\mathbb{R}^{3}\) is a property which can be established by measures performed on the support of the surface, without any reference to its parametric representation.

Of course a two-dimensional being living on a surface of \(\mathbb {R}^{3}\) is able to measure the length of an arc of a curve on this surface, or the angle between two curves on the surface. These operations can trivially be done inside the surface, without any need to escape from the surface.

But given a regular curve

on a regular surface

we have seen (see Proposition 5.4.3) how to calculate its length:

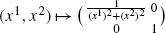

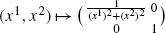

where the three functions E(u,v), F(u,v), G(u,v) are the coefficients of the first fundamental form of the surface. The same matrix also allows us to calculate the angle between two curves on the surface (see Proposition 5.4.4). The matrix

$$\begin{pmatrix} E & F\\ F & G \end{pmatrix} $$

thus allows us to compute lengths and angles on the surface: it is intuitively the “mathematical measuring tape” on the surface.

However, we must stress the following: knowledge of this “mathematical measuring tape” is (somehow) equivalent to being able to perform measures inside the surface. That is, only from measures performed inside the surface, you can infer the values of the three functions E(u,v), F(u,v), G(u,v). In this statement, the “somehow” restriction is the fact that to reach this goal, you have to perform infinitely many measures, because there are infinitely many points on the surface.

Indeed consider the curve \(v=v_{0}\)

$$u\mapsto f(u,v_0) $$

for some fixed value \(v_{0}\). The length on this curve from an origin \(u_{0}\) to the point with parameter u is thus

$$\ell(u)=\int_{u_0}^{u}\sqrt{E(t,v_0)}\,dt. $$

The two dimensional being living on the surface can thus measure the value ℓ(u) for any value of the parameter u, and so “somehow” determine the function ℓ(u). If he makes the additional effort to attend a first calculus course, he will be able to compute the derivative

$$\ell'(u)=\sqrt{E(u,v_0)} $$

of that function and thus eventually get the value of \(E(u,v_{0})\). An analogous argument holds for \(G(u_{0},v)\). Notice further that the angle θ between the two curves \(u=u_{0}\) and \(v=v_{0}\) is given by

$$\cos\theta=\frac{F(u_0,v_0)}{\sqrt{E(u_0,v_0)}\,\sqrt{G(u_0,v_0)}}. $$

Since \(E(u_{0},v_{0})\) and \(G(u_{0},v_{0})\) are already known, the two-dimensional being gets the value of \(F(u_{0},v_{0})\) from the measure of the angle θ.
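The measuring procedure just described can be imitated numerically. The following is only a minimal sketch under stated assumptions: the test surface (a unit sphere with u as longitude and v as latitude) and the helper names `sphere`, `measured_length` and `estimate_E` are hypothetical choices made for illustration, not taken from the text. The "measurement" is simulated by walking along the curve \(v=v_{0}\) in small steps; the coefficient E is then recovered as the square of the derivative of the length function.

```python
import math

def sphere(u, v):
    """Hypothetical test surface: unit sphere, u = longitude, v = latitude."""
    return (math.cos(v) * math.cos(u), math.cos(v) * math.sin(u), math.sin(v))

def measured_length(u0, u, v0, steps=2000):
    """Length of the curve v = v0 from u0 to u, 'measured' by walking along
    the curve in small steps and adding up the chord lengths."""
    total = 0.0
    prev = sphere(u0, v0)
    for k in range(1, steps + 1):
        cur = sphere(u0 + (u - u0) * k / steps, v0)
        total += math.dist(prev, cur)
        prev = cur
    return total

def estimate_E(u, v0, u0=0.0, h=1e-3):
    """E(u, v0) estimated as the square of the derivative of the length
    function, the derivative being taken by a central difference."""
    dl = (measured_length(u0, u + h, v0) - measured_length(u0, u - h, v0)) / (2 * h)
    return dl * dl

# For this sphere the first fundamental form has E = cos(v)^2.
E_est = estimate_E(0.7, math.pi / 3)
```

With these choices the estimate should agree with \(\cos^{2}(\pi/3)\) up to the discretization error of the finite differences.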

All this suggests reformulating the above statement as follows:

The Riemannian geometry of a surface

$$f\colon U \longrightarrow\mathbb{R}^3,\qquad(u,v)\mapsto f(u,v) $$embedded in \(\mathbb{R}^{3}\) is the study of those properties of the surface which can be inferred from the sole knowledge of the three functions

$$E,F,G\colon U \longrightarrow\mathbb{R}. $$

Now working with three symbols E, F, G and two parameters u, v remains technically quite tractable. But imagine that you are no longer interested in “two dimensional universes” (surfaces), but in “three dimensional universes”, such as the universe in which we are living! Instead of two parameters, you now have to handle three parameters; analogously, as we shall see in Definition 6.17.6, the corresponding “mathematical measuring tape” will become a 3×3-matrix. If you are interested—for example—in studying relativity, you will have to handle a fourth dimension, “time”. Thus four parameters and a 4×4-matrix. In such higher dimensions, one has to use “notation with indices” in order to cope with all the quantities involved! We shall do this in the case of surfaces and introduce the classical notation of Riemannian geometry.

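The Riemannian notation for the first fundamental form is:

$$\begin{pmatrix} g_{11} & g_{12}\\ g_{21} & g_{22} \end{pmatrix} = \begin{pmatrix} E & F\\ F & G \end{pmatrix}. $$

Having changed the notation, we shall also change the terminology.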

Definition 6.1.1

Consider a regular parametric representation of a surface

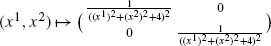

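The matrix of functions

$$(g_{ij})_{1\leq i,j\leq 2} $$

defined by

$$g_{ij}\bigl(x^1,x^2\bigr)=\biggl(\frac{\partial f}{\partial x^i}\bigl(x^1,x^2\bigr) \bigg\vert \frac{\partial f}{\partial x^j}\bigl(x^1,x^2\bigr)\biggr) $$

is called the metric tensor of the surface.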

The “magic word” tensor suddenly appears! The reason for such a terminology will be “explained” in Sect. 6.12. For the time being, this is just a point of terminology which does not conceal any hidden properties and so formally, does not require any justification.

Using symbols like \(g_{ij}\) to indicate the various elements of the “tensor”, which after all is just a matrix, sounds perfectly reasonable, as does using symbols \((x_{1},x_{2})\) to indicate the two parameters. The use of upper indices \(x^{1}\) and \(x^{2}\) might seem like an invitation for confusion at this point, but we shall come back to this in Sect. 6.12. For the time being, we just decide to use the unusual notation \((x^{1},x^{2})\).

Even if we do not yet know the reason for using these “upper indices”, let us at least be consistent. Given a curve

$$c\colon\,]a,b[\,\longrightarrow U $$

on the surface, we should now write

$$c(t)=\bigl(c^1(t),c^2(t)\bigr) $$

for the two components of the function c.

We have thus described the “challenge” of Riemannian geometry, as far as surfaces embedded in \(\mathbb{R}^{3}\) are concerned, and we have introduced the classical notation and terminology of Riemannian geometry. But to help us guess which properties have a good chance to be Riemannian, we shall add a “slogan”.

Consider again our friendly and clever two-dimensional being living on the surface. This two-dimensional being should have full knowledge of what happens “at the level of the surface” but no knowledge at all of what happens “outside the surface”. From a quantity that lives in the “outside world \(\mathbb{R}^{3}\)”, the two-dimensional being should only see its “shadow on the surface”, its “component at the level of the surface”, that is, its “orthogonal projection at the level of the surface”. Let us write this quantity of \(\mathbb{R}^{3}\) in terms of the basis comprising the two partial derivatives of the parametric representation and the normal vector to the surface. What happens along the normal to the surface, that is, what projects as “zero” on the surface, is the part of the information that the two-dimensional being cannot possibly access. So the rest of the information, that is, the components along the partial derivatives, should probably be accessible to our two-dimensional being. Let us take this as a slogan for discovering Riemannian properties.

Slogan:

The component of a geometric quantity along the normal vector to the surface is not Riemannian, but its components along the tangent plane should be Riemannian.

This is of course just a “slogan”, not a precise mathematical statement!

6.2 The Metric Tensor

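Let us first recall (Proposition 5.5.4) that at each point of a regular surface

$$f\colon U \longrightarrow\mathbb{R}^3,\qquad\bigl(x^1,x^2\bigr)\mapsto f\bigl(x^1,x^2\bigr) $$

the matrix

$$\begin{pmatrix} g_{11}(x^1_0,x^2_0) & g_{12}(x^1_0,x^2_0)\\ g_{21}(x^1_0,x^2_0) & g_{22}(x^1_0,x^2_0) \end{pmatrix} $$

is that of the scalar product in the tangent plane at \(f(x^{1}_{0},x^{2}_{0})\), with respect to the affine basis

$$\biggl(\frac{\partial f}{\partial x^1}\bigl(x^1_0,x^2_0\bigr),\ \frac{\partial f}{\partial x^2}\bigl(x^1_0,x^2_0\bigr)\biggr). $$

This matrix is thus symmetric, definite and positive (Proposition 5.4.6).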

We are now ready to give a first (restricted) definition of a Riemann surface, a definition which no longer refers to any parametric representation:

Definition 6.2.1

A Riemann patch of class \(\mathcal{C}^{k}\) consists of:

1. a connected open subset \(U\subseteq\mathbb{R}^{2}\);

2. four functions of class \(\mathcal{C}^{k}\)

$$g_{ij}\colon U \longrightarrow\mathbb{R},\qquad \bigl(x^1,x^2 \bigr)\mapsto g_{ij}\bigl(x^1,x^2\bigr),\quad 1 \leq i,j\leq2 $$

so that at each point \((x^{1},x^{2})\in U\), the matrix

$$\begin{pmatrix} g_{11}(x^1,x^2) & g_{12}(x^1,x^2)\\ g_{21}(x^1,x^2) & g_{22}(x^1,x^2) \end{pmatrix} $$

is symmetric definite positive. The matrix of functions

$$(g_{ij})_{1\leq i,j\leq 2} $$

is called the metric tensor of the Riemann patch.

The observant reader will have noticed that if we start with a regular parametric representation f of class \(\mathcal{C}^{k}\) of a surface in \(\mathbb{R}^{3}\), the corresponding metric tensor as in Definition 6.2.1 is only of class \(\mathcal{C}^{k-1}\). This is the reason why some authors declare a Riemann patch to be of class \(\mathcal{C}^{k+1}\) when the functions \(g_{ij}\) are of class \(\mathcal {C}^{k}\). This is just a matter of taste!

The term Riemann patch instead of Riemann surface underlines the fact that in this chapter, we shall again essentially work “locally”. The more general notion of Riemann surface is investigated in Sect. 6.17. We can now express with full precision the concern of Riemannian geometry:

Local Riemannian geometry is the study of the properties of a Riemann patch.

Of course what has been explained above suggests that we should think of the metric tensor intuitively as being that of a hypothetical surface in \(\mathbb {R}^{3}\). This can indeed support our intuition but this is perhaps not the best way to look at a Riemann patch.

Let us go back to the example of the sphere (or part of it) in terms of the “longitude” and “latitude”, as in Example 5.1.6:

Assume that we have restricted our attention to an open subset U on which f is injective. Think of the sphere as being the Earth. The open subset \(U\subseteq \mathbb{R}^{2}\) is then the geographical map of the corresponding piece of the Earth. The two coordinates of a point of \(U\subseteq \mathbb{R}^{2}\) are the longitude and the latitude of the corresponding point of the Earth. But how can you—for example—determine the distance between two points of the Earth, simply by inspecting your map? Certainly not by measuring the distance on the map using your ruler! Indeed on the map, the further one moves away from the equator, the more distorted the distances on the map become. Of course we can determine the longitude and the latitude of the two points on the map and use our knowledge of spherical trigonometry to calculate the corresponding distance on the surface of the Earth. However, to do this, one has to know that the Earth is approximately a sphere in the surrounding universe \(\mathbb{R}^{3}\): an attitude which does not make sense in Riemannian geometry.

What would be better would be to have an elastic ruler which is able to adjust itself to the correct length, depending on where it has been placed on the map. We are in luck, such an elastic ruler exists: it is the metric tensor. The metric tensor is at each point the matrix of a scalar product, but a scalar product which varies from point to point, compensating for the distortion of the map.

It is better to imagine that the map is of some unknown planet, the shape of which is totally unknown to us. From only the longitude and the latitude of points on this planet, as given by the map, we cannot draw any conclusions, since we have no idea of the shape of the planet and thus of the distortion of the map. But we can use the map together with the “elastic ruler” (the metric tensor) to “calculate” from the map the actual distances on the planet (we shall make this precise in Definitions 6.3.2 and 6.3.3). This probably gives a clearer intuitive way to think about Riemann patches.
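The “elastic ruler” can be tried out numerically. The sketch below rests on assumptions that are not in the text: the planet is taken to be a unit sphere, the map coordinates are longitude \(x^{1}\) and latitude \(x^{2}\), and the corresponding metric tensor is then the diagonal matrix with entries \(\cos^{2}x^{2}\) and 1. The function names `metric` and `length` are hypothetical. The length of a curve drawn on the map is approximated by integrating the norm of its velocity for the scalar product given by the metric tensor.

```python
import math

def metric(x1, x2):
    """Assumed example: metric tensor of the unit sphere on a
    longitude/latitude map, as a 2x2 matrix."""
    return ((math.cos(x2) ** 2, 0.0), (0.0, 1.0))

def length(curve, a, b, steps=10000):
    """Midpoint-rule approximation of the integral of sqrt(c'^T g(c) c')."""
    h = (b - a) / steps
    total = 0.0
    for k in range(steps):
        t = a + (k + 0.5) * h
        # central difference for the velocity c'(t) in map coordinates
        p, q = curve(t - h / 2), curve(t + h / 2)
        d1, d2 = (q[0] - p[0]) / h, (q[1] - p[1]) / h
        g = metric(*curve(t))
        speed_sq = g[0][0] * d1 * d1 + 2 * g[0][1] * d1 * d2 + g[1][1] * d2 * d2
        total += math.sqrt(speed_sq) * h
    return total

def parallel(t):
    """The parallel at latitude 60 degrees, parametrized by the longitude t."""
    return (t, math.pi / 3)

on_planet = length(parallel, 0.0, math.pi / 2)  # measured with the elastic ruler
on_map = math.pi / 2                            # measured with an ordinary ruler
```

On the map the arc has length \(\pi/2\), while the elastic ruler gives about \(\pi/4\): at latitude 60° the map stretches east-west distances by a factor of two.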

Let us return to our formal definition of a Riemann patch.

Proposition 6.2.2

Given a Riemann patch as in Definition 6.2.1, the metric tensor is at each point \((x^{1},x^{2})\) an invertible matrix with strictly positive determinant. Moreover at each point, \(g_{11}>0\) and \(g_{22}>0\).

Proof

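By Proposition G.3.4 in [4], Trilogy II, the determinant of the matrix is strictly positive, thus the matrix is invertible. On the other hand

$$g_{11}=\begin{pmatrix}1 & 0\end{pmatrix}\begin{pmatrix} g_{11} & g_{12}\\ g_{21} & g_{22} \end{pmatrix}\begin{pmatrix}1\\ 0\end{pmatrix} $$

thus this quantity is strictly positive by positivity and definiteness. An analogous argument holds for \(g_{22}\). □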

Definition 6.2.3

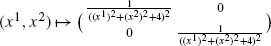

Given a Riemann patch as in Definition 6.2.1, the inverse metric tensor

is at each point \((x^{1},x^{2})\) the inverse of the metric tensor.

The matrix \((g^{ij})_{ij}\) has again received the label tensor and the indices have now been put “upstairs”. Once more, this is for the moment simply a matter of terminology and notation. We will comment further on this in Sect. 6.12.

Proposition 6.2.4

Given a Riemann patch of class \(\mathcal{C}^{k}\), the coefficients \(g^{ij}\) of the inverse metric tensor are still functions of class \(\mathcal{C}^{k}\).

Proof

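From any algebra course, we know that the inverse metric tensor is equal to

$$\begin{pmatrix} g^{11} & g^{12}\\ g^{21} & g^{22} \end{pmatrix} =\frac{1}{g_{11}g_{22}-g_{12}g_{21}} \begin{pmatrix} g_{22} & -g_{12}\\ -g_{21} & g_{11} \end{pmatrix}. $$

This forces the conclusion because the denominator is never zero (Proposition 6.2.2) while by Definition 6.2.1, the functions \(g_{ij}\) are of class \(\mathcal{C}^{k}\). □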
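The closed form of the inverse of a 2×2 matrix translates directly into code. The following is a minimal sketch, with the hypothetical function name `inverse_metric`; it takes the value of the metric tensor at one point, given as a pair of rows, and returns the value of the inverse metric tensor at that point. The positivity check on the determinant mirrors Proposition 6.2.2.

```python
def inverse_metric(g):
    """Inverse of a 2x2 metric tensor g = ((g11, g12), (g21, g22)) at one point."""
    (g11, g12), (g21, g22) = g
    det = g11 * g22 - g12 * g21
    if det <= 0:
        # a symmetric definite positive matrix has a strictly positive determinant
        raise ValueError("not the matrix of a metric tensor")
    return ((g22 / det, -g12 / det), (-g21 / det, g11 / det))

# Example with an arbitrary symmetric definite positive matrix.
g = ((2.0, 1.0), (1.0, 3.0))
g_inv = inverse_metric(g)
```

Multiplying `g` by `g_inv` should give the identity matrix up to rounding.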

Let us conclude this section with a useful point of notation. Since at each point of a Riemann patch, the metric tensor is a 2×2 symmetric definite positive matrix (Definition 6.2.1), it is the matrix of a scalar product in \(\mathbb{R}^{2}\). Let us introduce a notation for this scalar product.

Notation 6.2.5

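Given a Riemann patch

$$g_{ij}\colon U \longrightarrow\mathbb{R},\qquad 1\leq i,j\leq2 $$

and a point \((x^{1},x^{2})\in U\), we shall write

$$(v|w)_{(x^1,x^2)}=\sum_{i,j}v^i\,g_{ij}\bigl(x^1,x^2\bigr)\,w^j $$

for the corresponding scalar product on \(\mathbb{R}^{2}\), and by analogy

$$\|v\|_{(x^1,x^2)}=\sqrt{(v|v)_{(x^1,x^2)}}. $$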

6.3 Curves on a Riemann Patch

The notion of a curve on a Riemann patch is the most obvious one.

Definition 6.3.1

A curve on a Riemann patch

is simply a plane curve in the sense of Sect. 2.1, admitting a parametric representation

The curve on the Riemann patch is regular when the plane curve represented by c is regular.

Using Notation 6.2.5 and in view of Propositions 5.4.3 and 5.4.4, we define:

Definition 6.3.2

Consider a regular curve

on a Riemann patch

Given \(a<k<l<b\), the length of the arc of the curve between the points with parameters k and l is defined as being

$$\int_k^l\bigl\|c'(t)\bigr\|_{c(t)}\,dt. $$

Definition 6.3.3

Consider two regular curves

on a Riemann patch

When these two curves have a common point

the angle between these two curves at their common point is the real number θ∈[0,2π[ such that

Let us now, for a curve in a Riemann patch, investigate the existence of a normal representation.

Definition 6.3.4

Consider a Riemann patch

and a regular curve

in it. The parametric representation c is said to be a normal representation when at each point

$$\bigl\|c'(t)\bigr\|_{c(t)}=1. $$

One should be well aware that in Definition 6.3.4, c is not a normal representation of the ordinary plane curve in U of which it is a parametric representation. In the case of a surface of \(\mathbb{R}^{3}\) represented by f, the condition in Definition 6.3.4 requires in fact that f∘c be a normal representation of the corresponding skew curve.

The existence of normal representations in the case of a Riemann patch can be established just as in the case of plane or skew curves (see Propositions 2.8.2 and 4.2.7).

Proposition 6.3.5

Consider a Riemann patch

and a regular curve

Fixing a point \(t_{0}\in\,]a,b[\), the function

$$\sigma(t)=\int_{t_0}^{t}\bigl\|c'(s)\bigr\|_{c(s)}\,ds $$

is a change of parameter of class \(\mathcal{C}^{1}\) making \(c\circ\sigma^{-1}\) a normal representation.

Proof

The derivative of σ is simply \(\sigma'=\|c'\|_{c}\). This derivative is strictly positive at each point because the matrix \((g_{ij})_{ij}\) is symmetric definite positive (see Definition 6.2.1) and by regularity, the vector c′ is never zero. Therefore σ′, and thus also σ, is still of class \(\mathcal {C}^{1}\), since so are the \(g_{ij}\) and c, as well as the square root function “away from zero”. Since its derivative is always strictly positive, σ is a strictly increasing function. Therefore σ admits an inverse \(\sigma^{-1}\), still of class \(\mathcal {C}^{1}\), whose first derivative is given by

$$\bigl(\sigma^{-1}\bigr)'=\frac{1}{\sigma'\circ\sigma^{-1}}=\frac{1}{\|c'\|_{c}\circ\sigma^{-1}}. $$

Let us write \(\overline{c}=c\circ\sigma^{-1}\). We must prove that \(\|\overline{c}'\|_{\overline{c}}=1\) (see Definition 6.3.4). But

$$\overline{c}'=\bigl(c'\circ\sigma^{-1}\bigr)\cdot\bigl(\sigma^{-1}\bigr)'=\frac{c'\circ\sigma^{-1}}{\|c'\|_{c}\circ\sigma^{-1}} $$

which forces at once the conclusion. □

Of course, we have:

Proposition 6.3.6

Consider a Riemann patch

and a regular curve

given in normal representation. Then for \(a<k<l<b\), the length of the arc of the curve between the points with parameters k and l is

$$l-k. $$

Proof

In Definition 6.3.2, the integral is that of the constant function 1. □

6.4 Vector Fields Along a Curve

Following the “slogan” at the end of Sect. 6.1, what happens in the tangent plane to a surface should be a Riemannian notion. In the theory of skew curves (see Chap. 3) we have considered several vectors attached to each point of a curve: its successive derivatives, the tangent vector, the normal vector, the binormal vector, and so on. When the curve is drawn on a surface, our “slogan” suggests that only the components of these vectors in the tangent plane should be relevant in Riemannian geometry. Therefore we make the following definition.

Definition 6.4.1

Let us consider a curve c on a surface f

both being regular and of class \(\mathcal{C}^{k}\). A vector field of class \(\mathcal{C}^{k}\) along the curve, tangent to the surface, is a function of class \(\mathcal{C}^{k}\)

$$\xi\colon\,]a,b[\,\longrightarrow\mathbb{R}^3 $$

where for each t∈]a,b[, ξ(t) belongs to the direction of the tangent plane to the surface at the point (f∘c)(t) (see Definition 2.4.1 in [4], Trilogy II).

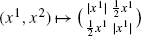

Of course, working as usual in the affine basis of the partial derivatives in each tangent plane, we can re-write (with upper indices)

$$\xi(t)=\xi^1(t)\frac{\partial f}{\partial x^1}\bigl(c(t)\bigr)+\xi^2(t)\frac{\partial f}{\partial x^2}\bigl(c(t)\bigr). $$

The knowledge of the vector field ξ is of course equivalent to the knowledge of its two components \(\xi^{1}\) and \(\xi^{2}\). This suggests at once that being a tangent vector field can easily be made a Riemannian notion:

Definition 6.4.2

Consider a Riemann patch of class \(\mathcal{C}^{k}\)

and a regular curve of class \(\mathcal{C}^{k}\) in it

A vector field ξ of class \(\mathcal{C}^{k}\) along this curve consists of giving two functions of class \(\mathcal{C}^{k}\)

$$\xi^1,\xi^2\colon\,]a,b[\,\longrightarrow\mathbb{R}. $$

Of course in Definition 6.4.2 we intuitively think of the two functions \(\xi^{1}\), \(\xi^{2}\) as being the two components of a vector in the tangent plane (see Definition 6.4.1), even if in the case of a Riemann patch, no such tangent plane is a priori defined. Let us continue with a very natural example:

Example 6.4.3

Consider a Riemann patch of class \(\mathcal{C}^{k}\)

and a regular curve of class \(\mathcal{C}^{k}\)

The vector field τ of class \(\mathcal{C}^{k-1}\) with components

$$\tau^i=\frac{(c^i)'}{\|c'\|_{c}},\qquad i=1,2 $$

is called “the” tangent vector field to the curve; it is such that \(\|\tau\|_{c}=1\). When c is given in normal representation, one has further \(\tau=c'\).

Proof

At each point, the vector field τ is the vector \(c'\in\mathbb {R}^{2}\) divided by its norm for the scalar product \((-|-)_{c}\) (see Notation 6.2.5). The result follows by Definition 6.3.4. □

In Example 6.4.3, it is clear that equivalent parametric representations of the same curve can possibly give corresponding tangent vector fields opposite in sign.

Definition 6.4.4

Consider a Riemann patch

and a regular curve

With Notation 6.2.5:

1. The norm of a vector field ξ along c is the positive real valued function

$$\|\xi\|(t)=\bigl\| \xi(t)\bigr\| _{c(t)}. $$

2. Two vector fields ξ and χ along c are orthogonal when at each point

$$\bigl(\xi(t)\bigm|\chi(t)\bigr)_{c(t)}=0. $$

6.5 The Normal Vector Field to a Curve

We are now interested in transposing, to the context of a Riemann patch, the notion of the normal vector to a curve in the sense of the Frenet trihedron (see Definition 4.4.1).

Proposition 6.5.1

Consider a Riemann patch of class \(\mathcal{C}^{k}\)

and a regular curve of class \(\mathcal{C}^{k}\)

There exists a vector field η of class \(\mathcal{C}^{k-1}\)

along c with the properties:

1. η is orthogonal to the tangent vector field of c;

2. \(\|\eta\|_{c}=1\);

3. the basis (c′,η) has at each point direct orientation (see Sect. 3.2 in [4], Trilogy II).

This vector field is called “the” normal vector field to the curve.

Proof

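To get a vector field μ satisfying the orthogonality condition of the statement, at each point we must have (see Definition 6.4.4)

$$\begin{pmatrix}(c^1)' & (c^2)'\end{pmatrix}\begin{pmatrix} g_{11} & g_{12}\\ g_{21} & g_{22} \end{pmatrix}\begin{pmatrix}\mu^1\\ \mu^2\end{pmatrix}=0 $$

or in other words

$$\bigl(g_{11}(c^1)'+g_{21}(c^2)'\bigr)\mu^1+\bigl(g_{12}(c^1)'+g_{22}(c^2)'\bigr)\mu^2=0. $$

It suffices to put

$$\mu^1=-\bigl(g_{12}(c^1)'+g_{22}(c^2)'\bigr),\qquad \mu^2=g_{11}(c^1)'+g_{21}(c^2)' $$

or, of course, the opposite choice

$$\mu^1=g_{12}(c^1)'+g_{22}(c^2)',\qquad \mu^2=-\bigl(g_{11}(c^1)'+g_{21}(c^2)'\bigr). $$

This can be re-written as

$$\begin{pmatrix}\mu^1\\ \mu^2\end{pmatrix}=\pm\begin{pmatrix} -g_{12} & -g_{22}\\ g_{11} & g_{21} \end{pmatrix}\begin{pmatrix}(c^1)'\\ (c^2)'\end{pmatrix}. $$

In this formula, the square matrix is regular at each point (it has the same determinant as the metric tensor) and c′ is non-zero at each point, by regularity of c. Thus μ is non-zero at each point and the expected normal vector field is

$$\eta=\frac{\mu}{\|\mu\|_{c}}. $$

Observe that the two-fold possibility in the choice of the vector field μ yields two bases (c′,η) with opposite orientations: it remains to choose the basis with direct orientation. □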
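The construction in this proof can be checked numerically at a single point. The sketch below is an illustration under assumptions: the names `dot` and `normal_vector` are hypothetical, the metric tensor and the velocity c′ are supplied as plain numbers at the point considered, and the vector μ is taken as \(\mu^{1}=-(g_{12}(c^{1})'+g_{22}(c^{2})')\), \(\mu^{2}=g_{11}(c^{1})'+g_{21}(c^{2})'\). With this sign, the determinant of (c′,μ) equals \((c'|c')_{c}>0\), so the basis has direct orientation.

```python
import math

def dot(g, v, w):
    """Scalar product of v and w for the metric tensor g at one point."""
    return sum(v[i] * g[i][j] * w[j] for i in range(2) for j in range(2))

def normal_vector(g, dc):
    """Unit vector orthogonal to the velocity dc for the scalar product g,
    chosen so that (dc, eta) has direct orientation."""
    mu = (-(g[0][1] * dc[0] + g[1][1] * dc[1]),
          g[0][0] * dc[0] + g[1][0] * dc[1])
    norm = math.sqrt(dot(g, mu, mu))
    return (mu[0] / norm, mu[1] / norm)

# Arbitrary symmetric definite positive matrix and non-zero velocity.
g = ((2.0, 1.0), (1.0, 3.0))
dc = (1.0, -2.0)
eta = normal_vector(g, dc)
orientation = dc[0] * eta[1] - dc[1] * eta[0]  # determinant of the basis (dc, eta)
```

Orthogonality and the unit norm are both measured with `dot`, that is, with the metric tensor and not with the usual scalar product of \(\mathbb{R}^{2}\).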

6.6 The Christoffel Symbols

Chapter 5 has provided evidence that all important properties of the surface can be expressed in terms of the six functions E, F, G, L, M, N defined in Proposition 5.4.3 and Theorem 5.8.2. As emphasized in Sect. 6.2, the functions E, F, G constitute the metric tensor of the surface. But what about the functions L, M, N? Are they Riemannian quantities, quantities that we can determine by measures performed on the surface? The answer is definitely “No”:

Counterexample 6.6.1

The coefficients L, M, N of the second fundamental form of a surface cannot be deduced from the sole knowledge of the coefficients E, F, G of the first fundamental form.

Proof

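At each point of the plane with parametric representation

$$f(u,v)=(u,v,0) $$

we have trivially

$$E=1,\quad F=0,\quad G=1,\qquad L=0,\quad M=0,\quad N=0. $$

At each point of the circular cylinder (see Sect. 1.14 in [4], Trilogy II) with parametric representation

$$f(u,v)=(\cos u,\sin u,v) $$

we have

$$\frac{\partial f}{\partial u}=(-\sin u,\cos u,0),\qquad \frac{\partial f}{\partial v}=(0,0,1) $$

from which again

$$E=1,\quad F=0,\quad G=1. $$

On the other hand

$$\frac{\partial^2 f}{\partial u^2}=(-\cos u,-\sin u,0),\qquad \frac{\partial^2 f}{\partial u\,\partial v}=(0,0,0),\qquad \frac{\partial^2 f}{\partial v^2}=(0,0,0) $$

and

$$\overrightarrow{n}=\frac{\partial f}{\partial u}\times\frac{\partial f}{\partial v}=(\cos u,\sin u,0) $$

from which

$$L=-1,\quad M=0,\quad N=0. $$

The two surfaces have the same functions E, F, G, but not the same functions L, M, N. □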

So L, M, N are not Riemannian quantities. Let us recall that they are obtained from the second partial derivatives of the parametric representation, by performing the scalar product with the normal vector \(\overrightarrow{n}\) to the surface (see Theorem 5.8.2). Applying the “slogan” at the end of Sect. 6.1 to the case of the second partial derivatives of the parametric representation, the following definition sounds sensible:

Definition 6.6.2

Consider a regular parametric representation

$$f\colon U \longrightarrow\mathbb{R}^3,\qquad\bigl(x^1,x^2\bigr)\mapsto f\bigl(x^1,x^2\bigr) $$

of class \(\mathcal{C}^{k}\) of a surface.

1. The Christoffel symbols of the first kind are the functions

$$\varGamma_{ijk} = \biggl( \frac{\partial^2 f}{\partial x^i\partial x^j} \bigg\vert \frac{\partial f}{\partial x^k} \biggr),\quad 1\leq i,j,k\leq2. $$

2. The Christoffel symbols of the second kind are the quantities \(\varGamma_{ij}^{k}\), the components of the second partial derivatives of f with respect to the basis comprising the first partial derivatives and the normal to the surface:

$$\frac{\partial^2 f}{\partial x^i\partial x^j} = \varGamma_{ij}^1\frac{\partial f}{\partial x^1} +\varGamma_{ij}^2\frac{\partial f}{\partial x^2} +h_{ij}\overrightarrow{n}. $$

The observant reader will have noticed the use of the word “symbols”, not “tensor”; and the presence of upper and lower indices! Again, we shall comment upon this later.

Proposition 6.6.3

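Under the conditions of Definition 6.6.2,

$$h_{11}=L,\qquad h_{12}=M=h_{21},\qquad h_{22}=N. $$

Proof

Simply take the scalar product of

$$\frac{\partial^2 f}{\partial x^i\partial x^j} = \varGamma_{ij}^1\frac{\partial f}{\partial x^1} +\varGamma_{ij}^2\frac{\partial f}{\partial x^2} +h_{ij}\overrightarrow{n} $$

with \(\overrightarrow{n}\), keeping in mind that

$$\biggl(\frac{\partial f}{\partial x^k}\bigg\vert\overrightarrow{n}\biggr)=0,\qquad \bigl(\overrightarrow{n}\big\vert\overrightarrow{n}\bigr)=1. $$

□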

From now on, we shall use the notation \(h_{ij}\) instead of L, M, N. Let us also make the following easy observations:

Proposition 6.6.4

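Under the conditions of our Definition 6.6.2, the Christoffel symbols are functions of class \(\mathcal{C}^{k-2}\) with the following properties:

$$\varGamma_{ijk}=\varGamma_{jik},\qquad \varGamma_{ij}^{k}=\varGamma_{ji}^{k},\qquad \varGamma_{ijk}=\sum_{l}g_{lk}\varGamma_{ij}^{l},\qquad \varGamma_{ij}^{k}=\sum_{l}g^{kl}\varGamma_{ijl}. $$

Proof

The first two equalities hold because

$$\frac{\partial^2 f}{\partial x^i\partial x^j}=\frac{\partial^2 f}{\partial x^j\partial x^i}. $$

The third equality is obtained by expanding the scalar product

$$\varGamma_{ijk}=\biggl(\varGamma_{ij}^1\frac{\partial f}{\partial x^1} +\varGamma_{ij}^2\frac{\partial f}{\partial x^2} +h_{ij}\overrightarrow{n} \bigg\vert \frac{\partial f}{\partial x^k}\biggr) $$

keeping in mind that \((\overrightarrow{n}|\frac{\partial f}{\partial x^{k}})=0\).

This third equality can be re-written in matrix form as

$$\begin{pmatrix}\varGamma_{ij1} & \varGamma_{ij2}\end{pmatrix}=\begin{pmatrix}\varGamma_{ij}^1 & \varGamma_{ij}^2\end{pmatrix}\begin{pmatrix} g_{11} & g_{12}\\ g_{21} & g_{22} \end{pmatrix}. $$

Multiplying both sides by the inverse metric tensor, we obtain the fourth formula.

By Definition 6.6.2, the Christoffel symbols of the first kind are functions of class \(\mathcal{C}^{k-2}\). By Proposition 6.2.4 and the fourth equality in the statement, the same conclusion holds for the symbols of the second kind. □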

The key observation is now:

Proposition 6.6.5

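Under the conditions of Definition 6.6.2, the Christoffel symbols of the first kind are also equal to

$$\varGamma_{ijk}=\frac{1}{2}\biggl(\frac{\partial g_{jk}}{\partial x^i}+\frac{\partial g_{ki}}{\partial x^j}-\frac{\partial g_{ij}}{\partial x^k}\biggr). $$

Proof

First (see Lemma 1.11.3)

$$\frac{\partial g_{ij}}{\partial x^k}=\frac{\partial}{\partial x^k}\biggl(\frac{\partial f}{\partial x^i}\bigg\vert\frac{\partial f}{\partial x^j}\biggr)=\varGamma_{ikj}+\varGamma_{jki}. $$

Therefore

$$\frac{\partial g_{jk}}{\partial x^i}+\frac{\partial g_{ki}}{\partial x^j}-\frac{\partial g_{ij}}{\partial x^k} =(\varGamma_{jik}+\varGamma_{kij})+(\varGamma_{kji}+\varGamma_{ijk})-(\varGamma_{ikj}+\varGamma_{jki})=2\varGamma_{ijk} $$

by the first formula in Proposition 6.6.4. □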

Proposition 6.6.6

Under the conditions of Definition 6.6.2, the Christoffel symbols of the first and second kind can be expressed as functions of the coefficients of the metric tensor.

Proof

Proposition 6.6.5 proves the result for the symbols of the first kind. But the inverse metric tensor can itself be expressed in terms of the metric tensor (see Definition 6.2.3 or the proof of Proposition 6.2.4 for an explicit formula). By the fourth equality in Proposition 6.6.4, the result for the symbols of the second kind follows immediately. □

To stress the fact that the Christoffel symbols are Riemannian quantities, let us conclude this section with a definition inspired by Proposition 6.6.6:

Definition 6.6.7

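Given a Riemann patch of class \(\mathcal{C}^{1}\) as in Definition 6.2.1, the Christoffel symbols of the first kind are by definition the quantities

$$\varGamma_{ijk}=\frac{1}{2}\biggl(\frac{\partial g_{jk}}{\partial x^i}+\frac{\partial g_{ki}}{\partial x^j}-\frac{\partial g_{ij}}{\partial x^k}\biggr) $$

while the Christoffel symbols of the second kind are the quantities

$$\varGamma_{ij}^{k}=\sum_{l}g^{kl}\varGamma_{ijl}. $$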

Proposition 6.6.4 carries over to this generalized context.

Proposition 6.6.8

Consider a Riemann patch of class \(\mathcal{C}^{k}\)

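The Christoffel symbols are functions of class \(\mathcal{C}^{k-1}\) satisfying the following properties:

$$\varGamma_{ijk}=\varGamma_{jik},\qquad \varGamma_{ij}^{k}=\varGamma_{ji}^{k},\qquad \varGamma_{ijk}=\sum_{l}g_{lk}\varGamma_{ij}^{l},\qquad \varGamma_{ij}^{k}=\sum_{l}g^{kl}\varGamma_{ijl}. $$

Proof

The last condition is just Definition 6.6.7. This same definition forces at once the first condition, because the metric tensor is symmetric. This immediately implies the second condition. Finally Definition 6.6.7 can be expressed as the matrix formula

$$\begin{pmatrix}\varGamma_{ij}^1 & \varGamma_{ij}^2\end{pmatrix}=\begin{pmatrix}\varGamma_{ij1} & \varGamma_{ij2}\end{pmatrix}\begin{pmatrix} g^{11} & g^{12}\\ g^{21} & g^{22} \end{pmatrix}. $$

Multiplying both sides by the metric tensor yields the third condition in the statement. □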
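That the Christoffel symbols depend only on the metric tensor can be made concrete with a small numerical experiment. The sketch below is an illustration under assumptions: the example metric (unit sphere in longitude \(x^{1}\) and latitude \(x^{2}\), that is, the diagonal matrix with entries \(\cos^{2}x^{2}\) and 1) and all function names are hypothetical, indices are 0-based in the code, and the partial derivatives of the \(g_{ij}\) are replaced by central differences. The symbols of the first kind are computed as \(\frac12(\partial_i g_{jk}+\partial_j g_{ki}-\partial_k g_{ij})\), and those of the second kind by contracting with the inverse metric tensor.

```python
import math

def metric(x):
    """Assumed example: unit sphere, x[0] = longitude, x[1] = latitude."""
    return ((math.cos(x[1]) ** 2, 0.0), (0.0, 1.0))

def d_metric(x, k, h=1e-5):
    """Central-difference estimate of the partial derivatives of all g_ij
    with respect to the k-th coordinate (0-based)."""
    xp, xm = list(x), list(x)
    xp[k] += h
    xm[k] -= h
    gp, gm = metric(xp), metric(xm)
    return [[(gp[i][j] - gm[i][j]) / (2 * h) for j in range(2)] for i in range(2)]

def christoffel_first(x):
    """first[i][j][k] approximates the symbol of the first kind with indices ijk."""
    dg = [d_metric(x, k) for k in range(2)]  # dg[k][i][j] = d g_ij / d x^k
    return [[[0.5 * (dg[i][j][k] + dg[j][k][i] - dg[k][i][j])
              for k in range(2)] for j in range(2)] for i in range(2)]

def christoffel_second(x):
    """second[i][j][k] approximates the symbol with lower indices ij, upper index k."""
    (g11, g12), (g21, g22) = metric(x)
    det = g11 * g22 - g12 * g21
    g_inv = ((g22 / det, -g12 / det), (-g21 / det, g11 / det))
    first = christoffel_first(x)
    return [[[sum(g_inv[k][l] * first[i][j][l] for l in range(2))
              for k in range(2)] for j in range(2)] for i in range(2)]

second = christoffel_second((0.3, math.pi / 4))
```

For this metric the only non-zero symbols of the second kind are \(\varGamma_{11}^{2}=\cos x^{2}\sin x^{2}\) and \(\varGamma_{12}^{1}=\varGamma_{21}^{1}=-\tan x^{2}\), which the finite-difference values should reproduce up to the discretization error.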

6.7 Covariant Derivative

Our experience of doing mathematics tells us how important the derivative of a function can be. Going back to Definition 6.4.1, we therefore want to consider the derivative of the function ξ describing a vector field. However, since we are working in Riemannian geometry, our “slogan” of Sect. 6.1 suggests that we should focus on the component of this derivative in the tangent plane.

Definition 6.7.1

Consider a regular curve c on a regular surface f

Consider further a vector field of class \(\mathcal{C}^{1}\) along this curve, tangent to the surface

The covariant derivative of this vector field is the vector field

$$\frac{\nabla\xi}{dt} $$

defined at each point as the orthogonal projection of the derivative of ξ on the direction of the tangent plane to the surface.

Our job is now to explicitly calculate this covariant derivative.

Proposition 6.7.2

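In the situation described in Definition 6.7.1, when the surface is of class \(\mathcal{C}^{2}\), the covariant derivative of the vector field ξ is equal to

$$\frac{\nabla\xi}{dt}=\sum_{k}\biggl(\frac{d\xi^k}{dt}+\sum_{i,j}\xi^i\frac{dc^j}{dt}\varGamma_{ij}^{k}\biggr)\frac{\partial f}{\partial x^k}. $$

Proof

Let us first differentiate the function

$$\xi(t)=\xi^1(t)\frac{\partial f}{\partial x^1}\bigl(c(t)\bigr)+\xi^2(t)\frac{\partial f}{\partial x^2}\bigl(c(t)\bigr). $$

We obtain, keeping in mind Definition 6.6.2 and writing \(\overrightarrow{n}\) for the normal vector to the surface:

$$\begin{aligned} \frac{d\xi}{dt} &=\sum_{i}\frac{d\xi^i}{dt}\frac{\partial f}{\partial x^i}+\sum_{i,j}\xi^i\frac{dc^j}{dt}\frac{\partial^2 f}{\partial x^i\partial x^j}\\ &=\biggl(\frac{d\xi^1}{dt}+\sum_{i,j}\xi^i\frac{dc^j}{dt}\varGamma_{ij}^{1}\biggr)\frac{\partial f}{\partial x^1}\\ &\quad+\biggl(\frac{d\xi^2}{dt}+\sum_{i,j}\xi^i\frac{dc^j}{dt}\varGamma_{ij}^{2}\biggr)\frac{\partial f}{\partial x^2}\\ &\quad+\biggl(\sum_{i,j}\xi^i\frac{dc^j}{dt}h_{ij}\biggr)\overrightarrow{n} \end{aligned} $$

where for short, we have used the abbreviated notation

$$\varGamma_{ij}^{k}=\varGamma_{ij}^{k}\bigl(c^1(t),c^2(t)\bigr) $$

and analogously for the partial derivatives of f. The orthogonal projection on the direction of the tangent plane is constituted of the first two lines of this last expression, which is indeed the formula in the statement. □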

The observant reader will have noticed that Definition 6.7.1 of the covariant derivative makes perfect sense in class \(\mathcal{C}^{1}\), while its expression given in Proposition 6.7.2 requires the class \(\mathcal{C}^{2}\) because of the presence of the Christoffel symbols (see Definition 6.6.2).

The Christoffel symbols are Riemannian quantities (see Definition 6.6.7), thus by Proposition 6.7.2, so is the covariant derivative:

Definition 6.7.3

Consider a Riemann patch of class \(\mathcal{C}^{1}\)

and a regular curve

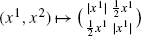

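The covariant derivative of a tangent vector field ξ of class \(\mathcal{C}^{1}\) along this curve is the tangent vector field \(\frac{\nabla\xi}{dt}\) whose two components are

$$\frac{d\xi^{k}}{dt}+\sum_{i,j}\xi^{i}\frac{dc^{j}}{dt}\Gamma_{ij}^{k}\bigl(c(t)\bigr),\qquad k=1,2. $$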

The covariant derivative inherits the classical properties of an “ordinary” derivative. For example:

Proposition 6.7.4

Consider a Riemann patch of class \(\mathcal{C}^{1}\)

and a regular curve

in it. Consider two tangent vector fields ξ and χ of class \(\mathcal{C}^{1}\) along this curve, as well as an additional function of class \(\mathcal{C}^{1}\)

and a change of parameter of class \(\mathcal{C}^{1}\)

The following properties hold:

1. \(\frac{\nabla(\xi+\chi)}{dt} =\frac{\nabla\xi}{dt}+\frac{\nabla\chi}{dt}\);
2. \(\frac{\nabla(\alpha\cdot\xi)}{dt} =\frac{d\alpha}{dt}\xi+\alpha\frac{\nabla\xi}{dt}\);
3. \(\frac{\nabla(\xi\circ\varphi)}{ds} =(\frac{\nabla\xi}{dt}\circ\varphi) \cdot\varphi'\);
4. \(\frac{d(\xi|\chi)_{c}}{dt} =(\frac{\nabla\xi}{dt}|\chi)_{c} +(\xi|\frac{\nabla\chi}{dt})_{c}\).

Proof

Condition 1 of the statement is trivial. Condition 2 is immediate: the components of \(\frac{\nabla(\alpha\cdot\xi)}{dt}\) are

which is the second formula of the statement. Condition 3 is proved in exactly the same straightforward way: the components of \(\frac{\nabla(\xi\circ\varphi)}{ds}\) are

which is again the announced statement.

Proving the fourth formula in the statement is a more involved task. First let us observe that \(\frac{d(\xi|\chi )_{c}}{dt}\) is equal to

On the other hand \((\frac{\nabla\xi}{dt}|\chi)_{c} +(\xi|\frac{\nabla\chi}{dt})_{c}\) is equal to

Comparing both expressions, it remains to prove that

Using Proposition 6.6.8 and Definition 6.6.7, we obtain

which is the expected equality concluding the proof. □

Corollary 6.7.5

Consider a Riemann patch of class \(\mathcal{C}^{2}\)

and a regular curve of class \(\mathcal{C}^{2}\)

given in normal representation. The tangent vector field c′ to the curve and its covariant derivative \(\frac{\nabla c'}{ds}\) are orthogonal vector fields (see Definition 6.4.4).

Proof

By Definition 6.3.4, \((c'|c')_{c}=1\). By Proposition 6.7.4.4, this implies \(2\bigl(\frac{\nabla c'}{ds}\bigm|c'\bigr)_{c}=0\). □

Under the conditions of Corollary 6.7.5, when the covariant derivative of c′ is non-zero at each point, the normal vector field of Proposition 6.5.1 is given by

Let us conclude this section by noticing that the notion of covariant derivative provides the notion of covariant partial derivative:

Definition 6.7.6

Consider a Riemann patch \((U,(g_{ij})_{ij})\) of class \(\mathcal{C}^{k}\) (k≥1).

1. A 2-dimensional tangent vector field ξ of class \(\mathcal {C}^{k}\) on this Riemann patch consists of two functions of class \(\mathcal{C}^{k}\)

$$\xi^1,\xi^2\colon U \longrightarrow\mathbb{R}. $$

2. The covariant partial derivatives of this vector field ξ at a point \((x^{1}_{0},x^{2}_{0})\) are:

   - \(\frac{\nabla\xi}{\partial x^{1}}(x^{1}_{0},x^{2}_{0})\), the covariant derivative at \(x^{1}_{0}\) of the vector field \(\xi (x^{1},x^{2}_{0})\) along the curve \(x^{2}=x^{2}_{0}\);
   - \(\frac{\nabla\xi}{\partial x^{2}}(x^{1}_{0},x^{2}_{0})\), the covariant derivative at \(x^{2}_{0}\) of the vector field \(\xi (x^{1}_{0},x^{2})\) along the curve \(x^{1}=x^{1}_{0}\).

As expected, one has:

Proposition 6.7.7

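Consider:

- a Riemann patch \((U,(g_{ij})_{ij})\) of class \(\mathcal{C}^{1}\);
- a 2-dimensional tangent vector field \(\xi=(\xi^{1},\xi^{2})\) of class \(\mathcal{C}^{1}\);
- a regular curve represented by \(c\colon\,]a,b[\,\longrightarrow U\).

Under these conditions, writing \(t\in\,]a,b[\) for the parameter,

$$\frac{\nabla(\xi\circ c)}{dt}=\biggl(\frac{\nabla\xi}{\partial x^{1}}\circ c\biggr)\frac{dc^{1}}{dt}+\biggl(\frac{\nabla\xi}{\partial x^{2}}\circ c\biggr)\frac{dc^{2}}{dt}. $$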

Proof

Since \(\frac{\nabla\xi}{\partial x^{1}}\) is computed along a curve \(h(x^{1})=(x^{1},x^{2}_{0})\), one has

and analogously for the other partial derivative. Thus by Definition 6.7.3 \(\frac{\nabla\xi}{\partial x^{j}}\) has for components

On the other hand, still by Definition 6.7.3, the vector field ξ(c(t)) along c has a covariant derivative whose components are given by

This proves the announced formula. □

6.8 Parallel Transport

In the plane \(\mathbb{R}^{2}\), we know at once how to “transport” a fixed vector \(\overrightarrow{v}\) along a curve represented by c(t) (see Fig. 6.1): at each point of the curve, simply consider the point

$$P(t)=c(t)+\overrightarrow{v}, $$

that is the point P(t) such that \(\overrightarrow{c(t)\, P(t)}=\overrightarrow{v}\) (see Definition 2.1.1 in [4], Trilogy II).

Fig. 6.1

This construction in \(\mathbb{R}^{2}\) thus yields a “constant vector field” along c

But saying that this function is constant is equivalent to saying that its derivative is equal to zero. The corresponding Riemannian notion is now clear:

Definition 6.8.1

Consider a Riemann patch of class \(\mathcal{C}^{1}\)

and a regular curve

in it. A vector field ξ of class \(\mathcal{C}^{1}\) along c is said to be parallel when its covariant derivative is everywhere zero.

Let us observe that

Lemma 6.8.2

Being a parallel vector field along a curve is independent of the regular parametric representation chosen for the curve.

Proof

Let φ be a change of parameters of class \(\mathcal{C}^{1}\) for the curve. Differentiating the equality \(\varphi\circ\varphi^{-1}=\mathsf{id}\), we get

$$\bigl(\varphi'\circ\varphi^{-1}\bigr)\cdot\bigl(\varphi^{-1}\bigr)'=1, $$

proving that φ′ is never zero. The conclusion then follows immediately from Proposition 6.7.4.3. □

Proposition 6.8.3

Consider a Riemann patch of class \(\mathcal{C}^{1}\)

and a regular curve in it:

1. A parallel vector field ξ of class \(\mathcal{C}^{1}\) along c has a constant norm.
2. A parallel vector field ξ of class \(\mathcal{C}^{1}\) along c is orthogonal to its covariant derivative \(\frac{\nabla\xi}{dt}\).
3. Two non-zero parallel vector fields ξ, χ of class \(\mathcal{C}^{1}\) along c make a constant angle.

Proof

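By Proposition 6.7.4.4, when ξ and χ are parallel,

$$\frac{d(\xi|\chi)_{c}}{dt}=\biggl(\frac{\nabla\xi}{dt}\biggm|\chi\biggr)_{c}+\biggl(\xi\biggm|\frac{\nabla\chi}{dt}\biggr)_{c}=(0|\chi)_{c}+(\xi|0)_{c}=0. $$

This proves that the scalar product \((\xi|\chi)_{c}\) is constant. Putting ξ=χ we conclude that \(\|\xi\|_{c}\) and \(\|\chi\|_{c}\) are constant. Together with the scalar product being constant, this proves that the angle is constant as well (see Notation 6.2.5).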

But when \(\|\xi\|_{c}^{2}=(\xi|\xi)_{c}\) is constant, its derivative is zero and by Proposition 6.7.4.4, this yields \(2(\frac {\nabla\xi}{dt}|\xi)_{c}=0\), thus the orthogonality of ξ and \(\frac{\nabla\xi}{dt}\). □

The existence of parallel vector fields is attested by the following theorem:

Theorem 6.8.4

Consider a Riemann patch of class \(\mathcal{C}^{k}\) (k≥2)

and a regular curve of class \(\mathcal{C}^{k}\) in it:

Given a vector \(\overrightarrow{v}\in\mathbb{R}^{2}\) and a point \(t_{0}\in\,]a,b[\), there exists a sub-interval \(]r,s[\,\subseteq\,]a,b[\) still containing \(t_{0}\) and a unique parallel vector field ξ of class \(\mathcal{C}^{k}\) along c

such that \(\xi(t_{0})=\overrightarrow{v}\). For each value t∈]r,s[, the vector ξ(t) is called the parallel transport of \(\overrightarrow{v}\) along c.

Proof

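This is an immediate consequence of the theorem for the existence and uniqueness of a solution of the system of differential equations (see Proposition B.1.1)

$$\frac{d\xi^{k}}{dt}+\sum_{i,j}\xi^{i}\frac{dc^{j}}{dt}\Gamma_{ij}^{k}\bigl(c(t)\bigr)=0,\qquad k=1,2, $$

together with the initial conditions, where \(\overrightarrow{v}=(v^{1},v^{2})\),

$$\xi^{k}(t_{0})=v^{k},\qquad k=1,2 $$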

(see Definition 6.7.3). Observe that all the coefficients of the differential equations are indeed of class \(\mathcal{C}^{k-1}\) (see Proposition 6.6.8). □
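The differential system of this proof can also be integrated numerically. The sketch below is only an illustration under stated assumptions: it takes the parallel transport equations in the standard form \(\frac{d\xi^{k}}{dt}=-\sum_{i,j}\xi^{i}\frac{dc^{j}}{dt}\Gamma^{k}_{ij}\), uses the closed-form Christoffel symbols of the unit sphere in latitude and longitude coordinates (metric \(g_{11}=1\), \(g_{22}=\cos^{2}x^{1}\)), and advances the solution with a classical fourth order Runge–Kutta scheme. All function names are hypothetical.

```python
import math

def christoffel_sphere(x1, x2):
    """Symbols gamma[k][i][j] ~ Gamma^k_{ij} for the metric diag(1, cos^2 x1)."""
    s, c = math.sin(x1), math.cos(x1)
    gamma = [[[0.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 0.0]]]
    gamma[0][1][1] = s * c                      # Gamma^1_22
    gamma[1][0][1] = gamma[1][1][0] = -s / c    # Gamma^2_12 = Gamma^2_21
    return gamma

def parallel_transport(curve, velocity, gamma, v, t0, t1, steps=2000):
    """Transport the vector v, given at parameter t0, along the curve up to t1.

    curve(t) returns (c^1(t), c^2(t)); velocity(t) returns the derivative.
    """
    def rhs(t, xi):
        x1, x2 = curve(t)
        dc = velocity(t)
        g = gamma(x1, x2)
        return [-sum(xi[i] * dc[j] * g[k][i][j]
                     for i in range(2) for j in range(2)) for k in range(2)]

    h = (t1 - t0) / steps
    xi, t = list(v), t0
    for _ in range(steps):
        # One classical Runge-Kutta step of size h.
        k1 = rhs(t, xi)
        k2 = rhs(t + h / 2, [xi[n] + h / 2 * k1[n] for n in range(2)])
        k3 = rhs(t + h / 2, [xi[n] + h / 2 * k2[n] for n in range(2)])
        k4 = rhs(t + h, [xi[n] + h * k3[n] for n in range(2)])
        xi = [xi[n] + h / 6 * (k1[n] + 2 * k2[n] + 2 * k3[n] + k4[n])
              for n in range(2)]
        t += h
    return xi

# Transport (1, 0) once around the parallel of latitude 0.7.
tau0 = 0.7
xi = parallel_transport(lambda t: (tau0, t), lambda t: (0.0, 1.0),
                        christoffel_sphere, (1.0, 0.0), 0.0, 2 * math.pi)
print(xi, xi[0] ** 2 + math.cos(tau0) ** 2 * xi[1] ** 2)
```

Along a parallel of latitude \(\tau_{0}\) the system reduces to a rotation with angular speed \(\sin\tau_{0}\) in the orthonormal frame, so the Riemannian norm \((\xi^{1})^{2}+\cos^{2}\tau_{0}\,(\xi^{2})^{2}\) stays constant while the transported vector does not return to its initial position after one full turn, unless \(\sin\tau_{0}\) is an integer.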

6.9 Geodesic Curvature

Let us now switch to the study of the curvature of a curve in a Riemann patch.

Let us first recall the situation studied in Sect. 5.8. Given a curve on a surface

we write h=f∘c for the corresponding skew curve and \(\overline{h}\) for its normal representation. The normal curvature (up to its sign) is the length of the orthogonal projection of the “curvature vector” \(\overline{h}''\) on the normal vector \(\overrightarrow{n}\) to the surface. Following our “slogan” at the end of Sect. 6.1, this normal curvature is probably not a Riemannian notion. Indeed we have the following:

Counterexample 6.9.1

The normal curvature of a surface cannot be deduced from the sole knowledge of the three coefficients E, F, G.

Proof

In Counterexample 6.6.1, the two surfaces have the same coefficients E, F, G but not the same normal curvature. Indeed by Theorem 5.8.2, the normal curvature of the cylinder is equal to −1 in the direction (1,0) while in the case of the plane, the normal curvature is equal to 0 in all directions. □

However, as our “slogan” of Sect. 6.1 suggests, in the discussion above, the orthogonal projection of the “curvature vector” \(\overline{h}''\) on the tangent plane should be a Riemannian notion. That projection—called the geodesic curvature of the curve—is intuitively what the two-dimensional being living on the surface sees of the curvature of the curve (see Sect. 6.1).

Definition 6.9.2

Consider a curve on a surface

both being regular and of class \(\mathcal{C}^{2}\). Write \(\overline {f\circ c}\) for the normal representation of the corresponding skew curve. The geodesic curvature of the curve on the surface is the length of the orthogonal projection of the vector \(\overline{f\circ c}''\) on the tangent plane to the surface.

We thus get at once:

Proposition 6.9.3

Consider a curve on a surface, both being regular and of class \(\mathcal{C}^{2}\). Then at each point of this curve

$$\kappa^{2}=\kappa_{n}^{2}+\kappa_{g}^{2} $$

where

- \(\kappa\) indicates the curvature of the curve;
- \(\kappa_{n}\) indicates the normal curvature of the curve;
- \(\kappa_{g}\) indicates the geodesic curvature of the curve.

Proof

This follows by Pythagoras’ Theorem (see Theorem 4.3.5 in [4], Trilogy II) and Definitions 5.8.1 and 6.9.2. □

Definition 6.9.2 can easily be rephrased:

Proposition 6.9.4

Consider a curve on a surface

both being regular and of class \(\mathcal{C}^{2}\). Write \(\overline {f\circ c}\) for the normal representation of the corresponding skew curve. The geodesic curvature of the curve on the surface is the norm of the covariant derivative of the tangent vector field \(\overline {f\circ c}'\).

Proof

This follows by Definitions 6.9.2 and 6.7.1. □

By Proposition 6.9.4, the geodesic curvature is thus a Riemannian notion. Therefore we make the following definition:

Definition 6.9.5

Consider a Riemann patch of class \(\mathcal{C}^{2}\)

and a regular curve of class \(\mathcal{C}^{2}\)

given in normal representation. The geodesic curvature of that curve is, with Notation 6.2.5, the norm of the covariant derivative of its tangent vector field:

$$\kappa_{g}=\biggl\|\frac{\nabla c'}{ds}\biggr\|_{c}. $$

Of course one can refine Definition 6.9.5 and provide the geodesic curvature with a sign, as we did for plane curves (see Definition 2.9.8). For that purpose, let us make the following observation:

Proposition 6.9.6

Consider a Riemann patch of class \(\mathcal{C}^{2}\)

and a regular curve of class \(\mathcal{C}^{2}\)

given in normal representation. The geodesic curvature is also equal to

$$\kappa_{g}=\biggl|\biggl(\frac{\nabla c'}{ds}\biggm|\eta\biggr)_{c}\biggr| $$

where η is the normal vector field to the curve (see Proposition 6.5.1).

Proof

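At a point where \(\frac{\nabla c'}{ds}(s)=(0,0)\), both the geodesic curvature and the scalar product of the statement are equal to zero. Otherwise we have

$$\biggl(\frac{\nabla c'}{ds}(s)\biggm|\eta(s)\biggr)_{c}=\biggl\|\frac{\nabla c'}{ds}(s)\biggr\|_{c}\cdot\bigl\|\eta(s)\bigr\|_{c}\cdot\cos\theta(s) $$

where θ(s) is the angle between \(\frac{\nabla c'}{ds}(s)\) and η(s). By Proposition 6.5.1, η(s) is of length 1. But by Corollary 6.7.5, since c is given in normal representation, \(\frac{\nabla c'}{ds}(s)\) is orthogonal to c′(s), hence proportional to η(s), thus cosθ(s)=±1. Therefore

$$\biggl|\biggl(\frac{\nabla c'}{ds}(s)\biggm|\eta(s)\biggr)_{c}\biggr|=\biggl\|\frac{\nabla c'}{ds}(s)\biggr\|_{c} $$

which forces the conclusion. □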

Definition 6.9.7

Consider a Riemann patch of class \(\mathcal{C}^{2}\)

and a regular curve of class \(\mathcal{C}^{2}\)

given in normal representation. The relative geodesic curvature is the quantity

$$\kappa_{g}=\biggl(\frac{\nabla c'}{ds}\biggm|\eta\biggr)_{c} $$

where η is the normal vector field to the curve (see Proposition 6.5.1).

Clearly, the sign of the geodesic curvature as in Definition 6.9.7 is not an intrinsic property of the curve: for example, it is reversed when considering the equivalent normal parametric representation \(\widetilde{c}(\widetilde{s})\) obtained via the change of parameter \(\widetilde{s}=-s\).

Of course the following proposition is particularly useful:

Proposition 6.9.8

Consider a Riemann patch of class \(\mathcal{C}^{2}\)

and a regular curve of class \(\mathcal{C}^{2}\)

given in arbitrary representation. The geodesic curvature of c is equal to

where η is the normal vector field of the curve (see Proposition 6.5.1).

Proof

Let us freely use the notation and the results in the proof of Proposition 6.3.5: we thus write s=σ(t) and \(\overline{c}(s)=(c\circ\sigma^{-1})(s)\) for the normal representation of the curve. Analogously, we write \(\overline{\eta}(s)\) for the normal vector expressed as a function of the parameter s (see Proposition 6.5.1). Thus, by the proof of Proposition 6.3.5, we already know that

By Proposition 6.9.6, and using Proposition 6.7.4, the geodesic curvature in terms of the parameter s is then given by

where the last but one equality holds because c′ is orthogonal to η.

Putting \(\sigma^{-1}(s)=t\) in these equalities, we get the formula of the statement. □
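As a worked illustration of Definition 6.9.5, here is a sketch under assumptions that are not taken from this section: the unit sphere with coordinates \((x^{1},x^{2})=(\tau,\theta)\) (latitude and longitude), metric \(g_{11}=1\), \(g_{12}=0\), \(g_{22}=\cos^{2}\tau\), and the components of the covariant derivative taken in the standard form \(\frac{d\xi^{k}}{ds}+\sum_{i,j}\xi^{i}\frac{dc^{j}}{ds}\Gamma^{k}_{ij}\). Consider the parallel of latitude \(\tau_{0}\) in normal representation:

$$c(s)=\biggl(\tau_{0},\frac{s}{\cos\tau_{0}}\biggr),\qquad c'(s)=\biggl(0,\frac{1}{\cos\tau_{0}}\biggr),\qquad \|c'\|_{c}^{2}=\cos^{2}\tau_{0}\cdot\frac{1}{\cos^{2}\tau_{0}}=1. $$

For this metric the only non-zero Christoffel symbols of the second kind are \(\Gamma^{1}_{22}=\sin\tau\cos\tau\) and \(\Gamma^{2}_{12}=\Gamma^{2}_{21}=-\tan\tau\). Since c″=0, the covariant derivative of c′ reduces to the Christoffel terms:

$$\frac{\nabla c'}{ds}=\biggl(\Gamma^{1}_{22}\,\frac{1}{\cos^{2}\tau_{0}},\;2\,\Gamma^{2}_{12}\cdot 0\cdot\frac{1}{\cos\tau_{0}}\biggr)=(\tan\tau_{0},0),\qquad \kappa_{g}=\biggl\|\frac{\nabla c'}{ds}\biggr\|_{c}=|\tan\tau_{0}|. $$

This agrees with the Pythagorean relation between the three curvatures: the parallel is a circle of radius \(\cos\tau_{0}\), hence of curvature \(1/\cos\tau_{0}\), the normal curvature of the unit sphere has absolute value 1, and indeed \(1/\cos^{2}\tau_{0}=1+\tan^{2}\tau_{0}\). For the equator, \(\tau_{0}=0\) and the geodesic curvature vanishes.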

6.10 Geodesics

Imagine that you are traveling on the Earth, around the equator. To achieve this, you have to proceed “straight on”, without ever turning left or right. Nevertheless, by doing this you travel along a circle, because the equator is a circle. The point is that the “curvature vector” of this circle (the second derivative of a normal representation, see Example 2.9.5) is oriented towards the center of the circle, and in the case of the equator, the center of the circle is also the center of the Earth. The “curvature vector” is thus perpendicular to the tangent plane to the Earth and so its orthogonal projection on that tangent plane is zero. The geodesic curvature of the equator is zero and this is the reason why you have the false impression of not turning at all when you proceed along the equator.

Definition 6.10.1

A geodesic in a Riemann patch of class \(\mathcal{C}^{2}\) is a regular curve of class \(\mathcal{C}^{2}\) whose geodesic curvature is zero at each point.

Notice at once that

Proposition 6.10.2

In a Riemann patch of class \(\mathcal{C}^{2}\), a regular curve of class \(\mathcal{C}^{2}\) is a geodesic if and only if its tangent vector field is a parallel vector field.

Proof

By Lemma 6.8.2, there is no loss of generality in assuming that the curve is given in normal representation. By Definitions 6.10.1 and 6.9.5, being a geodesic is then equivalent to \(\|\frac{\nabla c'}{ds}\|=0\), which is the condition for being a parallel vector field (see Definition 6.8.1). □

The results that we already have yield at once a characterization of the geodesics:

Theorem 6.10.3

Consider a Riemann patch of class \(\mathcal{C}^{2}\)

and a regular curve of class \(\mathcal{C}^{2}\)

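given in normal representation. That curve is a geodesic if and only if

$$\frac{d^{2}c^{k}}{ds^{2}}+\sum_{i,j}\frac{dc^{i}}{ds}\frac{dc^{j}}{ds}\Gamma_{ij}^{k}\bigl(c(s)\bigr)=0,\qquad k=1,2. $$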

Proof

By Definition 6.9.5, we must prove that \(\bigl\|\frac{\nabla c'}{ds}\bigr\|=0\), which is of course equivalent to \(\frac{\nabla c'}{ds}=0\), since at each point of U, the norm is that given by a scalar product (see Definition 6.4.4 and Notation 6.2.5). The result follows by Definition 6.7.3, putting ξ=c′. □

Example 6.10.4

The geodesics of a sphere are the great circles.

Proof

The argument concerning the equator, at the beginning of this section, works for every great circle, proving that these are geodesics of the sphere.

Conversely, consider a geodesic on a sphere. There is no loss of generality in assuming that the center of the sphere is the origin of \(\mathbb{R}^{3}\). Given a normal representation h of that geodesic viewed as a skew curve, we have h′ in the tangent plane to the sphere (Lemma 5.5.1) and h″ perpendicular to that tangent plane (Definition 6.9.2). Therefore h″ is oriented along the radius of the sphere and the osculating plane (Definition 4.1.6) to the curve passes through the center of the sphere. But, since the center of the sphere is the origin of \(\mathbb{R}^{3}\), h″ is also proportional to h. Let us write

By Proposition 4.5.1, the torsion of the geodesic is equal to

because h′×h″ is orthogonal to h′, but also to h which is proportional to h″. So the torsion of the curve is equal to zero and by Proposition 4.5.3, the geodesic is a plane curve. The plane of this curve is thus also its osculating plane, which passes through the center of the sphere. So the geodesic lies on the intersection of the sphere with a plane through the center of the sphere. Therefore the geodesic is (a piece of) a great circle. □
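The statement of this example can be checked numerically, as a sketch under stated assumptions. The code below takes the geodesic equations in the standard form \(\frac{d^{2}c^{k}}{ds^{2}}=-\sum_{i,j}\frac{dc^{i}}{ds}\frac{dc^{j}}{ds}\Gamma^{k}_{ij}\), uses the closed-form Christoffel symbols of the unit sphere in latitude and longitude coordinates (metric \(g_{11}=1\), \(g_{22}=\cos^{2}x^{1}\)), integrates with a classical Runge–Kutta scheme, and maps the result to \(\mathbb{R}^{3}\) with the usual parametrization of the sphere. The function names are hypothetical.

```python
import math

def christoffel_sphere(x1, x2):
    """Symbols gamma[k][i][j] ~ Gamma^k_{ij} for the metric diag(1, cos^2 x1)."""
    s, c = math.sin(x1), math.cos(x1)
    gamma = [[[0.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 0.0]]]
    gamma[0][1][1] = s * c                      # Gamma^1_22
    gamma[1][0][1] = gamma[1][1][0] = -s / c    # Gamma^2_12 = Gamma^2_21
    return gamma

def geodesic(gamma, point, direction, length, steps=1000):
    """Integrate the geodesic equations from `point` in the given `direction`.

    The state is y = (c^1, c^2, dc^1/ds, dc^2/ds); returns the list of (c^1, c^2).
    """
    def rhs(y):
        g = gamma(y[0], y[1])
        u = y[2:]
        acc = [-sum(u[i] * u[j] * g[k][i][j]
                    for i in range(2) for j in range(2)) for k in range(2)]
        return [u[0], u[1], acc[0], acc[1]]

    h = length / steps
    y = [point[0], point[1], direction[0], direction[1]]
    path = [tuple(y[:2])]
    for _ in range(steps):
        # One classical Runge-Kutta step of size h.
        k1 = rhs(y)
        k2 = rhs([y[n] + h / 2 * k1[n] for n in range(4)])
        k3 = rhs([y[n] + h / 2 * k2[n] for n in range(4)])
        k4 = rhs([y[n] + h * k3[n] for n in range(4)])
        y = [y[n] + h / 6 * (k1[n] + 2 * k2[n] + 2 * k3[n] + k4[n])
             for n in range(4)]
        path.append(tuple(y[:2]))
    return path

def embed(x1, x2):
    """Point of the unit sphere in R^3 with latitude x1 and longitude x2."""
    return (math.cos(x1) * math.cos(x2), math.cos(x1) * math.sin(x2), math.sin(x1))

# Unit initial direction at latitude 0.3, longitude 0.
tau0, a = 0.3, 0.8
path = geodesic(christoffel_sphere, (tau0, 0.0),
                (math.cos(a), math.sin(a) / math.cos(tau0)), 1.0)
print(embed(*path[-1]))
```

A great circle lies in a plane through the center of the sphere, so every computed point should be orthogonal, up to the integration error, to the cross product of the initial position and the initial velocity in \(\mathbb{R}^{3}\).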

Example 6.10.5

A straight line contained in a surface is always a geodesic.

Proof

A straight line has a zero curvature vector (see Example 2.9.4). □

Example 6.10.6

The geodesics of the plane are the straight lines.

Proof

The straight lines are geodesics by Example 6.10.5. Now as a surface, the plane is its own tangent plane at each point. But given a curve in the plane, its curvature vector is already in the plane, thus coincides with its orthogonal projection on the tangent plane. Therefore the curve is a geodesic if and only if its curvature vector is zero at each point. The result follows by Example 2.12.7. □

Example 6.10.7

The geodesics of the circular cylinder

$$g(\theta,z)=(\cos\theta,\sin\theta,z) $$

are:

1. for each fixed value \(\theta_{0}\), the rulings

$$z\mapsto(\cos\theta_0,\sin\theta_0,z); $$

2. for each fixed value \(z_{0}\), the circular sections

$$\theta\mapsto(\cos\theta,\sin\theta,z_0); $$

3. for all values r≠0, \(s\in\mathbb{R}\), the circular helices (see Example 4.5.4)

$$\theta\mapsto(\cos\theta,\sin\theta,r\theta+s). $$

Proof

Going back to the proof of Counterexample 6.6.1, we observe at once that the second partial derivatives of g are orthogonal to the first partial derivatives. Therefore the Christoffel symbols of the first kind are all equal to zero (Definition 6.6.2). By the fourth formula in Proposition 6.6.4, the Christoffel symbols of the second kind are all zero as well. This trivializes the equations in Theorem 6.10.3: a curve on the cylinder

$$s\mapsto c(s)=\bigl(c^{1}(s),c^{2}(s)\bigr) $$

such that g∘c is in normal representation is a geodesic when

$$\frac{d^{2}c^{1}}{ds^{2}}=0,\qquad\frac{d^{2}c^{2}}{ds^{2}}=0. $$

Integrating twice, we conclude that \(c^{1}\) and \(c^{2}\) are polynomials of degree at most 1. The geodesics are thus obtained as the deformations by g of the plane curves

$$s\mapsto(as+b,cs+d). $$

The case a=0=c is excluded, since it is not a curve. When a=0, \(c\neq0\), we obtain the ruling corresponding to \(\theta_{0}=b\). When \(a\neq0\), c=0 we obtain the circular section corresponding to \(z_{0}=d\). When \(a\neq0\neq c\), the change of parameter t=as+b yields in the plane the parametric representation

$$t\mapsto\biggl(t,\frac{c}{a}t+d-\frac{bc}{a}\biggr). $$

Putting

$$r=\frac{c}{a},\qquad s=d-\frac{bc}{a}, $$

this curve yields on the cylinder the circular helix of the statement. □
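The helices of case 3 can also be checked directly against Definition 6.9.2, as a short sketch. Writing \(h(\theta)=(\cos\theta,\sin\theta,r\theta+s)\) for the helix viewed as a skew curve, one has

$$h'(\theta)=(-\sin\theta,\cos\theta,r),\qquad\bigl\|h'(\theta)\bigr\|=\sqrt{1+r^{2}},\qquad h''(\theta)=(-\cos\theta,-\sin\theta,0). $$

Since the speed is constant, the second derivative of the normal representation is \(h''/(1+r^{2})\), which is a multiple of the vector \((\cos\theta,\sin\theta,0)\) normal to the cylinder at the point considered. Its orthogonal projection on the tangent plane is therefore zero, so the geodesic curvature of the helix vanishes at each point.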

In fact, all surfaces admit geodesics, not just these obvious examples which tend to be the ones we immediately think of. Indeed:

Proposition 6.10.8

Consider a Riemann patch of class \(\mathcal{C}^{k}\), with k≥3

For each point \((x^{1}_{0},x^{2}_{0})\in U\) and every direction \((\alpha ,\beta)\neq(0,0)\), there exists in a neighborhood of this point a unique geodesic of class \(\mathcal{C}^{k}\)

such that

Proof

We are looking for two functions \(c^{1}\), \(c^{2}\) of class \(\mathcal{C}^{k}\) which are solutions of the second order differential equations in Theorem 6.10.3 and satisfy the initial conditions of the statement. Since all coefficients of the differential equations are of class \(\mathcal{C}^{k-1}\), such a solution exists and is unique (see Proposition B.2.1). □

6.11 The Riemann Tensor

Both the normal curvature and the Gaussian curvature of a surface are expressed in terms of the six coefficients E, F, G, L, M, N (see Propositions 5.8.4 and 5.16.3). We have seen in Counterexample 6.6.1 that the three functions L, M, N are not Riemannian quantities and, in Counterexample 6.9.1, that the normal curvature is not a Riemannian notion. This might suggest that the Gaussian curvature is also not a Riemannian quantity. Perhaps unexpectedly, it is!

A very striking result, due to Gauss himself, is that the Gaussian curvature can be expressed as a function of E, F, G. So the Gaussian curvature is a Riemannian notion, while the normal curvature is not. To prove this, in view of the formula

$$\kappa_{\tau}=\frac{LN-M^{2}}{EG-F^{2}} $$

of Proposition 5.16.3, it suffices of course to prove that the quantity \(LN-M^{2}\) can be expressed as a function of E, F, G. For this, let us switch back to the notation \(h_{ij}\) and \(g_{ij}\) of Definitions 6.6.2 and 6.1.1.

Definition 6.11.1

Consider a regular parametric representation of class \(\mathcal {C}^{3}\) of a surface:

The Riemann tensor of this surface consists of the family of functions

where

are the coefficients of the second fundamental form of the surface (see Theorem 5.8.2).

Notice once more the appearance of the term tensor.

Lemma 6.11.2

Under the conditions of Definition 6.11.1, all the components \(R_{ijkl}\) of the Riemann tensor are equal to one of the following quantities:

Thus, knowing the metric tensor, the knowledge of the Riemann tensor is equivalent to the knowledge of the Gaussian curvature.

Proof

Simply observe that

while all other components are zero. □

Theorem 6.11.3

(Theorema Egregium, Gauss)

Under the conditions of Definition 6.11.1, the Riemann tensor is equal to

In particular, the Riemann tensor can be expressed as a function of the sole coefficients of the metric tensor.

Proof

Of course the last sentence in the statement will follow at once from the formula in the statement, since we already know the corresponding result for the Christoffel symbols (see Proposition 6.6.6). Let us therefore prove this formula.

Since the normal vector \(\overrightarrow{n}\) has length 1, we can write equivalently

But by Definition 6.6.2

Let us then replace \(h_{ij}\overrightarrow{n}\) by the quantity on the right hand side, keeping in mind Definition 6.1.1 of the coefficients of the metric tensor and Definition 6.6.2 of the Christoffel symbols.

Let us now use the third formula in Proposition 6.6.4 to simplify this last expression. This formula allows us to combine the first and the third terms in the fourth line to obtain

That quantity is then exactly the opposite of the first term in the second line. The same process allows us to simplify the second and fourth terms in the fourth line with the second term in the second line. Next, we can apply this process again to the last line and the first two terms in the third line. Eventually, the last four lines reduce to

This is exactly the sum in α in the formula of the statement.

To conclude, it remains to check that

Indeed

by the well-known property of commutation of partial derivatives. □

As you might now expect, we conclude this section with a corresponding definition:

Definition 6.11.4

Given a Riemann patch of class \(\mathcal{C}^{2}\), the Riemann tensor is defined as being the family of functions

(See Definition 6.6.7.)

It is worth adding a comment.

Definition 6.11.5

Given a Riemann patch of class \(\mathcal{C}^{2}\), the quantity

is called the Gaussian curvature of the Riemann patch.

This terminology is clearly inspired by Lemma 6.11.2 and its proof. This notion of Gaussian curvature makes perfect sense in the “restricted” context of our Definition 6.2.1, simply because Lemma 6.11.2 remains valid in this context (see Problem 6.18.1). However, the possibility of reducing the information given by the metric tensor to a single quantity \(\kappa_{\tau}\) is a very specific peculiarity of the Riemann patches of dimension 2. This notion of Gaussian curvature does not extend to higher dimensional Riemann patches, as defined in Definition 6.17.6: in higher dimensions, the correct notion to consider is the full Riemann tensor.
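To see concretely that the Gaussian curvature depends on the metric tensor only, here is a hedged worked example. It does not use the formula of Definition 6.11.5 but a classical expression valid for an orthogonal metric (\(g_{12}=0\)), quoted here as an assumption, with \(E=g_{11}\), \(G=g_{22}\) and \((u,v)=(x^{1},x^{2})\):

$$\kappa_{\tau}=-\frac{1}{2\sqrt{EG}} \biggl[\frac{\partial}{\partial u} \biggl(\frac{1}{\sqrt{EG}}\frac{\partial G}{\partial u} \biggr)+\frac{\partial}{\partial v} \biggl(\frac{1}{\sqrt{EG}}\frac{\partial E}{\partial v} \biggr) \biggr]. $$

For the metric \(E=1\), \(G=\cos^{2}u\) on \(|u|<\frac{\pi}{2}\), which is that of the unit sphere in latitude and longitude coordinates, one has \(\sqrt{EG}=\cos u\), \(\frac{\partial G}{\partial u}=-2\sin u\cos u\) and \(\frac{\partial E}{\partial v}=0\), so that

$$\kappa_{\tau}=-\frac{1}{2\cos u}\,\frac{\partial}{\partial u}(-2\sin u)=-\frac{1}{2\cos u}\,(-2\cos u)=1. $$

Only the coefficients of the metric tensor entered the computation. For the Euclidean metric \(E=G=1\) the same expression gives \(\kappa_{\tau}=0\).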

6.12 What Is a Tensor?

The time has come to discuss the magic word tensor. A family of functions receives this “honorary label” when it transforms “elegantly” along a change of parameters. In fact, a formal, general and elegant theory of tensors must rely on a good multi-linear algebra course; but this is beyond the scope of this book.

In Sect. 6.1 we have exhibited the Riemann patch corresponding to a specific parametric representation of a surface in \(\mathbb{R}^{3}\), and we know very well that a given surface admits many equivalent parametric representations. However, up to now, we have not paid attention to the question: What are equivalent Riemann patches?

Consider a regular surface of class \(\mathcal{C}^{3}\) admitting two equivalent parametric representations

To be able to handle the corresponding change of parameters in our arguments, we have to fix a notation for it. Up to now, we have always used a notation like

Of course if you have many changes of parameters to handle, using various notations such as φ, ψ, θ, τ and so on rapidly becomes unwieldy. Riemannian geometry uses a very standard and efficient notation for a change of parameters:

Of course such a notation is a little ambiguous, since it uses the same symbol for the coordinates \(\widetilde{x}^{i}\) and for the functions \(\widetilde{x}^{i}\). However, in practice no confusion occurs. In fact, this notation significantly clarifies the language. When you have several changes of coordinates, the notation \(\widetilde{x}^{i}(x^{1},x^{2})\) reminds you at once of both systems of coordinates involved in the question, while a notation such as \(\varphi^{i}(x^{1},x^{2})\) recalls only one of them.

Proposition 6.12.1

Consider a regular surface of class \(\mathcal{C}^{3}\) admitting the equivalent parametric representations

Write further

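for the corresponding metric tensors. Under these conditions

$$\widetilde{g}_{ij}=\sum_{k,l}g_{kl}\frac{\partial x^{k}}{\partial\widetilde{x}^{i}}\frac{\partial x^{l}}{\partial\widetilde{x}^{j}}. $$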

Proof

With the notation just explained for the changes of coordinates, we have

It follows that

This implies

that is

which is the formula of the statement. □

This elegant formula is what one calls the transformation formula for a tensor which is twice covariant. Forgetting about this new jargon “covariant” for the time being, let us repeat the same for the inverse metric tensor (see Definition 6.2.3).

Proposition 6.12.2

Consider a regular surface of class \(\mathcal{C}^{3}\) admitting the equivalent parametric representations

Write further

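for the corresponding inverse metric tensors. Under these conditions

$$\widetilde{g}^{ij}=\sum_{k,l}g^{kl}\frac{\partial\widetilde{x}^{i}}{\partial x^{k}}\frac{\partial\widetilde{x}^{j}}{\partial x^{l}}. $$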

Proof

As already observed in the proof of Proposition 6.12.1:

The matrix

is thus the change of coordinates matrix between the two bases of partial derivatives in the tangent plane (see Sect. 2.20 in [4], Trilogy II).

For the needs of this proof, let us write T for the matrix given by the metric tensor. The formula of Proposition 6.12.1 becomes simply

Taking the inverses of both sides, we get

since \((B^{t})^{-1}=(B^{-1})^{t}\). But the same argument as above shows that

Therefore the transformation formula for the inverse metric tensor is

as announced in the statement. □

Compare now the two formulas in Propositions 6.12.1 and 6.12.2. They are very similar of course, but nevertheless with a major difference! It will be convenient for us to call \((\widetilde{x}^{1},\widetilde{x}^{2})\) the “new” coordinates and \((x^{1},x^{2})\) the “old” coordinates.

- In the case of the metric tensor, the coefficients in the change of parameters formula are the derivatives of the “old” coordinates with respect to the “new” coordinates. One says that the tensor is covariant in both variables or simply, twice covariant. One uses lower indices to indicate the covariant indices of a tensor.
- In the case of the inverse metric tensor, the coefficients in the change of parameters formula are the derivatives of the “new” coordinates with respect to the “old” coordinates. One says that the tensor is contravariant in both variables or simply, twice contravariant. One uses upper indices to indicate the contravariant indices of a tensor.
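A small worked example may help to compare the two behaviours. It is only a sketch, assuming the transformation formulas in their standard form \(\widetilde{g}_{ij}=\sum_{k,l}g_{kl}\frac{\partial x^{k}}{\partial\widetilde{x}^{i}}\frac{\partial x^{l}}{\partial\widetilde{x}^{j}}\) and \(\widetilde{g}^{ij}=\sum_{k,l}g^{kl}\frac{\partial\widetilde{x}^{i}}{\partial x^{k}}\frac{\partial\widetilde{x}^{j}}{\partial x^{l}}\). Take the plane with “old” Cartesian coordinates \((x^{1},x^{2})\), for which \(g_{kl}=\delta_{kl}=g^{kl}\), and “new” polar coordinates \((\widetilde{x}^{1},\widetilde{x}^{2})=(r,\theta)\) with r>0:

$$x^{1}=r\cos\theta,\qquad x^{2}=r\sin\theta. $$

The twice covariant formula uses the derivatives of the old coordinates with respect to the new ones:

$$\widetilde{g}_{11}=\cos^{2}\theta+\sin^{2}\theta=1,\qquad \widetilde{g}_{12}=\cos\theta\,(-r\sin\theta)+\sin\theta\,(r\cos\theta)=0,\qquad \widetilde{g}_{22}=r^{2}\sin^{2}\theta+r^{2}\cos^{2}\theta=r^{2}. $$

The twice contravariant formula uses the derivatives of the new coordinates with respect to the old ones, here \(\frac{\partial\theta}{\partial x^{1}}=-\frac{\sin\theta}{r}\) and \(\frac{\partial\theta}{\partial x^{2}}=\frac{\cos\theta}{r}\):

$$\widetilde{g}^{22}=\frac{\sin^{2}\theta}{r^{2}}+\frac{\cos^{2}\theta}{r^{2}}=\frac{1}{r^{2}}, $$

which is indeed the inverse of \(\widetilde{g}_{22}\).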

This already clarifies some points of notation and terminology. However, this still does not tell us what a tensor is. As mentioned earlier, in order to give an elegant definition we would need some multi-linear algebra. Nevertheless, as far as surfaces in \(\mathbb{R}^{3}\) are concerned, we can at least take as our definition a famous criterion characterizing the tensors of Riemannian geometry. For simplicity, we state the definition in the particular case of a tensor two times covariant and three times contravariant, but the generalization is obvious.

Definition 6.12.3

Suppose that for each parametric representation of class \(\mathcal {C}^{3}\) of a given surface of \(\mathbb{R}^{3}\) you have a corresponding family of continuous functions

with two lower indices and three upper indices. These families of continuous functions are said to constitute a tensor covariant in the indices i, j and contravariant in the indices k, l, m when, given any two equivalent parametric representations f, \(\widetilde{f}\)—and with obvious notation—these functions transform into each other via the formulas

Of course, an analogous definition holds for a tensor α times covariant and β times contravariant, for any two integers α, β.

You should now have a clear idea why some quantities are designated as tensors and others are not. For example, the Riemann tensor of Theorem 6.11.3 is a tensor four times covariant (Problem 6.18.2) while the Christoffel symbols do not constitute a tensor (Problem 6.18.4). This also indicates why some indices are written as upper indices and others as lower indices.

One should be able to guess now why we use upper indices to indicate the coordinates of a point or the coordinates of a tangent vector field.

Proposition 6.12.4

Consider a regular curve c on a regular surface of class \(\mathcal {C}^{3}\) in \(\mathbb{R}^{3}\). In a change of parameters and with obvious notation, a vector field ξ along the curve c, tangent to the surface, transforms via the formula

$$\widetilde{\xi}^{i}=\sum_{j}\xi^{j}\frac{\partial\widetilde{x}^{i}}{\partial x^{j}}. $$

Proof

One has

and this proves the formula of the statement. □

Of course a vector field along a curve is not a tensor in the sense of Definition 6.12.3, because it is not defined on the whole subset U. Nevertheless, its transformation law along the curve is exactly that of a tensor one time contravariant. This explains the use of upper indices.

In particular, the components of the tangent vector field to the curve c itself should be written with upper indices: \(c'=((c^{1})',(c^{2})')\). But then of course, the components of c should use upper indices as well: \(c=(c^{1},c^{2})\). To be consistent, when writing the parametric equations of the curve c

we should use upper indices as well for the two coordinates \(x^{1}\) and \(x^{2}\).

Let us conclude this long discussion on tensors by giving the answer to the question raised at the beginning of this section: What are equivalent Riemann patches? Keeping in mind that for a surface of class \(\mathcal{C}^{k+1}\) in \(\mathbb{R}^{3}\) the coefficients \(g_{ij}\) of the metric tensor are functions of class \(\mathcal{C}^{k}\) (see Definition 6.1.1), we make the following definition:

Definition 6.12.5

Two Riemann patches of class \(\mathcal{C}^{k}\)

are equivalent in class \(\mathcal{C}^{k}\) when there exists a change of parameters of class \(\mathcal{C}^{k+1}\) (that is, a bijection of class \(\mathcal{C}^{k+1}\) with inverse of class \(\mathcal {C}^{k+1}\))

such that

As expected:

Proposition 6.12.6

A change of parameters φ as in Definition 6.12.5 is a Riemannian isometry, that is, respects lengths and angles in the sense of the Riemannian metric.

Proof

Consider a curve

Under the conditions of Definition 6.12.5, the length of an arc of the curve in \(\widetilde{U}\) represented by φ∘c is given by

and this last formula expresses precisely the length of the curve c in U.

The proof concerning the preservation of angles is perfectly analogous. □
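
As a sketch of the computation behind this proof, assume that the condition of Definition 6.12.5 is the usual transformation law of a twice covariant tensor. The display below is an assumption, as are the interval \([t_{0},t_{1}]\) and the component notation \(\varphi=(\varphi^{1},\varphi^{2})\):

$$g_{ij}=\sum_{k,l}(\widetilde{g}_{kl}\circ\varphi)\,\frac{\partial\varphi^{k}}{\partial x^{i}}\,\frac{\partial\varphi^{l}}{\partial x^{j}}. $$

By the chain rule, \(\bigl((\varphi\circ c)^{k}\bigr)'=\sum_{i}\frac{\partial\varphi^{k}}{\partial x^{i}}(c)\,(c^{i})'\), so that

$$\int_{t_{0}}^{t_{1}}\sqrt{\sum_{k,l}\widetilde{g}_{kl}(\varphi\circ c)\,\bigl((\varphi\circ c)^{k}\bigr)'\bigl((\varphi\circ c)^{l}\bigr)'}\;dt =\int_{t_{0}}^{t_{1}}\sqrt{\sum_{i,j}\Bigl(\sum_{k,l}\widetilde{g}_{kl}(\varphi\circ c)\,\frac{\partial\varphi^{k}}{\partial x^{i}}(c)\,\frac{\partial\varphi^{l}}{\partial x^{j}}(c)\Bigr)(c^{i})'(c^{j})'}\;dt =\int_{t_{0}}^{t_{1}}\sqrt{\sum_{i,j}g_{ij}(c)\,(c^{i})'(c^{j})'}\;dt. $$

The same substitution, applied to the scalar product of two tangent vectors instead of the squared norm of one, handles the case of angles.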

Notice that already for a Riemann patch of class \(\mathcal{C}^{0}\), the form of the change of parameters requires that it be of class \(\mathcal {C}^{1}\). This is another way to justify the “jump” of one unit in the classes of differentiability.

We are almost done. But you are still entitled to ask an intriguing question. If the Christoffel symbols are not tensors, how do we decide to use upper or lower indices? There is another convention in Riemannian geometry: a convention which, deliberately, has not been used in this chapter, and which requires an appropriate choice of position of the indices.

Convention 6.12.7

(Abbreviated Notation)

In Riemannian geometry, when in a given term of a formula, the same index appears once as an upper index and once as a lower index, it is understood that a sum is taken over all the possible values of this index.

For example, following this convention, the formula giving the components of the covariant derivative of a tangent vector field (see Definition 6.7.3)

is generally simply written as

because both indices i and j appear once as an upper index and once as a lower index in the “second” term. Notice that the index k appears twice as an upper index and moreover in two different terms: thus no sum is to be taken on this index. It is easy to see why we did not use this convention in this first approach of Riemannian geometry.
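
To make the convention concrete, here is how the two versions of this formula would read, assuming that Definition 6.7.3 has the usual form (the display is a reconstruction under that assumption, not a quotation):

$$\frac{d\xi^{k}}{dt}+\sum_{i,j}\xi^{i}\,\frac{dc^{j}}{dt}\,{\varGamma}^{k}_{ij} \qquad\text{is abbreviated as}\qquad \frac{d\xi^{k}}{dt}+\xi^{i}\,\frac{dc^{j}}{dt}\,{\varGamma}^{k}_{ij}. $$

In the same spirit, and again assuming the usual relation between the Christoffel symbols of the first and second kind,

$${\varGamma}^{k}_{ij}=\sum_{l}g^{kl}\,{\varGamma}_{ijl} \qquad\text{is abbreviated as}\qquad {\varGamma}^{k}_{ij}=g^{kl}\,{\varGamma}_{ijl}, $$

where only the index l is summed, since i, j and k each keep the same position on both sides of the equality.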

6.13 Systems of Geodesic Coordinates

Once again, let us support our intuition with the case of the Earth, regarded as a sphere. The most traditional system of coordinates is in terms of the latitude and the longitude. Consider the corresponding “geographical map” as in Example 5.1.6

where τ is the latitude and θ is the longitude.

- The equator is really the “base curve” of the whole system of coordinates: the curve given by τ=0; this is a great circle on the sphere, that is, a geodesic (see Example 6.10.4). Observe that \(f(0,\theta)\) is a normal representation of the equator, because the radius of the sphere has been chosen to be equal to 1.

- The curves θ=k on the sphere, with k constant, are the meridians: they are great circles, thus geodesics, and moreover they are orthogonal to the equator. Observe that \(f(\tau,\theta_{0})\) is again a normal representation of the meridian with fixed longitude \(\theta_{0}\), again because the radius of the sphere is equal to 1.

- The curves τ=k on the sphere, with k constant, are the so-called parallels; they are not great circles (except for the equator), thus they are not geodesics; but they are orthogonal to all the meridians.

This is thus a very particular system of coordinates of which we can expect many properties and advantages. One calls such a system a system of geodesic coordinates.
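
These three observations can be checked by a short computation, under the assumption that the geographical map of Example 5.1.6 is the usual parametrization of the unit sphere by latitude and longitude (the explicit formula below is an assumption):

$$f(\tau,\theta)=(\cos\tau\cos\theta,\ \cos\tau\sin\theta,\ \sin\tau). $$

Then

$$\frac{\partial f}{\partial\tau}=(-\sin\tau\cos\theta,\ -\sin\tau\sin\theta,\ \cos\tau),\qquad \frac{\partial f}{\partial\theta}=(-\cos\tau\sin\theta,\ \cos\tau\cos\theta,\ 0), $$

so that, for \(-\frac{\pi}{2}<\tau<\frac{\pi}{2}\),

$$\biggl\|\frac{\partial f}{\partial\tau}\biggr\|=1,\qquad \biggl(\frac{\partial f}{\partial\tau}\biggm|\frac{\partial f}{\partial\theta}\biggr)=0,\qquad \biggl\|\frac{\partial f}{\partial\theta}\biggr\|=\cos\tau. $$

The first equality says that every meridian is given in normal representation, the second that meridians and parallels are orthogonal, and the third that a parallel is given in normal representation only when τ=0, that is, on the equator.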

A system of geodesic coordinates exists in a neighborhood of each point of a “good” surface. Let us establish this result in the general context of a Riemann patch.

Theorem 6.13.1

Consider a regular curve \(\mathcal{C}\) passing through a point P in a Riemann patch. Assume that both the Riemann patch and the curve are of class \(\mathcal{C}^{m}\), with m≥2. There exists a connected open neighborhood of P such that the Riemann patch, restricted to this neighborhood, is equivalent in class \(\mathcal{C}^{m-1}\) to a Riemann patch

with the following properties:

1. the point P has coordinates (0,0);

2. the curve \(\mathcal{C}\) is the curve \(x^{1}=0\) and is now given in normal representation;

3. the curves \(x^{2}=k\), with k constant, are geodesics in normal representation;

4. the curves \(x^{1}=l\), with l constant, are orthogonal to the curves \(x^{2}=k\), with k constant;

5. at all points of U

$$g_{11}=1,\qquad g_{21}=0=g_{12},\qquad g_{22}>0 $$

and also

$$g^{11}=1,\qquad g^{21}=0= g^{12},\qquad g^{22}>0; $$

6. at all points of U

$${\varGamma}_{211}={\varGamma}_{121}= {\varGamma}_{112}={\varGamma}_{111}=0; \qquad {\varGamma}^2_{11}={\varGamma}^1_{11} ={\varGamma}_{21}^1={\varGamma}_{12}^1 =0 $$

while

$${\varGamma}_{222} =\frac{1}{2} \frac{\partial g_{22}}{\partial x^2} ,\qquad {\varGamma}_{212} ={\varGamma}_{122} = \frac{1}{2} \frac{\partial g_{22}}{\partial x^1},\qquad {\varGamma}_{221} =-\frac{1}{2} \frac{\partial g_{22}}{\partial x^1}, $$

and

$${\varGamma}_{22}^2 =\frac{1}{2 g_{22}} \frac{\partial g_{22}}{\partial x^2} ,\qquad {\varGamma}_{21}^2 ={\varGamma}_{12}^2 =\frac{1}{2 g_{22}} \frac{\partial g_{22}}{\partial x^1},\qquad {\varGamma}_{22}^1= -\frac{1}{2}\frac{\partial g_{22}}{\partial x^1}; $$

7. when moreover the original curve c is a geodesic

$$g_{22}(0,x^2)=1,\qquad \frac{\partial g_{22}}{\partial x^1}(0, x^2)=0 $$

and

$${\varGamma}_{ij}^k(0, x^2)=0,\qquad {\varGamma}_{ijk}(0, x^2)=0,\quad 1\leq i,j,k\leq2. $$

A system of coordinates satisfying conditions 1 to 6 is called a geodesic system of coordinates. When moreover it satisfies condition 7, it is called a Fermi system of geodesic coordinates.
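
As a numerical sanity check of conditions 5 to 7, the following sketch evaluates the Christoffel symbols by finite differences on the unit sphere in latitude and longitude coordinates. The metric \(g_{11}=1\), \(g_{12}=0\), \(g_{22}=\cos^{2}x^{1}\) is an assumption (the usual one for this example), and so are the function names and the formula \({\varGamma}_{ijk}=\frac{1}{2}(\partial_{i}g_{jk}+\partial_{j}g_{ik}-\partial_{k}g_{ij})\), chosen because it agrees with the formulas of condition 6. Indices are 0-based in the code, so `first[1][1][0]` stands for \({\varGamma}_{221}\).

```python
import math

def sphere_metric(x1, x2):
    """Assumed example: unit sphere, x1 = latitude, x2 = longitude."""
    return [[1.0, 0.0], [0.0, math.cos(x1) ** 2]]

def metric_derivatives(g, x, h=1e-5):
    """dg[k][i][j] approximates d g_ij / d x^k by central differences."""
    dg = []
    for k in range(2):
        xp, xm = list(x), list(x)
        xp[k] += h
        xm[k] -= h
        gp, gm = g(*xp), g(*xm)
        dg.append([[(gp[i][j] - gm[i][j]) / (2 * h) for j in range(2)]
                   for i in range(2)])
    return dg

def christoffel_first(g, x):
    """first[i][j][k] = Gamma_ijk = (d_i g_jk + d_j g_ik - d_k g_ij) / 2."""
    dg = metric_derivatives(g, x)
    return [[[0.5 * (dg[i][j][k] + dg[j][i][k] - dg[k][i][j])
              for k in range(2)] for j in range(2)] for i in range(2)]

def christoffel_second(g, x):
    """second[k][i][j] = Gamma^k_ij = sum over l of g^{kl} * Gamma_ijl."""
    m = g(*x)
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    inv = [[m[1][1] / det, -m[0][1] / det],
           [-m[1][0] / det, m[0][0] / det]]
    first = christoffel_first(g, x)
    return [[[sum(inv[k][l] * first[i][j][l] for l in range(2))
              for j in range(2)] for i in range(2)] for k in range(2)]

if __name__ == "__main__":
    point = (0.3, 1.1)
    second = christoffel_second(sphere_metric, point)
    dg22_dx1 = -math.sin(2 * point[0])  # exact derivative of cos^2(x1)
    g22 = math.cos(point[0]) ** 2
    # Condition 6: numerical value next to the closed formula.
    print(second[0][1][1], -0.5 * dg22_dx1)        # Gamma^1_22
    print(second[1][0][1], dg22_dx1 / (2 * g22))   # Gamma^2_12
    # Condition 7: on the equator x1 = 0, a geodesic, every symbol vanishes.
    print(christoffel_second(sphere_metric, (0.0, 1.1)))
```

The finite-difference step `h` is a pragmatic choice for a smooth metric; a symbolic computation would serve equally well.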

Proof

Let us write

for the original Riemann patch and

for the given curve \(\mathcal{C}\). Let us write further P=c(t 0). Follow the construction above on Fig. 6.2.

Fig. 6.2

By Proposition 6.3.5, there is no loss of generality in assuming that the curve \(\mathcal{C}\) is given in normal representation with P as origin, thus P=c(0). Under these conditions c′(t) becomes a vector of norm 1 (see Definition 6.3.4).

For each value t∈]a,b[, we consider the normal vector η(t) to the curve (see Proposition 6.5.1), which is thus a vector of norm 1 orthogonal to c′(t). By Proposition 6.10.8, in a neighborhood of c(t), there exists in the Riemann patch a unique geodesic \(h_{t}(s)\) of class \(\mathcal{C}^{m}\) through c(t) in the direction η(t), satisfying an initial condition that we choose to be \(h_{t}(0)=c(t)\). We are interested in the function

$$\varphi(s,t)=h_{t}(s) $$

which we want to become the expected change of parameters of class \(\mathcal{C}^{m}\) in a neighborhood of c(0). Since the coefficients of the equations in Proposition 6.10.8 are of class \(\mathcal{C}^{m-1}\) and c is of class \(\mathcal{C}^{m}\), the function φ is indeed defined, and of class \(\mathcal{C}^{m}\), on a neighborhood of (0,0) (see Proposition B.3.2). But to be a good change of parameters, the inverse of φ should also be of class \(\mathcal{C}^{m}\).

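Let us compute the partial derivatives of the function φ at the point (0,0):

$$\frac{\partial\varphi}{\partial s}(0,0)=h_{0}'(0)=\eta(0),\qquad \frac{\partial\varphi}{\partial t}(0,0)=\frac{d}{dt}h_{t}(0)\bigg|_{t=0}=c'(0). $$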

By regularity of c and Proposition 6.5.1, c′(0) and η(0) are perpendicular and of length 1 with respect to the scalar product \((-|-)_{c(0)}\), thus linearly independent. By the Local Inverse Theorem (see Theorem 1.3.1), the function φ is thus invertible on some neighborhood U of (0,0), with an inverse which is still of class \(\mathcal{C}^{m}\). There is no loss of generality in choosing U open and connected. In this way φ becomes a homeomorphism

We simply define U′=φ(U) and use the notation \((x^{1}, x^{2})\) instead of (s,t). Thus our change of parameters φ is now

Of course there is no difficulty in providing U with the structure of a Riemann patch equivalent to that given by the \(\widetilde{g}_{ij}\) on \(\widetilde{U}\). With Definition 6.12.5 in mind, simply define

With the notation of Proposition 6.12.3, this definition can be re-written as

As observed in the proof of Proposition 6.12.3, the matrix B is that of a change of basis, while \(\widetilde{T}\) is at each point the matrix of a scalar product in \(\mathbb{R}^{2}\) (see Notation 6.2.5). By Corollary G.1.4 in [4], Trilogy II, T is then at each point the matrix of the same scalar product expressed in another basis: it is thus a symmetric positive definite matrix. Therefore the \(g_{ij}\) on U constitute a Riemann patch equivalent in class \(\mathcal{C}^{m-1}\) to that of the \(\widetilde{g}_{ij}\) on U′.

By construction, the curve \(x^{1}=0\) is the curve \(h_{t}(0)\), that is, the original curve c(t).

Also by construction, the curves \(x^{2}=k\), with k constant, are the curves \(h_{k}(s)\), which are geodesics given in normal representation.

Next, we prove that \(g_{11}=1\). With Notation 6.2.5,

because each \(h_{t}(s)\) is in normal representation (see Definition 6.3.4).

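Next, we turn our attention to the Christoffel symbols. The curve \(x^{2}=k\) is represented by

$$s\mapsto\bigl(x^{1}(s),x^{2}(s)\bigr)=(s,k). $$

Differentiating with respect to \(s=x^{1}\), we obtain

$$\frac{dx^{1}}{ds}=1,\qquad \frac{dx^{2}}{ds}=0,\qquad \frac{d^{2}x^{1}}{ds^{2}}=0=\frac{d^{2}x^{2}}{ds^{2}}. $$

Since this curve \(x^{2}=k\) is a geodesic in normal representation, it satisfies the system of differential equations of Theorem 6.10.3. The observations that we have just made show that this system reduces simply to its terms in (i,j)=(1,1), that is,

$${\varGamma}^{1}_{11}=0,\qquad {\varGamma}^{2}_{11}=0 $$

at each point of the curve. Since this holds for every value k, these two symbols vanish at all points of U, which is part of condition 6 of the statement.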

Now the case of \(g_{12}=g_{21}\). By Definition 6.6.7, we have at all points

Keeping in mind that \(g_{11}=1\) while \(g_{12}=g_{21}\), which also forces \(g^{12}=g^{21}\) (the inverse of a symmetric matrix is symmetric), this equality reduces to

Introducing the value of \(g^{21}\) (see the proof of Proposition 6.2.4) into this equality, we obtain

We know that \(g_{11} g_{22}- g_{12} g_{21}\not=0\) (Proposition 6.2.1); the equality is thus equivalent to

But this can be re-written as

This proves that \(g_{21}(x^{1},x^{2})\) is a constant function of \(x^{1}\): to conclude that \(g_{21}=0\), it thus suffices to prove that \(g_{21}(0,x^{2})=0\). By definition of \(g_{21}\) and using the values of the partial derivatives of the change of parameters φ, we indeed obtain

So \(g_{21}(0,x^{2})=0\) and as we have seen, this implies \(g_{21}=g_{12}=0\). Since we know already that \(g_{11}=1\), this forces \(g_{22}>0\) by positivity of the metric tensor (see Definition 6.2.1).
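
The chain of equalities leading to \(g_{21}=0\) can be sketched as follows, assuming that Definition 6.6.7 gives the usual expressions \({\varGamma}_{ijk}=\frac{1}{2}\bigl(\frac{\partial g_{jk}}{\partial x^{i}}+\frac{\partial g_{ik}}{\partial x^{j}}-\frac{\partial g_{ij}}{\partial x^{k}}\bigr)\) and \({\varGamma}^{k}_{ij}=\sum_{l}g^{kl}{\varGamma}_{ijl}\). These conventions are an assumption, chosen because they agree with the formulas of condition 6. Since \(g_{11}=1\), one has \({\varGamma}_{111}=0\) and \({\varGamma}_{112}=\frac{\partial g_{21}}{\partial x^{1}}\), hence

$$0={\varGamma}^{1}_{11}=g^{11}{\varGamma}_{111}+g^{12}{\varGamma}_{112} =g^{21}\,\frac{\partial g_{21}}{\partial x^{1}} =-\frac{g_{21}}{g_{11}g_{22}-g_{12}g_{21}}\,\frac{\partial g_{21}}{\partial x^{1}}, $$

which is equivalent to

$$g_{21}\,\frac{\partial g_{21}}{\partial x^{1}}=\frac{1}{2}\,\frac{\partial (g_{21})^{2}}{\partial x^{1}}=0. $$

On the base curve, the partial derivatives of φ are \(\frac{\partial\varphi}{\partial x^{1}}(0,x^{2})=\eta(x^{2})\) and \(\frac{\partial\varphi}{\partial x^{2}}(0,x^{2})=c'(x^{2})\), so that

$$g_{21}(0,x^{2})=\bigl(c'(x^{2})\bigm|\eta(x^{2})\bigr)=0. $$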

The metric tensor is thus a diagonal matrix; therefore its inverse (see Definition 6.2.3) is obtained by taking the inverses of the diagonal elements and thus

Since \(g_{11}\), \(g_{12}\) and \(g_{21}\) are constant, their partial derivatives are zero. Considering the definition of the Christoffel symbols of the first kind (see Definition 6.6.7), only the partial derivatives of \(g_{22}\) remain: this gives at once the formulas of the statement concerning the symbols \({\varGamma}_{ijk}\) and as an immediate consequence, the formulas concerning the symbols \({\varGamma}_{ij}^{k}\).

Saying that the curves \(x^{1}=l\), \(x^{2}=k\), are orthogonal means

which is trivially the case since \(g_{12}=0=g_{21}\). This concludes the proof in the case of an arbitrary base curve c.

Let us now suppose that this curve c is itself a geodesic. In terms of the coordinates \((x^{1},x^{2})\), we have \(t=x^{2}\) and the curve c is simply \(c(x^{2})=(0,x^{2})\). By Theorem 6.10.3 we have

But since \(c(x^{2})=(0,x^{2})\), this reduces to

By Proposition 6.7.4.4

On the other hand by Proposition 6.7.4

the last equality holds because c(t) is a geodesic in normal representation: this implies that c′ is a parallel vector field (see Proposition 6.10.2), thus \(\frac{\nabla c'}{dt}=0\) by Definition 6.8.1. But, still by Proposition 6.5.1 and normality of the representation, c′ and η constitute at each point an orthonormal basis of \(\mathbb{R}^{2}\) for the scalar product \((-|-)_{c}\). The orthogonality of \(\frac{\nabla\eta}{dt}\) to both c′ and η implies

for all values of \(t=x^{2}\). By Definition 6.7.3 we have

But in terms of the coordinates \((x^{1},x^{2})\), \(\eta(x^{2})=(1,0)\) while \(c(x^{2})=(0,x^{2})\). Therefore the two equalities reduce to

Of course this also forces

by Proposition 6.6.8. The third condition in this same proposition also shows that \({\varGamma}_{ijk}(0,x^{2})=0\) for all indices. □

Corollary 6.13.2

Consider a Riemann patch of class \(\mathcal{C}^{m}\), with m≥2

Suppose that:

1. the curves \(x^{2}=k\) are geodesics in normal representation;

2. each of these curves cuts the curve \(x^{1}=0\) orthogonally.

Under these conditions, \((x^{1},x^{2})\) is already a system of geodesic coordinates.

Proof

Simply observe that in the proof of Theorem 6.13.1, the change of parameters φ is the identity and is therefore trivially valid on the whole of V. □
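
A simple instance of this corollary, given here as an illustration under stated assumptions and not as an example taken from the text, is the Euclidean plane described by the hypothetical map

$$f(x^{1},x^{2})=\bigl((1+x^{1})\cos x^{2},\ (1+x^{1})\sin x^{2}\bigr),\qquad x^{1}>-1,\quad -\pi<x^{2}<\pi. $$

The curves \(x^{2}=k\) are the rays \(s\mapsto(1+s)(\cos k,\sin k)\): straight lines travelled at speed 1, thus geodesics in normal representation, and they cut the unit circle \(x^{1}=0\) orthogonally. The partial derivatives of f give

$$g_{11}=1,\qquad g_{12}=0=g_{21},\qquad g_{22}=(1+x^{1})^{2}, $$

in agreement with condition 5 of Theorem 6.13.1, and the formulas of condition 6 yield

$${\varGamma}^{1}_{22}=-(1+x^{1}),\qquad {\varGamma}^{2}_{12}={\varGamma}^{2}_{21}=\frac{1}{1+x^{1}},\qquad {\varGamma}^{2}_{22}=0. $$

The base curve is a circle, which is not a geodesic of the plane, and indeed \(\frac{\partial g_{22}}{\partial x^{1}}(0,x^{2})=2\neq0\): this system of geodesic coordinates is not a Fermi system.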

At the beginning of Sect. 6.10, we introduced geodesics via the intuition that they are the curves on the surface along which you have the impression of travelling in a straight line without ever turning left or right. As a consequence of the existence of systems of geodesic coordinates, let us now prove a precise result which reinforces the intuition that geodesics are “the best substitute for straight lines” on a surface.

Theorem 6.13.3

Locally, in a Riemann patch of class \(\mathcal{C}^{2}\), a geodesic is the shortest regular curve joining two of its points.

Proof

We consider a Riemann patch

and a geodesic

There is no loss of generality in assuming that h is at once given in normal representation, with 0∈]a,b[.