Abstract

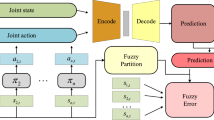

Interactive reinforcement learning can effectively facilitate agent training via human feedback. However, such methods often require the human teacher to know the correct action that the agent should take. In other words, if the human teacher is not always reliable, they will not be able to guide the agent consistently throughout its training. In this paper, we propose a more effective interactive reinforcement learning system that introduces multiple trainers, namely Multi-Trainer Interactive Reinforcement Learning (MTIRL), which aggregates the binary feedback from multiple non-perfect trainers into a more reliable reward for training an agent in a reward-sparse environment. In particular, our trainer feedback aggregation experiments show that our aggregation method achieves the best accuracy when compared with majority voting, weighted voting, and the Bayesian method. Finally, we conduct a grid-world experiment to show that the policy trained by MTIRL with a review model is closer to the optimal policy than the policy trained without a review model.
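To make the three baselines named above concrete, the following is a minimal Python sketch of majority voting, weighted voting, and a Bayesian combination of binary (+1/-1) trainer feedback. It is not the MTIRL aggregation method, which the abstract does not specify. The sketch assumes trainers give independent feedback and that each trainer's accuracy is known in advance; the function names, the choice of accuracies as the weights in weighted voting, and the simulated trainer accuracies are all hypothetical.

```python
import math
import random
from typing import Dict, Sequence


def majority_vote(labels: Sequence[int]) -> int:
    """Return +1 if more trainers said +1 than -1; ties map to -1 (an arbitrary choice)."""
    return 1 if sum(labels) > 0 else -1


def weighted_vote(labels: Sequence[int], weights: Sequence[float]) -> int:
    """Sum each trainer's +1/-1 label scaled by a per-trainer weight (hypothetical weights)."""
    score = sum(w * l for w, l in zip(weights, labels))
    return 1 if score > 0 else -1


def bayesian_vote(labels: Sequence[int], accuracies: Sequence[float], prior: float = 0.5) -> int:
    """Posterior log-odds that the true label is +1, assuming independent trainers
    whose probability of giving correct feedback, p_i, is known (an assumption)."""
    log_odds = math.log(prior / (1 - prior))
    for label, p in zip(labels, accuracies):
        llr = math.log(p / (1 - p))  # evidence contributed by one trainer
        log_odds += llr if label == 1 else -llr
    return 1 if log_odds > 0 else -1


def simulate(accuracies: Sequence[float], n_rounds: int = 10000, seed: int = 0) -> Dict[str, float]:
    """Draw a random true label each round, corrupt it per trainer, and score each rule."""
    rng = random.Random(seed)
    correct = {"majority": 0, "weighted": 0, "bayesian": 0}
    for _ in range(n_rounds):
        truth = rng.choice([1, -1])
        # Trainer i reports the truth with probability accuracies[i], else flips it.
        labels = [truth if rng.random() < p else -truth for p in accuracies]
        if majority_vote(labels) == truth:
            correct["majority"] += 1
        if weighted_vote(labels, accuracies) == truth:
            correct["weighted"] += 1
        if bayesian_vote(labels, accuracies) == truth:
            correct["bayesian"] += 1
    return {name: hits / n_rounds for name, hits in correct.items()}


if __name__ == "__main__":
    # One reliable trainer and four weak ones (hypothetical accuracies).
    print(simulate([0.95, 0.6, 0.6, 0.55, 0.55]))
```

With a uniform prior, the Bayesian rule is itself a weighted vote whose weights are the log-odds log(p/(1-p)), so a single highly reliable trainer can outvote several weak ones. Majority voting and accuracy-weighted voting cannot do this. All three rules depend on knowing how reliable each trainer is, which is exactly what is hard to obtain for real, non-perfect human trainers.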

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Guo, Z., Norman, T.J., Gerding, E.H. (2023). MTIRL: Multi-trainer Interactive Reinforcement Learning System. In: Aydoğan, R., Criado, N., Lang, J., Sanchez-Anguix, V., Serramia, M. (eds) PRIMA 2022: Principles and Practice of Multi-Agent Systems. PRIMA 2022. Lecture Notes in Computer Science, vol 13753. Springer, Cham. https://doi.org/10.1007/978-3-031-21203-1_14

DOI: https://doi.org/10.1007/978-3-031-21203-1_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-21202-4

Online ISBN: 978-3-031-21203-1

eBook Packages: Computer Science, Computer Science (R0)