Abstract

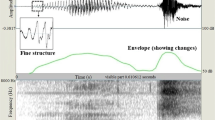

Traditional vocoder-based statistical parametric speech synthesis can be advantageous in applications that require low computational complexity. Recent neural vocoders can produce highly natural speech, but they still cannot fulfill the real-time requirement during synthesis. In this paper, we experiment with our earlier continuous vocoder, in which the excitation is modeled with two one-dimensional parameters: continuous F0 and Maximum Voiced Frequency (MVF). Using data from 9 speakers, we show that an average voice can be trained for DNN-TTS and that speaker adaptation is feasible with 400 utterances (about 14 min). Objective experiments indicate that the quality of speaker adaptation with the Continuous Vocoder-based DNN-TTS is similar to that of a WORLD vocoder-based baseline.
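To illustrate how two one-dimensional parameters per frame can drive an excitation signal, here is a minimal sketch in Python with NumPy. It is a hypothetical simplification, not the authors' implementation: it assumes a unit-impulse pulse train as the voiced source (rather than the residual-based pulses of the original continuous vocoder), white Gaussian noise as the unvoiced source, a 16 kHz sampling rate, and 5 ms (80-sample) frames. The function name `mixed_excitation` and its parameters are illustrative only. Because F0 is continuous, the pulse phase can be integrated without voiced/unvoiced switching, and MVF alone decides which part of the spectrum is pulse-like and which is noise-like.

```python
import numpy as np


def mixed_excitation(f0, mvf, fs=16000, hop=80, seed=0):
    """Toy excitation from frame-level continuous F0 and MVF (both in Hz).

    Hypothetical simplification: a unit-impulse pulse train is kept below MVF
    and white Gaussian noise is kept at or above it, frame by frame. This is
    not the residual-based pulse model of the original continuous vocoder.
    """
    f0 = np.asarray(f0, dtype=float)
    mvf = np.asarray(mvf, dtype=float)
    if f0.shape != mvf.shape:
        raise ValueError("f0 and mvf must have one value per frame")
    n = len(f0) * hop

    # Sample-and-hold upsampling of frame-level F0 to the sample rate.
    f0_samples = np.repeat(f0, hop)
    # Continuous F0 has no unvoiced gaps, so the phase (in cycles) can
    # always be integrated; emit a pulse each time it crosses an integer.
    phase = np.cumsum(f0_samples / fs)
    pulses = np.zeros(n)
    pulses[np.nonzero(np.diff(np.floor(phase)) > 0)[0] + 1] = 1.0

    noise = np.random.default_rng(seed).standard_normal(n)

    # Per-frame spectral mix: pulse bins below MVF, noise bins at or above it.
    freqs = np.fft.rfftfreq(hop, d=1.0 / fs)
    out = np.empty(n)
    for i in range(len(f0)):
        frame = slice(i * hop, (i + 1) * hop)
        voiced = np.fft.rfft(pulses[frame])
        unvoiced = np.fft.rfft(noise[frame])
        mixed = np.where(freqs < mvf[i], voiced, unvoiced)
        out[frame] = np.fft.irfft(mixed, n=hop)
    return out


if __name__ == "__main__":
    f0 = [100.0] * 20                   # 20 frames of 5 ms at 16 kHz
    mvf = [4000.0] * 10 + [500.0] * 10  # lower MVF in the second half: noisier
    e = mixed_excitation(f0, mvf)
    print(e.shape)  # (1600,)
```

With rectangular 80-sample frames the frequency resolution is only 200 Hz and frame boundaries are not smoothed, so this is meant to show the role of the two parameters rather than to produce good-quality audio; a practical vocoder would use proper filtering or overlap-add and a spectral envelope on top of the excitation.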



Acknowledgments

The research was partly supported by the European Union’s Horizon 2020 research and innovation programme under grant agreement No. 825619 (AI4EU), and by the National Research Development and Innovation Office of Hungary (FK 124584 and PD 127915). The Titan X GPU used was donated by NVIDIA Corporation. We would like to thank the subjects for participating in the listening test.


Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Mandeel, A.R., Al-Radhi, M.S., Csapó, T.G. (2021). Speaker Adaptation with Continuous Vocoder-Based DNN-TTS. In: Karpov, A., Potapova, R. (eds) Speech and Computer. SPECOM 2021. Lecture Notes in Computer Science, vol 12997. Springer, Cham. https://doi.org/10.1007/978-3-030-87802-3_37


DOI: https://doi.org/10.1007/978-3-030-87802-3_37

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-87801-6

Online ISBN: 978-3-030-87802-3

eBook Packages: Computer Science, Computer Science (R0)