Abstract

This paper presents the main ideas behind deductive synthesis of heap-manipulating program and outlines present challenges faced by this approach as well as future opportunities for its applications.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

1 Introduction

Just like a journey of a thousand miles begins with a single step, an implementation of a working operating system, cryptographic library, or a compiler begins with writing a single function. This is not quite so for verified software, whose development starts with three “steps”: a function specification (or, spec), followed by its implementation, and then by a proof that the implementation satisfies the spec. Although recent years have seen an explosion of increasingly diverse and sophisticated verified systems [14, 20, 26, 31, 41, 48, 71, 73, 96], their cost remains high, owing to the effort required to write formal specifications and proofs in addition to writing the code.

The good news is that in many cases the aforementioned three steps can be replaced by just one of them: writing the spec. The rest can be delegated to deductive program synthesis [52]—an emerging approach to automated software development, which takes as input a specifications, and searches for a corresponding program together with its proof.

Past approaches to deductive synthesis typically avoided low-level programs with pointers [43, 69, 83], which are notoriously difficult to reason about, making these approaches inapplicable to automating the development of verified systems code. The few techniques that did handle the heap [47, 72] had significant limitations in terms of expressiveness and/or efficiency. Our prior work on the SuSLik synthesizer [70], has introduced an alternative approach to synthesis of pointer-manipulating programs, whose key enabling component is the use of Separation Logic (SL) [66, 75] as the specification formalism. Due to its proof scalability, Separation Logic enabled modular verification of low-level imperative code and has been implemented in a large number of automated and interactive program verifiers [4, 7, 18, 37, 57, 62, 64, 68]. The main novelty of SuSLik was an observation that the structure of SL specifications can be used to efficiently guide the search for a program and its proof. Since then, our follow-up work has explored automatic discovery of recursive auxiliary functions [34], generating independently checkable proof certificates for synthesized programs [93], and giving the user more control over the synthesis using concise mutability annotations [19].

As an appetizer for SL-powered deductive program synthesis consider the problem of flattening a binary tree data structure into a doubly-linked list. Assume also that the programmer would prefer to perform this transformation in-place, without allocating new memory, which they conjecture is possible because the nodes of the two data structures have the same size (both are records with a payload and two pointers). With SuSLik, the programmer can describe this transformation using the following Hoare-style SL specification:

Here the precondition asserts that initially x points to the root of a tree, whose contents are captured by a set  . The postcondition asserts that after the execution of flatten, the same location x is a head of a doubly-linked list, with the same elements

. The postcondition asserts that after the execution of flatten, the same location x is a head of a doubly-linked list, with the same elements  as the initial tree (

as the initial tree ( denotes the existentially quantified back-pointer of the list). The definitions of the two predicates,

denotes the existentially quantified back-pointer of the list). The definitions of the two predicates,  and

and  , which constrain the symbolic heaps in the pre- and postcondition are standard for SL [75] and will be shown in Sect. 2.

, which constrain the symbolic heaps in the pre- and postcondition are standard for SL [75] and will be shown in Sect. 2.

Given the spec (1), SuSLik takes less than 20 s to generate the program in Fig. 1, written in a core C-like language, as well as a formal proof that the program satisfies the spec. Several things are noteworthy about this program. First, the code indeed does not perform any allocation, and instead accomplishes its goal by switching pointers (in lines 17, 18, 23, and 25); this makes it economical in terms of memory usage as only a low-level program can be: similar code written in a functional language like OCaml would inevitably rely on garbage collection. Second, the main function flatten relies on an auxiliary recursive function helper, which the programmer did not anticipate; in fact the need for this auxiliary—and its specification—is discovered by SuSLik completely automatically. All the programmer has to do to obtain a provably correct implementation of flatten is to write the spec (1) and define the two SL predicates it uses, which are, however, reusable across different programs.

At this point, a critical reader might be wondering whether this technology is mature enough to move past hand-crafted benchmarks and assist them in developing the next CompCert [48] or CertiKOS [31]. For one, the program in Fig. 1 does not seem optimal: a closer look reveals that the role of helper is to concatenate the lists obtained by flattening the two subtrees, resulting in the overall \(O(n^2)\) complexity wrt. the size of the original tree.Footnote 1 Apart from performance of synthesized programs, the reader might have the following concerns:

-

What is the class of programs this approach is fundamentally capable of synthesizing? How picky is it to the exact shape of input specifications?

-

Is the proof search predictably fast across a wide range of problems?

-

Will the synthesized code be concise and easy to understand?

-

Finally, what are the “killer apps” for this technology and in which domains can we hope for its adoption for practical need?

The goal of this manuscript is precisely to illustrate the remaining challenges in SL-based synthesis of heap-manipulating programs and outline some future research directions towards addressing these challenges. In the remainder of this paper we provide the necessary background and a survey of the results to date (Sect. 2); we then zoom in on the promising techniques for improving proof search (Sect. 3); in Sect. 4 we discuss the completeness of synthesis, outlining the work that needs to be done in order to formally characterize the class of programs that can and cannot be generated; in Sect. 5 we talk about possible extensions to the synthesis procedure for improving the quality of synthesized programs; finally, in Sect. 6 we discuss possible applications of SL-based synthesis, such as program repair, data migration, and concurrent programming.

2 State of the Art

2.1 Specifications

SuSLik takes as input a Hoare-style specification, i.e., a pair of a pre- and a post-condition. Consider, for example, a specification for a function swap,Footnote 2 which swaps the values of two pointers:

The precondition  states that the relevant part of the heap contains two memory locations, \(\mathtt{x}\) and \(\mathtt{y}\), which store values a and b, respectively. We also know that and \(\mathtt{x} \ne \mathtt{y}\), because the semantics of separating conjunction

states that the relevant part of the heap contains two memory locations, \(\mathtt{x}\) and \(\mathtt{y}\), which store values a and b, respectively. We also know that and \(\mathtt{x} \ne \mathtt{y}\), because the semantics of separating conjunction  require that the two heaps it connects be disjoint. The postcondition

require that the two heaps it connects be disjoint. The postcondition  demands that after executing the function, the values stored in \(\mathtt{x}\) and \(\mathtt{y}\) be swapped. This specification also implicitly guarantees that swap always terminates and executes without memory errors (e.g., null-pointer dereferencing). Note that \(\mathtt{x}\) and \(\mathtt{y}\) also appear as parameters to swap, and hence are program variables, i.e., can be mentioned in the synthesized program; the payloads a and b, on the other hand, are logical variables, implicitly universally quantified, and must not appear in the program. In the rest of this paper, we distinguish program variables from logical variables by using monotype font for the former.

demands that after executing the function, the values stored in \(\mathtt{x}\) and \(\mathtt{y}\) be swapped. This specification also implicitly guarantees that swap always terminates and executes without memory errors (e.g., null-pointer dereferencing). Note that \(\mathtt{x}\) and \(\mathtt{y}\) also appear as parameters to swap, and hence are program variables, i.e., can be mentioned in the synthesized program; the payloads a and b, on the other hand, are logical variables, implicitly universally quantified, and must not appear in the program. In the rest of this paper, we distinguish program variables from logical variables by using monotype font for the former.

In general, in a specification

, assertions

, assertions  ,

,  both have the form

both have the form  , where the spatial part

, where the spatial part  describes the shape of the heap, while the pure part

describes the shape of the heap, while the pure part  is a plain first-order formula that states the relations between variables (in (2) the pure part in both pre- and postcondition is trivially \(\mathsf {true}\), and hence omitted). For the spatial part, SuSLik employs the standard symbolic heap fragment of Separation Logic [66, 75]. Informally, a symbolic heap is a set of atomic formulas called heaplets joined with separating conjunction

is a plain first-order formula that states the relations between variables (in (2) the pure part in both pre- and postcondition is trivially \(\mathsf {true}\), and hence omitted). For the spatial part, SuSLik employs the standard symbolic heap fragment of Separation Logic [66, 75]. Informally, a symbolic heap is a set of atomic formulas called heaplets joined with separating conjunction  . The simplest kind of heaplet is a points-to assertion

. The simplest kind of heaplet is a points-to assertion  , describing a single memory location with address

, describing a single memory location with address  and payload

and payload  . An empty symbolic heap is represented with

. An empty symbolic heap is represented with  .

.

To capture linked data structures, such as lists and trees, SuSLik specifications use inductive heap predicates, which are standard in Separation Logic. For instance, the \(\mathsf {tree}\) predicate used in (1) is inductively defined as follows:

The predicate is parametrized by the root pointer  and the set of tree elements

and the set of tree elements  . This definition consists of two guarded clauses: the first one describes the empty tree (and applies when the root pointer is null), and the second one describes a non-empty tree. In the second clause, a tree node is represented by a three-element record starting at address

. This definition consists of two guarded clauses: the first one describes the empty tree (and applies when the root pointer is null), and the second one describes a non-empty tree. In the second clause, a tree node is represented by a three-element record starting at address  . Records are represented using a generalized form of the points-to assertion with an offset: for example, the heaplet

. Records are represented using a generalized form of the points-to assertion with an offset: for example, the heaplet  describes a memory location at the address

describes a memory location at the address  . The block assertion

. The block assertion  is an artifact of C-style memory management: it represents a memory block of three elements at address

is an artifact of C-style memory management: it represents a memory block of three elements at address  that has been dynamically allocated by malloc (and hence can be de-allocated by free). The first field of the record stores the payload

that has been dynamically allocated by malloc (and hence can be de-allocated by free). The first field of the record stores the payload  , while the other two store the addresses

, while the other two store the addresses  and

and  of the left and right subtrees, respectively. The two disjoint heaps

of the left and right subtrees, respectively. The two disjoint heaps  and

and  store the two subtrees. The pure part of the second clause indicates that the payload of the whole tree consists of

store the two subtrees. The pure part of the second clause indicates that the payload of the whole tree consists of  and the subtree payloads,

and the subtree payloads,  and

and  .

.

2.2 The Basics of Deductive Synthesis

The formal underpinning of SuSLik is a deductive inference system called Synthetic Separation Logic (SSL). Given a pre-/postcondition pair  ,

,  , deductive synthesis proceeds by constructing a derivation of the SSL synthesis judgment, denoted

, deductive synthesis proceeds by constructing a derivation of the SSL synthesis judgment, denoted  , for some program \(c\). In this derivation, \(c\) is the output program, constructed while searching for the proof of the synthesis goal

, for some program \(c\). In this derivation, \(c\) is the output program, constructed while searching for the proof of the synthesis goal  . Intuitively, the output program \(c\) should satisfy the Hoare triple

. Intuitively, the output program \(c\) should satisfy the Hoare triple  . The derivation is constructed by applying inference rules, a subset of which is presented in Fig. 2, and every inference rule “emits” a program fragment corresponding to this deduction.

. The derivation is constructed by applying inference rules, a subset of which is presented in Fig. 2, and every inference rule “emits” a program fragment corresponding to this deduction.

Figure 3 shows an SSL derivation for swap, using inference rules of Fig. 2. The derivation, read bottom-up, starts with the pre/post pair from (2) as the synthesis goal; each rule application simplifies the goal until both the pre- and the post-heap are empty, and might also prepend a statement (highlighted in grey) to the output program. In the initial goal, the Read rule can be applied to the heaplet  to read the logical variable

to read the logical variable  from location x into a fresh program variable a1; the second application of Read similarly reads from the location y. At this point, the Write rule is applicable to the post-heaplet

from location x into a fresh program variable a1; the second application of Read similarly reads from the location y. At this point, the Write rule is applicable to the post-heaplet  because its right-hand side only mentions program variables and can be directly written into the location \(\mathtt x\); note that this rule equalizes the corresponding heaplets in the pre- and post-condition. After two applications of Write, the pre- and the post-heap become equal and can be simply cancelled out by the Frame rule, leaving \(\mathsf {emp}\) on either side of the goal; the terminal rule Emp then concludes the derivation. Although very simple, this example demonstrates the secret behind SuSLik ’s efficiency: the shape of the specification restricts the set of applicable rules and thereby guides program synthesis.

because its right-hand side only mentions program variables and can be directly written into the location \(\mathtt x\); note that this rule equalizes the corresponding heaplets in the pre- and post-condition. After two applications of Write, the pre- and the post-heap become equal and can be simply cancelled out by the Frame rule, leaving \(\mathsf {emp}\) on either side of the goal; the terminal rule Emp then concludes the derivation. Although very simple, this example demonstrates the secret behind SuSLik ’s efficiency: the shape of the specification restricts the set of applicable rules and thereby guides program synthesis.

2.3 Synthesis with Recursion and Auxiliary Functions

We now return to our introductory example—flattening a binary tree into a doubly-linked list—whose specification (1) we repeat here for convenience:

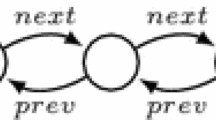

The definition of the  predicate has been shown above (3); the predicate

predicate has been shown above (3); the predicate  describes a doubly-linked list rooted at

describes a doubly-linked list rooted at  with back-pointer

with back-pointer  and payload set

and payload set  :

:

Note that in the spec (1) both the set  and the back-pointer

and the back-pointer  are logical variables, but

are logical variables, but  is implicitly universally quantified (a so-called ghost variable), because it occurs in the precondition, while

is implicitly universally quantified (a so-called ghost variable), because it occurs in the precondition, while  is existentially quantified (a so-called existential variable), because it only occurs in the postcondition. The reader might be wondering why use an existential here instead of a null pointer: as we show below, such weakening is required to obtain the solution in Fig. 1; we discuss the alternative spec and corresponding solution in Sect. 4.

is existentially quantified (a so-called existential variable), because it only occurs in the postcondition. The reader might be wondering why use an existential here instead of a null pointer: as we show below, such weakening is required to obtain the solution in Fig. 1; we discuss the alternative spec and corresponding solution in Sect. 4.

At a high level, the synthesis of flatten proceeds by eagerly making recursive calls on the left and the right sub-trees, l and r, as illustrated in Fig. 4, which leads to the following synthesis goal:

Now the synthesizer must concatenate the two doubly-linked lists, rooted at l and r, together with the parent node x into a single list. Since the spec gives us no access to the last element of either of the two lists, this concatenation requires introducing a recursive auxiliary function to traverse one of the lists to the end. We now demonstrate how SuSLik synthesizes recursive calls and discovers the auxiliary using a single mechanism we call cyclic program synthesis [34], inspired by cyclic proofs in Separation Logic [11, 76]. The main idea behind cyclic proofs is that, in addition to reaching a terminal rule like Emp, a sub-goal can be “closed off” by forming a cycle to an identical companion goal earlier in the derivation; in SSL these cycles give rise to recursive calls.

Figure 5 depicts a cyclic derivation of flatten. For now let us ignore the applications of the Proc rule, which do not modify the synthesis goal; their purpose will become clear shortly. Given the initial goal (1), SuSLik first applies the Open rule, which unfolds the definition of  in the precondition and emits a conditional with one branch per clause of the predicate. The first branch (

in the precondition and emits a conditional with one branch per clause of the predicate. The first branch ( ) is trivially solved by skip, since a null pointer is both an empty tree and an empty list. The second branch is shown in Fig. 5: its precondition contains two predicate instances

) is trivially solved by skip, since a null pointer is both an empty tree and an empty list. The second branch is shown in Fig. 5: its precondition contains two predicate instances  and

and  for the two sub-trees of \(\mathtt x\).

for the two sub-trees of \(\mathtt x\).

Now SuSLik detects that either of those instances can be unified with the precondition  of the top-level goal, so it fires the Call rule, which uses cyclic reasoning to synthesize recursive calls. More specifically, Call has two premises: the first one synthesizes a recursive call and the second one the rest of the program after the call. The spec of the first premise must be identical to some earlier goal, so that it can be closed off by forming a cycle; in our example, the back-link

of the top-level goal, so it fires the Call rule, which uses cyclic reasoning to synthesize recursive calls. More specifically, Call has two premises: the first one synthesizes a recursive call and the second one the rest of the program after the call. The spec of the first premise must be identical to some earlier goal, so that it can be closed off by forming a cycle; in our example, the back-link  connects the first premise back to the top-level goal. Once a companion goal is identified, SuSLik inserts an application of Proc right above it: its purpose is to delineate procedure boundaries, or, in other words, give a name to the piece of code that the Call rule is trying to call. To ensure that recursion is terminating, we must prove that

connects the first premise back to the top-level goal. Once a companion goal is identified, SuSLik inserts an application of Proc right above it: its purpose is to delineate procedure boundaries, or, in other words, give a name to the piece of code that the Call rule is trying to call. To ensure that recursion is terminating, we must prove that  in the precondition of the Call ’s premise is strictly smaller than

in the precondition of the Call ’s premise is strictly smaller than  in the precondition of the companion (see [34] for more details about our termination checking mechanism).

in the precondition of the companion (see [34] for more details about our termination checking mechanism).

After the second application of Call (to  ), SuSLik arrives at the goal (5), with two lists in the precondition (marked

), SuSLik arrives at the goal (5), with two lists in the precondition (marked

in Fig. 5). Ignoring again the application of Proc, which will be inserted later, SuSLik proceeds by unfolding the list

in Fig. 5). Ignoring again the application of Proc, which will be inserted later, SuSLik proceeds by unfolding the list  via Open, eventually arriving at the goal

via Open, eventually arriving at the goal

: this goal again has two lists in the precondition but one of them is now smaller (it is the tail of

: this goal again has two lists in the precondition but one of them is now smaller (it is the tail of  ). At this point Call detects that (a sub-heap of) goal

). At this point Call detects that (a sub-heap of) goal

can be unified,Footnote 3 with goal

can be unified,Footnote 3 with goal

thus forming the cycle

thus forming the cycle

, which this time links to an internal goal in the derivation instead of the top-level goal. As before SuSLik inserts an application of the Proc rule just above the companion goal

, which this time links to an internal goal in the derivation instead of the top-level goal. As before SuSLik inserts an application of the Proc rule just above the companion goal

, thereby abducing an auxiliary procedure with a fresh name.

, thereby abducing an auxiliary procedure with a fresh name.

2.4 Implementation and Empirical Results

The most up-to-date implementation of SuSLik is publicly available at:

Table 1 collects the results of running SuSLik on benchmarks from our prior work [19, 34, 70, 93] as well as seven new benchmarks, which we added to illustrate various challenges discussed in subsequent sections.Footnote 4 Most existing benchmarks had been adapted from the literature on verification and synthesis [24, 47, 50, 72]. In addition to standard textbook data structures, our benchmarks include operations on two less common data structures, which to the best of our knowledge cannot be handled by other synthesizers. A rose tree [51] is a variable-arity tree, where child nodes are stored in a linked list; it is described in SL by two mutually recursive predicates (

for the tree and

for the tree and

for the list of children), and our synthesized operations on rose trees are also mutually recursive. A packed tree is a binary tree serialized into an array; it is interesting because operations on packed trees use non-trivial pointer arithmetic (we discuss them in Sect. 6).

for the list of children), and our synthesized operations on rose trees are also mutually recursive. A packed tree is a binary tree serialized into an array; it is interesting because operations on packed trees use non-trivial pointer arithmetic (we discuss them in Sect. 6).

Apart from the size of each program (in statements), we also report the ratio of code size to spec size (both in AST nodes) as a measure of synthesis utility. For the majority of the benchmarks the generated code is larger than the specification, sometimes significantly (up to 12x); the only exceptions are benchmarks with very convoluted specs, such as BST rotations (benchmarks 43 and 44), or extremely simple programs, such swap from Fig. 3 (benchmark 1) and prepending an element to a sorted list (benchmark 19).

A number of benchmarks generate more than one procedure: those programs require recursive auxiliaries [34], such as our running example flatten from Fig. 1 (benchmark 40). It is worth mentioning that benchmarks 37 through 41 encode different versions of flattening a binary tree into a singly or doubly-linked list: 37 and 38 are simplified versions that do not require discovering auxiliaries because they contain additional hints from the user (a library function for appending lists in 37 and an inductive specification for flatten with a list accumulator in 38); 39 is similar to 40 but returns a singly-linked list (and hence requires allocation). Finally 41 is a version of 40 that uses

instead of

instead of

as the back-pointer of the output list; this precludes SuSLik from generating an auxiliary for appending two lists, and instead it discovers a slightly more complex, but linear-time solution, which we discuss in Sect. 4.

as the back-pointer of the output list; this precludes SuSLik from generating an auxiliary for appending two lists, and instead it discovers a slightly more complex, but linear-time solution, which we discuss in Sect. 4.

The missing synthesis times for some benchmarks indicate that they could not be synthesized automatically after 30 min, but were possible to solve in an “interactive” mode, where the search has been given hints on how to proceed in the case of multiple choices. We elaborate on the possibility of generating those programs automatically in subsequent sections. Apart from regular SuSLik time we also report time for two variations discussed in Sect. 3.

3 Proof Search

Similarly to existing deductive program synthesizers [43], SuSLik adopts best-first And/Or search [54] to search for a program derivation. The search space is represented as a tree with two types of nodes. An Or-node corresponds to a synthesis goal, whose children are alternative derivations, any of which is sufficient to solve the goal. An And-node corresponds to a rule application, whose children are premises, all of which need to be solved in order to build a derivation. Each goal has a cost, which is meant to estimate how difficult it is to solve. The search works by maintaining a worklist of Or-nodes that are yet to be explored. In each iteration, the node with the least cost is dequeued and expanded by applying all rules enabled according to a proof strategy; the node’s children are then added back to the worklist.

The proof strategy and the cost function are crucial to the performance of the proof search. In current SuSLik implementation both are ad-hoc and brittle; in the rest of the section we outline possible improvements to their design.

3.1 Pruning via Proof Strategies

A proof strategy is a function that takes in a synthesis goal and its ancestors in the search tree, and returns a list of rules enabled to expand that goal. Without strategies, the branching factor of the search would be impractically large. SuSLik ’s strategies are based on the observation that some orders of rule applications are redundant, and hence can be eliminated from consideration without loss of completeness. Identifying redundant orders is non-trivial and is currently done informally, increasing the risk of introducing incomplete strategies.

For example, SuSLik ’s proof strategy precludes applying Call if Close (a rule that unfolds a predicate in the postcondition) has been applied earlier in the derivation. The reasoning is that Call only operates on the precondition, while Close only operates on the postcondition, hence the two rule applications must be independent, and can always be reordered so that Call is applied first. But it gets more complicated once we let Call abduce auxiliaries: now applying Call after Close could be useful to give it access to more companion goals, whose postconditions differ from that of the top-level goal. Consider for example copying a rose tree with the following spec:

Copying a rose tree seems to require two mutually-recursive procedures: the main one (6) that copies an  and an auxiliary one that copies the list of its

and an auxiliary one that copies the list of its  , and hence has

, and hence has  instead of

instead of  in its postcondition. To our surprise, however, our proof strategy does not preclude the derivation of rtcopy (see benchmark 52 in Table 1): in this derivation, the auxiliary returns two

in its postcondition. To our surprise, however, our proof strategy does not preclude the derivation of rtcopy (see benchmark 52 in Table 1): in this derivation, the auxiliary returns two  s, which are then unfolded after the call to extract the relevant

s, which are then unfolded after the call to extract the relevant  .

.

Future Directions. To develop more principled yet efficient strategies, we need to turn to the proof theory community, which has accumulated a rich body of work on efficient proof search. One technique of particular interest—focusing [53]—defines a canonical representation of proofs in linear logic [29] (more precisely, a canonical ordering on the application of proof rules, which can be enforced during the search by tracing local properties). Existing program synthesis work [27, 79] has leveraged ideas from focusing, but only in the setting of type inhabitation for pure lambda calculi. SuSLik takes advantage of some of these ideas, too: it designates some rules, such as Read and logical normalization rules, to be invertible; these rules can be applied eagerly and need not be backtracked. Beyond focusing, we might explore the applicability of more advanced canonical representations of programs and proofs [1, 33, 79]. We believe that these techniques will help us formalize and leverage inherent SSL symmetries, such as that two programs operating on disjoint parts of the heap can be executed in any order.

3.2 Prioritization via a Cost Function

When selecting the next goal to expand, SuSLik ’s best-first search relies on a heuristic cost function of the form (with \(p, w > 1\)):

In other words, a cost of a synthesis goal is a (weighted) total cost of all heaplets in its pre- and postcondition. The intuition is that the synthesizer needs to eliminate all these heaplets in order to apply the terminal Emp rule, so each heaplet contributes to the goal being “harder to solve”. Predicates are more expensive than other heaplets, because they can be unfolded and produce more heaplets. In addition, for each predicate instance  SuSLik keeps track of the number of times it has been unfolded (

SuSLik keeps track of the number of times it has been unfolded ( ) or has gone through a call (

) or has gone through a call ( ); factoring this into the cost prevents the search from getting stuck in an infinite chain of unfolding or calls. Finally, it can be useful to give a higher weight to the heaplets in the precondition, because many rules that create expensive search branches (most notably Call) operate on the precondition.

); factoring this into the cost prevents the search from getting stuck in an infinite chain of unfolding or calls. Finally, it can be useful to give a higher weight to the heaplets in the precondition, because many rules that create expensive search branches (most notably Call) operate on the precondition.

Our implementation currently uses \(p = 3, w = 2\), which is a result of manual tuning. Column TimeSC in Table 1 shows how synthesis times change if we set \(p = 1\). As you can see, SuSLik ’s performance is quite sensitive even to this small change: four benchmarks, which originally took under 30 s, now time out after 30 minutes, while benchmark 24, on the contrary, is solved five times faster. These results suggest that different synthesis tasks benefit from different search parameters, and that we might need a mechanism to tune SuSLik ’s search strategy for a given synthesis task.

In addition, because the cost heuristic is not efficient enough at guiding the search, we introduce hard bounds on the number of unfoldings and calls  and

and  for a predicate instance. Column TimeNB in Table 1 shows the results of running SuSLik without these bounds: as you can see, 19 benchmarks time out (compared to only two in the original setup). The requirement to guess sufficient bounds for each benchmark hampers the usability of SuSLik, hence in the future we would like to replace them with a better cost function.

for a predicate instance. Column TimeNB in Table 1 shows the results of running SuSLik without these bounds: as you can see, 19 benchmarks time out (compared to only two in the original setup). The requirement to guess sufficient bounds for each benchmark hampers the usability of SuSLik, hence in the future we would like to replace them with a better cost function.

Future Directions. To guide the search in a more intelligent and flexible way, we turn to extensive recent work on using learned models to guide proof search [8, 28, 49, 78, 95] and program synthesis [5, 15, 39, 46, 55, 82]. Guiding deductive synthesis would most likely require a non-trivial combination of these two lines of work.

In the area of proof search, existing techniques are used to select the next strategy in a proof assistant script [59, 60, 78, 95], or select a subset of clauses to use in a first-order resolutions proof [9, 49]. Although these techniques are not directly applicable to our context, we can likely borrow some high-level insights, such as two-phased search [49], which applies a slow neural heuristic to make important decisions in early stages of search (e.g., which predicate instances to unfold), and then less accurate but much faster hand-coded heuristics take over. Among the many techniques for guiding program synthesis, neural-guided deductive search (NGDS) [39] might be the natural place to start, since it shows how to condition the next synthesis step on the current synthesis sub-goal.

At the same time we also expect the limited size of the available dataset (i.e., the benchmarks from Table 1) would hamper the application of deep learning to SuSLik. An alternative approach is to encode feature extractors [58] and apply machine learning algorithms to the result of such feature extractors. Another approach is to learn a coarse-grained model from available data and then adjust it during search, based on the feedback from incomplete derivations, as in [6, 15, 82].

4 Completeness

Soundness and completeness are desirable properties of synthesis algorithms. In our case, it is natural to formalize these properties relative to an underlying verification logic, which defines Hoare triples  , with the total correctness interpretation “starting from a state satisfying

, with the total correctness interpretation “starting from a state satisfying  , program c will execute without memory errors and terminate in a state satisfying

, program c will execute without memory errors and terminate in a state satisfying  ”. This logic can be defined in the style of Smallfoot [7], using a combination of symbolic execution rules and logical rules, with the addition of cyclic proofs to handle recursion [76].

”. This logic can be defined in the style of Smallfoot [7], using a combination of symbolic execution rules and logical rules, with the addition of cyclic proofs to handle recursion [76].

Relative soundness means that any solution SuSLik finds can be verified:  . Relative completeness means that whenever there exists a verifiable program, SuSLik can find one:

. Relative completeness means that whenever there exists a verifiable program, SuSLik can find one:  . Proving relative soundness is rather straightforward, because SSL rules are essentially more restrictive versions of verification rules, hence an SSL derivation can be rewritten by translating every

. Proving relative soundness is rather straightforward, because SSL rules are essentially more restrictive versions of verification rules, hence an SSL derivation can be rewritten by translating every  into

into  .Footnote 5 Completeness on the other hand is quite tricky, exactly because SSL rules impose more restrictions on the pre- and postconditions, in order to avoid blind enumeration of programs and instead guide synthesis by the spec. In the rest of this section we look into two major sources of relative incompleteness of SSL: recursive auxiliaries and pure reasoning.

.Footnote 5 Completeness on the other hand is quite tricky, exactly because SSL rules impose more restrictions on the pre- and postconditions, in order to avoid blind enumeration of programs and instead guide synthesis by the spec. In the rest of this section we look into two major sources of relative incompleteness of SSL: recursive auxiliaries and pure reasoning.

4.1 Recursive Auxiliaries

A common assumption and source of incompleteness in recursive program synthesis [43, 67, 69] is that (1) synthesis is performed one function f at a time: if auxiliaries are required, their specifications are supplied explicitly; and (2) the specification \(\varPhi \) of f is inductive: one can prove that \(\varPhi \) holds of f’s body assuming it holds of each recursive call. This restriction hampers the usability of synthesizers, because the user must guess all required auxiliaries and possibly generalize \(\varPhi \) to make it inductive, which in most cases requires knowing the implementation of f. As we have shown in Sect. 2, SuSLik mitigates these limitations to some extent, as it is able to discover auxiliary functions, such as helper in Fig. 1, automatically. To make the search tractable, however, cyclic synthesis restricts the space of auxiliary specifications considered by SuSLik to synthesis goals observed earlier in the derivation. Although this restriction is easy to state, we still do not have a formal characterization (or even a firm intuitive understanding) of the class of auxiliaries that SSL fundamentally can and cannot derive. Below we illustrate the intricacies on a series of examples.

Intersection of lists with unique elements. This implementation cannot be synthesized from (7), but a slight modification of it can, as explained in the text.

Generalizing Pure Specs. One reason SuSLik might fail to abduce an auxiliary is that the pure part of the companion’s goal might be too specific for the recursive call. Let us illustrate this phenomenon using the list intersection problem (benchmark 16 in Table 1) with the following specification, where  denotes a singly-linked list with unique elements:

denotes a singly-linked list with unique elements:

Given this specification, we expected SuSLik to generate the program shown in Fig. 6. To compute the intersection of two input lists rooted in x and y, this program first computes the intersection of y and the tail of x (line 9). The auxiliary insert then traverses y to check if it contains v (the head of x), and if so, inserts it into the intermediate result z (line 23), and otherwise, de-allocates the node x (line 15). This program, however, cannot be derived by SSL; to see why let us take a closer look at the synthesis goal after line 9, which serves as the spec for insert:

The issue here is that the pure spec is too specific: the precondition  and the postcondition

and the postcondition  define the behavior of this function in terms of the elements of input lists x and y, but the recursive call in line 23 replaces y with its tail n so these specifications do not hold anymore. The solution is to generalize the pure part of spec (8), so that it does not refer to \(S_x\):

define the behavior of this function in terms of the elements of input lists x and y, but the recursive call in line 23 replaces y with its tail n so these specifications do not hold anymore. The solution is to generalize the pure part of spec (8), so that it does not refer to \(S_x\):

Alas, such a transformation of the pure spec is beyond SuSLik ’s capabilities.

To our surprise, SuSLik was nevertheless able to generate a solution to this problem by finding an alternative implementation for insert, shown on the right. This implementation appends v to z instead of prepending it; more specifically, insert starts by traversing z, and once it reaches the base case, it calls another auxiliary, intersectOne (omitted for brevity), which traverses y and returns a list whose elements are  (i.e., a list with at most one element), which is then appended to the intersection. At a first glance it is unclear how this superfluous traversal of z can possibly help with generalizing the spec (8); the key to this mystery lies in the recursive call in line 22: note that as the second parameter, instead of the input list x, it actually uses z after replacing its head element with v! This substitution makes the overly restrictive spec of (8) actually hold.

(i.e., a list with at most one element), which is then appended to the intersection. At a first glance it is unclear how this superfluous traversal of z can possibly help with generalizing the spec (8); the key to this mystery lies in the recursive call in line 22: note that as the second parameter, instead of the input list x, it actually uses z after replacing its head element with v! This substitution makes the overly restrictive spec of (8) actually hold.

Of course this implementation is overly convoluted and inefficient, so in the future we plan equip SuSLik with the capability to generalize pure specs. To this end, we plan to combine deductive synthesis with invariant inference techniques via bi-abduction [86]. For instance, whenever the Call rule identifies a companion goal, we can replace its pure pre- and post-condition \(\phi \) and \(\psi \) with unknown predicates \(U_{\phi }\) and \(U_{\psi }\). During synthesis, we would maintain a set of Constrained Horn Clauses over these unknown predicates (starting with: \(\phi \Rightarrow U_{\phi }\) and \(U_{\psi } \Rightarrow \psi \)); these constraints can be solved incrementally, like in our prior work [69], pruning the current derivation whenever the constraints have no solution. If synthesis succeeds, the assignment to \(U_{\phi }\) and \(U_{\psi }\) corresponds to the inductive generalization of the original auxiliary spec. Since only the pure part of the spec is generalized, the spatial part can still be used to guide synthesis.

Accumulator Parameters. It is common practice to introduce an auxiliary recursive function to thread through additional data in the form of “accumulator” inputs or outputs. Cyclic program synthesis has trouble conjuring up arbitrary accumulators, since it constructs auxiliary specifications from the original specification via unfolding and making recursive calls.

Consider linked list reversal (23 in Table 1): SuSLik generates an inefficient, quadratic version of this program, which reverses the tail of the list and then appends its head to the result (hence discovering “append element” as the auxiliary). The canonical linear-time version of reversal requires an auxiliary with two list arguments—the already reversed portion and the portion yet to be reversed—and hence is outside of SuSLik ’s search space: cyclic synthesis cannot encounter a precondition with two lists, as it starts with a single list predicate in the precondition, and neither unfolding nor making a call can duplicate it.

There are examples, however, where SuSLik surprized us by inventing the necessary accumulator parameters. Consider again our running example, flattening a tree into a doubly-linked list. Recall that given the spec (1), SuSLik synthesizes an inefficient implementation with quadratic complexity. A canonical linear-time solution requires an auxiliary that takes as input a tree and a list accumulator, and simply prepends every traversed tree element to this list; because of the accumulator parameter, discovering this auxiliary seems to be outside of scope of cyclic synthesis. To our surprise, SuSLik is actually able to synthesize a linear-time version of flatten, shown in Fig. 7 (and encoded as benchmark 41 in Table 1), given the following specification:

Compared with (1), the existential back-pointer  of the output list is replaced with the null-pointer

of the output list is replaced with the null-pointer  , precluding SuSLik from traversing the output of the recursive call (cf. Sect. 2), which in this case comes in handy, since it enforces that every tree element is traversed only once.

, precluding SuSLik from traversing the output of the recursive call (cf. Sect. 2), which in this case comes in handy, since it enforces that every tree element is traversed only once.

The new solution starts the same way as the old one, by flattening the left sub-tree l, which leads to the following synthesis goal after line 6:

As you can see, the precondition now contains a tree and a list! Since it cannot recurse on the list  , the synthesizer instead proceeds to unfold the tree

, the synthesizer instead proceeds to unfold the tree  and then use (11) as a companion for two recursive calls on r’s sub-trees, turning (11) into a specification for helper in Fig. 7.

and then use (11) as a companion for two recursive calls on r’s sub-trees, turning (11) into a specification for helper in Fig. 7.

4.2 Pure Reasoning

To enable synthesis of the wide range of programs demonstrated in Sect. 2, SuSLik must support a sufficiently rich logic of pure formulas. Our implementation currently supports linear integer arithmetic and sets, but the general idea is to make SuSLik parametric wrt. the pure logic (as long as it can be translated into an SMT-decidable theory), and outsource all pure reasoning to an SMT solver.

In the context of synthesis, however, outsourcing pure reasoning is trickier than it might seem (or at least trickier than in the context of verification). Consider the following seemingly trivial goal:

This goal can be solved by incrementing the value stored in x, i.e., by the program let a1 = *x; *x = a1 + 1. Verifying this program is completely straightforward: a typical SL verifier would use symbolic execution to obtain the final symbolic state

, reducing verification to a trivial SMT query \(\exists a. a + 10 + 1 \ne a + 11\). Synthesizing this program, on the other hand, requires guessing the program expression a1 + 1, which does not occur anywhere in the specification.

, reducing verification to a trivial SMT query \(\exists a. a + 10 + 1 \ne a + 11\). Synthesizing this program, on the other hand, requires guessing the program expression a1 + 1, which does not occur anywhere in the specification.

To avoid blind enumeration of program expressions, SuSLik attempts to reduce the goal (12) to a syntax-guided synthesis (SyGuS) query [2]:

Queries like this can be outsourced to numerous existing SyGuS solvers [3, 32, 46, 77]; SuSLik uses CVC4 [74] for this purpose. Because SyGuS queries are expensive, the challenge is to design SSL rules to issue these queries sparingly.

SSL derivation for goal (12).

Figure 8 shows how two pure reasoning rules, \(\exists \) -Intro and Solve- \(\exists \), work together to solve the goal (12). \(\exists \) -Intro is triggered by the postcondition heaplet  , whose right-hand side is a ghost expression, which blocks the application of Write. \(\exists \) -Intro replaces the ghost expression with a program-level existential variable

, whose right-hand side is a ghost expression, which blocks the application of Write. \(\exists \) -Intro replaces the ghost expression with a program-level existential variable  (i.e., an existential which can only be instantiated with program expressions). Now Solve- \(\exists \) takes over: this rule constructs a SyGuS query using all existentials in the current goal as unknown terms and the pure pre- and post-condition as the SyGuS specification. In this case, the SyGuS query succeeds, replacing the existential

(i.e., an existential which can only be instantiated with program expressions). Now Solve- \(\exists \) takes over: this rule constructs a SyGuS query using all existentials in the current goal as unknown terms and the pure pre- and post-condition as the SyGuS specification. In this case, the SyGuS query succeeds, replacing the existential  with the program term \(\mathtt{a1} + 1\). From here on, the regular Write rule finishes the job.

with the program term \(\mathtt{a1} + 1\). From here on, the regular Write rule finishes the job.

Note that although the goal (12) is artificially simplified, it is extracted from a real problem: benchmark 32 in Table 1, length of a list of lists. In fact the versions of SuSLik reported in our previous work were incapable of solving this benchmark because they were lacking the \(\exists \) -Intro rule, which we only introduced recently. Although the current combination of pure reasoning rules works well for all our benchmarks, it is still incomplete (even modulo the completeness of the pure synthesizer), because, for efficiency reasons, Solve- \(\exists \) only returns a single solution to the SyGuS problem, even if the pure specification allows for many. This might be insufficient when Solve- \(\exists \) is called before the complete pure postcondition is known (for example, to synthesize actual arguments for a call). Developing an approach to outsourcing pure reasoning that is both complete and efficient is an open challenge for future work.

5 Quality of Synthesized Programs

Should we hope that the output of deductive synthesis will be directly integrated into high-assurance software, we need to make sure that the code it generates is not only correct, but also efficient, concise, readable, and maintainable. The current implementation of SuSLik does not take any of these considerations into account during synthesis; in this section we discuss two of these challenges, and outline some directions towards addressing them.

5.1 Performance

We have already mentioned examples of SuSLik solutions with sub-optimal asymptotic complexity in Sect. 4: for example, SuSLik generates quadratic programs for linked list reversal and tree flattening instead of optimal linear-time versions. Although a linear-time solution to tree flattening from Fig. 7 is actually within SuSLik ’s search space (even with the more general spec (1)), SuSLik opts for the sub-optimal one simply because it has no ability to reason about performance and hence has no reason to prefer one over the other.

To enable SuSLik to pick the more efficient of the two implementations, we can integrate SSL with a resource logic, such as [56], following the recipe from our prior work on resource-guided synthesis [44]. One option is to annotate each points-to heaplet  with non-negative potential

with non-negative potential  , which can be used to pay for execution of statements, according to a user-defined cost model. Predicate definitions can describe how potential is allocated inside the data structure; for example, we can define a tree with p units of potential per node as follows:

, which can be used to pay for execution of statements, according to a user-defined cost model. Predicate definitions can describe how potential is allocated inside the data structure; for example, we can define a tree with p units of potential per node as follows:

We can now annotate the specification (1) with potentials as follows:

If we define the cost of a procedure call to be 1, and the cost of other statements to be 0, this specification guarantees that flatten only makes a number of recursive calls that is linear in the size of the tree (namely, two calls per tree element). With this specification, the inefficient solution in Fig. 1 does not verify: since helper traverses the list r, it must assign some positive potential to every element of this list in order to pay for the call in line 24, but the specification (13) assigns no potential to the output list. On the other hand, the efficient solution in Fig. 7 verifies: after the recursive call to flatten in line 6 we obtain  ; helper verifies against this specification since it only traverses the tree r and hence can use the two units of potential stored in its root to pay for the two calls in lines 21 and 24. In fact, the user need not guess the precise amount of potential

; helper verifies against this specification since it only traverses the tree r and hence can use the two units of potential stored in its root to pay for the two calls in lines 21 and 24. In fact, the user need not guess the precise amount of potential  in the spec (13): any constant

in the spec (13): any constant  would work to reject the quadratic solution and admit the linear one.

would work to reject the quadratic solution and admit the linear one.

5.2 Readability

Although readability is hard to quantify, we have noticed several patterns in SuSLik-generated code that are obviously unnatural to a human programmer, and hence need to be addressed. Perhaps the most interesting problem arises due to inference of recursive auxiliaries: because SuSLik has no notion of abstraction boundaries, the allocation of work between the different procedures is often sub-optimal. One example is benchmark 39 in Table 1, which flattens a binary tree into a singly-linked list. This example is discussed in detail in our prior work [34]; the solution is similar to flatten from Fig. 1, except that this transformation cannot be performed in-place: instead, the original tree nodes have to be deallocated, and new list nodes have to be allocated. Importantly, in SuSLik ’s solution, tree nodes are deallocated inside the helper function, whose main purpose is to append two lists. A better design would be to perform deallocation in the main function, so that helper has no knowledge of tree nodes whatsoever. To address this issue in the future we might consider different quality metrics when abducing specs for auxiliaries, such as encouraging all heaplets generated by unfolding the same predicate to be processed by the same procedure.

6 Applications

6.1 Program Repair

In our statement of the synthesis problem, complete programs are generated from scratch from Hoare-style specifications. But what if the program is already written previously but is buggy—would it be possible to automatically find a fix for it if we know what its specification is? This line of research, employing deductive synthesis for automated program repair [30], known as deductive program repair, has been explored in the past for functional programs [42] and simple memory safety properties [90], and only recently has been extended to heap-manipulating programs using the approach pioneered by SuSLik [63].

The SL-based deductive repair relies on existing automated deductive verifiers [17] to identify a buggy code fragment (which breaks the verification), followed by the discovery of the correct specification, which is used for the subsequent synthesis of the patch. The main shortcoming of the existing SL-based repair tools is the need to provide the top-level specs for the procedures in order to enable their verification (and potential bug discovery) in the first place. As a way to improve the utility of those tools, a promising direction is to employ existing static analyzers, such as Infer [12], to derive those specifications by abducing them from the usages of the corresponding functions [13].

6.2 Data Migration and Serialization

The pay-off of deductive synthesis is especially high for programs like tree flattening, which change the internal representation of a data structure without changing its payload; these programs usually have a simple specification, while their implementations can get much more intricate. One example where such programs can be useful is migration of persistent data: thanks to recent advancements in non-volatile memory (NVM) [40, 45, 84], large amounts of data are now persistently stored in memory, in arbitrary programmer-defined data structures. If the programmer decides to change the data structure, data has to be migrated between the old and the new representations, and writing those migration functions by hand can be tedious. In addition, reallocating large data structures is often prohibitively expensive, so the migration needs to be performed in-place, without reallocation. As we have demonstrated in our running example, this is something that can be easily specified and synthesized in SuSLik.

Another real-world application of this kind of programs is data serialization and de-serialization, where data is transformed back and forth between a standard pointer-based representation and an array so that it can be written to disk or sent over the network [16, 91]. For example, Fig. 9 shows a pointer-based full binary tree and its serialized (or packed) representation, where the nodes are laid out sequentially in pre-order [92]. The right-hand-side of the figure shows an SL predicate \(\mathsf {ptree}\) that describes packed trees: every node x starts with a \( tag \) that indicates whether it is a leaf; if x is not a leaf, its left child starts at the address \(x + 2\) and its right child at \(x + 2 \cdot (1 + n_l)\), where \(n_l\) is the size of the left child, which is typically unknown at the level of the program.

Imagine a programmer wants to synthesize functions that translate between these two representations, i.e., pack and unpack the tree. The most natural specification for unpack would be:

This specification, however, cannot be implemented in SSL: when x is an internal node, we do not know the address of its right subtree, so we have nothing to pass into the second recursive call. Instead unpack must traverse the packed tree and discover the address of the right subtree by moving past the end of the left subtree; this can be implemented by returning the address past the end of the \(\mathsf {ptree}\) together with the root of the newly built \(\mathsf {tree}\), as a record:

With this specification, SuSLik is able to synthesize unpack in 20 s (benchmark 54 in Table 1); as for pack (benchmark 53), it is within the search space (which we confirmed in interactive mode) but automatic search currently times out after 30 minutes. In the future, it would be great if SuSLik could automatically discover an auxiliary with specification (15), given only (14) as inputs; this is similar to the problem of discovering accumulator parameters, which we discussed in Sect. 4, and is outside of capabilities of cyclic synthesis at the moment.

6.3 Fine-Grained Concurrency

Finally, we envision that deductive logic-based synthesis will make it possible to tackle the challenge of synthesizing provably correct concurrent libraries. The most efficient shared-memory concurrent programs implement custom synchronization patterns via fine-grained primitives, such as compare-and-set (CAS). Due to sophisticated interference scenarios between threads, reasoning about such programs is particularly challenging and error-prone, and is the reason for the existence of many extensions of Concurrent Separation Logic (CSL) [10, 65] for verification of fine-grained concurrency [22, 23, 36, 38, 61, 85, 87,88,89].

For instance, Fine-Grained Concurrent Separation Logic (FCSL) [61, 80, 81], takes a very specific approach to fine-grained concurrency verification, following the tradition of logics such as LRG [25] and CAP [22] and building on the idea of splitting the specification of a concurrent library to a resource protocol and Hoare-style pre/postconditions. State-of-the art automated tools for fine-grained concurrency verification require one to describe both the protocol and Hoare-style pre/postconditions for the methods to be verified [21, 94]. We believe, it should be possible to take those two components and instead synthesize the concurrent method implementations. The resource protocol will provide an extended set of language primitives to compose programs from. Those data structure-specific primitives can be easily specified in FCSL and contribute derived inference rules describing when these primitives can be used safely.

Notes

- 1.

In Sect. 4 we show what it takes to derive an alternative, linear-time solution.

- 2.

Our language has no return statement, hence all functions have return type void, which is omitted from the spec; return values are emulated by writing to the heap.

- 3.

This is where we rely on the existential back-pointer in (1): if we replace \(y_l\) with 0, then

and

and  would not unify.

would not unify. - 4.

The code and benchmarks accompanying this paper are available online [35].

- 5.

In our recent work we have developed an automatic translation from SSL derivations into three Coq-based verification logics [93].

References

Acclavio, M., Straßburger, L.: From syntactic proofs to combinatorial proofs. In: Galmiche, D., Schulz, S., Sebastiani, R. (eds.) IJCAR 2018. LNCS (LNAI), vol. 10900, pp. 481–497. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-94205-6_32

Alur, R., et al.: Syntax-guided synthesis. In: FMCAD, pp. 1–8. IEEE (2013)

Alur, R., Radhakrishna, A., Udupa, A.: Scaling enumerative program synthesis via divide and conquer. In: Legay, A., Margaria, T. (eds.) TACAS 2017. LNCS, vol. 10205, pp. 319–336. Springer, Heidelberg (2017). https://doi.org/10.1007/978-3-662-54577-5_18

Appel, A.W., et al.: Program Logics for Certified Compilers. Cambridge University Press (2014)

Balog, M., Gaunt, A.L., Brockschmidt, M., Nowozin, S., Tarlow, D.: Deepcoder: learning to write programs. arXiv preprint arXiv:1611.01989 (2016)

Barke, S., Peleg, H., Polikarpova, N.: Just-in-time learning for bottom-up enumerative synthesis. Proc. ACM Program. Lang. 4(OOPSLA), 227:1–227:29 (2020)

Berdine, J., Calcagno, C., O’Hearn, P.W.: Symbolic execution with separation logic. In: Yi, K. (ed.) APLAS 2005. LNCS, vol. 3780, pp. 52–68. Springer, Heidelberg (2005). https://doi.org/10.1007/11575467_5

Blaauwbroek, L., Urban, J., Geuvers, H.: Tactic learning and proving for the coq proof assistant. In: LPAR. EPiC Series in Computing, vol. 73, pp. 138–150. EasyChair (2020)

Blanchette, J.C., Greenaway, D., Kaliszyk, C., Kühlwein, D., Urban, J.: A learning-based fact selector for Isabelle/HOL. J. Autom. Reason. 57(3), 219–244 (2016)

Brookes, S., O’Hearn, P.W.: Concurrent separation logic. ACM SIGLOG News 3(3), 47–65 (2016)

Brotherston, J., Bornat, R., Calcagno, C.: Cyclic proofs of program termination in separation logic. In: POPL, pp. 101–112. ACM (2008)

Calcagno, C., Distefano, D.: Infer: an automatic program verifier for memory safety of C programs. In: Bobaru, M., Havelund, K., Holzmann, G.J., Joshi, R. (eds.) NFM 2011. LNCS, vol. 6617, pp. 459–465. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-20398-5_33

Calcagno, C., Distefano, D., O’Hearn, P.W., Yang, H.: Compositional shape analysis by means of bi-abduction. J. ACM 58(6), 26:1–26:66 (2011)

Chajed, T., Tassarotti, J., Kaashoek, M.F., Zeldovich, N.: Verifying concurrent, crash-safe systems with perennial. In: SOSP, pp. 243–258. ACM (2019)

Chen, Y., Wang, C., Bastani, O., Dillig, I., Feng, Yu.: Program synthesis using deduction-guided reinforcement learning. In: Lahiri, S.K., Wang, C. (eds.) CAV 2020. LNCS, vol. 12225, pp. 587–610. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-53291-8_30

Chilimbi, T.M., Hill, M.D., Larus, J.R.: Cache-conscious structure layout. In: PLDI, pp. 1–12. ACM (1999)

Chin, W.-N., David, C., Nguyen, H.H., Qin, S.: Automated verification of shape, size and bag properties via user-defined predicates in separation logic. Sci. Comput. Program. 77(9), 1006–1036 (2012)

Chlipala, A.: Mostly-automated verification of low-level programs in computational separation logic. In: PLDI, pp. 234–245. ACM (2011)

Costea, A., Zhu, A., Polikarpova, N., Sergey, I.: Concise read-only specifications for better synthesis of programs with pointers. In: ESOP 2020. LNCS, vol. 12075, pp. 141–168. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-44914-8_6

Delignat-Lavaud, A., et al.: Implementing and proving the tls 1.3 record layer. In: S&P, pp. 463–482. IEEE Computer Society (2017)

Dinsdale-Young, T., da Rocha Pinto, P., Andersen, K.J., Birkedal, L.: Caper: automatic verification for fine-grained concurrency. In: Yang, H. (ed.) ESOP 2017. LNCS, vol. 10201, pp. 420–447. Springer, Heidelberg (2017). https://doi.org/10.1007/978-3-662-54434-1_16

Dinsdale-Young, T., Dodds, M., Gardner, P., Parkinson, M.J., Vafeiadis, V.: Concurrent abstract predicates. In: D’Hondt, T. (ed.) ECOOP 2010. LNCS, vol. 6183, pp. 504–528. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-14107-2_24

Dodds, M., Feng, X., Parkinson, M., Vafeiadis, V.: Deny-guarantee reasoning. In: Castagna, G. (ed.) ESOP 2009. LNCS, vol. 5502, pp. 363–377. Springer, Heidelberg (2009). https://doi.org/10.1007/978-3-642-00590-9_26

Eguchi, S., Kobayashi, N., Tsukada, T.: Automated synthesis of functional programs with auxiliary functions. In: Ryu, S. (ed.) APLAS 2018. LNCS, vol. 11275, pp. 223–241. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-02768-1_13

Feng, X.: Local rely-guarantee reasoning. In: POPL, pp. 315–327. ACM (2009)

Ferraiuolo, A., Baumann, A., Hawblitzel, C., Parno, B.: Komodo: Using verification to disentangle secure-enclave hardware from software. In: SOSP, pp. 287–305. ACM (2017)

Frankle, J., Osera, P.-M., Walker, D., Zdancewic, S.: Example-directed synthesis: a type-theoretic interpretation. In: POPL, pp. 802–815. ACM (2016)

Gauthier, T., Kaliszyk, C., Urban, J.: TacticToe: learning to reason with HOL4 tactics. In: LPAR, EPiC Series in Computing, vol. 46, pp. 125–143. EasyChair (2017)

Girard, J.-Y.: Linear logic. Theor. Comput. Sci. 50, 1–102 (1987)

Le Goues, C., Pradel, M., Roychoudhury, A.: Automated program repair. Commun. ACM 62(12), 56–65 (2019)

Gu, R., et al.: Certikos: an extensible architecture for building certified concurrent OS kernels. In: OSDI, pp. 653–669. USENIX Association (2016)

Huang, K., Qiu, X., Shen, P., Wang, Y.: Reconciling enumerative and deductive program synthesis. In: PLDI, pp. 1159–1174. ACM (2020)

Hughes, D.J.D.: Unification nets: Canonical proof net quantifiers. In: LICS, pp. 540–549. ACM (2018)

Itzhaky, S., Peleg, H., Polikarpova, N., Rowe, RN.S., Sergey, I.: Cyclic program synthesis. In: PLDI. ACM (2021)

Itzhaky, S., Peleg, H., Polikarpova, N., Rowe, R.N.S., Sergey, I.: SuSLik (CAV 2021 Artifact): Code and Benchmarks, May 2021. https://doi.org/10.5281/zenodo.4850342

Jacobs, B., Piessens, F.: Expressive modular fine-grained concurrency specification. In: POPL, pp. 271–282. ACM (2011)

Jacobs, B., Smans, J., Philippaerts, P., Vogels, F., Penninckx, W., Piessens, F.: VeriFast: a powerful, sound, predictable, fast verifier for C and Java. In: Bobaru, M., Havelund, K., Holzmann, G.J., Joshi, R. (eds.) NFM 2011. LNCS, vol. 6617, pp. 41–55. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-20398-5_4

Jung, R., Krebbers, R., Jourdan, J.-H., Bizjak, A., Birkedal, L., Dreyer, D.: Iris from the ground up: a modular foundation for higher-order concurrent separation logic. J. Funct. Program. 28, E20 (2018)

Kalyan, A., Mohta, A., Polozov, O., Batra, D., Jain, P., Gulwani, S.: Neural-guided deductive search for real-time program synthesis from examples. In: ICLR. OpenReview.net (2018)

Kawahara, T., Ito, K., Takemura, R., Ohno, H.: Spin-transfer torque RAM technology: review and prospect. Microelectron. Reliab. 52(4), 613–627 (2012)

Klein, G.: SeL4: formal verification of an OS kernel. In: SOSP, pp. 207–220. ACM (2009)

Kneuss, E., Koukoutos, M., Kuncak, V.: Deductive program repair. In: Kroening, D., Păsăreanu, C.S. (eds.) CAV 2015. LNCS, vol. 9207, pp. 217–233. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-21668-3_13

Kneuss, E., Kuraj, I., Kuncak, V., Suter, P.: Synthesis modulo recursive functions. In: OOPSLA, pp. 407–426. ACM (2013)

Knoth, T., Wang, D., Polikarpova, N., Hoffmann, J.: Resource-guided program synthesis. In: PLDI, pp. 253–268. ACM (2019)

Lee, B.C., Ipek, E., Mutlu, O., Burger, D.: Architecting phase change memory as a scalable dram alternative. In: ISCA, pp. 2–13. ACM (2009)

Lee, W., Heo, K., Alur, R., Naik, M.: Accelerating search-based program synthesis using learned probabilistic models. In: PLDI. ACM (2018)

Rustan, K., Leino, M., Milicevic, A.: Program extrapolation with jennisys. In: OOPSLA, pp. 411–430. ACM (2012)

Leroy, X.: Formal certification of a compiler back-end or: programming a compiler with a proof assistant. In: POPL, pp. 42–54. ACM (2006)

Loos, S.M., Irving, G., Szegedy, C., Kaliszyk, C.: Deep network guided proof search. In: LPAR, EPiC Series in Computing, vol. 46, pp. 85–105. EasyChair (2017)

Madhusudan, P., Qiu, X., Stefanescu, A.: Recursive proofs for inductive tree data-structures. In: POPL, pp. 123–136. ACM (2012)

Malcolm, G.: Data structures and program transformation. Sci. Comput. Program. 14(2–3), 255–279 (1990)

Manna, Z., Waldinger, R.J.: A deductive approach to program synthesis. ACM Trans. Program. Lang. Syst. 2(1), 90–121 (1980)

Andreoli, J.: Logic programming with focusing proofs in linear logic. J. Logic Comput. 2, 297–347 (1992)

Martelli, A., Montanari, U.: Additive AND/OR graphs. In: IJCAI, pp. 1–11. William Kaufmann (1973)

Menon, A., Tamuz, O., Gulwani, S., Lampson, B., Kalai, A.: A machine learning framework for programming by example. In: International Conference on Machine Learning, pp. 187–195 (2013)

Mével, G., Jourdan, J.-H., Pottier, F.: Time credits and time receipts in iris. In: Caires, L. (ed.) ESOP 2019. LNCS, vol. 11423, pp. 3–29. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-17184-1_1

Müller, P., Schwerhoff, M., Summers, A.J.: Viper: a verification infrastructure for permission-based reasoning. In: Jobstmann, B., Leino, K.R.M. (eds.) VMCAI 2016. LNCS, vol. 9583, pp. 41–62. Springer, Heidelberg (2016). https://doi.org/10.1007/978-3-662-49122-5_2

Nagashima, Y.: LiFtEr: language to encode induction heuristics for Isabelle/HOL. In: Lin, A.W. (ed.) APLAS 2019. LNCS, vol. 11893, pp. 266–287. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-34175-6_14

Nagashima, Y.: Smart Induction for Isabelle/HOL (Tool Paper). In: FMCAD, pp. 245–254. IEEE (2020)

Nagashima, Y., He, Y.: PaMpeR: proof method recommendation system for Isabelle/HOL. In: ASE, pp. 362–372. ACM (2018)

Nanevski, A., Ley-Wild, R., Sergey, I., Delbianco, G.A.: Communicating state transition systems for fine-grained concurrent resources. In: Shao, Z. (ed.) ESOP 2014. LNCS, vol. 8410, pp. 290–310. Springer, Heidelberg (2014). https://doi.org/10.1007/978-3-642-54833-8_16

Nanevski, A., Vafeiadis, V., Berdine, J.: Structuring the verification of heap-manipulating programs. In: POPL, pp. 261–274. ACM (2010)

Nguyen, T.-T., Ta, Q.-T., Sergey, I., Chin, W.-N.: Automated repair of heap-manipulating programs using deductive synthesis. In: Henglein, F., Shoham, S., Vizel, Y. (eds.) VMCAI 2021. LNCS, vol. 12597, pp. 376–400. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-67067-2_17

Ni, Z., Shao, Z.: Certified assembly programming with embedded code pointers. In: POPL, pp. 320–333. ACM (2006)

O’Hearn, P.W.: Resources, concurrency, and local reasoning. Theor. Comput. Sci. 375(1–3), 271–307 (2007)

O’Hearn, P., Reynolds, J., Yang, H.: Local reasoning about programs that alter data structures. In: Fribourg, L. (ed.) CSL 2001. LNCS, vol. 2142, pp. 1–19. Springer, Heidelberg (2001). https://doi.org/10.1007/3-540-44802-0_1

Osera, P.-M., Zdancewic, S.: Type-and-example-directed program synthesis. In: PLDI, pp. 619–630. ACM (2015)

Piskac, R., Wies, T., Zufferey, D.: GRASShopper: complete heap verification with mixed specifications. In: Ábrahám, E., Havelund, K. (eds.) TACAS 2014. LNCS, vol. 8413, pp. 124–139. Springer, Heidelberg (2014). https://doi.org/10.1007/978-3-642-54862-8_9

Polikarpova, N., Kuraj, I., Solar-Lezama, A.: Program synthesis from polymorphic refinement types. In: PLDI, pp. 522–538. ACM (2016)

Polikarpova, N., Sergey, I.: Structuring the synthesis of heap-manipulating programs. Proc. ACM Program. Lang. 3(POPL), 72:1-72:30 (2019)

Protzenko, J., et al.: Evercrypt: a fast, verified, cross-platform cryptographic provider. In: S&P, pp. 983–1002. IEEE Computer Society (2020)

Qiu, X., Solar-Lezama, A.: Natural synthesis of provably-correct data-structure manipulations. PACMPL 1(OOPSLA), 65:1–65:28 (2017)

Ramananandro, T., et al.: Everparse: verified secure zero-copy parsers for authenticated message formats. In: USENIX Security Symposium, pp. 1465–1482. USENIX Association (2019)

Reynolds, A., Kuncak, V., Tinelli, C., Barrett, C.W., Deters, M.: Refutation-based synthesis in SMT. Formal Meth. Syst. Des. 55(2), 73–102 (2019)

Reynolds, J.C.: Separation logic: a logic for shared mutable data structures. In: LICS, pp. 55–74. IEEE Computer Society (2002)

Rowe, R.N.S., Brotherston, J.: Automatic cyclic termination proofs for recursive procedures in separation logic. In: CPP, pp. 53–65. ACM (2017)

Saha, S., Garg, P., Madhusudan, P.: Alchemist: learning guarded affine functions. In: Kroening, D., Păsăreanu, C.S. (eds.) CAV 2015. LNCS, vol. 9206, pp. 440–446. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-21690-4_26

Sanchez-Stern, A., Alhessi, Y., Saul, L., Lerner, S.: Generating correctness proofs with neural networks. In: Proceedings of the 4th ACM SIGPLAN International Workshop on Machine Learning and Programming Languages, pp. 1–10. ACM (2020)

Scherer, G., Rémy, D.: Which simple types have a unique inhabitant? In: ICFP, pp. 243–255. ACM (2015)

Sergey, I., Nanevski, A., Banerjee, A.: Mechanized verification of fine-grained concurrent programs. In: PLDI, pp. 77–87. ACM (2015)

Sergey, I., Nanevski, A., Banerjee, A., Delbianco, G.A.: Hoare-style specifications as correctness conditions for non-linearizable concurrent objects. In: OOPSLA, pp. 92–110. ACM (2016)

Si, X., Yang, Y., Dai, H., Naik, M., Song, L.: Learning a meta-solver for syntax-guided program synthesis. In: International Conference on Learning Representations (2019)

Srivastava, S., Gulwani, S., Foster, J.S.: From program verification to program synthesis. In: POPL, pp. 313–326. ACM (2010)

Strukov, D.B., Snider, G.S., Stewart, D.R., Williams, R.S.: The missing memristor found. Nature 453, 80–83 (2008)

Svendsen, K., Birkedal, L.: Impredicative concurrent abstract predicates. In: Shao, Z. (ed.) ESOP 2014. LNCS, vol. 8410, pp. 149–168. Springer, Heidelberg (2014). https://doi.org/10.1007/978-3-642-54833-8_9

Trinh, M.-T., Le, Q.L., David, C., Chin, W.-N.: Bi-abduction with pure properties for specification inference. In: Shan, C. (ed.) APLAS 2013. LNCS, vol. 8301, pp. 107–123. Springer, Cham (2013). https://doi.org/10.1007/978-3-319-03542-0_8

Turon, A.: Understanding and expressing scalable concurrency. Ph.D. thesis, Northeastern University (2013)

Turon, A.J., Thamsborg, J., Ahmed, A., Birkedal, L., Dreyer, D.: Logical relations for fine-grained concurrency. In: POPL, pp. 343–356. ACM (2013)

Vafeiadis, V., Parkinson, M.: A marriage of rely/guarantee and separation logic. In: Caires, L., Vasconcelos, V.T. (eds.) CONCUR 2007. LNCS, vol. 4703, pp. 256–271. Springer, Heidelberg (2007). https://doi.org/10.1007/978-3-540-74407-8_18

van Tonder, R., Le Goues, C.: Static automated program repair for heap properties. In: ICSE, pp. 151–162 ACM (2018)

Vollmer, M., Koparkar, C., Rainey, M., Sakka, L., Kulkarni, M., Newton, R.R.: LoCal: a language for programs operating on serialized data. In: PLDI, pp. 48–62. ACM (2019)

Vollmer, M., et al.: Compiling tree transforms to operate on packed representations. In: ECOOP. LIPIcs, , vol. 74, pp. 26:1–26:29. Schloss Dagstuhl (2017)

Watanabe, Y., Gopinathan, K., Pîrlea, G., Polikarpova, N., Sergey, I.: Certifying the synthesis of heap-manipulating programs (2021). Conditionally accepted at ICFP’21

Windsor, M., Dodds, M., Simner, B., Parkinson, M.J.: Starling: lightweight concurrency verification with views. In: Majumdar, R., Kunčak, V. (eds.) CAV 2017. LNCS, vol. 10426, pp. 544–569. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-63387-9_27

Yang, K., Deng, J.: Learning to prove theorems via interacting with proof assistants. In: ICML. PMLR, , vol. 97, pp. 6984–6994 (2019)

Zinzindohoué, J.-K., Bhargavan, K., Protzenko, J., Beurdouche, B.: HACL*: a verified modern cryptographic library. In: CCS, pp. 1789–1806. ACM (2017)

Acknowledgements

We thank Andreea Costea and Yutaka Nagashima for their feedback on the drafts of this paper. This research was supported by the National Science Foundation under Grant No. 1911149, by the Israeli Science Foundation (ISF) Grants No. 243/19 and 2740/19, by the United States-Israel Binational Science Foundation (BSF) Grant No. 2018675, by Singapore MoE Tier 1 Grant No. IG18-SG102, and by the Grant of Singapore NRF National Satellite of Excellence in Trustworthy Software Systems (NSoE-TSS).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions