Abstract

Subspace clustering has been gaining increasing attention in recent years due to its promising ability in dealing with high-dimensional data. However, most of the existing subspace clustering methods tend to only exploit the subspace information to construct a single affinity graph (typically for spectral clustering), which often lack the ability to go beyond a single graph to explore multiple graphs built in various subspaces in high-dimensional space. To address this, this paper presents a new spectral clustering approach based on subspace randomization and graph fusion (SC-SRGF) for high-dimensional data. In particular, a set of random subspaces are first generated by performing random sampling on the original feature space. Then, multiple K-nearest neighbor (K-NN) affinity graphs are constructed to capture the local structures in the generated subspaces. To fuse the multiple affinity graphs from multiple subspaces, an iterative similarity network fusion scheme is utilized to achieve a unified graph for the final spectral clustering. Experiments on twelve real-world high-dimensional datasets demonstrate the superiority of the proposed approach. The MATLAB source code is available at https://www.researchgate.net/publication/338864134.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

- Data clustering

- Spectral clustering

- Subspace clustering

- High-dimensional data

- Random subspaces

- Graph fusion

1 Introduction

Data clustering is a fundamental yet still very challenging problem in data mining and knowledge discovery [13]. A large number of clustering techniques have been developed in the past few decades [2,3,4,5,6, 8,9,10,11,12, 14,15,18, 21,22,24], out of which the spectral clustering has been a very important category with its effectiveness and robustness in dealing with complex data [3, 6, 14, 18, 22]. In this paper, we focus on the spectral clustering technique, especially for high-dimensional scenarios.

In high-dimensional data, it is often recognized that the cluster structures of data may lie in some low-dimensional subspaces [3]. Starting from this assumption, many efforts have been made to enable the spectral clustering for high-dimensional data by exploiting the subspace information from different technical perspectives [1, 3, 4, 15, 17, 21, 23]. Typically, a new affinity matrix is often learned with the subspace structure taken into consideration, upon which the spectral clustering process is then performed. For example, Liu et al. [17] proposed a low-rank representation (LRR) approach to learn an affinity matrix, whose goal is to segment the data points into their respective subspaces. Chen et al. [1] exploited K-nearest neighbor (K-NN) based sparse representation coefficient vectors to build an affinity matrix for high-dimensional data. He et al. [4] used information theoretic objective functions to combine structured LRRs, where the global structure of data is incorporated. Li et al. [15] presented a subspace clustering approach based on Cauchy loss function (CLF) to alleviate the potential noise in high-dimensional data. Elhamifar and Vidal [3] proposed the sparse subspace clustering (SSC) approach by incorporating the low-dimensional neighborhood information, where each data point is represented by a combination of other points in its own subspace and a new similarity matrix is then constructed. You et al. [23] extended the SSC approach by introducing orthogonal matching pursuit (OMP) to learn a subspace-preserving representation. Wang et al. [21] combined SSC and LRR into a novel low-rank sparse subspace clustering (LRSSC) approach.

Although these methods [1, 3, 4, 15, 17, 21, 23] have made significant progress in exploiting subspace information for enhancing spectral clustering of high-dimensional data, most of them tend to utilize a single affinity graph (associated with a single affinity matrix) by subspace learning, but lack the ability to go beyond a single affinity graph to jointly explore a variety of graph structures in various subspaces in the high-dimensional space. To overcome this limitation, this paper presents a new spectral clustering by subspace randomization and graph fusion (SC-SRGF) approach. Specifically, multiple random subspaces are first produced, based on which we construct multiple K-NN affinity graphs to capture the locality information in various subspaces. Then, the multiple affinity graphs (associated with multiple affinity matrices) are integrated into a unified affinity graph by using an iterative similarity network fusion scheme. With the unified graph obtained, the final spectral clustering result can be obtained by partitioning the this new affinity graph. We conduct experiments on twelve high-dimensional datasets, which have shown the superiority of our approach.

The rest of the paper is organized as follows. The proposed approach is described in Sect. 2. The experimental results are reported in Sect. 3. The paper is concluded in Sect. 4.

2 Proposed Framework

In this section, we describe the overall process of the proposed SC-SRGF approach. The formulation of the clustering problem is given in Sect. 2.1. The construction of multiple K-NN graphs (corresponding to multiple affinity matrices) in a variety of random subspaces is introduced in Sect. 2.2. Finally, the fusion of the multiple graphs into a unified graph and the spectral clustering process are described in Sect. 2.3.

2.1 Problem Formulation

Let \(X\in \mathbb {R}^{n\times d}\) be the data matrix, where n is the number of data points and d is the number of features. Let \(x_i\in \mathbb {R}^d\) denote the i-th data point, corresponding to the i-row in X. Thus the data matrix can be represented as \(X=(x_1,x_2,\cdots ,x_n)^\top \). Let \(f_j\in \mathbb {R}^n\) denote the j-th data feature, corresponding to the j-th column in X. Thus the data matrix can also be represented as \(X=(f_1,f_2,\cdots ,f_d)\). The purpose of clustering is to group the n data points into a certain number of subsets, each of which is referred to as a cluster.

2.2 Affinity Construction in Random Subspaces

In this work, we aim to enhance the spectral clustering for high-dimensional datasets with the help of the information of various subspaces. Before exploring the subspace information, a set of random subspaces are first generated. Note that each subspace consists of a certain number of features, and thereby corresponds to a certain number of columns in the data matrix X.

Multiple random subspaces are generated by performing random sampling (without replacement) on the data features with a sampling ratio r. Let m denote the number of generated random subspaces. Then the set of random subspaces can be represented as

where

denotes the i-th random subspace, \(f^{(i)}_j\) denotes the j-th feature in \(F^{(i)}\), and \(d'=\lfloor r\cdot d\rfloor \) is the number of features. Each subspace can be viewed as selecting corresponding columns in the original data matrix. Therefore, the data sub-matrix in a given subspace \(F^{(i)}\) can be represented as

where \(x^{(i)}_j\in \mathbb {R}^{d'}\) denotes the j-th data point in this subspace.

To explore the locality structures in various subspaces, multiple K-NN graphs are constructed. Specifically, given a subspace \(F^{(i)}\), its K-NN graph can be defined as

where \(V=\{x_1,x_2,\cdots ,x_n\}\) is the node set and \(E^{(i)}\) is the edge set. The weights of the edges in the graph are computed as

where \(e^{(i)}_{jk}\) is the edge weight between nodes \(x_j\) and \(x_k\) in \(G^{(i)}\), \(d(x_j,x_k)\) is the Euclidean distance between \(x^{(i)}_j\) and \(x^{(i)}_k\), \(KNN^i(x_k)\) is the set of K-NNs of \(x_k\) in the i-th subspace, and the kernel parameter \(\sigma \) is set to the average distance between all points.

With the m random subspaces, we can construct m affinity graphs (corresponding to m affinity matrices) as follows:

Note that these affinity graphs share the same node set (i.e., the set of all data points), but have different edge weights constructed in different subspaces, which enable them to capture a variety of underlying subspace structure information in high-dimensional space for enhanced clustering performance.

2.3 Fusing Affinity Graphs for Spectral Clustering

In this section, we proceed to fuse multiple affinity graphs (corresponding to multiple affinity matrices) into a unified affinity graph for robust spectral clustering of high-dimensional data.

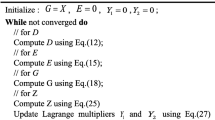

Specifically, we adopt the similarity network fusion (SNF) [20] scheme to fuse the information of multiple graphs. For simplicity, the set of the affinity matrices for the m graphs is represented as \(\mathcal {E}=\{E^{(1)},E^{(2)},\cdots ,E^{(m)}\}\). The goal here is to merge the m affinity matrices in \(\mathcal {E}\) into a unified affinity matrix \(\tilde{E}\).

By normalizing the rows in the affinity matrix \(E^{(i)}\), we have \(\bar{E}^{(i)}=\{\bar{e}^{(i)}_{jk}\}_{n\times n}=(D^{(i)})^{-1}E^{(i)}\), where \(D^{(i)}\) is the degree matrix of \(E^{(i)}\). Then the initial status matrix \(P^{(i)}_{t=0}\) can be defined as

And the kernel matrix \(S^{(i)}=\{s^{(i)}_{jk}\}_{n\times n}\) can be defined as

With the above two types of matrices defined, we can iteratively update the status matrices by exploiting the information of multiple affinity matrices. Particularly, in each iteration, the i-th status matrix is updated as follows [20]:

After each iteration, \(P^{(i)}_{t+1}\) will be normalized by \(P^{(i)}_{t+1}=(D^{(i)}_{t+1})^{-1}P^{(i)}_{t+1}\) with \(D^{(i)}_{t+1}\) being the degree matrix of \(P^{(i)}_{t+1}\).

When the status matrices converge or the maximum number of iterations is reached, the iteration process stops and the fused affinity matrix will be computed as

Then the unified matrix \(\tilde{E}\) will be symmetrized by \(\tilde{E}=(\tilde{E}+\tilde{E}^{\top })/2\). With the unified affinity matrix \(\tilde{E}\) obtained by fusing information of multiple affinity matrices from multiple subspaces, we can proceed to perform spectral clustering on this unified matrix to build the clustering result with a certain number of, say, \(k'\), clusters.

Let \(\tilde{D}\) be the degree matrix of \(\tilde{E}\). Its graph Laplacian can be computed as

After that, eigen-decomposition is performed on the graph Laplacian \(\tilde{L}\) to obtain the \(k'\) eigenvectors that correspond to its first \(k'\) eigenvalues. Then the \(k'\) eigenvectors are stacked to form a new matrix \(\tilde{U}\in \mathbb {R}^{n\times k'}\), where the i-th column corresponds to the i-th eigenvector. Then, by treating each row as a new feature vector for the data point, some discretization techniques like k-means [18] can be performed on the matrix \(\tilde{U}\) to achieve the final spectral clustering result.

3 Experiments

In this section, we conduct experiments on a variety of high-dimensional datasets to compare our approach against several other spectral clustering approaches.

3.1 Datasets and Evaluation Measures

In our experiments, twelve real-world high-dimensional datasets are used, namely, Armstrong-2002-v1 [19], Chowdary-2006 [19], Golub-1999-v2 [19], Alizadeh-2000-v2 [19], Alizadeh-2000-v3 [19], Bittner-2000 [19], Bredel-2005 [19], Garber-2001 [19], Khan-2001 [19], Binary-Alpha (BA) [14], Coil20 [14], and Multiple Features (MF) [5]. To simplify the description, the twelve benchmark datasets are abbreviated as DS-1 to DS-12, respectively (as shown in Table 1).

Number of times being ranked in the first position in Table 2.

To quantitatively evaluate the clustering results of different algorithms, two widely-used evaluation measures are used, namely, normalized mutual information (NMI) [7] and adjusted Rand index (ARI) [7]. Note that larger values of NMI and ARI indicate better clustering results.

In terms of the experimental setting, we use \(m=20\), \(K=5\), and \(r=0.5\) on all the datasets in the experiments. In the following, the robustness of our approach with varying values of the parameters will also be evaluated in Sect. 3.3.

Number of times being ranked in the first position in Table 3.

3.2 Comparison Against the Baseline Approaches

In this section, we compare the proposed SC-SRGF method against four baseline spectral clustering methods, namely, original spectral clustering (SC) [18], k-means-based approximate spectral clustering (KASP) [22], sparse subspace clustering (SSC) [3], and sparse subspace clustering by orthogonal matching pursuit (SSC-OMP) [23]. The detailed comparison results are reported in Tables 2, 3, and 4, and Figs. 1 and 2.

In terms of NMI, as shown in Table 2, the proposed SC-SRGF method obtains the best scores on the DS-1, DS-3, DS-4, DS-5, DS-6, DS-9, DS-10, DS-11, and DS-12 datasets. The average NMI score (across the twelve datasets) of our method is 0.626, which is much higher than the second highest average score of 0.527 (obtained by SSC). The average rank of our method is 1.25, whereas the second best method only achieves an average rank of 3.00. As shown in Fig. 1, our SC-SRGF method yields the best NMI scores on nine out of the twelve datasets in Table 2, whereas the second and third best methods only achieves the best scores on two and one benchmark datasets, respectively.

In terms of ARI, as shown in Table 3, our SC-SRGF method also yields overall better performance than the baseline methods. Specifically, our method achieves an average ARI score (across twelve datasets) of 0.587, whereas the second best score is only 0.466. Our method obtains an average rank of 1.17, whereas the second best average rank is only 3.08. Further, as can be seen in Fig. 2, our method achieves the best ARI score on ten out of the twelve datasets, which also significantly outperforms the other spectral clustering methods.

In terms of time cost, as shown in Table 4, it takes our SC-SRGF method less than 1 s to process the first nine smaller datasets and less than 30 s to process the other three larger datasets, which is comparable to the time costs of the SSC method. Therefore, with the experimental results in Tables 2, 3, and 4 taken into account, it can be observed that our method is able to achieve significantly better clustering results for high-dimensional datasets (as shown in Tables 2 and 3) while exhibiting comparable efficiency with the important baseline of SSC (as shown in Table 4).

All experiments were conducted in MATLAB R2016a on a PC with i5-8400 CPU and 64 GB of RAM.

3.3 Parameter Analysis

In this section, we evaluate the performance of our SC-SRGF approach with three different parameters, i.e., the number of affinity matrices (or random subspaces) m, the number of nearest neighbors K, and the sampling ratio r.

Influence of the Number of Affinity Matrices m. The parameter m controls the number of random subspaces to be generated, which is also the number of affinity matrices to be fused in the affinity fusion process. Figure 3 illustrates the performance (w.r.t. NMI) of our SC-SRGF approach as the number of affinity matrices goes from 5 to 30 with an interval of 5. As shown in Fig. 3, the performance of SC-SRGF is stable with different values of m. Empirically, a moderate value of m, say, in the interval of [10, 30], is preferred. In the experiments, we use \(m=20\) on all of the datasets.

Influence of the Number of Nearest Neighbors K. The parameter K controls the number of nearest neighbors when constructing the K-NN graphs for the multiple random subspaces. As can be seen in Fig. 4, a smaller value of K can be beneficial to the performance, probably due to the fact that the K-NN graph with a smaller K may better reflect the locality characteristics in a given subspace. In the experiments, we use \(K=5\) on all of the datasets.

Influence of the Sampling Ratio r. The parameter r controls the sampling ratio when producing the multiple random subspaces from the high-dimensional space. As shown in Fig. 5, a moderate value of r is often preferred on the benchmark datasets. Empirically, it is suggested that the sampling ratio be set in the interval of [0.2, 0.8]. In the experiments, we use \(r=0.5\) on all of the datasets.

Brief Summary. From the above experimental results, we can observe that the proposed SC-SRGF approach exhibits quite good consistency and robustness w.r.t. the three parameters, which do not require any sophisticated parameter tuning and can be safely set to some moderate values across different datasets.

4 Conclusion

In this paper, we propose a new spectral clustering approach termed SC-SRGF for high-dimensional data, which is able to explore diversified subspace information inherent in high-dimensional space by means of subspace randomization and affinity graph fusion. In particular, a set of multiple random subspaces are first generated by performing random sampling on the original feature space repeatedly. After that, multiple K-NN graphs are constructed to capture the locality information of the multiple subspaces. Then, we utilize an iterative graph fusion scheme to combine the multiple affinity graphs (i.e., multiple affinity matrices) into a unified affinity graph, based on which the final spectral clustering result can be achieved. We have conducted extensive experiments on twelve real-world high-dimensional datasets, which demonstrate the superiority of our SC-SRGF approach when compared with several baseline spectral clustering approaches.

References

Chen, F., Wang, S., Fang, J.: Spectral clustering of high-dimensional data via \(k\)-nearest neighbor based sparse representation coefficients. In: Proceedings of International Joint Conference on Neural Networks (IJCNN), pp. 363–374 (2015)

Chen, M.S., Huang, L., Wang, C.D., Huang, D.: Multi-view clustering in latent embedding space. In: Proceedings of AAAI Conference on Artificial Intelligence (2020)

Elhamifar, E., Vidal, R.: Sparse subspace clustering: algorithm, theory, and applications. IEEE Trans. Pattern Anal. Mach. Intell. 35(11), 2765–2781 (2013)

He, R., Wang, L., Sun, Z., Zhang, Y., Li, B.: Information theoretic subspace clustering. IEEE Trans. Neural Netw. Learn. Syst. 27(12), 2643–2655 (2016)

Huang, D., Wang, C.D., Lai, J.H.: Locally weighted ensemble clustering. IEEE Trans. Cybern. 48(5), 1460–1473 (2018)

Huang, D., Wang, C.D., Wu, J.S., Lai, J.H., Kwoh, C.K.: Ultra-scalable spectral clustering and ensemble clustering. IEEE Trans. Knowl. Data Eng. (2019). https://doi.org/10.1109/TKDE.2019.2903410

Huang, D., Cai, X., Wang, C.D.: Unsupervised feature selection with multi-subspace randomization and collaboration. Knowl.-Based Syst. 182, 104856 (2019)

Huang, D., Lai, J.H., Wang, C.D.: Combining multiple clusterings via crowd agreement estimation and multi-granularity link analysis. Neurocomputing 170, 240–250 (2015)

Huang, D., Lai, J.H., Wang, C.D.: Robust ensemble clustering using probability trajectories. IEEE Trans. Knowl. Data Eng. 28(5), 1312–1326 (2016)

Huang, D., Lai, J.H., Wang, C.D., Yuen, P.C.: Ensembling over-segmentations: from weak evidence to strong segmentation. Neurocomputing 207, 416–427 (2016)

Huang, D., Lai, J., Wang, C.D.: Ensemble clustering using factor graph. Pattern Recognit. 50, 131–142 (2016)

Huang, D., Wang, C.D., Peng, H., Lai, J., Kwoh, C.K.: Enhanced ensemble clustering via fast propagation of cluster-wise similarities. IEEE Trans. Syst. Man Cybern. Syst. (2018). https://doi.org/10.1109/TSMC.2018.2876202

Jain, A.K.: Data clustering: 50 years beyond \(k\)-means. Pattern Recogn. Lett. 31(8), 651–666 (2010)

Kang, Z., Peng, C., Cheng, Q., Xu, Z.: Unified spectral clustering with optimal graph. In: Proceedings of AAAI Conference on Artificial Intelligence, pp. 3366–3373 (2018)

Li, X., Lu, Q., Dong, Y., Tao, D.: Robust subspace clustering by Cauchy loss function. IEEE Trans. Neural Netw. Learn. Syst. 30(7), 2067–2078 (2019)

Liang, Y., Huang, D., Wang, C.D.: Consistency meets inconsistency: a unified graph learning framework for multi-view clustering. In: Proceedings of IEEE International Conference on Data Mining (ICDM) (2019)

Liu, G., Lin, Z., Yan, S., Sun, J., Ma, Y., Yu, Y.: Robust recovery of subspace structures by low-rank representation. IEEE Trans. Pattern Anal. Mach. Intell. 35(1), 171–184 (2013)

von Luxburg, U.: A tutorial on spectral clustering. Stat. Comput. 17(4), 395–416 (2007)

de Souto, M.C., Costa, I.G., de Araujo, D.S., Ludermir, T.B., Schliep, A.: Clustering cancer gene expression data: a comparative study. BMC Bioinformatics 9(1), 497 (2008)

Wang, B., et al.: Similarity network fusion for aggregating data types on a genomic scale. Nat. Methods 11, 333–337 (2014)

Wang, Y., Xu, H., Leng, C.: Provable subspace clustering: when LRR meets SSC. IEEE Trans. Inf. Theory 65(9), 5406–5432 (2019)

Yan, D., Huang, L., Jordan, M.I.: Fast approximate spectral clustering. In: Proceedings of ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 907–916 (2009)

You, C., Robinson, D.P., Vidal, R.: Scalable sparse subspace clustering by orthogonal matching pursuit. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016)

Zhang, G.Y., Zhou, Y.R., He, X.Y., Wang, C.D., Huang, D.: One-step kernel multi-view subspace clustering. Knowl.-Based Syst. 189, 105126 (2020)

Acknowledgments

This work was supported by NSFC (61976097 & 61876193) and A*STAR-NTU-SUTD AI Partnership Grant (No. RGANS1905).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Cai, X., Huang, D., Wang, CD., Kwoh, CK. (2020). Spectral Clustering by Subspace Randomization and Graph Fusion for High-Dimensional Data. In: Lauw, H., Wong, RW., Ntoulas, A., Lim, EP., Ng, SK., Pan, S. (eds) Advances in Knowledge Discovery and Data Mining. PAKDD 2020. Lecture Notes in Computer Science(), vol 12084. Springer, Cham. https://doi.org/10.1007/978-3-030-47426-3_26

Download citation

DOI: https://doi.org/10.1007/978-3-030-47426-3_26

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-47425-6

Online ISBN: 978-3-030-47426-3

eBook Packages: Computer ScienceComputer Science (R0)