Abstract

Recently, with additional information in the disparity variant, quality enhancement for stereo images has become an active research field. Current methods generally adopt cost volumes for stereo matching methods to learn correspondence between stereo image pairs. However, with the large disparity in the different viewpoints of stereo images, how to learn the accurate corresponding information remains a challenge. In addition, as the network deepens, traditional convolutional neural networks (CNNs) adopt cascading methods, which results in the high computational cost and memory consumption. In this paper, we propose an end-to-end effective CNN model. Channel-wise attention-based information distillation and long short-term memory (LSTM) are the basic components, which contribute to reconstruct high quality image (DCL network). Within a stereo image pair, we use high quality (HQ) image to guide the image reconstruction of low quality (LQ). To incorporate the stereo correspondence, information fusion-based LSTM module can be used to learn the disparity variant in stereo images. Specially, in order to distill and enhance effective features map, we introduce channel-wise attention-based a long distillation information module with the consideration of interdependencies among feature channels. Experimental results demonstrate that the proposed network achieves the best performance with comparatively less parameters.

Y. Peng and W. Zou—Equal contribution.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

With the different views of additional information, stereo images are used in various ranges of applications including 3D model reconstruction [1] and autonomous driving for vehicles [2]. Since the seminal work of super resolution convolutional neural network (SRCNN) [3] had proposed, learning-based methods [4, 5] are widely adopted to improve image quality. As CNN-based methods resize input before sending them in the network, and adopt a deeper and recursive network to gain better reconstruction performance. But it demands large computational cost and memory consumption, which are hard to applied in mobile phones and embedded devices. Moreover, the traditional convolutional methods [6, 7] design networks by cascading technologies, which lead to the features redundancy because features map of each layer sending to the sequence layer without difference. However, Hu et al. [8] demonstrated that the representational power of a network can be improved by recalibrating channel-wise feature responses. Recent video quality enhancement method [9] focuses on the exploitation of correspondence between adjacent frames in local region. Video quality enhancement methods cannot be directly applied to stereo image quality enhancement, since stereo images have a long-range dependency and non-local characteristic. Current stereo images enhancement methods leverage stereo matching [10,11,12] to learn correspondence between a stereo image pair. They use cost volumes to model long-range dependency in the network. But these methods are insufficient for estimating accurate correspondence in the large disparity.

To address these problems, we propose an end-to-end CNN model (DCL network) to incorporate stereo correspondence for the task of quality enhancement. Given a stereo image pair, a feature extraction block is firstly used to separately extract features from input images. Secondly, we employ long information distillation blocks (LDBlock) on the LQ image to distill useful information, and information distillation blocks (DBlock) [13] on the HQ image. Because the LQ image requires deeper network to learn more features than HQ image. Features are extracted from LQ and HQ image, and then fed to information fusion based LSTM [14] module to capture stereo correspondence. In addition, we use channel-wise attention following and embedded the information distillation block, which focuses on key information and neglects irrelevant information by considering interdependencies among channels.

The main contributions can be summarized as follows:

-

1.

HQ image is used to guide the image reconstruction of LQ image within stereo image in our network.

-

2.

The proposed long information distillation block extracts the LQ features and combines with channel-wise attention to distill and enhance useful and efficient features.

-

3.

We propose information fusion based LSTM to handle the disparity variations between two viewpoints in one stereo image pair.

2 Related Work

Stereo images quality enhancement methods have been extensively studied in the computer vision community. In this section, we focus on the works related to quality enhancement and long-range dependency learning.

2.1 Quality Enhancement

CNNs have shown to be the state-of-the-art methods for the task of quality enhancement over recent years. Model SRCNN [3], as a pioneer in image reconstruction by using deep learning, is an end-to-end CNN model with three layers: patch extraction and representation, non-linear mapping, reconstruction. But the feature extraction uses only one layer so that it has small receptive field and gets local features. To address this problem, Dong et al. [4] introduced Artifacts Reduction Convolutional Neural Network (called ARCNN), which added a feature enhancement layer. Yu et al. [5] proposed a faster CNN with five layers (FastARCNN). With the employment of deeper and wider networks, CNN-based methods [7] suffer from computational complexity and memory consumption in practice. Hui et al. [13] proposed information distillation block, and used few filters per layer. Although the information distillation block is deep, the convolutional network is compact. Thus, it achieves better results with higher speed and accuracy. In addition, to address the problem of noticeable visual artifacts with a high compression ratio, based on quality enhancement, Jin et al. [15] introduced a fully convolutional neural network. They extracted the corresponding high frequency information in HQ image and fused it with LQ image, which can enhance the LQ image quality in asymmetric stereo images by exploiting inter-view correlation.

2.2 Long-Range Dependency Learning

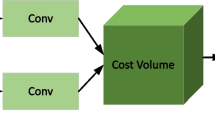

To leverage the disparity information from both right and left views in stereo images, long-range dependency learning has become an important concept in deep neural networks. With the development of a great number of algorithms for stereo correspondence, related works with stereo matching mainly aim to strive for better performance [16,17,18]. In recent years, Zbonta et al. [19] concatenate the left and right features using CNNs to compute the stereo matching cost by learning a similarity on small image patches. To address the problem that current networks depend on path-based network, these methods [10, 12] employ 4D cost volume to effectively exploit global context information. However, with the challenges of computational complexity and memory consumption, Liang et al. [11] proposed 4D cost volume by incorporating all steps into a single network for stereo matching with sharing the same features.

Attention mechanisms have been widely applied in diverse prediction tasks including localization and understanding in images [20, 21], image captioning [22] and so on. It was first introduced by Bahdanau et al. [23]. Visual attention can be seen as a dynamic feature extraction mechanism [24, 25]. These methods [26, 27] can process data in parallel and model complex contexts. SCA-CNN [28] demonstrated that existing visual spatial attention is only applied in the last conv-layer, where the size of receptive field will be quite large and the differences between each receptive field region are quite limited. Therefore, they proposed to incorporate spatial and channel-wise attention in a CNN model.

Inspired by visual attention model, and that stereo images can be seen as the consecutive frames in videos, we propose to combine attention with LSTM to learn the disparity variations in different stereo images. In particular however, our work directly extends [13, 15].

3 Proposed Method

In this section, we describe the proposed model architecture and the long information distillation module. In the following, we introduce the loss function adopted in our network.

3.1 Network Structure

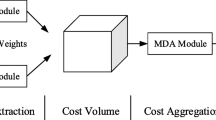

Our method takes a stereo image pair as input, which contains a LQ (right) image and a HQ (left) image. The output is the enhanced LQ (right) image. The architecture of our network is illustrated in Fig. 1 and Table 1. The network comprises of four modules: features extraction, information distillation, information fusion and image reconstruction.

Firstly, We adopt two 3 \(\times \) 3 convolutions to extract original features of input image by features extraction module (FBlock) [13]. The extracted features map is fed to the information distillation module to distill more useful information, whose results are 64 features map. Each information distillation module combines channel-wise attention module to focus on the key information. In order to reduce data dimension and further distill relevant information for following network, we use a 1 \(\times \) 1 convolutional layer. In addition, it can increase the nonlinear characteristics while maintaining the size of the image features. This process can be formulated as:

Where x denotes the input of right LQ image and left HQ image; \( f \) represents the operation of feature extraction; \(D_{i}\) indicates the i-th LDBlock or DBlock function; C, P represent the operation of the channel-wise attention and compression respectively.

Then, the extracted features of two streams for LQ and HQ image make a information fusion by a 4-layer CNN. The operation of these layers can be formulated as:

where \(I_{low}\), \(I_{high}\) denote the output of longer information distillation module and information distillation module, respectively; F represents the information fusion of the left HQ features and right LQ features; \(F_{0}\) denotes the output of information fusion module.

Finally, we use a LSTM network [14] that focuses on learning the corresponding information at the different views of location using features similarities, and keep the left and right of a stereo image pair in location consistency. In order to improve features utilization, we combine the previous features map from the input of LSTM with some current information, which can effectively reconstruct a HQ image. Here, the final enhanced LQ image can be expressed as:

where L denotes the function of LSTM, y represents a output of the network.

3.2 Long Information Distillation

Motivated by an enhancement unit in the IDN [13], we use stacked information distillation blocks to effectively extract image features. And inspired by inception model in GoogLeNet [29], we try to design a deeper and wider network to generate more features maps. Combined the above methods, we design a deeper and wider information distillation block called long information distillation (LDBlock). It is shown in Fig. 2. Based on enhancement unit in the DBlock, we use stacked convolution operation respectively after slicing features map to extract more information in LQ image. In order to reduce the parameters of our network, we leverage the grouped convolutional layers in the second convolutional layer in each enhancement unit with 4 groups. Specially, we adopt the channel-wise attention to adaptively rescale features by considering interdependencies among feature channels.

3.3 Loss Function

Our network is optimized with loss function. We design two loss functions including total loss \(L_{total}\) and LSTM loss \(L_{lstm}\). The total loss \(L_{total}\) is to measure the difference of predicted LQ image \(I_{low}\) and the corresponding uncompressed ground-truth image \(I_{GT}\). We use the mean square error (MSE) as our total loss, which is most widely applied in image restoration. To optimize the difference of left and right image location, we introduce LSTM loss \(L_{lstm}\). Aiming at improving the effectiveness of our network, we choose to optimize the same loss function as previous works.

Where \(\varTheta \) contains the parameter set of the network, including both weights and biases. F represents network to generate the predicted images. \(I_{lstm}^{i}\) denotes the reconstructed images in a LSTM module. Therefore, the overall loss function is formulated as:

Where \(\lambda \) is the weight balancing two losses. Here, \(\lambda _{1}\), \(\lambda _{2}\) is set to 0.8 and 0.2 in our experiment, respectively. More details of training is shown in Sect. 4.2.

4 Experiment

In this section, we first introduce the datasets and implementation details, and then analyze the proposed network architecture. We further compare our network to the state-of-the-art networks on two multiview datasets.

4.1 Dataset

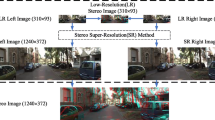

To train the proposed network, we follow [15] and adopt the Middlebury 2014 stereo image dataset including 18 images as our training data. For testing, we use 5 remaining images. Taking into account the training complexity, we leverage the small patch training strategy to crop the image size with 300 \(\times \) 300. Meanwhile, the corresponding patches in HQ images and ground-truth images are also obtained. There are 942 \(\times \) 2 images in the total training. In order to evaluate the performance of the proposed network, JPEG quality is set to 10 and 20 to generate image of a different compression quality. However, for testing, the larger size testing image is unable to process. We crop the test image into a set of \( l_{sub}\) x \( l_{sub}\) with same equal proportion in different sizes of the image.

4.2 Implementation Details

To improve the robustness and generalization ability of model, data augmentation is adopted in four ways: (1) rotate the image randomly by \(90^{\circ }\); (2) crop in a 160 size image; (3) flip images horizontally; (4) flip images vertically. In this work, our model is trained by Adam optimizer with \(\beta _{1}\) = 0.9, \(\beta _{2}\) = 0.999 and the batch size is 12. There are 800 epochs in total, since the learning rate approaches to zero if there are too many epochs. The learning rate is initially set to 0.0001 and decreases by the factor of 10 during fine-tune phase. In addition, the LeakyReLU is applied after each convolution operation, and the negative scope is set to 0.05. In order to focus on the quality enhancement of the image luminance, we adopt a single channel image. We conduct our experiment on a Nvidia GTX 1080Ti GPU and to train a model it need half a day. We implement our network on the Pytorch platform, where its flexibility and efficiency enable us to easily develop the network.

4.3 Network Architecture Analysis

Stereo Image vs Single Image. In order to validate the effectiveness of stereo information for image quality enhancement, we do an experiment based on our network to use a single image (i.e., LQ images), stereo image pairs (HQ and LQ) from the different view as the input. The result is shown in Table 2. It is demonstrated that HQ image contributes to improve LQ image reconstruction. Compared to use a LQ image as the input, restructured image trained by this network decreases 0.74 dB (from 41.12 to 41.38) in terms of peak signal-to-noise ratio (PSNR).

Effectiveness of Channel-Wise Attention. Information distillation module is utilized to distill and enhance features map from the feature extraction. More importantly, channel-wise attention is employed inside and outside of the information distillation block, which can learn the more representative features. To demonstrate its effectiveness, we introduce some implementations by removing channel-wise (CW) in different conditions. From the Table 3, our network only has 41.36 dB in PSNR and 0.9861 in structural similarity values (SSIM) by removing the CW inside the information distillation module of both LQ and HQ image stream. After inserting CW into the DBlock or LDBlock, the performance reaches 41.47 dB and 41.48 dB, respectively. As the same implementation, Table 4 also indicates that LQ image performance benefits from CW outside the information distillation. The increase of parameters is rarely though CW. Theses comparisons show that CW is essential to focus on the effective features in information distillation for deep networks. The results in Tables 3 and 4 denote that the channel-wise features really improve the performance.

Effectiveness of Long Short-Term Memory. In order to validate the effectiveness of LSTM module for image quality enhancement, we do a comparative experiment after the information fusion. From Table 5, it is shown that our network with LSTM gets a better performance. The PSNR value is higher than network without LSTM by 0.38 dB.

4.4 Comparison to State-of-the-Art Approaches

In order to evaluate the performance of our network, we compare with other methods including JPEG [30], SA-DCT [31], ARCNN [4], FastARCNN [5], Fusion-4 and Fusion-8 [15]. The comparison results of the PSNR and SSIM on the Middlebury dataset at JPEG quality 10 and 20 are shown in Table 5. Further more, the number of network parameters for the deep learning based methods are also given. From these results, it is clear that our method achieves the best performance than other methods except Fusion-8 because of the input of Fusion method [15] with the same view. It [15] neglects the different stereo images with large disparity variations. However, our method captures the more reliable correspondence. It can be observed that SSIM achieves the best performance. Compared with Fusion-8, our method reduces the parameters by three times while guaranteeing a higher PSNR (Table 6).

5 Conclusion

In this paper, an efficient deep-learning-based method is proposed to enhance LQ image quality by exploiting from a stereo image pair. We design a deeper and wider information distillation combined with channel-wise attention to extract abundant and efficient features for the LQ image reconstruction. Moreover, our method using information fusion based LSTM module can handle disparity in different views of stereo images. Experiments demonstrate that our method can capture correspondence in stereo image, and achieves the state-of-the-art performance.

References

Chen, X., et al.: 3D object proposals for accurate object class detection. In: Advances in Neural Information Processing Systems, pp. 424–432 (2015)

Zhang, C., Li, Z., Cheng, Y., Cai, R., Chao, H., Rui, Y.: Meshstereo: a global stereo model with mesh alignment regularization for view interpolation. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 2057–2065 (2015)

Dong, C., Loy, C.C., He, K., Tang, X.: Image super-resolution using deep convolutional networks, vol. 38, pp. 295–307. IEEE (2015)

Dong, C., Deng, Y., Loy, C.C., Tang, X.: Compression artifacts reduction by a deep convolutional network. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 576–584 (2015)

Yu, K., Dong, C., Loy, C.C., Tang, X.: Deep convolution networks for compression artifacts reduction. arXiv preprint arXiv:1608.02778 (2016)

Kim, J., Lee, J.K., Lee, K.M.: Deeply-recursive convolutional network for image super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1637–1645 (2016)

Kim, J., Lee, J.K., Lee, K.M.: Accurate image super-resolution using very deep convolutional networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1646–1654 (2016)

Hu, J., Shen, L., Sun, G.: Squeeze-and-excitation networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7132–7141 (2018)

Tao, X., Gao, H., Liao, R., Wang, J., Jia, J.: Detail-revealing deep video super-resolution. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 4472–4480 (2017)

Chang, J.-R., Chen, Y.-S.: Pyramid stereo matching network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5410–5418 (2018)

Liang, Z., et al.: Learning for disparity estimation through feature constancy. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2811–2820 (2018)

Kendall, A., et al.: End-to-end learning of geometry and context for deep stereo regression. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 66–75 (2017)

Hui, Z., Wang, X., Gao, X.: Fast and accurate single image super-resolution via information distillation network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 723–731 (2018)

Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735–1780 (1997)

Jin, Z., Luo, H., Luo, L., Zou, W., Lil, X., Steinbach, E.: Information fusion based quality enhancement for 3D stereo images using CNN. In 2018 26th European Signal Processing Conference (EUSIPCO), pp. 1447–1451. IEEE (2018)

Barnard, S.T.: Stochastic stereo matching over scale. Int. J. Comput. Vis. 3(1), 17–32 (1989)

Scharstein, D., Szeliski, R.: A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int. J. Comput. Vision 47(1–3), 7–42 (2002)

Lee, S.H., Kanatsugu, Y., Park, J.-I.: Map-based stochastic diffusion for stereo matching and line fields estimation. Int. J. Comput. Vision 47(1–3), 195–218 (2002)

Zbontar, J., LeCun, Y., et al.: Stereo matching by training a convolutional neural network to compare image patches. J. Mach. Learn. Res. 17(1–32), 2 (2016)

Jaderberg, M., Simonyan, K., Zisserman, A., et al.: Spatial transformer networks. In: Advances in Neural Information Processing Systems, pp. 2017–2025 (2015)

Cao, C., et al.: Look and think twice: capturing top-down visual attention with feedback convolutional neural networks. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 2956–2964 (2015)

Xu, K., et al.: Show, attend and tell: Neural image caption generation with visual attention. arXiv preprint arXiv:1502.03044 (2015)

Bahdanau, D., Cho, K., Bengio, Y.: Neural machine translation by jointly learning to align and translate. arXiv preprint arXiv:1409.0473 (2014)

Mnih, V., Heess, N., Graves, A., et al.: Recurrent models of visual attention. In: Advances in Neural Information Processing Systems, pp. 2204–2212 (2014)

Stollenga, M.F., Masci, J., Gomez, F., Schmidhuber, J.: Deep networks with internal selective attention through feedback connections. In: Advances in Neural Information Processing Systems, pp. 3545–3553 (2014)

Xu, H., Saenko, K.: Ask, attend and answer: exploring question-guided spatial attention for visual question answering. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9911, pp. 451–466. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46478-7_28

You, Q., Jin, H., Wang, Z., Fang, C., Luo, J.: Image captioning with semantic attention. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4651–4659 (2016)

Chen, L., et al.: SCA-CNN: spatial and channel-wise attention in convolutional networks for image captioning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5659–5667 (2017)

Szegedy, C., Ioffe, S., Vanhoucke, V., Alemi, A.A.: Inception-v4, inception-resnet and the impact of residual connections on learning. In: Thirty-First AAAI Conference on Artificial Intelligence (2017)

Wallace, G.K.: The JPEG still picture compression standard. IEEE Trans. Consum. Electron. 38(1), xviii–xxxiv (1992)

Foi, A., Katkovnik, V., Egiazarian, K.: Pointwise shape-adaptive dct for high-quality denoising and deblocking of grayscale and color images. IEEE Trans. Image Process. 16(5), 1395–1411 (2007)

Acknowledgement

This work was supported in part by the NSFC Project under Grants 61771321, 61701313, and 61871273, in part by the China Postdoctoral Science Foundation under Grants 2017M622778, in part by the Key Research Platform of Universities in Guangdong under Grants 2018WCXTD015, in part by the Natural Science Foundation of Shenzhen under Grants KQJSCX20170327151357330, JCYJ20170818091621856 and JSGG20170822153717702, and in part by the Interdisciplinary Innovation Team of Shenzhen University, in part by the China Postdoctoral Science Foundation Grants (2017M622778).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Peng, Y., Jin, Z., Zou, W., Tang, Y., Li, X. (2019). An Efficient Quality Enhancement Solution for Stereo Images. In: Zhao, Y., Barnes, N., Chen, B., Westermann, R., Kong, X., Lin, C. (eds) Image and Graphics. ICIG 2019. Lecture Notes in Computer Science(), vol 11903. Springer, Cham. https://doi.org/10.1007/978-3-030-34113-8_15

Download citation

DOI: https://doi.org/10.1007/978-3-030-34113-8_15

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-34112-1

Online ISBN: 978-3-030-34113-8

eBook Packages: Computer ScienceComputer Science (R0)