Abstract

The emerging fields of predictive and precision medicine are changing the traditional medical approach to disease and patient. Current discoveries in medicine enable a deeper understanding of diseases, whereas the adoption of high-quality methods such as novel imaging techniques (e.g. MRI, PET) and computational approaches (e.g. machine learning) to analyse data allows researchers to obtain meaningful clinical and statistical information. Indeed, applications of radiology techniques and machine learning algorithms to the study of neurological, cardiological and oncological conditions have increased in recent years. In this chapter, we provide an overview of predictive precision medicine that uses artificial intelligence to analyse medical images in order to enhance the diagnosis, prognosis and treatment of diseases. In particular, the chapter focuses on neurodegenerative disorders, which are one of its main fields of application. Despite some critical issues of this new approach, adopting a patient-centred approach could bring remarkable improvements at the individual, social and business levels.



Keywords

- Predictive medicine

- Precision medicine

- Computational psychometrics

- Neurodegenerative disorders

- P5 medicine

- Clinical informatics

1 Introduction

Medicine is an evolving field that updates its applications thanks to recent advances in a broad spectrum of sciences such as biology, chemistry, statistics, mathematics, engineering, and life and social sciences. Generally, discoveries in these sciences are applied to medicine with three main aims: preventing, diagnosing and treating a wide range of medical conditions.

The current approach to diseases can be summarized by the phrase “one-size-fits-all”; although this view has guided medicine for the past 30 years, treatments that are effective on average can lack efficacy, or cause adverse or unpredictable reactions, in individual patients (Roden 2016).

Precision medicine is the extension and evolution of the current approach to patient management (Ramaswami et al. 2018). Unlike the “one-size-fits-all” approach, precision medicine is mainly preventive and proactive rather than reactive (Mathur and Sutton 2017) (cfr. Chap. 3). Barack Obama, who stressed the importance of “delivering the right treatments, at the right time, every time to the right person”, highlighted the critical impact of this emerging initiative on healthcare practice. The personalized approach has therefore emerged as a critique of an oversimplified and reductive approach to disease categorization and treatment. Precision medicine uses a broad spectrum of data, ranging from biological to social information, to tailor diagnosis, prognosis and therapy to the patient’s needs and characteristics, in accordance with the P5 approach.

Another crucial element of this initiative is the use of informatics: incorporating technology would make it possible to create a data ecosystem that merges biological information and clinical phenotypes, drawn from imaging, laboratory tests and health records, in order to better identify and treat the disease affecting the individual, reducing costs and time and improving patients’ quality of life.

The present chapter focuses on the potential of predictive precision medicine as a new approach to health sciences and clinical practice, giving an overview of the tools, the methodology and a concrete application of the P5 approach.

2 Predictive Medicine and Precision Medicine

Among healthcare applications, predictive medicine is a relatively new area. It can be defined as the use of laboratory and genetic tests to predict either the onset of a disease in an individual or the deterioration or amelioration of an existing disease, to estimate the risk of a certain outcome and to predict which treatment will be most effective for the individual (Jen and Teoli 2019; Jen and Varacallo 2019; Valet and Tárnok 2003). In this sense, biomarkers can be used to forecast disease onset, prognosis and therapy outcome. A biomarker, or biological marker, is a medical sign that can be measured objectively, accurately and reproducibly; the World Health Organization defined a biomarker as “almost any measurement reflecting an interaction between a biological system and a potential hazard, which may be chemical, physical, or biological. The measured response may be functional and physiological, biochemical at the cellular level, or a molecular interaction” (Strimbu and Tavel 2010). Biomarkers are used in drug development and to assess clinical outcomes. Whereas the current approach to clinical trials is “one-size-fits-all” (i.e. the effect of a treatment is assumed to be similar across the whole sample), the future of medicine is to provide “the right treatment for the right patient at the right time” by using biomarkers to identify subgroups of patients who respond to an optimal therapy (Chen et al. 2015).

As discussed in the previous chapters, precision medicine is one of the features of the P5 approach, which tailors healthcare on the basis of individual genes, environment and lifestyle (Hodson 2016). Whereas personalized medicine takes into account not only the patient’s genes but also beliefs, preferences and social context, precision medicine is a model heavily based on data, analytics and information; thus, the latter relies on a wide “ecosystem” that includes patients, clinicians, researchers, technologies, genomics and data sharing (Ginsburg and Phillips 2018). To realize precision medicine, it is crucial to identify biomarkers using omic (e.g. genomic, proteomic, epigenomic) data, either alone or in combination with environmental and lifestyle information (Wang et al. 2017), with the objective of creating prognostic, diagnostic and therapeutic interventions tailored to the patient’s needs (Mirnezami et al. 2012).

This new concept of medicine involves medical institutions, which collect healthcare information, such as biomedical images and signals, every day. New computational methods of analysis, such as machine learning, have prompted the “Big Data Revolution”; thus, Big Data analysis in predictive medicine (Jen and Teoli 2019; Jen and Varacallo 2019), computational psychometrics (Cipresso 2015; Cipresso et al. 2015; von Davier 2017) and precision medicine (Richard Leff and Yang 2015) may soon benefit from the huge amount of available medical information and from computational techniques (Cipresso and Immekus 2017). New technologies such as virtual reality make it possible to extract quantitative and computational data online for each individual, deepening the study of cognitive processes (Cipresso 2015; Muratore et al. 2019; Tuena et al. 2019). eHealth is generally an accurate instrument for collecting data; furthermore, according to Mayer-Schönberger and Ingelsson (Mayer-Schönberger and Ingelsson 2018), Big Data differ from conventional analyses in three ways: data on the phenomenon in question are extracted comprehensively; machine learning methods such as neural networks are preferred to conventional statistical methods; and, finally, Big Data do not only answer questions but also generate new hypotheses.

Consequently, technology and informatics will gradually shape the future of medicine (Regierer et al. 2013). eHealth aims at using biomedical data for scientific questions, decision-making (cfr. Chap. 4) and problem-solving (Jen and Teoli 2019; Jen and Varacallo 2019) in accordance with the P5 approach. On the one hand, informatics is crucial for precision medicine because it manages Big Data, creates learning systems, enables individual involvement and supports precision interventions derived from translational research (Frey et al. 2016); on the other hand, clinical informatics is crucial for predictive medicine because it provides clinicians with tools that give information about individuals at risk, disease onset and how to intervene (Jen and Teoli 2019; Jen and Varacallo 2019). The importance of informatics in medicine is confirmed by the fact that, in the United States, the use of electronic health records among physicians grew from 11.8% in 2007 to 39.6% in 2012 (Hsiao et al. 2014).

Besides its medical and scientific elements, precision medicine also has an impact on patients and on the global population (Ginsburg and Phillips 2018; Pritchard et al. 2017). In particular, the precision medicine coalition’s healthcare working group identified the following challenges within this field:

- Education and awareness: Precision medicine is complex and sometimes confusing; awareness should be improved among potential consumers and healthcare providers, and education in the scientific and clinical areas should integrate the precision medicine approach.

- Patient empowerment: Precision medicine is a way to engage and empower the patient. However, consent forms need to clarify how molecular information is used, providers do not properly involve patients in healthcare decision-making, and patients’ preferences are not always taken into account; lastly, the privacy and security of digital data must be improved and assured.

- Value recognition: Sentiments concerning precision medicine are ambivalent: some stakeholders think that precision medicine can benefit patients and the healthcare system, whereas payers and providers are reluctant to modify policies and practices without clear evidence of its clinical and economic value.

- Infrastructure and information management: To pursue the precision medicine approach, it is necessary to manage effectively the massive amount of data and the connections among infrastructures; for instance, processes and policies should ensure clear communication across healthcare providers, patients’ genetic data could be integrated with clinical information within electronic health records, and medical data need to be standardized across platforms.

- Ensuring access to care: This requires a shift in the stakeholders’ perspective, which can be achieved by addressing the aforementioned key points. At the moment, insurance companies do not cover high-quality diagnostic procedures, electronic health records need to be upgraded to integrate complex biological data, some physicians avoid embracing the precision medicine approach owing to misleading perceptions (e.g. cost/benefit distortions), there is no guideline that coordinates partners and products, and services are not accessible to the population, especially in rural areas.

Within the P5 approach, predictive precision medicine can be defined as the merging of these two new fields of medical science: it uses biomarkers to forecast disease onset and progression and to tailor treatment to individual features such as omic, environmental and lifestyle factors, which could lead to significant improvements ranging from patients’ lives to the global population and healthcare systems.

3 Imaging Techniques, Artificial Intelligence and Machine Learning

3.1 Imaging Techniques

In the context of predictive and precision medicine, the biomedical imaging instruments used in radiology are the most promising techniques and methods (Herold et al. 2016; Jen and Teoli 2019; Jen and Varacallo 2019). In particular, radiology techniques make it possible to extract structural, functional and metabolic information that can be used for diagnostic, prognostic and therapeutic purposes (Herold et al. 2016). Imaging techniques (Jen and Varacallo 2019) play a vital role not only in applied medicine but also in systems biology, which attempts to model the structure and dynamics of complex biological systems (Kherlopian et al. 2008). Modern imaging techniques make it possible to visualize multidimensional and multiparametric data, such as concentrations, tissue characteristics, surfaces and temporal information (Eils and Athale 2003). According to Kherlopian and colleagues (Kherlopian et al. 2008), the most promising imaging instruments will be microscopy methods, ultrasound, computed tomography (CT), magnetic resonance imaging (MRI) and positron emission tomography (PET). Advances in biomedical engineering will improve the spatial and temporal resolution of such images, and, with the introduction of contrast agents and molecular probes, biomedical images will allow the visualization of anatomical structures, cells and molecular dynamics; consequently, microCT, microMRI and microPET are going to be at the centre of basic and applied research.

Multiphoton microscopy, atomic force microscopy and electron microscopy can image, respectively, cell structure, cell surface and protein structure at nanometre-scale spatial resolution. Ultrasound has had a great impact in cardiology, where, for instance, a computer interprets the echo waveforms bouncing back from tissue to create images of the vascular system with micrometre resolution. CT exploits intrinsic differences in X-ray absorption to provide images with high spatial resolution (12–50 μm), for example of lung or bone. Interestingly, microCT combined with volumetric decomposition makes it possible to represent bone microarchitecture. MRI uses a strong magnetic field to create anatomical images with good spatial resolution; when combined with magnetic resonance spectroscopy, it can provide anatomical and biochemical information on a particular region of an organ; if the interest is the functional activation of the brain, functional MRI detects differences between oxygenated and deoxygenated haemoglobin, which change the contrast of the image. MicroMRI is currently used in animal studies and employs a higher magnetic field than MRI; with this technique, it is possible to track stem cells, monitor the proliferation of immune cells and follow embryological development. In PET, radioactive tracers, the most widely used of which is fluorodeoxyglucose (FDG), are incorporated into molecules to provide metabolic information as well as information on therapeutic effects on the disease. Although PET makes it possible to study a specific metabolic activity of interest, its spatial resolution (1–2 mm) is lower than that of the aforementioned techniques; however, microPET has a volumetric resolution of 8 mm³, and upcoming scanners offer higher resolution and a larger field of view.

The use of imaging tools such as CT, MRI and PET is increasing steadily; for instance, in six healthcare systems in the United States, their use increased from 1996 to 2010 by, respectively, 7.8%, 10% and 57% per year (Smith-Bindman et al. 2012). Many other imaging techniques exist; in the present chapter, we have focused only on the main technologies used in medicine, and in radiology in particular. Their growing use underlines the importance of technology and eHealth as an opportunity for improving precision medicine at large.

3.2 Artificial Intelligence and Machine Learning

Artificial intelligence (AI) aims at simulating human cognition (Hassabis et al. 2017). One of the recent technologies used within AI is machine learning (ML), which enables machines to learn from data and acquire human-like capabilities without being explicitly programmed (Das et al. 2015). Moreover, ML is the most widely used method for analysing data and making predictions by means of models and algorithms (Angra and Ahuja 2017). Within ML, deep learning (DL) and neural networks (NNs) are of great relevance (Ker et al. 2018; Schmidhuber 2015). The concept of NN was first introduced in 1943 by McCulloch and Pitts and found its application in Rosenblatt’s perceptron; an artificial NN consists of input neurons that perceive a stimulus, hidden neurons that are activated via weighted connections from active neurons, and output neurons that return the result of the computation performed by the network. DL uses artificial neural networks with multiple layers, in which the machine learns low-level details and merges them into high-level features in a brain-like manner. DL and NNs support complex computations in supervised, unsupervised and reinforcement learning. The algorithms used in ML can be classified as follows (Das et al. 2015; Hassabis et al. 2017; Jiang et al. 2017):

- Supervised learning: The output is compared with the expected output, and the resulting error is computed and used to adjust the model so that it produces the desired output. Within supervised learning, the most widely used DL algorithm is the convolutional neural network (CNN), used especially in image recognition and visual learning on 2D and 3D images, which enables the analysis of X-ray, CT or MRI images. The recurrent neural network (RNN) is used for text analysis tasks (e.g. machine translation, text prediction, image captioning), acting similarly to a working memory, and evolved into long short-term memory (LSTM) networks to avoid the vanishing gradient problem; indeed, one application of AI in this field is natural language processing, which can be used to extract information from medical notes and connect it to medical data. Other supervised learning methods are linear regression, logistic regression, naïve Bayes, decision trees, nearest neighbour, random forest, discriminant analysis, support vector machine (SVM) and NN.

- Unsupervised learning: In this case, the machine discovers structure and adjusts itself on the basis of the input alone. For example, an autoencoder encodes the input stimuli into compact codings and reconstructs the output from these codings, with the output required to be as close as possible to the input; restricted Boltzmann machines are composed of visible and hidden layers that reconstruct the input by estimating the probability distribution of the original input; in a deep belief network, the output of one restricted Boltzmann machine is the input of another; finally, generative adversarial networks are generative models composed of two competing CNNs: the first CNN generates artificial training images, and the second CNN discriminates real training images from artificial ones, the goal being that the discriminator can no longer tell the two apart. This algorithm is very promising for medical image analysis. Other unsupervised methods are clustering, which can be used to divide data into groups, and principal component analysis (PCA), which reduces data dimensionality without losing critical information and can then be used to create groups.

- Reinforcement learning: In this case, learning is enhanced with a reward when the machine makes a “winning” choice; for example, the Q-learning algorithm (Rodrigues et al. 2008) computes the expected future rewards of performing a certain action in a particular state, so that the machine keeps acting in an optimal manner.

- Recommender system: In this case, the content of a site is customized for the online user, as happens in e-commerce.
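
The error-driven adjustment described for supervised learning, applied to Rosenblatt’s perceptron mentioned above, can be sketched in a few lines of Python. This is a minimal, hypothetical illustration assuming a single-layer perceptron with a step activation and a toy dataset (logical AND); it has no hidden layer and is not a model of any clinical application.

```python
# Minimal sketch of Rosenblatt's perceptron learning rule (single layer, no hidden units).
def train_perceptron(samples, labels, epochs=10, lr=1.0):
    """Learn weights and a bias for 0/1 labels by error-driven updates."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            output = predict(weights, bias, x)
            # Supervised signal: compare the output with the expected output.
            error = target - output
            # Weights change only when the prediction is wrong (error != 0).
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, x):
    """Step activation: the output neuron fires if the weighted sum exceeds 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


# Toy data (hypothetical): logical AND, a linearly separable problem.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 0, 0, 1]
w, b = train_perceptron(X, y)
print([predict(w, b, x) for x in X])  # [0, 0, 0, 1]
```

Because AND is linearly separable, the perceptron convergence theorem guarantees that this loop stops making errors after a finite number of updates; problems that are not linearly separable, such as XOR, require hidden layers.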

ML problems include pattern classification, regression, control, system identification and prediction, which can be summarized in two main elements: developing algorithms that quantify relations among data and using these algorithms to make predictions on new data (Wernick et al. 2014).
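
The reinforcement learning entry in the list above mentions Q-learning; a minimal tabular sketch of its update rule, Q(s, a) ← Q(s, a) + α[r + γ max_a′ Q(s′, a′) − Q(s, a)], may make it more concrete. The corridor environment, the reward and the parameter values (alpha, gamma, number of episodes) are hypothetical choices for illustration, not the setup used by Rodrigues et al. (2008).

```python
import random

N_STATES = 4          # hypothetical corridor: states 0..3, state 3 is the terminal goal
LEFT, RIGHT = 0, 1    # the two available actions


def step(state, action):
    """Moving right from state 2 reaches the goal and yields reward 1; otherwise 0."""
    next_state = max(0, state - 1) if action == LEFT else state + 1
    terminal = next_state == N_STATES - 1
    return next_state, (1.0 if terminal else 0.0), terminal


def q_update(q, state, action, reward, next_state, terminal, alpha, gamma):
    """Tabular Q-learning: move Q(s, a) toward r + gamma * max_a' Q(s', a')."""
    future = 0.0 if terminal else max(q[next_state])
    q[state][action] += alpha * (reward + gamma * future - q[state][action])


def train(episodes=1000, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, terminal = 0, False
        while not terminal:
            action = rng.choice((LEFT, RIGHT))  # explore at random (off-policy learning)
            next_state, reward, terminal = step(state, action)
            q_update(q, state, action, reward, next_state, terminal, alpha, gamma)
            state = next_state
    return q


q = train()
greedy = ["right" if q[s][RIGHT] > q[s][LEFT] else "left" for s in range(N_STATES - 1)]
print(greedy)  # ['right', 'right', 'right']
```

With gamma = 0.9, the learned values decay geometrically with distance from the goal (about 1, 0.9 and 0.81 for moving right from states 2, 1 and 0), which is how the algorithm attaches expected future rewards to each state-action pair.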

3.3 Medical Imaging and Machine Learning

ML and AI find a concrete application in medical imaging, where they analyse images and support physicians’ decision-making processes, particularly in radiology, thereby improving patient management (Jiang et al. 2017; Ker et al. 2018; Kim et al. 2018). Indeed, the P5 approach underlines the importance of the decision-making process and of the use of eHealth to improve it. In medical imaging, ML technology is used for computer-aided diagnosis (CADx) and computer-aided detection (CADe); the former can also help to identify region properties useful for surgery. In radiology, CADx and CADe are used, respectively, for classification and detection, although ML techniques can also serve anatomical education (i.e. localization) and facilitate surgery (i.e. segmentation, registration) (Kim et al. 2018).

According to the review of the PubMed literature from 2013 to 2016 by Jiang and co-authors (Jiang et al. 2017), the critical medical fields for AI applications are cardiology, cancer and neurology. In their report, Jiang and colleagues showed that the main types of medical application of AI are diagnostic imaging, genetic diagnosis and electrodiagnosis, with diagnostic imaging having the greatest impact on research. As concerns disease conditions, in order of research activity they are neoplasms, nervous system, cardiovascular, urogenital, pregnancy, digestive, respiratory, skin, endocrine and nutritional conditions. Finally, the most widely used algorithms in this field are NN and SVM, and DL technology in particular is applied mostly in diagnostic imaging and electrodiagnosis; interestingly, from 2013 to 2016 the use of CNNs in the literature increased, whereas that of RNNs decreased, and the use of deep belief networks and deep neural networks remained stable over the period.

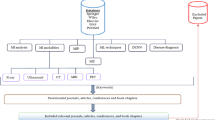

In particular, CNNs, autoencoders and RNNs are excellent algorithms for medical image analysis (Kim et al. 2018). Convolution, which is based on multiplication and addition, is suitable for image recognition because it takes into account locally connected information (i.e. neighbouring voxels or pixels); convolutional layers are interleaved with pooling layers, which downsample the feature maps and thereby enlarge the field of view, and are followed by fully connected layers that combine the activations of the previous layers. Autoencoders are composed of multiple perceptrons; encoders can be stacked and used to denoise input images. Finally, RNNs use feedback from previous states together with current data, which makes it possible to model sequential data with spatial and temporal information. CNN architectures can be used to detect lesions or diseases in different organs (e.g. brain, liver, heart, prostate or retina); to predict disease course, treatment response and survival; and to classify diseases, lesions and cells using CT, MRI, PET and other imaging techniques (e.g. retinal images, mammography or fluorescence images). Autoencoders have been used in research to detect lesions in breast histology images, predict the risk of cognitive deficits and classify lung and breast lesions, whereas stacked autoencoders have been applied to segmentation and image enhancement/generation. Other algorithms are also applied in radiology; for instance, reinforcement learning combined with data mining supports physicians’ decision-making in cancer diagnosis, in segmentation tasks and in the classification of lung nodules (Rodrigues et al. 2008).

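
The convolution and pooling operations described above can be illustrated with a small pure-Python sketch. It assumes a single hand-set 2x2 edge-detecting kernel, a ReLU activation and 2x2 max pooling on a toy 5x5 image; in a real CNN the kernel weights are learned from training data, many kernels are used per layer, and optimized libraries replace these loops.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNN layers (strictly a cross-correlation:
    the kernel is not flipped). Each output value is a sum of products over a patch."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Non-linear activation applied element-wise after the convolution."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keeps the strongest response in each window."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, cols - size + 1, size)
        ]
        for i in range(0, rows - size + 1, size)
    ]


# Hypothetical 5x5 "image" with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
edge_kernel = [[-1, 1],
               [-1, 1]]  # responds to dark-to-bright transitions from left to right

features = relu(conv2d(image, edge_kernel))  # 4x4 map, each row [0, 2, 0, 0]
pooled = max_pool(features)                   # 2x2 map
print(pooled)  # [[2, 0], [2, 0]]
```

Pooling halves each spatial dimension here, so every value in the pooled map summarizes a 3x3 patch of the original image, which is the sense in which pooling enlarges the field of view.
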
A critical aspect of these technologies is that, before AI can be applied to medical imaging and, more broadly, to the healthcare system, algorithms need to be trained on data in various forms derived from clinical activities; for example, 1.2 million training samples are being used to teach DL algorithms on brain MRI. Moreover, the performance of DL techniques depends on the quality of the training data; this issue can be mitigated by adopting multisite, standardized and methodologically adequate acquisition protocols (Jiang et al. 2017).

4 Predictive Precision Medicine in Neurodegenerative Diseases

Increasing lifespan and life expectancy, together with reduced mortality, are increasing the proportion of older people in our society; consequently, the scientific and clinical community has turned its attention to chronic age-related and degenerative diseases. Owing to the complexity of their aetiology and pathogenesis, which result from the interplay among genetic, epigenetic and environmental factors, prevention (primary, secondary and tertiary) (cfr. Chap. 3) and, in particular, predictive and precision medicine play a crucial role as features of the P5 approach, with the omic approach to biology and computational methods acquiring a prominent position (Licastro and Caruso 2010; Reitz 2016). In particular, predictive genetic testing and molecular genetic diagnosis have a well-established position in clinical practice and translational research on neurodegenerative disorders (Paulsen et al. 2013). Indeed, neurodegenerative diseases have specific gene profiles (Bertram and Tanzi 2005). For instance, symptomatic testing in Alzheimer’s disease (AD) requires a neurological and neuropsychological examination, followed by genetic counselling and risk assessment. Two situations are then distinguished: either there is an autosomal dominant family history, or the onset is early or sporadic, or there is non-autosomal dominant family clustering. In the first case, genetic testing is offered; otherwise, or in the second case, the availability of genetic research and/or DNA banking can be discussed. When testing is performed in the first case, results are disclosed in a post-test session, and follow-up and predictive testing for relatives are provided. Conversely, predictive testing of asymptomatic relatives requires that the family mutation in a relevant gene (PSEN1, PSEN2 or APP) is known: genetic and risk counselling is provided, neurological, neuropsychological and psychiatric evaluations are offered, and genetic testing and follow-up then take place (Goldman et al. 2011).

Neurological disorders account for 17% of global deaths, and precision medicine, which brings together genomics, electronic medical records and stem cell models, might be vital for therapeutic interventions in neurology; in this sense, current drugs target common symptoms, whereas a precision medicine approach makes it possible to develop drugs that target groups of people with similar genetic variations (Gibbs et al. 2018). Besides drug administration, precision medicine can be applied to neurodegenerative diseases to evaluate preclinical stages, facilitate differential diagnosis and define the best treatment at the right moment, taking into account the effects of genes, epigenetic modifiers and non-genetic factors on neurodegeneration; these elements should be combined into a patient’s omic profile (Strafella et al. 2018).

4.1 Current Application: From Dementias to Parkinson’s Disease

As already mentioned, ML approaches could be very useful in brain imaging for classification and preventive aims. Below, we review some studies of ML within the context of predictive precision medicine for neurodegenerative disorders, in order to highlight the importance of technology, and eHealth specifically, for precision medicine.

Katako and colleagues (Katako et al. 2018) used the well-known FDG-PET metabolic biomarker (Dubois et al. 2007), applied to images from four datasets of the Alzheimer’s Disease Neuroimaging Initiative. The researchers compared five machine-based classification methods: a voxel-wise general linear model, two variants of subprofile modelling and two variants of SVM. Subprofile modelling is a PCA-based technique used for differential diagnosis and prognosis in neurodegeneration, whereas SVM is a supervised learning method used to solve binary classification problems. Subprofile modelling was applied with two PCA approaches (a single principal component and a linear combination of principal components), and SVM was trained with either the iterative single-data algorithm or sequential minimal optimization. All five methods discriminated between patients and controls; when compared with tenfold cross-validation, SVM with the iterative single-data algorithm gave the best results in terms of sensitivity (0.84) and specificity (0.95). This SVM algorithm also performed best in predicting AD from mild cognitive impairment (MCI); interestingly, when comparing PET and single-photon emission computed tomography (SPECT), the iterative single-data algorithm showed higher sensitivity than sequential minimal optimization SVM, whereas the latter had higher specificity. To test the clinical application of the method, a retrospective imaging study was conducted on individuals with MCI or subjective cognitive complaints referred by the local memory clinic. All five methods classified as AD the majority of patients later diagnosed with this disease; however, patients who later developed dementia with Lewy bodies (DLB) or Parkinson’s disease dementia (PDD) were also classified as AD, showing that the methods are not specific to different types of dementia. Nonetheless, when SVM algorithms were used, FDG-PET images showed that DLB and PDD brain pathology exhibits an AD-like biomarker pattern that is not present in individuals with non-demented Parkinson’s disease (PDND).

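
The evaluation protocol mentioned above (tenfold cross-validation, with performance summarized as sensitivity and specificity) can be sketched as follows. This is a simplified illustration, not the authors’ code: the folds here are contiguous, whereas in practice samples are usually shuffled and often stratified by class before splitting, and the labels are toy values. Setting k equal to the number of samples yields leave-one-out cross-validation, the other scheme used in the MRI study of Lama and co-authors.

```python
def k_fold_indices(n_samples, k=10):
    """Yield (train, test) index lists; every sample is tested exactly once.
    With k == n_samples this reduces to leave-one-out cross-validation."""
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)  # spread the remainder over the first folds
        test = list(range(start, start + size))
        train = list(range(start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


def sensitivity_specificity(y_true, y_pred, positive=1):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    return tp / (tp + fn), tn / (tn + fp)


folds = list(k_fold_indices(23, k=10))
print([len(test) for _, test in folds])  # [3, 3, 3, 2, 2, 2, 2, 2, 2, 2]

y_true = [1, 1, 1, 1, 0, 0, 0, 0]  # 1 = patient, 0 = control (toy labels)
y_pred = [1, 1, 1, 0, 0, 0, 0, 1]
print(sensitivity_specificity(y_true, y_pred))  # (0.75, 0.75)
```

In a full pipeline, a classifier would be trained on each `train` split and its predictions on the matching `test` split pooled before computing the two metrics.
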
Lama and co-authors (Lama et al. 2017) classified structural images from the Alzheimer’s Disease Neuroimaging Initiative. Using structural MRI (i.e. grey matter tissue volume), the researchers compared brain images from patients with AD, patients with MCI and healthy controls using SVM, the import vector machine (IVM), which is based on kernel logistic regression, and the regularized extreme learning machine (RELM); moreover, PCA was performed to reduce the dimensionality of the data, and several validation schemes, namely 70/30 hold-out, tenfold cross-validation and leave-one-out cross-validation, were applied. The best classifier appeared to be RELM with PCA-based feature selection, which improved the classification of AD versus MCI and controls. In particular, binary classification (AD vs. controls) with PCA revealed no significant difference in accuracy among classifiers, although RELM performed best with tenfold cross-validation, whereas SVM outperformed RELM with leave-one-out cross-validation. With tenfold cross-validation, sensitivity was 77.51% for SVM and specificity was 90.63% for RELM, whereas with leave-one-out cross-validation, IVM had a sensitivity of 87.10% and RELM a specificity of 83.54%. Multiclass classification (AD vs. MCI vs. controls) with PCA showed that RELM with tenfold and leave-one-out cross-validation achieved accuracies of 59.81% and 61.58%, respectively, and a specificity of 62.25%.

Another study that used MRI is that of Donnelly-Kehoe et al. (Donnelly-Kehoe et al. 2018). In their research, neuromorphometric features extracted from MRI were used to classify controls, individuals with MCI, individuals with MCI who converted to AD, and patients with AD. Participants were divided into three groups according to their Mini-Mental State Examination (MMSE) scores in order to identify the main morphological features; these features were used to design a multiclassifier system (MCS) composed of three subclassifiers, each trained on data selected according to MMSE score. The MCS was compared with three classification algorithms: random forest, SVM and AdaBoost. Each of the three MCS architectures outperformed the single classifiers in terms of accuracy, and the multiclass area under the receiver operating characteristic curve (AUC) was 0.83 for controls, 0.76 for MCI converted to AD, 0.65 for MCI and 0.95 for AD. Accuracy for neurodegeneration detection (AD + MCI converted to AD) was 81%. Random forest and SVM had similar performance, but the former was chosen as the best algorithm because it has fewer parameters. In particular, the accuracies of the three random forests in neurodegeneration detection were 0.71, 0.63 and 0.81. The authors claim that an MCS based on cognitive scores can support MRI-based AD diagnosis better than well-established algorithms.

Interestingly, Guo and colleagues (Guo et al. 2017) developed an ML method that builds a hypernetwork from the fMRI data of individuals with AD, with the aim of overcoming the limitations of conventional network methods. After data acquisition, they constructed the hypernetwork connectivity and extracted brain region features with a nonparametric test method and subgraph features with a frequent scoring feature selection algorithm; then, vector and graph kernel matrices, respectively, were computed and combined for classification with a multikernel SVM. The brain region and graph features are in line with previously reported network disruption in AD (Buckner et al. 2008). SVM was used to classify the sample (AD, early MCI and late MCI), and the hypernetwork made it possible to extract interactions and topological information. The results were compared with those of conventional methods based on partial and Pearson correlations. The findings reveal that the method identifies both interactive and representative high-order information; moreover, the AUC was 0.831 for brain region features and 0.762 for graph features, and rose to 0.919 for multifeature classification. Multifeature classification can therefore improve biomarker-based AD diagnosis.
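
Both studies above report the area under the ROC curve (AUC). A minimal sketch of how a per-class, one-vs-rest AUC can be computed for a multiclass problem is shown below, using the rank-based (Mann–Whitney) interpretation of AUC; the class labels and scores are hypothetical, and the original studies may have used a different multiclass AUC definition.

```python
def auc(pos_scores, neg_scores):
    """AUC as the probability that a randomly chosen positive case scores higher
    than a randomly chosen negative case (ties count one half)."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


def one_vs_rest_auc(y_true, scores, label):
    """Per-class AUC in a multiclass problem: `label` vs. all other classes."""
    pos = [s[label] for t, s in zip(y_true, scores) if t == label]
    neg = [s[label] for t, s in zip(y_true, scores) if t != label]
    return auc(pos, neg)


# Hypothetical classifier outputs: one score per class for each participant.
y_true = ["AD", "AD", "CN", "CN"]
scores = [
    {"AD": 0.9, "CN": 0.1},
    {"AD": 0.6, "CN": 0.4},
    {"AD": 0.7, "CN": 0.3},
    {"AD": 0.2, "CN": 0.8},
]
print({c: one_vs_rest_auc(y_true, scores, c) for c in ("AD", "CN")})  # {'AD': 0.75, 'CN': 0.75}
```

The same function extends unchanged to more classes (e.g. adding MCI), giving one AUC per class as in the per-group values reported above.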

ML can also be used to compute electroencephalography (EEG) biomarkers in order to identify AD pathology and drug intervention (Simpraga et al. 2017). In healthy participants who received scopolamine to simulate cognitive deficits, the authors computed a muscarinic acetylcholine receptor antagonist (mAChR) index from 14 EEG biomarkers (spatial and temporal biomarker algorithm); the index reached cross-validated accuracy, sensitivity, specificity and precision ranging from 88% to 92%, outperforming single biomarkers. When used to classify AD patients, the mAChR index achieved an accuracy of 62%, 35% sensitivity, 91% specificity and 81% precision; an AD index computed from 12 EEG biomarkers reached accuracy, sensitivity, specificity and precision ranging from 87% to 97%. These findings are relevant not only for distinguishing patients with AD from healthy participants but also for experimental pharmacology, because the index captures the well-known cholinergic electrophysiology of AD and drug penetration in this disease.
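Accuracy, sensitivity, specificity and precision, as reported by Simpraga et al., all derive from the same confusion matrix, which explains how a classifier can combine high specificity and precision with low sensitivity. The sketch below uses hypothetical counts (100 patients, 100 controls) chosen only for illustration; they are not data from the study, and the class name is our own.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # patients correctly classified as patients
    fn: int  # patients missed (classified as controls)
    tn: int  # controls correctly classified as controls
    fp: int  # controls wrongly classified as patients

    def sensitivity(self) -> float:
        """Share of patients detected: TP / (TP + FN)."""
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        """Share of controls correctly rejected: TN / (TN + FP)."""
        return self.tn / (self.tn + self.fp)

    def precision(self) -> float:
        """Share of positive calls that are true patients: TP / (TP + FP)."""
        return self.tp / (self.tp + self.fp)

    def accuracy(self) -> float:
        """Share of all subjects classified correctly."""
        return (self.tp + self.tn) / (self.tp + self.fn + self.tn + self.fp)


# Hypothetical balanced sample: 100 patients and 100 controls.
counts = ConfusionCounts(tp=35, fn=65, tn=91, fp=9)
for name in ("sensitivity", "specificity", "precision", "accuracy"):
    print(f"{name}: {getattr(counts, name)():.2f}")
```

With these counts only 35 of 100 patients are detected, yet 35 of the 44 positive calls are correct and overall accuracy is 126/200 = 0.63, because the many correctly rejected controls dominate.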

Frontotemporal dementia (FTD) is one of the most common causes of early-onset dementia; among FTD variants, behavioural-variant FTD is the most frequent and is characterized by specific biomarkers (Piguet et al. 2011). Meyer and co-authors used MRI data from a multicentre cohort to predict diagnosis in each individual patient, showing the potential of precision medicine (Meyer et al. 2017). They computed differences in brain atrophy between controls and patients and used SVM to differentiate these groups at the individual level. Conjunction analyses of grey matter density across the cohorts revealed a bilateral overlap in the frontal poles. When the algorithm was used to predict individual diagnoses, accuracy ranged from 71.1% to 78.9% in the single-centre sample (19 behavioural-variant FTD patients vs. 19 controls) and from 78.8% to 84.6% in the whole-sample analyses (52 behavioural-variant FTD patients vs. 52 controls). The frontal lobe was a better predictor than the temporal lobe (80.7% vs. 78.8%); accuracy increased when frontal and temporal regions were combined and improved further when other relevant brain regions, such as the insula and basal ganglia, were added. Although the researchers found intercentre variability, they encourage the use of ML and imaging techniques for biomarker-based prediction and personalized early detection of brain degeneration.
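Meyer et al. trained an SVM to separate patients from controls on the basis of grey matter density. The sketch below is a generic linear SVM trained with the Pegasos stochastic sub-gradient method; the choice of Pegasos, the hyperparameters (`lam`, `n_iter`) and the two synthetic standardized features standing in for regional grey matter values are our assumptions, not the authors' implementation or preprocessing pipeline.

```python
import random
from typing import List, Sequence


def train_linear_svm(
    X: Sequence[Sequence[float]],
    y: Sequence[int],
    lam: float = 0.01,
    n_iter: int = 2000,
    seed: int = 0,
) -> List[float]:
    """Pegasos training of a linear SVM (hinge loss + L2 penalty).
    Labels must be +1 or -1. A constant 1.0 is appended to every sample,
    so the last weight acts as a (regularized) bias term."""
    rng = random.Random(seed)
    data = [list(x) + [1.0] for x in X]
    w = [0.0] * len(data[0])
    for t in range(1, n_iter + 1):
        i = rng.randrange(len(data))
        eta = 1.0 / (lam * t)  # Pegasos step size
        margin = y[i] * sum(wj * xj for wj, xj in zip(w, data[i]))
        # Shrink the weights (gradient step on the L2 penalty) ...
        w = [(1.0 - eta * lam) * wj for wj in w]
        # ... and, if the hinge loss is active for this sample, move towards it.
        if margin < 1.0:
            w = [wj + eta * y[i] * xj for wj, xj in zip(w, data[i])]
    return w


def predict(w: Sequence[float], x: Sequence[float]) -> int:
    """Sign of the decision function, using the same appended bias feature."""
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


# Hypothetical synthetic data: two standardized features per subject,
# 40 "patients" (+1) and 40 "controls" (-1).
rng = random.Random(42)
X, y = [], []
for _ in range(40):
    X.append([rng.gauss(2.0, 0.5), rng.gauss(2.0, 0.5)])
    y.append(1)
    X.append([rng.gauss(-2.0, 0.5), rng.gauss(-2.0, 0.5)])
    y.append(-1)

w = train_linear_svm(X, y)
accuracy = sum(predict(w, x) == label for x, label in zip(X, y)) / len(y)
print(f"training accuracy: {accuracy:.2f}")
```

Training accuracy is printed only as a sanity check on this toy data; an honest estimate of diagnostic accuracy, as in the studies above, requires held-out subjects or cross-validation.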

Another neurodegenerative disease that represents a social burden is Parkinson’s disease (PD). Biomarkers and imaging can improve the diagnosis of neurodegenerative diseases such as PD (Pievani et al. 2011). Abós and co-authors used ML to define biomarkers associated with cognitive status in individuals with PD (Abós et al. 2017). They applied ML methods to resting-state fMRI functional connectivity to classify patients with PD according to cognitive profile (with or without MCI). The study combined a supervised SVM algorithm, functional connectomics data and neuropsychological profiles, and validated the model with leave-one-out cross-validation and an independent sample (training group vs. validation group). Leave-one-out cross-validation correctly classified 82.6% of participants in both the PD-MCI and PD-nonMCI groups; in the independent validation sample, the trained SVM classified participants with an AUC of 0.81. Leave-one-out cross-validation and randomized logistic regression were used to select the most relevant edges (21) and nodes (34) of the network. Edges were altered in the PD-MCI group compared with the PD-nonMCI group: for 16 edges, connectivity was reduced in PD-MCI (for 13 of these, also relative to controls), whereas for the remaining 5, connectivity was stronger in PD-MCI than in PD-nonMCI. For the 16 weakened edges, connectivity correlated with executive functions, visuospatial deficits, levodopa daily dosage and disease duration. The methodology proposed by the authors shows that ML and fMRI could be useful for cognitive diagnosis and assessment in PD.
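Lama et al. compared a 70/30 hold-out split, tenfold cross-validation and leave-one-out cross-validation, and Abós et al. combined leave-one-out cross-validation with an independent validation sample (effectively a hold-out set). The index-splitting logic behind these schemes can be sketched in plain Python as follows; the function names and the sample size of 46 are hypothetical, and in practice scikit-learn's `train_test_split`, `KFold` and `LeaveOneOut` provide tested equivalents.

```python
import random
from typing import Iterator, List, Tuple

Split = Tuple[List[int], List[int]]  # (training indices, test indices)


def holdout_split(n: int, test_fraction: float = 0.3, seed: int = 0) -> Split:
    """One random train/test split, e.g. 70/30."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = round(n * test_fraction)
    return idx[n_test:], idx[:n_test]


def kfold_splits(n: int, k: int = 10, seed: int = 0) -> Iterator[Split]:
    """k-fold cross-validation: every sample is in the test set exactly once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test


def leave_one_out_splits(n: int) -> Iterator[Split]:
    """Leave-one-out: k-fold with k equal to the number of samples."""
    for i in range(n):
        yield [j for j in range(n) if j != i], [i]


# Usage with a hypothetical sample of 46 participants.
train, test = holdout_split(46, test_fraction=0.3)
tenfold = list(kfold_splits(46, k=10))
loo = list(leave_one_out_splits(46))
print(len(train), len(test), len(tenfold), len(loo))
```

Whatever the scheme, steps such as PCA or edge selection should be fitted on the training indices only, so that information from the test samples does not leak into the model and inflate the reported accuracy.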

ML can also be applied successfully to non-imaging data to predict the risk of dementia from population-based surveys (de Langavant et al. 2018). De Langavant and colleagues developed an unsupervised ML classification based on PCA and hierarchical clustering in the Health and Retirement Study (HRS; 2002–2003, N = 18,165 individuals) and validated the algorithm in the Survey of Health, Ageing and Retirement in Europe (SHARE; 2010–2012, N = 58,202 individuals). The accuracy of the method was assessed in a subgroup of HRS participants with a dementia diagnosis from a previous study. The algorithm identified three clusters in the HRS: individuals with neither functional nor motor (e.g. walking) impairment, individuals with motor impairment only and individuals with both functional and motor deficits. This third group showed a high likelihood of dementia (probability of dementia >0.95; area under the curve [AUC] = 0.91), even when cognitive/behavioural measures were removed. Similar clusters were found in SHARE. After 3.9 years of follow-up, survival rates in cluster 3 were 39.2% in HRS and 62.2% in SHARE; surviving participants in this cluster showed functional and motor impairments over the same period. The authors argue that the algorithm can classify people according to their risk of dementia and their survival, and that this classification could therefore be used for prevention and trial assignment.
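De Langavant and colleagues combined PCA with hierarchical clustering. As an illustration only, the sketch below implements plain agglomerative clustering with average linkage on features assumed to be already reduced; the linkage criterion, the toy two-dimensional impairment scores and k = 3 are our assumptions, not details of the study.

```python
import math
from typing import List, Sequence


def average_linkage_clusters(points: Sequence[Sequence[float]], k: int) -> List[int]:
    """Agglomerative hierarchical clustering with average linkage.
    Starts with one cluster per point and repeatedly merges the pair of
    clusters with the smallest mean pairwise distance until k remain.
    Returns a cluster label (0..k-1) for each point."""
    clusters: List[List[int]] = [[i] for i in range(len(points))]

    def linkage(c1: List[int], c2: List[int]) -> float:
        total = sum(math.dist(points[a], points[b]) for a in c1 for b in c2)
        return total / (len(c1) * len(c2))

    while len(clusters) > k:
        best = None  # (distance, i, j) of the closest pair found so far
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = linkage(clusters[i], clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]  # j > i, so index i is unaffected

    labels = [0] * len(points)
    for label, members in enumerate(clusters):
        for m in members:
            labels[m] = label
    return labels


# Hypothetical (motor impairment, functional impairment) scores.
points = [
    (0.0, 0.0), (0.1, 0.2), (0.2, 0.1),  # no impairment
    (5.0, 0.0), (5.1, 0.2), (4.9, 0.1),  # motor impairment only
    (5.0, 5.0), (5.2, 5.1), (4.8, 4.9),  # functional and motor impairment
]
labels = average_linkage_clusters(points, k=3)
print(labels)
```

This naive version recomputes linkages at every step, which is far too slow for surveys of tens of thousands of respondents; SciPy's `scipy.cluster.hierarchy.linkage` (with methods such as `"average"` or `"ward"`) is the usual practical choice.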

In their systematic review, Dallora and colleagues (Dallora et al. 2017) found that SVM was the most frequently applied ML technique for the prognosis of dementia; among ML studies, those based on neuroimaging (i.e. MRI and PET) were more frequent than those based on cognitive/behavioural, genetic, laboratory or demographic data, and their main aim was to predict the proportion of individuals with MCI who will develop AD. The researchers also found limitations regarding validation procedures, the datasets used, the number of records within the same dataset and follow-up periods. Nevertheless, defining biomarkers in the field of neurodegenerative diseases could improve diagnosis and treatment and consolidate the role of precision medicine and the prediction of disease progression (Dallora et al. 2017; Reitz 2016; Rosenberg 2017). As we have seen, technologies, and eHealth in particular, could be valuable instruments, also in the context of the P5 approach and the medicine of the future.

5 Conclusion

In this chapter, we elucidated the potential role of predictive precision medicine as a feature of the P5 approach, with a particular focus on radiological imaging and ML algorithms applied in neurology. Although precision medicine offers clear benefits, its complexity could be reduced with artificial intelligence methods that condense the amount of information and target specific biomarkers useful for diagnosis, prognosis and treatment. We reported encouraging evidence that this approach could improve the management of neurodegenerative disorders (i.e. AD, PD, FTD, MCI, DLB, PDD) from different perspectives: at the individual and whole-sample levels, and with metabolic, structural, functional, electrophysiological and cognitive/behavioural methods. For this reason, we encourage the healthcare system, which in this sense comprises researchers, clinicians, institutions, providers and stakeholders, to embrace this vision of medicine.

References

Abós, A., Baggio, H. C., Segura, B., García-Díaz, A. I., Compta, Y., Martí, M. J., … Junqué, C. (2017). Discriminating cognitive status in Parkinson’s disease through functional connectomics and machine learning. Scientific Reports, 7, 45347. https://doi.org/10.1038/srep45347.

Angra, S., & Ahuja, S. (2017). Machine learning and its applications: A review. In Machine learning and its applications: A review (pp. 57–60).

Bertram, L., & Tanzi, R. E. (2005). The genetic epidemiology of neurodegenerative disease. The Journal of Clinical Investigation, 115(6), 1449–1457.

Buckner, R. L., Andrews-Hanna, J. R., & Schacter, D. L. (2008). The brain’s default network: Anatomy, function, and relevance to disease. Annals of the New York Academy of Sciences, 1124, 1–38. https://doi.org/10.1196/annals.1440.011.

Chen, J. J., Lu, T. P., Chen, Y. C., & Lin, W. J. (2015). Predictive biomarkers for treatment selection: Statistical considerations. Biomarkers in Medicine, 9(11), 1121–1135.

Cipresso, P. (2015). Modeling behavior dynamics using computational psychometrics within virtual worlds. Frontiers in Psychology, 6, 1725.

Cipresso, P., & Immekus, J. C. (2017). Back to the future of quantitative psychology and measurement: Psychometrics in the twenty-first century. Frontiers in Psychology, 8, 2099.

Cipresso, P., Matic, A., Giakoumis, D., & Ostrovsky, Y. (2015). Advances in computational psychometrics. Computational and Mathematical Methods in Medicine, 2015, 1.

Dallora, A. L., Eivazzadeh, S., Mendes, E., Berglund, J., & Anderberg, P. (2017). Machine learning and microsimulation techniques on the prognosis of dementia: A systematic literature review. PLoS One, 12(6), e0179804.

Das, S., Dey, A., Pal, A., & Roy, N. (2015). Applications of artificial intelligence in machine learning: Review and Prospect. International Journal of Computer Applications, 115(9), 31–41.

de Langavant, L. C., Bayen, E., & Yaffe, K. (2018). Unsupervised machine learning to identify high likelihood of dementia in population-based surveys: Development and validation study. Journal of Medical Internet Research, 20(7), e10493.

Donnelly-Kehoe, P. A., Pascariello, G. O., & Gomez, J. C. (2018). Looking for Alzheimer’s disease morphometric signatures using machine learning techniques. Journal of Neuroscience Methods, 302, 24–34. https://doi.org/10.1016/j.jneumeth.2017.11.013.

Dubois, B., Feldman, H. H., Jacova, C., DeKosky, S. T., Barberger-Gateau, P., Cummings, J., … Scheltens, P. (2007). Research criteria for the diagnosis of Alzheimer’s disease: Revising the NINCDS-ADRDA criteria. Lancet Neurology, 6(8), 734–746. https://doi.org/10.1016/S1474-4422(07)70178-3.

Eils, R., & Athale, C. (2003). Computational imaging in cell biology. Journal of Cell Biology, 161(3), 477–481.

Frey, L. J., Bernstam, E. V., & Denny, J. C. (2016). Precision medicine informatics. Journal of the American Medical Informatics Association, 23(4), 668–670.

Gibbs, R. M., Lipnick, S., Bateman, J. W., Chen, L., Cousins, H. C., Hubbard, E. G., … Rubin, L. L. (2018). Toward precision medicine for neurological and neuropsychiatric disorders. Cell Stem Cell, 23(5), 21. https://doi.org/10.1016/j.stem.2018.05.019.

Ginsburg, G. S., & Phillips, K. A. (2018). Precision medicine: From science to value. Health Affairs, 37(5), 694–701. https://doi.org/10.1377/hlthaff.2017.1624.

Goldman, J. S., Hahn, S. E., Catania, J. W., Larusse-Eckert, S., Butson, M. B., Rumbaugh, M., … Bird, T. (2011). Genetic counseling and testing for Alzheimer disease: Joint practice guidelines of the American College of Medical Genetics and the National Society of Genetic Counselors. Genetics in Medicine, 13(6), 597.

Guo, H., Zhang, F., Chen, J., Xu, Y., & Xiang, J. (2017). Machine learning classification combining multiple features of a hyper-network of fMRI data in Alzheimer’s disease. Frontiers in Neuroscience, 11, 615. https://doi.org/10.3389/fnins.2017.00615.

Hassabis, D., Kumaran, D., Summerfield, C., & Botvinick, M. (2017). Neuroscience-inspired artificial intelligence. Neuron, 95(2), 245–258. https://doi.org/10.1016/j.neuron.2017.06.011.

Herold, C. J., Lewin, J. S., Wibmer, A. G., Thrall, J. H., Dixon, A. K., Schoenberg, S. O., … Muellner, A. (2016). Imaging in the age of precision medicine: Summary of the proceedings of the 10th biannual symposium of the International Society for Strategic Studies in Radiology. Radiology, 279(1), 226–238.

Hodson, R. (2016). Precision medicine. Nature, 537(7619), S49.

Hsiao, C.-J., Hing, E., & Ashman, J. (2014). Trends in electronic health record system use among office-based physicians: United States, 2007–2012. National Health Statistics Reports, 75, 1–18.

Jen, M. Y., & Teoli, D. (2019, July 29). Informatics. In StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2019 Jan-. Available from: https://www.europepmc.org/books/NBK470564

Jen, M. Y., & Varacallo, M. (2019, July 30). Predictive medicine. In StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2019 Jan-. Available from: https://www.ncbi.nlm.nih.gov/books/NBK441941/

Jiang, F., Jiang, Y., Zhi, H., Dong, Y., Li, H., Ma, S., … Wang, Y. (2017). Artificial intelligence in healthcare: Past, present and future. Stroke and Vascular Neurology, 2(4), 230–243. https://doi.org/10.1136/svn-2017-000101.

Katako, A., Shelton, P., Goertzen, A. L., Levin, D., Bybel, B., Aljuaid, M., … Ko, J. H. (2018). Alzheimer’s disease-related FDG-PET pattern which is also expressed in Lewy body dementia and Parkinson’s disease dementia. Scientific Reports, 8, 13236. https://doi.org/10.1038/s41598-018-31653-6.

Ker, J., Wang, L., Rao, J., & Lim, T. (2018). Deep learning applications in medical image analysis. IEEE Access, 6, 9375–9389.

Kherlopian, A. R., Song, T., Duan, Q., Neimark, M. A., Po, M. J., Gohagan, J. K., & Laine, A. F. (2008). A review of imaging techniques for systems biology. BMC Systems Biology, 2, 74. https://doi.org/10.1186/1752-0509-2-74.

Kim, J., Hong, J., & Park, H. (2018). Prospects of deep learning for medical imaging. Precision and Future Medicine, 2(2), 37–52.

Lama, R. K., Gwak, J., Park, J., & Lee, S. (2017). Diagnosis of Alzheimer’s disease based on structural MRI images using a regularized extreme learning machine and PCA features. Journal of Healthcare Engineering, 2017, 5485080.

Licastro, F., & Caruso, C. (2010). Predictive diagnostics and personalized medicine for the prevention of chronic degenerative diseases. Immunity & Ageing, 7(Suppl 1), S1. https://doi.org/10.1186/1742-4933-7-S1-S1.

Mathur, S., & Sutton, J. (2017). Personalized medicine could transform healthcare. Biomedical Reports, 7, 3–5. https://doi.org/10.3892/br.2017.922.

Mayer-Schönberger, V., & Ingelsson, E. (2018). Big data and medicine: A big deal? Journal of Internal Medicine, 283(5), 418–429.

Meyer, S., Mueller, K., Stuke, K., Bisenius, S., Diehl-Schmid, J., Jessen, F., … Group FS. (2017). Predicting behavioral variant frontotemporal dementia with pattern classification in multi-center structural MRI data. NeuroImage: Clinical, 14, 656–662. https://doi.org/10.1016/j.nicl.2017.02.001.

Mirnezami, R., Nicholson, J., & Darzi, A. (2012). Preparing for precision medicine. New England Journal of Medicine, 366(6), 489–491.

Muratore, M., Tuena, C., Pedroli, E., Cipresso, P., & Riva, G. (2019). Virtual reality as a possible tool for the assessment of self-awareness. Frontiers in Behavioral Neuroscience, 13, 62.

Paulsen, J. S., Nance, M., Kim, J., Carlozzi, N. E., Panegyres, P. K., Erwin, C., … Williams, J. K. (2013). A review of quality of life after predictive testing for and earlier identification of neurodegenerative diseases. Progress in Neurobiology, 110, 2–28. https://doi.org/10.1016/j.pneurobio.2013.08.003.

Pievani, M., De Haan, W., Wu, T., Seeley, W. W., Frisoni, G. B., & Giovanni, S. (2011). Functional network disruption in the degenerative dementias. The Lancet Neurology, 10(9), 829–843. https://doi.org/10.1016/S1474-4422(11)70158-2.

Piguet, O., Hornberger, M., Mioshi, E., & Hodges, J. R. (2011). Behavioural-variant frontotemporal dementia: Diagnosis, clinical staging, and management. The Lancet Neurology, 10(2), 162–172. https://doi.org/10.1016/S1474-4422(10)70299-4.

Pritchard, D. E., Moeckel, F., Villa, M. S., Housman, L. T., McCarty, C. A., & McLeod, H. L. (2017). Strategies for integrating personalized medicine into healthcare practice. Personalized Medicine, 14(2), 141–152.

Ramaswami, R., Bayer, R., & Galea, S. (2018). Precision medicine from a public health perspective. Annual Review of Public Health, 39(1.1), 1–16.

Regierer, B., Zazzu, V., Sudbrak, R., Kühn, A., & Lehrach, H. (2013). Future of medicine: Models in predictive diagnostics and personalized medicine. Advances in Biochemical Engineering/Biotechnology, 133, 15–33.

Reitz, C. (2016). Toward precision medicine in Alzheimer’s disease. Annals of Translational Medicine, 4(6), 107. https://doi.org/10.21037/atm.2016.03.05.

Richard Leff, D., & Yang, G.-Z. (2015). Big data for precision medicine. Engineering, 1(3), 277–279.

Roden, D. M. (2016). Cardiovascular pharmacogenomics: Current status and future directions. Journal of Human Genetics, 61(1), 79. https://doi.org/10.1038/jhg.2015.78.

Rodrigues, V., Leite, C., Silva, A., & Paiva, A. (2008). Application on reinforcement learning for diagnosis based on medical image. (C. Weber, M. Elshaw, & N. M. Mayer, Eds.). IntechOpen. https://doi.org/10.5772/5291.

Rosenberg, G. A. (2017). Binswanger’s disease: Biomarkers in the inflammatory form of vascular cognitive impairment and dementia. Journal of Neurochemistry, 144(5), 634–643. https://doi.org/10.1111/jnc.14218.

Schmidhuber, J. (2015). Deep learning in neural networks: An overview. Neural Networks, 61, 85–117. https://doi.org/10.1016/j.neunet.2014.09.003.

Simpraga, S., Alvarez-Jimenez, R., Mansvelder, H. D., Van, J. M. A., Groeneveld, G. J., Poil, S., & Linkenkaer-Hansen, K. (2017). EEG machine learning for accurate detection of cholinergic intervention and Alzheimer’s disease. Scientific Reports, 7, 5775. https://doi.org/10.1038/s41598-017-06165-4.

Smith-Bindman, R., Miglioretti, D., Johnson, E., Lee, C., Feigelson, H., Flynn, M., … Williams, A. E. (2012). Use of diagnostic imaging studies and associated radiation exposure for patients enrolled in large integrated health care systems, 1996–2010. JAMA, 307(22), 2400–2409.

Strafella, C., Caputo, V., Galota, M. R., Zampatti, S., Marella, G., Mauriello, S., … Giardina, E. (2018). Application of precision medicine in neurodegenerative diseases. Frontiers in Neurology, 9, 701. https://doi.org/10.3389/fneur.2018.00701.

Strimbu, K., & Tavel, J. A. (2010). What are biomarkers? Current Opinion in HIV and AIDS, 5(6), 463–466. https://doi.org/10.1097/COH.0b013e32833ed177.

Tuena, C., Serino, S., Dutriaux, L., Riva, G., & Piolino, P. (2019). Virtual enactment effect in young and aged populations: A systematic review. Journal of Clinical Medicine, 8, 620.

Valet, G. K., & Tárnok, A. (2003). Cytomics in predictive medicine. Cytometry Part B: Clinical Cytometry, 53(1), 1–3.

von Davier, A. A. (2017). Computational psychometrics in support of collaborative educational assessments. Journal of Educational Measurement, 54(1), 3–11.

Wang, E., Cho, W. C. S., Wong, C., & Liu, S. (2017). Disease biomarkers for precision medicine: Challenges and future opportunities. Genomics, Proteomics & Bioinformatics, 15(2), 57–58.

Wernick, M. N., Yang, Y., Brankov, J. G., Yourganov, G., & Strother, S. C. (2014). Machine learning in medical imaging. IEEE Signal Processing Magazine, 27(4), 25–38. https://doi.org/10.1109/MSP.2010.936730.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2020 The Author(s)

About this chapter

Cite this chapter

Tuena, C., Semonella, M., Fernández-Álvarez, J., Colombo, D., Cipresso, P. (2020). Predictive Precision Medicine: Towards the Computational Challenge. In: Pravettoni, G., Triberti, S. (eds) P5 eHealth: An Agenda for the Health Technologies of the Future. Springer, Cham. https://doi.org/10.1007/978-3-030-27994-3_5

DOI: https://doi.org/10.1007/978-3-030-27994-3_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-27993-6

Online ISBN: 978-3-030-27994-3

eBook Packages: Behavioral Science and Psychology (R0)