Abstract

The field of liver transplantation has changed since the MELD scoring system became the most widely used donor allocation tool. Due to the MELD-based allocation system, sicker patients with higher MELD scores are being transplanted. Organ donor shortages remain a challenging issue, and as a result, wait-list mortality is a persistent problem in most regions. This chapter focuses on deceased donor and live donor liver transplantation in patients with complications of portal hypertension. Special attention is also given to donor-recipient matching, perioperative management of transplant patients, and the impact of hepatic hemodynamics on transplantation.

Introduction

For a critically ill cirrhotic patient, liver transplant is the only treatment that can provide a chance at long-term survival. In patients who meet the criteria for transplant listing, the current allocation system is designed to direct the next available donor liver to the sickest patient on the list to reduce wait-list mortality. Liver allocation was originally based on overall wait times, the Child-Turcotte-Pugh (CTP) score, and ABO blood type compatibility [1]. However, this allocation scheme had limitations in that longer waiting times on the transplant list did not correspond with increased patient mortality and the CTP score did not adequately represent the general transplant population [1]. The CTP score is based on three laboratory values (prothrombin time, bilirubin, albumin) and two subjective clinical variables (ascites and encephalopathy). Despite the CTP scoring model initially being utilized as part of an organ allocation system, it has never been validated for estimating survival in patients with chronic liver disease [1]. The CTP score is rather reflective of complications of portal hypertension, and its lack of objectivity limited its application to transplant organ allocation [2].

The model for end-stage liver disease (MELD) score was initially developed to determine risk of mortality for the transjugular intrahepatic portosystemic shunt (TIPS) procedure within a 3-month period [3]. The MELD score has subsequently been validated as a severity of liver disease scoring system and predictive mortality tool independent of etiology or occurrence of portal hypertensive complications [1, 2]. Baseline MELD scores have been shown to be significantly associated with wait-list mortality [4]. Since its approval in 2002, the MELD score has helped determine liver allocation for patients awaiting transplantation by providing 3-month predictive mortality [5] and has become the most commonly utilized liver organ allocation tool worldwide. Sicker patients are represented by a higher MELD score and therefore are assigned a higher priority on transplant waiting lists. The main advantage of the MELD score is its objectivity: it is based on three laboratory values (serum INR, bilirubin, and creatinine). It is not a perfect system, because it does not always reflect urgency in patients who have a relatively low physiologic MELD score but clear indications for liver transplantation (e.g., hepatocellular carcinoma, hepatopulmonary syndrome, metabolic disorders) [6]. These patients are usually granted MELD exception points that help them to be competitive for transplants, depending on their region of residence. More recently, the MELD-Na has been introduced to provide a more accurate assessment of wait-list mortality and to take into account the complications of portal hypertension [7].
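To make the three-variable structure of the score concrete, the following is a minimal sketch of how a laboratory MELD and a sodium-adjusted MELD-Na can be computed. The chapter does not state the coefficients; those used here are the commonly cited UNOS/OPTN forms and, together with the bounding rules and the function names, should be read as assumptions for illustration rather than as the allocation algorithm of any particular region.

```python
import math


def meld_score(bilirubin_mg_dl: float, inr: float, creatinine_mg_dl: float,
               on_dialysis: bool = False) -> int:
    """Laboratory MELD, bounded to the 6-40 range described in the text."""
    # Assumed conventions: laboratory values below 1.0 are raised to 1.0 so
    # that no logarithm is negative; creatinine is capped at 4.0 mg/dL and
    # set to 4.0 mg/dL for patients on dialysis.
    bili = max(bilirubin_mg_dl, 1.0)
    inr_bounded = max(inr, 1.0)
    creat = 4.0 if on_dialysis else min(max(creatinine_mg_dl, 1.0), 4.0)
    raw = (3.78 * math.log(bili)
           + 11.2 * math.log(inr_bounded)
           + 9.57 * math.log(creat)
           + 6.43)
    return max(6, min(40, round(raw)))


def meld_na(meld: int, sodium_mmol_l: float) -> int:
    """Sodium-adjusted score (assumed 2016 OPTN form, simplified).

    Simplification: the adjustment is applied to the already rounded MELD.
    """
    if meld <= 11:
        return meld  # the sodium term is only applied above a MELD of 11
    na = min(max(sodium_mmol_l, 125.0), 137.0)  # sodium bounded to 125-137
    adjusted = meld + 1.32 * (137 - na) - 0.033 * meld * (137 - na)
    return max(6, min(40, round(adjusted)))


if __name__ == "__main__":
    lab = meld_score(bilirubin_mg_dl=2.0, inr=1.5, creatinine_mg_dl=1.5)
    print(lab, meld_na(lab, sodium_mmol_l=128))
```

Under these assumptions, hyponatremia raises the score of a patient whose laboratory MELD is otherwise moderate, which is one way the MELD-Na accounts for complications of portal hypertension.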

Despite the advancements within transplantation over the last 20 years, several challenges remain, as organ shortages persist and patients remain on wait-lists for extended periods of time. Due to the current allocation system based on MELD, transplant programs are often offering liver transplants for patients with high MELD scores. This raises new challenges and questions in today’s practice. This chapter aims to outline current evidence for transplantation of patients with high MELD scores, discuss transplant futility, address simultaneous multi-organ transplantation, discuss surgical techniques for complications of cirrhosis at the time of transplantation, discuss postoperative management, and outline the role of living-related transplantation in today’s environment.

Liver Transplantation in High MELD Patients

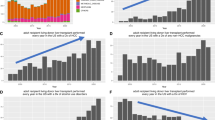

The MELD score has been validated as a scoring tool to prioritize patients on liver transplantation (LT) waiting lists by predicting 3-month mortality risk on a scale from a low of 6 to a high capped at 40, with 83–87% accuracy [1]. Wait-list mortality rises with the MELD score: a MELD score of <9 is associated with an approximate mortality of 2%, and a MELD score ≥40 is associated with a wait-list mortality of 71% [1]. In general, for patients with MELD scores ≤15, the risks of LT likely outweigh the benefit. In low MELD patients, the risk of mortality from LT is greater than that of remaining on the transplant wait-list [8]. These patients are therefore allocated to the bottom of the list and, depending on the program, often not listed until their MELD score increases. Application of the MELD score has reduced the number of patients awaiting LT and lowered peri-transplant mortality [6]. The MELD score has not been able to improve the shortage of available organs. Organ distribution is based on medical urgency, rather than expected posttransplant outcomes. Patients with MELD scores >35 are typically admitted to the ICU, potentially on dialysis, receive hemodynamic or respiratory support [9], and are potential candidates for urgent LT. In such situations, it may seem that patients this sick would not benefit from operative intervention. However, based on a 5-year time frame, the higher the MELD score, the greater the benefit of LT [10]. Survival benefit posttransplantation is seen in MELD scores >40 because this population has the greatest risk of mortality while awaiting LT [11].

Patients with MELD scores >40 were previously thought to be “too sick” to undergo LT. It was believed that organ allocation to this higher risk population was futile and not beneficial for individual patient outcomes or for appropriate resource utilization. Currently, the pretransplant MELD score has not been able to reliably predict posttransplant outcomes [12, 13]. As patients linger on waiting lists, MELD scores continue to increase. It is common to see patients with MELD scores >40 awaiting LT. Low-MELD-score patients may also spend a prolonged amount of time on wait-lists, deteriorate, and become part of the sickest quartile of individuals awaiting LT. Interest lies in assessing which critically ill patients with high MELD scores derive the most benefit from LT. In patients with MELD scores >40, are there additional factors not captured by the MELD score that can predict successful or futile transplantation outcomes? In order to reduce wait times, in 2013, the United States adopted the Share 35 policy, which mandated an increase in regional sharing of organs to patients with MELD scores ≥35 [14].

A Canadian retrospective review assessed the outcomes of 198 critically ill ICU cirrhotic patients undergoing LT with a median MELD score of 34 on ICU admission [15]. The 90-day and 3-year survival were 84 and 62.5%, respectively, despite the fact that 88% of patients received vasopressors, 56% received renal replacement therapy, and 87% were mechanically ventilated prior to transplantation [15]. The same study found that patients >60 years of age had a significantly higher 90-day mortality (27% vs 13%) [15]. A multivariate analysis of 8070 transplant patients aged ≥60 identified that recipient albumin levels <2.5 g/dL, serum creatinine ≥1.6 mg/dL, hospitalization at the time of organ offer, ventilator dependence, presence of diabetes, and recent hepatitis C virus (HCV) positivity were independent predictors of poor patient survival [16]. In this study, the strongest prognostic factor was a combined recipient and donor age equal to or greater than 120 years [16]. Asrani et al. [17] retrospectively reviewed non-HCV cirrhotic LT recipients and identified that patients who had a survival of <50% at 5 years were above 60 years old with median MELD scores of 40. These patients also had multiple medical comorbidities and were on life support at the time of LT. Age >60 in patients with elevated MELD scores has consistently been shown to be associated with worse posttransplantation survival compared to patients with MELD scores >40 and age <60.

Patients with MELD scores >40 have increased wait-list mortality compared to patients with lower MELD scores [1]; however, an elevated MELD score is no longer a contraindication to LT [18]. Studies have shown contradictory results for high MELD score patients and postoperative mortality. Retrospective analysis has demonstrated that cirrhotic patients with MELD scores ≥40 do benefit from LT and have similar 5-year cumulative survival posttransplantation compared to patients with MELD scores <40. However, these patients incur a higher burden of health-care costs [19]. In 2014, the University of California, Los Angeles, group showed similar findings, in which LT in patients with MELD scores >40 was deemed beneficial, with a 5-year patient survival rate above 50% [20]. The same group also identified a subgroup of patients with MELD scores >40 who did not benefit from transplantation. Patients with MELD scores >40 who had septic shock, cardiac risk factors, and other significant comorbidities were found to have a predicted futility of LT of >75% [20]. Prospective analysis has confirmed that the association of elevated health-care costs with MELD scores ≥28 is due to longer hospital and ICU admissions, despite no differences in postoperative survival or complications when compared to MELD scores <28 [18].

Panchal et al. [21] retrospectively reviewed a nationwide transplant database and found that the overall mortality was statistically higher in patients with MELD scores ≥40, compared to patients with a MELD score <30 (30 versus 26%). Despite the significant difference in mortality, the MELD >40 group had a lower mortality rate than initially predicted, which was thought to be secondary to younger age of recipients and a lower prevalence of diabetes, portal vein thrombosis (PVT), HCV, Epstein-Barr virus, TIPS, or prior upper abdominal surgery [21]. This group utilized greater hospital resources (longer pretransplant hospitalization, ICU admission, required mechanical ventilation, and longer hospital length of stay) [21]. Within the same study, in patients with a MELD score >40, recipient age >60, BMI >30, pretransplant hospitalization, or use of extended criteria donors predicted LT futility. The risk of mortality increased by 95%, and graft failure was 60% higher when compared to patients with a MELD <30. Despite the significantly increased risk, there is a perceived benefit to transplanting such sick patients because they have expected survival of >50% at 5 years (64% graft and 69% patient survival) [21]. In recipients with satisfactory graft function, MELD scores >30 are significantly associated with prolonged ICU stay (defined as ≥3 days), which is associated with poor patient and graft survival at 3, 12, and 60 months [22]. However, good LT outcomes can be seen in patients with MELD scores ≥40, where overall 1-, 3-, 5-, and 8-year survival of 89, 79, 75, and 69% can be seen when futile deaths are excluded [20]. There is a definite subgroup of patients in this high-risk category in whom LT becomes futile despite optimal management. Michard et al. performed a single-center retrospective review and identified that, among patients awaiting LT with MELD scores >40, those admitted to the ICU who had elevated lactate (>5 mmol/L) or developed acute respiratory distress syndrome (ARDS) had a poor 3-year survival rate of 29% [23]. In this subpopulation, LT is clearly not beneficial. A comparison of several studies with high MELD scores and associated variables predicting poor patient survival is summarized in Table 14.1.

There are several challenges in offering transplants to patients with high MELD scores. Selecting the most appropriate donor organ for the most appropriate recipient in order to provide the best postoperative survival can be challenging. Single-center experience has demonstrated that high-risk donor organs transplanted in low MELD patients have resulted in lower recipient transplant survival [26, 27]. Furthermore, the quality of the donor organ has not impacted recipient survival in recipients with MELD scores >30 [28]. The complexity of organ allocation systems in individual countries can further complicate organ allocation. In 2013, the Share 35 policy was implemented in order to enhance distribution of organs in the United States for patients with MELD scores ≥35 [29]. Since its implementation, there has been a 36% reduction of organ offers accepted for patients with MELD scores ≥35, while there was no change in organ acceptance for MELD scores <35 [29]. The most common reasons for declining an organ offer were “patient transplanted, transplant in progress, or other offer being considered,” indicating that programs had several offers to choose from and were selectively choosing donors that were deemed to be more optimal [29].

As high-MELD-score patients continue to be transplanted, ongoing study is required to assess how a multidisciplinary approach with surgeons, hepatologists, and anesthesiologists can continue to enhance perioperative care in order to improve short- and long-term outcomes. It is imperative to establish a consensus on independent predictors of successful and futile transplant outcomes in patients with MELD scores ≥35, in order to optimize outcomes in high-risk patients and prevent futile LT.

Liver Transplantation and Futility

Medical futility can be divided into four major types: physiological, imminent demise, lethal condition, and qualitative [30]. When LT was in its surgical infancy, prior to becoming recognized as a life-altering treatment that should be offered to patients with end-stage liver disease (ESLD), the procedure was associated with physiologic futility. Physiologic futility is defined as a proposed treatment that cannot lead to its intended physiologic effect [31], as is the case if a patient with ESLD undergoes LT and does not survive the operation.

Imminent demise futility is closely associated with physiologic futility. In imminent demise futility, a performed action may have prolonged an individual’s life, but only for the very short term. For example, a patient with ESLD undergoes LT and passes away a few days or weeks later without being discharged from hospital. The intent of the transplant was to extend the patient’s life by years, and the result was below this expectation. In this situation there is a subjective perceived benefit; the patient’s life was prolonged; however, the patient may or may not have believed that the short extension of life was of benefit to them. Lethal condition futility is an extension of imminent demise where the expectation is that a patient will pass away in the near term regardless of receiving or undergoing an intervention; however, the short extension of life is deemed appropriate. For example, biliary stent placement in patients with advanced incurable biliary tree tumors does not reduce mortality but provides symptom reduction, thus enhancing remaining quality of life. A controversial definition of futility is qualitative futility, because it requires the scientific assessment of the probability of success for a given treatment [32].

Quantitative futility is defined by Schneiderman et al. [33], “where a treatment should be considered futile is if it has been useless in the last 100 cases, only preserves permanent unconsciousness, or fails to end total dependence on intensive medical care.” Qualitative futility addresses the end result of the intervention performed and whether the functional outcome is acceptable or not [32]. How do we as a society universally agree on what is considered acceptable? Within today’s society there are diversely held cultural and religious beliefs on what defines an acceptable quality of life outcome after an intervention in the setting of potential imminent death. A consensus definition in such a setting would be a milestone achievement. Qualitative futility encompasses the current and future ethical ambiguity surrounding transplantation of very sick, physiologically deranged patients. In today’s environment, the ethical questions and dilemmas are typically no longer dominated by the technical aspects of “can it be done?” but have transitioned to “should it be done?” Performing a highly complicated anastomosis, transplanting a patient with a MELD score > 40 with adverse prognostic indicators, or re-transplanting a patient several times, is no longer technically impossible. The ability to withdraw from aggressive medical treatment in the setting of limitless options should propagate reflection on what we consider optimal versus futile care.

How can we identify what is currently considered futile but will no longer be considered futile in the next decade of LT? Identification and stratification of patients with MELD scores >40 with associated poor predictors of outcome is necessary in order to establish a consensus of specific conditions that independently provide significant postoperative challenges that may be insurmountable to the patient. In such situations, the focus should be on the application of qualitative futility: enhancing remaining quality of life, reducing hospital resource utilization, and preserving organs that might be of a more long-term benefit to other recipients. A consensus on how we define poor outcomes in situations of ESLD and imminent death is required. How do we determine what an acceptable survival rate is, and should it be based on being better than 50%, the flip of a coin? Should there be an objective evaluation, assessing success on a minimum 5-year survival and predetermined cost? We cannot solely focus on what is best for an individual patient. Consideration must also be given to what is best for the next patient awaiting LT. Unfortunately, resource utilization and medical costs are also a mandatory part of the conversation.

Many scoring systems predicting post-LT outcomes are available, and specific definitions of futility have been created by several groups. Petrowsky et al. [20] and Panchal et al. [21] defined futility as a 90-day mortality or in-hospital mortality in patients with MELD scores ≥40. Petrowsky et al. [20] also identified that in patients with MELD scores ≥40 who underwent LT, futility was significantly associated with greater pretransplant morbidity, higher cardiac risk, age-adjusted Charlson comorbidity index of ≥6, life support treatment, and pretransplant septic shock. In this population, cardiac and septic causes of death were significantly higher compared to patients without futility-associated risk factors and MELD scores ≥40. Based on their observed findings, Petrowsky and his group state that despite high medical acuity, patients with high MELD >40 without associated futile risk factors have successful long-term survival, and therefore such patients should be transplanted. Asrani et al. [17], on the other hand, defined futility as any adult recipient with a >50% mortality at 5 years posttransplant. Rana et al. [34] state that LT in any patient with MELD score > 40 is likely futile because the predicted posttransplant mortality is greater than any wait-list mortality as predicted by the MELD score. However, based on previously discussed data, there are subsets of patients with MELD scores >40 that have good posttransplant outcomes, and a general policy of no LT for MELD scores >40 would not be appropriate.

Despite multiple proposed definitions for transplant futility, there are no global consensus criteria that clearly define transplant futility or provide a consensus on LT futility-associated criteria. No guidelines currently propose delisting patients deemed futile for transplantation from wait-lists. Delisting may provide a benefit by optimizing remaining quality of life, rather than proceeding with LT despite poor expected outcomes. For example, should a patient with a MELD score > 40, age > 60 with extensive cardiac risk factors undergoing dialysis, be delisted in order to optimize organ reallocation to another individual? Would family consent be required? What body of governance would make such a decision, and would this be considered too paternalistic of an approach? In North America, institutions review these unfortunate patient situations on a case-by-case basis. Multidisciplinary conferences, where decisions regarding high-risk cases are reviewed, play an important role in assessing not only the recipient but also the potential donor. The Baylor College of Medicine established the Houston City-Wide Task Force on Medical Futility, where a committee was created to preserve and protect patient rights while establishing a fair procedural process for potentially futile clinical situations [30].

With the limited supply of organs, a patient’s transplant candidacy should be evaluated objectively, along with an assessment of whether allocating organs to the critically ill patients at the top of the transplant list is indeed optimal. Establishing a clear set of defined criteria that warrant delisting a patient from a transplant waiting list may help optimize organ allocation and globally improve outcomes. Linecker et al. [35] provide general definitions of futility and propose the concept of “potentially inappropriate” LT by risk profiling a patient’s clinical situation and probability of not surviving the early posttransplant recovery phase. If a predictive score could be validated to accurately prognosticate posttransplant mortality risk and incorporate donor characteristics, enhanced allocation and minimization of futile transplants could occur.

Preoperative Preparation of a Sick Patient for Liver Transplantation

Hepatorenal Syndrome

Please refer to Chaps. 2 and 5 on this topic.

Porto-pulmonary Hypertension

Porto-pulmonary hypertension (POPH) is a disease where secondary pulmonary hypertension develops in the setting of portal hypertension with or without cirrhosis [36]. POPH occurs in 2–10% of all patients with cirrhosis, with approximately 1% of all patients with POPH demonstrating severe symptomatic disease [37]. The diagnosis of POPH is based on right heart catheterization findings and requires a mean pulmonary artery pressure ≥25 mmHg at rest, an elevated pulmonary vascular resistance >240 dynes·s·cm⁻⁵, and a normal pulmonary capillary wedge pressure (PCWP) <15 mmHg [38]. Classification of mild, moderate, and severe disease is based on mean pulmonary artery pressures of >25 to <35, ≥35 to <45, and ≥45 mmHg, respectively [39].
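The hemodynamic criteria above can be expressed as a short rule set. The following sketch assumes the thresholds exactly as quoted in this section; the function names are hypothetical, and the conversion of catheterization measurements into a resistance uses the standard relation PVR = 80 × (mPAP − PCWP) / cardiac output, which is not stated in the chapter. A pressure of exactly 25 mmHg meets the diagnostic threshold and is treated here as mild, which is an assumption made to reconcile the two sentences.

```python
from typing import Optional


def pvr_dynes(mpap_mmhg: float, pcwp_mmhg: float,
              cardiac_output_l_min: float) -> float:
    """Pulmonary vascular resistance in dynes·s·cm^-5.

    Standard hemodynamic formula (an addition, not taken from the chapter):
    the transpulmonary gradient divided by cardiac output gives Wood units,
    and multiplying by 80 converts Wood units to dynes·s·cm^-5.
    """
    return 80.0 * (mpap_mmhg - pcwp_mmhg) / cardiac_output_l_min


def classify_poph(mpap_mmhg: float, pvr: float,
                  pcwp_mmhg: float) -> Optional[str]:
    """Return 'mild', 'moderate', or 'severe', or None if criteria are unmet."""
    meets_diagnosis = mpap_mmhg >= 25 and pvr > 240 and pcwp_mmhg < 15
    if not meets_diagnosis:
        return None
    if mpap_mmhg < 35:
        return "mild"
    if mpap_mmhg < 45:
        return "moderate"
    return "severe"


if __name__ == "__main__":
    resistance = pvr_dynes(mpap_mmhg=40, pcwp_mmhg=10, cardiac_output_l_min=5)
    print(resistance, classify_poph(40, resistance, 10))
```

An elevated wedge pressure returns None in this sketch, reflecting the requirement that the PCWP be normal before pulmonary hypertension is attributed to POPH.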

Untreated POPH is considered a relative contraindication to LT, and a mean pulmonary artery pressure >35 mmHg is an absolute contraindication. After reperfusion of the transplanted liver, the increased venous return loads the right heart with volume and pressure against a high pulmonary resistance, resulting in right heart failure and likely death. All potential liver transplant patients are screened with a transthoracic echocardiogram, in which right ventricular systolic pressure (RVSP) is estimated from the tricuspid regurgitant jet. If the RVSP is elevated, these patients undergo further testing with right heart catheterization. It is important to distinguish between primary pulmonary hypertension and volume overload, which commonly occurs in patients with cirrhosis. In centers that routinely use Swan-Ganz catheters in LT, this simple measurement can identify undiagnosed pulmonary hypertension before the operation starts, allowing the transplant team to abort the case if the pulmonary pressure is too high (>35 mmHg).

Pharmaceutical vasodilators such as prostacyclin analogues, phosphodiesterase inhibitors, and endothelin receptor antagonists lower mean pulmonary artery pressures and allow for clinical stability evidenced by improved pulmonary hemodynamics [38, 40, 41]. However, medically treated POPH patients’ 5-year survival is only 40–45% [42, 43], while pretreatment with prostacyclin therapy with LT can improve survival up to 67% [43].

Patients with mean pulmonary artery pressure ≤35 mmHg and pulmonary vascular resistance <400 dynes·s·cm⁻⁵ can be considered transplant candidates and can receive an exception MELD score of 22 points [44]. Patients who do not meet transplant criteria can be medically treated to a mean pulmonary artery pressure <35 mmHg and pulmonary vascular resistance <400 dynes·s·cm⁻⁵; MELD exception points can then be provided and increased by 10% every 3 months if hemodynamic improvement continues [45]. POPH patients who are transplant eligible also have significant mortality potential. It has been shown that wait-list mortality or removal from the wait-list secondary to clinical decompensation is 23.2%, with a median wait-list time of 344 days [46]. Age, initial MELD score, and pulmonary vascular resistance are independent risk factors for wait-list mortality [46]. Patients with the lowest wait-list mortality are those with MELD score ≤12 and initial pulmonary vascular resistance of ≤450 dynes·s·cm⁻⁵ [46].
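As a rough illustration of how the exception thresholds in the paragraph above interact with a patient's laboratory MELD, the sketch below returns the score a POPH candidate would compete with. The function name is hypothetical. Two assumptions are made: the stricter of the two quoted pressure cut-offs (<35 mmHg) is used, and the allocation score is taken as the higher of the laboratory MELD and the 22 exception points. The 10% increase every 3 months is deliberately not modeled, because the chapter does not specify how it is calculated.

```python
POPH_EXCEPTION_POINTS = 22  # exception score quoted in the text


def poph_allocation_meld(lab_meld: int, mpap_mmhg: float, pvr: float) -> int:
    """Score used for allocation under the assumptions stated above.

    pvr is the resistance in dynes·s·cm^-5 measured at right heart
    catheterization, after treatment where treatment was needed.
    """
    eligible = mpap_mmhg < 35 and pvr < 400
    if not eligible:
        return lab_meld  # no exception points; laboratory MELD stands
    # Assumption: the exception never lowers a higher laboratory score.
    return max(lab_meld, POPH_EXCEPTION_POINTS)


if __name__ == "__main__":
    print(poph_allocation_meld(lab_meld=14, mpap_mmhg=32, pvr=350))
    print(poph_allocation_meld(lab_meld=14, mpap_mmhg=42, pvr=350))
```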

Data from the Scientific Registry of Transplant Recipients between 2002 and 2010 were retrospectively reviewed by Salgia et al., who identified 78 out of 34,318 patients who underwent cadaveric transplantation for POPH with MELD exception points [38]. The unadjusted 1- and 3-year patient survival for recipients with POPH was 85 and 81%, while graft survival was 82 and 78%, respectively. After adjusting for donor and recipient factors, POPH recipients had a significantly higher adjusted risk of death and graft failure within the first posttransplant year compared to non-POPH transplants [38]. DuBrock et al. have reported an unadjusted 1-year posttransplant mortality rate of 14%, similar to Salgia et al. [46]. Rajaram et al. performed a 10-year retrospective review between 2005 and 2015 with the objective of comparing posttransplant outcomes of patients diagnosed with POPH and pulmonary venous hypertension versus patients without pulmonary hypertension [47]. The authors identified 28 patients with POPH, 13 of whom underwent LT with an average MELD score of 21 [47]. One patient passed away intraoperatively; 30-day survival was 92.3%, and 1-year survival was 69.2% compared to a 1-year survival of 100% in the non-pulmonary hypertension group [47]. A recent systematic review demonstrated a 1-year posttransplant mortality rate of 26% for POPH compared to 12.7% in non-POPH patients [48]. A retrospective national cohort study of 110 POPH patients in the United Kingdom identified no difference in survival between cirrhotic and non-cirrhotic patients, and the overall survival rate at 1, 2, 3, and 5 years was 85, 73, 60, and 35% [49].

Renal Failure and Liver Transplantation

Please refer to Chap. 5 on this specific topic.

An alternate treatment strategy for liver transplant candidates with renal insufficiency is to proceed with LT and assess for the development of postoperative renal insufficiency [51]. In high MELD patients undergoing simultaneous liver-kidney transplantation (SLKT), there is a high risk of renal allograft failure. As such, it has been suggested that liver-alone transplantation should be performed, with assessment at 3 months posttransplant for potential prioritization for kidney allocation [53]. Fong et al. reported that renal allograft and patient survival were significantly lower in patients undergoing SLKT compared to isolated kidney transplantation [54].

The potential benefits of SLKT have been an ongoing debate, as there is no high-quality evidence demonstrating which patients benefit most from SLKT. A cited benefit of SLKT is immune protection of the renal allograft, with lower rates of acute and chronic rejection compared to sequential kidney transplant [55]. The potential drawback of SLKT is that liver recipients receive a donor kidney when their native kidney might in fact recover, resulting in a lost organ for a patient waiting for kidney-only transplantation [53]. The ultimate goal in selecting patients for SLKT is to identify which ESLD transplant candidates will develop or have irreversible kidney damage at the time of transplantation and therefore will ultimately benefit from a single operation. The difficulty is that there is no reliable method to identify which liver transplant candidates with concurrent kidney injury will recover renal function and which will eventually require a renal transplant post LT [52]. Currently, there is no universal policy for SLKT. In 2015, Puri and Eason summarized the evolution of recommendations and guidelines for SLKT [56] (Table 14.2).

Combined Liver and Thoracic Transplantation

Combined liver and thoracic transplantation is rare. From 1995 to 2016, there have been 17 single-center published reports [58]. Combined heart and liver transplant (CHLT) is only performed at a few select high-volume centers. From 1988 to 2015, there have been 192 CHLTs performed in the United States [59]. The rate of CHLTs being performed in the United States is rapidly increasing. A retrospective review of the UNOS database between 1987 and 2010 identified 97 reported cases of CHLTs [60]. The two most common primary cardiac diagnoses were amyloidosis (26.8%) and idiopathic dilated cardiomyopathy (14.4%), while the two most common primary liver diagnoses were amyloidosis (27.8%) and cardiac cirrhosis (17.5%) [60]. Other common indications for CHLT are heart and liver failure secondary to hemochromatosis or familial hypercholesterolemia, and ESLD in patients who have severe heart disease and are unfit for liver-alone transplantation [61]. Beal et al. summarized the following number of CHLTs performed at high-volume centers within the United States: Mayo Clinic (n = 33), Hospital of the University of Pennsylvania (n = 31), University of Pittsburgh Medical Center (n = 14), University of Chicago Medical Center (n = 13), Methodist Hospital (n = 13), and Cedars-Sinai Medical Center (n = 9), with the remaining centers performing ≤7 CHLT each [59].

Cannon et al. reported that liver graft survival in 97 CHLT was 83.4, 72.8, and 71% at 1, 5, and 10 years, while cardiac graft survival was 83.5, 73.2, and 71.5%, respectively [60]. An interesting observation was that patients who received CHLT had lower rates of acute rejection compared to patients undergoing isolated heart transplantation [60]. A retrospective study from Mayo Clinic demonstrated that the incidence of T-cell-mediated rejection was 31.8% in CHLT recipients compared to 84.8% in isolated heart transplant recipients, with a similar overall incidence of antibody-mediated rejection [62]. Cannon et al. note that the average MELD score at the time of CHLT was 13.8; however, the wait-list mortality for these patients would have been higher compared to patients with isolated hepatic failure with similar MELD scores [60]. Between January 1997 and February 2004, there were 110 patients wait-listed for CHLT within the United States; 33 patients (30%) underwent CHLT, 30 patients (27%) died, 11 patients (10%) were still wait-listed, and 34 patients received single-organ or sequential organ transplant or were awaiting transplant of the second organ [59]. A large single-center case series from the University of Bologna reported on 14 patients with combined heart and liver failure, of whom 13 underwent CHLT and 1 underwent combined heart-liver-kidney transplantation. The 1-month, 1-year, and 5-year survival rates were 93, 93, and 82%, respectively, while freedom from graft rejection at 1, 5, and 10 years was 100, 91, and 36% for the heart and 100, 91, and 86% for the liver [61].

Patients with end-stage pulmonary disease and ESLD who are not expected to survive with only a single-organ transplant can be considered for combined lung and liver transplantation (CLLT).

Isolated lung transplantation should be considered if there is a >50% risk of mortality secondary to the primary lung disease within 2 years if a lung transplant is not performed, a >80% chance of survival at 90 days after lung transplantation, and a >80% chance of 5-year posttransplant survival with adequate graft function [63]. In addition to these lung transplant indications, if there is biopsy-proven cirrhosis with a portal pressure gradient >10 mmHg, a CLLT can be considered [63]. Contraindications to CLLT include albumin <2.0 g/dL, INR >1.8, presence of severe ascites, or encephalopathy [63].
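The thresholds above can be expressed as a simple checklist. The following is a minimal illustrative sketch only, not a clinical decision tool: the class and function names are hypothetical, probabilities are assumed to be supplied as fractions between 0 and 1, and the listed contraindications are assumed to act as independent exclusions.

```python
from dataclasses import dataclass


@dataclass
class CLLTAssessment:
    """Hypothetical container; the thresholds applied below are those quoted from [63]."""
    two_year_mortality_without_lung_tx: float  # fraction, 0..1
    survival_90_days_after_lung_tx: float      # fraction, 0..1
    survival_5_years_after_lung_tx: float      # fraction, 0..1
    biopsy_proven_cirrhosis: bool
    portal_pressure_gradient_mmhg: float
    albumin_g_dl: float
    inr: float
    severe_ascites: bool
    encephalopathy: bool


def meets_lung_transplant_indication(a: CLLTAssessment) -> bool:
    # >50% 2-year mortality without transplant, >80% 90-day and 5-year survival with it
    return (
        a.two_year_mortality_without_lung_tx > 0.50
        and a.survival_90_days_after_lung_tx > 0.80
        and a.survival_5_years_after_lung_tx > 0.80
    )


def has_cllt_contraindication(a: CLLTAssessment) -> bool:
    # Assumption: any single listed contraindication is sufficient to exclude
    return (
        a.albumin_g_dl < 2.0
        or a.inr > 1.8
        or a.severe_ascites
        or a.encephalopathy
    )


def cllt_can_be_considered(a: CLLTAssessment) -> bool:
    return (
        meets_lung_transplant_indication(a)
        and a.biopsy_proven_cirrhosis
        and a.portal_pressure_gradient_mmhg > 10
        and not has_cllt_contraindication(a)
    )
```

In practice, candidacy is decided by multidisciplinary review at each center; the sketch only makes the quoted cutoffs explicit.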

Similar to CHLT, CLLT is rarely performed, and experience is limited to single-center or multicenter case reports. Double lung transplant is performed more often than single lung transplant during CLLT. The most common indication for CLLT is cystic fibrosis with pulmonary and liver involvement. Other indications include POPH with ESLD, hepatopulmonary fibrosis, alpha-1 antitrypsin deficiency with advanced lung and liver involvement, and sarcoidosis [64]. As with CHLT, there is a postulated immunological benefit for combined transplant, in which the liver graft is immune protective [65, 66]. There are no standardized recommendations available for CLLT, and candidacy is evaluated at each center by multidisciplinary committee review [64]. Potential CLLT candidates need to be placed on the individual organ wait-lists. Prior to 2005, the United States and the Eurotransplant region allocated lungs based on patient waiting time [67]. In May 2005, the lung allocation score (LAS), which comprises several clinical and laboratory parameters, was introduced, and in the United States the LAS has replaced waiting time for determining priority for donor lungs [67]. Other European countries have followed suit over the years.

Patients undergoing CLLT derive a significant survival benefit; however, there is a higher risk of wait-list mortality compared to single-organ transplantation [64, 68]. Survival rates are improving for CLLT. In 2008, Grannas et al. reported the largest published single-center cohort of CLLT with 1- and 5-year survival rates of 69 and 49% [69]. A retrospective review of 14 consecutive patients who underwent simultaneous liver and thoracic transplantation included 10 patients who underwent CLLT [58]. In seven CLLT patients, the lung was transplanted prior to the liver, and in three patients a liver-first approach was used while the lungs were perfused ex vivo [58]. One hundred percent of the CLLT patients were alive at 1 and 5 years, with 10% suffering acute liver rejection, 40% acute lung rejection, and 10% chronic liver/lung rejection [58]. One of the largest single-center American series included 8 patients who underwent CLLT with reported patient and graft survival of 87.5, 75, and 71% at 30 days, 90 days, and 1 year [70].

CLLT can be performed with a liver-first approach or, alternatively, with a lung-first approach. Theoretical advantages of the liver-first approach include reduced complications of hepatic reperfusion, potentially reduced need for blood products, reduced incidence of donor pulmonary edema, and reduced incidence of biliary strictures [58]. Techniques for CLLT in critically ill patients continue to evolve. Extracorporeal membrane oxygenation with central cannulation has successfully been implemented after lung transplantation and prior to orthotopic LT in order to manage extensive pulmonary reperfusion edema and right heart insufficiency [71].

Intraoperative Preparation of a Critically Ill Recipient for Liver Transplant

Historically, adult orthotopic LT has been associated with massive hemorrhage with median red blood cell (RBC) transfusion rates of 28.5 units per case [72]. With improved surgical technique, intraoperative anesthetic management, transfusion medicine, and improved understanding of coagulation abnormalities [73] associated with cirrhosis, intraoperative transfusion rates have been steadily decreasing over the last 20 years [74]. Patients with low MELD scores can undergo transplantation with 0.3 units of packed RBCs without plasma, platelet, or cryoprecipitate transfusion [75], while increased INR and presence of ascites have been independently correlated with increased intraoperative blood product utilization [76, 77].

With reduced blood product transfusions, survival posttransplantation has improved [79, 80]. In fact, transfusion of one or more units of plasma has been associated with a 5.1-fold increase in mortality risk compared to no plasma received [81]. A retrospective analysis of 286 transplant recipients found that, after a mean follow-up of 32 months, the strongest predictor of overall survival was the number of blood transfusions [77]. In order to identify which transplant recipients are at an increased risk of requiring intraoperative blood products, McCluskey et al. developed a risk index score for massive blood transfusion and identified 7 preoperative variables: age >40 years, hemoglobin ≤10 g/dL, INR 1.2–1.99, INR >2, platelet count ≤70 × 10⁹/L, creatinine >110 µmol/L (females) or >120 µmol/L (males), and repeat LT [82].
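As an illustration, the preoperative variables listed above can be screened programmatically. The sketch below only flags which variables are present; it does not reproduce the published point weights or risk cutoffs of the index [82], which are not given here. The function and parameter names are hypothetical, and treating an INR of exactly 2.0 as part of the lower band is an assumption.

```python
def preoperative_transfusion_risk_flags(
    age_years: float,
    hemoglobin_g_dl: float,
    inr: float,
    platelets_10e9_per_l: float,
    creatinine_umol_l: float,
    sex: str,          # "female" or "male"
    repeat_lt: bool,
) -> list[str]:
    """Return the preoperative variables from the text that are present.

    This is a plain checklist, not the weighted McCluskey index.
    """
    flags = []
    if age_years > 40:
        flags.append("age > 40 years")
    if hemoglobin_g_dl <= 10:
        flags.append("hemoglobin <= 10 g/dL")
    if inr > 2:
        flags.append("INR > 2")
    elif inr >= 1.2:
        # Assumption: an INR of exactly 2.0 is grouped with the 1.2-1.99 band
        flags.append("INR 1.2-1.99")
    if platelets_10e9_per_l <= 70:
        flags.append("platelets <= 70 x 10^9/L")
    # Sex-specific creatinine thresholds as quoted in the text
    creatinine_limit = 110 if sex == "female" else 120
    if creatinine_umol_l > creatinine_limit:
        flags.append("elevated creatinine")
    if repeat_lt:
        flags.append("repeat LT")
    return flags
```

For example, a 55-year-old man with hemoglobin 9.5 g/dL, INR 2.3, platelets 60 × 10⁹/L, and creatinine 130 µmol/L undergoing a first transplant would carry five of the listed variables.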

Normal hemostasis requires a balance between the coagulation and the fibrinolytic systems. One of the pathophysiologic complications of end-stage cirrhosis is the reduced ability or inability of the liver to synthesize new coagulation factors or clear activated ones [83]. During technically challenging cases, surgical bleeding can be magnified by the inability of the recipient liver to produce coagulation factors and platelets for necessary clot formation. A majority of cirrhotic patients will exhibit some form of thrombocytopenia, which is secondary to increased platelet activation, consumption, and splenic sequestration of platelets associated with portal hypertension [84]. Although the total number of platelets is reduced, it has been shown that the remaining platelets have increased activity secondary to increased levels of von Willebrand factor and decreased levels of ADAMTS13 [85]. All the coagulation factors are synthesized by the liver, the only exception being factor VIII. In cirrhotic patients the levels of vitamin K-dependent factors fall by 25–70% [86].

Cirrhosis-induced thrombocytopenia in conjunction with prolonged prothrombin time (PT) and activated partial thromboplastin time (aPTT) was previously thought to be indicative of an increased bleeding risk [85, 86]. However, cirrhotic patients have a “rebalanced” hemostasis of anticoagulant and procoagulant cascades [85]. Furthermore, the etiology of cirrhosis can impact the balance between coagulopathy and thrombosis [83]. In the critically ill cirrhotic recipient prior to LT, superimposed infections, renal injury, endotoxins, and imbalances of coagulation factors [87] contribute to the coagulopathy seen intraoperatively. Understanding the coagulopathic profile of severely cirrhotic patients and the impact of the phases of LT is important in order to anticipate intraoperative challenges.

The initial abdominal incision made is based on surgeon preference. Commonly utilized incisions for opening the abdomen include a bilateral subcostal incision with upper midline laparotomy (Mercedes incision) or an upper midline laparotomy with a right lateral extension (Cheney incision). Table-mounted Thompson, Omni, or Bookwalter retractors are used to help facilitate intra-abdominal exposure, and choice of retractor is typically also dependent on surgeon preference. When the abdomen is opened, it is important to be cognizant of patients with ascites. Quick removal of large-volume ascites upon entering the abdomen can potentially result in a rapid shift of recipient hemodynamics.

The general steps of LT are divided into pre-anhepatic, anhepatic, and neohepatic/reperfusion phases. The pre-anhepatic phase refers to recipient hepatectomy and is completed once the vascular inflow/outflow has been controlled and clamped. Once vascular inflow and outflow have been clamped, the anhepatic phase begins, and the recipient liver is removed. The anhepatic phase continues with implantation of the new donor liver and subsequent IVC and portal vein anastomosis. The neohepatic/reperfusion phase begins with unclamping of the venous inflow and outflow, perfusion of the donor liver, and venous return to the heart. Subsequently the hepatic arterial and biliary anastomoses are performed, and the neohepatic phase is complete. Recipient warm ischemia time generally refers to the interval from explantation of the recipient liver to implantation of the donor liver with established flow through the donor graft.

During the pre-anhepatic phase, the recipient liver is completely mobilized by taking down the falciform, triangular, and coronary ligaments of the liver. Once the liver has been mobilized, portal dissection is performed in order to identify and isolate the common bile duct, right/left and common hepatic arteries, and the portal vein. Dissection of the gastrohepatic ligament provides access to the portal structures. The common bile duct, right and left hepatic arteries, and portal vein are subsequently ligated. The common bile duct should be resected just distal to the cystic duct. The gastroduodenal artery should be identified; however, it does not routinely need to be ligated.

In severely cirrhotic patients with portal hypertension, the pre-anhepatic phase is usually associated with the greatest amount of bleeding. The surgeon may encounter several potentially large portosystemic collaterals in the setting of the previously described hyperdynamic circulation [88], complicating mobilization and dissection of the recipient liver. Adhesions secondary to prior upper abdominal surgery can further complicate the hepatectomy phase [89], and previous abdominal surgery has been found to be an independent risk factor for blood transfusion requirements [90]. Reduced availability of coagulation factors and platelets impairs the normal hemostatic response to surgical bleeding.

During the recipient hepatectomy, measurement and prophylactic treatment of abnormal bleeding time (BT), PT, INR, and aPTT have been common practice in order to help control anticipated surgical bleeding. However, as early as 1997, it was identified that aggressively correcting laboratory coagulation abnormalities prior to the anhepatic phase of transplantation is not required and that over-resuscitation during the pre-anhepatic phase may lead to extensive blood loss [91]. Prophylactic administration of FFP and RBCs contributes to blood loss by increasing splanchnic pressure in an already hyperdynamic circulatory state. The additional infused volume will eventually circulate back to the heart during the neohepatic phase [92]. As such, the utility of prophylactic treatment of abnormal laboratory values in cirrhotic patients has been questioned [84, 87, 93]. An evolving trend is the minimization of blood product transfusions during LT.

In general, there are two anhepatic techniques of LT: caval interposition and caval sparing (i.e., piggyback technique). The classic caval interposition technique begins with a retrohepatic caval dissection with cross-clamping of both the suprahepatic and infrahepatic inferior vena cava (IVC). This is followed by removal of the recipient liver and interposition of the liver graft, with anastomosis of the donor IVC to the recipient suprahepatic and infrahepatic IVC. Suprahepatic and infrahepatic IVC reconstruction is performed with 3-0 or 4-0 Prolene sutures in a running fashion. Prior to completion of the infrahepatic caval anastomosis, the donor portal vein is flushed with a preservation solution in order to rid the liver of accumulated toxins that may contribute to reperfusion syndrome. Once flushing is complete, the donor portal vein is anastomosed to the recipient portal vein in an end-to-end fashion with 6-0 Prolene sutures. Typically, a shorter portal vein reconstruction is preferred over a longer donor/recipient portal vein reconstruction with the hope of reducing kinking or development of postoperative portal vein thrombosis.

Re-establishment of blood flow with unclamping of the IVC and portal anastomosis completes the anhepatic phase, and reperfusion of the liver begins. Alternative flushing techniques have been described, and the choice is based on surgeon preference. Historically, venovenous bypass was used in conjunction with classic caval reconstruction. The purpose was to provide venous return when the usual caval venous return to the heart is interrupted [94]. Nowadays, it is commonplace to perform the caval interposition technique without venovenous bypass.

Alternate caval reconstruction techniques such as the piggyback [95] or side-to-side [96] caval anastomosis only require partial occlusion of the recipient suprahepatic IVC. The recipient liver is mobilized off of the recipient IVC, while the IVC is left intact. Care must be taken while dissecting the liver off the IVC as retrohepatic veins may easily tear, cause further bleeding, and potentially damage the IVC.

In the piggyback technique, the donor hepatic vein can be anastomosed to two or three recipient hepatic veins. If only two hepatic veins are used, then the right hepatic vein is ligated. The piggyback technique with partial IVC occlusion provides a theoretical advantage of maintaining venous blood flow from the infrahepatic IVC to the heart. Maintaining cardiac preload is believed to preserve hemodynamic stability and therefore avoid large intraoperative fluid infusions and the potential need for vasopressors. Additional suggested advantages of partial caval occlusion include a shorter anhepatic phase and a possible decreased incidence of renal injury [97]. Moreno-Gonzalez et al. retrospectively identified that the piggyback technique was associated with longer operative times but also with less intraoperative hemodynamic instability and lower requirements for RBC transfusions, pressors, and fluid administration [98]. Graft outflow obstruction and increased incidence of bleeding from the caval anastomosis are recognized potential complications of the piggyback technique [97]. Caval obstruction associated with the piggyback technique is thought to be secondary to a large donor graft causing compression or an inadequate graft size that can result in twisting of the caval anastomosis, ultimately leading to hepatic venous outflow obstruction [99].

The transition from the anhepatic to neohepatic phase is critically important as there is no functioning liver during the anhepatic phase. No clotting factors are produced, and the concentration of tissue plasminogen activator increases, which contributes to fibrinolysis [100]. The accumulation of citrate leads to increased binding of ionized calcium, and calcium is an important cofactor for proper hemostasis [101]. Pooled systemic blood below the IVC clamp becomes cold and hyperkalemic as lactic acid, toxic metabolites, cytokines, and free radicals accumulate and cannot be removed [102]. When the IVC and portal vein clamps are removed, circulation is restored, and the donor liver receives the systemic blood and forwards it toward the recipient heart while the portal vein provides a fresh inflow of blood.

At this critical time, reperfusion syndrome can induce recipient hemodynamic instability as the pooled systemic blood is returned to the heart. Hilmi et al. classified postreperfusion syndrome (PRS) as mild or severe [102]. Mild PRS occurs when the decrease in blood pressure and/or heart rate is <30% of the anhepatic level, lasts for ≤5 minutes, and is responsive to a 1 g intravenous bolus of calcium chloride and/or intravenous boluses of epinephrine (≤100 µg) without requiring continuous infusion of vasopressor agents [102]. Severe PRS is defined as the presence of persistent hypotension (a decrease of >30% from the anhepatic level), asystole, significant arrhythmias, or a requirement for intraoperative or postoperative vasopressor support [102]. Severe PRS is additionally defined as prolonged (>30 minutes) or recurrent fibrinolysis requiring treatment with antifibrinolytics. The three main categories that contribute to the development of PRS are donor/organ related, recipient related, and procedure related [103]. Prolonged warm ischemia time, typically >90 minutes, is a procedure-related factor that can increase the risk of developing PRS.
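The mild/severe distinction described above can be sketched as a small classifier. This is an illustrative reading of the definitions attributed to Hilmi et al. [102], not a validated tool: the function and parameter names are hypothetical, any single severe feature is assumed to be sufficient for a severe classification, and the "none" and "unclassified" outcomes are added here only to handle inputs that the quoted definitions do not cover.

```python
def classify_prs(
    percent_drop_from_anhepatic: float,   # decrease in blood pressure and/or heart rate, in %
    duration_minutes: float,
    responds_to_boluses: bool,            # calcium chloride and/or epinephrine boluses
    needs_vasopressor_support: bool,      # continuous intra- or postoperative support
    asystole_or_significant_arrhythmia: bool,
    prolonged_or_recurrent_fibrinolysis: bool,
) -> str:
    """Classify postreperfusion syndrome using the thresholds quoted in the text."""
    # Assumption: any one of the severe features qualifies as severe PRS
    if (
        percent_drop_from_anhepatic > 30
        or asystole_or_significant_arrhythmia
        or needs_vasopressor_support
        or prolonged_or_recurrent_fibrinolysis
    ):
        return "severe"
    if percent_drop_from_anhepatic <= 0:
        # No hemodynamic decrease recorded (category added for this sketch)
        return "none"
    if (
        percent_drop_from_anhepatic < 30
        and duration_minutes <= 5
        and responds_to_boluses
    ):
        return "mild"
    # Combinations not addressed by the quoted definitions
    return "unclassified"
```

A 20% drop lasting 3 minutes that responds to a calcium chloride bolus would be classified as mild, whereas any episode requiring a vasopressor infusion would be classified as severe.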

The reality is that there is an interplay between many risk factors that contribute to PRS. In the setting of a technically straightforward transplant with an optimal donor, the new liver begins to produce coagulation factors immediately, and is able to metabolize systemic toxins, thus avoiding PRS and potential primary graft nonfunction. In the setting of a technically challenging transplant and higher donor risk index organ, the newly implanted liver may have difficulty in initially metabolizing the pooled systemic blood while simultaneously synthesizing necessary coagulation and antithrombotic factors.

During the neohepatic phase, the donor and recipient hepatic arteries are reconstructed with 6-0 to 7-0 Prolene sutures depending on size of the hepatic artery. Various arterial reconstruction techniques can be employed along with different recipient and donor arteries depending on donor and recipient anatomy [104]. Commonly, an end-to-end parachute technique (between donor and recipient common hepatic arteries) is performed. Alternatively, a Carrel patch of donor celiac artery can be anastomosed to the recipient common hepatic artery. A cholecystectomy and bile duct reconstruction are performed, and the abdomen is closed. If technically feasible, an end-to-end bile duct anastomosis is preferred. Alternatively, a Roux-en-Y hepaticojejunostomy can be performed. Intra-abdominal drains are placed at the surgeon’s discretion.

Point-of-care coagulation monitoring with thromboelastography (TEG) or rotational thromboelastometry (ROTEM) has become commonly utilized within LT. Both TEG and ROTEM measure the viscoelastic properties of clot formation via whole blood assay tests that analyze the phases of clot formation [105] and fibrinolysis [106]. Both technologies can measure coagulopathy more accurately than standardized laboratory tests. Additionally, TEG and ROTEM have fast turnaround times. Standard laboratory tests measure coagulation in plasma, are associated with a 40–60-minute delay, and platelet function is not concurrently assessed [107].

Preoperative TEG has been shown prospectively to help predict which patients will require massive transfusion within 24 hours of surgery [108]. Preoperative ROTEM has also shown promise in predicting bleeding risk during LT [109]. A prospective randomized trial of 28 patients undergoing orthotopic LT compared intraoperative TEG monitoring with standard laboratory measures. Intraoperative TEG monitoring was shown to significantly reduce plasma transfusion (12.8 U vs 21.5 U); however, 3-year survival was not affected [110]. Furthermore, intraoperative use of prothrombin complex and cryoprecipitate guided by ROTEM has been shown to result in significantly fewer RBC and FFP units being transfused [111]. Overall, ROTEM and TEG have been shown to help reduce perioperative blood loss and blood transfusions and are rapidly becoming indispensable adjuncts during LT [106].

With the wide adoption of tranexamic acid (TXA) to help reduce bleeding in trauma, there has been interest in adopting the use of TXA during LT. A large systematic review and meta-analysis of liver transplant recipient outcomes comparing the use of antifibrinolytics to placebo found that there was no increased risk for hepatic artery thrombosis, venous thromboembolic events, or perioperative mortality [112]. However, international recommendations advise against the prophylactic use of TXA [113] unless fibrinolysis is detected clinically or with point-of-care testing. ROTEM has also been demonstrated to be helpful in guiding resuscitation in response to hyperfibrinolysis [114].

Postoperative Management After Liver Transplantation

Systemic and renal vascular changes associated with cirrhosis-induced hyperdynamic circulation have been demonstrated to return to normal after LT. However, several authors have also demonstrated that cirrhosis-induced hyperdynamic circulation persists for a long period of time post-LT despite normalization of liver function and portal pressure [115, 116]. Living donor liver transplant (LDLT) recipients with a good postoperative course have been found to have significantly higher portal venous velocity and volume compared to LDLT recipients with graft failure, while no significant differences were observed in absolute cardiac output, cardiac index, blood volume, mean arterial pressure, and hepatic arterial flow [116].

Postoperative LT complications can be divided into acute and chronic and further divided into vascular and nonvascular complications. Reported vascular complications include hepatic artery stenosis (2–13%), portal vein stenosis (2–3%), arterial dissection, pseudoaneurysm (most commonly at the hepatic arterial anastomosis), and hepatic artery rupture (0.64%) [117]. Hepatic pseudoaneurysms typically appear in the second to third weeks post-LT with an incidence of 2.5% [118].

Hepatic artery thrombosis (HAT) is the most common acute vascular complication and is considered to be the most devastating as it contributes to bile duct necrosis, graft loss requiring re-transplantation, and overall mortality rates between 27 and 58% [119]. Early HAT is defined as occurring within 1 month of LT and has a higher reported mortality rate compared to late HAT (defined as >1 month post-LT) [120]. Early HAT incidence can range from 0 to 12% [117]. A systematic review of 21,822 liver transplants identified 843 cases of early HAT with a mean incidence of 3.9% and without any significant difference between transplant centers worldwide [121]. Of note, this large review defined early HAT as occurring within 2 months of LT. The authors identified that low-volume centers (<30 transplants per year) had a higher incidence of early HAT compared to high-volume centers (5.8% vs 3.2%) [121]. Furthermore, it was demonstrated that pediatric HAT occurred with significantly higher incidence compared to adults (8.3% vs 2.9%) [121]. There was no significant difference in the incidence of HAT between deceased donor LT (4.6%) and LDLT (3.1%) [121]. The median time to diagnosis of early HAT is 6.9 days and of late HAT is 6 months [121, 122].
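As a quick arithmetic check, the crude proportion of early HAT in the pooled review can be recomputed from the counts quoted above. The helper name below is hypothetical, and the crude proportion is shown only because it agrees with the reported 3.9%; the review's own method of averaging is not described here.

```python
def incidence_percent(cases: int, total: int) -> float:
    """Crude incidence as a percentage of the total population."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * cases / total


# Counts quoted from the systematic review [121]
early_hat = incidence_percent(843, 21822)
print(f"Early HAT incidence: {early_hat:.1f}%")  # Early HAT incidence: 3.9%
```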

Risk factors for HAT include increased graft ischemia time, ABO incompatibility, CMV infection, acute rejection, and use of aortohepatic conduit anastomosis, although this can be overcome with experience [123]. Surgical causes for early HAT include retrieval injuries, technical failure, hepatic artery kinking, and small or multiple arteries requiring arterial reconstruction [118]. The type of arterial reconstruction impacts graft function likely secondary to kinking. Long-artery grafts are an independent risk factor for early HAT, and short-graft artery reconstruction is recommended [124].

A systematic review of 19 studies identified that when standard revascularization techniques were not feasible and arterial conduits were utilized, there was an independent increased risk for the development of HAT and increased risk of ischemic cholangiopathy and lower graft survival compared to LT without arterial conduits [125]. Schroering et al. performed a 10-year retrospective analysis of 1145 transplants and identified that nontraditional donor arterial anatomy did not result in any significant difference in HAT or 1-year graft survival [126]. Sixty-eight percent of livers had standard anatomy, 222 donor livers required back table reconstruction, and the most common reconstruction (161 cases) was of the accessory/replaced right hepatic artery to the gastroduodenal artery [126].

Routine early postoperative Doppler ultrasonography (US) for the evaluation of HAT has been previously proposed [127], and it is routinely used in many centers to screen for postoperative vascular complications. It is common to obtain a postoperative day 1 Doppler US to rule out an obvious HAT, as one can develop within a few hours of LT. Doppler US is also useful to establish baseline hepatic flows for future comparison. Protocols for postoperative US vary from center to center. Typical signs and symptoms of early HAT include fever, elevated WBC, elevated transaminases, and possible septic shock; however, patients are often asymptomatic [119]. Doppler US remains the first-line imaging modality to detect vascular complications as it is relatively quick, inexpensive, and noninvasive [128].

A noncomplicated hepatic arterial anastomosis on US should demonstrate arterial waveforms with swift upstrokes lasting <0.08 seconds, continuous anterograde diastolic flow, and a normal resistive index (RI) of 0.5–0.8 [123]. Transient increases of RI > 0.8 are common within 48–72 hours posttransplantation and are typically due to edema, vasospasm, or the new graft’s initial response to portal hyperperfusion [123]. Increased peak systolic velocities or absent diastolic flow can be seen on US within the first 72 hours and eventually return to normal; however, one must be suspicious of HAT when absent or reversed diastolic flow in combination with low or decreasing peak systolic velocity are present [123, 129]. Additionally, presence of low RI in the initial postoperative period is 100% sensitive for a vascular (arterial, portal, or hepatic) complication [119]. Marin-Gomez et al. identified that low intraoperative hepatic artery blood flow of 93.3 ml/min was an independent risk factor for early HAT compared to an intraoperative blood flow of 187.7 ml/min without HAT [130].
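For orientation, the resistive index is conventionally calculated from the Doppler waveform as (peak systolic velocity − end-diastolic velocity) / peak systolic velocity. The sketch below applies that standard formula together with the RI ranges quoted above; the function names are hypothetical, and the labels are a simplified reading of the text rather than a diagnostic rule.

```python
def resistive_index(peak_systolic_velocity: float, end_diastolic_velocity: float) -> float:
    """RI = (PSV - EDV) / PSV, with both velocities in the same units (e.g., cm/s)."""
    if peak_systolic_velocity <= 0:
        raise ValueError("peak systolic velocity must be positive")
    return (peak_systolic_velocity - end_diastolic_velocity) / peak_systolic_velocity


def interpret_ri(ri: float, hours_since_transplant: float) -> str:
    """Label an RI value using the ranges quoted in the text (normal 0.5-0.8)."""
    if ri < 0.5:
        return "low"
    if ri > 0.8:
        # Transient elevation is described as common within 48-72 hours of LT
        if hours_since_transplant <= 72:
            return "high (may be transient)"
        return "high"
    return "normal"


ri = resistive_index(peak_systolic_velocity=60.0, end_diastolic_velocity=18.0)
print(round(ri, 2), interpret_ri(ri, hours_since_transplant=24))  # 0.7 normal
```

With absent diastolic flow the end-diastolic velocity is zero and the RI equals 1.0; reversed diastolic flow gives a negative end-diastolic velocity and an RI above 1.0.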

The development of early HAT will require re-transplantation in approximately 50% of patients [131]. Re-transplantation has traditionally been the primary approach; however, surgical and endovascular revascularization are alternative options. Surgical revascularization has the benefit that the patient does not necessarily need to be re-listed if revascularization is successful. Especially in the current climate of limited organs, surgical revascularization with donor and recipient hepatic artery reconstruction is optimal. Scarinci et al. have reported that when revascularization is performed within the first week of LT, graft salvage approaches 81% [132]. However, successful surgical revascularization rates are variable across the literature [119]. When surgical revascularization fails, immediate re-listing and re-transplantation are required. In overtly symptomatic patients with significant hepatic infarction or biliary necrosis, re-transplantation becomes the default primary option. Patients are eligible for immediate re-listing if they are diagnosed with HAT within 7 days of LT, along with an AST ≥3000 and/or an INR ≥2.5 or arterial pH of ≤7.30, venous pH of ≤7.25, and/or lactate ≥4 mmol/L [133].

Endovascular treatments for HAT include intra-arterial thrombolysis and percutaneous transluminal angioplasty with or without stent placement and have been used with increased frequency with some authors reporting high success rates [119, 133]. Endovascular approaches remain somewhat controversial, lack high-quality evidence, and require ongoing further study [117]. Late HAT has a reported incidence rate of 1.7%, and patients typically present with fever, jaundice, and hepatic abscesses [122]. Late HAT with evidence of arterial collateralization should be managed conservatively [118]. However, many patients with late HAT develop ischemic cholangiopathy which requires subsequent re-transplantation [120].

Hepatic artery stenosis (HAS) is defined as narrowing of the hepatic artery by >50% on angiogram with an RI of <0.5 and peak systolic velocity > 400 cm/s [117]. There has been an increasing trend for the management of HAS via interventional procedures. A meta-analysis of case series for HAS was performed by Rostambeigi et al., which identified that percutaneous balloon angioplasty and stent placement have similar success rates (89% and 98%), complications (16% and 19%), arterial patency (76% versus 68%), re-intervention (22% versus 25%), and re-transplantation (20% versus 24%) [134].

Vascular outflow complications include hepatic vein and IVC thrombosis. Patient symptoms/signs include the need for ongoing diuretic therapy, persistent ascites, or abnormal liver function tests. Persistent ascites has been found to be the most common symptom resulting in investigation for hepatic venous outflow obstruction (HVOO) [135]. Untreated HVOO can lead to graft congestion, portal hypertension, and cirrhosis, which may ultimately compromise graft function and patient survival [136]. HVOO has been reported in about 1–3.5% of patients receiving full-sized grafts and is found with increased frequency in re-transplanted patients [135, 137]. Doppler US is the initial radiographic investigation of choice. The incidence of HVOO for orthotopic LT with partial grafts ranges from 5 to 13% and is 12.5% in LDLT [136]. Early (within 1 month) HVOO is thought to occur secondary to kinking at the donor hepatic vein and recipient suprahepatic IVC anastomosis, technical factors resulting in a narrow anastomosis, or large graft compression of the IVC [137]. Delayed HVOO is related to fibrosis and intimal hyperplasia.

In order to evaluate the incidence of HVOO, a retrospective review was performed of 777 consecutive liver transplants, including 695 cadaveric transplants with a mean MELD score of 14, of which 88% were performed with the piggyback technique [138]. Early hepatic vein outflow obstruction occurred in 1% (7/695) of cases, with all occurrences in the piggyback technique with 2 hepatic veins [138]. Two of seven cases were successfully managed medically with diuretics, while five of seven cases required operative cavoplasty [138]. In patients with high pressure gradients or hepatic vein stenosis at the anastomotic site, hepatic venoplasty alone has been used as the initial management strategy, followed by hepatic vein stenting if symptoms or an elevated pressure gradient persist [135]. Other centers have successfully performed venoplasty with stenting as a primary option rather than venoplasty alone [137, 139, 140]. Endovascular management for HVOO is preferred over surgical repair because of the increased morbidity and mortality associated with surgical repair [139]. In LDLT recipients diagnosed with early and late HVOO managed with stent placement, patency in the early HVOO group was 76, 46, and 46%, while late HVOO patency rates were 40, 20, and 20% at 1, 3, and 5 years, respectively [141].

Nonvascular complications are further subdivided into biliary complications, graft dysfunction/rejection, infection, drug toxicity, and increased future risk of malignancy. Biliary complications are the most common complications post-LT, and duct ischemia is closely related to hepatic arterial complications. The biliary system is supplied only by the hepatic arterial system, and arterial anastomotic complications may lead to secondary biliary complications. Common biliary complications include strictures, leaks, stones, bile debris, and ischemia. Bile leaks and strictures occur in 2–25% of cases and comprise the majority of postoperative complications [142]. In a large American data set of 12,803 liver transplants, the incidence of bile duct complications was significantly higher in donation after cardiac death (DCD) recipients (23%) compared to neurologic death donor (NDD) recipients (19%) [143]. Within the same database, DCD recipients required more frequent diagnostic/therapeutic procedures (18.8% vs 14.4%), surgical revision of the biliary anastomosis (4.1% vs 2.8%), and re-transplantation (9.1% vs 3.8%) when compared to NDD recipients [143]. A large meta-analysis also identified that biliary complications were significantly increased in DCD recipients compared to NDD recipients (26% versus 16%) [144]. The overall incidence of ischemic cholangiopathy was 16% in DCD recipients compared to 3% in NDD recipients [144].

Early bile leaks are defined as those occurring within 4 weeks of LT and usually occur at the site of the anastomosis. Patients may be asymptomatic or present with nonspecific symptoms such as fever and abdominal/shoulder pain and may develop peritonitis with or without superimposed infection. Elevated bilirubin is usually present along with elevations in GGT and ALP. Diagnosis can be made with ultrasound, CT scan, or magnetic resonance cholangiopancreatography (MRCP) [144]. Several management options are available. Endoscopic retrograde cholangiopancreatography (ERCP) with or without stent placement is typically utilized and has the advantage of being both diagnostic and therapeutic. Radiographically guided percutaneous drainage can be used in addition to ERCP to drain a biloma. If the bile duct was reconstructed in an end-to-end fashion, ERCP is technically feasible. When a hepaticojejunostomy has been performed, ERCP is more challenging, requires a skilled endoscopist, and is not always technically possible. If ERCP cannot be performed or is unable to reach the area of concern, percutaneous transhepatic cholangiography (PTC) and drainage are required. If ERCP is unable to adequately stent or reach a leak at the biliary anastomosis, PTC can additionally be performed to control the leak. If a large biliary anastomotic defect or biliary necrosis is present early in the postoperative period, surgical revision with a redo end-to-end anastomosis, choledochojejunostomy, or hepaticojejunostomy is required. The biliary defect may be too large, or the degree of biliary necrosis too significant, to preserve enough bile duct length for a redo end-to-end anastomosis.

Bile duct strictures mostly develop at the anastomotic site; however, non-anastomotic strictures may develop and are alternatively known as ischemic-type strictures [145]. Non-anastomotic strictures can be caused by microangiopathic factors (prolonged cold/warm ischemia, hemodynamic instability) or can be secondary to HAT [145]. Extraction of the native recipient liver results in loss of arterial collateral circulation, and the newly implanted donor liver has no arterial collateral circulation to supply the biliary system. Its blood supply is therefore dependent on the hepatic arterial anastomosis. It takes approximately 2 weeks for collaterals to start to form. When blood flow to the biliary system is reduced, ischemic strictures may develop anywhere along the bile duct. Ischemic bile duct strictures are typically longer than anastomotic biliary strictures, are present in multiple locations, and are usually found at the hepatic hilum; however, they may be present throughout the intrahepatic biliary system [142].

Periportal edema, residual ascites, or fluid in the perihepatic space is expected and usually resolves within a few weeks. Normal postoperative US findings consist of periportal edema, reperfusion edema, and fluid stasis [129]. Periportal edema seen on ultrasound can be mistaken for biliary dilatation and was initially thought to correlate with rejection; this has since been disproved [129]. The cumulative incidence of acute graft rejection increases with time: 18% of recipients experience acute rejection within the first 6 months, rising to 33% by 24 months posttransplant [146].

Scoring Systems for Transplantation

The survival outcomes following liver transplant (SOFT) score is based on 4 donor, 13 recipient, and 1 operative factor [34]. It was designed to improve organ allocation by avoiding transplantation of organs into patients whose predicted survival is below accepted levels. The SOFT score has two components: a pre-allocation score to predict survival outcomes following LT (P-SOFT) and the SOFT score itself, which is used to predict survival posttransplantation [34]. The SOFT score has additional variables with allotted points that are added to or subtracted from the P-SOFT score. The physician can use the SOFT score as an adjunct in deciding whether to accept a liver, by comparing the estimated 3-month postoperative mortality rate with the MELD-estimated 3-month wait-list mortality rate. According to its authors, the SOFT score is the most accurate predictor of 3-month recipient survival, as judged by area under the curve analysis, and is also accurate for predicting 1-, 3-, and 5-year post-LT survival [34]. Furthermore, the SOFT score can be used to improve donor-recipient matching [34].
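The comparison described above, predicted 3-month mortality after transplant against predicted 3-month mortality on the wait-list, can be written as a very small decision helper. This is a minimal sketch under stated assumptions: the function name and its inputs are hypothetical, the two probabilities must be obtained separately from the published SOFT and MELD tables (they are not reproduced here), and the result is only an adjunct to clinical judgment, as the text notes.

```python
def transplant_favours_survival(post_lt_mortality_3mo, waitlist_mortality_3mo):
    """Return True when the predicted 3-month mortality after transplant
    is lower than the predicted 3-month mortality on the wait-list.

    Both arguments are probabilities between 0 and 1, supplied by the caller
    (hypothetical inputs; this sketch does not derive them from any score).
    """
    for p in (post_lt_mortality_3mo, waitlist_mortality_3mo):
        if not 0.0 <= p <= 1.0:
            raise ValueError("mortality must be a probability between 0 and 1")
    return post_lt_mortality_3mo < waitlist_mortality_3mo


# Illustrative values only, not taken from the SOFT or MELD publications.
print(transplant_favours_survival(0.10, 0.40))
print(transplant_favours_survival(0.30, 0.05))
```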

The balance of risk (BAR) score was developed with a goal similar to that of the other prognostic scoring systems: to assess post-LT recipient survival. Dutkowski et al. [147] wanted to develop a score based on donor, graft, and recipient factors that were readily available pretransplant and that would correlate well with 3-month posttransplant survival. Using the UNOS database, they showed that the areas under the receiver operating characteristic curve for predicting 3-month patient survival were 0.5, 0.6, 0.6, 0.7, and 0.7 for DRI, MELD, D-MELD, SOFT, and BAR, respectively [147]. The BAR score discriminated between survival and mortality at a score of 18. A cited advantage of the BAR score is that its variables are collected in a standard manner internationally and that, with fewer variables than other scoring systems, it lends itself to quick and readily accessible calculation [147].
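For readers less familiar with the metric used to compare these scores, the area under the receiver operating characteristic curve can be read as the probability that a randomly chosen patient who died has a higher score than a randomly chosen survivor, so 0.5 corresponds to chance and 1.0 to perfect discrimination. The sketch below computes it by direct pairwise comparison. The function name `auc` and the six toy scores are invented for illustration and are not patient data or values from [147].

```python
def auc(scores, died):
    """Area under the ROC curve by pairwise comparison.

    Counts, over all (death, survivor) pairs, how often the patient who died
    had the higher score; ties count as half.
    """
    pos = [s for s, d in zip(scores, died) if d]
    neg = [s for s, d in zip(scores, died) if not d]
    if not pos or not neg:
        raise ValueError("need at least one death and one survivor")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


# Made-up risk scores for six recipients and their 3-month outcome.
toy_scores = [4, 9, 12, 15, 20, 22]
toy_died = [False, False, True, False, True, True]
print(round(auc(toy_scores, toy_died), 2))
```

In the toy example, eight of the nine death-survivor pairs are ranked correctly, which is considerably better discrimination than the values of 0.5 to 0.7 quoted above.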

The UCLA group wanted to identify predictors of futility and long-term survival in adult recipients with ESLD and MELD scores >40 undergoing primary cadaveric orthotopic LT [20]. They created a posttransplant futility risk score based entirely on independently verified recipient factors that predicted futility. The variables were MELD score, pretransplant septic shock, cardiac risk, and age-adjusted Charlson comorbidity index [20]. Calibrated coefficients were assigned to each of the included recipient variables. A review of currently available scoring systems, with the associated variables and pertinent points regarding each scoring system, is given in Table 14.3.

Donor-Recipient Matching for a Sick Patient

Briceno et al. [151] summarize the historical and current realities of donor-recipient matching under different organ allocation systems, whether patient-based, donor-based, or combined donor-recipient-based policies. The higher the MELD score, the lower the mortality risk for deceased donor transplant recipients compared to wait-list candidates, because death was more likely to occur while awaiting LT than within 1 year after transplantation [11]. Conversely, deceased donor transplant recipients with MELD scores <15 had a higher risk of mortality at 1 year post-LT than candidates with MELD <15 who remained on the wait-list. The quality of the donor organ was not accounted for in this analysis. When patients with high MELD scores receive either high-risk or optimal donor organs, there is a survival benefit regardless of the DRI [10]; however, in patients with low MELD scores who receive high DRI organs, there is an overall decrease in posttransplant survival [26].

As transplant wait-lists continue to grow, patients accumulate higher MELD scores, and organ supply remains limited, the use of extended donor criteria has increased, and the importance of optimal donor-recipient matching has heightened. Recent data have revealed that 20-year survival for post-LT recipients is significantly and independently influenced by the DRI (≤1.4 vs >1.4) and by donor age (<30 vs ≥30) [152]. It has been suggested that the ideal liver transplant recipient is a young woman with acute liver failure or cholestatic liver disease/autoimmune hepatitis who has a BMI <25, normal kidney function, and no dyslipidemia, while the optimal donor is <30 years old with an ET-DRI of <1.2 [152]. This optimal match is a rarity in today’s clinical practice, and identifying donors that provide the best match under a sickest-first or high MELD priority allocation system is paramount. An ideal match between donor and recipient would optimize both recipient survival and graft survival, while accounting for the probability of death on the wait-list, posttransplant survival, overall cost-effectiveness, and global survival benefit [151]. The question that arises is whether a liver should be accepted for a particular patient, bearing in mind not just the immediate survival benefit for that recipient but also the factors previously mentioned.

Previously, it was believed that high DRI organs should not be transplanted into patients with high MELD scores [27]. Further study revealed that in patients with high MELD scores, donor organ quality as measured by the DRI did not affect graft or patient outcomes, while in patients with low to intermediate MELD scores, the DRI did impact graft and recipient survival [28]. Rana et al. [34] provide recommendations for donor-recipient matching according to recipient MELD score and donor quality as per the SOFT score, displayed in Table 14.4.

Rauchfuss et al. [153] reviewed 45 patients who underwent LT with a MELD score of ≥36; their goal was to assess whether DRI was associated with 1-year recipient survival post-LT. On univariate analysis, the median waiting time (2 days versus 4 days) was the only significant factor that differentiated survivors from non-survivors. Overall survival in their study was 69.8% at 1 year. The DRI (median 1.72 in survivors vs 1.89 in non-survivors), mechanical ventilation status, use of vasopressors, renal replacement therapy prior to LT, and presence of the lethal triad (coagulopathy, hypothermia, acidosis) did not significantly differentiate between survivors and non-survivors [153]. The overall DRI was quite high; however, there was no significant difference between survivors and non-survivors in the use of extended criteria donors. Extended donor criteria were defined as donor age >65, donor BMI >30, ICU stay >7 days, histologically proven graft steatosis >40%, donor sodium >165 mmol/L, a more than threefold increase in AST, ALT, or bilirubin, donor malignancy history, positive hepatitis serology, drug abuse, sepsis, or meningitis [153].
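The extended donor criteria listed above amount to a checklist in which any single positive item classifies the donor as extended criteria. A minimal sketch of that checklist follows. The class name, field names, and function name are hypothetical, and expressing AST, ALT, and bilirubin as multiples of the upper limit of normal is an assumption made here, since the text does not state the reference against which the threefold increase is measured.

```python
from dataclasses import dataclass


@dataclass
class Donor:
    age_years: float
    bmi: float
    icu_stay_days: float
    steatosis_pct: float           # histologically proven graft steatosis
    sodium_mmol_l: float
    ast_x_uln: float = 1.0         # assumption: multiple of upper limit of normal
    alt_x_uln: float = 1.0         # assumption: multiple of upper limit of normal
    bilirubin_x_uln: float = 1.0   # assumption: multiple of upper limit of normal
    malignancy_history: bool = False
    positive_hepatitis_serology: bool = False
    drug_abuse: bool = False
    sepsis: bool = False
    meningitis: bool = False


def extended_criteria_met(d):
    """Return the names of the extended donor criteria that this donor meets."""
    checks = {
        "donor age > 65": d.age_years > 65,
        "donor BMI > 30": d.bmi > 30,
        "ICU stay > 7 days": d.icu_stay_days > 7,
        "graft steatosis > 40%": d.steatosis_pct > 40,
        "donor sodium > 165 mmol/L": d.sodium_mmol_l > 165,
        "AST, ALT, or bilirubin > 3x": max(
            d.ast_x_uln, d.alt_x_uln, d.bilirubin_x_uln
        ) > 3,
        "malignancy history": d.malignancy_history,
        "positive hepatitis serology": d.positive_hepatitis_serology,
        "drug abuse": d.drug_abuse,
        "sepsis": d.sepsis,
        "meningitis": d.meningitis,
    }
    return [name for name, met in checks.items() if met]


# Illustrative donor, not a real case.
example = Donor(age_years=70, bmi=24, icu_stay_days=3,
                steatosis_pct=10, sodium_mmol_l=140)
print(extended_criteria_met(example))
```

Returning the list of criteria met, rather than a single yes/no flag, keeps visible which item triggered the classification.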

Liver Transplantation in Patients with Portal Vein Thrombosis

Portal vein thrombosis (PVT) is usually diagnosed incidentally in patients with underlying cirrhosis and may affect those with compensated or decompensated cirrhosis. PVT most commonly occurs in patients with cirrhosis with a prevalence of 1–16% [154]. PVT can also occur in patients with hepatocellular carcinoma (HCC) and other hepatobiliary malignancies. Different series report a 2.1–26% incidence of PVT in ESLD patients awaiting LT [155]. More recent data has reported that HCC and cirrhosis carry a 23–28% risk of PVT [156].

Cirrhosis is the clinical manifestation of derangements in the hepatic architecture secondary to fibrosis, leading to increased portal resistance, decreased velocity of blood flow, and subsequent development of collateral venous circulation. Reduced flow and increased pressure within vessels create stasis and the potential for clot formation. PVT in a patient with underlying cirrhosis may contribute to a further increase in venous pressures, leading to worsening portal hypertension and decreased synthetic liver function [157]. PVT in cirrhotic patients is associated with factor V Leiden and prothrombin gene mutations. The prothrombin 20210 gene mutation has been shown to be an independent risk factor for the development of PVT [158].

Regardless of the underlying etiology, patients may present with acute, subacute, or chronic PVT, which may result in partial or complete portal vein occlusion. PVT is further subdivided into benign versus malignant and intrahepatic versus extrahepatic thrombosis [159]. Extrahepatic PVT is far more common than intrahepatic PVT. For brevity, PVT in the following discussion refers to extrahepatic PVT unless stated otherwise. It is important to distinguish between acute and chronic PVT and between partial and occlusive thrombus, as management strategies, morbidity, and mortality vary accordingly.

Chronic PVT is usually asymptomatic and is found incidentally on imaging performed for other indications or during screening of cirrhotic patients awaiting LT. Chronic PVT in the setting of cirrhosis may eventually accelerate the sequelae of portal hypertension, manifested by ascites, variceal bleeding, ectopic varices, anemia, thrombocytopenia, or splenomegaly [160]. In symptomatic PVT, gastrointestinal hemorrhage may be the first sign of underlying portal hypertension. Historically, there was an increased risk of death related to bleeding complications secondary to portal hypertension; however, improvements in prophylactic management of esophageal varices have reduced patient morbidity and mortality [161]. Malignant venous thrombus is diagnosed by enhancement of the thrombus with direct contiguous extension of the tumor into the portal vein and disruption of the vessel continuity on CT, by arterial pulsatile flow on Doppler US, or by increased uptake on PET scan [159]. Patients with malignant PVT are not candidates for LT, and therefore malignant PVT must be distinguished from nonmalignant PVT during the transplant evaluation.

Treatment of cirrhosis-associated PVT with therapeutic LMWH has been shown to be safe and successful, with complete or partial recanalization in 60% of patients [162]. Patients need to be continued on LMWH despite imaging-documented recanalization. Patients who demonstrate complete recanalization and stop anticoagulation early have up to a 38% risk of re-thrombosis [163]. In cirrhotic patients, lifelong anticoagulation may be required to maintain a patent portal vein after recanalization. A small randomized controlled trial of 70 outpatients with advanced cirrhosis assigned patients to 12 months of enoxaparin (dosed at 4000 IU/day) or no treatment. Patients who received enoxaparin had a significantly lower rate of PVT development (8.8% versus 27.7%) at 12-month follow-up [164]. An interesting finding of the study was that patients who received enoxaparin had a delayed occurrence of decompensated cirrhosis and improved survival compared to controls.
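The effect size in the enoxaparin trial can be restated as an absolute risk reduction, a relative risk reduction, and a number needed to treat, using only the two PVT rates quoted above [164]. The sketch below performs that arithmetic. The function name is illustrative, and the derived figures are crude calculations from the reported percentages rather than results stated by the trial authors.

```python
def risk_summary(control_pct, treated_pct):
    """Return (absolute risk reduction in percentage points,
    relative risk reduction as a fraction, number needed to treat)."""
    arr = control_pct - treated_pct
    if arr <= 0:
        raise ValueError("no risk reduction: number needed to treat is undefined")
    rrr = arr / control_pct
    nnt = 100.0 / arr
    return round(arr, 1), round(rrr, 2), round(nnt, 1)


# PVT at 12 months: 27.7% without treatment vs 8.8% with enoxaparin [164].
print(risk_summary(27.7, 8.8))
```

On these figures, the absolute risk reduction is about 19 percentage points, which corresponds to treating roughly five to six patients for 12 months to prevent one case of PVT.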