Abstract

As there are as many clients as many usages of an Image Processing library, each one may expect different services from it. Some clients may look for efficient and production-quality algorithms, some may look for a large tool set, while others may look for extensibility and genericity to inter-operate with their own code base...but in most cases, they want a simple-to-use and stable product. For a C++ Image Processing library designer, it is difficult to conciliate genericity, efficiency and simplicity at the same time. Modern C++ (post 2011) brings new features for library developers that will help designing a software solution combining those three points. In this paper, we develop a method using these facilities to abstract the library components and augment the genericity of the algorithms. Furthermore, this method is not specific to image processing; it can be applied to any C++ scientific library.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

As many other numerical fields of computer science, Computer Vision and Image Processing (IP) have to face the constantly varying form of the input data. The data are becoming bigger and comes from a wider range of input devices: the current issue is generally not about acquiring data, but rather about handling and processing it (in a short time if possible...). In image processing, the two-dimensional RGB model has become too restrictive to handle the whole variety of kinds of image that comes with the variety of images fields. A non-exhaustive list of them includes: remote sensing (satellites may produce hyperspectral images with some thousands of bands), medical imaging, (scans may provide 3D and 3D+t volumes with several modalities), virtual reality (RGB-D cameras used for motion capture provide 2D/3D images with an extra 16-bits depth channel), computational photography (some high-dynamic-range sensors produce 32-bits images to preserve details in all ranges of the luminance)...

These examples already highlight the need for versatility, but some more domain-oriented applications attempt to broaden further the definition of images. For example, in digital geometry, one would define images over non-regular domains as graphs, meshes or hexagonal grids. The increase of image type should not require to write several implementation of the algorithm. A single version should be able to work on several image types. The Fig. 1 illustrates this idea with the same watershed implementation applied on an image 2D, a graph as well as a mesh.

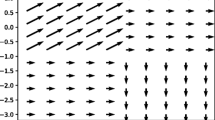

Tools able to handle many data representations are said to be generic. In the particular case of a library providing a set of routines, genericity means that the routines can be applied to a variety of inputs (as opposed to specific routines that support inputs of unique predefined type). As an example, consider the morphological dilation that takes two inputs: an image and a flat structuring element (SE). Then, the set of some possible inputs is depicted in Fig. 2. Note that in this example, the image is already a type product between the underlying structure kind and the value kind. Let s be the number of structures, v the number of types of values, and k the number of structuring elements. With no generalization, one would have to write \(s \times v \times k\) dilation routines.

Many IP libraries have emerged, developed in many programming languages. They all faced this problem and tried to bring solutions, some of which are reviewed in Sect. 2. Among these solutions, we see that generic programming is good starting point [15] to design a generic library but still has many problem. In particular, we focus on the case of Milena [16, 22], a generic pre-modern C++ IP libray and its shortcomings that led to the design of Pylena [5]. The work presented in this paper contrasts with the previous works on obtaining genericity for mathematical morphology operators [8, 21] and digital topology operators [23].

In Sect. 3, we present this new generic design, that emerged with the evolution of the Modern C++ and allowed solving some Milena’s shortcomings. Not only does this new design re-conciliate simplicity and performance, but it also promotes extensibility as it enables easily creating custom image types as those shown in Sect. 3.5.

2 Bringing Genericity in IP Libraries: Solutions and Problems

Generic programming aims at providing more flexibility to programs. It is itself a very generic term that means different things to different people. It may refer to parametric polymorphism (which is the common sense in C++), but it may also refer to data abstraction and reflection /meta-programming [17]. The accordance on a strict definition of generic programming is not our objective, but we can observe a manifestation of the generic programming: a parametrization of the routines to augment flexibility.

To tackle the problem of a generic dilation from the introduction, several programming techniques have been reviewed by Levillain et al. [24], Géraud [13]. We discuss these techniques w.r.t. some criteria: usability (simplicity from the end-user), maintainability (simplicity from the library developer stand-point), run-time availability (running routines on images whose kind is unknown until run-time), efficiency (speed and binary size tradeoff).

Ad-Hoc Polymorphism and Exhaustivity. The straightforward brute-force solution is the one that enumerates every combination of parameters. This means one implementation for each couple (‘image ‘kind, ‘structuring element ‘kind) and involves much code duplication. Both run-time or compile-time selection of the implementation are possible depending on the parametrization. If the kind of parameters are known at compile time (through their type), routine overload selection is automatically done by the compiler. On the contrary, if the kind of parameters are known at run-time (or if the language does not support overloading, the user has to select the right implementation by hand (a manual dispatch) as illustrated below:

Such a strategy is simple and efficient as the best implementation is written for each case. However it cannot scale, as any addition of a new kind (either a SE, a structure or a value type) would require duplicating many routines and lead to maintenance issues that is why no IP library has chosen such a strategy.

Generalization. A second approach is to generalize to the greatest common type (a type to rule them all) instead of augmenting their number. For example, one can consider that all value types are double since uint8, int16, ...can roughly be represented by double as in MegaWave [10]. Even, if a library supports different value kinds for images, it is also common to use an adapter that performs a value conversion before calling a specific routine with the most general type. OpenCV [4] uses such an approach where one has to adapt his value types from one routine to another, which makes it painful to extend due to the wide variety of types and algorithms that this library has to offer. Dynamic data analysis framework as Matlab, Octave, Numpy/SciPy have many routines implemented for a single value type and convert their input if it does not fit the required type. The major drawback to this approach is a performance penalty due to conversions and processing on larger type.

Structures can also be generalized to a certain extent. In the image processing library CImg, all images are 3-dimensional with an arbitrary number of channels. It leads to an interface where users can write ima(x,y,z,channel) even if the image has a single dimension. There are three drawbacks to this approach: the number of dimensions is bounded (cannot handle 3D+t for example), the interface allows the image to be used incorrectly (weak type-safety), every algorithm has to be written following a 4D pattern even if the image is only 2D. Moreover, generalization of structures is not trivial when they are really different (e.g. finding the common type between a 3D-buffer encoded image and an image over a graph).

Inclusion & Parametric Polymorphism. A common conception of generic programming relates the definitions of abstractions and template methods.

A first programming paradigm that enables such a distinction is object oriented programming (OOP). In this paradigm, template methods, as defined by [11], are general routines agnostic to the implementation details and specific properties of a given type. A template method defines the skeleton of an algorithm with customization points (calls can be redefined to our own handlers) or we can plug our own types. Hence, template methods are polymorphic. They rely on the abality to abstract the behavior of objects we handle. The abstraction thus declares an interface: a set of services (generally abstract methods) which are common to all the kinds. The concrete types have then to define abstract methods with implementation details.

On the other hand, generic programming, in the sense of [25] provides another way of creating abstraction and template methods. In this paradigm, the abstraction is replaced by a concept that defines the set of operations a type must provide. OOP template methods are commonly refered as template functions and implement the algorithm in terms of the concepts.

While similar in terms of idea, the two paradigms should not be confused. On one hand, OOP relies on the inclusion polymorphism. A single routine (implementation) exists and supports any sub-type that inherits from the abstract type (which is the way of defining an interface in C++). Also, the kind of entities is not known until run-time, types are dynamic and so is the selection of the right method. This has to be compared to generic programming that relies on the parametric polymorphism, which is static. The kinds of entities have to be known at compile time and a version of the template function is created for each input types. In Fig. 3, we illustrate these differences through the implementation of the simple routine copy (dilate would require a more advanced abstraction of an image that will be detailed in Sect. 3). Basically, copy just has to traverse an input image and stores the values in an output image. The way of abstracting the traversal is done with iterators.

Run-time polymorphism offers a greater flexibility in dynamic environment, where the types of the image to handle are not known until the execution of the program. For example, scipy.ndimage, a python image processsing library for interactive environments, uses a C-stylished version of the iterator abstraction [28] and value abstraction given above (C-style stands for an hand-made switch dispatch instead of virtual methods). GEGL [1], used in GIMP, is also written in C ans uses C-style run-time polymorphism to achieve abstraction over colors and data buffers (e.g. to model graphs).

Nevertheless, this flexibility comes at the cost of degraded performances due to the dynamic dispatches. On the other hand, static polymorphism provides better performances because concrete types are known at compile time and there is no need to resolve methods at run-time. As there is never no free lunch, performance comes at the cost of less run-time flexibility. Moreover, since parametric polymorphism is implemented through templates in C++, many instanciations of the same code occur for different input types and may lead to code bloat and large executables.

Parametric Polymorphism in C++ Image Processing Libraries. Parametric polymorphism is common in many C++ IP libraries to a certain extent. Many of them, (e.g. CImg [34], Video++ [12], ITK [19]) provide value type genericity (e.g. \(\texttt {image2d<T>}\)) while a few provide a full structural genericity (DGTal [7], GrAL [2], Milena [24], VIGRA [20], Boost.GIL [3]). To reach such a level a genericity, these libraries have been written in a complex C++ which remains visible from the user standpoint. Erich Gamma [11] notice that dynamic, highly parameterized software is harder to understand than more static software. In particular, errors in highly templated code is hard to read and to debug because they show up very deep in the compiler error trace.

Also, they have not been written with the modern user-friendly features that the new C++ offers. Worst, in the case of Milena, some design choices made in pre-C++ 11, makes the library not compatible with the current standard and prevents usage of these new features.

Additionally, there exists other, non-library approach, such as designing a whole new DSL (Domain Specific Language) to reach a specific goal. For instance, Halide [29] chose this approach to fully focus on the underlying generated code to optimize it for vectorization, parallelism and data locality. Unfortunately this implies trade-offs on genericity and interoperability as we are not dealing with native C++ anymore.

3 C++ Generic Programming and Concepts

C++ is a multi-paradigm language that enables the developer to write code that can be object oriented, procedural, functional and generic. However, there were limitations that were mostly due to the backward compatibility constraint as well as the zero-cost abstraction principle. In particular the generic programming paradigm is provided by the template metaprogramming machinery which can be rather obscure and error-prone. Furthermore, when the code is incorrect, due to the nature of templates (and the way they are specified) it is extremely difficult for a compiler to provide a clear and useful error message. To solve this issue, a new facility named concepts was brought to the language. It enables the developer to constraint types: we say that the type models the concept(s). For instance, to compare two images, a function compare would restrict its input image types to the ones whose value type provides the comparison operator ==.

In spite of the history behind the concept checking facilities being very turbulent [30, 32, 33], it will finally appear in the next standard [35] (C++20).

3.1 From Algorithms to Concepts

The C++ Standard Template Library (STL) is a collection of algorithms and data structures that allow the developer to code with generic facilities. For instance, there is a standard way to reduce a collection of elements: std:: accumulate that is agnostic to the underlying collection type. The collection just needs to provide a facility so that it can work. This facility is called iterator. All STL algorithms behave this way: the type is a template parameter so it can be anything. What is important is how this type behaves. Some collection requires you to define a hash functions (std::map), some requires you to set an order on your elements (std::set) etc. This emphasis the power of genericity. The most important point to remember here (and explained very early in 1988 [25]) is the answer to: “What is a generic algorithm?”. The answer is: “An algorithm is generic when it is expressed in the most abstract way possible”.

Later, in his book [31], Stepanov explained the design decision behind those algorithms as well as an important notion born in the early 2000s: the concepts. The most important point about concepts is that it constraints the behavior. Henceforth: “It is not the types that define the concepts: it is the algorithms”.

The Image Processing and Computer Vision fields are facing this issue because there are a lot of algorithms, a lot of different kind of images and a lot of different kind of requirements/properties for those algorithms to work. In fact, when analyzing the algorithms, you can always extract those requirements in the form of one or several concepts.

3.2 Rewriting an Algorithm to Extract a Concept

Gamma Correction. Let us take the gamma correction algorithm as an example. The naive way to write this algorithm can be:

This algorithm here does the job but it also makse a lot of hypothesis. Firstly, we suppose that we can write in the image via the = operator (l.9–11): it may not be true if the image is sourced from a generator function. Secondly, we suppose that we have a 2D image via the double loop (l.6–7). Finally, we suppose we are operating on 8bits range (0–255) RGB via ’.r’, ’.g’, ’.b’ (l.9–11). Those hypothesis are unjustified. Intrinsically, all we want to say is “For each value of ima , apply a gamma correction on it.”. Let us proceed to make this algorithm the most generic possible by lifting those unjustified constraints one by one.

Lifting RGB constraint: First, we get rid of the 8bits color range (0–255) RGB format requirement. The loops become:

By lifting this constraint, we now require the type Image to define a nested type Image::value_type (returned by ima(x, y)) on which std::numeric_limits and std::pow are defined. This way the compiler will be able to check the types at compile-time and emit warning and/or errors in case it detects incompatibilities. We are also able to detect it beforehand using a static_assert for instance.

Lifting bi-dimensional constraint: Here we need to introduce a new abstraction layer, the pixel. A pixel is a couple (point, value). The double loop then becomes:

This led to us requiring that the type Image requires to provide a method Image::pixels() that returns something we can iterate on with a range-for loop: this something is a Range of Pixel. This Range is required to behave like an iterable: it is an abstraction that provides a way to browse all the elements one by one. The Pixel is required to provide a method Pixel::value() that returns a Value which is Regular (see Sect. 3.3). Here, we use auto&& instead of auto& to allow the existence of proxy iterator (think of \(\texttt {vector<bool>}\)). Indeed, we may be iterating over a lazy-computed view Sect. 3.5.

Lifting writability constraint: Finally, the most subtle one is the requirement about the writability of the image. This requirement can be expressed directly via the new C++20 syntax for concepts. All we need to do is changing the template declaration by:

In practice the C++ keyword const is not enough to express the constness or the mutability of an image. Indeed, we can have an image whose pixel values are returned by computing \(cos(x+y)\) (for a 2D point). Such an image type can be instantiated as non-const in C++ but the values will not be mutable: this type will not model the WritableImage concept.

When re-writing a lot of algorithms this way: lifting constraints by requiring behavior instead, we are able to deduce what our concepts needs to be. The real question for a concept is: “what behavior should be required?”

Dilation Algorithm. To show the versatility of this approach, we will now attempt to deduces the requirements necessary to write a classical dilate algorithm. First let us start with a naive implementation:

Here we are falling into the same pitfall as for the gamma correction example: there are a lot of unjustified hypothesis. We suppose that we have a 2D image (l.7–8), that we can write in the output_image (l.10, 13). We also require that the input image does not handle borders, (cf. loop index arithmetic l.7-8, 11-12). Additionally, the structuring element is restricted to a \(5 \times 5\) window (l.11-12) whereas we may need to dilate via, for instance, a \(11 \times 15\) window, or a sphere. Finally, the algorithm does not exploit any potential properties such as the decomposability (l.11-12) to improve its efficiency. Those hypothesis are, once again, unjustified. Intrinsically, all we want to say is “For each value of input_ima, take the maximum of the \(X \times X\) window around and then write it in output_ima”.

To lift those constraints, we need a way to know which kind of structuring element matches a specific algorithm. Thus, we will pass it as a parameter. Additionally, we are going to lift the first two constraints the same way we did for gamma correction:

We now do not require anything except that the structuring element returns the neighbors of a pixel. The returned value must be an iterable. In addition, this code uses the zip utility which allows us to iterate over two ranges at the same time. Finally, this way of writing the algorithm allows us to delegate the issue about the border handling to the neighborhood machinery. Henceforth, we will not address this specific point deeper in this paper.

3.3 Concept Definition

The more algorithms we analyze to extract their requirements, the clearer the concepts become. They are slowly appearing. Let us now attempt to formalize them. The formalization of the concept Image from the information and requirements we have now is shown in Table 1 for the required type definitions and valid expressions.

The concept Image does not provide a facility to write inside it. To do so, we have refined a second concept named WritableImage that provides the necessary facilities to write inside it. We say “WritableImage refines Image”.

The sub-concept ForwardRange can be seen as a requirement on the underlying type. We need to be able to browse all the pixels in a forward way. Its concept will not be detailed here as it is very similar to concept of the same name [26, 27] (soon in the STL). Also, in practice, the concepts described here are incomplete. We would need to analyze several other algorithms to deduce all the requirements so that our concepts are the most complete possible. One thing important to note here is that to define a simple Image concept, there are already a large amount of prerequisites: Regular, Pixel and ForwardRange. Those concepts are basic but are also tightly linked to the concept in the STL [6]. We refer to the STL concepts as fundamental concepts. Fundamentals concepts are the basic building blocks on which we work to build our own concepts. We show the C++20 code implementing those concepts in the code below.

3.4 Specialization Vs. Properties

Another advantage of concepts are that they allow a best match machinery over requirement(s) met by a type. We call this mechanic the property specialization. It allows to select the correct overload (best match machinery) when the given template parameter satisfies the requirement(s) expressed via the concept(s). Historically we used the template specialization mechanism to achieve the same thing (via inheritance of specialized types and other tricks) but it came with lot of disadvantages. Those are the cost of the abstraction and indirection, the difficulty to extend as well as to inject new type or behavior for a new user, being tied to a type and finally, each new type needs its own new implementation. Switching to a property-based approach with an automatic best match machinery is much more efficient and user-friendly.

This machinery could be emulated pre-C++20 via cryptic template metaprogramming tricks (i.e. type-traits, SFINAE and enable_if). However, C++20 brings a way to remove the need of these need, making it widely accessible. The code in Fig. 4 shows this difference in action.

The result about which code is clearer, easier to read and less error-prone should be obvious. The first advantage is that the compiler do the type-checking pass early when instantiating the template instead of waiting until the overload resolution pass (the improper functions candidate are removed from overload resolution thanks to SFINAE). This directly enhances the error messages emitted by the compiler. Instead of having a long error trace one needs to scroll down to find the error within, the compiler will now emits the error first at the top with the incorrect behavior requirement that does not match the concept for a given instantiated type.

Also, in the C++17 code, with heavy metaprograming trick, informations about function prototypes such as return type, parametric types and input types are fuzzy and not very clear. It needs carefully designed and written user documentation to be usable by a tier. Furthermore, this documentation is often difficult to generate and documentation generators do not help because they have a very limited understanding of templated code. However, we can see in the C++20 code that, with concepts, we just have two different overloads with a single piece of information changing: the \(2^{nd}\) input parameter. The information is clear and can be easily understood by documentation generators.

In addition, as concepts are working with a best-match machinery, we can notice that it is not the case with the SFINAE tricks version. Each time you add a new variant, every possibilities, incompatibilities and ambiguities between all the overloads have to be manually lifted. Not doing so will lead to multiple overloads ambiguities (or none selected at all). Also, the compiler will issue a non-friendly error message difficult to address.

In Image Processing we are able to make use of this machinery, in particular with a property on structuring elements: the decomposability. For reminder a multi-dimensional structuring element is decomposable if it can be rewritten as many simpler structuring elements.

Indeed when the structuring element window is tiny, it makes little sense to exploit this property for efficiency. If instead of browsing the image once while selecting 4 neighbors for each pixel, then we browse the image twice while selecting 2 neighbors for each pixel, the difference is not relevant. However, the more the structuring element window grows, the more neighboring pixels are selected for each pixel. With a multi-dimensional structuring element the growth is quadratic whereas it is linear if the structuring element is decomposed.

Henceforth, bringing the property best-match machinery with concepts as well as this decomposable property lead us to this dilate algorithm version:

It is much more efficient as it reduces the complexity dramatically when the structuring element has a large selection window.

3.5 Practical Genericity for Efficiency: The Views

Let us introduce another key point enabled by genericity and concepts: the Views. A View is defined by a non-owning lightweight image, inspired by the design introduced in Ranges for the Standard Library [9] proposal for non-owning collections. A similar design is also called Morphers in Milena [13, 21]. Views feature the following properties: cheap to copy, non-owner (does not own any data buffer), lazy evaluation (accessing the value of a pixel may require computations) and composition. When chained, the compiler builds a tree of expressions (or expression template as used in many scientific computing libraries such as Eigen [18]), thus it knows at compile-time the type of the composition and ensures a 0-overhead at evaluation.

There are four fundamental kind of views, inspired by functional programming paradigm: transform(input, f) applies the transformation f on each pixel of the image input, filter(input, pred) keeps the pixels of input that satisfy the predicate pred, subimage(input, domain) keeps the pixels of input that are in the domain domain, zip(\(input_1\), \(input_2\), ..., \(input_n\)) allows to pack several pixel of several image to iterate on them all at the same time.

Lazy-evaluation combined with the view chaining allows the user to write clear and very efficient code whose evaluation is delayed till very last moment as shown in the code below (see [14] for additional examples). Neither memory allocation nor computation are performed; the image i has just recorded all the operations required to compute its values.

4 Conclusion and Perspectives

Through a simple example, we have shown a step-by-step methodology to make an algorithm generic with zero overheadFootnote 1. To reach such a level of genericity and be able to write versatile algorithms, we had to abstract and define the most simple and fundamental elements of the libray (e.g. image, pixel, structuring element). We have shown that some tools of the Modern C++, such as concepts, greatly facilitate the definition and the usage of such abstractions. These tools enable the library designer to focus on the abstraction of the library components and on the user-visible development. The complex template meta-programming layer that used to be a large part of C++ generic programming is no more inevitable. In this context, it is worth pointing out the approach is not limited Image Processing libraries but works for any library that wants to be modernized to augment its productivity.

As one may have noticed, the solution presented in this paper is mostly dedicated to C++ developer and C++ end-user. Unlike dynamic environments (such as Python), C++ is not the most appropriate language when one has to prototype or experiment an IP solution. As a future work, we will study the conciliation of the static genericity from C++ (where types have to be known at compile time) with a dynamic language (with a run-time polymorphism) to allows the interactive usage of a C++ generic library.

Notes

- 1.

The zero-cost abstraction of our approach is not argued here but will be discussed in an incoming paper with a comparison with the state of the art libraries.

References

Generic Graphic Library

Berti, G.: GrAL-the grid algorithms library. Future Gener. Comput. Syst. 22(1–2), 110–122 (2006)

Bourdev, L., Jin, H.: Boost generic image library. In: Adobe Stlab (2006). Available at https://stlab.adobe.com/gil/index.html

Bradski, G.: The OpenCV Library. Dr. Dobb’s J. Softw. Tools 120, 122–125 (2000)

Carlinet, E., et al.: Pylena: a modern C++ image processing generic library. EPITA Research and Developement Laboratory (2018). Available at https://gitlab.lrde.epita.fr/olena/pylene

Carter, C., Niebler, E.: Standard library concepts June 2018. https://wg21.link/p0898r3

Coeurjolly, D., Lachaud, J.O.: DGtal: Digital geometry tools and algorithms library. http://dgtal.org

Darbon, J., Géraud, T., Duret-Lutz, A.: Generic implementation of morphological image operators. In: Proceedings of the 6th International Symposium of Mathematical Morphology (ISMM), pp. 175–184. Sydney, Australia (2002)

Eric N., Sean Parent, A.S.: Ranges for the standard library: Revision 1, October 2014. https://ericniebler.github.io/std/wg21/D4128.html

Froment, J.: MegaWave. In: IPOL 2012 Meeting on Image Processing Libraries (2012)

Gamma, E.: Design Patterns: Elements of Reusable Object-Oriented Software. Pearson Education India, Chennai (1995)

Garrigues, M., Manzanera, A.: Video++, a modern image and video processing C++ framework. In: Conference on Design and Architectures for Signal and Image Processing (DASIP), pp. 1–6. IEEE (2014)

Géraud, T.: Outil logiciel pour le traitement d’images: Bibliothèque, paradigmes, types et algorithmes. Habilitation thesis, Université Paris-Est (2012). (in French)

Géraud, T., Carlinet, E.: A modern C++ library for generic and efficient image processing. Journée du Groupe de Travail de Géométrie Discrète et Morphologie Mathématique, Lyon, France, June 2018. https://www.lrde.epita.fr/theo/talks/geraud.2018.gtgdmm_talk.pdf

Géraud, T., Fabre, Y., Duret-Lutz, A., Papadopoulos-Orfanos, D., Mangin, J.F.: Obtaining genericity for image processing and pattern recognition algorithms. In: Proceedings of the 15th International Conference on Pattern Recognition (ICPR). vol. 4, pp. 816–819. Barcelona, Spain (2000)

Géraud, T., Levillain, R., Lazzara, G.: The Milena image processing library. IPOL meeting, ENS Cachan, France, June 2012. https://www.lrde.epita.fr/~theo/talks/geraud.2012.ipol_talk.pdf

Gibbons, J.: Datatype-generic programming. In: Backhouse, R., Gibbons, J., Hinze, R., Jeuring, J. (eds.) SSDGP 2006. LNCS, vol. 4719, pp. 1–71. Springer, Heidelberg (2007). https://doi.org/10.1007/978-3-540-76786-2_1

Guennebaud, G., Jacob, B., et al.: Eigen v3. http://eigen.tuxfamily.org (2010). Available at http://eigen.tuxfamily.org

Ibanez, L., Schroeder, W., Ng, L., Cates, J.: The ITK software guide (2005)

Köthe, U.: Generic Programming for Computer Vision: The VIGRA Computer Vision Library. University of Hamburg, Cognitive Systems Group, Hamburg (2003)

Levillain, R., Géraud, T., Najman, L.: Milena: write generic morphological algorithms once, run on many kinds of images. In: Wilkinson, M.H.F., Roerdink, J.B.T.M. (eds.) ISMM 2009. LNCS, vol. 5720, pp. 295–306. Springer, Heidelberg (2009). https://doi.org/10.1007/978-3-642-03613-2_27

Levillain, R., Géraud, T., Najman, L.: Why and how to design a generic and efficient image processing framework: the case of the milena library. In: Proceedings of the IEEE International Conference on Image Processing (ICIP), pp. 1941–1944. Hong Kong September 2010 (2010)

Levillain, R., Géraud, T., Najman, L.: Writing reusable digital topology algorithms in a generic image processing framework. In: Köthe, U., Montanvert, A., Soille, P. (eds.) WADGMM 2010. LNCS, vol. 7346, pp. 140–153. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-32313-3_10

Levillain, R., Géraud, T., Najman, L., Carlinet, E.: Practical genericity: writing image processing algorithms both reusable and efficient. In: Proceedings of the 19th Iberoamerican Congress on Pattern Recognition (CIARP). LNCS, vol. 8827, pp. 70–79. Puerto Vallarta, Mexico (2014)

Backhouse, R., Gibbons, J. (eds.): Generic Programming. LNCS, vol. 2793. Springer, Heidelberg (2003). https://doi.org/10.1007/b12027

Niebler, E., Carter, C.: Deep integration of the ranges TS, May 2018. https://wg21.link/p1037r0

Niebler, E., Carter, C.: Merging the ranges TS, May 2018. https://wg21.link/p0896r1

Oliphant, T.E.: Multidimensional iterators in NumPy. In: Oram, A., Wilson, G. (eds.) Beautiful Code, vol. 19. O’reilly, Sebastopol (2007)

Ragan-kelley, J., Barnes, C., Adams, A., Durand, F., Amarasinghe, S., et al.: Halide: a language and compiler for optimizing parallelism, locality, and recomputation in image processing pipelines. In: PLDI 2013, (2013)

Seymour, B.: LWG papers to re-merge into C++0x after removing concepts July 2009. https://wg21.link/n2929

Stepanov, A., McJones, P.: Elements of Programming. Addison-Wesley Professional, Boston (2009)

Stroustrup, B., Reis, G.D.: Concepts - Design choices for template argument checking, October 2003. https://wg21.link/n1522

Sutton, A.: Working draft, C++ extensions for concepts, June 2017. https://wg21.link/n4674

Tschumperlé, D.: The CImg library. IPOL 2012 Meeting on Image Processing Libraries (2012)

Voutilainen, V.: Merge the concepts TS working draft into the C++20 working draft, June 2017. https://wg21.link/p0724r0

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Roynard, M., Carlinet, E., Géraud, T. (2019). An Image Processing Library in Modern C++: Getting Simplicity and Efficiency with Generic Programming. In: Kerautret, B., Colom, M., Lopresti, D., Monasse, P., Talbot, H. (eds) Reproducible Research in Pattern Recognition. RRPR 2018. Lecture Notes in Computer Science(), vol 11455. Springer, Cham. https://doi.org/10.1007/978-3-030-23987-9_12

Download citation

DOI: https://doi.org/10.1007/978-3-030-23987-9_12

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-23986-2

Online ISBN: 978-3-030-23987-9

eBook Packages: Computer ScienceComputer Science (R0)