Abstract

High-resolution particle imaging techniques have had a large impact in fluid mechanics over the last decades. In this chapter, we concentrate on particle tracking velocimetry in which trajectories of particles are reconstructed from sets of flow visualisation images recorded in high-speed video. We describe some recent advances stemming from our research in the major steps of the technique: camera calibration and particle stereo-matching, particle tracking algorithms, and noiseless estimation of statistical quantities. Note that this does not intend to be an inclusive review of the literature on the topic. Applications range from the understanding of single phase turbulence to the dispersion of inertial particles.

You have full access to this open access chapter, Download chapter PDF

Similar content being viewed by others

Keywords

6.1 Introduction

Flow velocity measurements based on the analysis of the motion of particles imaged with digital cameras have become the most commonly used measurement technique in contemporary fluid mechanics research [1, 2]. Particle image velocimetry (PIV) and particle tracking velocimetry (PTV) are two widely used methods that enable the characterisation of a flow based on the motion of particles, from Eulerian (PIV) or Lagrangian (PTV) points of view. Several aspects influence the accuracy and reliability of the measurements obtained with these techniques [2]: resolution (temporal and spatial), dynamical range, the capacity to measure 2D or 3D components of velocity in a 2D or 3D fluid domain, statistical convergence, etc. These imaging and analysis considerations depend on the hardware (camera resolution, repetition rate, on-board memory, optical system, etc.) but also on the software (optical calibration relating real-world coordinates to pixel coordinates, particle identification and tracking algorithms, image correlation, dynamical post-processing, etc.) used in the measurements. In this context, particle tracking velocimetry can provide highly resolved, spatially and temporally, measurements of the flow velocity (if the particles are flow tracers) or of particle velocities (if the particles immersed in the flow have their own dynamics) in experimental fluid mechanics research and applications [2,3,4,5]. A frequent implementation of this method in the laboratory is based on taking a pair of images (with double exposure cameras, typical of PIV) in rapid succession followed by a larger time interval before the next pair of images. A second common implementation of this method starts with the capture of a long sequence of images, all equally separated by a small time interval (with high-speed cameras). In the first case, the particle tracking velocimetry technique provides a single vector per particle in a pair of consecutive images, with subsequent velocity measurements in other image pairs being uncorrelated. The high-speed image sequence, on the contrary, provides the opportunity to track the same particle over multiple (n) images and provides several (n-1) correlated velocity (or n-2 acceleration) measurements, at different locations but along the same particle trajectory.

There are three recent contributions implemented by the authors and summarised in this chapter that apply equally to both versions of the particle tracking velocimetry technique: each one advances important aspects in one of the stages of the measurement of velocity from particle images. The first contribution (Sect. 6.2) provides an optical-model-free calibration technique for multi-camera particle tracking velocimetry and potentially also for particle image velocimetry. This method is simpler to apply and provides equal or better results than the pinhole camera model originally proposed by Tsai in 1987 [6]. In the context of particle tracking with applications in fluid mechanics, particle centre detection and tracking algorithms have been the focus of more studies [7, 8] than optical calibration and 3D position determination. Although many strategies with various degrees of complexity have been developed for camera calibration [9,10,11,12,13], most existing experimental implementations of multi-camera particle tracking use Tsai pinhole camera model as the basis for calibration. Using plane-by-plane transformations, it defines an interpolant that connects each point in the camera sensor to the actual light beam across the measurement volume. As it does not rely on any a priori model, the method easily handles potential complexity and non-linearity in an optical setup while remaining computationally efficient in stereo-matching 3D data. In opposition, Tsai approach, sketched in Fig. 6.1, is based on the development on a physical model for the cameras arrangement with several parameters (the number depending on the complexity). The model assumes that all ray of light received on the camera sensor pass through an optical centre (pinhole) for each camera. The quality of the inferred transformation will therefore be sensitive to variations of the setup leading to calibration data which may no longer match the model due to optical distortions, for instance. Besides, Tsai model requires non-linear elements to account for each aspect of the optical path. In practice, realistic experimental setups are either complex and time-consuming to model via individual optical elements in the Tsai method or over-simplified by ignoring certain elements such as windows, or compound lenses, with loss of accuracy.

Sketch of Tsai pinhole camera model and stereo-matching: the position of a particle in the real world corresponds to the intersection of 2 lines Δ1 and Δ2, each emitted by the camera centres O 1 and O 2 and passing through the position of particles P 1 and P 2 detected on each camera plane Π1 and Π2

The second contribution (Sect. 6.3) addresses the reconstruction of trajectories from the set of particle positions detected in the image sequence, an important aspect of particle tracking velocimetry [8, 14,15,16,17]. It describes the practical implementation of two recent developments: shadow particle velocimetry using parallel light combined with pattern tracking [18, 19] and trajectory reconstruction based on an extension of the four-frame best estimate (4BE) method. While the former was developed originally to access the size, orientation, or shape of the tracked particles, the latter is an extension of previous tracking algorithms [17] (which also extended previous algorithms) and which can be easily implemented as an add-on to an existing tracking code.

Finally, Sect. 6.4 describes a method to estimate noiseless velocity and acceleration statistics from particle tracking velocimetry tracks. This is a crucial step because imaging techniques may introduce noise into the detection of particle centres, which is then amplified when computing successive temporal or spatial derivatives. The position signal is then usually time-filtered prior to differentiation [5, 20], a procedure that increases the signal-to-noise ratio at the cost of signal alteration. The method described here, inspired by work in this area [21, 22], is based on computing the statistics of the particles displacements with increasing time lag, does not require any kind of filter, and allows for the estimation of noiseless statistical quantities both in the Lagrangian framework (velocity and acceleration time correlation functions) and in the Eulerian framework (statistics of spatial velocity increments) [23, 24].

Note that this chapter does not intend to review all the possible extensions of particle tracking velocimetry and has been limited to some recent developments from the authors’ groups, which we believe can be useful and easily implemented to improve the accuracy of already operational PTV systems in other groups or which may help users developing new PTV experiments. Many other interesting advances have been developed over the past decade. We can, for instance, mention the use of inverse-problem digital holography [25,26,27], which allows to track particles in 3D with one single camera, new algorithms allowing to track particles in highly seeded flows such as the shake the box method [28] or the tracking of particles with rotational dynamics [29, 30], which allows to investigate simultaneously the translation and rotation of large objects transported in a flow.

6.2 A Model-Free Calibration Method

6.2.1 Principle

3D particle imaging methods require an appropriate calibration method to perform the stereo-matching between the 2D positions of particles in the pixel coordinate system for each camera and their absolute 3D positions in the real-world coordinate system. The accuracy of the calibration method directly impacts the accuracy of the 3D positioning of the particles in real-world coordinates.

The calibration method proposed here (further discussed in [31]) is based on the simple idea that no matter how distorted a recorded image is, each bright point on the pixel array is associated with the ray of light that produced it. As such, the corresponding light source (typically a scatterer particle) can lie anywhere on this ray of light. An appropriate calibration method should be able to directly attribute to a given doublet (x p, y p) of pixel coordinates its corresponding ray path. If the index of refraction in the measurement volume of interest is uniform (so that light propagates along a straight line inside the measurement volume) each doublet (x p, y p) can be associated with a straight line d (defined by 6 parameters in 3D: a position vector O Δ(x p, y p) and a displacement vector V Δ(x p, y p)), regardless of the path outside the volume of interest, which can be very complex as material interfaces and lenses are traversed. The calibration method described here builds a pixel-to-line interpolant \(\mathcal {I}\) that implements this correspondence between pixel coordinates and each of the 6 parameters of the ray of light: \((x_p,y_p) \xrightarrow {{\mathcal {I}}} ({\mathbf {O}}_\Delta ,{\mathbf {V}}_\Delta )\). While this method may seem similar to Tsai approach which also designates a ray of light for each doublet (x p, y p), there is a significant difference in that Tsai approach assumes a camera model and is sensitive to deviations in the actual setup from this idealised optical model. The proposed approach does not rely on any a priori model and is only based on empirical interpolations from the actual calibration data. Thus, the new method implicitly takes into account optical imperfections, media inhomogeneities (outside the measurement volume) or complex lens arrangements. Additionally, the generalisation of the method to cases where light does not propagate in a straight line is straightforward: it is sufficient to build the interpolant with the parameters required to describe the expected curved path of light in the medium of interest (for instance, a parabola in the case of linear stratification).

6.2.2 Practical Implementation

An implementation of the method proposed is used to build the interpolant \(\mathcal {I}\) from experimental images of a calibration target with known patterns at known positions. The process described here concerns only one camera for clarity. In general, in a realistic multi-camera system, the protocol has to be repeated for each camera independently.

A calibration target, consisting of a grid of equally separated dots, is translated perpendicularly to its plane (along the OZ axis) using a micropositioning stage, and is imaged at several known Z positions by every camera simultaneously. In total, N Z images are taken by each camera: I j is the calibration image when the plane is at position Z j (with j ∈ [1, N Z]). For an example highlighting the quality of the calibration method, N Z = 13 planes were collected across the measurement volume. The calibration protocol, sketched in Fig. 6.2, then proceeds as follows:

-

1.

Dot centres detection. For each calibration image I j the dot centres are detected, giving a set \((x_{j}^k,y_{j}^k)_{k\in [1;N_{j}]}\) of pixel coordinates. N j is the number of dots actually detected on each image I j. Real-world coordinates of the dots \((X_j^k,Y_j^k,Z_j^k)_{k\in [1;N_{j}]}\) are known. Lowercase coordinates represent pixel coordinates, while uppercase coordinates represent absolute real-world coordinates.

-

2.

2D Plane-by-plane transformations. For each position Z j of the calibration target, the measured 2D pixel coordinates \((x_{j}^k,y_{j}^k)_{k\in [1;N_{j}]}\) and the known 2D real-world coordinates \((x_{j}^k,y_{j}^k)_{k\in [1;N_{j}]}\) are used to infer a spatial transformation \({\mathcal {T}}_{j}\) projecting 2D pixel coordinates onto 2D real-world coordinates in the plane XOY at position Z j. Different type of transformations can be inferred, from a simple linear projective transformation, to high order polynomial transformations if non-linear optical aberrations need to be corrected (common optical aberrations are adequately captured by a third-order polynomial transformation). This is a standard planar calibration procedure, where an estimate of the accuracy of the 2D plane-by-plane transformation can be obtained from the distance, in pixel coordinates, between \((x_{j}^k,y_{j}^k)_{k\in [1;N_{j}]}\) and \({\mathcal {T}}^{-1}_{j}\left (X_{j}^k,Y_{j}^k,Z_j\right )_{k\in [1;N_{j}]}\). The maximum error for the images used here is less than 2 pixels, corresponding in the present case to a maximum error of about 1/10th of the diameter of the dots in the calibration image.

-

3.

Building the pixel-line interpolant and stereo-matching. The key step in the calibration method is building the pixel-to-line transformation. For a given pixel coordinate (for instance, corresponding to the centre of a detected particle), this is simply done by applying the successive inverse plane-by-plane transformations \({\mathcal {T}}^{-1}_{j}\) to project the pixel position to real space at each plane. This builds a set of points (one per plane) which define the line of sight corresponding to the considered pixel coordinate. The line is then determined by a linear fit of these points. For practical purposes, instead of repeating this procedure every time for every detected particle, we rather chose to build a pixel-line interpolant, \(\mathcal {I}\), which directly connects pixels coordinates to a ray path. To achieve this, a grid of \(N_{\mathcal {I}}\) interpolating points in pixel coordinates \((x_{l}^{\mathcal {I}},y_{l}^{\mathcal {I}})_{l\in [1,N_{\mathcal {I}}]}\) is defined, for which the ray paths have to be computed. The inverse transformations \({\mathcal {T}}^{-1}_{j}\) are then used to project each point of this set back onto the real-world planes (X, Y, Z j), for each of the N Z positions Z j. Each interpolating point \((x_{l}^{\mathcal {I}},y_{l}^{\mathcal {I}})\) is therefore associated with a set of N Z points in real world \((X_{l}^{\mathcal {I}},Y_{l}^{\mathcal {I}},Z_j)\). Conversely, these points in real world can be seen as a discrete sampling of the ray path which impacts the sensor of the camera at \((x_{l}^{\mathcal {I}},y_{l}^{\mathcal {I}})\). If light propagates along a straight line, the N Z points \((X_{l}^{\mathcal {I}},Y_{l}^{\mathcal {I}},Z_j)\) should be aligned. By a simple linear fit of these points, each interpolating point \((x_{l}^{\mathcal {I}},y_{l}^{\mathcal {I}})\) is related to a line Δl, defined by a point \({\mathbf {O}}_{\Delta _{l}}=(X^0_{l},Y^0_{l},Z^0_{l})\) and a vector \({\mathbf {V}}_{\Delta _{l}}=(Vx_{l},Vy_{l},Vz_{l})\) (hence 6 parameters for each interpolating point). Each of these rays from the \(N_{\mathcal {I}}\) interpolation points is used to compute the interpolant \({\mathcal {I}}\), which allows any pixel coordinate (x, y) in the camera to be connected to its ray path (O Δ, V Δ) corresponding to all possible positions of light sources that could produce a bright spot in (x, y). Stereo-matching, or finding the 3D position of a point (or particle), is performed by finding a set of rays from each camera that cross (or almost cross) in the vicinity of the same spot in the volume of interest. The most probable 3D location of the corresponding particle is then taken as the 3D position that minimises the total distance to all those rays. The interpolant described in the method is created using every pixel in the cameras, as this step is done only once, but the method can be applied with a subset of the pixel array. For a setup with moderate optical distortion, a loose interpolating grid with a few hundreds points (typically, 20 × 20) is largely sufficient. As a matter of fact, using the interpolant is not mandatory, as all the calibration information is embedded in the plane-by-plane transformations. Third-order polynomial plane-by-plane transformation embeds 10 parameters each (5 polynomial coefficients for each of the X and Y transformations). If, instead, 7 calibration planes are used, the calibration information embeds about 70 parameters in total. Using the interpolant approach is above all a practical solution, while the interpolation information embeds a massive number of hidden parameters (6 per interpolation point) and is therefore expected to be highly redundant. Therefore, it is generally unnecessary to build the interpolant on a too refined grid (however, the added computational cost is minimal as the interpolant is only built once per calibration procedure, and can be stored in a small file for later use). This may happen for systems with important small-scale and heterogeneous optical distortions, in which case higher order plane-by-plane transformations (hence embedding more parameters) would also be necessary.

Sketch of the calibration method. (a) Image I j of the calibration target (over N x × N y pixels) located at one position Z j (in real-world coordinates). From this image the centre of the images of the calibration dots in pixel coordinates (\((x^k_j,y^k_j)_{k\in [1;N_j]}\)) is determined. (b) Corresponding known location of the centre of the calibration points in real-world coordinates (\((X^k_j,X^k_j)_{k\in [1;N_j]}\)). From \((x^k_j,y^k_j)_{k\in [1;N_j]}\) and \((X^k_j,X^k_j)_{k\in [1;N_j]}\), the coefficients of the transformation \({\mathcal {T}}_j\) connecting pixel and real-world coordinates of the target located at Z j are evaluated (the procedure is repeated for several target positions \(Z_{j\in [1,N_z]}\)) using least squares methods [32]. From a practical point of view the transformations \({\mathcal {T}}_j\) can be easily determined using ready to use algorithms, such as the fitgeotrans function in Matlab®. Note that for the simplicity of the illustration of the method, we show here a situation with no optical distortion and no perspective deformation, where the plane-by-plane transformation \({\mathcal {T}}_j\) is just given by a magnification factor M j between pixel and real-world coordinates. In an actual experiment, perspective effects would require at least a linear projective transformation, defined by a 2 × 2 matrix \(M^{\alpha \beta }_j\) with at least 4 coefficients to be estimated for each plane position Z j. More realistic situations would require higher order polynomial transformations including a larger number of coefficients [32]; a third polynomial transformation embeds, for instance, 10 coefficients per plane). (c) Stacks of calibration planes at 3 different positions (Z j=1,2,3) in 3D real-world coordinates (for simplicity, only 3 planes are illustrated, although in an actual calibration more planes may be used for better accuracy). The 3 coloured crosses illustrate the 3 projections (one on each of the 3 planes, the colour of the points corresponds to the colour of the plane onto which it is projected) in real-world coordinates ((X, Y, Z)j=1,2,3) of an arbitrary point (x, y) in pixel coordinates to which the 3 transformations \({\mathcal {T}}_{j=1,2,3}\) have been applied. These projections are distributed along a path of light corresponding to the line in real-world coordinates that projects onto the point (x, y) in the camera pixel coordinates. Since in a homogeneous medium light propagates in straight lines, the path of light is simply determined by a linear fit (dashed line), in 3D real-world coordinates, of the three points ((X, Y, Z)j=1,2,3). Using more calibration planes leads to more points for the linear fit and hence to a better accuracy. This procedure then directly connects the pixel coordinate (x, y) into the corresponding ray of light that produces it. Note that the fit is only done within the calibration volume where the target is translated along the N z planes and does not extend to the cameras

6.2.3 Results: Comparison with Tsai Model

The calibration procedure proposed by Tsai [6] has been widely used to recover the optical characteristics of an imaging system to reconstruct the 3D position of an object. The accuracy of the proposed imaging calibration procedure is assessed by comparing it with a simple implementation of Tsai model. A camera model accounting only for radial distortion is used. While improved optical elements in Tsai model could increase the accuracy, they come at an increased operator workload.

Our stereoscopic optical arrangement (see Refs. [31, 33] for more details), typical of PTV in a 1 cm thick laser sheet, focuses on the geometrical centre of a water flow inside an icosahedron, with both cameras objectives mounted in a Scheimpflug configuration. A plate mounted parallel to the laser sheet with 2 mm dots, attached to a micrometric traverse (with 10 μm accuracy), is used as a target. Both calibration methods use 13 target images, 1 mm apart from each other along the Z axis.

The calibration method uses the 2D positions of the target dots, and provides a series of positions that cannot exactly match the 3D real coordinates because, in both methods, the model parameters are obtained by solving an over-constrained linear system in the least-square sense. The calibration error, i.e., the absolute difference between the (known) real coordinates and the transformed ones, is computed to evaluate the calibration accuracy. This error can be estimated along each direction or as a norm: \(d=\sqrt { d_X^2+ d_Y^2+ d_Z^2}\) (Table 6.1). Figure 6.3 plots the total 3D error averaged over the 13 planes used, for both the proposed method and Tsai model.

The accuracy of the proposed calibration is superior to that of the Tsai method (in its simplest implementation). The error is at least 300% smaller (depending on which component is considered) and is reduced to barely 0.5 pixel. It is important to note that the error map obtained with the Tsai method (Fig. 6.3b) seems to display a large bias along Y that could be due to the use of Scheimpflug mounts, which are typically not included in this Tsai calibration, and to the angle between the cameras and the tank windows. This hypothesis was verified by comparing the two calibrations procedures in more conventional conditions, where they give similar results with a very small error.

For the present optical arrangement and the new calibration method, the error in the Y positioning is the smallest. Indeed, due to the shape of the experiment (an icosahedron), the y axis of the camera sensor is almost aligned with the Y direction so that this coordinate is fully redundant between the cameras, while the x axes of each camera sensor form an angle α ≃ π∕3 with the X direction so that the precision on X positioning is lower. This directly impacts the precision on the Z positioning, whose error is almost equal to the X positioning error.

6.2.4 Discussion

Up to 13 planes were used to build the operator that yields the camera calibration. While two planes are the minimum required for the method, a larger number of planes imaged provide better accuracy. In this case study, the major sources of optical distortion were the Scheimpflug mounts, the imperfect lenses, and the non-perpendicular interfaces. 7 planes provided an optimal trade-off between high accuracy and simplicity, with an error only 2% larger than the 13 planes setup, while using only 3 planes yields an 10% larger error. The fact that few planes are sufficient to obtain a good accuracy of the calibration is likely related to the fact that the third-order polynomial plane-by-plane transformations are sufficient to handle most of the distortions, including those originating from the optics, from the tilt and shift system and from the refraction at the air–water interface, so that the projection of a pixel position to real space is accurately aligned along a line which defines the corresponding line of sight. Few points are then needed to accurately fit the line parameters (using more points essentially ensures a more robust fit with respect to small errors in the plane-by-plane transformations). When dealing with a more complex experiment, i.e., with a refraction index gradient, increasing the number of planes in the calibration would improve the results allowing to accurately capture the curvature of the light rays.

The proposed calibration method has several advantages that make it worth implementing in a multi-camera particle imaging setup. First, it requires no model or assumption about the properties of the optical path followed by the light in the different media outside the volume of interest. It only requires light to propagate in straight line. The method simply computes the equation for propagation of light in space. This ray line equation is fully determined by the physical location of the calibration dots located at known positions in space. Note that the present calibration method is versatile enough so that the linear propagation constraint can be easily relaxed. This can be useful, for instance, to calibrate stratified flows, with spatial variations of optical index. It is then sufficient to change the linear fit used to determine the line of sight (from the projected pixel coordinates to the planes), by an appropriate curved path of light (a polynomial fit may often be a good enough approximation). Second, this method is turnkey for any typical optical system. The implementation of the new method is easily done and can be used retroactively using previous calibration images.

Let us briefly discuss the improved accuracy of the calibration, compared to the model of Tsai. The reason for the improved accuracy is mainly hidden in the higher number of (hidden) parameters actually defining both calibration methods. As pointed out earlier, in the new proposed calibration all the calibration parameters are embedded in the plane-by-plane transformations, with 10 parameters for each third-order polynomial transformation. Using 13 calibration planes ends up with 130 hidden calibrating parameters. These reduce to 70 when using 7 planes. In any case this is much larger than the number of parameters embedded in the Tsai model (which has typically 6 external parameters defining the position and the orientation of the equivalent pinhole camera) and several internal parameters (focal length, pixel aspect ratio, optical distortion parameters, etc.), typically of order 10. It is therefore not surprising that the present method gives better accuracy. Note also that the present comparison may be unfair to the Tsai model, as we have not considered more sophisticated pinhole camera models, properly accounting, for instance, for tilt and shift corrections, and which would naturally embed a larger number of parameters and an increased accuracy. Such extension of the pinhole approach is based on sophisticated physical and geometrical models, with algorithms that tend to be tedious to implement. A big advantage of the present calibration is its versatility and ease of algorithmic implementation, which remains identical whatever the complexity of the optical path. Finally, note that while the proposed method has a larger number of parameter, they only come from empirical determination and are obtained automatically through the calibration process, and there is no need to prescribe a priori a set of parameters tightened to a specific model requiring choices from the user. This makes the method not only more accurate but also adaptable and objective.

To conclude, the model-free calibration method proposed can be easily implemented with both the calibration image acquisition and spatial detection of target points currently standard in the field. The calibration algorithm and the operator calculation to convert pixel locations to physical locations, with minimal errors, can easily be programmed in any language available to experimentalists (the reader can contact the authors for source codes to implement the calibration algorithms). The new method is at least equally, and frequently more, accurate than the commonly used Tsai model, and it can be used more easily and in a wider range of optical configurations. As experimental setups become more complicated with more optical and light refraction elements, this method should prove simpler to implement and more accurate than the model-based Tsai one.

6.3 Particle Tracking Algorithms

Section 6.3.1 describes the implementation of particle tracking velocimetry in a von Kármán flow using parallel light beams and two cameras forming an angle of 90∘. As described below, the originality of this implementation of PTV is in the combination of parallel illumination and of pattern tracking (rather than particle tracking), which makes the calibration and the matching particularly simple and accurate. It is well suited to the tracking of small objects in a large volume using only two standard LEDs as light sources. In this setup, tracking is performed independently on the 2 views using a nearest neighbour algorithm prior to stereo-matching 2D tracks. Section 6.3.2 describes recent improvements of the tracking algorithms which use more than two consecutive frames in order to increase track lengths.

6.3.1 Shadow Particle Tracking Velocimetry

6.3.1.1 Experimental Setup

Particle tracking has been performed in a tank with a 15 cm × 15 cm square cross-section, where a von Kármán flow is created between two bladed discs, of radius R = 7.1 cm and separated by 20 cm, counter-rotating at constant frequency Ω (Fig. 6.4a). The flow has a strong mean spatial structure arising from the counter-rotation of the discs. The azimuthal component resulting from this forcing is of order 2πR Ω near the discs’ edge and zero in the mid-plane (z = 0), creating a strong axial gradient (Fig. 6.4a). The discs also act as centrifugal pumps ejecting fluid radially outward in their vicinity, resulting in a large-scale poloidal recirculation with a stagnation point in the geometrical centre of the cylinder (Fig. 6.4b). Using water to dilute an industrial lubricant, UconTM, a mixture with a viscosity ν = 8.2 10−6 m2 s−1 and a density of ρ = 1000 kg m−3 allows for the production of an intense turbulence with a Taylor-based Reynolds number R λ = 200 and a dissipative length scale η = 130 microns (see Table 6.2 for more details on the flow parameters). This setup allows for the tracking of Lagrangian tracers (250 μm polystyrene particles with density ρ p = 1060 kg m−3) in a large volume 6 × 6 × 5.5 cm3 centred around the geometrical centre of the flow ((x, y, z) = (0, 0, 0)). Two high-speed video cameras (Phantom V.12, Vision Research, Wayne, NJ.) with a resolution of 800 × 768 pixels, and a frame rate up to f s = 12 kHz are used. This sampling frequency is sufficient to resolve particle accelerations, calculated by taking the second derivative of the trajectories.

(a) Sketch of the counter-rotating von Kármán flow. Arrows indicate the topology of the mean flow, the dashed line indicates the mid-plane of the vessel. (b) Schematic cut of the vessel along the (z, x) or (z, y) plane. (c) Optical setup for S-PTV with 2 identical optical arrangements forming an angle θ = 90 degrees (only the vertical arm is described). The 1W LED source is imaged in the focus of a parabolic mirror to form a large collimated beam. A converging lens and a diaphragm are used to make the LED a better point-like source of light. Light propagates through the flow volume passing through a beam splitter (BS) before being collected using a 15 cm large lens that redirects the collimated light into the camera objective. The optical system [L 2 + objective] is focused on the camera sides of the vessel, marked with a dashed-dotted line

The camera setup uses a classical ombroscopy configuration [34], with parallel illumination. We have recently used such a setup (depicted in Fig. 6.4c) for Lagrangian studies of turbulence [35]; we will use the data from this experiment to illustrate the present section. It consists of 2 identical optical configurations with a small LED located at the focal point of a large parabolic mirror (15 cm diameter, 50 cm focal length) forming 2 collimated beams which are perpendicular to each other in the measurement volume. A converging lens and a diaphragm are used to make the LED a better point-like source of light. This large parallel ray of light then reflects on a beam splitter and intersects the flow volume before being collected by the camera sensor using a doublet consisting of a large lens (15 cm in diameter, 50 cm focal length) and a 85 mm macro camera objective. All optical elements are aligned using large (homemade) reticles, which also precisely measure the magnification in each arrangement. When placing an object in the field of view, it appears as a black shadow on a white background, corresponding to the parallel projection of the object on the sensor. Thanks to the parallel illumination, the system has telecentric properties. The particle size and shape do not depend then on the object-to-camera distance, as opposed to classical lighting schemes where due to perspective the apparent object size changes with the object-to-camera distance. The telecentricity also makes the calibration of each camera trivial as there is a simple, unique, and homogeneous magnification factor relating the (x, y) pixel coordinates to the (X, Z) real-world coordinates for one camera and to (Y, Z) real-world coordinates for the other camera. In addition, the optical arrangement is rigorously implemented so that the Z real-world coordinate is exactly redundant between the 2 cameras. This makes the matching step (detailed below) both simple and accurate. When particles are tracked, camera 1 will provide their (x 1, z 1) 2D positions, while camera 2 will measure their (y 2, z 2) positions. As the z coordinate is redundant, a simple equation z 2 = az 1 + b accounts for slight differences in the magnification and centring between both arrangements.

6.3.1.2 The Trajectory Stereo-Matching Approach

Given the magnification of the setup (1∕4, 1 px equals 90 μm), the depth of field of the optical arrangement is larger than the experiment. As both beams do not overlap in the entire flow domain, particles situated in one light beam but outside the common measurement volume can give a well-contrasted image on one camera while not being seen by the other. Such a situation could lead to an incorrect stereo-matching event when many particles are present. This is illustrated in Fig. 6.5a, where the shadows left by two particles situated at the same z position but outside of the beams overlap (black dots) could be interpreted as one “ghost” particle within the overlapping region (dashed circle). To mitigate these errors, we construct 2D trajectories for each camera using the (x 1, z 1) and (y 2, z 2) coordinates separately. Once tracked in time, these trajectories, instead of individual particle positions, may be stereo-matched. This approach is similar to the “pattern matching” originally proposed by Guezennec et al. [16], in contrast with the particle-matching strategy, used in many recent studies, which perform stereo-matching on individual particles before tracking. The advantage of this method, in particular when it is combined with telecentric illumination, is that neither stereo-matching nor tracking errors are made, as will be detailed below. However, one must track many more 2D trajectories that are stereo-matched. Another drawback is the projection of 3D positions into a plane, which strongly decreases the inter-particle distance making the apparent particle overlap an issue when the particle diameter becomes large with respect to the effective measurement volume. However, the presence of redundancy in the z coordinate may be used to overcome such indetermination when the apparent proximity results only from the projection.

(a) Scheme of the intersecting parallel light beams showing individual particle stereo-matching is not reliable. The black dots are two particles at the same z position outside of the beams overlapping region and the dashed circle is a particle at the same z position within the region (both situations being measured identically by the cameras). (b) Time evolution of the raw z (redundant) coordinate of the same particles as obtained with 2D tracking with camera 1 and camera 2. Only 38 matched trajectories are plotted. (c) Affine relation between z 2 = az 2 + b (a = 0.98, b = 15.6 px) measured with 1900 trajectories corresponding to 6 × 105 data points. (d) A random sample of 150 trajectories in the vessel obtained from the same movie

We implement a 2D tracking scheme using a simple method inspired from previous works [8, 17, 20]. This tracking procedure searches for particles in frame n + 1 whose distance from particles in frame n is smaller than a specified value. If only one particle is found in the vicinity of the last point of a track, this track is continued. When multiple candidates are found, the track is stopped and new tracks are initiated with these new particles. Particles in frame n+1 which do not match with any of the existing tracks in frame n initiate new trajectories. This procedure, whose improvement is described in the next subsection, results in a collection of 2D trajectories with various lengths.

Stereo-matching is then performed by identifying trajectories with z 1(t) ≃ z 2(t) using the relation z 2 = az 1 + b as shown in Fig. 6.5b. This calibration relation is determined recursively using a dilute ensemble of particles for which the initial identification of a single pair of 2D trajectories gives a first estimate of the relationship between z 2 and z 1. As more trajectories are found, the affine relationship is refined until the maximum possible amount of trajectories for a single experiment is obtained. In this recursive manner, the tracking algorithm is self-calibrating. Here, the parameters are a = 0.98, b = 15.6 px estimated from 1900 matched trajectories, corresponding to 6 106 data points as shown in Fig. 6.5c. Together with the pixel-to-mm conversion from one of the cameras, this method provides all relevant information about particle positions in world coordinates. Note that the temporal support for the 2D tracks z 1(t) and z 2(t) for a given particle may not be identical (the track may be longer on one camera than on the other or may start and end at slightly different times). When it comes to analysing 3D Lagrangian statistics, only the portions of trajectories over a common temporal interval are kept. In addition, only trajectories with sufficient temporal overlap (typically 70 time-steps, i.e., approximately 2.5τ η) are matched, in order to prevent anomalous trajectories due to possible ambiguities when matching short patterns. Such an occurrence becomes increasingly unlikely as the trajectory duration threshold is increased. A false trajectory can only occur when the relationship z 2 = az 1 + b becomes undetermined, which may happen, for instance, when two particles are close to colliding and the matching of the two nearby particles becomes ambiguous. Such a situation remains however an extraordinarily rare event in dilute situations. After tracking and stereo-matching, each pair of movies gives an ensemble of trajectories from which all single particle statistics can be computed as shown in Fig. 6.5d.

6.3.1.3 Flow Measurements

Measurements are performed in a volume (6 × 6 × 5.5 cm3) larger than one integral scale (\(L_v=\mathrm{v}^{\prime {{ }^3}}\epsilon ^{-1}\simeq 4.8\) cm) of an inhomogeneous flow. As the statistics are subsampled spatially and temporally, a large number of trajectories are then needed to achieve a good statistical convergence. We record 200 sets of movies with a duration of 1.3 s at 12 kHz and obtain \(\mathcal {O}(1000)\) tracer trajectories per set. A statistical ensemble of \(\mathcal {O}(10^5)\) trajectories with mean durations 〈t〉∼ 0.25∕ Ω permits the spatial convergence of both Eulerian and Lagrangian statistics. The flow properties are obtained from the PTV data and are given in Table 6.2 together with the energy dissipation ε. The latter quantity is estimated by calculating the zero-crossing time τ 0 of the acceleration auto-correlation curves which is empirically known to be related to the Kolmogorov time scale τ η (τ 0 ≃ 2.2τ η) [36] and thus, energy dissipation. The fluctuating velocity of the flow is found to be proportional to the propeller frequency Ω (Table 6.2) due to inertial steering at the bladed discs which forces the turbulence that becomes full-developed, provided \(Re=\frac {\displaystyle 2 \pi R^2 \Omega }{\displaystyle \nu } > 3300\) [37]. In what follows, we focus our analysis on the case Ω = 5.5 Hz.

of trajectories, each containing the temporal evolution of the Lagrangian velocity at the particle position. Based on this ensemble of trajectories, one may reconstruct the mean velocity field in 3D,

and the rms fluctuations of each velocity component \((v^{\prime }_x,v^{\prime }_y,v^{\prime }_z)\). This is achieved by an Eulerian averaging of the Lagrangian dataset on a Cartesian grid of size 123, which corresponds to a spatial resolution of 5 mm in each direction. The choice of the grid size must fulfil several criteria: it must be small compared to the typical scale of the mean flow properties (here, L v ∼ 4.8 cm), but large enough so that statistical convergence is achieved. Here, the grid size was chosen so that there are at least \(\mathcal {O}(1000)\) trajectories in each bin, enough to converge both mean and rms values.

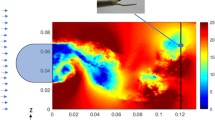

Figure 6.6a, b displays two cross-sections of the reconstructed mean flow in two perpendicular planes, the mid-plane Πxy = (x, y, z = 0) and Πyz = (x = 0, y, z), a horizontal plane containing the axis of rotation of the discs. We observe a mean flow structure that is close to the schematic view of Fig. 6.4a. The flow is almost radial and convergent with 〈v z〉∼ 0 in Πxy, with a z component which reverses under the transformation z →−z (Fig. 6.6b). We also observe a strong y-component of the velocity in Πyz which reverses under the transformation y →−y and corresponds to the differential rotation imposed by the discs. These cross-sections also reveal that the flow has the topology of a stagnation point at the geometric centre (0, 0, 0), as was shown in another von Kármán flow with a circular section [38]. With a 3D measurement of the mean flow, it is possible to compute spatial derivatives along all directions. This leads to ∂ x〈v x〉∼ ∂ y〈v y〉≃−1.5 Ω for the stable directions, and ∂ z〈v z〉∼ 3.0 Ω for the unstable direction. Note that the sum of these terms must be zero because this quantity is the divergence of the mean flow. This condition is found to be well satisfied although the velocity components were computed independently without any constraint. The verification that the flow is divergence-free is then an a posteriori test that the reconstruction of the mean flow is physically sound. Figure 6.6c, d displays rms values of velocity fluctuations \(v'=\sqrt {({v^{\prime }_x}^2+{v^{\prime }_y}^2+{v^{\prime }_z}^2)/3}\) in Πxy = (x, y, z = 0) and Πyz = (x = 0, y, z). These maps reveal that the flow properties are anisotropic and inhomogeneous at large scales, as previously observed in similar setups [39].

Cuts of the 3D reconstructed Eulerian mean velocity (a, b) field and rms velocity (c, d). The reconstruction is achieved by computing the mean 〈 v 〉 and rms values \(({v^{\prime }_x},{v^{\prime }_y},{v^{\prime }_z})\) of the velocity in each bin of a Cartesian grid of size 123. (a) Πxy = (x, y, z = 0) plane. Arrows are (〈v x〉, 〈v y〉), the colour coding for the 〈v z〉. (b) Πyz = (x = 0, y, z) plane. Arrows are (〈v y〉, 〈v z〉), the colour coding for the 〈v x〉. (c) rms value of velocity fluctuations \(v'= \sqrt {({v^{\prime }_x}^2+{v^{\prime }_y}^2+{v^{\prime }_z}^2)/3}\) in the Πxy = (x, y, z = 0) plane. (d) rms value of velocity fluctuations in the Πyz = (x = 0, y, z) plane

6.3.2 Improved Four-Frame Best Estimate

As mentioned in the previous section, using only two frames and a nearest neighbour criterion may lead to multiple candidates for a given track or wrong matches when increasing the number of particles in the field of view. To overcome such limitation, four-frame tracking methods were developed, as, for instance, the “four-frame minimal acceleration method” (4MA), developed by Maas et al. [14], which minimises the change in acceleration along the track, or the further extension by Ouellette et al., known as “four-frame best estimate” particle tracking method (4BE) which minimises the distance between the prediction of particle position two time-step forward in time and all the particles detected at that time [17]. The 4BE method was shown [17] to have an improved tracking accuracy compared to the 4MA method. The 4BE method builds on a nearest neighbour approach and three-frame tracking methods to improve tracking performance by utilising location predictions based on velocities and accelerations.

The 4BE method uses four frames (n − 1, n, n + 1, and n + 2) to reconstruct particle trajectories, as illustrated in Fig. 6.7a. Individual tracks are initialised by using the nearest neighbour method, which minimises the distance between a particle in frame n − 1 and frame n. Once a track is started, the first two locations in the track are used to predict the position \(\tilde {x}_{i}^{n+1}\) of the particle in frame n + 1:

where \(x_{i}^{n}\) is the position of the particle in frame n, \(\tilde {v}_{i}^{n}\) is the predicted velocity, and Δt is the time between frames. A search box is then drawn around this predicted location to look for candidates to continue the track. The size of the search box is set to be as small as possible (usually a few pixels) since it is expected that the actual particle location will be close to the prediction. Additionally, if the flow statistics are anisotropic, the search box can be adjusted to be larger along the axis with higher velocity fluctuations and smaller in the directions with smaller fluctuations. This decreases computational costs because it limits the number of particles found in the initial search, thus limiting possible track continuations. The particles found within this bounding box can then be used to predict a set of positions \(\tilde {x}_{i}^{n+2}\) in frame n + 2:

where \(x_{i}^{n}\), \(\tilde {v}_{i}^{n}\), and Δt are the same as above, and \(\tilde {a}_{i}^{n}\) is the predicted acceleration. As in the previous frame, n + 1, a search box is drawn around each of the \(\tilde {x}_{i}^{n+2}\) predicted locations. Each of these bounding boxes is then interrogated for particles. Using these particle locations, the track is determined by minimising the cost function \(\phi _{ij}^{n}\):

Equation (6.3) minimises the distance between the actual (\(x_{j}^{n+2}\)) and predicted (\(\tilde {x}_{i}^{n+2}\)) particle locations, thus minimising changes in acceleration for a given track. An optional upper threshold, typically half the length of the search box, can be set on the cost function to help limit tracking error. The particle, and, therefore, the track that minimises this cost function and falls within the threshold is then defined as the correct track and all other possible tracks are discarded. It is also important to note that a track is discarded if at any point it does not contain any particles in the search box in frames n + 1 or n + 2.

(a) 4BE-NN. Particle locations are denoted with filled symbols, whereas predicted particle locations are denoted with hollow symbols. The boxes represent the bounding boxes used in the algorithm. The predicted path is overlaid in the figure. (b) 4BE-MI. The initial bounding box (now shown in the figure) allows for more potential tracks to be examined when searching for the correct track. (c) Comparison of tracking performance for 4BE-NN and 4BE-MI methods. At values of ξ < 0.2, the tracking error is zero for 4BE-MI

While 4BE with nearest neighbour initialisation (4BE-NN) is a very good compromise between tracking accuracy and efficiency (low computational cost), there are certain cases where it starts to fail. For instance, it is not suitable for situations where the particle displacement starts to be comparable to the inter-particle distance. Therefore, we have developed a modified initialisation (MI) method for 4BE (4BE-MI) that is more effective at detecting tracks than the current nearest neighbour initialisation [40]. Figure 6.7b shows the modified 4BE algorithm. This method uses a search box based on the estimated maximum particle displacement between two frames to initialise tracks. The size of this search box is determined based on the flow characteristics (instantaneous spatial-averaged velocity, velocity fluctuations in all three directions, etc.), but it is always larger than the size of the search box used for track continuation (which is only aimed at accounting for the error in evaluating the next position in the track). This allows the algorithm to explore multiple possible trajectories for each particle and eliminates the assumption that the closest particle in the next frame is the only option when starting a track. It also enables a track to be constructed based on knowledge of the flow physics as a feature of the initialisation.

The performance of the 4BE algorithm both with and without the modified initialisation scheme was analysed using direct numerical simulation (DNS) data of a turbulent channel available through the Johns Hopkins University Turbulence Databases [41]. The DNS was performed in a 8π × 2 × 3π domain using periodic boundary conditions. The Reynolds number was \(Re = \frac {U_{c}h}{\nu }=2.2625 \times 10^{4}\), where U c and h are, respectively, the channel centre-line velocity and height. The flow was initially seeded with tracer particles throughout the entire volume. The particles were then advected through the channel for each time-step based on the resolved DNS flow field. The trajectories were cut in a subdomain of the channel, creating an ersatz of particle entering and leaving the measurement volume as is typical in experiments. The trajectories generated were then used to benchmark the tracking scheme by comparing tracking results to the known trajectories.

Several datasets were generated by varying the distances that the particles moved between frames. This generated data over a wide range of ξ, defined as the ratio of the average distance each particle moves between frames to the average separation between particles in a frame. When ξ is small, tracking is easy because the particles move very little between frames and there are not many particles to consider for track continuation. However, as this ratio increases, tracking becomes more difficult because the particles move a large amount between frames and there are many particles per frame. Figure 6.7c shows the tracking error E track plotted against ξ. The tracking error is defined as:

where N imperfect is the total number of imperfect tracks and N total is the total number of tracks in the dataset generated. A perfect track must start at the same point as the actual track and must contain no spurious locations.

Figure 6.7c shows how the tracking error E track is decreased when using the modified initialisation scheme. E track is equal to zero, meaning there are no erroneous tracks computed, up to approximately ξ = 0.2 for the modified initialisation scheme. Additionally, at all values of ξ, the modified initialisation scheme performs better than the nearest neighbour initialisation scheme. This shows the advantage of the modified initialisation scheme in creating trajectories in flow with large particle displacements or high particle density.

6.4 Noise Reduction in Post-Processing Statistical Analysis

Particle tracking velocimetry leads to a collection of tracks, (x j(t))j ∈ [1,N], from which turbulent statistics, such as the mean flow and velocity fluctuations, may be computed. Most of the desired quantities have in common that they require taking the derivative of the particle positions, which inevitably leads to noise amplification. In the Lagrangian framework, single particle (two-time) statistics such as velocity or acceleration auto-correlation functions are of great interest; they will be considered in Sect. 6.4.1. In the Eulerian framework, moments of velocity differences separated by a distance r (structure functions) are of great importance; these two particle statistics will be addressed in Sect. 6.4.2.

The method presented below seeks to obtain unbiased one- and two-point statistics of experimental signal derivatives without introducing any filtering. It is valid for any measured signal whose typical correlation scale is much larger than the noise correlation scale. While one aims to obtain the real signal \(\hat {x}\), the presence of noise b implies that one actually measures \(x(t) = \hat {x}+b\). For simplicity, we consider the case of a temporal signal x(t) that is centred, i.e., \(\left \langle x \right \rangle =0\), and is obtained by considering \(x(t)-\left \langle x \right \rangle \), where \(\left \langle \cdot \right \rangle \) is an ensemble average.

The method is based on the temporal increment dx of the signal x over a time dt that we express as \(dx = x(t+dt)-x(t) = d\hat {x} +db\). Assuming that the increments of position and noise are uncorrelated, the position increment variance is written as \(\left \langle (dx)^2\right \rangle = \left \langle (d\hat {x})^2\right \rangle + \left \langle (db)^2\right \rangle \). Introducing the velocity \(\hat {v}\) and acceleration \(\hat {a}\) through a second-order Taylor expansion \(\hat {x}(t+dt) = \hat {x}(t)+{\hat {v}} ~dt+\hat {a} ~dt^2/2 + o(dt^2)\), one obtains:

where \(\left \langle (db)^2\right \rangle =2\left \langle b^2\right \rangle \) in the case of a white noise [24, 42]. In Eq. (6.5) \(\left \langle (dx)^2\right \rangle \) is a function of dt so that one can recover the value of the velocity variance \(\langle \hat {v}^2 \rangle \) by calculating time increments of \(\left \langle (dx)^2\right \rangle (dt)\) over different values of dt followed by a simple polynomial fit in the form of Eq. (6.5). If the noise is coloured, \(\left \langle (db)^2\right \rangle =2\left \langle b^2\right \rangle -2\left \langle b(t)b(t+dt)\right \rangle \). In this case, the method requires the noise to be correlated over short times when compared to the signal correlation time. As a result, only the lowest values of \(\left \langle (dx)^2\right \rangle (dt)\) are biased by \(\left \langle b(t)b(t+dt)\right \rangle \) and a fit still successfully allows for the evaluation of the root mean square (rms) velocity, \(\hat {v}'=\sqrt {\langle \hat {v}^2 \rangle }\). For an experimentally measured signal x, equally spaced at an acquisition rate f s, the minimal value of dt is 1∕f s; we can then obtain the values of dx for different values of dt = n∕f s. For this method, a value of the acquisition rate f s higher than usual is required, in order to be able to access derivatives of the signal without aliasing error.

We can extend the previous calculation to higher order derivative statistics by considering higher order increments. The second-order increment d 2 x = x(t + dt) + x(t − dt) − 2x(t), which is related to the acceleration variance \({\langle \hat {a}^2 \rangle }\) here, yields, for instance:

where \(\left \langle (d^2b)^2\right \rangle =6\left \langle b^2\right \rangle \) in the case of a white noise [24, 42], but otherwise introduces additional noise correlation terms which are functions of dt.

6.4.1 Lagrangian Auto-Correlation Functions

The approach developed above is not restricted to one-time statistics of the signal derivatives but can be generalised to estimate the noiseless first- and second-order derivative auto-correlation functions of the signal \(C_{\hat {v}\hat {v}}=\left \langle \hat {v}(t) \hat {v}(t+\tau )\right \rangle \) and \(C_{\hat {a}\hat {a}}=\left \langle \hat {a}(t) \hat {a}(t+\tau )\right \rangle \). This is done by considering the correlations of first- and second-order increments \(\left \langle dx(t) dx(t+\tau )\right \rangle \) and \(\left \langle d^2x(t) d^2x(t+\tau )\right \rangle \) which are functions of dt and τ. Noiseless velocity and acceleration correlation functions are estimated, respectively, for each time lag τ using a polynomial fit of the signal time increment dt with the following expressions:

where \(C_{fg}=\left \langle f(t) g(t+\tau )\right \rangle \) is a cross-correlation function. It can be noted that the case of the rms values corresponds to τ = 0 and it is noted that 〈(dx)2〉 and 〈(d 2 x)2〉 are functions of dt. In the previous expressions and in the case of a white noise, we can write auto-correlation functions of the first- and second-order increments of the noise. With the signal sampled at a frequency f s, one has dt = n∕f s and τ = m∕f s. The correlation functions of the digitised noise increments are written as:

where δ m,n is the Kronecker symbol. For both derivatives, the white noise magnitude in the first-order derivative auto-correlation functions is the highest for τ = 0 and is an additive term. The noise then yields a negative term for m = n. In the case of second-order derivatives (for acceleration in the case of Lagrangian tracks), the noise magnitude has a larger weight and the noise also contributes to a third time point of the function (m = 2n) with a positive term of smaller amplitude. Considering white noise terms up to dt 6, all other values of τ will directly yield the function without noise.

6.4.1.1 Results

The method has been applied to the material particle trajectories from Ref. [43]. It has been tested successfully for different particle diameters (from 6 to 24 mm), Reynolds numbers (350 < Re λ < 520), and two density ratios (0.9 and 1.14), as well as for neutrally buoyant particles from Ref. [44]. We will focus only on the case of particles 6 mm in diameter and of density ratio 1.14 at a Reynolds number Re λ = 520 in this example. The position trajectories are obtained by stereo-matching of successive image pairs obtained, thanks to two cameras and ambient lighting. The particles appear as large, bright discs on a uniform dark background which yields sub-pixel noise for the trajectories (the apparent particle diameter is about 20 pixels) and is not correlated with the particle position as the background is uniform (nor with its velocity as the exposure time is short enough to fix the particles on the images). In practical situations, the presence of sub-pixel displacements can lead to a short-time correlation of the noise, typically over a few frames.

Figure 6.8 shows the evolution of \(\left \langle (dx)^2 \right \rangle \) and \(\left \langle (d^2x)^2 \right \rangle \) with dt. A simple linear function of dt 2 is enough for \(\left \langle (dx)^2 \right \rangle \) and a sixth-order one suits better \(\left \langle (d^2x)^2 \right \rangle \). The first points of \(\left \langle (d^2x)^2 \right \rangle \) do not follow Eq. (6.6), which may be due to the fact that we are not dealing with a purely white noise as will be shown in Fig. 6.9b. Using the estimated values of the rms acceleration, a′, and 〈(d 2 b)2〉, we can define a noise-to-signal ratio \(b' f_s^2/a'=11.9\), where we have defined \(b'=\sqrt {\langle (d^2b)^2 \rangle /6}\) by analogy with the white noise case. When considering the noise weight on the velocity signals, we of course find a much smaller magnitude b′f s∕v′ = 0.14 as it is only a first-order derivative (v′ being the rms of the velocity estimated with this method).

(a) Evolution of \( \left \langle (dx)^2 \right \rangle \) with (dt∕τ a)2, where τ a = 8.1 ms is the particle acceleration time scale (integral of the positive part of the particle acceleration auto-correlation function). The dashed line is a linear fit over the range 0 < dt∕τ a ≤ 0.25. (b) Evolution of \( \left \langle (d^2x)^2 \right \rangle \) with dt∕τ a. The dashed-dotted and dashed lines are fourth and sixth order fits (α + β(dt∕τ a)4 resp. α + β(dt∕τ a)4 + γ(dt∕τ a)6) over the range 0 < dt∕τ a ≤ 0.62. The insets are zooms on low values of dt∕τ a

(a) Auto-correlation functions of the velocity or acceleration (b) estimated from the proposed method (dashed line) and directly computed by differentiating the position signal obtained by PTV (continuous line). The insets are zooms on the low values of τ. The fit ranges used to obtain the functions are the same that used in Fig. 6.8. The dashed-dotted line in figure (b) is the correlation estimated from filtered trajectories using a Gaussian kernel \(K=A_w\exp (-t^2/2w^2)\), where w = 12 points and A w is a normalisation factor

Figure 6.9 shows the auto-correlation function of both the velocity and acceleration estimated with the proposed method, compared to the raw functions. With the low level of noise in this configuration, the velocity is almost unbiased and both functions are indistinguishable except for the first points of the raw function that are offset by the noise. On the second-order derivative, it can be observed in Fig. 6.9b that the raw acceleration auto-correlation function is biased for more than the three first points only (see inset). This is because the noise is not white but has a short correlation time compared to the signal. Combined with the finite duration of the trajectories, the raw correlation function is noisy over the whole range of time lags τ. This curve is plotted together with the one estimated with the method, fitting the coefficient up to dt = 5 ms which corresponds to 30% correlation loss in acceleration signals (same range as in Fig. 6.8b, but the precise choice is not critical). Although the signal-to-noise ratio is poor, the estimated correlation function seems to be following the median line between the peaks caused by noise and crosses zero at the location that seems to be indicated by the raw function. It is also close to the auto-correlation function from Ref. [43], estimated by filtering the data with a Gaussian kernel \(K=A_w\exp (-t^2/2w^2)\) (with w = 12 points and a compact support of width 2w, A w is a normalisation factor). It should be stressed that the value w = 12 was chosen arbitrarily as a compromise between suppressing oscillations at small lags without altering too much the shape of the function at larger lags.

With the new method, we compute an acceleration time scale τ a = 8.1 ms and an acceleration magnitude a′ = 12.4 mmss−2, which is close to the values τ a = 8.8 ms and a′ = 12.9 mmss−2 found for the filtered data [43]. However, in the latter case, the value of a′ depends strongly on the choice of the filter width w, so that one usually estimates a′ by computing it for different filter widths which can then allow to extrapolate a best estimate value (as introduced in [5]).

6.4.1.2 Discussion

The present de-noising method estimates moments and auto-correlation functions of experimental signal derivatives. This method relies on two main assumptions:

-

1.

The signal is correlated on a longer time scale than the noise.

-

2.

The sampling frequency, f s, is high enough so that the first and second derivatives of the signal can be computed by taking increments over several (N) points.

We have tested the method in the context of Lagrangian particle tracks in turbulence for which the noise is correlated on times much shorter than the signal, considering both first- and second-order derivatives of a time dependent signal. The results are in good agreement to what is obtained by classical filtering processes which require a long bias study specific to the data type [5, 45], and we believe them to be more accurate. The method avoids subjective tuning of the filter width and choice of filter type while yielding unbiased quantities by requiring data fits in an appropriate range. While the fit range is still an adjustable parameter, we observed its impact on the results to be smaller than when filtering the data. Another advantage of the method is an easy access to the noise magnitude. While building a new experimental setup, one can gather just enough statistics to converge second-order moments to estimate the noise magnitude and try and improve the setup iteratively.

6.4.2 Eulerian Structure Functions

6.4.2.1 Method

The method presented above can be extended to compute Eulerian statistics, such as structure functions, from the collection of tracks (that can be two-frame displacement vectors in PIV). From particle positions x, which are measured with some noise b (\(\mathbf {x}=\hat {\mathbf {x}} + \mathbf {b}\), where \(\hat {\mathbf {x}}\) are the actual positions), we define a 3D Lagrangian displacement field between two consecutive images taken at instants t and t + dt is then \(d \mathbf {x}= \mathbf {x}(t+d t)-\mathbf {x}(t)=d \hat {\mathbf {x}} +d \mathbf {b}\). This displacement field can be conditioned on a Cartesian grid so that its first moment

is computed in each bin of the grid to compute the mean flow \(\left \langle \hat {\mathbf {v}} \right \rangle \). We then compute the centred second-order moment of the displacement field

where the prime stands for fluctuating quantities. Note that this formula is easily extended to centred cross-component second-order moments which are linked to the components of Reynolds stress tensor in each point of the grid.

The de-noising strategy is applied to data obtained from a pair of images taken with standard PIV cameras, one experimental set corresponds to a single value of dt. The moments \(\left \langle d \mathbf {x}\right \rangle \) and \(\left \langle (d \mathbf {x})^{\prime 2}\right \rangle =\left \langle (d \mathbf {x} - \left \langle d \mathbf {x}\right \rangle )^2\right \rangle \) are then calculated for multiple experimental sets where images of the particles in the flow are collected at increasing values of dt. When the evolution of \(\left \langle (d \mathbf {x})^{\prime 2}\right \rangle \) with dt is fitted by a polynomial of the form c 1 dt 2 + c 2 in each bin, the leading coefficient is the field \(\left \langle \hat {\mathbf {v}}^{\prime 2}\right \rangle \). The third-order correction is negligible because dimensional analysis gives \(\left \langle \hat {\mathbf {v}}^{\prime 2}\right \rangle / \left \langle \hat {\mathbf {a}}' \cdot \hat {\mathbf {v}}'\right \rangle \tau _\eta \sim Re_\lambda \), where \(\tau _\eta = \sqrt {\nu /\varepsilon }\) is the dissipative time and Re λ is the Reynolds number at the Taylor length scale. In turbulent flows, \(\left \langle \hat {\mathbf {a}}' \cdot \hat {\mathbf {v}}'\right \rangle \) is well approximated by the dissipation rate ε. Taking dt smaller than the dissipative time ensures that the displacement field variance is well approximated. The advantage of this method is that it uses all the measurements taken at different values of dt without having to choose any particular dt, as would be done in a classical PIV experiment. And unlike PIV, there is no filtering of the data in the form of windowing.

This method can be extended to higher order moments of the displacement field, as well as to recover increment statistics, for example, the longitudinal second-order structure function of the velocity (\(\hat {S_2}=\left \langle [(\hat {\mathbf {v}}(\mathbf {x}+\mathbf {r})-\hat {\mathbf {v}}(\mathbf {x}))\cdot {\mathbf {e}}_r]^2\right \rangle \), with e r = d x∕|d x|), by fitting the evolution of \(\left \langle [(d \mathbf {x}(\mathbf {x}+\mathbf {r})-d\mathbf {x}(\mathbf {x}))\cdot {\mathbf {e}}_r]^2\right \rangle \) with a polynomial \(\hat {S_2}(|\mathbf {r}|)d t^2 + c_2\):

Note that the structure function computation does not require the conversion of displacements to Eulerian coordinates, but rather to bin the inter-particle distance |r|. This means that measuring structure functions is possible at arbitrarily small separations |r|, without any requirements on the Eulerian spatial binning. This method requires only a statistical convergence in the number of particles N at a certain range of inter-particle distance (a number that is proportional to N 2). This represents a significant advantage over methods for structure function computation that carry an associated increase in measurement noise at small separations |r|.

The second-order moment of the velocity fluctuations and second-order structure function are presented here as examples of what the expansion of statistical moments, combined with data collected at different dt can achieve. Higher order moments for the velocity fluctuations and higher order structure functions can be easily computed by this method with reduced noise, although they will contain residual noise from the computation of lower order moments (o(dt 3) terms above).

6.4.2.2 Results

Particle displacements measured in a homogeneous, isotropic turbulence experiment [33, 46] are used to demonstrate the validity and accuracy of the method. Two CMOS cameras with a resolution of 2048 × 1088 pixels were used in a stereoscopic arrangement. Images were collected in double-frame mode, separated by a time-step dt from 0.05τ η to 0.2τ η. Alternatively, using a very fast acquisition/illumination rate using high-speed camera and kHz pulsed lasers allows us to collect a single image sequence and then take a variable dt in the analysis by skipping an increasing number of images in the sequence. Measurements were obtained in a volume of 10 × 10 × 1 cm3 using a Nd:YAG laser. For each experiment, approximately 10,000 pairs of image sets per time-step (each set providing the 3D position of several hundred particles in the flow) were collected to ensure statistical convergence.

The different results for the longitudinal second-order structure function of d x (Fig. 6.10a) at different time-steps, dt, show a strong dependency on how the noise affects the signal for different values of dt. The displacement correlation plotted at fixed separations (five different values) are all quadratic in dt (Fig. 6.10b), showing that this approximation is robust for different levels of measurement noise. The trend c 1 dt 2 + c 2 from Eq. (6.11) is followed at different values of the separation |r|, with the positive values of c 2 being proportional to the variance of the noise (Eq. (6.10)). The quadratic coefficient c 1 is the second-order function of the velocity with the noise removed. The presence of the inertial range is highlighted by the 2/3 slope in Fig. 6.11a, over approximately one decade, in good agreement with the prediction of Kolmogorov for the second-order structure function in homogeneous isotropic turbulence (\(\hat {S_2}\sim \varepsilon ^{2/3}|\mathbf {r}|{ }^{2/3}\)) [47]. Turbulence variables extracted from velocity measurements would be subject to a significant level of uncertainty and inaccuracy (seen in Fig. 6.10a) if the noise were not removed by the method proposed here.

(a) Longitudinal second-order structure functions of the raw displacement field against the separation |r| normalised by the Kolmogorov length scale η for different values of dt i equally spaced from 0.05 to 0.2 τ η at Re λ = 291. (b) Same quantities but plotted at a given separation |r| (indicated by the vertical dashed lines on (a); ascending order is for different values of dt), as a function of the inter-frame time-step value dt. The lines are fits of the form c 1 dt 2 + c 2

Figure 6.11b shows the estimation of the dissipation rate of turbulent kinetic energy, \(\varepsilon _r=\hat {S_2}^{3/2}/|\mathbf {r}|\) for three different Reynolds numbers studied in this experimental implementation of this de-noising method. The plateau values obtained confirm the presence of the inertial range and their values correspond to the ensemble average of the local dissipation rate. The estimations of ε, as well as u′ (spatial average of the fluctuating velocity map), for different Reynolds numbers compare well with those in [33], obtained by 2D3C PIV, confirming the accuracy of the method. In fact, the values of u′ and ε are slightly lower than those obtained by PIV. This discrepancy can be explained, qualitatively, based on the physics of the measurements and the effect of the noise on these metrics when it is not eliminated from the displacement measurements. Previous velocity measurements in the same experiment, conducted by traditional PIV [33], corresponded well with the actual velocity measured with this technique, but with the noise variance retained. The structure function (and hence ε) measured with traditional techniques was also subject to this erroneous increase in the value due to the contribution of noise to the computation of this statistical value. Equation (6.11) shows that the term \(\left \langle [(d \mathbf {b}(\mathbf {x}+\mathbf {r})-d\mathbf {b}(\mathbf {x}))\cdot \mathbf {e_r}]^2\right \rangle \) will increase the value ε due to noise. To determine the importance of this term, it is expanded into \(4\left \langle {\mathbf {b}}^2\right \rangle (1-C_b(|\mathbf {r}|))\), where C b(|r|) is the noise spatial correlation, bounded between (−1, 1). Regardless of the value of C b, it will erroneously increase the value of the structure function yielding a higher value of ε. As the value of C b depends on spatial separation, it will not uniformly raise it for all values of |r| and the slope of the structure function may evolve with separation, making the value of ε noisier.

6.4.2.3 Discussion

The comparison of the flow statistics with a previous 2D3C PIV study [33] allows for the validation of the proposed method. In fact, the measurements show better results, with no need to tune arbitrary filtering parameters to remove noise (the interrogation window size, for instance). The only parameters that must be chosen for the method proposed here are the different values of dt that are accessible for a given flow and camera/illumination available, the form of the fit function, and finally the binning in space to compute the Eulerian average and fluctuating velocities (if so desired), and in separation distance to compute the structure function.

The values of dt are subject to two limitations. They must be high enough so that particles move more than the measurement error while keeping the large displacements associated with highest dt from interfering with the ability of the particle tracking algorithm to identify individual particles [48]. As mentioned, a maximum value of \(d t \lesssim \tau _\eta \) ensures that the third-order correction remains small, \(\left \langle \hat {\mathbf {v}}^{\prime 2}\right \rangle \big / \left \langle \hat {\mathbf {a}}' \cdot \hat {\mathbf {v}'}\right \rangle \tau _\eta \sim Re_\lambda \). This was verified in the present experimental setup and we found this correction to be negligible when compared to the second-order term. This was also the case for the structure function provided the separation lies in the inertial range |r|≫ η. In such cases, the best agreement between fit functions and the data overall was found when using a quadratic function of dt. As for the number of time-step values needed, the value of ε when using only the three larger values of dt was only 5% lower than when using all five datasets. Using only the lowest value and largest values of dt allowed for a simple calculation of ε that was only 2% higher than with the full experimental set.

The displacement vector field obtained from particle tracking in this multiple time-step method is computed in a Lagrangian frame of reference. To compute the values of \(\left \langle (d \mathbf {x})^2\right \rangle \) against dt, the displacement field must be binned into a spatial grid, converting it to an Eulerian frame of reference. Although the number of particles per image, or Eulerian grid cell, is relatively small in these PTV images, the velocity is estimated independently for each particle pair. Thus, the statistical convergence in the method is reached relatively quickly (without the need for a very large number of image pairs). The computation of the structure functions highlights this advantage. As pointed out above, the structure function could in principle be computed to arbitrarily small separation between particles. However, great care should be taken in doing so because: (1) it is difficult to achieve statistical convergence in finding particles with small separations; (2) the second and third-order terms in Eq. (6.11) are of the same order of magnitude when the separation is in the dissipation range (|r|∼ 10η). These reasons explain why an increase of the structure functions at small separations is observed in Fig. 6.10a.

6.5 Conclusions

We have presented recent developments in the characterisation of flows in laboratory experiments using particle tracking velocimetry, one of the most accurate techniques in experimental fluid mechanics. By tracking simultaneously hundreds of particles in 3D, it allows the experimentalist to address crucial questions related, for instance, to mixing and transport properties of flows.

The main aspects of particle tracking are addressed. A new optical calibration procedure based on a plane-by-plane transformation, without any camera model, is presented. It is at least as precise as Tsai model though more versatile as it naturally accounts for optical distortions and can be used in very complex configurations (such as Scheimpflug arrangement, for instance). Tracking algorithms are at the heart of PTV, and the practical implementation of two of their recent development is described: shadow particle tracking velocimetry using parallel light and trajectory reconstruction based on a four frames best estimate method (4BE) with improved initialisation. While the former was developed originally to access the size, orientation, or shape of the tracked particles, the latter is a natural extension of classical PTV setup and can be easily implemented as an add-on of any existing code.