Abstract

During a research survey, it is very important to quickly find suitable papers. It is common practice for researchers to select relevant papers by searching using query keywords, ranking those papers by citation number, and checking in order from the highest ranked papers. However, if a paper that had a query keyword as a non-primary word had many citations, it would hinder any attempt to quickly find the appropriate paper. We have already proposed a Focused Citation Count (FCC) that supports the finding of suitable papers by setting the number of citations as a more appropriate evaluation index by properly focusing on cited papers which are the sources of citation counts. In this study, we propose an improved method of FCC. Since FCC is easily affected by the size of the cited number, this proposal aims to reduce its characteristics. We evaluate the proposed method using actual paper data and try to confirm its effectiveness.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

When promoting their own research, researchers need to show their own research and superiority by studying related research. From an enormous number of academic papers, it is necessary for researchers to quickly and appropriately find existing studies related to their own research, that is, in a way that emphasizes the important papers. Studies of research facilities and researchers that we know as rivals are capable of checking papers, but there is always a need to further investigate related papers. Many academic paper databases provide a search function using query keywords, and it is possible to select papers according to the query. For example, Scopus provides citation numbers of papers obtained through searches as additional information for paper selection. Many papers are still selected, but since selected papers can be ranked using citation counts, many researchers check papers according to their ranking. It is necessary to check the author, title, and abstract and to obtain the main body of the paper if necessary to determine the relevance and importance of the research. The appropriate ranking of papers is an important technique that reduces waste. While there are some criticisms, the number of citations still holds an important position as a method of directly evaluating the value of a paper.

However, there is no guarantee that the number of citations is an appropriate criterion. Even if a paper has an unimportant word as a query keyword, many citations will give it a high evaluation. We need to read such a paper and remove it. Investigation of a paper will become more efficient if there is an evaluation index that is more appropriate than the number of citations.

We propose the Focused Citation Count (FCC) [9] that utilizes only appropriately cited papers in order to find other relevant research. We propose this method because we think that a paper cited from ones without appropriate content is inappropriate. Of course, such a judgment is not always easy to make. However, it is possible to make a statistical decision from many citations.

However, the FCC is easily affected by the size of the original number of citations. In the case of a paper with a large number of citations, even if the relevance is relatively small, the FCC value tends to be large. For example, we can simply show the relationship with the field you are looking for in a percentage and assume that it denotes the proportion of citations from that field. I would like to evaluate paper (A) when there is a paper (B) with a relation of 90% to paper (A) with a 10% relationship. However, if the citation number of paper (A) is 10 and the citation number of the paper (B) is 100, the FCC is 9 in paper (A) and 10 in the paper (B), and the evaluation is reversed.

In this study, we propose an enhanced method of FCC which improves this point. In order to strengthen the evaluation of relevance between the field under investigation and the thesis, we aim to reduce the influence of the number of citations by emphasizing the proportion of quotations from related fields. Experiments are carried out using actual thesis data, and the effectiveness of the proposed method is tested.

2 Focused Citation Count and Its Improvement

2.1 Focused Citation Count

FCC [9] is an evaluation index that modifies the number of citations to values useful for the proper selection of papers, by limiting the papers used for counting citations to only papers suitable for research purposes. Various methods are considered for restricting papers for this purpose, but in this study, we limit papers cited using query keywords used to search for papers. This is formulated as follows.

Let FP(q) be a set of papers selected by the query keyword q in the target where all papers are set as A. Next, let CP(p) be a set of papers citing the paper p. That is, \(PF(q)\subset A, CP(p)\subset A.\)

The total citation count cc(p) of paper p is: \(cc(p) = \left| CP(p) \right| .\)

Let CFP(p, q) be the paper set selected by the query keyword q out of the paper set CP(p) citing the paper p. That is, \( CFP(p,q) = CP(p) \cap FP(q). \)

The value fcc(p, q) of the FCC which is the evaluation index is obtained by Eq. (1).

2.2 Basic Idea of Improvement

Let us suppose that we are looking for related papers in a certain field. With respect to the research field of the papers citing the finished paper, I will show the proportion in which the field of both papers is the same with a simple numerical value. Suppose there is a paper (A) with a citation rate of 90% from the same field and a paper (B) with a rate of 10%. If the citation number of paper (A) is 10 and the citation number of paper (B) is 100, the FCC is 9 for paper (A) and 10 for paper (B). That is, the FCC of paper (B) is higher. However, from the viewpoint of expertise, I would like to evaluate whether paper (A) is more useful.

To make this possible, we propose using the ratio of citation counts from related fields for evaluation. In other words, since a paper with a high proportion of FCC to CC (citation count) is a paper that ought to be highly evaluated, we think that an evaluation combined with FCC and percentages could solve the problem described in this section. Proposals for concrete calculation methods are provided in the next section.

2.3 Improvement of Focused Citation Count

First, we calculate the ratio r of the number of CP(p) and CFP(p, q) as the rate of the relevant field of the paper citing paper p. That is,

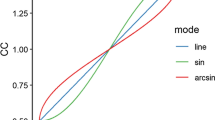

In order to combine the FCC with the r as weight, we propose a new evaluation index, Revised FCC (RFCC), by multiplying F(p, q) by r(p, q) raised to \(\alpha \). The value rfcc(p, q) of the RFCC is given by the following equation:

However, \(\alpha \) is a parameter for adjusting the weight. In this paper, we set \(\alpha =1\).

3 Evaluation

3.1 Gathering Paper Data and Basic Analysis

In this section, we explain the collection method of the papers used for analysis, and conduct basic analyses of the data.

The data was gathered from Scopus. In this experiment, “bibliometrics” was chosen as a query keyword, and 10,186 papers published from 1976 to 2015 were gathered using search API. This data is written in JSON format. The items are as follows: “Content Type,” “Search identifier,” “Complete author list,” “Resource identifiers,” “Abstract Text,” “First author,” “Page range,” “SCOPUS Cited-by URI,” “Result URL,” “Document identifier,” “Publication date,” “Source title,” “Article title,” “Cited by count,” “ISSN,” “Issue number,” and “Volume.”

Although 4,533 papers have no citation at all, there is also a paper with 4,483 citations. They are cited from 258,332 papers in total. Table 1 shows a part of the number of citations of the papers. It has the number of citations of the top 10 papers, and of the bottom 10 papers.

Figure 1 plots the data of Table 1 as a log-log graph. In this graph, the frequency of citations seems to follow the power law.

Next, I gathered papers citing each paper stated in the acquired JSON data. Information on citing papers is posted in the URL indicated in the “link” of a JSON item. Since information on the URL cannot be acquired with the API, we obtained the HTML file using the “wget” of the UNIX command. Information on 20 citing papers is published in one HTML file. However, as can be seen from Table 1, there were also citing papers exceeding 20. Regarding those, it was necessary to repeatedly obtain HTML files while changing the “wget” parameters.

Since there were 4,533 papers without a citation, we obtained the papers that cited 13,667 papers. The information on 116,743 papers on 13,667 cited papers was obtained through the execution of 10,719 wget. There were 62,265 papers when duplication was removed.

3.2 Evaluation of Revised Focused Citation Count

For the papers collected by Sect. 3.1, the following three rankings were applied. First, the top 20 papers are shown in Table 2 with the citation count indicated by Scopus. Additionally, Table 3 shows the top 20 papers in a ranking using FCC [9]. Furthermore, Table 4 shows the top 20 papers in the Revised Focused Citation Count (RFCC) proposed in this study. In these tables, “Paper ID” is the paper ID (eid) of Scopus. By replacing the \({<}\)eid\({>}\) part of the URLFootnote 1 with this ID, it is possible to acquire the data of the corresponding thesis.

The extraction precision of the top 20 papers extracted by CC, FCC, and RFCC was evaluated as follows. Two testers judged whether they were appropriate as papers on “bibliometrics.” They gave a rating of “1” to appropriate papers and “0” to inappropriate papers. We totaled the judgments as the number of votes, and calculated Precision@N.

The result of the CC is shown in Table 2, the result of the FCC is shown in Table 3, and the result of the RFCC is shown in Table 4. Moreover, the graph of Precision@N is shown in Fig. 2.

The result of CC was shown in Table 2, the result of FCC was shown in Table 3, and the result of RFCC was shown in Table 4. Moreover, the graph of Precision@N is shown in Fig. 2.

4 Discussion

Despite having good results in preliminary sample experiments, the RFCC could not demonstrate performance exceeding the FCC in the experiment conducted in this study. This seems to be due to the fact that the FCC shows sufficient performance, rather than the RFCC not working well. Moreover, in this experiment, using a single query keyword “bibliometrics” with a wide range of subjects may be a contributing factor.

Depending on the academic field to be searched and the kind and number of query keywords, the performance of the FCC and RFCC may differ from the results of this study. Furthermore, having two testers is a small number and may lead to biased results. As a future task, it is necessary to conduct more detailed evaluation experiments.

5 Related Work

Objective evaluation of a paper by the contents is difficult. Therefore, it is common to instead perform an evaluation using the following data of a paper: the evaluation of the journal in which the paper was published, and the paper’s citation count.

The Journal Impact Factor (JIF) [2, 3] is one of the most popular evaluation measures of scientific journals. Thomson Reuters updates and provides the scores for journals in annual Journal Citation Reports (JCR). The JIF of a journal describes the citation counts of an average paper published in the journal. JIF is considered to be the de facto standard to evaluate not only a journal, but also a researcher, research organization, and paper.

However, some problems were pointed out with JIF. Pudovkin and Garfield [8] pointed out that JIF is not appropriate to be used as a measure to compare different disciplines. Modification of JIF by normalization has been studied as one of the key issues [6, 8]. Bergstrom proposed EigenFactor [1] which solves the problem of JIF by adjusting the weight of citations. Nakatoh et al. [7] proposed to combine the relatedness of a journal to the user’s query with JIF.

The citation count is used as more direct criteria of a paper. However, some researchers have pointed out problems. Martin [5] reported that the citation count gained support as criteria. Kostoff [4] showed that the citation count as a criterion of research evaluation has the following characteristics: (a) theoretical correlation is not necessarily between a citing paper and the cited paper, (b) incorrect research may be cited, (c) a methodical paper is easy to cite, and (d) the citation count will be raised by self-citation.

Our concern is the citation count not as criteria of performance evaluation, but as criteria for selecting papers. However, the problem noted by Kostoff is common to both. The solution proposed by our study is including the quality of a citation in the evaluation. Eliminating the citation from papers with a low relation decreases the influence of the problems of (a) and (b). We think that this enables the selection of more appropriate papers.

6 Conclusion

When conducting a research survey, it is very important to quickly find suitable papers. It is common practice by researchers to select relevant papers by searching with query keywords, ranking papers by citation number, and checking in order from the highest ranked papers. However, if a paper that had a query keyword as a non-primary word has many citations, it would impede a researcher’s ability to quickly find the appropriate paper. We have already proposed a Focused Citation Count (FCC) that supports the finding of suitable papers by setting the number of citations as a more appropriate evaluation index by properly focusing on cited papers which are the sources of citation counts. In this study, we proposed an improved method of FCC. An empirical evaluation of “bibliometrics” related to 10,186 papers showed that the FCC method was effective, but that the proposed RFCC method was not as effective. As a future task, a more detailed evaluation is necessary.

Notes

- 1.

http://www.scopus.com/record/display.url?eid=<eid>&origin=resultslist.

References

Bergstrom, C.: Eigenfactor: measuring the value and prestige of scholarly journals. Coll. Res. Libr. News 68(5), 314–316 (2007)

Garfield, E.: Citation indexes for science. Science 122(3159), 108–111 (1955)

Garfield, E.: The history and meaning of the journal impact factor. J. Am. Med. Assoc. 295(1), 90–93 (2006)

Kostoff, R.N.: Performance measures for government-sponsored research: overview and background. Scientometrics 36(3), 281–292 (1996)

Martin, B.R.: The use of multiple indicators in the assessment of basic research. Scientometrics 36(3), 343–362 (1996)

Marshakova-Shaikevich, I.: The standard impact factor as an evaluation tool of science fields and scientific journals. Scientometrics 35(2), 283–290 (1996)

Nakatoh, T., Nakanishi, H., Hirokawa, S.: Journal impact factor revised with focused view. In: Neves-Silva, R., Jain, L.C., Howlett, R.J. (eds.) Intelligent Decision Technologies. SIST, vol. 39, pp. 471–481. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-19857-6_40

Pudovkin, A.I., Garfield, E.: Rank-normalized impact factor: a way to compare journal performance across subject categories. Proc. ASIST Annu. Meet. 41, 507–515 (2004)

Nakatoh, T., Nakanishi, H., Baba, K., Hirokawa, S.: Focused citation count: a combined measure of relevancy and quality. In: Proceedings of the 4th International Congress on Advanced Applied Informatics (IIAI-AAI), pp. 166-170 (2015). https://doi.org/10.1109/IIAI-AAI.2015.282

Acknowledgement

This work was partially supported by JSPS KAKENHI Grant Number 18K11990.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix

Appendix

List of papers used for evaluation.

-

2-s2.0-0000742089 Garfield E.: “Is citation analysis a legitimate evaluation tool?,” Scientometrics, 1(4), pp.359–375, (1979)

-

2-s2.0-0032256758 Kleinberg Jon M.: “Authoritative sources in a hyperlinked environment,” Proceedings of the Annual ACM-SIAM Symposium on Discrete Algorithms, pp.668–677, (1998)

-

2-s2.0-0033584703 Garfield E.: “Journal impact factor: A brief review,” CMAJ, 161(8), pp.979–980, (1999)

-

2-s2.0-0033721503 Broder A., Kumar R., Maghoul F., Raghavan P., Rajagopalan S., Stata R., Tomkins A., Wiener J.: “Graph structure in the Web,” Computer Networks, 33(1), pp.309–320, (2000)

-

2-s2.0-0035021707 Gambhir S.S., Czernin J., Schwimmer J., Silverman D.H.S., Coleman R.E., Phelps M.E.: “A tabulated summary of the FDG PET literature,” Journal of Nuclear Medicine, 42(5 SUPPL.), pp.1S–93S, (2001)

-

2-s2.0-0035079213 Cronin B.: “Bibliometrics and beyond: some thoughts on web-based citation analysis,” Journal of Information Science, 27(1), pp.1–7, (2001)

-

2-s2.0-0035981386 Borgman C.L., Furner J.: “Scholarly communication and bibliometrics,” Annual Review of Information Science and Technology, 36 3 72 (2002)

-

2-s2.0-10944272139 Adamic L.A., Adar E.: “Friends and neighbors on the Web,” Social Networks, 25(3), pp.211–230, (2003)

-

2-s2.0-15444370852 Weingart P.: “Impact of bibliometrics upon the science system: Inadvertent consequences?,” Scientometrics, 62(1), pp.117–131, (2005)

-

2-s2.0-15444371414 Van Raan A.F.J.: “Fatal attraction: Conceptual and methodological problems in the ranking of universities by bibliometric methods,” Scientometrics, 62(1), pp.133–143, (2005)

-

2-s2.0-22144431885 Ioannidis J.P.A.: “Contradicted and initially stronger effects in highly cited clinical research,” Journal of the American Medical Association, 294(2), pp.218–228, (2005)

-

2-s2.0-0000994704 Lane P.J., Lubatkin M.: “Relative absorptive capacity and interorganizational learning,” Strategic Management Journal, 19(5), pp.461–477, (1998)

-

2-s2.0-27144502742 Lee S., Bozeman B.: “The impact of research collaboration on scientific productivity,” Social Studies of Science, 35(5), pp.673–702, (2005)

-

2-s2.0-27844542383 Larson R.R.: “Bibliometrics of the world wide web: An exploratory analysis of the intellectual structure of cyberspace,” Proceedings of the ASIS Annual Meeting, 33 71 78 (1996)

-

2-s2.0-29944438252 Garfield E.: “The history and meaning of the journal impact factor,” Journal of the American Medical Association, 295(1), pp.90–93, (2006)

-

2-s2.0-3142699221 King D.A.: “The scientific impact of nations,” Nature, 430(6997), pp.311–316, (2004)

-

2-s2.0-33748074153 Daim T.U., Rueda G., Martin H., Gerdsri P.: “Forecasting emerging technologies: Use of bibliometrics and patent analysis,” Technological Forecasting and Social Change, 73(8), pp.981–1012, (2006)

-

2-s2.0-33846834126 Josang A., Ismail R., Boyd C.: “A survey of trust and reputation systems for online service provision,” Decision Support Systems, 43(2), pp.618–644, (2007)

-

2-s2.0-34249309179 Wuchty S., Jones B.F., Uzzi B.: “The increasing dominance of teams in production of knowledge,” Science, 316(5827), pp.1036–1039, (2007)

-

2-s2.0-36849014874 Meho L.I., Yang K.: “Impact of data sources on citation counts and rankings of LIS faculty: Web of science versus scopus and google scholar,” Journal of the American Society for Information Science and Technology, 58(13), pp.2105–2125, (2007)

-

2-s2.0-37649007281 Hirsch J.E.: “Does the h index have predictive power?,” Proceedings of the National Academy of Sciences of the United States of America, 104(49), pp.19193–19198, (2007)

-

2-s2.0-38549127657 Bornmann L., Daniel H.: “What do citation counts measure? A review of studies on citing behavior,” Journal of Documentation, 64(1), pp.45–80, (2008)

-

2-s2.0-0001327392 Narin F.: “Patent bibliometrics,” Scientometrics, 30(1), pp.147–155, (1994)

-

2-s2.0-39549086558 Rosvall M., Bergstrom C.T.: “Maps of random walks on complex networks reveal community structure,” Proceedings of the National Academy of Sciences of the United States of America, 105(4), pp.1118–1123, (2008)

-

2-s2.0-4243148480 Kleinberg J.M.: “Authoritative sources in a hyperlinked environment,” Journal of the ACM, 46(5), pp.604–632, (1999)

-

2-s2.0-47749113622 Abraham C., Michie S.: “A Taxonomy of Behavior Change Techniques Used in Interventions,” Health Psychology, 27(3), pp.379–387, (2008)

-

2-s2.0-70149091772 Kulkarni A.V., Aziz B., Shams I., Busse J.W.: “Comparisons of citations in web of science, Scopus, and Google Scholar for articles published in general medical journals,” JAMA - Journal of the American Medical Association, 302(10), pp.1092–1096, (2009)

-

2-s2.0-78650989464 Chen Y.-C., Yeh H.-Y., Wu J.-C., Haschler I., Chen T.-J., Wetter T.: “Taiwan’s National Health Insurance Research Database: Administrative health care database as study object in bibliometrics,” Scientometrics, 86(2), pp.365–380, (2011)

-

2-s2.0-78951494661 D’Angelo C.A., Giuffrida C., Abramo G.: “A heuristic approach to author name disambiguation in bibliometrics databases for large-scale research assessments,” Journal of the American Society for Information Science and Technology, 62(2), pp.257–269, (2011)

-

2-s2.0-84859429914 Aguillo I.F.: “Is Google Scholar useful for bibliometrics? A webometric analysis,” Scientometrics, 91(2), pp.343–351, (2012)

-

2-s2.0-84903289127 Rodriguez A., Laio A.: “Clustering by fast search and find of density peaks,” Science, 344(6191), pp.1492–1496, (2014)

-

2-s2.0-84928532180 Hicks D., Wouters P., Waltman L., De Rijcke S., Rafols I.: “Bibliometrics: The Leiden Manifesto for research metrics,” Nature, 520(7548), pp.429–431, (2015)

-

2-s2.0-84989591524 MacRoberts M.H., MacRoberts B.R.: “Problems of citation analysis: A critical review,” Journal of the American Society for Information Science, 40(5), pp.342–349, (1989)

-

2-s2.0-0011001807 Hood W.W., Wilson C.S.: “The literature of bibliometrics, scientometrics, and informetrics,” Scientometrics, 52(2), pp.291–314, (2001)

-

2-s2.0-85008492587 Todeschini R., Consonni V.: “Molecular Descriptors for Chemoinformatics,” Molecular Descriptors for Chemoinformatics, 2 1 252 (2010)

-

2-s2.0-0016996037 Price D.D.S.: “A general theory of bibliometric and other cumulative advantage processes,” Journal of the American Society for Information Science, 27(5), pp.292–306, (1976)

-

2-s2.0-0029783235 Garfield E.: “Fortnightly Review: How can impact factors be improved?,” BMJ, 313(7054), pp.411–413, (1996)

-

2-s2.0-0030960168 Wenneras C., Wold A.: “Nepotism and sexism in peer-review,” Nature, 387(6631), pp.341–343, (1997)

-

2-s2.0-0031049280 Seglen P.O.: “Why the impact factor of journals should not be used for evaluating research,” British Medical Journal, 314(7079), pp.498–502, (1997)

-

2-s2.0-0032047559 White H.D., McCain K.W.: “Visualizing a discipline: An author co-citation analysis of information science, 1972-1995,” Journal of the American Society for Information Science, 49(4), pp.327–355, (1998)

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Nakatoh, T., Hirokawa, S. (2019). Evaluation Index to Find Relevant Papers: Improvement of Focused Citation Count. In: Yamamoto, S., Mori, H. (eds) Human Interface and the Management of Information. Visual Information and Knowledge Management. HCII 2019. Lecture Notes in Computer Science(), vol 11569. Springer, Cham. https://doi.org/10.1007/978-3-030-22660-2_41

Download citation

DOI: https://doi.org/10.1007/978-3-030-22660-2_41

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22659-6

Online ISBN: 978-3-030-22660-2

eBook Packages: Computer ScienceComputer Science (R0)