Abstract

With the development of self-driving technology, more diverse in-car interaction design has become an essential trend for the future. To enhance the interaction experience between the driver and the back-seat passenger, we propose the concept of “AEIC” (Augmented Emoji in Car). By augmenting existing in-car equipment with additional information, we can offer multi-modal ways of interaction inside the car, so that the driver and the back-seat passenger can understand each other better while respecting safe driving regulations. By modifying the current central rear-view mirror, the mood of the back-seat passenger can be detected and judged by means of facial recognition. Through sound, light and Emoji, the passenger’s current emotional state is fed back to the driver. To test the design, we constructed a highly scalable vehicle driving simulator platform to collect interaction data for user research. We invited 20 participants to take part in our prototype test. By observing them in the simulated driving scenes we designed on the platform, we could collect their interactive feedback precisely. We then conducted in-depth interviews with the users about their experience of interacting in the simulated scenario to obtain reference information for further design iterations.

On the basis of these results we concluded that: a. With the change of light source color and the addition of Emoji (symbolic expression), the driver can better understand the back-seat passenger’s emotional state. b. The information interface, which is designed around attention span and short-term memory, can optimize information output in the car without increasing the cognitive load. However, we found significant differences in the interactive feedback of different user combinations in the scenario simulation. Accordingly, the design should be adapted to different users. At the same time, we believe that experiments based on the vehicle driving simulator platform are conducive to preliminary basic research on in-car interaction. By amplifying interaction feedback in the driving scenes, designers can identify potential design needs more conveniently. Therefore, we will continue to use the platform for simulation experiments on the design prototype in follow-up research, to gradually close the gap in the interaction between the front-seat driver and the back-seat passenger.

1 Introduction

1.1 In-car Interaction Change Caused by the Development of Self-driving Technology

With the popularization of L1 and L2 self-driving technology, the driving system is gradually taking over driving tasks [18]. The relationship among drivers, passengers and the system has changed fundamentally [11], which means that the role of the driving system will gradually change from a tool to a collaborative partner [7]. Predictably, as driving tasks decrease, there will be more communication between drivers and passengers in the car. Therefore, the design for back-seat passengers deserves more attention.

However, to date the design for back-seat passengers is mainly based on players and game consoles. Compared with other aspects of the car, many auto manufacturers offer only limited possibilities of personalization and adaptability for the back seat [3]. This is because current in-car interaction design tries to avoid interference from the back seat to the front. However, such an idea should not apply to future self-driving cars. We should work on eliminating the gap between the front-seat driver and the back-seat passenger, and offer more opportunities for interaction between them.

1.2 In-car Interaction: Difficulties in Communication

Driving can be regarded as a social activity, since in most cases we do not drive alone: passengers may sometimes distract the driver, or help the driver complete a task [3]. Multiple people sharing the same space in a car can likewise be considered a social activity. However, the spatial arrangement of the car makes it harder for the people in it to observe each other. For example, the seat design creates visual barriers that prevent people from seeing each other’s faces, which makes in-car social communication fragile and prone to distrust. The interior space of a car can be divided into the following areas: driver, front-seat passenger and rear seat. Because of the spatial barrier, it is generally agreed that there is little communication between the people in the front and back seats [9]. Moreover, their conversation experience while driving also differs noticeably: it is difficult for people in the car to truly understand what the other side said, as a result of the driving noise, the acoustic characteristics of the in-car space and the facial orientation of passengers [2].

1.3 Difficulties in Emotional Understanding of Language Dialogue

Human communication is naturally influenced by emotions [14]. Darwin believed that the face is the most important medium of emotional expression for human beings. Facial expressions can express all the predominant emotions, as well as every subtle change in them [16]. People are used to integrating facial and vocal information to recognize emotions, and lacking either of them may lead to misunderstandings [10]. However, the barrier between the front and back seats makes it impossible for people to judge others’ emotions from their faces, which makes it more likely that in-car interaction develops in a negative direction.

Accordingly, we put forward the concept of AEIC (Augmented Emoji in Car). By displaying simple symbols that represent the expressions of the back-seat passengers in the central rear-view mirror, we can enhance the driver’s comprehension of the back-seat passengers’ emotions without increasing the cognitive load. By exploring the interactive scenes of communication among people in a car and studying their pain points, we expect to make up for the “neglected” back-seat scenarios in the current in-car interaction field and to propose some valuable design directions and targets. We made a prototype according to the design, invited users to experiment with it, and drew conclusions by analyzing the data and interviews to support further design.

2 Related Work

In this section, we present an overview of related work on the driver, the front-seat passenger and the rear seat. These works either enhance the information exchange among people in a car or provide activities for passengers to improve the riding experience:

nICE is an in-car game played by everyone in a car, including the driver, in accordance with their capabilities [17]. However, it is mainly designed to pass the time on long-distance trips rather than to enhance emotional communication.

HomeCar Organiser is a connected system that enables families to coordinate schedules, activities, and artifacts between the home and activities placed in the car [8]. However, it is mainly used for sharing information among families and eliminating the boundary between the home and family car to create a seamless experience rather than enhancing emotional connections.

RiddleRide investigated the activities and the technology usage in the rear seat as a social and physical space by means of a cultural probing study [9], but the interaction between the rear seat and the driver remains to be explored.

Backseat Games is an in-car augmented reality game [6] designed to entertain children during long journeys. Whereas it mainly focuses on the passengers’ experience rather than the driver’s participation, we extend our research to the relationship between passengers and drivers.

Although these works are based on requirements similar to those of Emo-view, such as improving passengers’ experience or facilitating communication among people in a car, very few of them have resolved the problems of emotional communication during a ride. So far, little attention has been devoted to studying and testing the emotional communication between front and rear seats together with the driving efficiency of drivers. We focus on the driver, testing and comparing prototypes to explore how to enhance in-car emotional communication and achieve good emotional interaction and driving experience on the premise of a reasonable distribution of the driver’s attention.

3 Concept

Based on our design motivation and previous research, we believe that the interaction design for the car should be based on the following concepts:

1. Our design should allow drivers to complete our experiments while doing the main task without completely changing their visual focus, so the prototype needs to be at a level similar to the driver’s eyes. By locating the information in the driver’s line of sight, we can minimize his/her scanning distance from the road to the mirror [4]. On the basis of this concept, we believe that we can modify the existing equipment, rather than adding new pieces of equipment and interactions, to reduce the driver’s visual burden.

2. We consider simple Emoji, color and voice interaction to be more suitable and reliable main features for driving. Given that in-car interaction must adapt to an era of highly developed automation, and that it is difficult for traditional user interfaces to produce a coherent user experience in this complex environment [12], we should consider multi-modal interaction as the main method. We should not simply add more information on the screen, but adopt a simpler and more effective way to enhance the information in the car.

3. Taking safety into account, the key is to preserve the attention the driver ought to devote to the driving task once information display and interaction control are added [17]. Based on Wickens’ research on multiple resource theory [22] and the fixed capacity hypothesis [24], it can be concluded that a shortage of cognitive resources is more likely in a mobile environment [5]. Therefore, the design should present the least information that requires the driver’s attention [15]. We suggest that by limiting the number of focus points, the visual scanning time can be reduced so that the driver can focus on the main task (the driving task) [21], thereby enhancing the in-car information output without increasing the cognitive load.

4 Design

We consider that the key to in-car interaction is the way emotions are communicated and expressed, since an improper understanding of emotions may lead to obstacles in verbal communication [19]. Using Emoji properly, especially positive ones, is beneficial to the formation of interpersonal relationships and to cognitive understanding. They not only help participants express emotions and manage relationships, but also function like words to help people understand information [20]. Based on the concept of AEIC, we modified the central rear-view mirror to serve as an output interface that augments information with Emoji.

Our design, called Emo-view, detects the emotional state of the back-seat passenger by facial recognition and displays an Emoji on the left side of the central rear-view mirror to show the passenger’s emotion. The name Emo-view combines Emoji and the view of the driver. Following the concept of AEIC, it lets drivers look in their rear-view mirror as in usual driving while the information there is enhanced rather than increased.

5 Prototype and Test

5.1 Introduction of Prototype

This prototype is assembled from Microduino’s mCookie suite, and its display function is realized by an LED Strip and a Dot Matrix-Color module (see Fig. 1).

The LED Strip is attached to the bottom left side of the rear-view mirror, close to the driver. It turns green if the back-seat passenger is in a positive mood, yellow for a neutral mood, and red for a negative mood.

The Dot Matrix-Color is installed on the left side of the rear-view mirror and can display 6 emotions: calm, happy, excited, bored, lost and angry.
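The display logic described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the prototype firmware: the text states the three mood colors and the six emotions, but not which emotion counts as positive, neutral or negative, so the `VALENCE` table below is an assumption, as are all function and variable names.

```python
# Colors of the LED strip per mood, as stated in the text.
MOOD_COLOR = {"positive": "green", "neutral": "yellow", "negative": "red"}

# ASSUMPTION: the paper does not say which emotion belongs to which mood.
# This grouping is a hypothetical choice for illustration only.
VALENCE = {
    "calm": "neutral",
    "happy": "positive",
    "excited": "positive",
    "bored": "negative",
    "lost": "negative",
    "angry": "negative",
}


def display_state(emotion: str) -> dict:
    """Return what the mirror would show for one detected emotion."""
    if emotion not in VALENCE:
        raise ValueError(f"unsupported emotion: {emotion!r}")
    mood = VALENCE[emotion]
    # The dot matrix shows the Emoji; the LED strip shows the mood color.
    return {"emoji": emotion, "led_color": MOOD_COLOR[mood]}


if __name__ == "__main__":
    for name in VALENCE:
        print(name, display_state(name))
```

Keeping the emotion-to-mood grouping in a single table makes it easy to swap in a different grouping once the intended mapping is known.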

5.2 The Experimental Platform

For the evaluation of interaction design and user experience (UX), using laboratory equipment to dynamically capture and record the subjective performance of real users is particularly important and effective, which is also an advantage of field and laboratory experiments [23]. However, an early field test is unrealistic for an early prototype, due to high cost and low efficiency, lack of controlled conditions, the difficulty of making prototypes, high risk to participants and so on. Especially in experiments on automotive interaction technology, the safety risks to participants are greatly magnified. Therefore, these studies mainly rely on laboratory experiments [1].

We therefore decided to build a driving simulator platform for product tests and user experience experiments in the laboratory (see Fig. 2). The driving platform is equipped with large screens and speakers to simulate different scenarios, and with placeholders for a steering wheel, a touch screen and a rear-view mirror to support diverse tests.

To simulate the main task of driving, it is also necessary to record the accuracy and response time of the users’ driving tasks. The platform contains a dedicated driving task simulation system that implements the main task through a pedal and animations on the screen. If a red light (or any custom event) appears in these animations, the driver needs to step on the pedal, and the system records the reaction time. In this experiment the red light appears at random, and the driver needs to step on the pedal within 3 s after it appears.
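The scoring of this main task can be sketched as follows. This is a minimal sketch under stated assumptions, not the platform's actual software: only the 3 s deadline comes from the text, while the `Trial` record, the function names, and the rule that early or late presses count as misses are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

DEADLINE_S = 3.0  # from the text: the pedal must be pressed within 3 s


@dataclass
class Trial:
    light_on: float         # time the red light appeared (s)
    pedal: Optional[float]  # time of the pedal press (s), None if no press


def reaction_time(trial: Trial) -> Optional[float]:
    """Reaction time in seconds, or None if the trial counts as a miss."""
    if trial.pedal is None:
        return None
    rt = trial.pedal - trial.light_on
    # ASSUMPTION: a press before the light or after the deadline is a miss.
    if rt < 0 or rt > DEADLINE_S:
        return None
    return rt


def summarize(trials: List[Trial]) -> dict:
    """Accuracy (share of valid responses) and mean reaction time."""
    rts = [rt for rt in map(reaction_time, trials) if rt is not None]
    accuracy = len(rts) / len(trials) if trials else 0.0
    mean_rt = sum(rts) / len(rts) if rts else None
    return {"accuracy": accuracy, "mean_rt": mean_rt}


if __name__ == "__main__":
    # Made-up example trials: two valid presses, one late press, one miss.
    demo = [Trial(10.0, 10.5), Trial(20.0, 24.0),
            Trial(30.0, None), Trial(40.0, 41.5)]
    print(summarize(demo))
```

Separating the per-trial rule from the summary keeps the deadline and the miss policy in one place, so either can be changed without touching the aggregation.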

5.3 Introduction of Experiments

Research Through Design.

User-centered design aims to develop products that meet users’ needs; the point is to identify those needs and provide solutions to them. We adopt the concept of research through design and take our design as the experimental subject to explore users’ needs through experiments.

Our experiment was conducted in Haidian District, Beijing, China. We ran 8 groups of experiments, each consisting of 1 driver and 1 back-seat passenger, for a total of 16 people (10 females and 6 males) aged 19 to 30.

Wizard of Oz (WoZ) is a technique for prototyping and experimenting dynamically with a system’s performance that uses a human in the design loop. It was originally developed by HCI researchers in the area of speech and natural language interfaces as a means to understand how to design systems before the underlying speech recognition or response generation systems were mature [13].

We use Wizard of Oz to understand the real-time characteristics of our design for in-car interaction, so that we can obtain responses during the driving simulation. A human “wizard” plays the driving environment and sound, the back-seat passenger acts out his/her emotions vocally, and the driver takes part in a natural language dialogue for observation.

Each group of users has 2 free conversations of 5 min each in the test. In every conversation, the back-seat passenger needs to act out 3 kinds of emotions according to prompts, and the driver should always treat the simulated driving as the main task while communicating with the back-seat passenger. The difference between the 2 tests is that the first is run without Emo-view and the second with Emo-view.

6 Analysis

After the experiments, we analyzed the results through data analysis and video analysis, and conducted unstructured interviews with each group of subjects.

1. First of all, we analyzed the overall response time of the main task with and without Emo-view. A one-way analysis of variance showed that the response time of the main task with Emo-view (M = 180.89, SD = 55.51) was significantly shorter than that without Emo-view (M = 211.26, SD = 96.45), F(1,297) = 46.327, p < 0.001. Next, by observing the distribution of reaction times, we found that in most cases the reaction time of the main task is more stable in the early stage and fluctuates greatly in the later stage. Therefore, we compared the response times of the first 1/3 and the last 1/3 of the experiments. Without Emo-view, the response time of the main task in the early stage (M = 182.47, SD = 58.52) was significantly shorter than that in the later stage (M = 245.83, SD = 120.64), F(1,98) = 40.375, p < 0.001; with Emo-view, the response time in the early stage (M = 184.36, SD = 52.13) did not differ significantly (F(1,98) = 0.073, p = 0.787) from that in the later stage (M = 183.12, SD = +6. 1.12). These results suggest that a fatigue effect appears in the driver as the experiment proceeds, which leads to longer reaction times without Emo-view. In contrast, with the help of Emo-view, the driver’s cognitive processing of the back-seat passenger’s emotion becomes easier, which helps relieve the fatigue effect.

-

2.

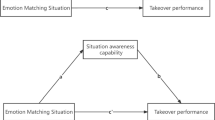

We take the driver as the main object of observation and record the performance of each group on the timeline, which includes the change of the back-seat passenger’s emotion, speaking, the point-in-time when the driver looks at Emo-view, and the reaction time he/she used to complete the main task. Here are 3 typical timelines (Fig. 3).

We analyzed the timelines of each group, mainly the situation of drivers watching Emo-view:

a. Drivers’ reaction time in the driving task shows no obvious relationship with mood changes or dialogue, with or without Emo-view.

b. When drivers look at Emo-view, 67.5% of these glances occur while they speak and 27.3% while they listen. It can be concluded that drivers need to pay more attention to the emotion of the back-seat passenger when expressing their views.

c. 78.8% of the cases in which drivers look at Emo-view while they talk occurred at the beginning (32.7%) and the end (46.1%). We conclude that drivers need to confirm the effect of their conversation by observing the passenger’s emotion when they start and end a topic.

d. 61.9% of the cases in which drivers look at Emo-view while they listen occurred in the middle period. It can be assumed that in most cases, drivers feel they need to rely on Emo-view to judge the emotion of the back-seat passenger while listening.

e. When drivers look at Emo-view, only 36.3% of the cases occur when Emo-view is switching emotion, which leads us to conclude that drivers do not often notice the switching, so it can be inferred that Emo-view does not cause excessive cognitive load.

3. The content of our interviews is aimed at the cognitive difference between drivers and back-seat passengers about the emotion of the dialogue, and at the driver’s actual user experience. Each group was interviewed for about 3–5 min after the experiment. The results can be summarized as follows:

a. With the help of Emo-view, the driver’s perception of the back-seat passenger’s emotion becomes more accurate and reliable, and the emotional cognition of both sides becomes more consistent.

b. Drivers think that with the help of Emo-view, they can change the topic according to the emotional state of the back-seat passenger, so as to steer the conversation toward a more positive one.

c. Emo-view helps drivers understand the back-seat passenger more easily and reduces their distraction cost. Some drivers said that without Emo-view, thinking about the emotion of the back-seat passenger would affect their driving task.

d. Drivers generally believe that Emo-view does not cause much psychological burden. They only need to look at it when necessary and can ignore it the rest of the time.

The analysis showed that Emo-view neither took up the driver’s attention resources nor added to the driver’s cognitive load. Users generally believe that Emo-view is helpful for in-car communication, and the driver relies more on Emo-view when speaking.
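The F statistics reported in the first analysis step come from a one-way analysis of variance, which compares the variation between conditions with the variation inside each condition. As a reminder of what that statistic measures, a minimal pure-Python sketch follows. It is not the authors' analysis script, the function name is hypothetical, and the sample values in the usage example are made up for illustration. With two conditions, degrees of freedom of (1, 297) would correspond to 299 observations in total.

```python
from typing import Sequence, Tuple


def one_way_anova(*groups: Sequence[float]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or any(len(g) == 0 for g in groups) or n_total <= k:
        raise ValueError("need at least two non-empty groups and n > k")

    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    # Variation of the observations around their own group mean.
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, means) for x in g)
    if ss_within == 0:
        raise ValueError("no within-group variation; F is undefined")

    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Made-up reaction times for two conditions, for illustration only.
    with_display = [1.0, 2.0, 3.0]
    without_display = [2.0, 3.0, 4.0]
    print(one_way_anova(with_display, without_display))
```

The p-value then follows from the F distribution with the returned degrees of freedom; a statistics package would normally be used for that step.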

7 Discussion and Future Work

According to the test with real users, Emo-view can be positive and effective even without changing the spatial arrangement of the car, that is, even though the driver cannot face the back-seat passenger directly. We considered comprehensively whether it helps the people in the front and back seats understand each other better, in which cases the driver needs it most, and whether it affects driving safety.

First of all, the data lead us to conclude that Emo-view does not take up the driver’s attention, nor does it add to his/her cognitive load. Since the interaction design of cars has always been accompanied by severe safety problems, we condense the driver’s perception of emotion into a simple Emoji based on attention span theory and let the interaction happen at the driver’s most comfortable eye-level line of sight, that is, the central rear-view mirror. The driver therefore does not need to perform any additional interactive operation when looking at Emo-view: in normal driving situations, he/she can glance at it and understand the emotion it expresses at the same moment.

Secondly, on the basis of video analysis and interviews, we concluded that drivers need Emo-view. They generally believe that with the help of Emo-view, they can communicate better with the back-seat passenger and manage to bring the conversation to positive topics. Without Emo-view, however, some drivers may neglect the driving task because they are thinking deeply about the passenger’s emotion. We also find that Emo-view can be a reference for drivers’ in-car conversation: they subconsciously look at Emo-view to confirm whether they have “said something wrong” when they want to start or end a conversation.

However, Emo-view doesn’t take into account the relationship between the people in the dialogue. We think there should be different responses depending on whether they are parents, friends, couples, or strangers, since not everyone wants the driver to observe their emotions, and some people prefer to show only positive emotions. Therefore, future work will focus on the following aspects:

a. To make Emo-view applicable to people in different relationships, we will set up several Emo-view modes and invite participants in different relationships to take part in experiments, in order to estimate how interpersonal relationships should inform the settings of the Emo-view modes.

b. Back-seat passengers could adjust Emo-view themselves during the experiment, for example to avoid displaying emotions on certain topics. Emo-view could gradually learn the expression habits of back-seat passengers after several uses, so that it can be customized to adapt to any back-seat user.

c. Particular emphasis should be placed on parent-child users. The car is the most frequent means of travel for children, and when the people in the front and back seats are parent and child, the driver pays much more attention to the back-seat passenger than to an ordinary one. Thus, we believe that parent-child users need Emo-view more than ordinary ones.

In view of the encouraging results of the laboratory experiments presented so far, we will continue testing in a higher-fidelity environment in the future, as well as in a real car. Although laboratory testing has many advantages and is safer, we believe that the real driving environment can help us make new discoveries about the effect of Emo-view in use.

8 Implications for In-car Interaction

Our research explored and expanded the possibilities of interaction between the front and back seats. We believe that the primary issue of in-car interaction is “how to make the communication between front and back seats smoother”. To improve the situation, we conducted a survey and located the main problem in the emotional understanding among the people in the car. The experiments demonstrate that Emo-view is effective and needed, and that it does not distract the driver but rather supports his/her concentration on driving tasks. We believe that our main design findings are as follows:

First, presenting the back-seat passenger’s emotional expression based on the concept of AEIC can indeed lead the communication between the front and the back seats to a positive state. Drivers can become well accustomed to using Emo-view as a reference for conversation when expressing their views.

Second, minimizing the number of focal points and the amount of information based on attention span theory can almost eliminate the extra cognitive resources the driver allocates beyond the driving task. The data also show that drivers’ observation of Emo-view can actually lighten the cognitive burden of judging back-seat emotions.

Third, modifying existing equipment to display information in it can minimize the driver’s scanning distance, thereby reducing operating difficulty and the driver’s visual burden.

Last, for in-car interaction experiments, laboratory testing would be a better choice. An indoor driving simulator platform can not only guarantee participants’ safety, but also allow researchers to customize many scenarios and variable conditions, and observe more detailed interactive data of subjects.

9 Conclusion

In order to adapt to the development of self-driving, we consider that the car, as a social space, will see notable changes in in-car interaction. We therefore designed Emo-view to assist communication between the front and back seats. With the help of Emo-view, the driver can easily observe the emotional state of the back-seat passenger in the rear-view mirror and have a more active dialogue with him/her. In this paper, we used Arduino to build a simple prototype, set up 6 emotional states, and built a driving simulator platform to run tests by means of Wizard of Oz.

The good results of Emo-view make us confident about the concept of AEIC. From the results obtained on the indoor driving simulator platform, it can be concluded that if we pay attention to both cognitive load theory and human-machine interaction design theory, we can enhance the in-car information output to drivers without increasing the cognitive load, ensuring safe driving while improving the dialogue experience between the front and back seats.

Future work will focus on the comprehension of emotions and interaction between the front and back seats. We will not only study the applicability of Emo-view in various other situations, but also explore a range of other ways of interaction, to gradually fill the gap in in-car interaction design for the front and back seats.

References

Soro, A., Rakotonirainy, A., Schroeter, R., Wollstädter, S.: Using augmented video to test in-car user experiences of context analog HUDs. In: Adjunct Proceedings of the 6th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, pp. 1–6. ACM, New York (2014). https://doi.org/10.1145/2667239.2667302

Mahr, A., Pentcheva, M., Müller, C.: Towards system-mediated car passenger communication. In: Proceedings of the 1st International Conference on Automotive User Interfaces and Interactive Vehicular Applications, pp. 79–80. ACM, New York (2009). https://doi.org/10.1145/1620509.1620525

Meschtscherjakov, A., et al.: Active corners: collaborative in-car interaction design. In: Proceedings of the 2016 ACM Conference on Designing Interactive Systems, pp. 1136–1147. ACM, New York (2016). https://doi.org/10.1145/2901790.2901872

Meschtscherjakov, A., Wilfinger, D., Gridling, N., Neureiter, K., Tscheligi, M.: Capture the car!: qualitative in-situ methods to grasp the automotive context. In: Proceedings of the 3rd International Conference on Automotive User Interfaces and Interactive Vehicular Applications, pp. 105–112. ACM, New York (2011). https://doi.org/10.1145/2381416.2381434

Oulasvirta, A., Tamminen, S., Roto, V., Kuorelahti, J.: Interaction in 4-second bursts: the fragmented nature of attentional resources in mobile HCI. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 919–928. ACM, New York (2005). https://doi.org/10.1145/1054972.1055101

Brown, B., Laurier, E.: The trouble with autopilots: assisted and autonomous driving on the social road. In: Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, pp. 416–429. ACM, New York (2017). https://doi.org/10.1145/3025453.3025462

Brunnberg, L., Hulterström, K.: Designing for physical interaction and contingent encounters in a mobile gaming situation (2003)

Cycil, C., Eardley, R., Perry, M.: The HomeCar organiser: designing for blurring home-car boundaries. In: Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication, pp. 955–962. ACM Press, New York (2014). https://doi.org/10.1145/2638728.2641556

Wilfinger, D., Meschtscherjakov, A., Murer, M., Osswald, S., Tscheligi, M.: Are we there yet? A probing study to inform design for the rear seat of family cars. In: Campos, P., Graham, N., Jorge, J., Nunes, N., Palanque, P., Winckler, M. (eds.) INTERACT 2011. LNCS, vol. 6947, pp. 657–674. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-23771-3_48

De Gelder, G.B., Böcker, K.B., Tuomainen, J., Hensen, M., Vroomen, J.: The combined perception of emotion from voice and face: early interaction revealed by human electric brain responses. Neurosci. Lett. 260(2), 133–136 (1999). https://doi.org/10.1016/S0304-3940(98)00963-X

Flemisch, F., Heesen, M., Hesse, T., Kelsch, J., Schieben, A., Beller, J.: Towards a dynamic balance between humans and automation: authority, ability, responsibility and control in shared and cooperative control situations. Cogn. Technol. Work 14(1), 3–18 (2012). https://doi.org/10.1007/s10111-011-0191-6

Pettersson, I., Ju, W.: Design techniques for exploring automotive interaction in the drive towards automation. In: Proceedings of the 2017 Conference on Designing Interactive Systems, pp. 147–160. ACM, New York (2017). https://doi.org/10.1145/3064663.3064666

Kelley, J.F.: An empirical methodology for writing user-friendly natural language computer applications. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 193–196. ACM, New York (1983). https://doi.org/10.1145/800045.801609

Lubis, N., Sakti, S., Neubig, G., Toda, T., Purwarianti, A., Nakamura, S.: Emotion and its triggers in human spoken dialogue: recognition and analysis. In: Rudnicky, A., Raux, A., Lane, I., Misu, T. (eds.) Situated Dialog in Speech-Based Human-Computer Interaction. SCT, pp. 103–110. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-21834-2_10

Sodhi, M., Reimer, B., Cohen, J.L., Vastenburg, E., Kaars, R., Kirschenbaum, S.: On-road driver eye movement tracking using head-mounted devices. In: Proceedings of the 2002 Symposium on Eye Tracking Research & Applications, pp. 61–68. ACM, New York (2002). https://doi.org/10.1145/507072.507086

Newmark, C.: Charles Darwin: the expression of the emotions in man and animals. In: Senge, K., Schützeichel, R. (eds.) Hauptwerke der Emotionssoziologie, pp. 85–88. Springer, Wiesbaden (2013). https://doi.org/10.1007/978-3-531-93439-6_11

Broy, N., et al.: A cooperative in-car game for heterogeneous players. In: Proceedings of the 3rd International Conference on Automotive User Interfaces and Interactive Vehicular Applications, pp. 167–176. ACM Press, New York (2011). https://doi.org/10.1145/2381416.2381443

van der Heiden, R.M.A., Iqbal, S.T., Janssen, C.P.: Priming drivers before handover in semi-autonomous cars. In: Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, pp. 392–404. ACM, New York (2017). https://doi.org/10.1145/3025453.3025507

Saarni, C., Buckley, M.: Children’s understanding of emotion communication in families. Marriage Fam. Rev. 34(3–4), 213–242 (2002). https://doi.org/10.1300/J002v34n03_02

Tang, Y., Hew, K.F.: Emoticon, emoji, and sticker use in computer-mediated communications: understanding its communicative function, impact, user behavior, and motive. In: Deng, L., Ma, W.W.K., Fong, C.W.R. (eds.) New Media for Educational Change. ECTY, pp. 191–201. Springer, Singapore (2018). https://doi.org/10.1007/978-981-10-8896-4_16

Mancuso, V.: Take me home: designing safer in-vehicle navigation devices. In: Extended Abstracts on Human Factors in Computing Systems, CHI 2009, pp. 4591–4596. ACM, New York (2009). https://doi.org/10.1145/1520340.1520705

Wickens, C.D.: Processing resources in attention. In: Parasuraman, R., Davies, R. (eds.) Varieties of Attention. Academic Press, New York (1984)

Sun, X., May, A.: A comparison of field-based and lab-based experiments to evaluate user experience of personalised mobile devices. Adv. Hum.-Comput. Int. 2013, 1, Article no. 2 (2013). https://doi.org/10.1155/2013/619767

Young, M.S., Stanton, N.A.: Malleable attentional resources theory: a new explanation for the effects of mental underload on performance. Hum. Factors: J. Hum. Factors Ergon. Soc. 44(3), 365–375 (2002). https://doi.org/10.1518/0018720024497709

Acknowledgments

This research is a phased achievement of the “Smart R&D Design System for Professional Technology” project and is supported by the Special Project of the National Key R&D Program, “Research and Application Demonstration of Full Chain Collaborative Innovation Incubation Service Platform Based on Internet+” (Question ID: 2017YFB1402000), and its sub-project “Study on the Construction of Incubation Service Platforms for Professional Technology Fields” (Subject No. 2017YFB1402004).

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Chao, C., He, X., Fu, Z. (2019). Emo-View: Convey the Emotion of the Back-Seat Passenger with an Emoji in Rear-View Mirror to the Driver. In: Rau, PL. (eds) Cross-Cultural Design. Culture and Society. HCII 2019. Lecture Notes in Computer Science(), vol 11577. Springer, Cham. https://doi.org/10.1007/978-3-030-22580-3_9

DOI: https://doi.org/10.1007/978-3-030-22580-3_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22579-7

Online ISBN: 978-3-030-22580-3

eBook Packages: Computer Science (R0)