Abstract

The current paper examined the construct of Autonomous Agent Teammate-Likeness (AAT). Advanced technologies have the potential to serve as teammates rather than as mere tools during tasks involving human partners, yet little research has been done to quantify the factors that shape teaming perceptions in a human-machine domain. The current study used a large online sample to test a set of candidate measures related to the AAT model. Psychometric analyses were used to gauge their utility. The results demonstrate initial support for the validity of the AAT model.

1 Introduction

Advancements in autonomy are beginning to allow humans to partner with machines in order to accomplish work tasks in various settings. These technological advances necessitate novel approaches toward human-machine interaction. Machines are believed to help enable efficiency and in some cases decision superiority and adaptability, yet in dynamic environments such as those that characterize the real world, communicating and understanding intent and maintaining shared awareness are critical for collaboration [1]. As human-agent teaming (HAT) becomes more prevalent in the field and as a research topic [1, 2], the need to understand humans’ psychological perceptions of the intelligent agent (technological work partner) is increasingly important, especially in terms of the agent’s perceived role, which may impact trust processes and, ultimately, HAT effectiveness. Specifically, it remains unclear how humans actually perceive intelligent agents and how consistent these perceptions are with existing taxonomies found in the psychology of teams. In particular, as autonomous technology becomes more sophisticated, do human operators come to see their intelligent partners more as teammates than as tools, and what are the key factors leading to such perceptions? These global research questions are the focal topics of interest that we begin to address in the present study.

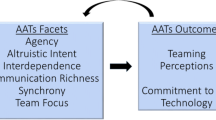

The present research builds on nascent theoretical foundations on the construct of autonomous agent teammate-likeness (AAT) and initiates a direct test of a recently published conceptual model of AAT [3], which defines the AAT construct and presents a comprehensive model of its proposed antecedents and outcomes (see Fig. 1). The overarching goal is to contribute to the extant literature on HAT by conducting the first known empirical tests of the AAT construct with the explicit goal of developing a valid measure of AAT, an important advancement from which researchers can further progress research on HAT.

2 Theoretical Background and Current Research

2.1 Human-Machine Teaming

Human-machine teaming (HMT) is a relatively recent phenomenon, and research on the process of HAT is still nascent. According to Chen and Barnes [4], an agent refers to an entity that has some level of autonomy, the ability to sense and act on the surrounding environment, and the ability and authority to direct activities toward goals. While teams of people have been an omnipresent topic of inquiry within the management literature for decades, the concept of human-agent teams is novel [1,2,3,4].

Nass et al. [5,6,7] were among the first to systematically theorize and empirically study perceptions of intelligent agents as potential teammates. Around the time personal computing was gaining popularity, Nass et al. [7] conducted five studies demonstrating that interactions with non-humans (computers) are indeed fundamentally social. Interestingly, a recent study had a similar finding, discovering that people felt uncomfortable giving a robot a response that would be potentially upsetting or distressing to the robot [8].

Nass, Fogg and Moon [6] conducted a study focusing on people’s perceptions of personal computers (PCs) specifically as teammates, with the understanding that PCs have become more than merely tools (e.g., dialogue partners, secretaries). These authors attempted to trigger team perceptions with manipulations built around team formation (as evidenced in social psychology literature). Overall, the study demonstrated that at least some human-computer teaming processes mirror human-human teaming processes.

Groom and Nass [5] argued that, according to universal definitions of what constitutes a successful team (e.g., sharing mental models, subjugating individual needs for group needs, trusting one another), human-robot partners based on current technology lack “humanness” and thus cannot yet be considered teammates. When implementing human-agent teams, Groom and Nass recommend a complementary model or approach in which intelligent agents and human partners compensate for one another’s weaknesses, rather than forcibly substituting an intelligent agent for a human partner. However, an important caveat about the Groom and Nass paper is that these authors focused on objective features of teams (based on common definitions of teams) rather than humans’ perceptions of their partners. While intelligent agents may not yet have the full capabilities of a human partner, Wynne and Lyons [3] contend that humans may very well come to perceive “teammate-likeness” in their counterparts, as suggested by Nass et al.’s [6] earlier work. In fact, “humanness” was the most frequently cited requirement for an extant instrumental relationship to be viewed as a teammate-based relationship, based on recent analyses of qualitative data [9]. Additionally, a recent article found that humans may have different attitudes toward their human teammates versus their robot teammates in heterogeneous teams [10].

The aforementioned early research on HMT sets the stage for human-machine interaction in future applications. Yet, human perception of machine teammates is still relatively unexplored and thus unknown. The present research builds on emergent theory on AAT (Wynne and Lyons [3]) in the quest to understand psychological perceptions of machine teammates and the consequences of these perceptions.

2.2 Autonomous Agent Teammate-Likeness

Autonomous agent teammate-likeness (AAT) is defined as, “the extent to which a human operator perceives and identifies an autonomous, intelligent agent partner as a highly altruistic, benevolent, interdependent, emotive, communicative, and synchronized agentic teammate, rather than simply an instrumental tool” [3; p. 3]. Wynne and Lyons [3] conceptualized the AAT construct to have six distinct dimensions (reflecting each aspect of the definition above), two general clusters of antecedents, and two general clusters of outcomes (as well as boundary conditions, contextual factors, and a feedback loop), as illustrated in Fig. 1. Antecedents can be grouped into characteristics of each of the partners in the human-agent team (i.e., human operator characteristics and intelligent agent characteristics). Outcomes that theoretically may manifest from human operators’ psychological perceptions of AAT can be grouped into cognitive-affective outcomes and behavioral outcomes.

The AAT construct is predicated on the notion that shared awareness among teammates is central to effective teamwork. Collaboration is driven by shared awareness of intent between team members [11]. While this is often noted in the domain of collaborating human partners, it is also highly relevant to collaboration between humans and intelligent agents [2]. The AAT construct helps to align team process factors to the domain of human-machine teaming.

The AAT taxonomy has support from a recent qualitative study. Lyons et al. [9] asked an online sample to describe the reasons why they view an intelligent technology (one that they have experience with) as a teammate versus as a tool. These open-ended responses were then coded by a set of trained coders. The overall results of the study showed that the six dimensions of the AAT model featured prominently in participants’ rationale for teammate versus tool explanations. Humanness was also heavily mentioned, yet it is plausible that this dimension represents a higher-order combination of the other six dimensions. In sum, it appears that these six factors (perceived agentic capability, perceived benevolence/altruistic intent, perceived task interdependence, task-independent relationship-building, richness of communication, and synchronized mental model; see Table 1 for construct definitions) might, in theory, explain the variance in human operators’ perceptions of AAT, or the perception that the intelligent agent is more like a teammate than like a tool. The current research begins the process of answering Wynne and Lyons’ [3] call to advance the AAT model with empirical examination of the various proximal and distal outcomes likely to emanate from perceptions of AAT. Specifically, we attempt to extend research in this line of inquiry by (1) developing and (2) validating appropriate methods to measure and confirm these dimensions. After a robust instrument is created and tested, it can be used in the future to empirically examine the relationships proposed in Wynne and Lyons [3].

2.3 Current Research: Purpose

In sum, despite the proliferation of autonomous technology with which humans partner to accomplish tasks in the field (in military, industrial, and civilian/consumer contexts), to date there is not yet an existing validated instrument that measures perceptions of HMT. Until recently, the concept of AAT has been noticeably absent in the research literature. The purpose of the current paper, therefore, is to begin to establish the validity of a novel measure of teammate perceptions (AAT scale, or AATS) to be used to measure humans’ perceptions of their technological work partners.

The immediate next step in this stream of research, thus, was to conduct a set of empirical studies as an initial test of the AAT model. The present paper is the first of such studies. Specifically, activities in the present research involve building a psychological instrument with which AAT can be measured. The current paper describes data collection and findings from an online sample. We expect the measure to demonstrate strong psychometric properties. Specifically, we expect results to demonstrate initial support for the proposed factor structure.

3 Method

3.1 Analytical Approach

The methodology in the present research is based on a well-established process of psychological scale development [12]. This process, which represents professional best practices and guidelines on scale development, involves six primary steps or stages: (1) Item Generation, (2) Questionnaire Administration, (3) Initial Item Reduction, (4) Confirmatory Factor Analysis, (5) Convergent/Discriminant Validity, and (6) Replication. According to Hinkin [12], first, a large number of items are written by researchers based on prior theory and/or empirical findings (e.g., quantitative or qualitative results from pilot studies), such that the breadth of the construct of interest is exhaustively represented. These items are then put into a questionnaire (survey), along with measures of constructs that are purported to be related and unrelated to the construct of interest (see step 5), and administered to a sample of respondents in order to gather data on the items and test them. Next, the performance/quality of the items is tested using various item analyses (e.g., inspection of means, item-total correlations) and scale analyses (exploratory factor analysis, or EFA); the pool of items is reduced by removing items that demonstrate poor psychometric properties. At this point, the researchers may choose to re-test the revised pool of items with another data collection from a new sample of respondents. The performance of the resulting scale is tested using scale analyses, and a confirmatory factor analysis (CFA) is conducted to confirm the proposed factor structure of the developing scale. Then, researchers attempt to demonstrate construct validity by examining relationships between constructs that are purported to be related (convergent validity) and unrelated (discriminant validity) to the construct of interest. Finally, the items and scale may be refined further and then the process is replicated with data collected from a new sample of respondents to confirm the results.

As a first step in the AAT scale development process, grounded in theory [3] and informed by preliminary empirical findings (focus group data and qualitative data collection results) [9], item content was written by the present authors in order to develop a first draft of the AATS. Specifically, we generated an exhaustive list of potential scale items through a process of writing, reviewing, revising, and refining a large number of items.

The next major step in this process involved a quantitative data collection (i.e., survey with closed-ended questions administered to individuals online) to develop the AATS, whereby the theoretically derived initial AAT measure was tested with scale and item analyses, to be followed by an iterative series of revision, additional data collection, re-testing, and refinement until the scale shows adequate psychometric properties. The present paper (Study 1) describes the quantitative data collection (i.e., Step 2 in Hinkin’s guidelines); notably, a follow-up study (Study 2) is currently in progress to validate the final, developed version of the scale and replicate prior results (i.e., Steps 3–6 in Hinkin’s guidelines).

3.2 Participants and Procedure

To collect data for Study 1, a participant recruitment advertisement was placed on Amazon Mechanical Turk (MTurk) using a third-party web-based application called TurkPrime, which is a data collection platform designed by and for behavioral science researchers [13]. MTurk is an online system that connects researchers—and others seeking participants to engage in certain tasks (“requesters”)—with individuals (“workers”) who wish to participate in various activities for remuneration (“Human Intelligence Tasks” or HITs), such as filling out survey-based questionnaires for research purposes. The recruitment advertisement solicited individuals who had some type of experience with a sophisticated technology (autonomous system, smart technology, technological partner, etc.). In order to be eligible to participate, respondents were required to be at least 18 years of age and a U.S. citizen.

The survey questionnaire was created using Qualtrics, a web-based surveying platform, and then it was administered to workers via a web link to the Qualtrics survey within the MTurk HIT. The survey had been previously pilot-tested with internal subject matter experts to ensure it was error-free and functioned as designed upon launch. Two hundred thirty-two participants responded to all items and completed the study.

3.3 Measures

Autonomous Agent Teammate-Likeness.

AAT was measured with a comprehensive initial list of items created by the current authors, based on the AAT theoretical framework [3]. Participants were first asked to think of and describe the single most technologically advanced equipment that they work with “as part of your job or one that you use regularly in your life.” Then they were asked to rate their agreement with a series of statements (each item representing one of the six AAT dimensions) using a 5-point Likert-type scale ranging from “Strongly Disagree” to “Strongly Agree.” The agency scale had 10 items (α = .75), benevolence had 10 items (α = .91), communication (α = .71) and interdependence (α = .83) each started with 12 items, synchrony used 8 items (α = .80), and relationship-building started with 13 items (α = .89). The content of the actual items used in the study is listed in the tables below (Tables 3, 4, 5, 6, 7 and 8).
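For readers less familiar with the reliability coefficients (α) reported above, the following is a minimal sketch of how Cronbach's alpha is conventionally computed from item-level responses. It is offered as an illustration only and is not the analysis code used in the study; the function name `cronbach_alpha` and the toy responses are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    n_items = len(responses[0])
    if n_items < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(n_items)]
    # Sample variance of each respondent's summed scale score.
    total_var = variance([sum(row) for row in responses])
    return (n_items / (n_items - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 5-point Likert responses (4 respondents x 3 items), not study data.
toy = [
    [1, 2, 1],
    [2, 3, 2],
    [4, 4, 5],
    [5, 5, 4],
]
print(round(cronbach_alpha(toy), 3))
```

Alpha rises as items covary more strongly relative to their individual variances, which is why it is read as an index of internal consistency.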

4 Results

Item analyses (e.g., means) and scale analyses (e.g., EFA) were conducted to assess the psychometric quality of the initial AATS instrument. Means, reliabilities, and intercorrelations across each of the six AAT dimensions are shown in Table 2. All dimension subscales demonstrated adequate internal consistency. Means tended to be near the mid-point of each scale, indicating that respondents perceived a moderate level of AAT in their interactions with their respective technological partners. Thus, a fairly high base rate of AAT perceptions was evidenced.
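The item analyses mentioned here (item means and item-total correlations) can be sketched as follows. This is an illustrative example under stated assumptions, not the authors' procedure: the "corrected" form of the item-total correlation (each item against the sum of the remaining items), the .30 cutoff, and the toy data are all assumptions introduced for the sketch.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def corrected_item_total(responses):
    """Correlate each item with the sum of the other items in the scale."""
    n_items = len(responses[0])
    result = []
    for i in range(n_items):
        item = [row[i] for row in responses]
        rest = [sum(row) - row[i] for row in responses]
        result.append(pearson(item, rest))
    return result


# Hypothetical responses (5 respondents x 3 items); the third item is
# deliberately out of step with the other two.
toy = [
    [1, 2, 4],
    [2, 2, 1],
    [4, 5, 3],
    [5, 4, 2],
    [3, 3, 5],
]
means = [sum(row[i] for row in toy) / len(toy) for i in range(3)]
r_it = corrected_item_total(toy)
CUTOFF = 0.30  # assumed rule-of-thumb threshold, not taken from the paper
flagged = [i for i, r in enumerate(r_it) if r < CUTOFF]
print(means, [round(r, 2) for r in r_it], flagged)
```

Items flagged in this way would be candidates for content review rather than automatic removal.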

Additionally, the six AAT dimensions were fairly strongly and positively correlated with one another, indicating that the six dimensions were related. Notably, multicollinearity, which represents redundancy among variables, was not present, suggesting the six dimensions were indeed distinct constructs [14].
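One simple way to screen subscale scores for the kind of redundancy described here is to inspect pairwise correlations against a cutoff. The sketch below assumes a cutoff of |r| ≥ .90, a common rule of thumb; the paper does not state which criterion was applied. The dimension labels and scores are hypothetical, and `agency_copy` is a deliberately redundant variable added for illustration.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def flag_redundant_pairs(scores, threshold=0.90):
    """Return (name_a, name_b, r) for every pair with |r| >= threshold."""
    flagged = []
    for a, b in combinations(scores, 2):
        r = pearson(scores[a], scores[b])
        if abs(r) >= threshold:
            flagged.append((a, b, r))
    return flagged


# Hypothetical subscale means for five respondents, not study data.
scores = {
    "agency": [2.0, 3.0, 4.0, 3.5, 2.5],
    "benevolence": [2.5, 3.0, 3.5, 4.0, 2.0],
    "agency_copy": [2.1, 3.1, 4.1, 3.6, 2.6],
}
redundant = flag_redundant_pairs(scores)
print([(a, b, round(r, 2)) for a, b, r in redundant])
```

In this toy example, agency and benevolence are strongly related but fall below the cutoff, which parallels the paper's distinction between correlated and redundant dimensions.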

Results from the EFAs for each AAT dimension (shown in Tables 3, 4, 5, 6, 7 and 8) were used to identify and eliminate poorly performing items. First, principal components analysis (PCA) plots were produced and interpreted, which suggest how many “factors” are present in a particular scale for each dimension. Then, EFAs were conducted; factor loadings were inspected to determine how each item tended to fit in the factor(s). Generally, items that did not fit neatly into a factor (e.g., weak loadings, cross-loading) were flagged to be dropped from the scales, after their content was reviewed for fit with the construct in light of the empirical results. In theory, items that do not load well onto a factor (and thus do not fit well into the factor) express and represent something different than the other items in the factor [12]. These types of items are essentially interpreted by respondents as distinct from the other items in the scale. Refer to Tables 3 through 8 for EFA results (factor loadings) and item content for each of the six AAT dimensions.
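The flagging logic described above (weak loadings and cross-loadings) can be expressed as a simple rule applied to a loading matrix. The sketch below is illustrative only: the thresholds (.40 for a primary loading, .30 for a secondary loading) are assumed conventions, not values reported in the paper, and the item labels and loadings are hypothetical.

```python
def flag_items(loadings, primary_min=0.40, cross_max=0.30):
    """Flag items with a weak primary loading or a notable cross-loading.

    loadings maps an item label to a list of loadings, one per factor.
    Returns a dict mapping each flagged item to the reason it was flagged.
    """
    flagged = {}
    for item, row in loadings.items():
        # Sort absolute loadings so the strongest comes first.
        magnitudes = sorted((abs(v) for v in row), reverse=True)
        if magnitudes[0] < primary_min:
            flagged[item] = "weak loading"
        elif len(magnitudes) > 1 and magnitudes[1] >= cross_max:
            flagged[item] = "cross-loading"
    return flagged


# Hypothetical two-factor loading matrix, not taken from Tables 3 through 8.
example_loadings = {
    "item_a": [0.72, 0.10],
    "item_b": [0.35, 0.12],
    "item_c": [0.55, 0.48],
    "item_d": [0.08, 0.66],
}
flags = flag_items(example_loadings)
print(flags)
```

Consistent with the procedure described in the text, a flag here is a prompt to review item content against the construct, not a mechanical deletion rule.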

Upon inspection of analysis results, three of the original 10 items were dropped from the agency dimension; two of the original 10 items were dropped from the benevolence dimension; six of the original 12 items were dropped from the communication dimension; none of the original 12 items were dropped (all retained for further testing) from the interdependence dimension; three of the original 8 items were dropped from the synchrony dimension; and two of the original 13 items were dropped from the relationship-building (teaming) dimension.
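The item counts reported in this paragraph can be tallied as follows. The per-dimension numbers are taken directly from the text above; the dictionary names are introduced here only for illustration.

```python
# Initial item counts per dimension, as reported in the Measures section.
initial = {
    "agency": 10,
    "benevolence": 10,
    "communication": 12,
    "interdependence": 12,
    "synchrony": 8,
    "relationship-building": 13,
}
# Items dropped per dimension, as reported in the Results section.
dropped = {
    "agency": 3,
    "benevolence": 2,
    "communication": 6,
    "interdependence": 0,
    "synchrony": 3,
    "relationship-building": 2,
}
retained = {name: initial[name] - dropped[name] for name in initial}

for name, count in retained.items():
    print(f"{name}: {count} of {initial[name]} items retained")
print("total:", sum(retained.values()), "of", sum(initial.values()))
```

By this tally, the revised scale carries 49 of the original 65 items forward for further testing.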

In sum, results from the current study (Study 1) demonstrated initial support for the proposed factor structure of the AATS. Furthermore, item elimination from these data analyses resulted in a revised, shortened AAT scale to be further tested and validated in subsequent studies.

5 Discussion

Despite the proliferation of autonomous technology with which humans partner to accomplish tasks in the field (in military, industrial, and civilian/consumer contexts), to date there is not yet an existing validated instrument that measures perceptions of human-machine teaming (HMT). Until recently, the concept of AAT has been noticeably absent in the research literature. The purpose of the present research was to begin to establish the validity of a novel measure of teammate perceptions (AAT scale, or AATS) to be used to measure humans’ perceptions of their technological work partners.

In the current study, we conducted an initial test of the AAT model through the development of a psychometrically sound measure of AAT perceptions. Early results suggest not only that humans have fairly strong perceptions of AAT with regard to their technological partners, but also that the AAT model has some empirical support. Our results provide an early indication that the AATS generally reflects the AAT theoretical model and offer promise that it is a good measure of what it is purported to measure (i.e., AAT perceptions).

A theoretical review paper advanced the new AAT theory by introducing and defining the AAT construct, presenting a conceptual framework, and proffering a number of testable propositions to guide future research [3]. The purpose of the present research was to follow up on the seminal Wynne and Lyons [3] paper; specifically, the goal was to conduct a set of empirical studies as an initial test of the AAT model. Data are still being collected, but the eventual contributions will be (1) building a psychological instrument with which AAT can be measured and (2) validating the instrument by examining relationships with various germane constructs using a “vignette” or narrative-based study that presents different AAT scenarios to participants (low-teaming vs. high-teaming manipulations); the latter is an ongoing activity (i.e., Study 2). Human-machine teaming (HMT) is a relatively recent phenomenon, but it will continue to garner significant interest in the coming years, and the AATS will contribute to our knowledge of how people will come to trust and appropriately use increasingly human-like autonomous agent teammates.

5.1 Practical Implications: Applications and Future Directions

HMT is a priority topic for human factors psychology research, yet there does not exist a valid measure for evaluating the psychological manifestation of teammate perceptions. Thus, while many researchers tout “teaming” as the objective, few will truly understand whether “teaming” was accomplished unless measures related to teaming are employed in research designs. The AATS will be a highly useful tool to measure perceptions of technological work partners and how they may change over the course of the coming decades as autonomy evolves and becomes increasingly prevalent and relevant in military and other contexts. In the future, we hope that scientist-practitioners will (1) use the (soon-to-be) validated AATS to measure perceptions of AAT and (2) continue to test the elements of the AAT model [3] and implement the findings in applications of HMT.

Ultimately, we expect the final, refined version of the AATS to demonstrate adequate psychometric properties, as defined by gold standards outlined in the scale development literature [12], as well as strong construct validity. Once data collection and analysis for Study 2 are complete, we expect findings will be of great interest to researchers focused on trust, teams, and/or HMT. We expect that the outcome of this research will have great potential in spurring additional research on trust in autonomy. In particular, we exhort researchers in this space to build on these studies and test one (or more) of the many theoretical propositions in the Wynne and Lyons AAT conceptual model [3]. Future research should aim to further empirically test the model and investigate AAT relationships with affect, trust behaviors, commitment, team performance, and other phenomena relevant to HMT.

5.2 Conclusion

In conclusion, autonomous technologies—and human interaction with those technologies—are rapidly proliferating in both military and non-military environments. Despite the continuing growth of HMT and the emergence of HAT specifically, an understanding of how human operators perceive their machine partners is lacking. In particular, it remains unknown how humans perceive the AAT of their machine partners, or the extent to which the autonomous technology is more like a tool versus like a teammate. The overarching goal of this line of research is to understand how people perceive their machine partners over time, what affects synchrony and trust within human-agent teams, and what factors may influence HAT effectiveness. The AAT model is intended to motivate dialogue and future research in this expanding literature to support more effective HAT research and applications.

References

1. Chen, J.Y.C., Lakhmani, S.G., Stowers, K., Selkowitz, A., Wright, J., Barnes, M.: Situation awareness-based agent transparency and human-autonomy teaming effectiveness. Theoret. Issues Ergon. Sci. 19, 259–282 (2018)

2. Barnes, M.J., Lakhmani, S., Holder, E., Chen, J.Y.C.: Issues in human-agent communication. Aberdeen Proving Ground (MD): Army Research Laboratory, Report No: ARL-TR-8336 (2019)

3. Wynne, K.T., Lyons, J.B.: An integrative model of autonomous agent teammate-likeness. Theoret. Issues Ergon. Sci. 19, 353–374 (2018)

4. Chen, J.Y.C., Barnes, M.J.: Human-agent teaming for multirobot control: a review of the human factors issues. IEEE Trans. Hum.-Mach. Syst. 44, 13–29 (2014)

5. Groom, V., Nass, C.: Can robots be teammates? Benchmarks in human-robot teams. Interact. Stud. 8, 493–500 (2007)

6. Nass, C., Fogg, B.J., Moon, Y.: Can computers be teammates? Int. J. Hum.-Comput. Stud. 45, 669–678 (1996)

7. Nass, C., Steuer, J., Tauber, E.R.: Computers are social actors. In: Proceedings of Human Factors in Computing Systems, pp. 72–78 (1994)

8. Hamacher, A., Bianchi-Berthouze, N., Pipe, A.G., Eder, K.: Believing in BERT: using expressive communication to enhance trust and counteract operational error in physical human-robot interaction. In: Proceedings of IEEE International Symposium on Robot and Human Interaction Communication (RO-MAN). IEEE, New York (2016)

9. Lyons, J.B., Wynne, K.T., Mahoney, S., Roebke, M.A.: Trust and human-machine teaming: a qualitative study. In: Lawless, W., Mittu, R., Sofge, D., Moskowitz, I., Russell, S. (eds.) Artificial Intelligence for the Internet of Everything. Elsevier (in press)

10. Perelman, B.S., Evans, A.W., Schaefer, K.E., Hill, S.G.: Attitudes toward risk and effort tradeoffs in human-robot heterogeneous team operations. In: Proceedings of the Human Factors and Ergonomics Society Annual Meeting (2018)

11. Salas, E., Cooke, N.J., Rosen, M.A.: On teams, teamwork, and team performance: discoveries and developments. Hum. Factors 50, 540–547 (2008)

12. Hinkin, T.R.: A brief tutorial on the development of measures for use in survey questionnaires. Organ. Res. Methods 1, 104–121 (1998)

13. Litman, L., Robinson, J., Abberbock, T.: TurkPrime.com: a versatile crowdsourcing data acquisition platform for the behavioral sciences. Behav. Res. Methods 45, 1–10 (2016)

14. Tabachnick, B.G., Fidell, L.S.: Using Multivariate Statistics. Pearson Education, Boston (2007)

Funding

This research was supported by an AFRL Summer Faculty Fellowship award to Dr. Kevin Wynne and by Air Force Research Laboratory contract FA8650-16-D-6616-0001.

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Wynne, K.T., Lyons, J.B. (2019). Autonomous Agent Teammate-Likeness: Scale Development and Validation. In: Chen, J., Fragomeni, G. (eds) Virtual, Augmented and Mixed Reality. Applications and Case Studies . HCII 2019. Lecture Notes in Computer Science(), vol 11575. Springer, Cham. https://doi.org/10.1007/978-3-030-21565-1_13

DOI: https://doi.org/10.1007/978-3-030-21565-1_13

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-21564-4

Online ISBN: 978-3-030-21565-1

eBook Packages: Computer Science, Computer Science (R0)