Abstract

Building a reliable and efficient collision avoidance system for unmanned aerial vehicles (UAVs) is still a challenging problem. This research takes inspiration from locusts, which can fly in dense swarms for hundreds of miles without collision. In the locust’s brain, a visual pathway of LGMD-DCMD (lobula giant movement detector and descending contra-lateral motion detector) has been identified as a collision perception system that guides fast collision avoidance, which makes it ideal for designing artificial vision systems. However, very few works have investigated its potential in real-world UAV applications. In this paper, we present an LGMD based competitive collision avoidance method for UAV indoor navigation. In contrast to previous works, we divide the UAV’s field of view into four subfields, each handled by an LGMD neuron. Four individual competitive LGMDs (C-LGMDs) thus compete to guide the directional collision avoidance of the UAV. With more degrees of freedom than ground robots and vehicles, the UAV can escape from collision along four cardinal directions (e.g. an object approaching from the left side triggers a rightward shift of the UAV). The proposed method has been validated by both simulations and real-time quadcopter arena experiments.

J. Zhao and X. Ma—Contributed equally.

This research is funded by the EU HORIZON 2020 project, STEP2DYNA (grant agreement No. 691154) and ULTRACEPT (grant agreement No. 778062).

1 Introduction

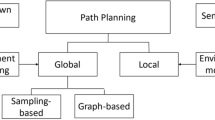

UAVs are among the most attractive yet vulnerable robot platforms, with potential applications in many scenarios, such as geographic surveying, agricultural fertilization, exploration of dangerous or disaster regions, product delivery, and aerial photography. Safety is always a vital property in a UAV application. Researchers are therefore always seeking better Sense and Avoid (SAA) techniques for UAVs. Classic UAVs use GPS or optic flow [12, 18] to navigate, and onboard distance sensors such as ultrasonic, infrared, or laser sensors, or a cooperative system, to avoid obstacles, as reviewed in [23]. However, these distance sensors depend heavily on the obstacles’ material and texture and on the complexity of the background; thus, they work only in simple and structured environments [6]. Lidar and vision based methods are more diverse and applicable. One popular vision based method is to detect and locate obstacles in a reconstructed map, mark the frontiers of the obstacles as banded fields in the map, and then use a specified pathfinding algorithm (e.g. a heuristic algorithm) to generate safe trajectories that avoid collision [1, 2, 16]. This approach is also known as Simultaneous Localization and Mapping (SLAM), but its high computational cost prevents its use on small or micro UAVs.

On the other hand, bio-inspired vision based collision detection methods stand out for their efficiency. For example, Optic Flow (OF) is a widely used vision based motion detection method inspired by biological mechanisms in flies and bees [21]. It has also been introduced into collision avoidance technology: Zufferey [30] mounted a 1D OF sensor on a 30 g lightweight fixed-wing UAV and achieved automatic obstacle avoidance in an indoor (GPS-denied) structured environment. Later, in 2009, their group achieved autonomous avoidance of trees with seven OF sensors on a fixed-wing platform [5]. Griffiths [11] used OF sensors converted from optical mice (key-point matching) to fly through a canyon; in addition to the OF sensors, the system integrated a laser ranger for directly approaching obstacles. Serres [20] used a pair of EMD based OF sensors to avoid lateral obstacles on a hovercraft. Sabo [18] applied OF to quadcopters and repeated some benchmark experiments to analyse the behaviour of a honeybee-like flying robot; however, the algorithm was still computed off board. Stevens [22] achieved collision avoidance in cluttered 3D environments.

The Lobula Giant Movement Detector (LGMD) is another bio-inspired neural network, modelled on the locust’s visual system, and is especially good at detecting approaching obstacles and avoiding imminent collisions. Compared to optic flow, LGMD is more specialised for detecting directly approaching obstacles, and it eliminates redundant image differences caused by translating objects and backgrounds. The LGMD neuron and its presynaptic neural network have been modelled [17] and extended by many researchers [8, 9, 24]. As a collision detection model, LGMD has been applied to mobile robots [4, 13], embedded systems [10, 14], a hexapod walking robot [7], a blimp [3] and cars [25, 26].

The basic LGMD model provides the threat level of collision over the whole field of view (FoV), but this is not enough to choose a sensible avoidance behaviour; hence, early research generated random turn directions in mobile robots [13]. Yue et al. [27] divided the field of view into two bilateral halves and discussed both winner-take-all and steering-wheel networks in the direction control system of a mobile robot. Compared to mobile robots, a UAV has more degrees of freedom and is more vulnerable during flight. In the extremely limited literature on LGMD for UAV platforms, Salt [19] implemented a neuromorphic LGMD model using recordings from a UAV platform and halved the FoV twice to obtain direction information, but no real-time flight was conducted. Our previous research demonstrated the applicability of LGMD to real-time quadcopter flight and collision avoidance [28]. In that work, the quadcopter could only avoid obstacles by randomly turning left or right in the horizontal plane. To acquire information about the direction from which an imminent obstacle approaches, this research proposes a new image partition strategy designed for LGMD applications on UAVs, and a corresponding steering method for 3D avoidance behaviour. Both video simulation and real-time flight demonstrate the performance of this method.

2 Model Description

2.1 LGMD Process

The LGMD process used in this paper is inherited from our previous research [28]. It is composed of five groups of cells: P-cells (photoreceptor), I-cells (inhibitory), E-cells (excitatory), S-cells (summing) and G-cells (grouping). Compared to the previous model, we added four competitive LGMD cells representing the LGMD output of four sections: Left, Right, Up, and Down. The image is divided as shown in Fig. 1.

A schematic illustration of the proposed LGMD based competitive neuron network for collision detection. \([\#]\) denotes the inherited LGMD process as described in our previous research [28].

The first layer of the neural network is composed of P cells, arranged in a matrix, whose output is the luminance change between adjacent frames. The output of a P cell is given by:

\[ P_f(x,y) = L_f(x,y) - L_{f-1}(x,y) \]

where \(P_f(x,y)\) is the luminance change of pixel \((x, y)\) at frame \(f\), and \(L_f(x,y)\) and \(L_{f-1}(x,y)\) are the luminance at frame \(f\) and at the previous frame.
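The frame differencing performed by the P layer can be sketched in a few lines of Python. This is a minimal illustration, assuming grayscale frames stored as nested lists of luminance values; the function name `p_layer` and the sample frames are hypothetical and are not taken from the embedded implementation.

```python
def p_layer(curr, prev):
    """Per-pixel luminance change between two equally sized grayscale frames.

    `curr` and `prev` are lists of rows (hypothetical representation).
    """
    return [
        [c - p for c, p in zip(row_curr, row_prev)]
        for row_curr, row_prev in zip(curr, prev)
    ]


# Two tiny 2x3 frames: one pixel brightens, one darkens, the rest are static.
prev_frame = [[10, 10, 10], [10, 10, 10]]
curr_frame = [[10, 40, 10], [10, 10, 5]]
p = p_layer(curr_frame, prev_frame)
```

Static pixels yield zero, so only changing parts of the image excite the following layers.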

The output of the P cells forms the input of the next layer and is processed by two types of cells: I (inhibitory) cells and E (excitatory) cells. The E cells pass the excitatory flow directly to the S layer, so each E cell has the same value as its counterpart in the P layer, while the I cells pass the inhibitory flow, obtained by convolving the delayed excitations of the surrounding cells.

The I layer can be described as a convolution operation:

where \( [w]_I \) is the convolution mask representing the local inhibiting weight distribution from the centre cell of the P layer to neighbouring cells in the S layer; a neighbouring cell’s local weight is the reciprocal of its distance from the centre cell. To adapt to fast image motion during UAV flight, \( [w]_I \) is set differently from the mobile robot case [13]: the inhibition radius is expanded to 2 pixels:

The next layer is the Sum layer, where the excitation and inhibition from the E and I layers are combined by linear subtraction. After summation, the Group layer is applied to reduce the noise caused by sporadic image changes or backgrounds. Detailed equations and parameters can be found in our previous work [28].
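The I and S layers described above can be sketched as follows. This is only an illustration under stated assumptions: the mask weights are built as the reciprocal of the distance to the centre with a radius of 2 pixels, but the zero centre weight, the normalisation to unit sum, the zero padding at the image border and the inhibition strength `w_i` are all hypothetical choices, and the actual values used on the detector are those given in [28].

```python
import math


def inhibition_mask(radius=2):
    """Hypothetical mask: weight = 1 / distance to centre, centre weight 0,
    normalised so that all weights sum to 1 (normalisation is an assumption)."""
    size = 2 * radius + 1
    mask = [[0.0] * size for _ in range(size)]
    for i in range(-radius, radius + 1):
        for j in range(-radius, radius + 1):
            if i != 0 or j != 0:
                mask[i + radius][j + radius] = 1.0 / math.hypot(i, j)
    total = sum(sum(row) for row in mask)
    return [[weight / total for weight in row] for row in mask]


def i_layer(p_prev, mask):
    """Inhibition: the delayed (previous-frame) P layer convolved with the mask.
    Pixels outside the image are treated as zero (assumption)."""
    h, w = len(p_prev), len(p_prev[0])
    r = len(mask) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(-r, r + 1):
                for j in range(-r, r + 1):
                    yy, xx = y + i, x + j
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += p_prev[yy][xx] * mask[i + r][j + r]
            out[y][x] = acc
    return out


def s_layer(p_curr, inhibition, w_i=1.0):
    """Sum layer: E (equal to P) minus the weighted inhibition.
    `w_i` is a hypothetical inhibition strength."""
    return [
        [e - w_i * inh for e, inh in zip(row_e, row_i)]
        for row_e, row_i in zip(p_curr, inhibition)
    ]


# A single excited pixel in the previous frame inhibits its neighbours now.
p_prev = [[0] * 5 for _ in range(5)]
p_prev[2][2] = 1
p_curr = [[1] * 5 for _ in range(5)]
mask = inhibition_mask(radius=2)
s = s_layer(p_curr, i_layer(p_prev, mask))
```

Because the mask is symmetric, convolution and correlation coincide here, which keeps the loop simple.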

After the G layer, the unnormalized membrane potentials of the four C-LGMDs are calculated respectively:

where Diag1 and Diag2 denote the y-axis coordinates of the two diagonals, and \(G_f(x,y)\) is the cell value in the G layer, as illustrated in Fig. 2. For more details about the process from \(P_{f}(x,y)\) to \(G_f(x,y)\), please refer to our previous work [28].
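The partition of the G layer by the two image diagonals can be sketched as below. This is a hedged illustration, not the embedded implementation: the function name `c_lgmd_potentials`, the use of pixel centres, the assignment of pixels lying exactly on a diagonal, and the use of absolute G values are all assumptions. Row 0 is taken to be the top of the image.

```python
def c_lgmd_potentials(g):
    """Sum |G| over the four triangular regions cut out by the two diagonals.

    `g` is a list of rows; g[y][x] with y growing downwards (assumption).
    Every pixel is assigned to exactly one region, so the four sums add up
    to the whole-image sum.
    """
    h, w = len(g), len(g[0])
    sums = {"left": 0.0, "right": 0.0, "up": 0.0, "down": 0.0}
    for y in range(h):
        for x in range(w):
            yc = y + 0.5                      # pixel-centre row coordinate
            diag1 = h * (x + 0.5) / w         # top-left to bottom-right
            diag2 = h - diag1                 # bottom-left to top-right
            if yc < diag1 and yc <= diag2:
                region = "up"
            elif yc > diag1 and yc >= diag2:
                region = "down"
            elif yc >= diag1 and yc < diag2:
                region = "left"
            else:
                region = "right"
            sums[region] += abs(g[y][x])
    return sums


# Activity concentrated at the left edge of a 4x4 G layer.
g_layer = [[0] * 4 for _ in range(4)]
g_layer[1][0] = 5
potentials = c_lgmd_potentials(g_layer)
```

With this tie-breaking rule a uniform square image splits evenly between the four regions, so no direction is favoured by the partition itself.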

Previously, the membrane potential of the LGMD cell, \(K_{f0}\), was the summation of every pixel in the G layer:

Now it also equals the summation of the four C-LGMD neurons:

\(K_f\) is then adjusted into the range (0, 255) by a sigmoid equation:

where \(C_1\) and \(C_2\) are constants that shape the normalizing function, limiting the excitation \(\kappa _f\) to vary within [0, 255], and \(n_{cell}\) represents the total number of pixels in one frame. The membrane potentials of the four C-LGMDs are also limited to (0, 255) by calculating their proportion of \(K_{f0}\), instead of applying the sigmoid function again:
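One possible reading of the proportional normalization is sketched below: each C-LGMD receives the share of the already normalized excitation \(\kappa_f\) that corresponds to its share of \(K_{f0}\). This interpretation, the function name `normalise_c_lgmd` and the sample numbers are assumptions made for illustration; the sigmoid itself is not reproduced here, so \(\kappa_f\) is passed in as a given value.

```python
def normalise_c_lgmd(raw, kappa):
    """Scale each raw C-LGMD potential by its proportion of the total.

    `raw` maps direction -> unnormalized potential (non-negative);
    `kappa` is the whole-field excitation, already within [0, 255].
    Assumed reading: normalized_i = kappa * raw_i / sum(raw).
    """
    total = sum(raw.values())
    if total == 0:
        return {direction: 0.0 for direction in raw}
    return {direction: kappa * value / total for direction, value in raw.items()}


# Hypothetical raw potentials dominated by the left subfield.
raw_potentials = {"left": 30.0, "right": 10.0, "up": 5.0, "down": 5.0}
normalised = normalise_c_lgmd(raw_potentials, kappa=200.0)
```

Under this reading the four normalized values always sum to \(\kappa_f\), so none of them can leave the range that the sigmoid already enforces.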

If \(\kappa _f\) exceeds its threshold, then an LGMD spike is produced:

An impending collision is confirmed if spikes occur in no fewer than \(n_{sp}\) consecutive frames:
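The spike and confirmation rules can be illustrated with a short sketch. The threshold value, the sample excitation sequence and the function name `first_collision_frame` are hypothetical; only the logic (a spike when \(\kappa_f\) exceeds the threshold, a collision after at least \(n_{sp}\) consecutive spikes) follows the description above.

```python
def first_collision_frame(kappas, threshold, n_sp):
    """Return the index of the first frame at which `n_sp` consecutive
    spikes have been observed, or None if that never happens."""
    run = 0
    for frame, kappa in enumerate(kappas):
        # A spike is produced when the excitation exceeds the threshold;
        # any sub-threshold frame resets the run of consecutive spikes.
        run = run + 1 if kappa > threshold else 0
        if run >= n_sp:
            return frame
    return None


# Hypothetical excitation trace with one isolated dip at frame 3.
kappa_trace = [100, 190, 200, 150, 210, 220, 230, 240]
collision_at = first_collision_frame(kappa_trace, threshold=180, n_sp=4)
```

Requiring several consecutive spikes filters out isolated peaks, at the cost of a delay of \(n_{sp} - 1\) frames before the collision is confirmed.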

Then, based on the result of the competing C-LGMDs, the DCMD switches to the corresponding escape command, which is sent through a USART interface to the flight control system. The process from the DCMD to the PID based motor control system is shown in pseudocode in Algorithm 1.
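The competition between the four C-LGMDs can be sketched as a winner-take-all selection. This is an illustrative assumption rather than a reproduction of Algorithm 1: the command names and the function `escape_command` are hypothetical, and the rule that the UAV shifts away from the strongest subfield is extrapolated from the left-to-right example given in the abstract.

```python
# Hypothetical command names; the real USART protocol is not described here.
ESCAPE_COMMANDS = {
    "left": "shift_right",
    "right": "shift_left",
    "up": "shift_down",
    "down": "shift_up",
}


def escape_command(c_lgmd, collision_confirmed):
    """Pick the escape command opposite to the most excited C-LGMD.

    `c_lgmd` maps direction -> normalized membrane potential.
    Returns "keep_course" when no collision has been confirmed.
    """
    if not collision_confirmed:
        return "keep_course"
    winner = max(c_lgmd, key=c_lgmd.get)
    return ESCAPE_COMMANDS[winner]


# An object looming from the left dominates the left subfield.
command = escape_command(
    {"left": 120.0, "right": 40.0, "up": 20.0, "down": 20.0},
    collision_confirmed=True,
)
```

In a real system the returned command would then be encoded and written to the USART link to the flight controller.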

3 System Overview

In this section, the whole system is outlined. The system, composed of a quadcopter, an embedded LGMD detector, a ground station, a remote controller and auxiliary sensors, is depicted in Fig. 3. Luminance information is collected by the camera on the detector board and fed into the LGMD algorithm; the output command is passed through a USART port to the flight controller, which carries out the avoidance task.

3.1 Quadcopter Platform

The UAV platform used in this research is a customized quadcopter with a frame size of 33 cm between diagonal rotors. The flight control module is based on an STM32F407V and provides five USART interfaces for extra peripherals. Multiple sensors are used for data collection and to enhance the stability of the quadcopter, including an IMU (Inertial Measurement Unit), an ultrasonic sensor, an optic flow sensor and the LGMD detector, as illustrated in Fig. 3. The PX4FLOW optic flow module [12] provides position and velocity feedback in the horizontal plane. The flight control module works as the central controller that ties the other parts together. It receives source data from the embedded IMU module (MPU6050), the PX4FLOW optic flow sensor, and the LGMD detector, calculates the PWM (Pulse-Width Modulation) values output to the four motors, and sends real-time data back for analysis through the nRF24L01 module.

4 Experiments and Results

To verify the performance of the proposed algorithm, both video simulation and real-time arena flights were conducted.

4.1 Video Simulation

The algorithm was first implemented in MATLAB and tested on a series of recorded videos to verify whether it can distinguish stimuli from different directions. The results in Fig. 4 indicate that the new network responds differently to objects approaching from different directions.

4.2 Hovering and Features Analysis

To further analyze the performance on the quadcopter platform, we ported the algorithm to the embedded LGMD detector, mounted the detector on the quadcopter, and stimulated the detector with test patterns while the quadcopter hovered in the air. An object was manually pushed towards the detector from each of the four directions, and each direction was repeated 10 times. Figure 5 shows an example of the trial scene, in which the object is pushed towards the detector from the left. According to the results in Fig. 6, the four competitive LGMDs distinguished the approach direction of the object accurately. In all four types of trials, when the LGMD output exceeded its threshold, the C-LGMD for the approach direction led the average values of the other three, even in its worst case (the lower boundary of the shaded band).

4.3 Arena Real-Time Flight

Finally, real-time flight and obstacle avoidance experiments were conducted to test the performance and robustness of the proposed directional obstacle avoidance method. Trials covering the four approach directions were set up in two types: obstacles on the left and right sides of the UAV’s route, or above and below it. The quadcopter was first challenged by a static obstacle and then by a dynamic intruder. The results showed that the system is able to choose an appropriate escape behaviour based on the approach direction of the obstacle. The trajectories of these trials were extracted by a Python program using a background subtractor [29] and template matching [15], and then overlaid on a screenshot from the recorded video, as shown in Fig. 7.

5 Conclusion

To conclude, a novel competitive LGMD and a corresponding UAV control algorithm are proposed to address practical problems met when applying LGMD to UAVs. Both simulation and real-time flight experiments were conducted to analyze the proposed method, and the results showed high robustness. Based on the proposed competitive LGMD, real-time 3D collision avoidance of a quadcopter is achieved in an indoor environment. In future work, fully autonomous flight in a larger arena should be undertaken to analyze the limits of this new method.

References

1. Achtelik, M., Bachrach, A., He, R., Prentice, S., Roy, N.: Stereo vision and laser odometry for autonomous helicopters in GPS-denied indoor environments. In: Unmanned Systems Technology XI, vol. 7332, p. 733219. International Society for Optics and Photonics (2009)

2. Bachrach, A., He, R., Roy, N.: Autonomous flight in unknown indoor environments. Int. J. Micro Air Veh. 1(4), 217–228 (2009)

3. Bermúdez i Badia, S., Pyk, P., Verschure, P.F.: A fly-locust based neuronal control system applied to an unmanned aerial vehicle: the invertebrate neuronal principles for course stabilization, altitude control and collision avoidance. Int. J. Rob. Res. 26(7), 759–772 (2007)

4. Bermúdez i Badia, S., Bernardet, U., Verschure, P.F.: Non-linear neuronal responses as an emergent property of afferent networks: a case study of the locust lobula giant movement detector. PLoS Comput. Biol. 6(3), e1000701 (2010)

5. Beyeler, A., Zufferey, J.C., Floreano, D.: Vision-based control of near-obstacle flight. Autonom. Rob. 27(3), 201 (2009)

6. Chee, K., Zhong, Z.: Control, navigation and collision avoidance for an unmanned aerial vehicle. Sens. Actuators A Phys. 190, 66–76 (2013)

7. Čížek, P., Milička, P., Faigl, J.: Neural based obstacle avoidance with CPG controlled hexapod walking robot. In: 2017 International Joint Conference on Neural Networks (IJCNN), pp. 650–656. IEEE (2017)

8. Fu, Q., Hu, C., Liu, T., Yue, S.: Collision selective LGMDs neuron models research benefits from a vision-based autonomous micro robot. In: 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 3996–4002. IEEE (2017)

9. Fu, Q., Hu, C., Peng, J., Yue, S.: Shaping the collision selectivity in a looming sensitive neuron model with parallel on and off pathways and spike frequency adaptation. Neural Netw. 106, 127–143 (2018)

10. Fu, Q., Yue, S., Hu, C.: Bio-inspired collision detector with enhanced selectivity for ground robotic vision system. In: BMVC (2016)

11. Griffiths, S., Saunders, J., Curtis, A., Barber, B., McLain, T., Beard, R.: Obstacle and terrain avoidance for miniature aerial vehicles. In: Valavanis, K.P. (ed.) Advances in Unmanned Aerial Vehicles, pp. 213–244. Springer, Dordrecht (2007). https://doi.org/10.1007/978-1-4020-6114-1_7

12. Honegger, D., Meier, L., Tanskanen, P., Pollefeys, M.: An open source and open hardware embedded metric optical flow CMOS camera for indoor and outdoor applications. In: 2013 IEEE International Conference on Robotics and Automation (ICRA), pp. 1736–1741. IEEE (2013)

13. Hu, C., Arvin, F., Xiong, C., Yue, S.: A bio-inspired embedded vision system for autonomous micro-robots: the LGMD case. IEEE Trans. Cogn. Dev. Syst. PP(99), 1 (2016)

14. Hu, C., Arvin, F., Yue, S.: Development of a bio-inspired vision system for mobile micro-robots. In: Joint IEEE International Conferences on Development and Learning and Epigenetic Robotics, pp. 81–86. IEEE (2014)

15. Lewis, J.P.: Fast normalized cross-correlation. In: Vision Interface, vol. 10, pp. 120–123 (1995)

16. Richter, C., Bry, A., Roy, N.: Polynomial trajectory planning for aggressive quadrotor flight in dense indoor environments. In: Inaba, M., Corke, P. (eds.) Robotics Research. STAR, vol. 114, pp. 649–666. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-28872-7_37

17. Rind, F.C., Bramwell, D.: Neural network based on the input organization of an identified neuron signaling impending collision. J. Neurophysiol. 75(3), 967–985 (1996)

18. Sabo, C., Cope, A., Gurny, K., Vasilaki, E., Marshall, J.: Bio-inspired visual navigation for a quadcopter using optic flow. In: AIAA Infotech@ Aerospace 404 (2016)

19. Salt, L., Indiveri, G., Sandamirskaya, Y.: Obstacle avoidance with LGMD neuron: towards a neuromorphic UAV implementation. In: 2017 IEEE International Symposium on Circuits and Systems (ISCAS), pp. 1–4. IEEE (2017)

20. Serres, J., Dray, D., Ruffier, F., Franceschini, N.: A vision-based autopilot for a miniature air vehicle: joint speed control and lateral obstacle avoidance. Autonom. Rob. 25(1–2), 103–122 (2008)

21. Serres, J.R., Ruffier, F.: Optic flow-based collision-free strategies: from insects to robots. Arthropod Struct. Dev. 46(5), 703–717 (2017)

22. Stevens, J.L., Mahony, R.: Vision based forward sensitive reactive control for a quadrotor VTOL. In: 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 5232–5238. IEEE (2018)

23. Yu, X., Zhang, Y.: Sense and avoid technologies with applications to unmanned aircraft systems: review and prospects. Prog. Aerosp. Sci. 74, 152–166 (2015)

24. Yue, S., Rind, F.C.: Collision detection in complex dynamic scenes using an LGMD-based visual neural network with feature enhancement. IEEE Trans. Neural Netw. 17(3), 705–716 (2006)

25. Yue, S., Rind, F.C.: A synthetic vision system using directionally selective motion detectors to recognize collision. Artif. Life 13(2), 93–122 (2007)

26. Yue, S., Rind, F.C., Keil, M.S., Cuadri, J., Stafford, R.: A bio-inspired visual collision detection mechanism for cars: optimisation of a model of a locust neuron to a novel environment. Neurocomputing 69(13), 1591–1598 (2006)

27. Yue, S., Santer, R.D., Yamawaki, Y., Rind, F.C.: Reactive direction control for a mobile robot: a locust-like control of escape direction emerges when a bilateral pair of model locust visual neurons are integrated. Autonom. Rob. 28(2), 151–167 (2010)

28. Zhao, J., Hu, C., Zhang, C., Wang, Z., Yue, S.: A bio-inspired collision detector for small quadcopter. In: 2018 International Joint Conference on Neural Networks (IJCNN), pp. 1–7. IEEE (2018)

29. Zivkovic, Z.: Improved adaptive Gaussian mixture model for background subtraction. In: Proceedings of the 17th International Conference on Pattern Recognition, ICPR 2004, vol. 2, pp. 28–31. IEEE (2004)

30. Zufferey, J.C., Floreano, D.: Toward 30-gram autonomous indoor aircraft: vision-based obstacle avoidance and altitude control. In: Proceedings of the 2005 IEEE International Conference on Robotics and Automation, ICRA 2005, pp. 2594–2599. IEEE (2005)

Copyright information

© 2019 IFIP International Federation for Information Processing

About this paper

Cite this paper

Zhao, J., Ma, X., Fu, Q., Hu, C., Yue, S. (2019). An LGMD Based Competitive Collision Avoidance Strategy for UAV. In: MacIntyre, J., Maglogiannis, I., Iliadis, L., Pimenidis, E. (eds) Artificial Intelligence Applications and Innovations. AIAI 2019. IFIP Advances in Information and Communication Technology, vol 559. Springer, Cham. https://doi.org/10.1007/978-3-030-19823-7_6

DOI: https://doi.org/10.1007/978-3-030-19823-7_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-19822-0

Online ISBN: 978-3-030-19823-7

eBook Packages: Computer Science, Computer Science (R0)